A New Method Based on the RKHSM for Solving Systems of Nonlinear IDDEs with Proportional Delays

Abstract

An efficient computational method is proposed for solving systems of nonlinear infinite-delay-differential equations (IDDEs) with proportional delays. A representation of the solution and an iterative method are established in the reproducing kernel space. Numerical examples are presented to demonstrate the computational efficiency of the method.

1. Introduction

Among functional-differential equations (FDEs) there is a class of infinite-delay-differential equations (IDDEs) with proportional delays. Such systems are often encountered in many scientific fields, such as electric mechanics, quantum mechanics, and optics. Research on this class of IDDEs is therefore of great theoretical and practical significance, and it has attracted constant interest from researchers. It has been found that FDEs with proportional delays pose mathematical challenges very different from those of FDEs with constant delays. Several authors have presented numerical solutions and the corresponding analysis for linear FDEs with proportional delays. In the last few years, there has been growing interest in the existence of solutions of functional-differential equations with state-dependent delay [1–7]. The initial-value problem for neutral functional-differential equations with proportional time delays has been studied in [8–11]; in [11], the authors discussed the existence and uniqueness of analytic solutions of linear equations with proportional delays.

Ishiwata et al. used the rational approximation method and the collocation method to compute numerical solutions of delay-differential equations with proportional delays [12, 13]. In [14–17], Hu et al. gave numerical methods for neutral delay-differential equations. For neutral delay-differential equations with proportional delays, Chen and Wang proposed the variational iteration method [18] and the homotopy perturbation method [19]. Recently, time-delay systems have attracted interest in applications such as population growth models, transportation, communications, and agricultural models. As a result, these systems have been widely studied from both theoretical and applied perspectives [20–22].

The paper is organized as follows. In Section 2, some definitions of reproducing kernel spaces are introduced. In Section 3, the main results and the structure of the solution of the operator equation are discussed. The existence of the solution to (2) is established there, and an iterative method for this kind of problem is developed in the reproducing kernel space. We verify that the approximate solution converges uniformly to the exact solution. In Section 4, numerical experiments are given to demonstrate the computational efficiency of the algorithm. Section 5 concludes the paper.

2. Preliminaries

Definition 1 (reproducing kernel; see [25]). Let M be a nonempty abstract set. A function K : M × M → ℂ is a reproducing kernel of the Hilbert space H if and only if

(a) ∀x ∈ M, K(·, x) ∈ H;

(b) ∀y ∈ M, ∀u ∈ H, 〈u(·), K(·, y)〉 = u(y).

Condition (b) is called "the reproducing property"; a Hilbert space that possesses a reproducing kernel is called a reproducing kernel Hilbert space (RKHS).
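The reproducing property can be checked numerically on a standard textbook example. The sketch below does not use the kernel of W2[0, +∞), whose formula is not reproduced here. It assumes the space H = {u ∣ u absolutely continuous on [0, 1], u(0) = 0, u′ ∈ L2[0, 1]} with inner product 〈u, v〉 = ∫₀¹ u′(t)v′(t) dt, whose reproducing kernel is K(t, y) = min(t, y). For this space, 〈u(·), K(·, y)〉 = ∫₀^y u′(t) dt = u(y). The code approximates the integral with a midpoint rule; the helper names are illustrative only.

```python
import math


def kernel_t_derivative(t, y):
    """Derivative in t of the toy kernel K(t, y) = min(t, y): 1 for t < y, 0 for t > y."""
    return 1.0 if t < y else 0.0


def inner_with_kernel(du, y, n=20000):
    """Midpoint-rule approximation of <u, K(., y)> = int_0^1 u'(t) * dK/dt(t, y) dt."""
    h = 1.0 / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += du(t) * kernel_t_derivative(t, y)
    return total * h


# u(t) = sin(t) satisfies u(0) = 0 and u'(t) = cos(t), so it belongs to the toy space H.
for y in (0.25, 0.5, 0.9):
    print(f"y = {y}: <u, K(., y)> = {inner_with_kernel(math.cos, y):.10f}, u(y) = {math.sin(y):.10f}")
```

The two printed columns agree up to the quadrature error, which is what condition (b) predicts.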

Next, two reproducing kernel spaces are given.

Definition 2. W2[0, +∞) = {u(x) ∣ u′ is an absolutely continuous real-valued function, u′′ ∈ L2[0, +∞), u(0) = 0}.

W2[0, +∞) is a Hilbert space; for u(x), v(x) ∈ W2[0, +∞), the inner product and norm in W2[0, +∞) are given by

Theorem 3. The space W2[0, +∞) is a reproducing kernel space; that is, for any u(y) ∈ W2[0, +∞) and each fixed x ∈ [0, +∞), there exists Rx(y) ∈ W2[0, +∞), y ∈ [0, +∞), such that u(x) = 〈Rx(y), u(y)〉. The corresponding reproducing kernel can be represented as follows [26]:

Definition 4. W1[0, +∞) = {u(x) ∣ u is an absolutely continuous real-valued function, u′ ∈ L2[0, +∞)}.

The inner product and norm in W1[0, +∞) can be defined by

3. Statements of the Main Results

In this section, we present a method for obtaining the solution of (2) in the reproducing kernel space W2[0, +∞).

Lemma 5. Assume that the points {xi}, i = 1, 2, …, are dense in [0, +∞); then {ψi(x)}, i = 1, 2, …, is a complete system in W2[0, +∞) and .

Proof. We have

Clearly, ψi(x) ∈ W2[0, +∞).

For any u(x) ∈ W2[0, +∞), suppose that 〈u(x), ψi(x)〉 = 0, i = 1, 2, …; this means that

3.1. Construction of the Iterative Sequences

Lemma 6. Let {xi}, i = 1, 2, …, be dense in [0, +∞). If the solution of (2) is unique, then it has the form

Proof. Note that 〈u(x), φi(x)〉 = u(xi) and that the system obtained by orthonormalizing {ψi(x)} is an orthonormal basis of W2[0, +∞). Hence, by Lemma 5, we have

3.2. Boundedness of the Sequences un(x) and vn(x)

Lemma 7. Assume that F(x, y, z) and G(x, y, z) are continuous bounded functions for x ∈ [0, +∞) and y, z ∈ (−∞, +∞); then and are bounded.

Proof. By the expression of Mn(x), and the assumptions, we know that Mn(x) and are bounded. In the following, we will discuss the boundedness of .

Since the function which is in is dense in W2[0, +∞), without loss of generality, we assume that is continuous.

Note that

Lemma 8. Assume that F(x, y, z) and G(x, y, z) are continuous bounded functions for x ∈ [0, +∞) and y, z ∈ (−∞, +∞). Assume also that F(x, y(x), z(x)) and G(x, y(x), z(x)) belong to W1[0, +∞) whenever y = y(x) ∈ W2 and z = z(x) ∈ W2. Then

3.3. Construction of Other Iterative Sequences un(x) and vn(x)

Theorem 9. Let {xi}, i = 1, 2, …, be dense in [0, +∞). If the solution of (2) exists and is unique, then it has the form

Proof. Note that 〈u(x), φi(x)〉 = u(xi) and that the system obtained by orthonormalizing {ψi(x)} is an orthonormal basis of W2[0, +∞); hence we have

Lemma 10. The following iterative sequences

Proof. If j = 1,

Theorem 11. The iterative form

Proof. In Lemma 10, let n − 1 = k; then

By Theorem 11 and Lemma 8, we have the following theorem.

Lemma 13. If u(x), v(x) ∈ W2[0, +∞), then there exist M1, M2 > 0 such that |u(x)| ≤ and .

Proof. This follows from the reproducing property together with the Cauchy–Schwarz inequality; for example, |u(x)| = |〈u(y), Rx(y)〉| ≤ ∥Rx∥ ∥u∥.
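A minimal sketch of the kind of estimate in Lemma 13 follows. It uses a toy space rather than W2[0, +∞), as an assumption for illustration: H = {u ∣ u absolutely continuous on [0, 1], u(0) = 0, u′ ∈ L2[0, 1]} with 〈u, v〉 = ∫₀¹ u′v′ dt and reproducing kernel K(t, x) = min(t, x). The reproducing property and Cauchy–Schwarz give |u(x)| ≤ ∥K(·, x)∥ ∥u∥. Since ∥K(·, x)∥² = K(x, x) = x, this becomes |u(x)| ≤ √x ∥u∥, so the constant 1 works on [0, 1]. The code checks this bound for u(t) = sin t; the function names are hypothetical.

```python
import math


def h_norm(du, n=20000):
    """Norm in the toy space H: ||u|| = sqrt(int_0^1 u'(t)^2 dt), by the midpoint rule."""
    h = 1.0 / n
    return math.sqrt(h * sum(du((k + 0.5) * h) ** 2 for k in range(n)))


def kernel_norm(x):
    """||K(., x)|| = sqrt(K(x, x)) = sqrt(min(x, x)) = sqrt(x) for the toy kernel."""
    return math.sqrt(x)


# u(t) = sin(t) lies in H; its exact norm is sqrt(1/2 + sin(2)/4).
norm_u = h_norm(math.cos)
for x in (0.1, 0.5, 1.0):
    lhs = abs(math.sin(x))
    rhs = kernel_norm(x) * norm_u
    print(f"x = {x}: |u(x)| = {lhs:.4f} <= {rhs:.4f}")
```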

By Lemma 13 and Theorem 12, it is easy to obtain the following Lemma 14.

Lemma 14. If , , xn → y(n → ∞), and F(x, y, z) and G(x, y, z) satisfy the conditions of Lemma 8, then

Theorem 15. Let {xi}, i = 1, 2, …, be dense in [0, +∞), and let F(x, y, z) and G(x, y, z) satisfy the conditions of Lemma 8. Then the n-term approximate solutions un(x) and vn(x) in (35) converge to the exact solutions u(x) and v(x) of (2), respectively, and , , where and are given by (36) and (37), respectively.

Proof. (1) First, we prove the convergence of un(x) and vn(x).

By (35), we infer that

(2) Second, we prove that and are the solutions of (2).

By Lemma 14 and the proof of (1), un(x) and vn(x) converge uniformly to and , respectively. Taking limits in (35), we have

If n = 2, then

From (70) and (71), it is clear that

In the proof of the convergence in Theorem 15 we only use ∥un∥ ≤ C, ∥vn∥ ≤ D; thus we obtain the following corollary.

Corollary 16. Suppose that ∥un∥ and ∥vn∥ are bounded; then the iterative sequence (35) converges to the exact solution of (2).

Theorem 17. Assume u(x) and v(x) are the solutions of (2), and are the approximate errors of un(x), vn(x), where un(x) and vn(x) are given by (35). Then the errors , are monotone decreasing in the sense of .
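In reproducing-kernel methods of this type, monotonicity of the error usually rests on Parseval's identity. Suppose un is the truncation of an orthonormal expansion u = Σ Ai ψ̄i, where ψ̄i is a label assumed here for the orthonormalized system. Then ∥u − un∥² = Σ_{i>n} Ai², which cannot increase with n. Whether the iterates (35) have exactly this form is not visible in this text, so the sketch below only illustrates that tail-sum property under this assumption. The coefficient values are made up.

```python
import math


def truncation_errors(coeffs):
    """Return e_n = ||u - u_n|| for n = 0, ..., len(coeffs).

    Assumes u = sum_i coeffs[i] * psi_bar_i in an orthonormal basis (finitely many terms)
    and that u_n keeps the first n terms. By Parseval's identity, e_n^2 is the sum of the
    squares of the coefficients after the first n.
    """
    return [math.sqrt(sum(a * a for a in coeffs[n:])) for n in range(len(coeffs) + 1)]


errors = truncation_errors([1.0, -0.5, 0.25, 0.0, 0.1])
print([round(e, 4) for e in errors])
# The sequence is non-increasing; it stays flat exactly when the next coefficient is zero.
print(all(a >= b for a, b in zip(errors, errors[1:])))
```

In this illustration, the error decreases strictly whenever the next coefficient is nonzero.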

4. Numerical Examples

To demonstrate the efficiency of our algorithm for solving (2), we present two numerical examples in the reproducing kernel space W2[0, +∞). Let n be the number of discrete points in [0, +∞). Denote , . All computations are performed with the Mathematica 5.0 software package. The results obtained by the method are compared with the exact solution of each example and are found to be in good agreement.

Example 18. In this example we consider the problem

Step 1. Using the method of the appendix, we obtain the corresponding reproducing kernel functions.

Step 2. Choose a dense subset of [0,1] and compute the orthogonalization coefficients βik.

Step 3. According to (10), we obtain the orthonormal system.

Step 4. Choosing the initial values u0(x) = v0(x) = 0, we compute u1(x), u2(x), …, u50(x) and v1(x), v2(x), …, v50(x) by (19).
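Steps 2 and 3 orthonormalize the functions ψi through coefficients βik, so that each orthonormal function is Σ_{k≤i} βik ψk. Formula (10) itself is not legible here, so the sketch below assumes it is the classical Gram–Schmidt process. Under that assumption, the coefficients can be computed from the Gram matrix G[i][k] = 〈ψi, ψk〉 alone. Assembling G from the W2 kernel is not shown; the matrix used below is an arbitrary symmetric positive-definite example, and the function names are hypothetical.

```python
import math


def gram_schmidt_coefficients(G):
    """Return lower-triangular beta with psi_bar_i = sum_k beta[i][k] * psi_k orthonormal.

    G[i][k] = <psi_i, psi_k> is assumed symmetric positive definite.
    """
    n = len(G)
    beta = []
    for i in range(n):
        # Start from psi_i itself, written in the basis {psi_k}.
        v = [0.0] * n
        v[i] = 1.0
        for b in beta:
            # Projection coefficient <psi_i, psi_bar_j> = sum_k b[k] * G[i][k].
            c = sum(b[k] * G[i][k] for k in range(n))
            v = [v[k] - c * b[k] for k in range(n)]
        # Squared norm of the remaining combination, measured with G.
        norm_sq = sum(v[k] * G[k][l] * v[l] for k in range(n) for l in range(n))
        beta.append([vk / math.sqrt(norm_sq) for vk in v])
    return beta


def gram_of_combination(beta, G):
    """Gram matrix of the functions psi_bar_i; it should be the identity."""
    n = len(G)
    return [[sum(beta[i][k] * G[k][l] * beta[j][l] for k in range(n) for l in range(n))
             for j in range(n)] for i in range(n)]


G = [[4.0, 2.0, 1.0], [2.0, 3.0, 0.5], [1.0, 0.5, 2.0]]
beta = gram_schmidt_coefficients(G)
for row in beta:
    print([round(x, 6) for x in row])
```

Working with the Gram matrix keeps the sketch independent of how the ψi are evaluated. The resulting lower-triangular β with positive diagonal is the same matrix one would get from the inverse Cholesky factor of G.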

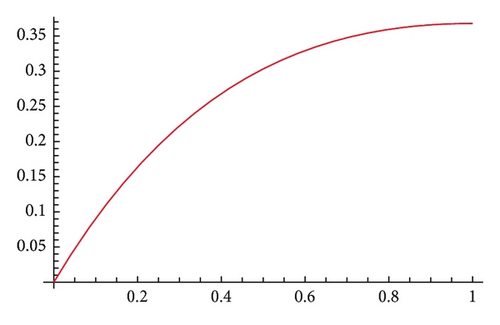

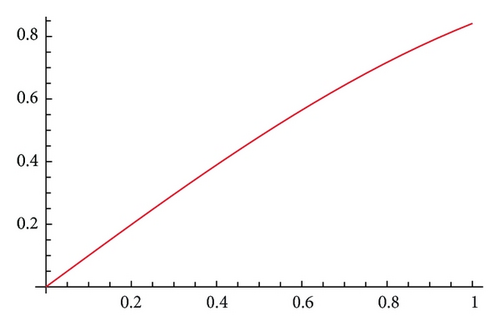

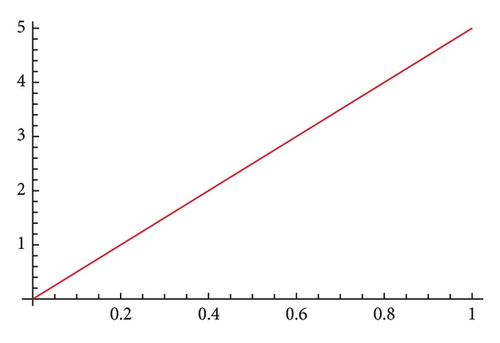

The superimposed graphs are shown in Figure 1. In addition, we have computed the approximate solutions un(x) and vn(x) (n = 300, 900) in [0,3]. The relative errors are listed in Table 1, and the root mean square errors (RMSE) of un with respect to u(x) and of vn with respect to v(x) are shown in Table 2.

| Node | | | | |
|---|---|---|---|---|
| 0.3 | 4.97833e-5 | 8.4527e-5 | 5.53163e-6 | 9.39806e-6 |
| 0.6 | 4.43080e-5 | 7.3330e-5 | 4.92288e-6 | 8.15664e-6 |
| 0.9 | 3.86801e-5 | 6.6731e-5 | 4.29733e-6 | 7.42512e-6 |
| 1.2 | 3.41875e-5 | 6.1845e-5 | 3.79797e-6 | 6.88400e-6 |
| 1.5 | 3.09088e-5 | 5.7095e-5 | 3.43349e-6 | 6.35872e-6 |
| 1.8 | 2.87391e-5 | 5.1303e-5 | 3.19221e-6 | 5.71898e-6 |
| 2.1 | 2.75649e-5 | 4.3153e-5 | 3.06149e-6 | 4.81984e-6 |
| 2.4 | 2.7288e-5 | 3.0422e-5 | 3.03034e-6 | 3.41736e-6 |
| 2.7 | 2.7829e-5 | 6.7566e-5 | 3.09008e-6 | 8.12936e-7 |
| 3.0 | 2.90670e-5 | 7.5017e-5 | 3.23206e-6 | 8.72219e-6 |

| n | RMSE of un | RMSE of vn |
|---|---|---|
| 300 | 9.95194e-6 | 4.00007e-5 |
| 900 | 1.10551e-6 | 4.45554e-6 |
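The exact definitions behind Tables 1 and 2 are not legible in this version of the text. Assuming the usual ones, the relative error at a node x is |u(x) − un(x)|/|u(x)|, and the RMSE over N nodes x1, …, xN is (1/N · Σ (u(xj) − un(xj))²)^(1/2). The sketch below computes both from sampled values. The sample numbers are hypothetical and are not taken from the tables.

```python
import math


def relative_error(exact, approx):
    """Pointwise relative error |u(x) - u_n(x)| / |u(x)|; assumes u(x) != 0."""
    return abs(exact - approx) / abs(exact)


def rmse(exact_values, approx_values):
    """Root mean square error of the approximation over the sampled nodes."""
    if len(exact_values) != len(approx_values) or not exact_values:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(e - a) ** 2 for e, a in zip(exact_values, approx_values)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical sampled values of u(x) and u_n(x) at three nodes.
exact = [1.0, 2.0, 4.0]
approx = [1.1, 1.9, 4.0]
print([relative_error(e, a) for e, a in zip(exact, approx)])
print(rmse(exact, approx))
```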

Example 19. Consider the equations

| Node | | | | |
|---|---|---|---|---|
| 0.4 | 8.06470e-5 | 4.94886e-5 | 3.58334e-5 | 2.20033e-5 |
| 0.8 | 1.07473e-4 | 5.27211e-5 | 4.77632e-5 | 2.34475e-5 |
| 1.2 | 2.56877e-4 | 5.32904e-5 | 1.14199e-4 | 2.37040e-5 |
| 1.6 | 1.19110e-4 | 5.01608e-5 | 5.29170e-5 | 2.23098e-5 |
| 2.0 | 8.56122e-5 | 4.35915e-5 | 3.80383e-5 | 1.93810e-5 |
| 2.4 | 2.27843e-4 | 3.52666e-5 | 1.01280e-4 | 1.56705e-5 |
| 2.8 | 1.38237e-4 | 2.74018e-5 | 6.13912e-5 | 1.21676e-5 |
| 3.2 | 7.26035e-5 | 2.15572e-5 | 3.22429e-5 | 9.56745e-6 |
| 3.6 | 1.86684e-5 | 1.80587e-5 | 8.29627e-5 | 8.01449e-6 |
| 4.0 | 1.50042e-4 | 1.64230e-5 | 6.66348e-5 | 7.14957e-6 |

| n | | |
|---|---|---|
| 800 | 1.87807e-3 | 7.85856e-5 |
| 1200 | 8.34276e-4 | 3.48826e-5 |

5. Conclusion

In this paper, the RKHSM has been successfully applied to find the solutions of systems of nonlinear IDDEs with proportional delays. The efficiency and accuracy of the proposed method were demonstrated on two test problems. The tables and figures above show that the RKHSM is an accurate and efficient method for solving IDDEs with proportional delays. Moreover, the method is also effective for solving some nonlinear initial-boundary value problems and nonlocal boundary value problems.

Acknowledgments

This research is supported by the National Natural Science Foundation of China (61071181), the Educational Department Scientific Technology Program of Heilongjiang Province (12531180, 12512133, and 12521148), and the Academic Foundation for Youth of Harbin Normal University (KGB201226).

Appendix

The Reproducing Kernel Space W2[0, m]

Theorem A.1. The space W2[0, m] is a reproducing kernel space; that is, for any u(y) ∈ W2[0, m] and each fixed x ∈ [0, m], there exists Rx(y) ∈ W2[0, m], y ∈ [0, m], such that u(x) = 〈u(y), Rx(y)〉. The reproducing kernel Rx(y) can be written as

Proof. Applying integration by parts to (A.1), we have

Since Rx(y) ∈ W2[0, m], it follows that

Since u(y) ∈ W2[0, m], we have u(0) = 0.

Suppose that Rx(y) satisfies the following generalized differential equations:

In the following, we will get the expression of the reproducing kernel Rx(y).

The corresponding characteristic equation is λ⁴ − 5λ² + 4 = 0, and the characteristic roots are λ1,2 = ±1 and λ3,4 = ±2.
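The roots can be read off from a factorization of the characteristic polynomial. Assume, as is standard for this construction, that away from y = x the generalized equations reduce to a homogeneous linear equation with this characteristic polynomial. Then on each of the intervals y < x and y > x the solution is a combination of four exponentials. The coefficients ci(x), a labeling assumed here, depend on x and are fixed by boundary and matching conditions that are not reproduced in this text.

```latex
\lambda^4 - 5\lambda^2 + 4 = (\lambda^2 - 1)(\lambda^2 - 4)
  = (\lambda - 1)(\lambda + 1)(\lambda - 2)(\lambda + 2),
\qquad
R_x(y) = c_1(x)\,e^{y} + c_2(x)\,e^{-y} + c_3(x)\,e^{2y} + c_4(x)\,e^{-2y}
  \quad \text{on each side of } y = x.
```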

We denote Rx(y) by