Feedback Control Method Using Haar Wavelet Operational Matrices for Solving Optimal Control Problems

Abstract

Most direct methods solve optimal control problems with a nonlinear programming solver. In this paper we propose a novel feedback control method for solving affine control systems with a quadratic cost functional, which requires only the solution of linear systems. The method is a numerical technique that combines the Haar wavelet collocation method with the successive Generalized Hamilton-Jacobi-Bellman equation. We formulate some new Haar wavelet operational matrices in order to manipulate Haar wavelet series. The proposed method has been applied to linear and nonlinear optimal control problems with infinite time horizon. The simulation results indicate that the accuracy of the control and cost can be improved by increasing the wavelet resolution.

1. Introduction

Optimal control is an important branch of mathematics and has been widely applied in a number of fields, including engineering, science, and economics. Although the necessary and sufficient conditions for optimality have already been derived for H2 and H∞ optimal controls, they are useful for finding analytical solutions only in quite restricted cases. If we assume full-state knowledge and the optimal control problem is linear, then the optimal control is a linear feedback of the state, which is obtained by solving a matrix Riccati equation. However, if the system is nonlinear, then the optimal control is a state feedback function that depends on the solution of a Hamilton-Jacobi-Bellman (HJB) equation or a Hamilton-Jacobi-Isaacs (HJI) equation for the H2 or H∞ optimal control problem, respectively [1], and this equation is usually difficult to solve analytically. Feng et al. [2] have solved an HJI equation iteratively by solving a sequence of HJB equations. In this paper, we are more concerned with approximate solutions of the HJB equation. Among the numerous computational approaches for the solution of the HJI equation, we refer in particular to [3–5]. Robustness of nonlinear state feedback is discussed in [6].

Broadly speaking, numerical methods for solving optimal control problems are divided into two categories: direct and indirect methods. The direct methods reduce the optimal control problem to a nonlinear programming problem by parameterizing or discretizing the infinite-dimensional optimal control problem into a finite-dimensional optimization problem. The indirect methods, on the other hand, solve the HJB equation or the first-order necessary conditions for optimality, which are obtained from the Pontryagin minimum principle. Both classes of methods are important for solving optimal control problems; the difference between them is that the indirect methods are believed to yield more accurate results, whereas the direct methods tend to have better convergence properties. von Stryk and Bulirsch [7] have used both direct and indirect methods to solve an optimal control problem for trajectory optimization of the Apollo capsule. Beard et al. [8] have introduced the Generalized Hamilton-Jacobi-Bellman equation to successively approximate the solution of the HJB equation. Given an arbitrary stabilizing control law, their method can be used to improve the performance of the control. Moreover, Jaddu [9] has reported some numerical methods for solving unconstrained and constrained optimal control problems by converting them into quadratic programming problems, using a parameterization technique based on the Chebyshev polynomials. Meanwhile, Beeler et al. [10] have performed a comparison study of five different methods for solving nonlinear control systems and studied the performance of the methods on several test problems. Park and Tsiotras [11] have proposed a successive wavelet collocation algorithm, which uses interpolating wavelets to iteratively solve the Generalized Hamilton-Jacobi-Bellman equation and obtain the corresponding optimal control law.

A wavelet basis with compact support represents functions with sharp spikes or edges better than other bases. This property is advantageous in many applications in signal and image processing. In addition, the availability of a fast transform makes it attractive as a computational tool. Numerical solutions of integral and differential equations have been discussed in many papers, which basically fall either in the class of spectral Galerkin and collocation methods or in the class of finite element and finite difference methods.

The Haar wavelet is the simplest orthogonal wavelet with compact support. Chan and Hsiao [12] have used the Haar operational matrix method to solve lumped and distributed parameter systems. Hsiao and Wang [13] have solved optimal control of linear time-varying systems via Haar wavelets. Dai and Cochran Jr. [14] have considered a Haar wavelet technique to transform optimal control problems into nonlinear programming (NLP) problems with parameters at the collocation points. The resulting NLP can be solved using a nonlinear programming solver such as SNOPT.

In the present paper we consider the method of Beard et al. [8] to successively approximate the solution of the HJB equation. Instead of using the Galerkin method with a polynomial basis, we use the collocation method with a Haar wavelet basis to solve the Generalized Hamilton-Jacobi-Bellman equation. The Galerkin method requires the computation of multidimensional integrals, which makes it impractical for higher-order systems [15]. The main advantage of the collocation method in general is that the computational burden of solving the Generalized Hamilton-Jacobi-Bellman equation is reduced to matrix computation only. Our new successive Haar wavelet collocation method is used to solve linear and nonlinear optimal control problems. In the process of establishing the method we have to define new operational matrices of integration for a chosen stabilizing domain and a new operational matrix for the product of two-dimensional Haar wavelet functions.

2. Haar Wavelets
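As a minimal sketch of the basis used throughout, the following Python code builds the unnormalised Haar functions on [0, 1) and samples them at the collocation points t_k = (2k + 1)/(2m). The function names `haar_matrix` and `haar_approx_error`, the interval [0, 1), and the test function t² are illustrative assumptions, not the paper's notation.

```python
import numpy as np

def haar_matrix(m):
    """Return (H, t) with H[i, k] = h_i(t_k) for the first m Haar functions.

    Assumptions: m is a power of two, the domain is [0, 1), the Haar
    functions are unnormalised (values in {-1, 0, 1}), and the collocation
    points are the midpoints t_k = (2k + 1) / (2m).
    """
    if m < 1 or m & (m - 1):
        raise ValueError("m must be a power of two")
    t = (2 * np.arange(m) + 1) / (2 * m)
    H = np.zeros((m, m))
    H[0] = 1.0                                   # scaling function h_0
    i, j = 1, 0
    while 2**j < m:                              # dilation level j
        for k in range(2**j):                    # translation k
            lo, mid, hi = k / 2**j, (k + 0.5) / 2**j, (k + 1) / 2**j
            plus = (t >= lo) & (t < mid)         # +1 on the first half
            minus = (t >= mid) & (t < hi)        # -1 on the second half
            H[i] = plus.astype(float) - minus.astype(float)
            i += 1
        j += 1
    return H, t

def haar_approx_error(f, m, n_fine=1024):
    """Max error of the m-term Haar collocation approximation of f on [0, 1)."""
    H, t = haar_matrix(m)
    c = np.linalg.solve(H.T, f(t))               # coefficients with c @ H = f(t)
    s = (np.arange(n_fine) + 0.5) / n_fine       # fine evaluation grid
    approx = (c @ H)[np.floor(s * m).astype(int)]  # piecewise-constant values
    return np.max(np.abs(f(s) - approx))

# Error of approximating t**2 at three resolutions (hypothetical test function).
errors = {m: haar_approx_error(lambda t: t**2, m) for m in (8, 16, 32)}
```

Because a truncated Haar series is piecewise constant on m subintervals, the error for a smooth function shrinks as m grows, which is the resolution effect reported in the numerical examples.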

3. Haar Wavelet Operational Matrices

Step 1. Let be a matrix of C, or equivalently .

Step 2. Compute , i = 1,2, …, m according to (11) using the column as the coefficient vector.

Step 3. For i = 1,2, …, m, compute .

Step 4. Form a big matrix by concatenating all vectors from Step 3; that is, .

Step 5. For each row k of matrix S, compute Ni,j according to (11) using the row Sk as the coefficient vector.

Step 6. Form the matrix as follows:

Step 7. End.
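The steps above build a product operational matrix for two-dimensional Haar functions. As a simpler illustration of the same idea, the sketch below treats the one-dimensional case only: for a series c^T h(t), it forms a matrix M such that (c^T h(t))(d^T h(t)) = (d^T M) h(t) at every collocation point. This is a simplified analogue under stated assumptions, not the authors' Steps 1 to 6; the names `haar_matrix` and `product_matrix` and the random coefficient vectors are hypothetical.

```python
import numpy as np

def haar_matrix(m):
    """Unnormalised Haar matrix on [0, 1) at midpoint collocation points.

    Built with the standard Kronecker recursion
    H_1 = [1],  H_2n = [[H_n kron [1, 1]], [I_n kron [1, -1]]].
    m is assumed to be a power of two.
    """
    if m < 1 or m & (m - 1):
        raise ValueError("m must be a power of two")
    H = np.array([[1.0]])
    while H.shape[0] < m:
        n = H.shape[0]
        top = np.kron(H, [1.0, 1.0])             # coarser functions, refined grid
        bottom = np.kron(np.eye(n), [1.0, -1.0])  # finest-level wavelets
        H = np.vstack([top, bottom])
    return H

def product_matrix(c, H):
    """Return M with (c @ h(t)) * (d @ h(t)) == (d @ M) @ h(t) on the grid."""
    values = c @ H                      # samples of c^T h(t) at collocation points
    return H @ np.diag(values) @ np.linalg.inv(H)

m = 8
H = haar_matrix(m)
rng = np.random.default_rng(0)          # fixed seed, arbitrary coefficients
c = rng.standard_normal(m)
d = rng.standard_normal(m)
M = product_matrix(c, H)
e = d @ M                               # Haar coefficients of the product
```

The identity holds exactly here because the product of two piecewise-constant functions on the same grid is again piecewise constant, so multiplying series reduces to a matrix product on the coefficient vectors.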

4. Problem Statement

5. The Successive Haar Wavelet Collocation Method

The following section describes the successive Haar wavelet collocation method (SHWCM) used for obtaining the two-dimensional numerical solution of the HJB equation. In every step of this algorithm, an approximate solution of the GHJB equation (25) is computed; the quantities ∂V(i)/∂x, V(i), and u(i) are all approximately expressed in terms of Haar wavelets. As i → ∞, V(i) and u(i) approach the optimal solutions V* and u*, respectively.
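For orientation, the successive approximation can be written in the form commonly used for the method of Beard et al. [8]. The symbols below are assumptions: dynamics ẋ = f(x) + g(x)u and cost ∫ (l(x) + uᵀRu) dt. The paper's own equations may use a different scaling of the cost, in which case the constant factor in the control update changes accordingly.

```latex
% GHJB equation for the current stabilizing control u^{(i)} (linear in V^{(i)}):
\left(\frac{\partial V^{(i)}}{\partial x}\right)^{T}
  \bigl( f(x) + g(x)\,u^{(i)}(x) \bigr)
  + l(x) + u^{(i)}(x)^{T} R\, u^{(i)}(x) = 0,
\qquad V^{(i)}(0) = 0 .

% Control update built from the solution of the GHJB equation:
u^{(i+1)}(x) = -\tfrac{1}{2}\, R^{-1} g(x)^{T}\,
  \frac{\partial V^{(i)}}{\partial x} .
```

Each GHJB equation is linear in the unknown V(i), which is why every iteration requires only the solution of a linear system once V(i) is expanded in a finite basis.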

Using the solution of the GHJB equation (29), a feedback control law u(1) is constructed from (30), which improves on the performance of u(0). The solution of the Hamilton-Jacobi-Bellman equation is uniformly approximated by repeating the above process.
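For a linear system with quadratic cost, each GHJB step reduces to a Lyapunov equation and the successive approximation becomes the classical Kleinman iteration, which makes the improvement step easy to check without wavelets. The sketch below is illustrative only: the system (a double integrator with state cost x1² and control cost u²) is an assumption and is not taken from the paper, and the helper names `lyapunov` and `successive_approximation` are hypothetical. The initial gain corresponds to the stabilizing control u(0)(x) = −x1 − x2.

```python
import numpy as np

def lyapunov(Ac, W):
    """Solve Ac^T P + P Ac = -W for P using the row-major vectorised form."""
    n = Ac.shape[0]
    I = np.eye(n)
    M = np.kron(Ac.T, I) + np.kron(I, Ac.T)
    return np.linalg.solve(M, -W.reshape(-1)).reshape(n, n)

def successive_approximation(A, B, Q, R, K0, tol=1e-10, max_iter=50):
    """Iterate policy evaluation (Lyapunov solve) and policy improvement.

    K0 must be stabilizing, i.e. A - B @ K0 has eigenvalues with negative
    real part. Returns the last value matrix P, the last gain K, and the
    list of all gains visited.
    """
    K = K0
    history = [K0]
    for _ in range(max_iter):
        Ac = A - B @ K                          # closed loop under u = -K x
        P = lyapunov(Ac, Q + K.T @ R @ K)       # linear "GHJB" step
        K_new = np.linalg.solve(R, B.T @ P)     # improved feedback gain
        history.append(K_new)
        converged = np.max(np.abs(K_new - K)) < tol
        K = K_new
        if converged:
            break
    return P, K, history

# Assumed test system (not from the paper): double integrator.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
Q = np.diag([1.0, 0.0])
R = np.array([[1.0]])
K0 = np.array([[1.0, 1.0]])                     # u0(x) = -x1 - x2

P, K, history = successive_approximation(A, B, Q, R, K0)
# Residual of the algebraic Riccati equation at the final iterate.
riccati_residual = A.T @ P + P @ A - P @ B @ np.linalg.solve(R, B.T @ P) + Q
```

For this assumed system the gains converge to [1, √2], and the Riccati residual at the final iterate is close to zero, so the fixed point of the successive approximation satisfies the optimality equation for the linear problem.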

6. Numerical Examples

To show the efficiency of the proposed method, we applied our method to a linear quadratic optimal control problem and two nonlinear quadratic optimal control problems.

Example 1. Consider the following linear quadratic regulator (LQR):

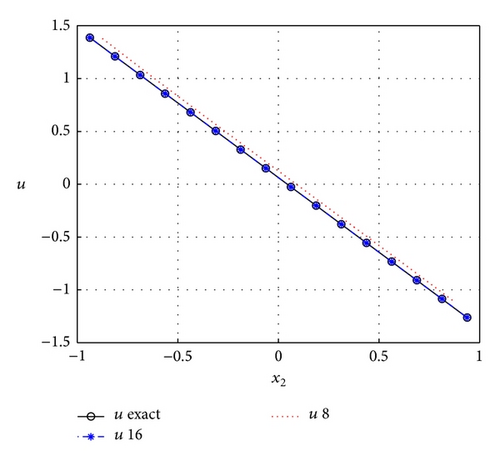

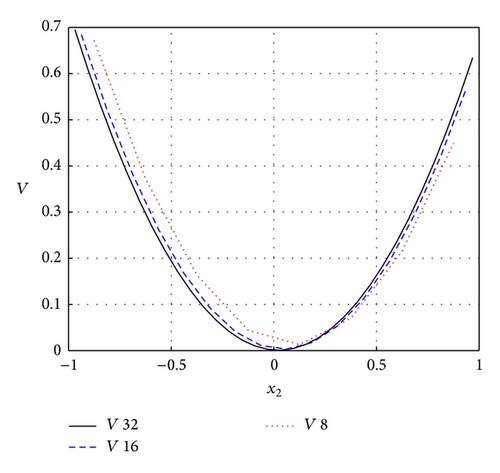

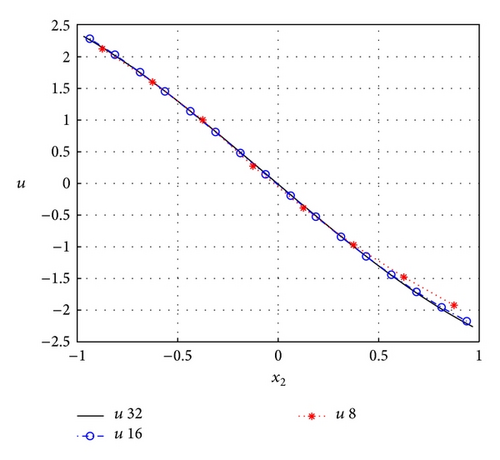

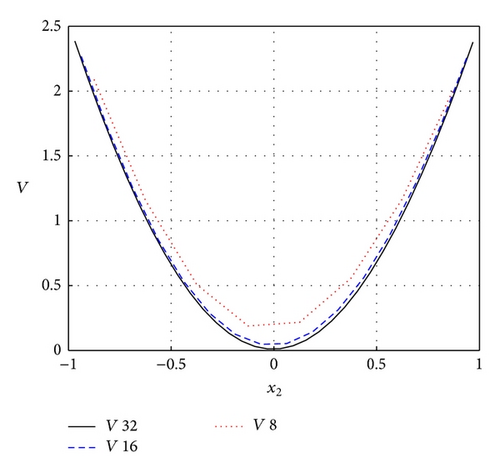

To solve this problem we take the initial stabilizing control u(0)(x) = −x1 − x2. Tables 1 and 2 show sample iteration results for u(i) and V(i), respectively, when m = 8 and x1 = −1/8. The iteration is terminated when the difference between two successive controls is less than ϵ = 0.001. Subsequently, in order to display two-dimensional plots, we fix x1 at x1[m/2] = −τ/m and let x2 ∈ [−1,1). Figure 1 shows that, for this particular LQR problem, m = 16 is enough to approximate the exact optimal feedback control; however, approximating the exact cost function requires a higher value of m, as shown in Figure 2.

Table 1: Iteration results for the control u(i) (m = 8, x1 = −1/8).

| x2 | u(0) | u(1) | u(2) | u(3) | u(4) | u (exact) |
|---|---|---|---|---|---|---|
| −7/8 | 1.0000 | 1.4463 | 1.3772 | 1.3786 | 1.3793 | 1.3624 |
| −5/8 | 0.7500 | 1.0636 | 1.0114 | 1.0130 | 1.0136 | 1.0089 |
| −3/8 | 0.5000 | 0.68889 | 0.6548 | 0.6548 | 0.6550 | 0.6553 |
| −1/8 | 0.2500 | 0.3135 | 0.3027 | 0.3017 | 0.3015 | 0.3018 |
| 1/8 | 0 | −0.0615 | −0.0515 | −0.0519 | −0.0520 | −0.0518 |
| 3/8 | −0.2500 | −0.4397 | −0.4080 | −0.4053 | −0.4049 | −0.4053 |
| 5/8 | −0.5000 | −0.8137 | −0.7584 | −0.7571 | −0.7572 | −0.7589 |
| 7/8 | −0.7500 | −1.1880 | −1.1123 | −1.1130 | −1.1135 | −1.1124 |

Table 2: Iteration results for the cost V(i) (m = 8, x1 = −1/8).

| x2 | V(0) | V(1) | V(2) | V(3) | V (exact) |
|---|---|---|---|---|---|
| −7/8 | 0.7051 | 0.6709 | 0.6712 | 0.6714 | 0.6618 |
| −5/8 | 0.3914 | 0.3723 | 0.3722 | 0.3723 | 0.3654 |
| −3/8 | 0.1723 | 0.1640 | 0.1637 | 0.1637 | 0.1574 |
| −1/8 | 0.0470 | 0.0444 | 0.0442 | 0.0441 | 0.0377 |
| 1/8 | 0.0155 | 0.0130 | 0.0130 | 0.0130 | 0.0065 |
| 3/8 | 0.0781 | 0.0704 | 0.0701 | 0.0701 | 0.0636 |
| 5/8 | 0.2348 | 0.2162 | 0.2154 | 0.2153 | 0.2091 |
| 7/8 | 0.4850 | 0.4500 | 0.4492 | 0.4492 | 0.4431 |
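The tabulated values can be compared with the exact columns directly. The short Python sketch below does this arithmetic for the last computed iterates, u(4) and V(3), using only numbers copied from Tables 1 and 2; the helper name `abs_errors` is hypothetical.

```python
# Last iterates and exact values at x2 = -7/8, -5/8, ..., 7/8 (m = 8, x1 = -1/8).
u4      = [1.3793, 1.0136, 0.6550, 0.3015, -0.0520, -0.4049, -0.7572, -1.1135]
u_exact = [1.3624, 1.0089, 0.6553, 0.3018, -0.0518, -0.4053, -0.7589, -1.1124]
v3      = [0.6714, 0.3723, 0.1637, 0.0441, 0.0130, 0.0701, 0.2153, 0.4492]
v_exact = [0.6618, 0.3654, 0.1574, 0.0377, 0.0065, 0.0636, 0.2091, 0.4431]

def abs_errors(approx, exact):
    """Pointwise absolute errors between two equally long sequences."""
    return [abs(a - e) for a, e in zip(approx, exact)]

u_err = abs_errors(u4, u_exact)
v_err = abs_errors(v3, v_exact)

# Summary figures: worst case for both, and the best case for the cost.
max_u_err = max(u_err)
max_v_err = max(v_err)
min_v_err = min(v_err)
```

At this resolution the control error is largest at x2 = −7/8 and is below 0.002 at the six points from x2 = −3/8 onwards, whereas the cost error stays at roughly 0.006 or more at every point. This is consistent with the observation that the cost function needs a higher resolution than the control.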

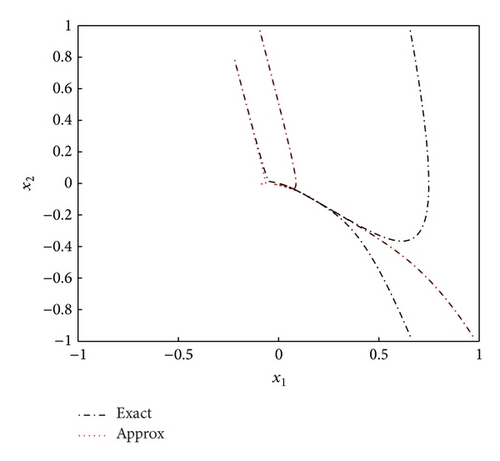

Example 2. Consider the following nonlinear optimal control problem [15]:

Example 3. Consider the following optimal control problem [8]:

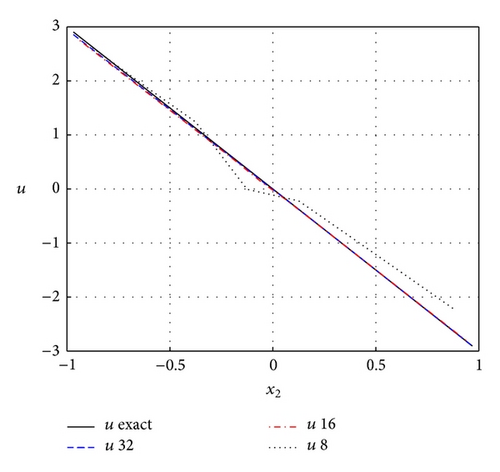

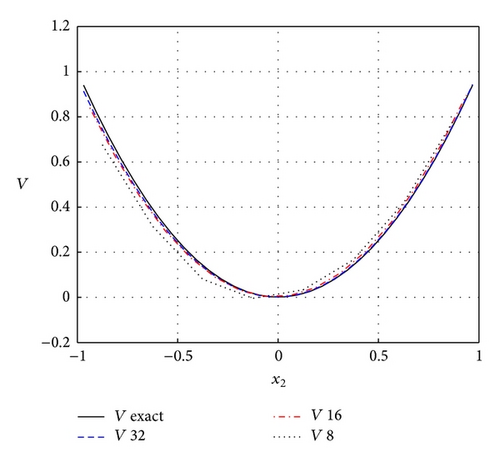

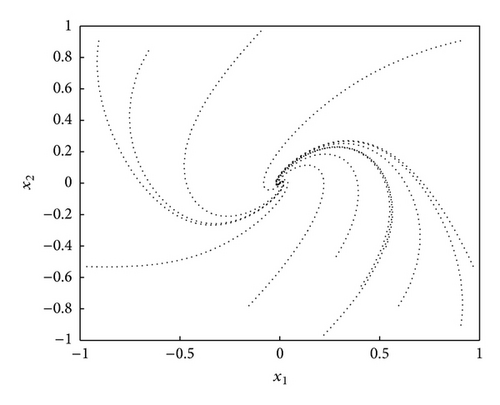

The initial stabilizing control u(0)(x) = 0.4142x1 − 1.3522x2 can be obtained using the feedback linearization method outlined in [20]. The optimal feedback control and cost function obtained using SHWCM for resolutions m = 8, 16, and 32 are illustrated in Figures 6 and 7, respectively. We expect that increasing the Haar wavelet resolution further will allow the SHWCM to yield more accurate results. Figure 8 shows a simulation of the system trajectories.

7. Conclusion

In this paper we have proposed a new numerical method for solving the Hamilton-Jacobi-Bellman equation, which appears in the formulation of optimal control problems. Our approach combines the successive Generalized Hamilton-Jacobi-Bellman equation with Haar wavelet operational matrix methods. The proposed approach is simple and stable and has been tested on linear and nonlinear optimal control problems in a two-dimensional state space. In general, with our method the approximate solution for the optimal feedback control requires a lower resolution than the approximate solution for the cost function. In both cases, however, the numerical results indicate that more accurate results can be obtained by increasing the resolution of the Haar wavelet.

Acknowledgments

The authors are very grateful to the referees for their valuable comments and suggestions, which greatly improved the presentation of this paper. This research has been funded by University of Malaya, under Grant No. RG208-11AFR.