Achieving Synchronization in Arrays of Coupled Differential Systems with Time-Varying Couplings

Abstract

We study complete synchronization of complex dynamical networks described by linearly coupled ordinary differential equation systems (LCODEs). Here, the coupling is time-varying in both network structure and reaction dynamics. Inspired by our previous papers (Lu et al. (2007-2008)), we introduce the extended Hajnal diameter and use it to measure synchronization in a general differential system. We then find that the Hajnal diameter of the linear system induced by the time-varying coupling matrix and the largest Lyapunov exponent of the synchronized system play key roles in the synchronization analysis of LCODEs with identity inner coupling matrix. As an application, we obtain a general sufficient condition guaranteeing that a directed time-varying graph reaches consensus. An example with numerical simulation is provided to show the effectiveness of the theoretical results.

1. Introduction

Complex networks have been widely used in the theoretical analysis of complex systems, such as the Internet, the World Wide Web, communication networks, and social networks. A complex dynamical network is a large set of interconnected nodes, where each node possesses a (nonlinear) dynamical system and the interaction between nodes is described as diffusion. Among such networks, linearly coupled ordinary differential equation systems (LCODEs) form a large class of dynamical systems with continuous time and state.

For decades, a large number of papers have focused on the dynamical behaviors of coupled systems [3–5], especially their synchronization properties. The word “synchronization” comes from Greek; in this paper, the concept of local complete synchronization (synchronization for simplicity) is considered (see Definition 3). For more details, we refer the readers to [6] and the references therein.

Synchronization of coupled systems has attracted a great deal of attention [7–9]. For instance, in [7], the authors considered the synchronization of a network of linearly coupled and not necessarily identical oscillators; in [8], the authors studied global exponential synchronization of linearly coupled neural networks with time-varying delay and impulsive disturbances. Synchronization of networks with time-varying topologies was studied in [10–16]. For example, in [10], the authors studied the global stability of total synchronization in networks with different topologies; in [16], the authors showed that the network synchronizes under a time-varying topology if the time average is achieved sufficiently fast.

Synchronization of LCODEs has also been addressed in [17–19]. In [17], mathematical analysis was presented on the synchronization phenomena of LCODEs with a single coupling delay; in [18], based on geometrical analysis of the synchronization manifold, the authors proposed a novel approach to investigate the stability of the synchronization manifold of coupled oscillators; in [19], the authors proposed new conditions for synchronization of networks of linearly coupled dynamical systems with non-Lipschitz right-hand sides. The great majority of the research mentioned above focused on static networks, whose connectivity and coupling strengths do not change in time. In many applications, however, the interaction between individuals may change dynamically. For example, communication links between agents may be unreliable due to disturbances and/or subject to communication range limitations.

In this paper, we consider synchronization of LCODEs with time-varying coupling. Similar to [17–19], time-varying coupling will be used to represent the interaction between individuals. The authors of [6, 13] showed that the Lyapunov exponents of the synchronized system and the Hajnal diameter of the variational equation play key roles in the synchronization analysis of discrete-time dynamical networks. In this paper, we extend these results to continuous-time dynamical network systems. Different from [11, 16], where synchronization of fast-switching systems was discussed, we focus on a framework for synchronization analysis under general temporal variation of network topologies. Additional contributions of this paper are that we explicitly show that (a) the largest projection Lyapunov exponent of a system is equal to the logarithm of the Hajnal diameter, and (b) the largest Lyapunov exponent of the transverse space is equal to the largest projection Lyapunov exponent under some proper conditions.

The paper is organized as follows: in Section 2, some necessary definitions, lemmas, and hypotheses are given; in Section 3, synchronization of generalized coupled differential systems is discussed; in Section 4, criteria for the synchronization of LCODEs are obtained; in Section 5, we obtain a sufficient condition ensuring that a directed time-varying graph reaches consensus; in Section 6, an example with numerical simulation is provided to show the effectiveness of the theoretical results; the paper is concluded in Section 7.

Notations. $e_k$ denotes the n-dimensional vector with all components zero except the kth component, which is 1; $1_n$ denotes the n-dimensional column vector with each component 1; for a set U in some Euclidean space, $\overline{U}$ denotes the closure of U, $U^c$ denotes the complement of U, and $A \setminus B = A \cap B^c$; for $u \in \mathbb{R}^n$, ∥u∥ denotes some vector norm, and for any matrix $A = (a_{ij}) \in \mathbb{R}^{n \times m}$, ∥A∥ denotes the matrix norm induced by the vector norm; for a matrix $A = (a_{ij}) \in \mathbb{R}^{n \times m}$, |A| denotes the matrix $|A| = (|a_{ij}|)$; for a real matrix A, $A^\top$ denotes its transpose, and for a complex matrix B, $B^*$ denotes its conjugate transpose; for a set W in some Euclidean space, $\mathcal{O}(W, \delta) = \{x : \operatorname{dist}(x, W) < \delta\}$, where $\operatorname{dist}(x, W) = \inf_{y \in W}\|x - y\|$; #J denotes the cardinality of the set J; ⌊z⌋ denotes the floor function, that is, the largest integer not greater than the real number z; ⊗ denotes the Kronecker product; for a set W in some Euclidean space, $W^m$ denotes the Cartesian product W × ⋯ × W (m times).

2. Preliminaries

For the functions $f_i : \mathbb{R}^{nm} \times \mathbb{R}^+ \to \mathbb{R}^n$, i = 1, 2, …, m, we make the following assumption.

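Assumption 1. (a) There exists a function $f : \mathbb{R}^n \to \mathbb{R}^n$ such that $f_i(s, s, \dots, s, t) = f(s)$ for all i = 1, 2, …, m, $s \in \mathbb{R}^n$, and t ≥ 0; (b) for any t ≥ 0, $f_i(\cdot, t)$ is $C^1$-smooth for all i = 1, 2, …, m, and $DF_t(x)$ denotes the Jacobian matrix of $F_t(x) = [f_1(x, t)^\top, \dots, f_m(x, t)^\top]^\top$ with respect to $x \in \mathbb{R}^{nm}$; (c) there exists a locally bounded function ϕ(x) such that $\|DF_t(x)\| \le \phi(x)$ for all $(x, t) \in \mathbb{R}^{nm} \times \mathbb{R}^+$; (d) $DF_t(x)$ is uniformly locally Lipschitz continuous: there exists a locally bounded function K(x, y) such that $\|DF_t(x) - DF_t(y)\| \le K(x, y)\,\|x - y\|$ for all $x, y \in \mathbb{R}^{nm}$ and t ≥ 0.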

We say that a function $g : \mathbb{R}^q \to \mathbb{R}^p$ is locally bounded if for any compact set $K \subset \mathbb{R}^q$, there exists M > 0 such that ∥g(y)∥ ≤ M holds for all y ∈ K.
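Assumption 1(a) has a direct consequence on the synchronization manifold: differentiating $f_i(s, \dots, s, t) = f(s)$ with respect to s gives $\sum_{j=1}^{m} \partial f_i/\partial x_j(s, \dots, s, t) = Df(s)$, that is, $DF_t(1_m \otimes s)(1_m \otimes I_n) = 1_m \otimes Df(s)$. The Python sketch below checks this identity numerically. The intrinsic field f (a van der Pol-type field), the coupling weights, and the nonlinear, time-varying coupling term are hypothetical choices made only for illustration, and the Jacobians are approximated by central finite differences.

```python
import numpy as np

n, m = 2, 3

def f(s):
    """Hypothetical intrinsic dynamics f: R^2 -> R^2 (van der Pol-type field)."""
    return np.array([s[1], (1.0 - s[0] ** 2) * s[1] - s[0]])

# Hypothetical nonnegative coupling weights a_ij for m = 3 nodes.
weights = np.array([[0.0, 1.0, 0.5],
                    [0.2, 0.0, 1.0],
                    [1.0, 0.3, 0.0]])

def F(x, t):
    """Stacked right-hand side [f_1; ...; f_m] with nonlinear, time-varying coupling.

    f_i(x, t) = f(x_i) + c(t) * sum_j a_ij (tanh(x_j) - tanh(x_i)),
    so f_i(s, ..., s, t) = f(s) and Assumption 1(a) holds.
    """
    X = x.reshape(m, n)
    c = 1.0 + 0.5 * np.sin(t)
    out = np.empty_like(X)
    for i in range(m):
        coupling = sum(weights[i, j] * (np.tanh(X[j]) - np.tanh(X[i])) for j in range(m))
        out[i] = f(X[i]) + c * coupling
    return out.ravel()

def jacobian(fun, x, eps=1e-6):
    """Central finite-difference Jacobian of fun at x."""
    fx = fun(x)
    J = np.empty((fx.size, x.size))
    for k in range(x.size):
        e = np.zeros_like(x)
        e[k] = eps
        J[:, k] = (fun(x + e) - fun(x - e)) / (2 * eps)
    return J

s = np.array([0.7, -0.4])
t = 1.3
DF = jacobian(lambda x: F(x, t), np.tile(s, m))    # DF_t(1_m ⊗ s), shape (nm, nm)
lhs = DF @ np.kron(np.ones((m, 1)), np.eye(n))      # DF_t(1_m ⊗ s)(1_m ⊗ I_n)
rhs = np.kron(np.ones((m, 1)), jacobian(f, s))      # 1_m ⊗ Df(s)
print(np.max(np.abs(lhs - rhs)))                    # small: finite-difference error only
```

The coupling terms cancel on the diagonal because each $\partial f_i/\partial x_j$ contribution from $\tanh(x_j)$ is offset by the $-\tanh(x_i)$ term when all states are equal.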

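Since f(·) is $C^1$-smooth, the solution s(t) of the intrinsic (synchronized state) system (5), $\dot{s}(t) = f(s(t))$, with initial condition $s_0$ can be written as $s(t) = \vartheta(t)s_0$, where ϑ(t) is the corresponding continuous semiflow. For ϑ(t), we make the following assumption.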

Assumption 2. The system (5) has an asymptotically stable attractor; that is, there exists a compact set $A \subset \mathbb{R}^n$ such that (a) A is invariant under the system (5), that is, ϑ(t)A ⊂ A for all t ≥ 0; (b) there exists an open bounded neighborhood U of A such that $\bigcap_{t \ge 0} \vartheta(t)\overline{U} = A$; (c) A is topologically transitive, that is, there exists $s_0 \in A$ such that $\omega(s_0)$, the ω-limit set of the trajectory $\vartheta(t)s_0$, is equal to A [3].

Definition 3. Local complete synchronization (synchronization for simplicity) is defined in the sense that the set

Next, we give some lemmas that will be used later; their proofs are given in the Appendix.

Lemma 4. Under Assumption 1, one has

Lemma 5. Under Assumptions 1 and 2, there exists a compact neighborhood W of A such that for all t ≥ t′ ≥ 0 and ⋂t≥0 ϑ(t)W = A.

From [20], we obtain the existence, uniqueness, and continuous dependence on initial conditions of the solutions of (2) and (9).

Lemma 6. Under Assumption 1, each of the differential equations (2) and (9) has a unique solution, which depends continuously on the initial condition.

Thus, the solution of the linear system (9) can be written in matrix form.

Definition 7. Solution matrix U(t, t0, s0) of the system (9) is defined as follows. Let U(t, t0, s0) = [u1(t, t0, s0), …, unm(t, t0, s0)], where uk(t, t0, s0) denotes the kth column and is the solution of the following Cauchy problem:

Definition 8. For the time-varying system denoted by Dℱ, we define the Hajnal diameter of the variational system (9) as follows:

Lemma 9 (Gronwall-Beesack's inequality). If a function v(t) satisfies the following condition:

Based on Assumption 1, for the solution matrix U, we have the following lemma.

Lemma 10. Under Assumption 1, one has the following:

- (1)

, where denotes the solution matrix of the following Cauchy problem:

- (2)

for any given t ≥ 0 and the compact set W given in Lemma 5, U(t + t0, t0, s0) is bounded for all t0 ≥ 0 and s0 ∈ W and equicontinuous with respect to s0 ∈ W.

Definition 11. We define the following linear differential system as the projection variational system of (9) along the directions P2:

Definition 12. For the time-varying variational system (9), we define its Lyapunov exponent as follows:

Then, we have the following lemma.

Lemma 13. λP(Dℱ, s0) = log diam(Dℱ, s0).

Remark 14. From Lemma 13, we can see that the largest projection Lyapunov exponent is independent of the choice of matrix P.
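Definition 8 and Lemma 13 are built on the Hajnal diameter of a matrix, whose formula is not reproduced in this text. The Python sketch below assumes the usual block form: a matrix U with m·n rows is split into m block rows $U_i$ (each n × N), and $\operatorname{diam}(U) = \max_{i,j}\|U_i - U_j\|$, here with the induced ∞-norm. The diameter vanishes exactly when all block rows coincide, that is, when U maps every vector into the synchronization space $\{1_m \otimes u\}$. The function name `hajnal_diameter` is hypothetical.

```python
import itertools

import numpy as np

def hajnal_diameter(U, n, ord=np.inf):
    """Block Hajnal diameter: max over block-row pairs of ||U_i - U_j||.

    U has m*n rows; U_i is the i-th group of n consecutive rows (an n x N matrix).
    ord=np.inf uses the induced infinity-norm (maximum absolute row sum).
    """
    U = np.asarray(U, dtype=float)
    if U.shape[0] % n != 0:
        raise ValueError("the number of rows must be a multiple of n")
    blocks = U.reshape(U.shape[0] // n, n, U.shape[1])
    pairs = itertools.combinations(blocks, 2)
    return max((np.linalg.norm(Bi - Bj, ord) for Bi, Bj in pairs), default=0.0)

# Scalar nodes (n = 1): rows [1, 0] and [0.5, 0.5] differ by [0.5, -0.5].
print(hajnal_diameter([[1.0, 0.0], [0.5, 0.5]], n=1))
# Identical 2-row blocks: every image vector lies in the synchronization space.
print(hajnal_diameter(np.tile(np.arange(6.0).reshape(2, 3), (3, 1)), n=2))
```

A finite-time estimate of the quantity in Definition 8 would then evaluate this function on solution matrices U(t + t0, t0, s0) and take the 1/t-th power.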

Assumption 15. (a) ϱ(t) is a continuous semiflow; (b) DF(s, ω) is a continuous map for all $(s, \omega) \in \mathbb{R}^n \times \Omega$.

- (1)

, where ck, k = 1, 2, …, n, are constants;

- (2)

if $\lim_{t \to \infty} \frac{1}{t}\log|u(t)| = \alpha$ exists and is finite, then χ[1/u(t)] = −α;

- (3)

χ[u(t) + v(t)] ≤ max {χ[u(t)], χ[v(t)]};

- (4)

for a vector-valued or matrix-valued function U(t), we define χ[U(t)] = χ[∥U(t)∥].
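A short worked example of properties (2) and (3), assuming the usual definition $\chi[u(t)] = \limsup_{t \to \infty} \frac{1}{t}\log|u(t)|$ of the Lyapunov characteristic exponent (the definition itself is not reproduced in this text), with hypothetical functions u and v:

```latex
\begin{aligned}
u(t) &= e^{2t}\,(2 + \sin t), \qquad v(t) = e^{-t},\\
\chi[u(t)] &= \limsup_{t \to \infty}\Big(2 + \tfrac{1}{t}\log(2 + \sin t)\Big) = 2,
  \qquad \lim_{t \to \infty}\tfrac{1}{t}\log|u(t)| = 2 \ \text{exists},\\
\chi\big[1/u(t)\big] &= \limsup_{t \to \infty}\Big(-2 - \tfrac{1}{t}\log(2 + \sin t)\Big) = -2
  \quad \text{(property (2))},\\
\chi[u(t) + v(t)] &= 2 \le \max\{\chi[u(t)], \chi[v(t)]\} = \max\{2, -1\} = 2
  \quad \text{(property (3))}.
\end{aligned}
```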

- (1)

L(t) ∈ $C^1[0, +\infty)$;

- (2)

L(t), $\dot{L}(t)$, and $L^{-1}(t)$ are bounded for all t ≥ 0.

Lemma 16. Suppose that Assumptions 1, 2, and 15 are satisfied. Let $\{\sigma_1, \sigma_2, \dots, \sigma_n, \sigma_{n+1}, \dots, \sigma_{nm}\}$ be the Lyapunov exponents of the variational system (26), where $\{\sigma_1, \dots, \sigma_n\}$ correspond to the synchronization space and the remaining ones correspond to the transverse space. Let $\lambda_T(D\mathcal{F}, s_0, \omega_0) = \max_{i \ge n+1}\sigma_i$ and $\lambda_S(D\mathcal{F}, s_0, \omega_0) = \max_{1 \le i \le n}\sigma_i$. If (a) the linear system (17) is a regular system, (b) ∥DF(s(t), ϱ(t)ω0)∥ ≤ M for all t ≥ 0, and (c) λP(Dℱ, s0, ω0) ≠ λS(Dℱ, s0, ω0), then λT(Dℱ, s0, ω0) = λP(Dℱ, s0, ω0).

3. General Synchronization Analysis

In this section we provide a methodology based on the previous theoretical analysis to judge whether a general differential system can be synchronized or not.

Theorem 17. Suppose that $W \subset \mathbb{R}^n$ is the compact set given in Lemma 5, and Assumptions 1 and 2 are satisfied. If

Proof. The main techniques of the proof come from [3, 6], with some modifications. Let ϑ(t) be the semiflow of the uncoupled system (5). By condition (32), there exist d satisfying and T1 ≥ 0 such that , and . For each s0 ∈ W, there must exist t(s0) ≥ T1 such that for all t0 ≥ 0. By the equicontinuity of U(t0 + t(s0), t0, s0), there exists δ > 0 such that for any , for all t0 ≥ 0. By the compactness of W, there exists a finite set of positive numbers 𝒯 = {t1, t2, …, tv} with tj ≥ T1 for all j = 1, 2, …, v such that for any s0 ∈ W, there exists tj ∈ 𝒯 such that diam(U(t0 + tj, t0, s0)) < 1/3 for all t0 ≥ 0. Let x(t) = {x1(t), …, xm(t)} be the collective state, that is, the solution of the coupled system (2) with initial condition , i = 1, 2, …, m, and let s(t) be the solution of the synchronized state equation (5) with initial condition . Then, letting Δxi(t) = xi(t) − s(t), we have

We now prove synchronization step by step.

For any x0 ∈ Wα, there exists t′ = t(x0) ∈ 𝒯 such that

Then, reinitializing at time t′ + t0 with the state x(t′ + t0) and repeating the argument above, we obtain $\lim_{t \to \infty}\max_{i,j}\|x_i(t) - x_j(t)\| = 0$; namely, the coupled system (2) is synchronized. Furthermore, the proof shows that the convergence is exponential, with rate $O(\delta^t)$ where , and uniform with respect to t0 ≥ 0 and x0 ∈ Wα. This completes the proof.

Remark 18. According to Assumption 2 (the attractor A is asymptotically stable) and the properties of the compact neighborhood W given in Lemma 5, we can conclude that the quantity

Suppose that the time variation is driven by some metric dynamical system MDS(Ω, ℬ, P, ϱ(t)), and that there exists a metric dynamical system {W × Ω, F, P, π(t)}, where F is the product σ-algebra on W × Ω, P is the probability measure, and π(t)(s0, ω) = (ϑ(t)s0, ϱ(t)ω). Then, from Theorem 17, we have the following.

Corollary 19. Suppose that the conditions in Lemma 16 are satisfied, W × Ω is compact in the topology defined in this MDS, the semiflow π(t) is continuous, and the Jacobian matrix DF(ϑ(t)s0, ϱ(t)ω) is continuous on W × Ω. Let be the Lyapunov exponents of this MDS with multiplicity and correspond to the synchronization space. If

4. Synchronization of LCODEs with Identity Inner Coupling Matrix and Time-Varying Couplings

Assumption 20. (a) $l_{ij}(t) \ge 0$, i ≠ j, are measurable, and $\sum_{j=1}^{m} l_{ij}(t) = 0$ for all i = 1, 2, …, m and t ≥ 0; (b) there exists M1 > 0 such that $|l_{ij}(t)| \le M_1$ for all i, j = 1, 2, …, m.

Similarly, we can define the Hajnal diameter of the following linear system:

By Theorem 17, we have the following theorem.

Theorem 21. Suppose that Assumptions 1, 2, and 20 are satisfied. Let μ be the largest Lyapunov exponent of the synchronized system $\dot{s}(t) = f(s(t))$, that is,

Proof. Consider the variational equation of (41):

For the linear system (42), we first have the following lemma.

Lemma 22 (see [22]). V(t, t0) is a stochastic matrix.
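Lemma 22 can be checked numerically when L(t) is piecewise constant, since V(t, t0) is then a product of matrix exponentials. The sketch below assumes that (42) is $\dot{u}(t) = L(t)u(t)$ (consistent with the proof of Lemma 22 in the Appendix) and that, per Assumption 20, L(t) has nonnegative off-diagonal entries and zero row sums. The random coupling matrices and the helper names are hypothetical.

```python
import numpy as np
from scipy.linalg import expm

rng = np.random.default_rng(0)

def random_coupling(m, density=0.3):
    """Hypothetical L with l_ij >= 0 (i != j) and zero row sums (Assumption 20)."""
    W = rng.random((m, m)) * (rng.random((m, m)) < density)
    np.fill_diagonal(W, 0.0)
    return W - np.diag(W.sum(axis=1))

def transition_matrix(Ls, dt):
    """V(t0 + K*dt, t0) for du/dt = L(t) u, with L(t) = Ls[k] on the k-th interval."""
    V = np.eye(Ls[0].shape[0])
    for L in Ls:
        V = expm(L * dt) @ V      # later intervals act from the left
    return V

V = transition_matrix([random_coupling(6) for _ in range(20)], dt=0.5)
print(V.sum(axis=1))              # row sums, numerically 1
print(V.min())                    # smallest entry, nonnegative up to rounding
```

Each factor $e^{L\,dt}$ is nonnegative because L has nonnegative off-diagonal entries, and it fixes $1_m$ because $L1_m = 0$; products of such matrices inherit both properties.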

From Lemmas 13 and 16, we have the following corollary.

Corollary 23. log diam(ℒ) = λP(ℒ), where λP(ℒ) denotes the largest one of all the projection Lyapunov exponents of system (41). Moreover, if the conditions in Lemma 16 are satisfied, then log diam (ℒ) = λT(ℒ), where λT(ℒ) denotes the largest one of all the Lyapunov exponents corresponding to the transverse space, that is, the space orthogonal to the synchronization space.
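For a constant coupling matrix, Corollary 23 can be illustrated directly: when L is symmetric, the transverse Lyapunov exponents of $\dot{u} = Lu$ are the eigenvalues of L other than 0, so log diam(ℒ) should equal the second largest eigenvalue $\lambda_2$ of L. The sketch below compares $\lambda_2$ with the finite-time estimate $\frac{1}{t}\log\operatorname{diam}(V(t, 0))$, $V(t, 0) = e^{Lt}$, using the row version (n = 1) of the Hajnal diameter. The ring network and t = 15 are hypothetical choices; the estimate carries an O(1/t) bias from the prefactor of $e^{\lambda_2 t}$.

```python
import numpy as np
from scipy.linalg import expm

def row_diameter(V):
    """Hajnal diameter for scalar nodes (n = 1): max l1 distance between rows."""
    diffs = V[:, None, :] - V[None, :, :]
    return np.abs(diffs).sum(axis=2).max()

# Hypothetical constant coupling: undirected ring of m = 5 nodes, zero row sums.
m = 5
A = np.zeros((m, m))
for i in range(m):
    A[i, (i + 1) % m] = A[i, (i - 1) % m] = 1.0
L = A - np.diag(A.sum(axis=1))

t = 15.0
estimate = np.log(row_diameter(expm(L * t))) / t   # (1/t) log diam V(t, 0)
eigs = np.sort(np.linalg.eigvalsh(L))[::-1]        # eigs[0] = 0 >= eigs[1] >= ...
print(estimate, eigs[1])
```

For a time-varying L(t), the same comparison would use the supremum over t0 required by the definition, which is why the constant case is the simplest sanity check.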

If L(t) is periodic, we have the following.

Corollary 24. Suppose that L(t) is periodic, and let ςi, i = 1, 2, …, m, be the Floquet multipliers of the linear system (42). Then, one of the multipliers is ς1 = 1, and .
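The multipliers in Corollary 24 can be computed by integrating the matrix equation $\dot{V} = L(t)V$, V(0) = I, over one period to obtain the monodromy matrix, whose eigenvalues are the Floquet multipliers. Because every row sum of L(t) is zero, $L(t)1_m = 0$ and the monodromy matrix maps $1_m$ to itself, which is why one multiplier equals 1. The two coupling matrices and the 2π-periodic weights below are hypothetical; both weights stay nonnegative, so Assumption 20 holds.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Two hypothetical directed coupling matrices (zero row sums, nonnegative off-diagonals).
L1 = np.array([[0.0, 0.0, 0.0],
               [1.0, -1.0, 0.0],
               [0.0, 0.0, 0.0]])
L2 = np.array([[0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0],
               [0.0, 1.0, -1.0]])
m = L1.shape[0]
period = 2 * np.pi

def L(t):
    """Periodic coupling L(t + period) = L(t); both weights are nonnegative."""
    return (1 + np.sin(t)) * L1 + (1 - np.sin(t)) * L2

def rhs(t, v):
    """Flattened matrix ODE dV/dt = L(t) V."""
    return (L(t) @ v.reshape(m, m)).ravel()

sol = solve_ivp(rhs, (0.0, period), np.eye(m).ravel(), rtol=1e-10, atol=1e-12)
monodromy = sol.y[:, -1].reshape(m, m)       # V(period, 0)
multipliers = np.linalg.eigvals(monodromy)
print(np.sort(np.abs(multipliers))[::-1])    # largest modulus is 1
```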

If L(t) = L(ϱ(t)ω) is driven by some MDS(Ω, ℬ, P, ϱ(t)), from Corollaries 19 and 23, we have the following corollary.

Corollary 25. Suppose that L(ω) is continuous on Ω and the conditions in Lemma 16 are satisfied. Let ςi, i = 1, 2, …, m, be the Lyapunov exponents of the linear system (42), with ς1 = 0, and let $\varsigma = \max_{2 \le i \le m}\varsigma_i$. If μ + ς < 0, then the coupled system (41) is synchronized.

Let ℐ be the set of all compact time intervals in [0, +∞), and let 𝒢 be the set of all graphs with vertex set 𝒩 = {1, 2, …, m}.

Definition 26. We say that the LCODEs (41) has a δ-spanning tree across the time interval I if the corresponding graph G(I, δ) has a spanning tree.

Theorem 27. Suppose that Assumption 20 is satisfied. Then diam(ℒ) < 1 if and only if there exist δ > 0 and T > 0 such that the LCODEs (41) has a δ-spanning tree across any T-length time interval.
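Theorem 27 reduces diam(ℒ) < 1 to a graph condition that is easy to test on sampled coupling data. The definition of G(I, δ) is not reproduced in this text, so the sketch assumes the usual construction: the edge j → i is present when $\int_I l_{ij}(t)\,dt > \delta$. Under that assumption, it builds G(I, δ) from samples of L(t) (rectangle rule) and searches for a root that reaches every vertex. The helper names and the two-phase switching example are hypothetical.

```python
import numpy as np

def delta_graph(L_samples, dt, delta):
    """Adjacency of G(I, delta) from samples of L(t) on I taken with a uniform step dt.

    ASSUMED edge rule: j -> i iff the integral of l_ij(t) over I exceeds delta.
    adj[i, j] == True encodes the edge j -> i.
    """
    integral = np.sum(L_samples, axis=0) * dt      # rectangle-rule integral over I
    adj = integral > delta
    np.fill_diagonal(adj, False)
    return adj

def has_spanning_tree(adj):
    """True if some root vertex reaches every vertex along directed edges j -> i."""
    m = adj.shape[0]
    for root in range(m):
        seen, stack = {root}, [root]
        while stack:
            j = stack.pop()
            for i in map(int, np.flatnonzero(adj[:, j])):
                if i not in seen:
                    seen.add(i)
                    stack.append(i)
        if len(seen) == m:
            return True
    return False

# Hypothetical switching: agent 1 listens to agent 0 on [0, 1), agent 2 to agent 1 on [1, 2).
La = np.array([[0.0, 0.0, 0.0], [1.0, -1.0, 0.0], [0.0, 0.0, 0.0]])
Lb = np.array([[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 1.0, -1.0]])
dt = 0.01
samples = [La] * 100 + [Lb] * 100
print(has_spanning_tree(delta_graph(samples, dt, delta=0.5)))        # interval [0, 2)
print(has_spanning_tree(delta_graph(samples[:100], dt, delta=0.5)))  # interval [0, 1)
```

Neither phase alone yields a spanning tree, but their union over [0, 2) contains the tree 0 → 1 → 2, which is the kind of joint connectivity the theorem asks for on every T-length interval.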

Remark 28. Unlike [16], we need to assume neither that L(t) has zero column sums nor that the time average is achieved sufficiently fast.

Before proving this theorem, we need the following lemma.

Lemma 29. If the LCODEs (41) has a δ-spanning tree across any T-length time interval, then there exist δ1 > 0 and T1 > 0 such that V(t, t0) is δ1-scrambling across any T1-length time interval.
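Lemma 29 is phrased in terms of δ-scrambling matrices, whose definition is not restated here. The sketch assumes the standard one: a stochastic matrix V is δ-scrambling if every pair of rows i, j has a common column k with both $v_{ik} > \delta$ and $v_{jk} > \delta$; intuitively, any two nodes then draw on a common source with weight greater than δ. The function name is hypothetical.

```python
import numpy as np

def is_delta_scrambling(V, delta):
    """ASSUMED definition: every pair of rows (i, j) shares a column k with
    V[i, k] > delta and V[j, k] > delta."""
    big = (np.asarray(V) > delta).astype(int)
    common = big @ big.T              # common[i, j] = number of shared "large" columns
    return bool((common > 0).all())

print(is_delta_scrambling(np.eye(3), 0.1))                   # rows share no column
print(is_delta_scrambling(np.full((3, 3), 1 / 3), 0.3))      # every entry exceeds 0.3
print(is_delta_scrambling(np.array([[0.5, 0.5, 0.0],
                                    [0.0, 0.5, 0.5],
                                    [0.5, 0.0, 0.5]]), 0.4))
```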

Proof of Theorem 27. Sufficiency. From Lemma 29, we can conclude that there exist δ1 > 0, δ′ > 0, and T1 > 0 such that V(t, t0) is δ1-scrambling across any T1-length time interval and . For any t ≥ t0, let t − t0 = pT1 + T′, where p is an integer and 0 ≤ T′ < T1 and tl = t0 + lT1, 0 ≤ l ≤ p. Then, we have

Necessity. Suppose that for any T ≥ 0 and δ > 0, there exists t0 = t0(T, δ) such that the LCODEs (41) does not have a δ-spanning tree across the time interval [t0, t0 + T]. According to the condition, there exist 1 > d > diam(ℒ), ϵ > 0, and T′ > 0 such that $\operatorname{diam}(V(t + t_0, t_0)) < d^t$ for all t0 ≥ 0 and t ≥ T′ and . Thus, picking T > T′, , t1 = t0(T, δ), and , there exist two vertex sets J1 and J2 such that if i ∈ J1 and j ∉ J1, or i ∈ J2 and j ∉ J2. For each i ∈ J1 and j ∉ J1, we have

5. Consensus Analysis of Multiagent System with Directed Time-Varying Graphs

Definition 30. We say that the differential system (57) reaches consensus if for any $x(t_0) \in \mathbb{R}^m$, $\|x_i(t) - x_j(t)\| \to 0$ as $t \to \infty$ for all $i, j \in \mathcal{N}$.

Let G(I, δ) be defined as before. We say that the digraph G(t) has a δ-spanning tree across the time interval I if G(I, δ) has a spanning tree.

Theorem 31. Suppose Assumption 20 is satisfied. The system (57) reaches consensus if and only if there exist δ > 0 and T > 0 such that the corresponding digraph G(t) has a δ-spanning tree across any T-length time interval.

Proof. Since f ≡ 0, we have μ = 0 in Theorem 21. This completes the proof according to Theorems 27 and 21.
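Theorem 31 can be illustrated with a small simulation. The sketch assumes that (57) is the pure coupling system $\dot{x}(t) = L(t)x(t)$ (the case f ≡ 0 of (41) used in the proof) and uses a hypothetical switching digraph on three agents. Each of the two graphs alone lacks a spanning tree, but every time interval of length 2 contains one unit of each; with the edge j → i present in G(I, δ) when $\int_I l_{ij}(t)\,dt > \delta$ (our assumption), G(t) therefore has a δ-spanning tree for δ < 1 across any 2-length interval. With piecewise-constant L(t), each step is an exact matrix exponential.

```python
import numpy as np
from scipy.linalg import expm

# Hypothetical switching digraph on 3 agents.
La = np.array([[0.0, 0.0, 0.0],
               [1.0, -1.0, 0.0],    # agent 1 listens to agent 0
               [0.0, 0.0, 0.0]])
Lb = np.array([[0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0],
               [0.0, 1.0, -1.0]])   # agent 2 listens to agent 1

Ea, Eb = expm(La), expm(Lb)         # exact flows over one unit of time
x = np.array([0.0, 1.0, 2.0])
spread = []
for k in range(40):                 # alternate La and Lb every unit of time
    x = (Ea if k % 2 == 0 else Eb) @ x
    spread.append(x.max() - x.min())
print(spread[-1])
```

Since agent 0 listens to nobody, all states converge to its initial value 0, and the spread max_i x_i − min_i x_i never increases because each step applies a stochastic matrix.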

6. Numerical Examples

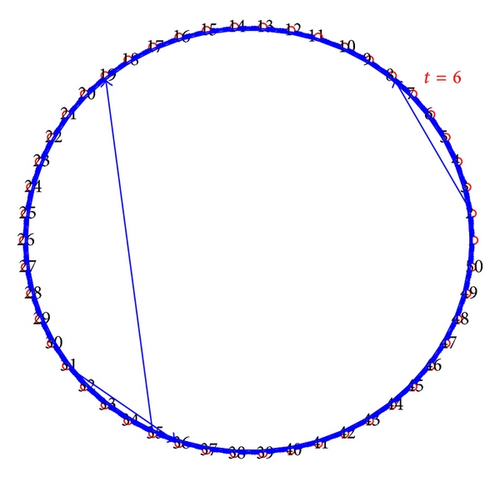

The network with time-varying topology used here is the NW small-world network with time-varying coupling, which was introduced as the blinking model in [11, 27]. The time-varying network model algorithm is as follows. We divide the time axis into intervals of length τ and, in each interval, (a) begin with the nearest-neighbor coupled network consisting of m nodes arranged in a ring, where each node i is adjacent to its 2k nearest neighbor nodes; (b) add a connection between each pair of nodes with probability p, which is usually a number in [0, 0.1]; for more details, we refer the readers to [11]. Figure 2 shows the time-varying structure of shortcut connections in the blinking model with m = 50 and k = 3.
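A minimal sketch of one way to generate the blinking coupling matrices described above. Assumptions (not taken from [11]): each interval draws a fresh, independent set of shortcuts; the ring links are always present; all weights are 1; and the coupling matrix is $L = A - \operatorname{diag}(A1_m)$, so that Assumption 20 holds. The value p = 0.05 is a hypothetical choice within the stated range, and the function names are ours; the generator in [11] may differ in detail.

```python
import numpy as np

def blinking_coupling(m, k, p, rng):
    """One interval of a blinking NW small-world coupling matrix (illustrative sketch).

    (a) ring lattice: node i linked to its k nearest neighbours on each side (2k in total);
    (b) every other pair (i, j) gets a shortcut with probability p.
    Returns L with l_ij = 1 for linked i != j and zero row sums.
    """
    A = np.zeros((m, m))
    for i in range(m):
        for d in range(1, k + 1):
            A[i, (i + d) % m] = A[(i + d) % m, i] = 1.0
    upper = np.triu(rng.random((m, m)) < p, 1)     # one draw per pair i < j
    shortcuts = (upper | upper.T) & (A == 0)
    A[shortcuts] = 1.0
    return A - np.diag(A.sum(axis=1))

def blinking_sequence(m, k, p, n_intervals, seed=0):
    """Independent coupling matrices for consecutive intervals of length tau."""
    rng = np.random.default_rng(seed)
    return [blinking_coupling(m, k, p, rng) for _ in range(n_intervals)]

Ls = blinking_sequence(m=50, k=3, p=0.05, n_intervals=10)
# Undirected links per interval: ring (m * k = 150) plus the current shortcuts.
print([int((np.count_nonzero(L) - 50) // 2) for L in Ls])
```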

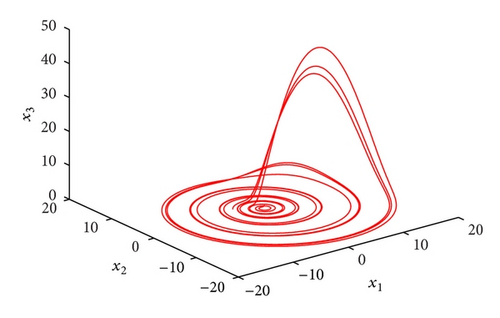

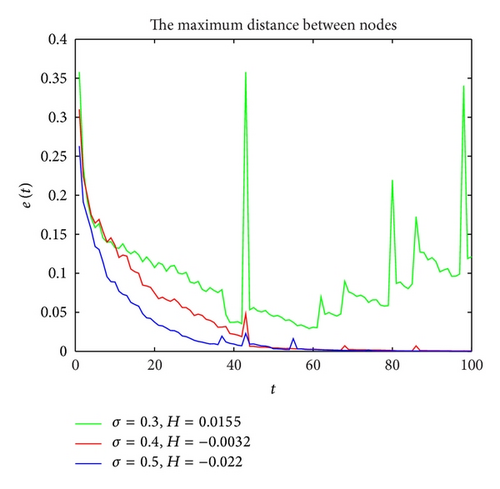

Let $e(t) = \max_{1 \le i < j \le 50}\|x_i(t) - x_j(t)\|$ denote the maximum distance between nodes at time t. Let , for some sufficiently large T > 0 and R > 0. Let H = μ + ς, as defined in Corollary 25. As described in Corollary 25, two steps are needed for verification: (a) calculating μ, the largest Lyapunov exponent of the uncoupled synchronized system (60), and (b) calculating ς, the second largest Lyapunov exponent of the linear system (42). In detail, we use Wolf's method [28] to compute μ and the Jacobian method [29] to compute the Lyapunov spectrum of (42). More details can be found in [28–30]. Figure 3 shows the convergence of the maximum distance between nodes during the topology evolution for different coupling strengths σ. It can be seen from Figure 3 that the dynamical network system (61) synchronizes for σ = 0.4 and σ = 0.5.
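Step (b) can be sketched with a standard QR (Benettin-type) reorthonormalization, shown here as a stand-in; it is not necessarily identical to the Jacobian method of [29]. The sketch assumes that (42) is $\dot{u} = L(t)u$ with L(t) piecewise constant on intervals of length τ, so each interval is propagated exactly by a matrix exponential. The ring-plus-shortcuts generator, the sizes, and the seed are hypothetical; if the coupling strength σ multiplies L(t) in (42), one would pass σ·L instead.

```python
import numpy as np
from scipy.linalg import expm

def ring_with_shortcuts(m, p, rng):
    """Hypothetical coupling: ring of m nodes plus random symmetric shortcuts; zero row sums."""
    A = np.zeros((m, m))
    idx = np.arange(m)
    A[idx, (idx + 1) % m] = 1.0
    A[(idx + 1) % m, idx] = 1.0
    upper = np.triu(rng.random((m, m)) < p, 1)
    A[upper | upper.T] = 1.0
    return A - np.diag(A.sum(axis=1))

def lyapunov_spectrum(Ls, tau, n_transient=0):
    """QR estimate of the Lyapunov exponents of du/dt = L(t) u,
    where L(t) = Ls[k] on the k-th interval of length tau."""
    m = Ls[0].shape[0]
    Q = np.eye(m)
    log_r = np.zeros(m)
    for k, L in enumerate(Ls):
        Q, R = np.linalg.qr(expm(L * tau) @ Q)   # exact propagation, then reorthonormalize
        if k >= n_transient:
            log_r += np.log(np.abs(np.diag(R)))
    return np.sort(log_r / ((len(Ls) - n_transient) * tau))[::-1]

rng = np.random.default_rng(1)
tau = 1.0                                          # hypothetical interval length
Ls = [ring_with_shortcuts(20, 0.05, rng) for _ in range(200)]
exps = lyapunov_spectrum(Ls, tau, n_transient=20)
print(exps[0], exps[1])                            # exps[0] near 0; exps[1] plays the role of ς
```

Given μ from step (a), the sign of H = μ + exps[1] is then the quantity compared against the synchronization measure.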

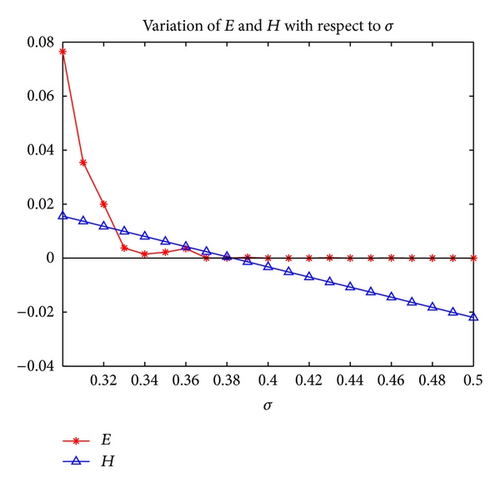

We simulate over a time length of 200, with T = 190 and R = 10, and choose the initial states randomly from the interval [0, 1]. Figure 4 shows the variation of E and H with respect to the coupling strength σ. It can be seen that the region of coupling strengths σ where H is negative coincides with the region of synchronization, that is, where E is near zero. This verifies the theoretical result (Corollary 25). In addition, we find that σ ≈ 0.38 is the synchronization threshold of the coupled system in this case.

7. Conclusions

In this paper, we present a theoretical framework for synchronization analysis of general coupled differential dynamical systems. The extended Hajnal diameter is introduced to measure synchronization. The coupling between nodes is time-varying in both network structure and reaction dynamics. Inspired by the approaches in [6, 13], we show that the Hajnal diameter of the linear system induced by the time-varying coupling matrix and the largest Lyapunov exponent of the synchronized system play key roles in the synchronization analysis of LCODEs. These results extend the synchronization analysis of discrete-time networks in [6] to the continuous-time case. As an application, we obtain a very general sufficient condition ensuring that a directed time-varying graph reaches consensus, derived in a way different from [25]. An example of numerical simulation is provided to show the effectiveness of the theoretical results. An additional contribution of this paper is that we explicitly show that the largest projection Lyapunov exponent, the logarithm of the Hajnal diameter, and (under proper conditions) the largest Lyapunov exponent of the transverse space are equal to each other in coupled differential systems (see Lemmas 13 and 16), which was proved in [6] for coupled discrete-time systems.

Acknowledgments

This work is jointly supported by the National Key Basic Research and Development Program (no. 2010CB731403), the National Natural Science Foundation of China (Grant nos. 61273211 and 61273309), the Shanghai Rising-Star Program (no. 11QA1400400), the Marie Curie International Incoming Fellowship from the European Commission under Grant FP7-PEOPLE-2011-IIF-302421, and the Laboratory of Mathematics for Nonlinear Science, Fudan University.

Appendix

Proof of Lemma 5. Let U be a bounded open neighborhood of A satisfying , and let $U_t = \{x \in \mathbb{R}^n : \vartheta(\tau)x \in U,\ 0 \le \tau \le t\}$. This implies that $U_{t'} \subset U_t$ if $t' \ge t \ge 0$; moreover, $U_t$ is an open set due to the continuity of the semiflow ϑ(t), and $\vartheta(\delta)U_t \subset U_{t-\delta}$ for all $t \ge \delta \ge 0$. Let $V = \bigcap_{t \ge 0} U_t$. We claim that there exists $t_0 \ge 0$ such that $V = U_t$ for all $t \ge t_0$.

For any δ > 0, let tn = nδ and . We can conclude that . We prove in the following that there exists n0 such that . Otherwise, there always exists xn ∈ Un∖Un+1 for n ≥ 0. Let . We have (i) and (ii) yn ∉ U. For any limit point of yn, can be either finite or infinite. In both cases, which implies . However, claim (i) implies that , which contradicts claim (ii). This completes the proof by letting .

Proof of Lemma 10. (a) For any initial condition of the form δx0 = 1m ⊗ u0, the solution of (11) can be according to Lemma 4. This implies the first claim of this lemma.

(b) According to Lemma 5, there exists K1 > 0 such that s(t), the solution of (5), satisfies ∥s(t)∥ ≤ K1 for all s0 ∈ W and t ≥ 0. Hence, by item (c) of Assumption 1, there exists K > 0 such that ∥DFt(s(t))∥ ≤ K. Write the solution of (11), δx(t) = U(t, t0, s0)δx0, as

For any , let s(t) and s′(t) be the solutions of the synchronized state equation (5) with initial conditions s(t0) = s0 and , respectively. We have

Proof of Lemma 13. We define the projection joint spectral radius as follows:

Second, it is clear that log ρP(Dℱ, s0) ≥ λP(Dℱ, s0). We will prove that log ρP(Dℱ, s0) = λP(Dℱ, s0). Otherwise, there exist some r, r0 > 0 satisfying . If so, there exist a sequence tk ↑ ∞ as k → ∞, , and $v_k \in \mathbb{R}^{n(m-1)}$ with ∥vk∥ = 1 such that for all k ∈ 𝒩. Then, there exists a subsequence with . Let {e1, e2, …, en(m−1)} be an orthonormal basis of $\mathbb{R}^{n(m-1)}$, and let . We have for all j = 1, …, n(m − 1). Thus, there exists L > 0 such that

Proof of Lemma 16. Let . We have

Write , where y(t) ∈ ℝn. Then, we have

For simplicity, we write λP(Dℱ, s0, ω0), λS(Dℱ, s0, ω0), and λT(Dℱ, s0, ω0) as λP, λS, and λT, respectively.

Case 1 (λP > λS). We can conclude that χ[z(t)] ≤ λP and

Claim 1 (). Considering the linear system

Considering the lower-triangular matrix , its transpose can be regarded as the solution matrix of the adjoint system of (A.18):

Noting that

Case 2 (λP < λS). For any ϵ with 0 < ϵ < (λS − λP)/3, there exists T > 0 such that

for all t ≥ T. Define the subspace of ℝnm:

Proof of Lemma 22. Since L(t) satisfies Assumption 20, every row sum of L(t) is zero, that is, L(t)1m = 0; hence, if the initial condition is u(t0) = 1m, the solution is u(t) = 1m, which implies that each row sum of V(t, t0) is one. Next, we prove that all elements of V(t, t0) are nonnegative. Consider the ith column of V(t, t0), denoted by Vi(t, t0), which can be regarded as the solution of the following equation:

Proof of Lemma 29. Consider the following Cauchy problem: