Accumulative Approach in Multistep Diagonal Gradient-Type Method for Large-Scale Unconstrained Optimization

Abstract

This paper focuses on developing diagonal gradient-type methods that employ accumulative approach in multistep diagonal updating to determine a better Hessian approximation in each step. The interpolating curve is used to derive a generalization of the weak secant equation, which will carry the information of the local Hessian. The new parameterization of the interpolating curve in variable space is obtained by utilizing accumulative approach via a norm weighting defined by two positive definite weighting matrices. We also note that the storage needed for all computation of the proposed method is just O(n). Numerical results show that the proposed algorithm is efficient and superior by comparison with some other gradient-type methods.

1. Introduction

2. Derivation of the New Diagonal Updating via Accumulative Approach

2.1. Accumulative MD Algorithm

Step 1. Choose an initial point x0 ∈ Rn, and a positive definite matrix B0 = I.

Let k : = 0.

Step 2. Compute gk. If ∥gk∥ ≤ ϵ, stop.

Step 3. If k = 0, set x1 = x0 − (g0/∥g0∥). If k = 1 set rk = sk and wk = yk go to Step 5.

Step 4. If k ≥ 2 and M = I is considered, compute from (2.13).

Else if M = Bk, compute from (2.14).

Compute δk, rk, wk and ηk, from (2.15), (2.16), (2.17), and (2.20), respectively.

If , set rk = sk and wk = yk.

Step 5. Compute and calculate αk > 0 such that the Armijo [11], condition holds:

, where σ ∈ (0,1) is a given constant.

Step 6. Let , and update Bk+1 by (2.19).

Step 7. Set k : = k + 1, and return to Step 2.

3. Convergence Analysis

This section is devoted to study the convergence of accumulative MD algorithm, when applied to the minimization of a convex function. To begin, we give the following result, which is due to Byrd and Nocedal [12] for the step generated by the Armijo line search algorithm. Here and elsewhere, ∥·∥ denotes the Euclidean norm.

Theorem 3.1. Assume that f is a strictly convex function. Suppose the Armijo line search algorithm is employed in a way that for any dk with , the step length, αk satisfies

We can apply Theorem 3.1 to establish the convergence of some Armijo-type line search methods.

Theorem 3.2. Assume that f is a strictly convex function. Suppose that the Armijo line search algorithm in Theorem 3.1 is employed with dk chosen to obey the following conditions: there exist positive constants c1 and c2 such that

Proof. By (3.4), we have that either (3.2) or (3.6) becomes

To prove that the accumulative MD algorithm is globally convergent when applied to the minimization of a convex function, it is sufficient to show that the sequence {∥Bk∥} generated by (2.19)-(2.20) is bounded both above and below, for all finite k so that its associated search direction satisfies condition (3.4). Since Bk is diagonal, it is enough to show that each element of Bk says ; i = 1, …, n is bounded above and below by some positive constants. The following theorem gives the boundedness of {∥Bk∥}.

Theorem 3.3. Assume that f is strictly convex function where there exists positive constants m and M such that

Proof. Let be the ith element of Bk. Suppose B0 is chosen such that , where ω1 and ω2 are some positive constants.

Case 1. If (2.18) is satisfied, we have

Case 2. If (2.18) is violated, then the updating formula for B1 becomes

Because η0 ≤ 1 also implies that , then this fact, together with the convexity property (3.8), and the definition of η give

Hence, in both cases, is bounded above and below, by some positive constants. Since the upper and lower bound for is, respectively, independent to k, we can proceed by using induction to show that is bounded, for all finite k.

4. Numerical Results

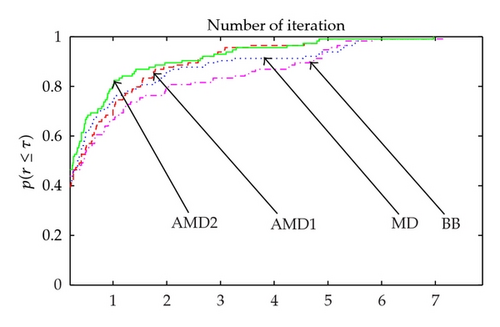

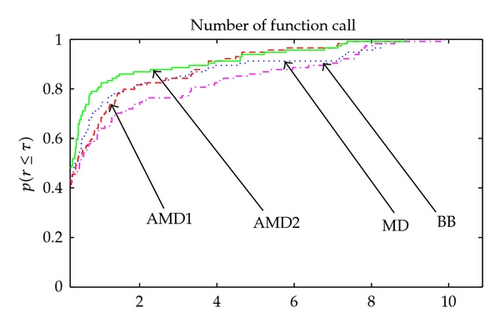

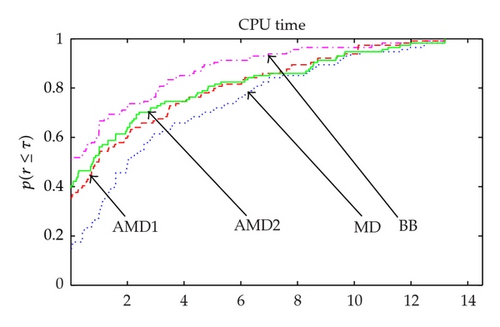

In this section, we examine the practical performance of our proposed algorithm in comparison with the BB method and standard one-step diagonal gradient-type method (MD). The new algorithms are referred to as AMD1 and AMD2 when M = I and M = Bk are used, respectively. For all methods we employ Armijo line search [11] where σ = 0.9. All experiments in this paper are implemented on a PC with Core Duo CPU using Matlab 7.0. For each run, the termination condition is that ∥gk∥ ≤ 10−4. All attempts to solve the test problems were limited to a maximum of 1000 iterations. The test problems are chosen from Andrei [13] and Moré et al. [14] collections. The detailed test problem is summarized in Table 1. Our experiments are performed on a set of 36 nonlinear unconstrained problems, and the problems vary in size from n = 10 to 10000 variables. Figures 1, 2, and 3 present the Dolan and Moré [15] performance profile for all algorithms subject to the iteration, function call, and CPU time.

| Problem | Dimension | References |

|---|---|---|

| Extended Trigonometric, Penalty 1, Penalty 2 | 10, …, 10000 | Moré et al. [14] |

| Quadratic QF2, Diagonal 4, Diagonal 5, Generalized Tridiagonal 1 | ||

| Generalized Rosenbrock, Generalized PSC1, Extended Himmelblau | ||

| Extended Three Exponential Terms, Extended Block Diagonal BD1 | ||

| Extended PSC1, Raydan 2, Extended Tridiagonal 2, Extended Powell | ||

| Extended Freudenstein and Roth, Extended Rosenbrock | 10, …, 10000 | Andrei [13] |

| Extended Beale, Broyden Tridiagonal, Quadratic Diagonal Perturbed | 10, …, 1000 | Moré et al. [14] |

| Perturbed Quadratic, Quadratic QF1, Diagonal 1, Diagonal 2, Hager | ||

| Diagonal 3, Generalized Tridiagonal 2, Almost perturbed Quadratic | ||

| Tridiagonal perturbed quadratic, Full Hessian FH1, Full Hessian FH2 | ||

| Raydan 1, EG2, Extended White and Holst | 10, …, 1000 | Andrei [13] |

From Figure 1, we see that AMD2 method is the top performer, being more successful than other methods in the number of iteration. Figure 2 shows that AMD2 method requires the fewest function calls. From Figure 3, we observe that the AMD2 method is faster than MD and AMD1 methods and needs reasonable time to solve large-scale problems when compared to the BB method. At each iteration, the proposed method does not require more storage than classic diagonal updating methods. Moreover, a higher-order accuracy in approximating the Hessian matrix of the objective function makes AMD method need less iterations and less function evaluation. The numerical results by the tests reported in Figures 1, 2, and 3 demonstrate clearly the new method AMD2 shows significant improvements, when compared with BB, MD, and AMD1. Generally, M = Bk performs better than M = I. It is most probably due to the fact that Bk is a better Hessian approximation than the identity matrix I.

5. Conclusion

In this paper, we propose a new two-step diagonal gradient method as view of accumulative approach for unconstrained optimization. The new parameterization for multistep diagonal gradient-type method is developed via employing accumulative approach. The new technique is devised for interpolating curves which are the basis of multistep approach. Numerical results show that the proposed method is suitable to solve large-scale unconstrained optimization problems and more stable than other similar methods in practical computation. The improvement that our proposed methods bring does come at a complexity cost of O(n) while others are about O(n2) [9, 10].