An Elite Decision Making Harmony Search Algorithm for Optimization Problem

Abstract

This paper describes a new variant of harmony search algorithm which is inspired by a well-known item “elite decision making.” In the new algorithm, the good information captured in the current global best and the second best solutions can be well utilized to generate new solutions, following some probability rule. The generated new solution vector replaces the worst solution in the solution set, only if its fitness is better than that of the worst solution. The generating and updating steps and repeated until the near-optimal solution vector is obtained. Extensive computational comparisons are carried out by employing various standard benchmark optimization problems, including continuous design variables and integer variables minimization problems from the literature. The computational results show that the proposed new algorithm is competitive in finding solutions with the state-of-the-art harmony search variants.

1. Introduction

In 2001, Geem et al. [1] proposed a new metaheuristic algorithm, harmony search (HS) algorithm, which imitates the behaviors of music improvisation process. In that algorithm, the harmony in music is analogous to the optimization solution vector, and the musicians improvisations are analogous to local and global search schemes in optimization techniques. The HS algorithm does not require initial values for the decision variables. Furthermore, instead of a gradient search, the HS algorithm uses a stochastic random search that is based on the harmony memory considering rate and the pitch adjusting rate so that derivative information is unnecessary. These features increase the flexibility of the HS algorithm and have led to its application to optimization problems in different areas including music composition [2], Sudoku puzzle solving [3], structural design [4, 5], ecological conservation [6], and aquifer parameter identification [7]. The interested readers may refer the review papers [8–10] and the references therein for further understanding.

HS algorithm is good at identifying the high performance regions of the solution space at a reasonable time but gets into trouble in performing local search for numerical applications. In order to improve the fine-tuning characteristic of HS algorithm, Mahdavi et al. [11] discussed the impacts of constant parameters on HS algorithm and presented a new strategy for tuning these parameters. Wang and Huang [12] used the harmony memory (HM) (set of solution vectors) to automatically adjust parameter values. Fesanghary et al. [13] use sequential quadratic programming technique to speed up local search and improve precision of the HS algorithm solution. Omran and Mahdavi [14] proposed a so-called the global best HS algorithm, in which concepts from swarm intelligence are borrowed to enhance the performance of HS algorithm such that the new harmony can mimic the best harmony in the HM. Also, Geem [15] proposed a stochastic derivative for discrete variables based on an HS algorithm to optimize problems with discrete variables and problems in which the mathematical derivative of the function cannot be analytically obtained. Pan et al. [16] used the good information captured in the current global best solution to generate new harmonies. Jaberipour and Khorram [17] described two HS algorithms through parameter adjusting technique. Yadav et al. [18] designed an HS algorithm which maintains a proper balance between diversification and intensification throughout the search process by automatically selecting the proper pitch adjustment strategy based on its HM. Pan et al. [19] divided the whole HM into many small-sized sub-HMs and performed the evolution in each sub-HM independently and thus presented a local-best harmony search algorithm with dynamic subpopulations. Later on, the excellent ideas of mutation and crossover strategies used in [19] were adopted in designing the differential evolution algorithm and obtained perfect result for global numerical optimization by Islam et al. [20].

Considering that, in political science and sociology, a small minority (elite) always holds the most power in making the decisions, that is, elite decision making. One could image that the good information captured in the current elite harmonies can be well utilized to generate new harmonies. Thus, in our elite decision making HS (EDMHS) algorithm, the new harmony will be randomly generated between the best and the second best harmonies in the historic HM, following some probability rule. The generated harmony vector replaces the worst harmony in the HM, only if its fitness (measured in terms of the objective function) is better than that of the worst harmony. These generating and updating procedures repeat until the near-optimal solution vector is obtained. To demonstrate the effectiveness and robustness of the proposed algorithm, various benchmark optimization problems, including continuous design variables and integer variables minimization problems, are used. Numerical results reveal that the proposed new algorithm is very effective.

This paper is organized as follows. In Section 2, a general harmony search algorithm and its recently developed variants will be reviewed. Section 3 introduces our method that has “Elite-Decision-Making” property. Section 4 presents the numerical results for some well-known benchmark problems. Finally, conclusions are given in the last section.

2. Harmony Search Algorithm

2.1. The General HS Algorithm

-

HMS: harmony memory size,

-

HMCR: harmony memory considering rate,

-

PAR: pitch adjusting rate,

-

bw: bandwidth vector.

Remarks 2.1. HMCR, PAR, and bw are very important factors for the high efficiency of the HS methods and can be potentially useful in adjusting convergence rate of algorithms to the optimal solutions. These parameters are introduced to allow the solution to escape from local optima and to improve the global optimum prediction of the HS algorithm.

The procedure for a harmony search, which consists of Steps 1–4.

Step 1. Create and randomly initialize an HM with HMS. The HM matrix is initially filled with as many solution vectors as the HMS. Each component of the solution vector is generated using the uniformly distributed random number between the lower and upper bounds of the corresponding decision variable , where i ∈ [1, N].

The HM with the size of HMS can be represented by a matrix as

Step 2. Improvise a new harmony from the HM or from the entire possible range. After defining the HM, the improvisation of the HM, is performed by generating a new harmony vector . Each component of the new harmony vector is generated according to

Step 3. Update the HM. If the new harmony is better than the worst harmony in the HM, include the new harmony into the HM and exclude the worst harmony from the HM.

2.2. The Improved HS Algorithm

Numerical results reveal that the HS algorithm with variable parameters can find better solutions when compared to HS and other heuristic or deterministic methods and is a powerful search algorithm for various engineering optimization problems, see [11].

2.3. Global Best Harmony Search (GHS) Algorithm

2.4. A Self-Adaptive Global Best HS (SGHS) Algorithm

3. An Elite Decision Making HS Algorithm

The key differences between the proposed EDMHS algorithm and IHS, GHS, and SGHS are in the way of improvising the new harmony.

3.1. EDMHS Algorithm for Continuous Design Variables Problems

The EDMHS has exactly the same steps as the IHS with the exception that Step 3 is modified as follows.

3.2. EDMHS Algorithm for Integer Variables Problems

Many real-world applications require the variables to be integers. Methods developed for continuous variables can be used to solve such problems by rounding off the real optimum values to the nearest integers [14, 21]. However, in many cases, rounding-off approach may result in an infeasible solution or a poor suboptimal solution value and may omit the alternative solutions.

4. Numerical Examples

This section is about the performance of the EDMHS algorithm for continuous and integer variables examples. Several examples taken from the optimization literature are used to show the validity and effectiveness of the proposed algorithm. The parameters for all the algorithm are given as follows: HMS = 20, HMCR = 0.90, PARmin = 0.4, PARmax = 0.9, bwmin = 0.0001, and bwmax = 1.0. In the processing of the algorithm, PAR and bw are generated according to (2.5) and (2.6), respectively.

4.1. Some Simple Continuous Variables Examples

For the following five examples, we adopt the same variable ranges as presented in [4]. Each problem is run for 5 independent replications, the mean fitness of the solutions for four variants HS algorithm, IHS, SGHS, SGHS, and EDMHS, is presented in tables.

4.1.1. Rosenbrock Function

| Variables | IHS | GHS | SGHS | EDMHS |

|---|---|---|---|---|

| x1 | 1.0000028617324386 | 0.9913653798835682 | 1.0000082201314386 | 0.9999992918896516 |

| x2 | 1.0000062226347253 | 0.9837656861940776 | 1.0000169034081148 | 0.9999985841159521 |

| f1(x) | 0.0000000000331057 | 0.0001667876726056 | 0.0000000000890147 | 0.0000000000005014 |

4.1.2. Goldstein and Price Function I (with Four Local Minima)

| Variables | IHS | GHS | SGHS | EDMHS |

|---|---|---|---|---|

| x1 | 0.0000043109765698 | −0.0108343859912985 | −0.0000010647548017 | −0.0000022210968748 |

| x2 | −0.9999978894568922 | −1.0091267108154769 | −1.0000037827893109 | −1.0000008657021768 |

| f(x) | 3.0000000046422932 | 3.0447058568657721 | 3.0000000055974083 | 3.0000000011515664 |

4.1.3. Eason and Fenton’s Gear Train Inertia Function

| Variables | IHS | GHS | SGHS | EDMHS |

|---|---|---|---|---|

| x1 | 1.7434541913586368 | 1.7131403370902785 | 1.7434648607226395 | 1.7434544417399731 |

| x2 | 2.0296978640691021 | 2.0700437540873073 | 2.0296831598594332 | 2.0296925490097708 |

| f(x) | 1.7441520055927637 | 1.7447448145676987 | 1.7441520056712445 | 1.7441520055905921 |

4.1.4. Wood Function

| Variables | IHS | GHS | SGHS | EDMHS |

|---|---|---|---|---|

| x1 | 0.9367413185752959 | 0.9993702652662329 | 0.9917327966129160 | 1.0001567183702584 |

| x2 | 0.8772781982936317 | 0.9987850979456709 | 0.9835814785067265 | 1.0003039053776117 |

| x3 | 1.0596918740170123 | 0.9993702652662329 | 1.0081526992384837 | 0.9998357549633209 |

| x4 | 1.1230215213184420 | 0.9987850979456709 | 1.0164353912102084 | 0.9996725376532794 |

| f(x) | 0.0136094062872233 | 0.0000602033138483 | 0.0002433431550602 | 0.0000001061706105 |

4.1.5. Powell Quartic Function

| Variables | IHS | GHS | SGHS | EDMHS |

|---|---|---|---|---|

| x1 | −0.0383028653671760 | −0.0256621703960072 | 0.0334641210434073 | −0.0232662093056917 |

| x2 | 0.0038093414837046 | 0.0023707007810820 | −0.0033373644857512 | 0.0023226342970439 |

| x3 | −0.0195750968208506 | −0.0199247989791340 | 0.0159748222727847 | −0.0107227792768697 |

| x4 | −0.0195676609811871 | −0.0199247989791340 | 0.0160018633328343 | −0.0107574107951817 |

| f(x) | 0.0000046821615160 | 0.0000070109937353 | 0.0000024921236096 | 0.0000005715572753 |

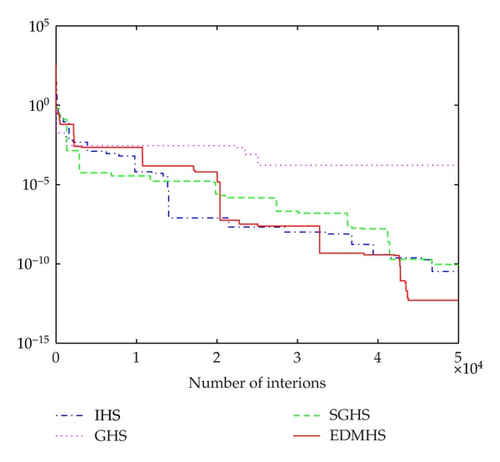

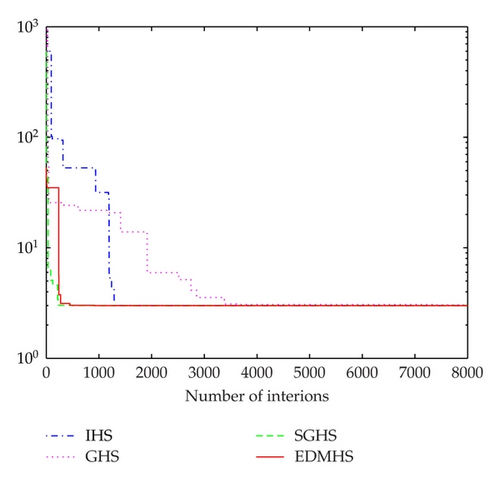

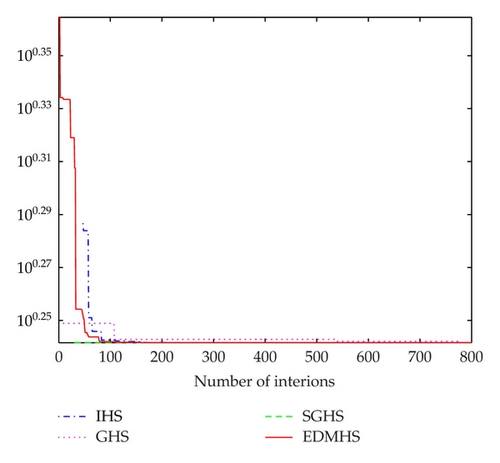

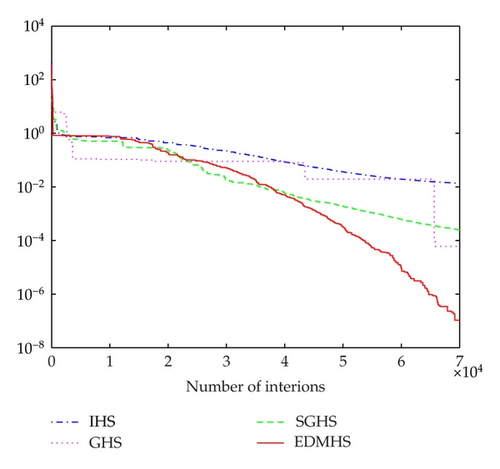

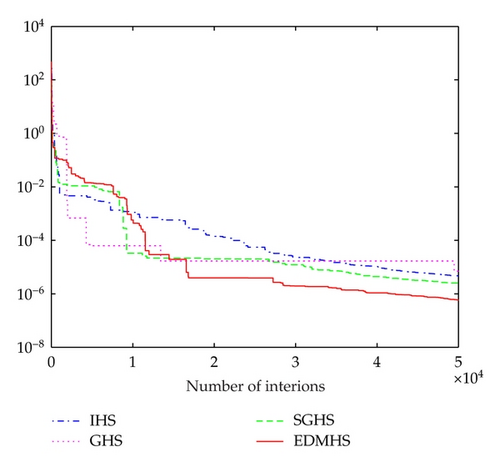

It can be seen from Tables 1–5, comparing with IHS, GHS, and SGHS algorithms, that the EDMHS produces the much better results for four test functions. Figures 1–5 present a typical solution history graph along iterations for the five functions, respectively. It can be observed that four evolution curves of the EDMHS algorithm reach lower level than that of the other compared algorithms. Thus, it can be concluded that overall the EDMHS algorithm outperforms the other methods for the above examples.

4.2. More Benchmark Problems with 30 Dimensions

- (1)

Sphere function:

(4.6)where global optimum x* = 0 and f(x*) = 0 for −100 ≤ xi ≤ 100. - (2)

Schwefel problem:

(4.7)where global optimum x* = (420.9687, …, 420.9687) and f(x*) = −12569.5 for −500 ≤ xi ≤ 500. - (3)

Griewank function:

(4.8)where global optimum x* = 0 and f(x*) = 0 for −600 ≤ xi ≤ 600. - (4)

Rastrigin function:

(4.9)where global optimum x* = 0 and f(x*) = 0 for −5.12 ≤ xi ≤ 5.12. - (5)

Ackley’s function:

(4.10)where global optimum x* = 0 and f(x*) = 0 for −32 ≤ xi ≤ 32. - (6)

Rosenbrock’s Function:

(4.11)where global optimum x* = (1, …, 1) and f(x*) = 0 for −5 ≤ xi ≤ 10.

The parameters for the IHS algorithm, HMS = 5, HMCR = 0.9, , bwmin = 0.0001, PARmin = 0.01, and PARmax = 0.99 and for the GHS algorithm, HMS = 5, HMCR = 0.9, PARmin = 0.01, and PARmax = 0.99.

Table 6 presents the average error (AE) values and standard deviations (SD) over these 30 runs of the compared HS algorithms on the 6 test functions with dimension equal to 30.

| Problem | IHS | IHS | GHS | GHS | SGHS | SGHS | EDMHS | EDMHS |

|---|---|---|---|---|---|---|---|---|

| AE | SD | AE | SD | AE | SD | AE | SD | |

| Sphere | 1.27e − 07 | 1.79e − 07 | 1.41e − 02 | 2.36e − 02 | 5.30e − 07 | 1.27e − 06 | 1.07e − 07 | 1.31e − 07 |

| Schwefel | 4.83e − 01 | 7.06e − 01 | 2.11e − 02 | 3.01e − 02 | 7.70e − 01 | 1.15e − 00 | 7.03e − 01 | 1.61e − 00 |

| Griewank | 1.18e − 01 | 1.87e − 01 | 8.83e − 02 | 1.57e − 01 | 8.02e − 03 | 1.05e − 02 | 1.02e − 02 | 1.51e − 02 |

| Rastrigin | 9.72e − 01 | 1.18e − 00 | 1.09e − 02 | 2.05e − 02 | 1.12e − 00 | 1.43e − 00 | 1.48e − 00 | 1.93e − 00 |

| Ackley | 5.11e − 01 | 6.06e − 01 | 2.05e − 02 | 2.83e − 02 | 2.13e − 01 | 2.98e − 01 | 3.34e − 01 | 3.85e − 01 |

| Rosenbrock | 3.37e + 01 | 4.08e + 01 | 6.77e + 01 | 8.97e + 01 | 3.46e + 01 | 3.80e + 01 | 3.17e + 01 | 4.02e + 01 |

4.3. Integer Variables Examples

Six commonly used integer programming benchmark problems are chosen to investigate the performance of the EDMHS integer algorithm. For all the examples, the design variables, xi, i = 1, …, N, are initially structured with random integer values bounded between −100 and 100, respectively. Each problem is run 5 independent replications, each with approximately 800 searches, all the optimal solution vector are obtained.

4.3.1. Test Problem 1

4.3.2. Test Problem 2

4.3.3. Test Problem 3

4.3.4. Test Problem 4

4.3.5. Test Problem 5

4.3.6. Test Problem 6

5. Conclusion

This paper presented an EDMHS algorithm for solving continuous optimization problems and integer optimization problems. The proposed EDMHS algorithm applied a newly designed scheme to generate candidate solution so as to benefit from the good information inherent in the best and the second best solution in the historic HM.

Further work is still needed to investigate the effect of EDMHS and adopt this strategy to solve the real optimization problem.

Acknowlegdments

The research is supported by the Grant from National Natural Science Foundation of China no. 11171373 and the Grant from Natural Science Foundation of Zhejiang Province no. LQ12A01024.