A New Proof to the Necessity of a Second Moment Stability Condition of Discrete-Time Markov Jump Linear Systems with Real States

Abstract

This paper studies the second moment stability of a discrete-time jump linear system with real states and a system matrix that switches in a Markovian fashion. A sufficient stability condition was proposed by Fang and Loparo (2002); it only requires checking the eigenvalues of a deterministic matrix and is much more computationally efficient than other equivalent conditions. Proving the necessity of that condition, however, is a challenging problem. Costa and Fragoso (2004) gave a proof by extending the state domain to the complex space. This paper proposes an alternative necessity proof that does not need to extend the state domain. The proof exploits the essential properties of Markov jump systems and achieves the desired result in the real state space.

1. Introduction

1.1. Background of the Discrete-Time Markov Jump Linear Systems

For the jump linear system in (1.1), the first question to ask is “is the system stable?” There has been plenty of work on this topic, especially in the 1990s [2–6]. Recently the topic has attracted academic interest again because of the emergence of networked control systems [7]. Networked control systems often suffer from network delays and dropouts, which may be modelled as Markov chains, so that networked control systems can be classified as discrete-time jump linear systems [8–11]. Therefore, the stability of a networked control system can be determined by studying the stability of the corresponding jump linear system. Before proceeding further, we review the related work.

1.2. Related Work

We first consider the definitions of stability for jump linear systems. In [6], three types of second moment stability are defined.

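Definition 1.1. For the jump linear system in (1.1), the equilibrium point 0 is

(1) stochastically stable, if, for every initial condition (x[0] = x0, q[0] = q0),

$$E\left[\sum_{k=0}^{\infty} \|x[k]\|^2 \,\Big|\, x_0, q_0\right] < \infty, \tag{1.3}$$

where ∥·∥ denotes the 2-norm of a vector;

(2) mean square stable (MSS), if, for every initial condition (x0, q0),

$$\lim_{k \to \infty} E\left[\|x[k]\|^2 \,\big|\, x_0, q_0\right] = 0; \tag{1.4}$$

(3) exponentially mean square stable, if, for every initial condition (x0, q0), there exist constants 0 < α < 1 and β > 0 such that for all k ≥ 0,

$$E\left[\|x[k]\|^2 \,\big|\, x_0, q_0\right] \le \beta \alpha^k \|x_0\|^2, \tag{1.5}$$

where α and β are independent of x0 and q0.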

In [6], the above three types of stability are proven to be equivalent, so we can study mean square stability without loss of generality. A necessary and sufficient stability condition is also proposed in [6].

Theorem 1.2 (see [6]). The jump linear system in (1.1) is mean square stable if and only if, for any given set of positive definite matrices {Wi : i = 1, …, N}, the following coupled matrix equations have unique positive definite solutions {Mi : i = 1, …, N}:

Theorem 1.3 (see [4, 12]). The jump linear system in (1.1) is mean square stable if all eigenvalues of the compound matrix A[2] in (1.2) lie within the unit circle.

Remark 1.4. By Theorem 1.3, the mean square stability of a jump linear system can be reduced to the stability of a deterministic system of the form y_{k+1} = A[2] y_k [13], which greatly reduces the complexity of the stability problem. Theorem 1.3, however, only provides a sufficient condition for stability. The condition was conjectured to be necessary as well [2, 15]. In the following, we briefly review the research results related to Theorem 1.3.
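The eigenvalue test in Remark 1.4 can be sketched numerically. The sketch below is illustrative only and rests on assumptions not shown in this section: the system is taken to be x[k+1] = A_{q[k]} x[k], `Q[i, j]` is taken to be P(q[k+1] = j | q[k] = i), and the compound matrix is formed as (Qᵀ ⊗ I) · diag(A_i ⊗ A_i). The factor ordering in (1.2) may differ, but swapping the two square factors does not change the eigenvalues. The names `second_moment_matrix` and `is_schur_stable` are hypothetical helpers.

```python
import numpy as np

def second_moment_matrix(A_list, Q):
    """Assumed form of A[2]: (Q^T kron I_{n^2}) @ blockdiag(A_i kron A_i)."""
    N = len(A_list)
    n2 = A_list[0].shape[0] ** 2
    D = np.zeros((N * n2, N * n2))
    for i, A in enumerate(A_list):
        # i-th diagonal block holds A_i kron A_i
        D[i * n2:(i + 1) * n2, i * n2:(i + 1) * n2] = np.kron(A, A)
    return np.kron(Q.T, np.eye(n2)) @ D

def is_schur_stable(M):
    """True if every eigenvalue of M lies strictly inside the unit circle."""
    return bool(np.max(np.abs(np.linalg.eigvals(M))) < 1.0)

# Scalar example: one mode expands (1.2), one contracts (0.5),
# and the mode is drawn with probability 0.5 each at every step.
A_list = [np.array([[1.2]]), np.array([[0.5]])]
Q = np.array([[0.5, 0.5],
              [0.5, 0.5]])

A2 = second_moment_matrix(A_list, Q)
rho = float(np.max(np.abs(np.linalg.eigvals(A2))))
print(rho, is_schur_stable(A2))
```

In this scalar example the spectral radius equals the average of the squared gains, 0.5 · (1.2² + 0.5²) = 0.845, so the test reports stability even though one mode is unstable on its own.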

In [14], the condition in Theorem 1.3 was proven to be necessary and sufficient for the scalar case, that is, when the A_i (i = 1, …, N) are scalars. In [15], the necessity of the condition in Theorem 1.3 was proven for a special case with N = 2 and n = 2. In [4, 12], the condition in Theorem 1.3 was asserted to be necessary and sufficient for more general jump linear systems. Specifically, Bhaurucha [12] considered a random sampling system with the sampling intervals governed by a Markov chain, while Mariton [4] studied a continuous-time jump linear system. Although their sufficiency proofs are convincing, their necessity proofs are incomplete.

(i) x[k] ∈ C^n, where C stands for the set of complex numbers,

(ii) x0 ∈ Sc, where Sc is the set of complex vectors with finite second-order moments in the complex state space.

Theorem 1.5 (see [3]). The jump linear system in (1.1) (with complex states) is mean square stable in the sense of (1.7) if and only if A[2] is Schur stable.

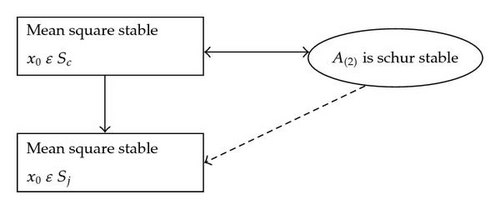

Because Sj ⊂ Sc, Theorem 1.5 yields the relationship diagram in Figure 1. As it shows, the Schur stability of A[2] is, at first glance, only a sufficient condition for mean square stability with x0 ∈ Sj.

A natural question remains: is the condition in Theorem 1.3 also necessary? The answer is “yes.” That necessity was conjectured in [2]. A proof of the necessity was first given in [16], which extends the state domain to the complex space and establishes the desired necessity in the stability sense of (1.7). As mentioned before, the stability considered here (in the sense of (1.8)) is weaker than that in (1.7). This paper proves that the weaker condition in (1.8) still yields the Schur stability of A[2], that is, the necessity of Theorem 1.3 is confirmed. This paper confines the state to the real space and exploits the essential properties of Markov jump linear systems to establish the desired necessity. In Section 2, a necessary and sufficient version of Theorem 1.3 is stated and its necessity is rigorously proven. Section 3 gives concluding remarks.

2. A Necessary and Sufficient Condition for Mean Square Stability

This section gives a necessary and sufficient version of Theorem 1.3. Throughout this section, mean square stability is defined in the sense of (1.4) (x0 ∈ Sj). We first give a brief introduction to the Kronecker product and list some of its properties. The main result, a necessary and sufficient condition for mean square stability, is then presented in Theorem 2.1, and its necessity is proven by direct matrix computations.
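As a small numerical illustration of the Kronecker product, the sketch below checks two standard identities that second moment arguments of this kind commonly use: the mixed-product rule (A ⊗ A)(x ⊗ x) = (Ax) ⊗ (Ax) and the vectorization rule vec(A X Aᵀ) = (A ⊗ A) vec(X). Which properties the preliminaries actually list is not shown here, so this selection is an assumption; the matrices are random test data.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 3))
x = rng.standard_normal(3)
X = rng.standard_normal((3, 3))

# Mixed-product rule: (A kron A)(x kron x) == (A x) kron (A x)
lhs = np.kron(A, A) @ np.kron(x, x)
rhs = np.kron(A @ x, A @ x)
mixed_product_ok = bool(np.allclose(lhs, rhs))

# Vectorization rule: vec(A X A^T) == (A kron A) vec(X).
# For this symmetric form it holds with row-major flattening as well.
vec_ok = bool(np.allclose((A @ X @ A.T).reshape(-1),
                          np.kron(A, A) @ X.reshape(-1)))

print(mixed_product_ok, vec_ok)
```

The second identity is what turns a matrix recursion of the form X ↦ A X Aᵀ into an ordinary linear map on the stacked vector vec(X).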

2.1. Mathematical Preliminaries

2.2. Main Results

Theorem 2.1. The jump linear system in (1.1) is mean square stable if and only if A[2] is Schur stable, that is, all eigenvalues of A[2] lie within the unit circle.
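To make the statement concrete, the following sketch propagates the second moments exactly and reports E‖x[k]‖² over time. It assumes, as labeled in the comments, that the system is x[k+1] = A_{q[k]} x[k], that `Q[i, j]` = P(q[k+1] = j | q[k] = i), and that Φ_i[k] denotes E[x[k] x[k]ᵀ 1{q[k] = i}]; these conventions and the helper name `mean_square_norm` are illustrative assumptions, not taken from the paper's equations.

```python
import numpy as np

def mean_square_norm(A_list, Q, p, x0, steps):
    """Return E||x[k]||^2 for k = 0..steps via an exact second moment recursion.

    Assumed recursion: Phi_j[k+1] = sum_i Q[i, j] * A_i Phi_i[k] A_i^T,
    started from Phi_i[0] = p[i] * x0 x0^T.
    """
    N = len(A_list)
    Phi = [p[i] * np.outer(x0, x0) for i in range(N)]
    out = [sum(np.trace(P) for P in Phi)]
    for _ in range(steps):
        Phi = [sum(Q[i, j] * A_list[i] @ Phi[i] @ A_list[i].T for i in range(N))
               for j in range(N)]
        out.append(sum(np.trace(P) for P in Phi))
    return np.array(out, dtype=float)

# Scalar example with an unstable mode (1.2) and a stable mode (0.5),
# each chosen with probability 0.5 per step; start in mode 1 with x0 = 1.
A_list = [np.array([[1.2]]), np.array([[0.5]])]
Q = np.array([[0.5, 0.5],
              [0.5, 0.5]])
p = np.array([1.0, 0.0])
x0 = np.array([1.0])

ms = mean_square_norm(A_list, Q, p, x0, steps=100)
print(ms[:3], ms[-1])
```

Here the second moment first grows (the initial mode is the unstable one) and then decays geometrically, which is the behavior mean square stability requires for every initial condition.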

Complete proofs of the sufficiency part of Theorem 2.1 already exist [3, 12, 13], so we focus on the necessity proof. Throughout this section, the following notational conventions are used.

The initial condition of the jump linear system in (1.1) is denoted as x[0] = x0, q[0] = q0 and the distribution of q0 is denoted as p = [p1 p2 ⋯ pN] (P(q[0] = qi∣q0) = pi).

The necessity proof of Theorem 2.1 needs the following three preliminary lemmas.

Lemma 2.2. If the jump linear system in (1.1) is mean square stable, then

Proof of Lemma 2.2. Because the system is mean square stable, we get

Lemma 2.3. If the jump linear system in (1.1) is mean square stable, then

Proof of Lemma 2.3. Choose any z0, w0 ∈ Rn. Lemma 2.2 guarantees

Lemma 2.4. VΦ[k] is governed by the following dynamic equation

Proof of Lemma 2.4. By the definition in (2.8), we can recursively compute Φi[k] as follows:

Proof of Necessity of Theorem 2.1. By Lemma 2.3, we get

is an Nn² × Nn² matrix. We can write as where Ai(n) (i = 1, …, N) is an Nn² × n² matrix. By taking pi = 1 and pj = 0 (j = 1, …, i − 1, i + 1, …, N), (2.32) yields

3. Conclusion

This paper presents a necessary and sufficient condition for the second moment stability of a discrete-time Markovian jump linear system. Specifically, it provides a proof of the necessity part. Unlike the previous necessity proof, this paper confines the state domain to the real space. It investigates the structures of the relevant matrices and makes good use of the essential properties of Markov jump linear systems, which may guide future research on such systems.

Acknowledgment

This work was supported in part by the National Natural Science Foundation of China (60904012), the Program for New Century Excellent Talents in University (NCET-10-0917) and the Doctoral Fund of Ministry of Education of China (20093402120017).