An Improved Particle Swarm Optimization for Solving Bilevel Multiobjective Programming Problem

Abstract

An improved particle swarm optimization (PSO) algorithm is proposed for solving bilevel multiobjective programming problems (BLMPPs). Unlike most traditional algorithms, which are designed for specific versions of the problem or rely on specific assumptions, the proposed algorithm directly simulates the decision process of bilevel programming. The BLMPP is transformed into multiobjective optimization problems at the upper and lower levels, which are solved interactively by an improved PSO, and a set of approximate Pareto optimal solutions of the BLMPP is obtained using an elite strategy. This interactive procedure is repeated for a predefined number of iterations to approximate the Pareto optimal solutions of the original problem. Finally, numerical examples are given to illustrate the feasibility of the proposed algorithm.

1. Introduction

The bilevel programming problem (BLPP) arises in a wide variety of scientific and engineering applications, including optimal control, process optimization, game-playing strategy development, and transportation problems. Consequently, the BLPP has been studied by many scholars; for reviews, monographs, and surveys, see [1–11]. Evolutionary algorithms (EAs) have also been employed to address the BLPP [12–16].

However, the bilevel multiobjective programming problem (BLMPP) has received far less attention. Shi and Xia [17, 18], Abo-Sinna and Baky [19], Nishizaki and Sakawa [20], and Zheng et al. [21] presented interactive algorithms for the BLMPP. Eichfelder [22] presented a method for solving nonlinear bilevel multiobjective optimization problems with coupled upper level constraints, and later developed a numerical method for solving nonlinear nonconvex bilevel multiobjective optimization problems [23]. In recent years, metaheuristics have attracted considerable attention as an alternative approach to the BLMPP. For example, Deb and Sinha [24–26] as well as Sinha and Deb [27] studied the BLMPP using evolutionary multiobjective optimization principles. Building on those studies, Deb and Sinha [28] proposed a viable hybrid algorithm combining evolutionary and local search and presented a set of challenging test problems. Sinha [29] presented a progressively interactive evolutionary multiobjective optimization method for the BLMPP.

Particle swarm optimization (PSO) is a relatively recent heuristic algorithm inspired by the choreography of a bird flock, and it has proved quite successful in a wide variety of optimization tasks [30]. Owing to its fast convergence and relative simplicity, PSO has been used by many researchers to solve BLPPs. For example, Li et al. [31] proposed a hierarchical PSO for solving the BLPP, Kuo and Huang [32] applied PSO to the bilevel linear programming problem, and Gao et al. [33] used PSO to solve bilevel pricing problems in supply chains. However, all of the papers mentioned above address only bilevel problems with a single objective at each level.

In this paper, an improved PSO is presented for solving the BLMPP. The algorithm can be outlined as follows. The BLMPP is transformed into multiobjective optimization problems at the upper and lower levels, which are solved interactively by an improved PSO, and a set of approximate Pareto optimal solutions of the BLMPP is obtained using an elite strategy. This interactive procedure is repeated for a predefined number of iterations, and the resulting elite set is taken as the Pareto optimal solutions of the BLMPP. The rest of the paper is organized as follows. Section 2 provides the problem formulation. Section 3 presents the proposed algorithm for solving the BLMPP. Section 4 gives numerical examples that demonstrate the proposed algorithm, and Section 5 concludes the paper.

2. Problem Formulation
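The definitions below refer to the BLMPP as problem (2.1). As a sketch in standard notation, a BLMPP with M upper level objectives F and m lower level objectives f can be written as follows; the constraint functions G and g and the dimensions n1 and n2 are assumed names, not taken from this section:

$$
\begin{aligned}
\min_{x}\quad & F(x, y) = \big(F_1(x, y), F_2(x, y), \ldots, F_M(x, y)\big) \\
\text{s.t.}\quad & G(x, y) \le 0, \\
& \min_{y}\quad f(x, y) = \big(f_1(x, y), f_2(x, y), \ldots, f_m(x, y)\big) \\
& \quad\ \text{s.t.}\quad g(x, y) \le 0,
\end{aligned}
$$

where x ∈ R^{n1} are the upper level and y ∈ R^{n2} the lower level decision variables. Under this reading, X is the set of upper level decisions for which the lower level problem has feasible solutions.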

Definition 2.1. For a fixed x ∈ X, if y is a Pareto optimal solution of the lower level problem, then (x, y) is a feasible solution of problem (2.1).

Definition 2.2. If (x*, y*) is a feasible solution of problem (2.1) and there is no (x, y) ∈ IR such that F(x, y) ≺ F(x*, y*), then (x*, y*) is a Pareto optimal solution of problem (2.1), where IR (the inducible region) denotes the set of feasible solutions of problem (2.1) and “≺” denotes Pareto dominance.
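Definition 2.2 relies on the Pareto dominance relation “≺”. Assuming every objective is minimized, the relation can be checked as in the following minimal Python sketch; the function name `dominates` is illustrative.

```python
from typing import Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if objective vector a Pareto-dominates b (minimization).

    a dominates b when a is no worse than b in every objective and
    strictly better in at least one.
    """
    if len(a) != len(b):
        raise ValueError("objective vectors must have the same length")
    no_worse = all(ai <= bi for ai, bi in zip(a, b))
    strictly_better = any(ai < bi for ai, bi in zip(a, b))
    return no_worse and strictly_better


# (1, 2) dominates (1, 3); (1, 3) and (2, 2) are mutually nondominated.
print(dominates((1.0, 2.0), (1.0, 3.0)))  # True
print(dominates((1.0, 3.0), (2.0, 2.0)))  # False
print(dominates((2.0, 2.0), (1.0, 3.0)))  # False
```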

For problem (2.1), note that a solution (x*, y*) is feasible for the upper level problem if and only if y* is a Pareto optimal solution of the lower level problem with x = x*. In practice, approximate Pareto optimal solutions of the lower level problem are commonly fed back to the upper level problem as its optimal response, and this view is widely accepted. Based on this fact, PSO appears well suited to the BLMPP: unlike the traditional point-by-point approaches mentioned in Section 1, PSO operates on a group of points, so a single run can yield a whole set of approximate Pareto optimal solutions. In the following, we present an improved PSO algorithm for solving problem (2.1).

3. The Algorithm

The proposed algorithm is an interactive coevolutionary process involving both the upper and lower levels. We first initialize the population and then solve the multiobjective optimization problems at the upper and lower levels interactively using an improved PSO. A set of approximate Pareto optimal solutions of problem (2.1) is then obtained by the elite strategy adopted in Deb et al. [34]. This interactive procedure is repeated for T iterations, after which the elite set is output as the approximate Pareto optimal solutions of problem (2.1). The details of the proposed algorithm are as follows:

3.1. Algorithm

Step 1. Initialize.

Substep 1.1. Initialize the population P0 with Nu particles, organized into ns = Nu/Nl subswarms of Nl particles each. The position of the jth particle (j = 1, 2, …, Nl) in the kth subswarm (k = 1, 2, …, ns) is denoted by zj = (xj, yj), and its velocity by vj = (vxj, vyj), where vxj and vyj are the velocities of xj and yj, respectively. Both zj and vj are sampled randomly in the feasible space.
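A minimal Python sketch of Substep 1.1 follows. It assumes the feasible space is a simple box given by per-variable bounds (general constraints are left to the comparison rule described after Step 10), and it follows the text literally by sampling x and y independently for every particle. The names `Particle`, `sample`, and `init_population` and the bounds in the usage line are illustrative.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Bounds = List[Tuple[float, float]]  # (lower, upper) per variable


@dataclass
class Particle:
    x: List[float]   # upper level decision variables
    y: List[float]   # lower level decision variables
    vx: List[float]  # velocity of x
    vy: List[float]  # velocity of y


def sample(bounds: Bounds, rng: random.Random) -> List[float]:
    """Draw one point uniformly inside the box."""
    return [rng.uniform(lo, hi) for lo, hi in bounds]


def init_population(n_u: int, n_l: int, x_bounds: Bounds, y_bounds: Bounds,
                    rng: random.Random) -> List[List[Particle]]:
    """Return P0 as ns = Nu / Nl subswarms of Nl particles each."""
    if n_u % n_l != 0:
        raise ValueError("Nu must be a multiple of Nl")
    n_s = n_u // n_l
    # Velocities are drawn from the same box, since the text samples vj
    # "in the feasible space" as well.
    return [[Particle(x=sample(x_bounds, rng), y=sample(y_bounds, rng),
                      vx=sample(x_bounds, rng), vy=sample(y_bounds, rng))
             for _ in range(n_l)]
            for _ in range(n_s)]


# Sizes from Example 4.1 (x in R1, y in R2); the bounds are made up.
P0 = init_population(200, 40, [(0.0, 1.0)], [(-1.0, 1.0), (-1.0, 1.0)],
                     random.Random(42))
print(len(P0), len(P0[0]))  # 5 40
```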

Substep 1.2. Set the outer loop counter t := 0.

Step 2. For each subswarm k (k = 1, 2, …, ns), assign each particle a nondomination rank NDl and a crowding distance CDl in f space (the lower level objective space). Then combine all subswarms into one population, named Pt, and assign each particle a nondomination rank NDu and a crowding distance CDu in F space (the upper level objective space).
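Steps 2, 4.5, 5, and 8.5 all assign nondomination ranks and crowding distances in the style of NSGA-II. The following compact Python sketch assumes all objectives are minimized; the function names are illustrative. Applied to the lower level vectors f of one subswarm it gives NDl and CDl, and applied to the upper level vectors F of the merged population it gives NDu and CDu.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dominates(a: Vector, b: Vector) -> bool:
    """Pareto dominance for minimization."""
    return (all(ai <= bi for ai, bi in zip(a, b))
            and any(ai < bi for ai, bi in zip(a, b)))


def nondomination_ranks(objs: Sequence[Vector]) -> List[int]:
    """Fast nondominated sorting; rank 1 is the first (best) front."""
    n = len(objs)
    beats: List[List[int]] = [[] for _ in range(n)]  # points that i dominates
    beaten_by = [0] * n                              # how many points dominate i
    ranks = [0] * n
    front: List[int] = []
    for i in range(n):
        for j in range(n):
            if i != j and dominates(objs[i], objs[j]):
                beats[i].append(j)
            elif i != j and dominates(objs[j], objs[i]):
                beaten_by[i] += 1
        if beaten_by[i] == 0:
            ranks[i] = 1
            front.append(i)
    rank = 1
    while front:
        next_front: List[int] = []
        for i in front:
            for j in beats[i]:
                beaten_by[j] -= 1
                if beaten_by[j] == 0:  # all its dominators are ranked
                    ranks[j] = rank + 1
                    next_front.append(j)
        rank += 1
        front = next_front
    return ranks


def crowding_distances(objs: Sequence[Vector], ranks: Sequence[int]) -> List[float]:
    """Crowding distance, computed separately within each front."""
    n = len(objs)
    cd = [0.0] * n
    if n == 0:
        return cd
    for r in set(ranks):
        idx = [i for i in range(n) if ranks[i] == r]
        for k in range(len(objs[0])):
            idx.sort(key=lambda i: objs[i][k])
            lo, hi = objs[idx[0]][k], objs[idx[-1]][k]
            cd[idx[0]] = cd[idx[-1]] = float("inf")  # always keep boundary points
            if hi == lo:
                continue
            for p in range(1, len(idx) - 1):
                cd[idx[p]] += (objs[idx[p + 1]][k] - objs[idx[p - 1]][k]) / (hi - lo)
    return cd
```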

Step 3. The nondominated particles of Pt with both NDu = 1 and NDl = 1 are saved in the elite set At.

Step 4. For the kth subswarm (k = 1,2, …, ns), update the lower level decision variables.

Substep 4.1. Initialize the lower level loop counter tl := 0.

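Substep 4.2. Update the position and velocity of the lower level variables yj of the jth particle (j = 1, 2, …, Nl), keeping xj and its velocity fixed, using the PSO update with inertia weight wl and learning rates c1l and c2l.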
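The update in Substep 4.2 (and, with wu, c1u, and c2u, in Substep 8.2) can be sketched in Python. This assumes the standard inertia-weight PSO rule v ← w·v + c1·r1·(pbest − pos) + c2·r2·(gbest − pos), followed by pos ← pos + v, with fresh r1, r2 drawn uniformly from [0, 1) per component. That per-component convention and the name `pso_step` are assumptions. Only the variables of the level being optimized are passed in, so the other level's variables stay fixed.

```python
import random
from typing import List, Sequence, Tuple


def pso_step(pos: Sequence[float], vel: Sequence[float],
             pbest: Sequence[float], gbest: Sequence[float],
             w: float, c1: float, c2: float,
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """One inertia-weight PSO move; returns (new_pos, new_vel)."""
    new_pos: List[float] = []
    new_vel: List[float] = []
    for p, v, pb, gb in zip(pos, vel, pbest, gbest):
        r1, r2 = rng.random(), rng.random()  # per-component random factors
        nv = w * v + c1 * r1 * (pb - p) + c2 * r2 * (gb - p)
        new_vel.append(nv)
        new_pos.append(p + nv)
    return new_pos, new_vel


# Lower level move: only y and its velocity change; x is untouched.
rng = random.Random(0)
y, vy = [0.2, -0.4], [0.0, 0.1]
y_pbest, y_gbest = [0.1, -0.3], [0.0, 0.0]
y, vy = pso_step(y, vy, y_pbest, y_gbest, w=0.7298, c1=1.49618, c2=1.49618, rng=rng)
```

The parameter values in the usage line are those given in Section 4. Position clamping to the feasible box is not described in the text and is omitted here.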

Substep 4.3. Set tl := tl + 1.

Substep 4.4. If tl ≥ Tl, go to Substep 4.5; otherwise, go to Substep 4.2.

Substep 4.5. Reassign each particle of the kth subswarm a nondomination rank NDl and a crowding distance CDl in f space. Then combine all resulting subswarms into one population, named Qt, and reassign each particle a nondomination rank NDu and a crowding distance CDu in F space.

Step 5. Combine populations Pt and Qt to form Rt. Reassign each particle of Rt a nondomination rank NDu, and assign the particles sharing the same rank a crowding distance CDu in F space.

Step 6. Select half of the particles of Rt, subswarm by subswarm. Particles of rank NDu = 1 are considered first: among them, the particles with NDl = 1 are taken one by one in decreasing order of crowding distance CDu, and for each such particle the subswarm it belongs to in its source population (either Pt or Qt) is copied into an intermediate population St. If a subswarm has already been copied into St, it is not copied again when another of its particles with NDu = NDl = 1 is encountered. After all particles with NDu = 1 have been considered, the same procedure is applied to NDu = 2, and so on, until exactly ns subswarms have been copied into St.
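The selection rule of Step 6 can be sketched in Python as follows. Each particle of Rt is assumed to be a dict with the hypothetical keys `ND_u`, `ND_l`, `CD_u`, and `swarm`, where `swarm` identifies its source subswarm, for example ("P", k) or ("Q", k). The function returns the identifiers of the ns subswarms to copy into St.

```python
from typing import Hashable, List, Sequence


def select_subswarms(particles: Sequence[dict], n_s: int) -> List[Hashable]:
    """Return up to n_s subswarm ids, following the order of Step 6."""
    if not particles:
        return []
    chosen: List[Hashable] = []
    seen = set()
    max_rank = max(p["ND_u"] for p in particles)
    # Every subswarm has at least one particle with ND_l = 1 and Rt holds
    # 2 * ns subswarms, so ns distinct picks are always reachable.
    for rank in range(1, max_rank + 1):
        candidates = [p for p in particles if p["ND_u"] == rank and p["ND_l"] == 1]
        candidates.sort(key=lambda p: p["CD_u"], reverse=True)  # least crowded first
        for p in candidates:
            key = p["swarm"]
            if key in seen:  # subswarm already copied into St
                continue
            seen.add(key)
            chosen.append(key)
            if len(chosen) == n_s:
                return chosen
    return chosen


R_t = [
    {"swarm": ("P", 0), "ND_u": 1, "ND_l": 1, "CD_u": 0.5},
    {"swarm": ("Q", 0), "ND_u": 1, "ND_l": 1, "CD_u": float("inf")},
    {"swarm": ("P", 0), "ND_u": 1, "ND_l": 1, "CD_u": 0.2},
    {"swarm": ("P", 1), "ND_u": 1, "ND_l": 2, "CD_u": 9.0},
    {"swarm": ("Q", 1), "ND_u": 2, "ND_l": 1, "CD_u": 1.0},
    {"swarm": ("P", 1), "ND_u": 2, "ND_l": 1, "CD_u": 3.0},
]
print(select_subswarms(R_t, 3))  # [('Q', 0), ('P', 0), ('P', 1)]
```

In the usage data, the rank-1 particle of ("P", 1) is skipped because its NDl is 2, so that subswarm is only picked up when rank NDu = 2 is reached.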

Step 7. Update the elite set At: the nondominated particles of St with both NDu = 1 and NDl = 1 are saved in At.

Step 8. Update the upper level decision variables in St.

Substep 8.1. Initialize the upper level loop counter tu := 0.

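Substep 8.2. Update the position and velocity of the upper level variables xi of the ith particle (i = 1, 2, …, Nu), keeping yi and its velocity fixed, using the PSO update with inertia weight wu and learning rates c1u and c2u.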

Substep 8.3. Set tu := tu + 1.

Substep 8.4. If tu ≥ Tu, go to Substep 8.5; otherwise, go to Substep 8.2.

Substep 8.5. Every member is then assigned a nondomination rank NDu and a crowding distance value CDu in F space.

Step 9. Set t := t + 1.

Step 10. If t ≥ T, output the elite set At. Otherwise, go to Step 2.
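The overall control flow of Steps 1 to 10 can be summarized by the following minimal Python skeleton. The callables `init`, `rank_and_archive`, `lower_level_pso`, `select_half`, and `upper_level_pso` are hypothetical placeholders for the operations described above. Treating the updated St as the next population Pt+1 is an assumption, since the steps do not name it explicitly.

```python
from typing import Callable


def run_outer_loop(T: int,
                   init: Callable[[], list],
                   rank_and_archive: Callable[[list, list], None],
                   lower_level_pso: Callable[[list], list],
                   select_half: Callable[[list, list], list],
                   upper_level_pso: Callable[[list], list]) -> list:
    """Control flow of Steps 1-10; every callable is a hypothetical hook."""
    archive: list = []                                  # elite set A_t
    population = init()                                 # Step 1: P_0
    t = 0
    while True:
        rank_and_archive(population, archive)           # Steps 2-3
        offspring = lower_level_pso(population)         # Step 4: Q_t
        selected = select_half(population, offspring)   # Steps 5-6: S_t
        rank_and_archive(selected, archive)             # Step 7
        population = upper_level_pso(selected)          # Step 8 (assumed P_{t+1})
        t += 1                                          # Step 9
        if t >= T:                                      # Step 10
            return archive
```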

In Steps 4 and 8, the global best position is chosen at random from the elite set At. The personal best position is updated as follows: if the current position is dominated by the previous personal best, the previous one is kept; if the current position dominates the previous one, the current position replaces it; if neither dominates the other, one of them is selected at random. A relatively simple scheme is used to handle constraints: whenever two individuals are compared, their constraints are checked. If both are feasible, Pareto dominance is applied directly to decide which one is selected. If one is feasible and the other is infeasible, the feasible one dominates. If both are infeasible, the one with the smaller amount of constraint violation dominates the other. The notation used in the proposed algorithm is summarized in Table 1.

Table 1: Notations used in the proposed algorithm.

| Symbol | Description |
|---|---|
| xi | The ith particle’s position for the upper level problem. |
| vxi | The velocity of xi. |
| yj | The jth particle’s position for the lower level problem. |
| vyj | The velocity of yj. |
| zj | The jth particle’s position for the BLMPP, zj = (xj, yj). |
|  | The jth particle’s personal best position for the lower level problem. |
|  | The ith particle’s personal best position for the upper level problem. |
|  | The global best position for the lower level problem. |
|  | The global best position for the upper level problem. |
| Nu | The population size for the upper level problem. |
| Nl | The subswarm size for the lower level problem. |
| t | Current iteration number for the overall problem. |
| T | Predefined maximum iteration number for t. |
| tu | Current iteration number for the upper level problem. |
| tl | Current iteration number for the lower level problem. |
| Tu | Predefined maximum iteration number for tu. |
| Tl | Predefined maximum iteration number for tl. |
| wu | Inertia weight for the upper level problem. |
| wl | Inertia weight for the lower level problem. |
| c1u | Cognitive learning rate for the upper level problem. |
| c2u | Social learning rate for the upper level problem. |
| c1l | Cognitive learning rate for the lower level problem. |
| c2l | Social learning rate for the lower level problem. |
| NDu | Nondomination rank for the upper level problem. |
| CDu | Crowding distance for the upper level problem. |
| NDl | Nondomination rank for the lower level problem. |
| CDl | Crowding distance for the lower level problem. |
| Pt | Population at the tth iteration. |
| Qt | Offspring of Pt. |
| St | Intermediate population. |
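The personal best rule and the constraint-handling comparison described before Table 1 can be sketched in Python. The sketch assumes constraints are written as g ≤ 0 and that the "amount of constraint violation" is the sum of positive parts. That aggregation and the names `total_violation`, `constrained_dominates`, and `choose_pbest` are assumptions.

```python
import random
from typing import Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Pareto dominance for minimization."""
    return (all(ai <= bi for ai, bi in zip(a, b))
            and any(ai < bi for ai, bi in zip(a, b)))


def total_violation(g_values: Sequence[float]) -> float:
    """Sum of violations for constraints of the form g(x, y) <= 0."""
    return sum(max(0.0, g) for g in g_values)


def constrained_dominates(fa: Sequence[float], viol_a: float,
                          fb: Sequence[float], viol_b: float) -> bool:
    """True if solution a beats solution b under the constraint rule."""
    if viol_a == 0.0 and viol_b == 0.0:  # both feasible: plain Pareto dominance
        return dominates(fa, fb)
    if viol_a == 0.0 or viol_b == 0.0:   # exactly one feasible: it wins
        return viol_a == 0.0
    return viol_a < viol_b               # both infeasible: smaller violation wins


def choose_pbest(cur_f: Sequence[float], cur_viol: float,
                 prev_f: Sequence[float], prev_viol: float,
                 rng: random.Random) -> str:
    """Return "previous" or "current" as the new personal best."""
    if constrained_dominates(prev_f, prev_viol, cur_f, cur_viol):
        return "previous"
    if constrained_dominates(cur_f, cur_viol, prev_f, prev_viol):
        return "current"
    return rng.choice(["previous", "current"])  # mutually nondominated
```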

4. Numerical Examples

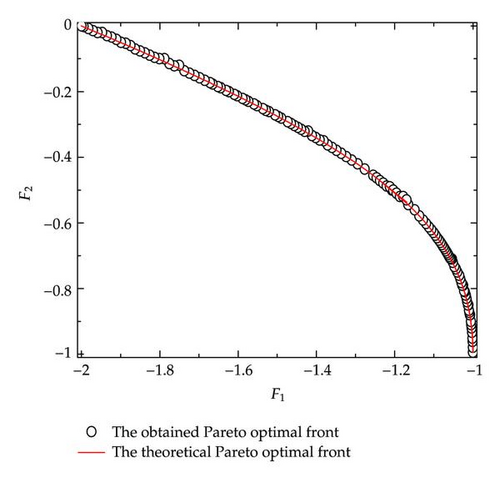

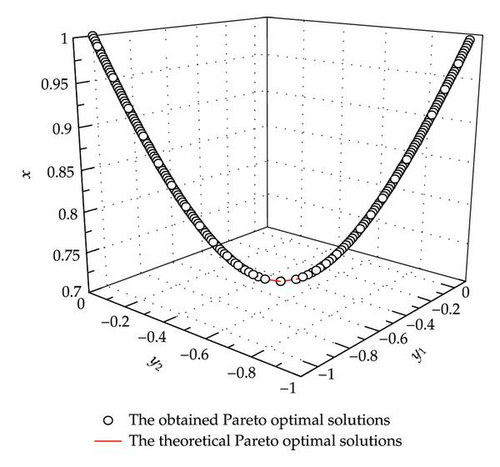

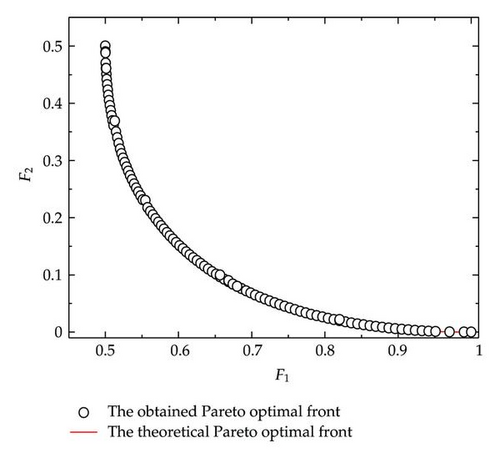

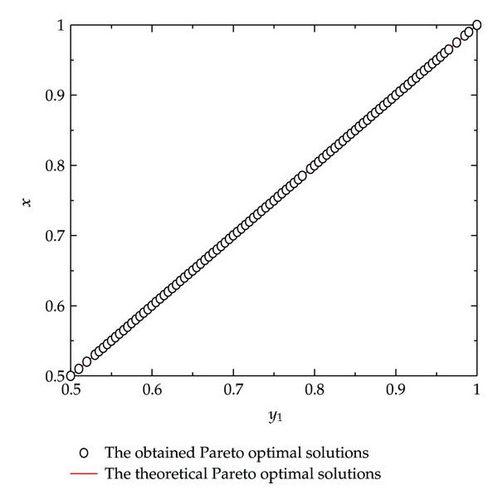

In this section, three examples are considered to illustrate the feasibility of the proposed algorithm for problem (2.1). To evaluate both the closeness of the obtained Pareto optimal front to the theoretical Pareto optimal front and the diversity of the obtained solutions along the theoretical front, we adopt the following evaluation metrics.

4.1. Generational Distance (GD)

The generational distance is computed as

$$GD = \frac{\sqrt{\sum_{i=1}^{n} d_i^{2}}}{n},$$

where n is the number of Pareto optimal solutions obtained by the proposed algorithm and $d_i$ is the Euclidean distance between the ith obtained Pareto optimal solution and the nearest member of the theoretical Pareto optimal set.
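A minimal Python sketch of GD follows. It assumes the common form GD = √(Σ d_i²)/n and a theoretical front supplied as a finite sample of points. The function name and that sampling are assumptions.

```python
import math
from typing import Sequence

Point = Sequence[float]


def generational_distance(obtained: Sequence[Point],
                          reference: Sequence[Point]) -> float:
    """GD = sqrt(sum of d_i^2) / n, with d_i the distance to the nearest reference point."""
    if not obtained or not reference:
        raise ValueError("both point sets must be non-empty")
    total = 0.0
    for a in obtained:
        d = min(math.dist(a, r) for r in reference)  # nearest theoretical point
        total += d * d
    return math.sqrt(total) / len(obtained)
```

A value of 0 means every obtained solution lies on the sampled theoretical front.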

4.2. Spacing (SP)

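The spacing metric is computed as

$$SP = \frac{\sum_{m=1}^{M} d_m^{e} + \sum_{i=1}^{n} \lvert \bar{d} - d_i \rvert}{\sum_{m=1}^{M} d_m^{e} + n\,\bar{d}},$$

where $d_i$ is the distance in F space from the ith obtained solution to its nearest neighbor among the obtained solutions, $\bar{d}$ is the mean of all $d_i$, $d_m^{e}$ is the Euclidean distance between the extreme solutions of the obtained Pareto optimal solution set and of the theoretical Pareto optimal solution set on the mth objective, M is the number of upper level objective functions, and n is the number of solutions obtained by the proposed algorithm.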
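The following Python sketch computes SP in the form (Σ_m d_m^e + Σ_i |d̄ − d_i|) / (Σ_m d_m^e + n·d̄). Three readings are assumptions: d_i is taken as the L1 distance from the ith obtained solution to its nearest neighbor in the obtained set, the extreme solution on the mth objective is the point with the smallest value of that objective, and the theoretical front is supplied as a finite sample. Lower values indicate a more uniform spread.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def nn_l1_distances(points: Sequence[Point]) -> List[float]:
    """d_i: L1 distance from each point to its nearest other point."""
    n = len(points)
    return [min(sum(abs(a - b) for a, b in zip(points[i], points[j]))
                for j in range(n) if j != i)
            for i in range(n)]


def extreme_distance_sum(obtained: Sequence[Point], reference: Sequence[Point]) -> float:
    """Sum over objectives of the distance between the two extreme points."""
    total = 0.0
    for k in range(len(obtained[0])):
        e_obt = min(obtained, key=lambda p: p[k])   # obtained extreme on objective k
        e_ref = min(reference, key=lambda p: p[k])  # theoretical extreme on objective k
        total += math.dist(e_obt, e_ref)
    return total


def spacing(obtained: Sequence[Point], reference: Sequence[Point]) -> float:
    if len(obtained) < 2:
        raise ValueError("need at least two obtained solutions")
    d = nn_l1_distances(obtained)
    d_mean = sum(d) / len(d)
    d_e = extreme_distance_sum(obtained, reference)
    numerator = d_e + sum(abs(d_mean - di) for di in d)
    denominator = d_e + len(d) * d_mean
    if denominator == 0.0:  # degenerate case: all points coincide
        return 0.0
    return numerator / denominator
```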

The PSO parameters are set as follows: r1u, r2u, r1l, and r2l are random numbers in (0, 1), the inertia weights are wu = wl = 0.7298, and the acceleration coefficients are c1u = c2u = c1l = c2l = 1.49618. All results presented in this paper were obtained on a personal computer (CPU: AMD Phenom II X6 1055T, 2.80 GHz; RAM: 3.25 GB) using a C# implementation of the proposed algorithm, and the figures were produced with Origin 8.0.

Example 4.1. This example is taken from [22]. Here x ∈ R1 and y ∈ R2. The population sizes and iteration counts are set as follows: Nu = 200, Tu = 200, Nl = 40, Tl = 40, and T = 40.

Example 4.2. This example is taken from [36]. Here x ∈ R1 and y ∈ R2. The population sizes and iteration counts are set as follows: Nu = 200, Tu = 50, Nl = 40, Tl = 20, and T = 40.

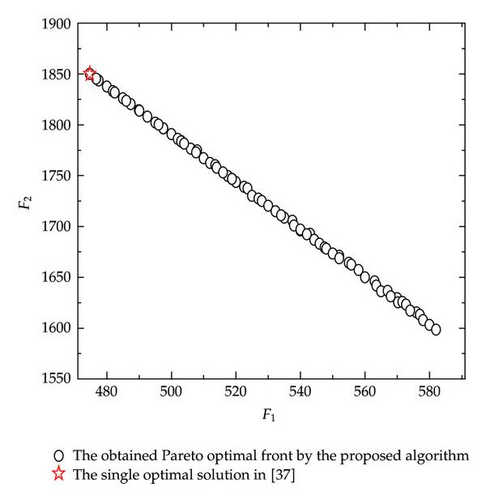

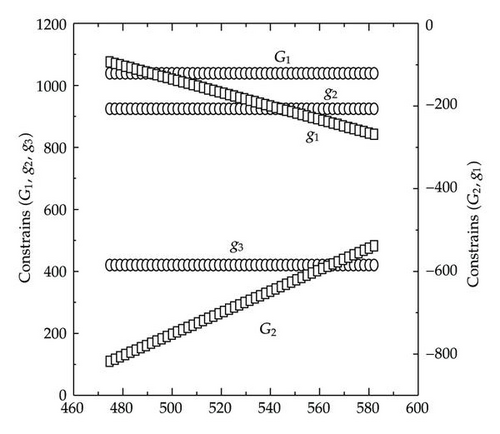

Example 4.3. This example is taken from [37], in which the theoretical Pareto optimal front is not given. Here x ∈ R2 and y ∈ R3. The population sizes and iteration counts are set as follows: Nu = 100, Tu = 50, Nl = 20, Tl = 10, and T = 40.

5. Conclusion

In this paper, an improved PSO is presented for the BLMPP. The BLMPP is transformed into multiobjective optimization problems at the upper and lower levels, which are solved interactively by the proposed algorithm for a predefined number of iterations, and a set of Pareto optimal solutions of the BLMPP is obtained by the elite strategy. Where the theoretical Pareto optimal front is known, the experimental results show that the front obtained by the proposed algorithm is very close to it and that the solutions are distributed uniformly over its entire range. Furthermore, the proposed algorithm is simple and easy to implement, and it offers an appealing alternative approach for further study of the BLMPP.

Acknowledgment

This work is supported by the National Science Foundation of China (71171151, 50979073).