Approximate Implicitization Using Linear Algebra

Abstract

We consider a family of algorithms for approximate implicitization of rational parametric curves and surfaces. The main approximation tool in all of the approaches is the singular value decomposition, and they are therefore well suited to floating-point implementation in computer-aided geometric design (CAGD) systems. We unify the approaches under the names of commonly known polynomial basis functions and consider various theoretical and practical aspects of the algorithms. We offer new methods for a least squares approach to approximate implicitization using orthogonal polynomials, which tend to be faster and more numerically stable than some existing algorithms. We propose several simple propositions relating the properties of the polynomial bases to their implicit approximation properties.

1. Introduction

Implicitization algorithms have been studied in both the CAGD and algebraic geometry communities for many years. Traditional approaches to implicitization have focused on exact methods such as Gröbner bases, resultants and moving curves and surfaces, or syzygies [1]. Approximate methods that are particularly well suited to floating-point implementation have also emerged in the past 25 years [2–6]. These methods are closely related to the algorithms we present; however, those that fit most closely into the framework of this paper include [4, 7–9].

Implicitization is the conversion of parametrically defined curves and surfaces into curves and surfaces defined by the zero set of a single polynomial. Exact implicit representations of rational parametric manifolds often have very high polynomial degrees, which can cause numerical instabilities and slow floating-point calculations. In cases where the geometry of the manifold is not sufficiently complicated to justify this high degree, approximation is often desirable. Moreover, for CAGD systems based on floating point arithmetic, exact implicitization methods are often unfeasible due to performance issues. The methods we present attempt to find “best fit” implicit curves or surfaces of a given degree m (the definition of “best fit” varies with regard to the chosen method of approximation). One important property of all the algorithms is that they are guaranteed to give exact implicitizations for sufficiently high implicit degrees, up to numerical stability. In addition, some of the methods are also suitable for implementation in exact arithmetic, hence constituting alternative methods for exact implicitization.

For simplicity of notation, we proceed for the majority of the paper to describe the implicitization of curves. In Sections 2, 3, and 4, we introduce the notation and review existing methods. In Section 5, we present a new method for approximate implicitization using orthogonal polynomials and prove a theoretical relation to the previous methods. Implementations of the methods using different basis functions will be presented in Section 6 and a qualitative comparison and discussion given in Section 7. Finally, the generalization to both tensor-product and triangular surfaces will be covered in Section 8.

2. Preliminaries

Since we are searching for implicit representations and want to avoid the trivial solution q ≡ 0, we add a normalization constraint to q in the approximation. How best to make this choice has been discussed by several authors (see [6] for an overview). However, as we use the singular value decomposition (SVD) as the means of approximation, our results are given with the quadratic normalization ∥b∥2 = 1.

The techniques in this paper focus on minimization of the objective function q∘p over the space of polynomials {q : ∥b∥2 = 1}, where q is defined by (2.2) in a fixed implicit basis. Such a minimization, although not directly minimizing the Hausdorff distance between the implicit and parametric curves, is closely related to the geometric approximation problem. It has been shown that minimization of q∘p gives excellent results in geometric space away from singularities [5].

3. Approximate Implicitization: The Original Approach

In 1997, a class of techniques for approximate implicitization of rational parametric curves, surfaces, and hypersurfaces was introduced in [5]. The approximation quality of the techniques was substantiated by a general proof that the methods exhibit very high convergence rates, as shown in Tables 1 and 2. Extensions of this original approach, all of which inherit these high convergence rates, form the basis of this paper.

| Algebraic degree m | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| Convergence rate k | 2 | 5 | 9 | 14 | 20 | 27 | 35 | 44 |

| Algebraic degree m | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

|---|---|---|---|---|---|---|---|---|

| Convergence rate k | 2 | 3 | 5 | 7 | 10 | 12 | 14 | 17 |

Given a choice of basis functions , we summarize the general approach of this section in the following algorithm.

Algorithm 3.1. Input: a rational parametric curve p(t) of degree n on the interval [0,1], and a degree m for the implicit polynomial.

- (1)

For each basis function , compute the vector of coefficients such that .

- (2)

Construct a matrix from the column vectors dk.

- (3)

Perform an SVD Dα = UΣVT, and select b = vmin , the right singular vector corresponding to the smallest singular value σmin as an approximate solution.

This algorithm is known as the original method in the α-basis; however, for this paper, we will refer to it simply as the α-method for an arbitrary basis α.

4. Weak Approximate Implicitization

This problem, unlike the minimax problem, can be solved directly if the parametric components are integrable. We simply take b = vmin , the eigenvector corresponding to the smallest eigenvalue of Mw. Since the matrix is symmetric, it has orthonormal eigenvectors. Similarly to the previous method, eigenvectors corresponding to larger eigenvalues give gradually degenerating approximations.

We summarize this algorithm, for a given weight function w, as follows.

Algorithm 4.1. Input: a parametric curve p(t) on the interval [0,1], and a degree m for the implicit polynomial.

- (1)

Construct a matrix Mw by performing the integrals according to

()for k, l = 1, …, M. - (2)

Compute the eigendecomposition Mw = VΛVT.

- (3)

Select b = vmin , the eigenvector corresponding to the smallest eigenvalue λmin .

Algorithms following the procedure above are known under different names in the literature; weak approximate implicitization in [8], numerical implicitization in [4], and the eigenvalue method in [11]. For the rest of this paper, we will call it the weak method.

As previously mentioned, the methods of this section are suitable for either exact or approximate implicitization. They can be performed using either symbolic or numerical integration; however, the former is generally only required when performing exact implicitizations in exact precision. For applications where floating-point precision is sufficient, numerical quadrature rules provide a much faster alternative. The methods also have wide generality since they can be applied to any parametric forms with integrable components, not only rational parametric forms. There are, however, some significant disadvantages in choosing this method in practice. Firstly, due to the high degree of the integrand, the integrals can take a relatively long time to evaluate, even when numerical quadrature rules are used. Secondly, and more importantly, the condition numbers of the matrices Mw can be significantly larger than the condition numbers of the Dα matrices from the previous section, leading to issues with numerical stability.

Since Mw is a symmetric positive (semi) definite matrix, it has a decomposition Mw = KTK, where the singular values of K are the square roots of the singular values of Mw. This decomposition is not unique; however, in the next section, we show that it is possible to construct such a matrix directly, without first computing Mw. The condition number of K will be the square root of the condition number of Mw; hence, we obtain the solution to the least squares problem in a more stable manner. Section 5.1 demonstrates how the lack of stability in the weak method compares to the new method described in the following section.

5. Approximate Implicitization Using Orthonormal Bases

Theorem 5.1. Let α be a polynomial basis, orthonormal with respect to the given inner product 〈·, ·〉 w, and Dα and Mw be defined by (3.2) and (4.3), respectively. Then, .

Proof. By (5.1) and the definition of Mw, we have for any basis α. But since α is orthonormal with respect to the inner product 〈·, ·〉 w, we have (i.e., A = I).

We should note that the matrix Dα is of dimension L × M, compared with the M × M matrix Mw. For n ≥ 3, we have L ≥ M, so finding the singular vectors of Dα may be more computationally expensive than computing the eigenvectors of Mw. However, since the matrix construction is usually the dominant part of the algorithm, these differences do not affect the overall complexity of the algorithms. Moreover, the increase in accuracy justifies any small increase in computational complexity in part of the algorithm.

5.1. Example

6. Examples of the Original Approach with Different Bases

The previous sections justified why the original approach is preferable to the weak approach in cases where the expression q(p(t)) can be expressed in terms of orthogonal functions. In this section, we will look at examples of specific implementations using orthogonal polynomial bases. We will also consider alternative implementations using nonorthogonal bases, which produce approximations to the least squares solution. We state some simple propositions which unify the methods and also consider computational aspects of the algorithms.

6.1. Jacobi Polynomial Bases

Proposition 6.1. Let DP be the matrix defined by (3.2) in a Jacobi polynomial basis , for any α, β > −1, normalized to ∥Pj∥∞ = 1 for j = 1, …, L. Then,

Proof. Since an orthogonal polynomial basis is degree ordered, one of the functions must be identically a nonzero constant, which, by the normalization condition, is equal to 1. Consider the vector b = (1, …, 1), which ensures that q(p(t)) = 1 for all t ∈ [0,1], by the partition of unity on the implicit basis . Clearly, only the basis function of degree zero can have a nonzero coefficient. By (3.2), we have the expansion

One property of Chebyshev expansions of a continuous function is that the error introduced by truncating the expansion is dominated by the first term after the truncation, if the coefficients decay quickly enough [14]. For curves that we wish to approximate (with relatively simple forms), the coefficients do tend to decay quickly, so the coefficients in the lower rows of the matrix tend to be dominated by those above them.

The Chebyshev basis is well known for giving good approximations to minimax problems in approximation theory (see [14] for an overview). This also seems to be the case for approximate implicitization, with the resulting error normally being close to equioscillating. In fact, experiments show that in almost all test cases, the number of roots in the error function given by the Chebyshev method is greater than or equal to the convergence rate, for the given implicit degree m (see Table 1). Thus the Chebyshev method appears to give a “near best” approximation in the sense that the error normally oscillates a maximum number of times.

Our experience in the choice between Legendre and Chebyshev polynomials is that the difference in approximation quality is minor. Chebyshev expansions are slightly quicker to compute and require less programming effort than their Legendre counterparts [13]. In addition they tend to eliminate the spike in error at the end of the intervals that appears in the Legendre method. However, both algorithms provide efficient and numerically stable methods for (weighted) least squares approximation over the entire interval Ω.

6.2. Discrete Approximate Implicitization: The Lagrange Basis

The result of approximate implicitization in the Lagrange basis depends both on the number of points sampled and the density of the point distribution in the parameter domain. Since Lagrange polynomials are neither orthogonal nor degree ordered, they do not solve a least squares problem of type (8.4). However, we can form a direct relation between the discrete and continuous least squares problems, as follows.

Proposition 6.2. Let p(t) be a planar parametric curve with bounded, piecewise continuous components on the interval [0,1], and let DL,L be the matrix defined by (6.4) at uniform nodes. Then, the (k, l) element of converges to a constant multiple of the (k, l) element of M1, defined by (4.3), as the number of samples L is increased.

Proof. Since each of the parametric components of the curve is bounded and piecewise continuous on the interval and qk is a polynomial, we know that qk∘p is bounded and piecewise continuous for each k. Let hL : = 1/(L − 1). Then, for uniform samples , where tj = hL(j − 1) in the parameter domain, we see that

Sampling more points gives ever closer approximations of the true least squares approximation (for any given L, the constant hL is absorbed into the singular values of the matrix and does not affect the singular vectors). However, as we have seen, the Legendre method can solve the (unweighted) least squares problem exactly, without excessive sampling and in a way that best preserves the numerical precision. Although the point sampling method does tend to give good approximations when the number of samples L is large enough, it is a relatively small increase in computational complexity and programming effort to use Legendre expansions instead. The main strength of the Lagrange method lies in its simplicity: it is easy to implement, computationally inexpensive, and highly parallelizable.

Proposition 6.3. Let p(t) be a planar parametric curve with bounded, piecewise continuous components on the interval [0,1], and let DL,L be the matrix defined by (6.4) at Chebyshev points given by (6.7). Then, the (k, l) element of converges to a constant multiple of the (k, l) element of Mw, defined by (4.3) with the weight function , as the number of samples L is increased.

Proof. We use the change of variable t(θ) = (1 − cos (πθ))/2, and note first that

6.3. Bernstein Polynomial Basis

The Bernstein method is closely related to both the Lagrange and Legendre methods seen previously. It is in fact easy to see that the DB,L matrix in the Bernstein basis of degree L − 1 converges asymptotically to the DL,L matrix in the Lagrange basis of degree L − 1 at uniform nodes, as the degree is raised.

Proposition 6.4. Let DB,L be the matrix defined by (3.1) in the degree L − 1 Bernstein polynomial basis. Then, the (j, k) element of DB,L converges to the (j, k) element of DL,L, defined by (3.1) in the Lagrange polynomial basis at uniform nodes.

Proof. It is well known that the Bernstein coefficients of a polynomial tend to the values of the polynomial as the degree is raised, as follows [17]:

We can thus deduce the following convergence property of the Bernstein method as an immediate consequence of Propositions 6.2 and 6.4.

6.4. Exact Implicitization Using Linear Algebra

As mentioned previously, when the degree m is chosen high enough to give an exact implicit representation and the algorithms are implemented in exact precision, all the methods can give exact results. The choice of basis in the exact case is irrelevant to the resulting polynomial and affects only the implementation complexity and computational speed. For example, the elements of the matrix using the Lagrange method can be generated by choosing rational nodes represented in exact arithmetic. As with the floating-point implementation, the matrix can be built very quickly in parallel, but rather than using SVD, we can exploit algorithms for finding the kernel of a matrix. A similar method for exact implicitization is described in [9]. However, the matrix there is expanded in the monomial basis, which leads to computationally expensive expansions for high degrees. It is noted that it is possible to reduce the complexity of that method in certain cases by exploiting the special structures of the algorithm and sparsity in the resulting matrix. In general, sparsity is not a feature of the Lagrange method; however, the matrices can be built more efficiently. The Bernstein method can also be implemented in exact arithmetic; however, similarly to the method in [9], it suffers from issues with computationally expensive expansions. Although it is possible to implement the Chebyshev and Legendre methods in exact precision using the elementwise definition (5.3), the evaluation of such integrals will be slow. The fast algorithms for generating the matrices using FFT are not suitable for an exact implementation.

When using the original method for approximate implicitization, we represent the error function q∘p in a basis of degree mn. In the Lagrange basis, we thus choose the number of nodes L, to be one more than the degree of the basis L = mn + 1. This is shown to be the smallest number of samples one can take in order to guarantee an exact implicitization method in the following proposition.

Proposition 6.6. Suppose one is given a nondegenerate rational parametric planar curve p(t) of degree n (i.e., the degree of the algebraic representation is n). Then, the number of unique samples required to guarantee an exact implicitization by the Lagrange method is given by K = n2 + 1.

Proof. Since the implicitization is exact, we know that there exists a unique polynomial q of degree n with coefficients b such that q∘p ≡ 0. By the theory described in Section 3, we can write

To see that choosing fewer than K points is insufficient, we consider parameter values corresponding to double points on the curve. Let K1 = (1/2)(n + 1)(n + 2) − 1 denote the minimum number of points in general position on a curve of degree n required to define the curve. Let the total number of possible double points on a rational curve of degree n be given by K2 = (1/2)(n − 2)(n − 1) [18]. Then up to K2 points on the curve can correspond to more than one parameter value. Thus, the minimum number of samples guaranteeing a unique exact implicitization is given by

When searching for exact implicitizations, we generally want the implicit polynomial of lowest degree that contains the parametric curve. Since the normalized coefficient vector b, given by an exact implicitization of lowest degree is unique, the kernel of the matrix would be expected to be 1-dimensional. A kernel of dimension higher than one indicates that the implicit polynomial defined by any vector in the kernel is reducible, and thus the degree m can be reduced.

7. Comparison of the Algorithms

So far, we have presented several approaches to exact and approximate implicitization using linear algebra. The approaches exhibit different qualities in terms of approximation, conditioning, and computational complexity. The intention of this section is to provide a comparison of the algorithms.

7.1. Algebraic Error Comparisons

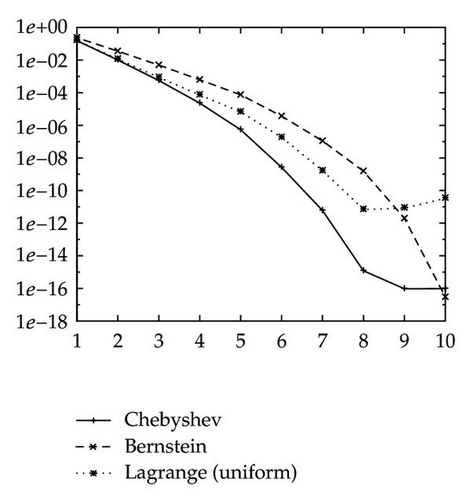

Figure 1 plots the average uniform algebraic error, max t∈[0,1] | q(p(t))|, of the approximations of 100 Bézier curves of degree 10, for algebraic degrees m = 1, …, 10. We use a barycentric coordinate system defined on the triangle (1,0), (0,0), and (0,1) for the implicit representation and choose random control points within this region to define the Bézier curves. We compare the performance of the Lagrange basis (at uniform nodes) the Bernstein basis, and the Chebyshev basis (Any reference to the Lagrange basis throughout this section will be assumed to be at uniformly spaced nodes.). As the results in the Chebyshev and Legendre bases are very similar, we include only the Chebyshev basis. The monomial basis, transformed to the interval [0,1], was also considered but not included due to its vastly inferior approximation qualities. All the algorithms were performed in double precision.

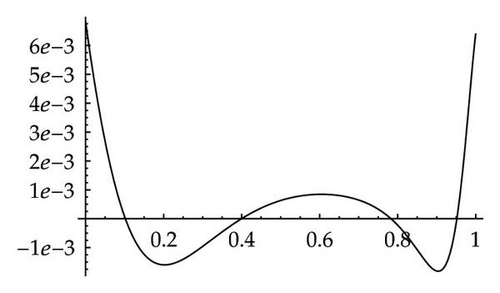

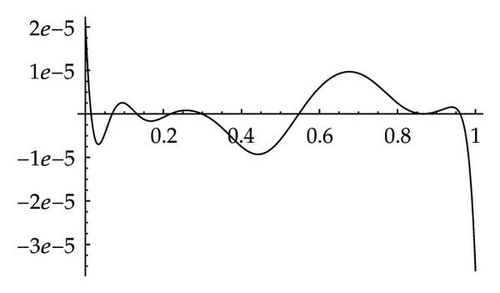

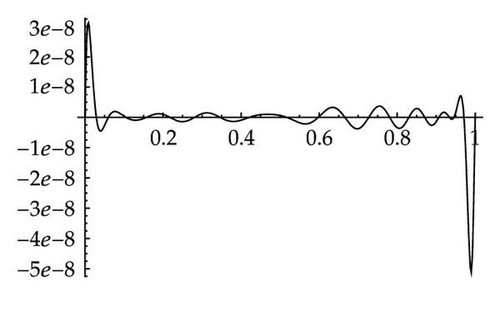

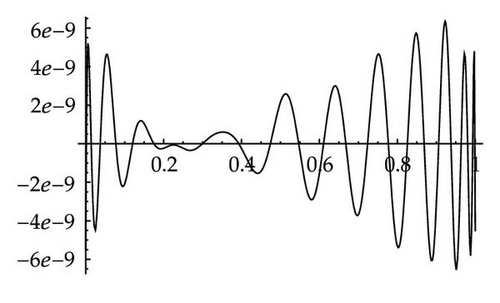

For each degree up to the exact degree, m = 10, the Chebyshev basis gave the best uniform minimization of the objective function q∘p. The maximum error for degree m in the Chebyshev basis was, in general, approximately equivalent to the maximal error in the Lagrange basis of degree m + 1 and the Bernstein basis of degree m + 2. For the Chebyshev basis, the maximal errors level out to roughly double-precision accuracy at degree eight, whereas, for the Bernstein basis, the required degree for machine precision was 10. Although the Lagrange basis performs better than the Bernstein basis for low degrees, the higher degree approximations are distorted to the extent that the exact implicitization, at degree 10, loses several orders of magnitude in accuracy. This appears to be due to the large deviation in the extrema of Lagrange basis functions at uniform nodes, which are associated with large Lebesgue constants. An example of such an error distribution is pictured in Figure 4(c). The spike in error can be reduced by sampling more points, as the error converges to the weak approximation (c.f., Proposition 6.2), or by choosing point distributions with smaller Lebesgue coefficients.

It should be noted that, for the Bernstein and Lagrange methods, the maximum of the algebraic error normally occurs at the end points of the interval and is normally much higher than the average error across the interval (see Figure 4). Moreover, the error away from the ends of the interval can sometimes be smaller in these bases than in the Chebyshev basis. Hence, they generally perform better than Figure 1 may suggest, in relation to the Chebyshev basis. The Chebyshev basis, however, tends to make the error roughly equioscillating throughout the interval. In addition, topological constraints imposed by approximating with lower degrees than necessary mean that, even when the algebraic error is small, the geometric error can be somewhat different, especially for curves with many singularities.

7.2. A Visual Comparison of the Methods

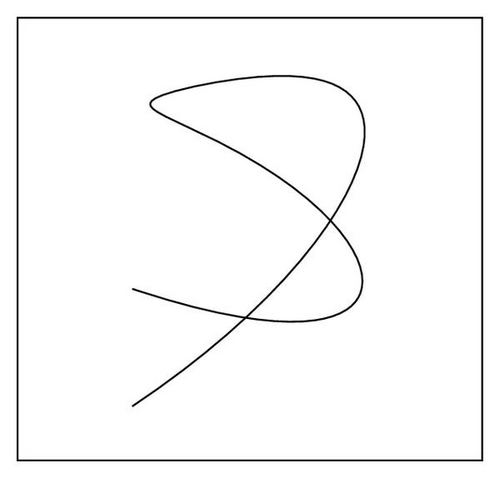

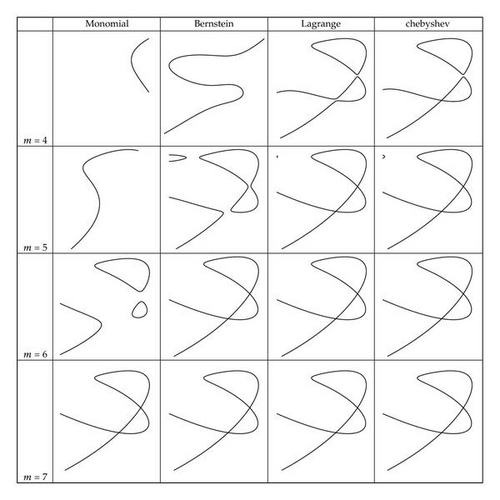

As discussed previously, minimizing the algebraic error does not necessarily minimize the geometric distance between the implicit and parametric curves. In order to visually compare how the methods perform in terms of geometric approximations, Figure 3 plots the implicit approximations of the parametric curve pictured in Figure 2. The curve was chosen as an example that represents the general approximation properties of each of the bases. However, it should be noted that different examples can give different results.

We see that, for the quartic approximation, the Lagrange and Chebyshev methods are already performing fairly well with only some detail lost close to the double point singularities. Despite exhibiting several intersections with the parametric curve, the Bernstein method gives little reproduction of the shape. The monomial approximation bears almost no resemblance to the original curve. For the quintic approximation, the Chebyshev and Lagrange bases again perform very similarly, giving excellent approximations that replicate the singularities well. These approximations would be sufficient for many applications. The Bernstein method performs similarly to the Chebyshev and Lagrange approximations of degree four, with only some loss of detail at each of the double points. Again the monomial basis gives almost no replication of the curve. It is also interesting to note the presence of extraneous branches visible in the Bernstein, Lagrange, and Chebyshev approximations at degree five. This is a feature which may occur with any of the methods. At degree six, the Bernstein, Lagrange, and Chebyshev methods all give excellent results over the entire interval. The monomial method is beginning to show good approximation at the centre of the interval; however, this deteriorates towards the ends. At degree seven, we expect exact results, up to numerical stability, for all of the algorithms. Visually, the implicitizations in all of the bases agree very closely.

It is also interesting to note that when attempting to use the weak method for approximate implicitization as an exact method here, we obtain a completely different solution, with relative error given approximately by eweak = 0.607. This seems to be due to the fact that the nine smallest eigenvalues (which are equal to the singular values, since M is symmetric positive semidefinite) are all below machine precision. Thus, the solution given by the weak method could be a combination of any of the nine corresponding eigenvectors. However, despite the large relative error, the weak method still returns a solution which gives a reasonable geometric approximation.

Typical algebraic error distributions obtained from the methods in this section are displayed in Figure 4. A more accurate approximation of the geometric error can be given by dividing the algebraic error by the norm of the gradient of the given implicit representation. However, since the methods we present do not control the gradient, we have not included such plots here.

7.3. Discussion

In the example of Section 7.2, it is striking how well the Lagrange basis performs in relation to the Chebyshev basis. Despite the spike in error near the ends of the interval, the geometric approximation appears to remain relatively good; in some cases, even better than the Chebyshev. Thus, the comparisons in this section may lead to different conclusions as to which is the best algorithm to choose in general. It is clear that the monomial basis performs relatively badly, but the other bases all tend to perform well. The speed and simplicity of the Lagrange method is appealing, whereas the stability of exact implicitization in the Bernstein basis is desirable for many applications. The fact that the Legendre and Chebyshev methods have the guarantee of solving a least squares problem (see Theorem 5.1) and that there exist efficient algorithms for computing them also gives justification for choosing them as general methods. As an overview of this qualitative comparison, we display various aspects of the implementations in Table 3. The table summarizes how the algorithms perform in terms of the stability, generality, and what sort of problems they solve (i.e., least squares or uniform approximations).

| Least squares | Uniform | Stability | Generality | |

|---|---|---|---|---|

| Lagrange | Good | Ok | Ok | Any |

| Legendre | Very good | Good | Ok | Rational |

| Chebyshev | Very good | Very good | Ok | Rational |

| Bernstein | Ok | Ok | Very good | Rational |

| Weak | Very good | Ok | Very bad | Integrable |

One undesirable property of approximate implicitization is the possibility of introducing new singularities that are not present in the parametric curve. As the implicit polynomial representation is global, we cannot control what happens outside the interval of approximation. In particular, there could appear self-intersections of the curve within the interval of interest. This is an artifact that can appear using any of the methods described in this paper. However, such problems can be avoided by adding constraints to the algebraic approximation [21] or by using information about the gradient of the implicit curve in the approximation [22]. In general, adding such constraints will reduce the convergence rates of these methods [7].

The computation times for each of the methods vary. In all the current implementations of the methods, the matrix generation is the dominant part of the algorithm, and the SVD is generally fast. When constructing the matrices, the monomial and Bernstein methods suffer from computationally expensive expansions for high degrees, whereas the Chebyshev and Lagrange methods are based on point sampling and FFT, which can be implemented in parallel. Computational features of the methods will be the subject of further research, including exploiting the parallelism of GPUs to enhance the algorithms.

8. Approximate Implicitization of Surfaces

In this section, we will discuss how the methods presented for curves in the preceding sections generalize to surface implicitization. We will also provide a visual example of approximate implicitization of surfaces.

8.1. Tensor-Product Parametric Surfaces

The univariate bases and can be be chosen arbitrarily, as in the case for curves. In this way, Theorem 5.1, Propositions 6.2, 6.3, 6.4, and 6.6, and Corollary 6.5 from the univariate case all carry over to the tensor-product case with minimal effort. (For a tensor-product extension of Proposition 6.6, the samples should be taken on a tensor-product grid and the number of samples is given by .) This applies also to higher dimensional tensor-product hypersurfaces. As an example, consider Theorem 5.1. In the tensor-product case, we have the following, almost verbatim to the univariate case.

Theorem 8.1. Let α be a tensor-product polynomial basis, orthonormal with respect to the given inner product 〈·, ·〉 w, and Dα,β and Mw be defined by (8.8) and (8.5), respectively. Then, .

Proof. Similar to Theorem 5.1, with the relevant inner product given by

8.2. Triangular Surfaces

Surfaces on triangular domains may be considered a more fundamental generalization than tensor-product surfaces; however, they often exhibit several difficulties not present in the tensor-product case. For example, most practical applications of the weak method of Section 4 use numerical quadrature, a method which is difficult to implement on triangular domains for high degrees. Since the degree of the integrand in the weak implicitization of a surface of degree n has degree 2mn, high degree quadrature rules are required. For example, exact implicitization of a general quintic parametric surface, with implicit degree m = n2 = 25 would need a quadrature rule accurate to order 250. Although it would be wise to use a lower degree approximation in this case (e.g., m = 5), the degree of the integrand would still be too high for many quadrature rules on triangular domains. High degree quadrature rules can be constructed by first transforming the domain into a square and using two univariate quadrature rules. However, these rules are not symmetric in the triangle and require more samples than is mathematically necessary [23].

Certain methods for approximate implicitization are, however, easy to generalize. For example, the Bernstein basis has a natural representation on simplex domains using barycentric coordinates, and thus the use of approximate implicitization on triangular surfaces in this basis is simple [15]. The convergence result of Corollary 6.5 can be extended to this case, together with well-established degree elevation algorithms, in order to obtain better approximation results.

The Lagrange basis method from Section 6.2, which was based on sampling, can also be extended to triangular surfaces. However, when choosing samples, it is essential that the Lagrange polynomials defining the α-basis are linearly independent; that is, they do in fact form a basis. For curves, choosing unique parameters for the sample points was sufficient (see Proposition 6.6). However, for surfaces we must add the requirement that the samples must not all lie on a curve of degree mn in the parameter domain, where the number of samples is given by .

Orthogonal polynomials on triangular domains also exist, and an extension of Theorem 5.1 holds in this case. However, fast algorithms for generating the coefficients appear to be difficult to replicate, thus limiting the applicability of this method in practice. In particular, there appears to be no direct analogue of the FFT method for generating Chebyshev coefficients. We refer the reader to [24] for a discussion of orthogonal polynomials on triangular domains.

8.3. An Example of Surface Implicitization

As an example of approximate implicitization of tensor-product surfaces, we will consider the problem of approximating the well-known Newells′ teapot model. It is stated in [1] that “the 32 bicubic patches defining Newells′ teapot provide a surprisingly diverse set of tests for moving surface implicitization.” In that paper, properties of the moving surface implicitization algorithm for the different patches are discussed and exact implicit degrees for each patch are stated. We will consider the same 32 patches here but instead use approximate methods, where the degree of approximation has been chosen manually to give good visual results.

Each of the 32 bicubic parametric surfaces has been approximated using the tensor-product Chebyshev method and the degrees stated in Table 4. The resulting surface has been ray traced in Figure 5 using POV-Ray [25] and clearly gives an excellent implicit approximation to the parametric teapot model. Moreover, the degrees of the surface patches are greatly reduced from the exact degrees, which are also stated in Table 4. The highest degree patch in the approximated model is six, whereas, if exact methods are used, patches of degree up to 18 are required. Generally, it appears that for visual purposes, the degree can be reduced to roughly one-third of the exact degree.

| Exact degree | Approximate degree | |

|---|---|---|

| 4 × rim | 9 | 4 |

| 4 × upper body | 9 | 3 |

| 4 × lower body | 9 | 3 |

| 2 × upper handle | 18 | 4 |

| 2 × lower handle | 18 | 4 |

| 2 × upper spout | 18 | 5 |

| 2 × lower spout | 18 | 6 |

| 4 × upper lid | 13 | 3 |

| 4 × lower lid | 9 | 4 |

| 4 × bottom | 15 | 3 |

This example shows one potential application of approximate implicitization; however, there are several factors that should be noted. Firstly, a significant amount of user input was required to generate the approximations of the teapot patches. This involved choosing degrees that were suitable for each patch and also choosing approximations without extra branches in the region of interest. This was done by considering approximations corresponding to other singular values than the smallest. For example, for the upper handle patches we chose the approximation corresponding to the fourth smallest singular value. For each increased singular value, the convergence rate of the method is reduced by one [8]. However, even with the reduced convergence rates, the Chebyshev method continues to give excellent approximations. User input was also given to define the clipping region for the ray tracing of each patch. In this example, the clipping regions were boxes parallel to the xy, xz, and yz-planes. However, in more complicated examples, it is not always suitable to use box regions for this purpose.

Another feature of this example is that the continuity between the parametric patches has been approximated very well in the implicit model. This is mainly due to the high convergence rates, which give good approximations over the entire surface region. However, in this case, there is also symmetry in the model meaning the edge curves where the patches meet can be approximated in a symmetric way. To achieve this, we have used the monomial basis for the implicit representation since it is symmetric around the z-axis. For more general models, such symmetry would not be possible.

9. Conclusions

We have presented and unified several new and existing methods for approximate implicitization of rational curves using linear algebra. Theoretical connections between the different methods have been made together with qualitative comparisons. Extensions of the methods to both tensor-product and triangular surfaces have been discussed. By considering various issues such as approximation quality and computational complexity, we regard the Chebyshev and Legendre methods as the algorithms of choice for approximation of most rational parametric curves. However, to obtain good numerical stability when using floating-point arithmetic for exact implicitization, the Bernstein basis is a more favourable choice. Future research could include how the methods can be improved, for example, by exploiting sparsity as in [11] or by adding continuity constraints to the approximations [26].

Acknowledgments

The research leading to these results has received funding from the (European Community′s) Seventh Framework Programme (FP7/2007-2013) under Grant agreement no. (PITN-GA-2008-214584) and from the Research Council of Norway through the IS-TOPP program.