Complete Solutions to General Box-Constrained Global Optimization Problems

Abstract

This paper presents a global optimization method for solving general nonlinear programming problems subject to box constraints. Regardless of convexity or nonconvexity, by introducing a differential flow on the dual feasible space, a set of complete solutions to the original problem is obtained, and criteria for global optimality and existence of solutions are given. Our theorems improve and generalize recent known results in the canonical duality theory. Applications to a class of constrained optimal control problems are discussed. In particular, an analytical form of the optimal control is expressed. Some examples are included to illustrate this new approach.

1. Introduction

Problem (1.1) appears in many applications, such as engineering design, phase transitions, chaotic dynamics, information theory, and network communication [1, 2]. In particular, if ℓl = {0} and ℓu = {1}, the problem leads to one of the fundamental problems in combinatorial optimization, namely, the integer programming problem [3]. Since the feasible space 𝒳a is a closed and bounded (hence compact) convex subset of ℛⁿ and P(x) is continuous, the primal problem has at least one global minimizer. When (𝒫) is a convex programming problem, a global minimizer can be obtained by many well-developed nonlinear optimization methods based on the Karush-Kuhn-Tucker (or simply KKT) optimality theory [4]. However, for (𝒫) with a nonconvex objective function, traditional KKT theory and direct methods can only solve (𝒫) to local optimality. In this paper, therefore, we are mainly interested in the case where P(x) is nonconvex on 𝒳a. For the special case of minimizing a nonconvex quadratic function subject to box constraints, much effort has been devoted to, and much progress made on, locating the global optimal solution based on the canonical duality theory by Gao (see [5–7] for details). As indicated in [8], the key step of the canonical duality theory is to introduce a canonical dual function, but commonly used methods are not guaranteed to construct it for the general form of the objective function given in (1.1). Thus, there has been comparatively little work on global optimality for general cases.

Inspired and motivated by these facts, a differential flow for constructing the canonical dual function is introduced and a new approach to solving the general (especially nonconvex) nonlinear programming problem (𝒫) is investigated in this paper. By means of the canonical dual problem, some conditions for global optimality are deduced, and global and local extrema of the primal problem can be identified. An application to the linear-quadratic optimal control problem with constraints is discussed. The results presented in this paper can be viewed as an extension and an improvement of the canonical duality theory [8–10].

The paper is organized as follows. In Section 2, a differential flow is introduced to present a general form of the canonical dual problem to (𝒫). The relation of this transformation to the classical Lagrangian method is discussed. In Section 3, we present a set of complete solutions to (𝒫) using the approach of Section 2. The existence of the canonical dual solutions is also established. In Section 4, we give an analytic solution to the box-constrained optimal control problem via canonical dual variables. Throughout, some examples are used to illustrate our theory.

2. A Differential Flow and Canonical Dual Problem

At the beginning of this paper, we mentioned that our primary goal is to find the global minimizers of a general (mainly nonconvex) box-constrained optimization problem (𝒫). Due to the assumed nonconvexity of the objective function, the classical Lagrangian L(x, σ) is no longer a saddle function, and the Fenchel-Young inequality leads to only a weak duality relation: min P ≥ max P*. The nonzero value θ = min P(x) − max P*(σ) is called the duality gap, where possibly θ = ∞. This duality gap shows that the well-developed Fenchel-Moreau-Rockafellar duality theory can be used mainly for solving convex problems. Also, due to the nonconvexity of the objective function, the problem may have multiple local solutions. The identification of a global minimizer has been a fundamentally challenging task in global optimization. In order to eliminate the duality gap inherent in the classical Lagrange duality theory, a so-called canonical duality theory has been developed [2, 9]. The main idea of this theory is to introduce a canonical dual transformation which may convert some nonconvex and/or nonsmooth primal problems into smooth canonical dual problems without generating any duality gap, and from which some global solutions can be deduced. The key step in the canonical dual transformation is to choose the (nonlinear) geometrical operator Λ(x). Different forms of Λ(x) may lead to different (but equivalent) canonical dual functions and canonical dual problems. So far, in most of the related literature, the canonical dual transformation has been discussed and the canonical dual function formulated for quadratic minimization problems (i.e., the objective function is a quadratic form). However, for the general form of the objective function given in (1.1), commonly used methods offer no effective strategy for obtaining the canonical dual function (or the canonical dual problem).

The novelty of this paper is to introduce the differential flow created by the differential equation (2.6) to construct the canonical dual function for the problem (𝒫). Lemma 2.5 guarantees the existence of the differential flow; Theorem 2.3 shows that there is no duality gap between the primal problem (𝒫) and its canonical dual problem (Pd) given in (2.7) via the differential flow; and Theorems 3.1–3.4 use the differential flow to present a global minimizer. In addition, the idea of introducing the set 𝒮 of shift parameters closely follows the works of Floudas et al. [11, 12]. In [12], they developed a global optimization method, αBB, for general twice-differentiable constrained optimization problems, which uses an α parameter to generate valid convex underestimators for nonconvex terms of generic structure.
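For orientation, the αBB underestimator mentioned above is usually written in the following form. The symbols f, x^L, x^U, and α_i are those of the αBB literature and are not notation from this paper; the comparison with the shift parameters ρ is offered only as an analogy.

```latex
% alpha-BB convex underestimator of a twice-differentiable f on the box [x^L, x^U]
\[
  L(x) \;=\; f(x) + \sum_{i=1}^{n} \alpha_i \,(x_i^{L} - x_i)(x_i^{U} - x_i),
  \qquad
  \nabla^2 L(x) \;=\; \nabla^2 f(x) + 2\,\mathrm{Diag}(\alpha).
\]
% L(x) <= f(x) on the box, because each added term is nonpositive there.
% L is convex on the box once alpha is large enough that
% \nabla^2 f(x) + 2 Diag(alpha) is positive semidefinite for every x in the box.
```

In both constructions a diagonal shift is added to the Hessian: 2 Diag(α) here, and Diag(ρ) in the matrix ∇²P(x) + Diag(ρ) that appears in the definition of the dual feasible space.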

The main idea of constructing the differential flow and the canonical dual problem is as follows. For simplicity and without loss of generality, we assume that , namely, , where ℓi ≠ 0 for all i.

Lemma 2.1. The dual feasible space 𝒮 is an open convex subset of . If , then ρ ∈ 𝒮 for any .

Proof. Notice that P(x) is twice continuously differentiable in ℛⁿ. For any x ∈ 𝒳a, the Hessian matrix ∇²P(x) is a symmetric matrix. We know that for any given Q = Qᵀ ∈ ℛⁿˣⁿ, is a convex set. Since the intersection of any collection of convex sets is convex, the dual feasible space 𝒮 is an open convex subset of . In addition, it follows from the definition of 𝒮 that ρ ∈ 𝒮 for any . This completes the proof.

Lemma 2.2. Let x(ρ) be a given flow defined by (2.6), and Pd(ρ) be the corresponding canonical dual function defined by (2.7). For any ρ ∈ 𝒮, we have

Proof. Since Pd(ρ) is differentiable, for any ρ ∈ 𝒮,

Theorem 2.3. The canonical dual problem (Pd) is perfectly dual to the primal problem (𝒫) in the sense that if is a KKT point of Pd(ρ), then the vector is a KKT point of (𝒫) and

Proof. By introducing the Lagrange multiplier vector λ ∈ ℛⁿ to relax the inequality constraint ρ ≥ 0 in 𝒮, the Lagrangian function associated with (Pd) becomes L(ρ, λ) = Pd(ρ) − λᵀρ. Then the KKT conditions of (Pd) become

In addition, we have

Remark 2.4. Theorem 2.3 shows that by using the canonical dual function (2.7), there is no duality gap between the primal problem (𝒫) and its canonical dual (Pd), that is, θ = 0. This eliminates the duality gap inherent in the classical Lagrange duality theory and provides necessary conditions for searching for global solutions. In fact, in the proof of Theorem 2.3 we replace 𝒮 in (Pd) with the space 𝒮# := {ρ ≥ 0 ∣ det[∇²P(x) + Diag(ρ)] ≠ 0, for all x ∈ 𝒳a}. Moreover, the condition det[∇²P(x) + Diag(ρ)] ≠ 0 for all x ∈ 𝒳a in 𝒮# is essentially not a constraint, as indicated in [5].

By introducing a differential flow x(ρ), the constrained nonconvex problem can be converted to the canonical (perfect) dual problem, which can be solved by deterministic methods. In view of the process (2.2)–(2.6), the flow x(ρ) is based on the KKT condition (2.2). In other words, we can solve equation (2.2) backwards from ρ* to get the backward flow x(ρ), ρ ∈ 𝒮∩{0 ≤ ρ ≤ ρ*}. It is then of interest to know whether there exists a pair (x*, ρ*) satisfying (2.2).
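The backward flow can be illustrated in one dimension. Differentiating the stationarity relation P′(x(ρ)) + ρ x(ρ) = 0 (the form of (2.2) restated in Lemma 2.5) with respect to ρ gives dx/dρ = −x / (P″(x) + ρ). The sketch below integrates this equation with a classical Runge-Kutta scheme. It assumes, without confirmation from the text, that this derived equation plays the role of (2.6); the test function P, the starting value ρ* = 5, and the end value ρ = 3 are hypothetical choices made only for illustration.

```python
# Sketch: backward flow x(rho) for a 1-D problem, keeping P'(x) + rho*x = 0.
# The test function P and all numbers below are hypothetical illustrations.

def dP(x):
    """First derivative of the hypothetical P(x) = x**4/4 - x**2 - 0.3*x."""
    return x**3 - 2.0 * x - 0.3

def d2P(x):
    """Second derivative of the same P."""
    return 3.0 * x**2 - 2.0

def flow_rhs(rho, x):
    """dx/drho obtained by differentiating P'(x) + rho*x = 0 with respect to rho."""
    return -x / (d2P(x) + rho)

def solve_start(rho_star, x0=0.0, iters=50):
    """Newton iteration for P'(x) + rho_star*x = 0 (starting point of the flow)."""
    x = x0
    for _ in range(iters):
        x -= (dP(x) + rho_star * x) / (d2P(x) + rho_star)
    return x

def backward_flow(rho_star, rho_end, steps=2000):
    """Integrate the flow from rho_star down to rho_end with classical RK4."""
    x = solve_start(rho_star)
    h = (rho_end - rho_star) / steps  # negative step: we move backwards in rho
    rho = rho_star
    for _ in range(steps):
        k1 = flow_rhs(rho, x)
        k2 = flow_rhs(rho + h / 2, x + h * k1 / 2)
        k3 = flow_rhs(rho + h / 2, x + h * k2 / 2)
        k4 = flow_rhs(rho + h, x + h * k3)
        x += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        rho += h
    return x

x_end = backward_flow(rho_star=5.0, rho_end=3.0)
residual = dP(x_end) + 3.0 * x_end  # stays close to zero along the flow
```

For this P the smallest value of P″ is −2, so P″(x) + ρ > 0 for every ρ in [3, 5] and the right-hand side of the flow is well defined along the whole path.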

Lemma 2.5. Suppose that ∇P(0) ≠ 0. For the primal problem (𝒫), there exist a point ρ* ∈ 𝒮 and a nonzero vector x* ∈ 𝒳a such that ∇P(x*) + Diag(ρ*)x* = 0.

Proof. Since 𝒳a is bounded and P(x) is twice continuously differentiable in ℛⁿ, we can choose a sufficiently large positive real number M such that ∇²P(x) + Diag(Me) > 0, for all x ∈ 𝒳a and (e ∈ ℛⁿ is an n-vector of all ones). Then, it is easy to verify that ∇P(x) + Diag(Me)x < 0 at the point x = −ℓ1/2, and ∇P(x) + Diag(Me)x > 0 at the point x = ℓ1/2.

Notice that the function ∇P(x) + Diag(Me)x is continuous and differentiable in ℛⁿ. It follows from elementary calculus that there is a nonzero stationary point x* ∈ 𝒳a such that ∇P(x*) + Diag(Me)x* = 0. Let ρ* = Me ∈ 𝒮. Thus, there exist a point ρ* ∈ 𝒮 and a nonzero vector x* ∈ 𝒳a satisfying (2.2). This completes the proof.
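As a minimal numerical sketch of this construction, take ρ* = Me and rewrite ∇P(x) + Mx = 0 as the fixed-point equation x = −∇P(x)/M, which is a contraction whenever ∥∇²P(x)∥/M < 1. The quadratic P, the matrix A, the vector c, and the rule M = 2∥A∥ are hypothetical choices for illustration, and the helper names `choose_shift` and `stationary_point` are not from the paper.

```python
import numpy as np

# Hypothetical nonconvex quadratic P(x) = 0.5*x^T A x - c^T x (illustration only).
A = np.array([[-0.5, -0.5],
              [-0.5, -0.3]])
c = np.array([0.3, 0.3])

def grad_P(x):
    """Gradient of the hypothetical quadratic P."""
    return A @ x - c

def choose_shift(A, factor=2.0):
    """Pick M > ||A||_2, so that A + M*I is positive definite and ||A||/M < 1."""
    return factor * np.linalg.norm(A, 2)

def stationary_point(M, tol=1e-12, max_iter=10_000):
    """Fixed-point iteration x <- -grad_P(x)/M for grad_P(x) + M*x = 0."""
    x = np.zeros_like(c)
    for _ in range(max_iter):
        x_new = -grad_P(x) / M
        if np.linalg.norm(x_new - x) < tol:
            return x_new
        x = x_new
    return x

M = choose_shift(A)
x_star = stationary_point(M)
```

Because ∇P(0) = −c ≠ 0 here, the computed x* is nonzero. The sketch does not check whether x* lies inside the box 𝒳a; that question is a separate one.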

Remark 2.6. Lemma 2.5 also gives us some information for finding the desired parameter ρ*. From Lemma 2.5, we only need to choose a sufficiently large positive real number M such that ∇²P(x) + Diag(Me) > 0, for all x ∈ 𝒳a and . Since ∇P(0) ≠ 0, it follows from (∥∇²P(x)∥/M) < 1 uniformly in 𝒳a that there is a unique nonzero fixed point x* ∈ 𝒳a such that

Remark 2.7. Moreover, for a proper parameter ρ*, it is worth investigating how to obtain a solution x* of (2.2) inside 𝒳a. When P(x) is a polynomial, we refer the reader to [17], which contains results on bounding the zeros of a polynomial. For given bounds, one may determine the parameter by using the results in [17] on the relation between the zeros and the coefficients. We leave this to future work. However, in the nonconvex case of (𝒫), the KKT conditions are only necessary conditions for local minimizers. Identifying a global minimizer among all KKT points remains a key task, which we address in the next section.

3. Complete Solutions to Global Optimization Problems

Theorem 3.1. Suppose that is a KKT point of the canonical dual function Pd(ρ) and defined by (2.6). If , then is a global maximizer of (Pd) on 𝒮, and is a global minimizer of (𝒫) on 𝒳a and

Proof. If is a KKT point of (Pd) on 𝒮, by (2.15), stays in 𝒳a, that is, for all i. By Lemmas 2.1 and 2.2, it is easy to verify that x(ρ) ∈ 𝒳a and for any .

For any given parameter ρ, (), we define the function fρ(x) as follows:

Thus, is a global minimizer of (𝒫) on 𝒳a and

Remark 3.2. Theorem 3.1 shows that a vector is a global minimizer of the problem (𝒫) if is a critical solution of (Pd). However, for certain given P(x), the canonical dual function might have no critical point in 𝒮. For example, the canonical dual solutions could lie on the boundary of 𝒮. In this case, the primal problem (𝒫) may have multiple solutions.

In order to study the existence conditions of the canonical dual solutions, we let ∂𝒮 denote the boundary of 𝒮.

Theorem 3.3. Suppose that P(x) is a given twice continuously differentiable function, 𝒮 ≠ ∅ and ∂𝒮 ≠ ∅. If for any given ρ0 ∈ ∂𝒮 and ρ ∈ 𝒮,

Proof. We first show that for any given ,

By Lemma 2.2, we have

Suppose that

Since Pd : 𝒮 → ℛ is concave and the condition (3.10) holds, if (3.9) holds, then the canonical dual function Pd(ρ) is coercive on the open convex set 𝒮. Therefore, the canonical dual problem (Pd) has one maximizer by the theory of convex analysis [4, 18]. This completes the proof.

Clearly, when ∇²P(x) > 0 on 𝒳a, the dual feasible space 𝒮 is equivalent to and Diag(ρ) ∈ ℛⁿˣⁿ by (2.1). Notice that for any given . Then Pd(ρ) is concave and coercive on , and (Pd) has at least one maximizer on . In this case, it is then of interest to characterize a unique solution of (𝒫) by the dual variable.

Theorem 3.4. If ∇²P(x) > 0 on 𝒳a, the primal problem (𝒫) has a unique global minimizer determined by satisfying

Proof. To prove Theorem 3.4, by Theorems 2.3 and 3.1, it suffices to prove that is a KKT point of (Pd) in . By (3.14), the relations for all i ∈ I also hold. Since satisfies equations for all i ∈ J, we can verify that stays in 𝒳a and the complementarity conditions hold. Thus, is a KKT point of (Pd) in by (2.9), (2.15), and is the unique global minimizer of (𝒫). This completes the proof.

Before turning to applications to optimal control problems, we present two examples in which global minimizers are found by differential flows.

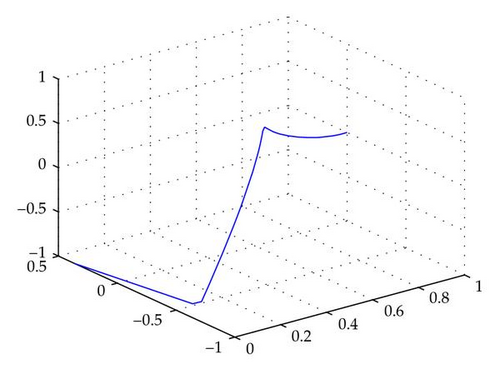

Example 3.5. As a particular example of (𝒫), let us consider the following one-dimensional nonconvex minimization problem with a box constraint:

Remark 3.6. In this example, we see that a differential flow is useful in solving a nonconvex optimization problem. For a global optimization problem, one usually computes the global minimizer numerically. Even when using the canonical duality method, one has to solve a canonical dual problem numerically. Nevertheless, the differential flow points to a new way of finding a global minimizer. In particular, one may expect an exact solution of the problem provided that the corresponding differential equation has an analytic solution.

Example 3.7. Given a symmetric matrix A ∈ ℛⁿˣⁿ and a vector c ∈ ℛⁿ, let P(x) = (1/2)xᵀAx − cᵀx. We consider the following box-constrained nonconvex global optimization problem:

If we choose a1 = −0.5, a2 = −0.5, a3 = −0.3, c1 = c2 = 0.3 and ℓ1 = 0.5, ℓ2 = 2, this dual problem has only one KKT point, ρ = (1.8, 0.7). By Theorem 3.1, x = (1, 2) is a global minimizer of Example 3.7 and P(1,2) = −2.75 = Pd(1.8,0.7).
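The reported values can be checked numerically under a few assumptions that are not stated in the text as it stands: that the matrix is laid out as A = [[a1, a2], [a2, a3]], that x(ρ) solves (A + Diag(ρ))x = c, and that the dual function for this quadratic case has the form Pd(ρ) = −(1/2)cᵀ(A + Diag(ρ))⁻¹c − ℓᵀρ. With these assumptions, which amount to reading the box as (1/2)xi² ≤ ℓi, the sketch reproduces x = (1, 2) and the common value −2.75 up to rounding.

```python
import numpy as np

# Assumed layout (not stated in the text): A = [[a1, a2], [a2, a3]].
a1, a2, a3 = -0.5, -0.5, -0.3
A = np.array([[a1, a2], [a2, a3]])
c = np.array([0.3, 0.3])
ell = np.array([0.5, 2.0])

def P(x):
    """Primal objective P(x) = 0.5*x^T A x - c^T x."""
    return 0.5 * x @ A @ x - c @ x

def x_of_rho(rho):
    """Stationary point of P(x) + 0.5*x^T Diag(rho) x, i.e. (A + Diag(rho)) x = c."""
    return np.linalg.solve(A + np.diag(rho), c)

def P_dual(rho):
    """Assumed dual: -0.5*c^T (A + Diag(rho))^{-1} c - ell^T rho."""
    return -0.5 * c @ x_of_rho(rho) - ell @ rho

rho_bar = np.array([1.8, 0.7])
x_bar = x_of_rho(rho_bar)

# Gradient of the assumed dual with respect to rho: 0.5*x_i**2 - ell_i.
dual_gradient = 0.5 * x_bar**2 - ell

print(x_bar, P(x_bar), P_dual(rho_bar), dual_gradient)
```

Under the same assumptions, A + Diag(ρ) is positive definite at ρ = (1.8, 0.7) and the dual gradient vanishes there, which is consistent with ρ being a KKT point with both constraints active.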

4. Applications to Constrained Optimal Control Problems

It is well known that the central result in optimal control theory is the Pontryagin maximum principle, which provides necessary conditions for optimality in very general optimal control problems.

4.1. Pontryagin Maximum Principle

Unfortunately, the above conditions are not, in general, sufficient for optimality. In such a case, we need to compare all the candidates for optimality that the necessary conditions produce and pick out an optimal solution to the problem. Nevertheless, if we can prove, as in Lemma 4.1, that the solution satisfies sufficiency conditions of the type considered in this section, then these conditions ensure the optimality of the solution.

Lemma 4.1. Let be an admissible control, and be the corresponding state and costate. If , , and satisfy the Pontryagin maximum principle ((4.3)-(4.4)), then is an optimal control to the problem (4.1).

Proof. For any given x, λ, let

Lemma 4.1 reformulates the constrained optimal control problem (4.1) as a global optimization problem (4.6). Based on Theorem 3.4, an analytic solution of (4.1) can be expressed via the costate.

Theorem 4.2. Suppose that

Next, we give an example to illustrate our results.

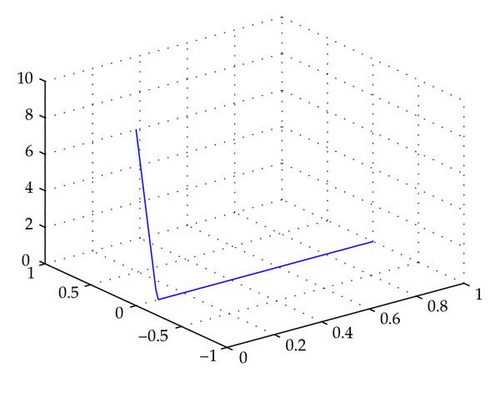

Example 4.3. We consider

Following the idea of Lemma 4.1 and Theorem 4.2, we need to solve the following boundary value problem for differential equations to derive the optimal solution

Acknowledgments

The authors would like to thank the referees for their helpful comments on an earlier version of this paper. This work was supported by the National Natural Science Foundation of China (no. 10971053).