Time-Optimal Control of Systems with Fractional Dynamics

Abstract

We introduce a formulation for the time-optimal control problem of systems displaying fractional dynamics in the sense of the Riemann-Liouville fractional derivative operator. To propose a solution to the general time-optimal problem, a rational approximation based on the Hankel data matrix of the impulse response is used to emulate the behavior of the fractional differentiation operator. The original problem is then reformulated according to the new model, which can be solved by traditional optimal control problem solvers. The time-optimal problem is extensively investigated for a double fractional integrator, and its solution is obtained using either numerical optimization or time-domain analysis.

1. Introduction

Not all systems in the world around us follow the classical laws of dynamics. When the systems considered are complex or can only be studied on a macroscopic scale, they sometimes deviate from the traditional integer-order dynamic laws. In some cases, their dynamics follow fractional-order laws, meaning that their behavior is governed by fractional-order differential equations [1]. As an illustration, it is reported in the literature [2] that materials with memory and hereditary effects, and dynamical processes such as gas diffusion and heat conduction in fractal porous media, can be modeled more precisely using fractional-order models than using integer-order models. Another line of research has identified fractional-order behavior in the dynamics of a frog's muscle [3].

Optimal Control Problems (OCPs), or Integer-Order Optimal Controls (IOOCs), arise in a wide variety of research areas, including engineering and science but also economics. The field of IOOCs has been investigated for a long time, and a large collection of numerical techniques has been developed to solve this category of problems [4].

The main objective of an OCP is to obtain control input signals that make a given system or process satisfy a given set of physical constraints (on either the system's states or its control inputs) while extremizing a performance criterion or a cost function. Fractional Optimal Control Problems (FOCPs) are OCPs in which the criterion and/or the differential equations governing the dynamics of the system contain at least one fractional derivative operator. The first record of a formulation of the FOCP was given in [5]. This formulation was general but included constraints on the system's states or control inputs. Later, a general definition of FOCP was formulated in [6] that is similar to the general definition of OCPs. The number of publications linked to FOCPs is limited since the problem has only recently been considered.

Over the last decade, the framework of FOCPs has emerged and grown. In [5], Agrawal gives a general formulation of FOCPs in the Riemann-Liouville (RL) sense and proposes a numerical method to solve FOCPs based on variational virtual work coupled with the Lagrange multiplier technique. In [7], the fractional derivatives (FDs) of the system are approximated using the Grünwald-Letnikov definition, providing a set of algebraic equations that can be solved using numerical techniques. The problem is defined in terms of the Caputo fractional derivatives in [8], and an iterative numerical scheme is introduced to solve it. Distributed systems are considered in [9], and an eigenfunction decomposition is used to solve the problem. Özdemir et al. [10] also use an eigenfunction expansion approach to formulate an FOCP of a 2-dimensional distributed system. Cylindrical coordinates for the distributed system are considered in [11]. A modified Grünwald-Letnikov approach is introduced in [12], which leads to a central difference scheme. Frederico and Torres [13–15], using similar definitions of the FOCPs, formulated a Noether-type theorem in the general context of fractional optimal control in the sense of Caputo and studied fractional conservation laws in FOCPs. In [6], a rational approximation of the fractional derivative operator is used to link FOCPs and the traditional IOOCs. A new solution scheme is proposed in [16], based on a different expansion formula for fractional derivatives.

In this article, we introduce a formulation for a special class of FOCP: the Fractional Time-Optimal Control Problem (FTOCP). Time-optimal control problems are also referred to in the literature as minimum-time control problems, free final time-optimal control, or bang-bang control problems. These different names describe the same kind of optimal control problem, in which the purpose is to transfer a system from a given initial state to a specified final state in minimum time. So far, this special class of FOCPs has been disregarded in the literature. In [6], such a problem was solved as an example to demonstrate the capability and generality of the proposed method, but no thorough study was carried out.

The article is organized as follows. In Section 2, we give the definitions of fractional derivatives in the RL sense and FOCP and introduce the formulation of FTOCP. In Section 3, we consider the problem of the time-optimal control of a fractional double integrator and propose different schemes to solve the problem. In Section 4, the solution to the problem is obtained for each approach for a given system. Finally, we give our conclusions in Section 5.

2. Formulation of the Fractional Time-Optimal Control Problem

2.1. The Fractional Derivative Operator

There exist several definitions of the fractional derivative operator: Riemann-Liouville, Caputo, Grünwald-Letnikov, Weyl, as well as Marchaud and Riesz [17–20]. Here, we are interested in the Riemann-Liouville definition of the fractional derivative for the formulation of the FOCP.
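For reference, the left Riemann-Liouville fractional derivative is commonly written as shown below. The notation (lower terminal a, order α, integer n) follows the standard textbook convention and is an assumption here; it may differ from the symbols used elsewhere in this article.

```latex
{}_{a}D_{t}^{\alpha} f(t)
  = \frac{1}{\Gamma(n-\alpha)}
    \left(\frac{d}{dt}\right)^{n}
    \int_{a}^{t} (t-\tau)^{\,n-\alpha-1} f(\tau)\, d\tau ,
\qquad n-1 \le \alpha < n ,
```

where Γ is the Euler gamma function and n is the smallest integer greater than α. For 0 < α < 1 this gives n = 1, so the operator is a first derivative applied to a weighted integral of the past values of f.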

2.2. Fractional Optimal Control Problem Formulation

2.3. Fractional Time-Optimal Control Problem Formulation

- (1) if the optimal state x* equations are satisfied, then we obtain the state relation,

- (2) if the costate λ* is chosen so that the coefficient of the dependent variation δx in the integrand is identically zero, then we obtain the costate equation,

- (3) the boundary condition is chosen so that it results in the auxiliary boundary condition.

- (1) if q*(t) is positive, then the optimal control u*(t) must be the smallest admissible control value umin, so that

(2.32)

- (2) and if q*(t) is negative, then the optimal control u*(t) must be the largest admissible control value umax, so that

(2.33)
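The sign rule above can be sketched in a few lines of Python. This is only an illustration: the function name bang_bang_control is hypothetical, q stands for a sampled value of q*(t) whose definition is not reproduced here, and the case q = 0 is left undetermined because the rule as stated does not cover it.

```python
def bang_bang_control(q, u_min, u_max):
    """Pick the control from the sign of q, following the rule in the text.

    q is a sampled value of the quantity q*(t); it is passed in as a plain
    number because its definition is not reproduced in this sketch.
    """
    if q > 0:
        return u_min   # positive q: smallest admissible control
    if q < 0:
        return u_max   # negative q: largest admissible control
    return None        # q == 0: the sign rule does not determine u


# Bounds taken from Section 4 (umin = -2, umax = 1); the q samples are made up.
u_min, u_max = -2.0, 1.0
controls = [bang_bang_control(q, u_min, u_max) for q in (0.7, -0.3, 0.0)]
print(controls)
```

Because the control only ever takes one of the two bound values, the whole input signal is described by its switching instants.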

3. Solution of the Time-Optimal Control of a Fractional Double Integrator

3.1. Solution Using Rational Approximation of the Fractional Operator

Time-optimal control problems can be reformulated as traditional optimal control problems by augmenting the system dynamics with additional states (one additional state for autonomous problems). The first step is to specify a nominal time interval, [a, b], for the problem and to define a scaling factor, adjustable by the optimization procedure, that scales the system dynamics and hence, in effect, the duration of the time interval. This scale factor and the scaled time are represented by the extra states.

Such a definition allows the problem to be solved by any traditional optimal control problem solver.
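The time-scaling idea can be illustrated on the integer-order double integrator (α = 1). The sketch below is not the solver used in this article: it fixes the nominal interval to [0, 1], treats the scale factor T and the physical time t as two extra states, and integrates with a hand-written Runge-Kutta step. The trial values (T = 30, one switch after 200 of 300 steps, start at rest) are illustrative assumptions; in an actual optimization the solver would adjust T.

```python
def scaled_dynamics(z, u):
    """Double integrator in scaled time tau, augmented with two extra states.

    z = (x1, x2, T, t): position, velocity, scale factor T, physical time t.
    Multiplying the original right-hand side by T stretches the unit interval
    tau in [0, 1] to a physical duration of T.
    """
    x1, x2, T, t = z
    return (T * x2, T * u, 0.0, T)   # dT/dtau = 0, dt/dtau = T


def rk4_step(f, z, u, h):
    """One classical Runge-Kutta step with the control held constant."""
    def shift(k, c):
        return tuple(zi + c * ki for zi, ki in zip(z, k))
    k1 = f(z, u)
    k2 = f(shift(k1, 0.5 * h), u)
    k3 = f(shift(k2, 0.5 * h), u)
    k4 = f(shift(k3, h), u)
    return tuple(zi + h / 6.0 * (a + 2 * b + 2 * c + d)
                 for zi, a, b, c, d in zip(z, k1, k2, k3, k4))


def simulate(T, switch_step, u_first, u_second, steps=300):
    """Integrate over tau in [0, 1], switching the control once."""
    z = (0.0, 0.0, T, 0.0)           # start at rest with the trial scale factor
    h = 1.0 / steps
    for k in range(steps):
        u = u_first if k < switch_step else u_second
        z = rk4_step(scaled_dynamics, z, u, h)
    return z


x1, x2, T, t = simulate(T=30.0, switch_step=200, u_first=1.0, u_second=-2.0)
print(x1, x2, t)
```

The integration interval never changes; only the state T does. This is what lets a fixed-horizon solver search over the final time.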

3.2. Solution Using Bang-Bang Control Theory

4. Results

In this section, we find the solution to the problem defined in Section 3 for the following parameter values:

- (i) umin = −2,

- (ii) umax = 1,

- (iii) A = 300.

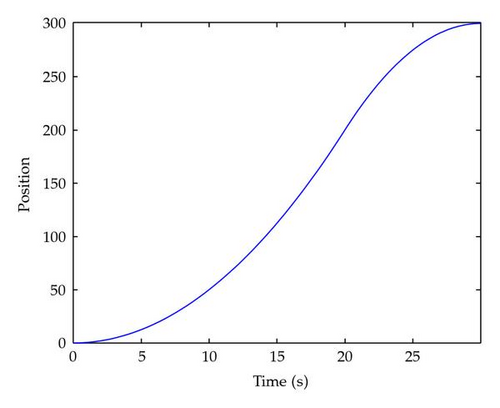

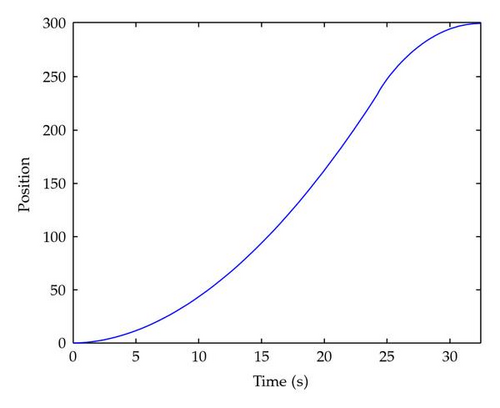

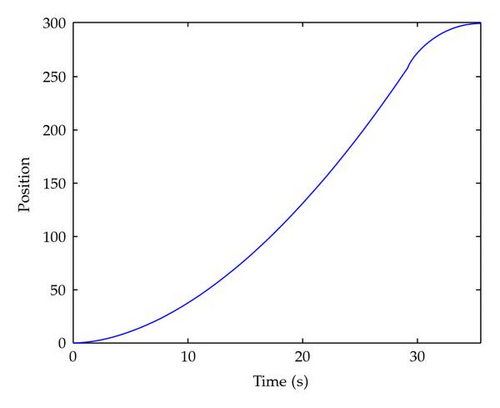

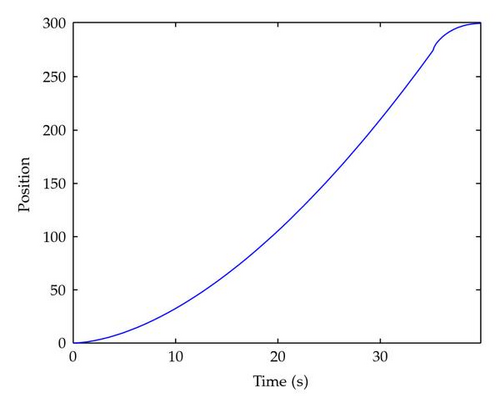

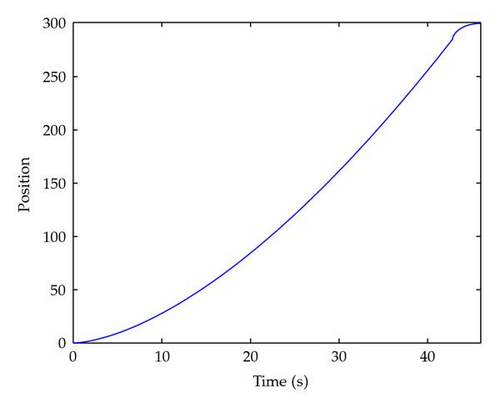

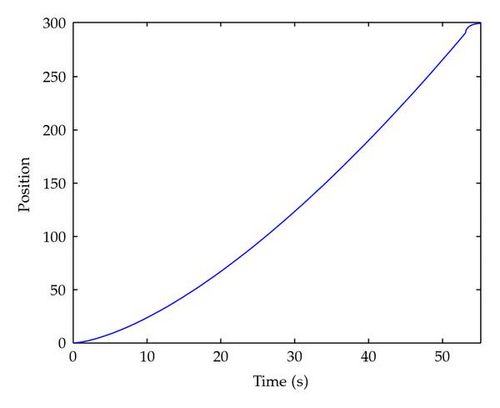

Figure 1 shows the states x(t) as functions of t for α = 1. Figures 2, 3, 4, 5, and 6 show the states x(t) as functions of t for different values of α (0.9, 0.8, 0.7, 0.6, and 0.5, respectively). We can observe that as the order α approaches 1, the optimal duration approaches its value for the double integrator case.

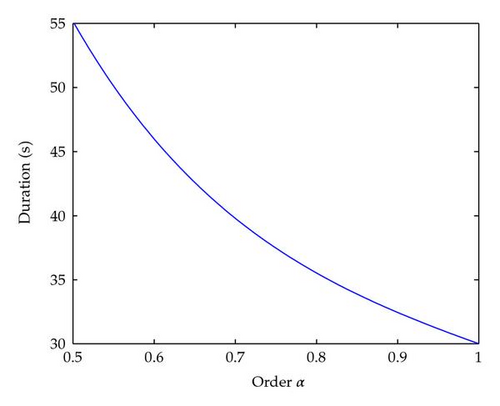

Since it is possible to obtain the analytical solution of the problem from (3.22), we give in Figure 7 the plot of the duration of the control T versus the order of the fractional derivative. As we can see, for α = 1, the solution matches the results obtained for a double integrator.
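As a sanity check on the α = 1 limit, the classical double-integrator duration can be computed in closed form. The sketch below rests on assumptions that are not stated in this section: that the problem is a rest-to-rest transfer over a distance A, with one switch from umax to umin. The function name is hypothetical, and the formula is the textbook integer-order result, not equation (3.22).

```python
import math


def min_time_double_integrator(A, u_min, u_max):
    """Minimum rest-to-rest transfer time over a distance A for x'' = u.

    Assumes u_min < 0 < u_max and A > 0: accelerate at u_max for t1,
    then brake at u_min for t2.
    """
    brake = -u_min                                   # braking magnitude
    t1 = math.sqrt(2.0 * A * brake / (u_max * (u_max + brake)))
    t2 = u_max * t1 / brake                          # time to shed the peak speed
    return t1, t2, t1 + t2


t1, t2, T = min_time_double_integrator(A=300.0, u_min=-2.0, u_max=1.0)
print(t1, t2, T)   # 20.0 10.0 30.0
```

Under these assumptions the Section 4 parameters give an acceleration phase of 20, a braking phase of 10, and a total duration of 30, which is the value the α = 1 curve would be expected to reach if the problem is indeed posed this way.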

5. Conclusions

We developed a formulation for fractional time-optimal control problems. Such problems occur when the dynamics of the system can be modeled using the fractional derivative operator. The formulation made use of the Lagrange multiplier technique, Pontryagin's minimum principle, and the state and costate equations. Considering a specific set of dynamical equations, we were able to demonstrate the bang-bang nature of the solution of fractional time-optimal control problems, just as in the integer-order case. We were able to solve a special case using both optimal control theory and bang-bang control theory. The optimal control solution can be obtained using a rational approximation of the fractional derivative operator, whereas the bang-bang control solution is derived from the time-domain solution for the final time constraints. Both methods showed similar results, and in both cases, as the order α approaches the integer value 1, the numerical solutions for both the state and the control variables approach the analytical solutions for α = 1.