Forward Sensitivity Approach to Dynamic Data Assimilation

Abstract

The least squares fit of observations with known error covariance to a strong-constraint dynamical model has been developed through use of the time evolution of sensitivity functions—the derivatives of model output with respect to the elements of control (initial conditions, boundary conditions, and physical/empirical parameters). Model error is assumed to stem from incorrect specification of the control elements. The optimal corrections to control are found through solution to an inverse problem. Duality between this method and the standard 4D-Var assimilation using adjoint equations has been proved. The paper ends with an illustrative example based on a simplified version of turbulent heat transfer at the sea/air interface.

1. Introduction

Sensitivity function analysis has proved valuable as a means both to build models and to interpret their output in chemical kinetics (Rabitz et al. [1], Seefeld and Stockwell [2]) and air quality modeling (Russell et al. [3]). Yet the ubiquitous systematic errors that haunt dynamical prediction cannot be fully understood with sensitivity functions alone. We now include an optimization component that leads to an improved fit of model to observations. The methodology is termed the forward sensitivity method (FSM): a method based on a least squares fit of model to data, but where the algorithmic structure and correction procedure are linked to the sensitivity functions. In essence, corrections to control (the initial conditions, the boundary conditions, and the physical and empirical parameters) are found through solution to an inverse problem.

In this paper we derive the governing equations for corrections to control and show their equivalence to equations governing the so-called 4D-Var assimilation method (four-dimensional variational method)—least squares fit of model to observations under constraint (LeDimet and Talagrand [4]). Beyond this equivalence, we demonstrate the value of the FSM as a diagnostic tool that can be used to understand the relationship between sensitivity and correction to control.

We begin our investigation by laying down the dynamical framework for the FSM: the general form of the governing dynamical model, the type and representation of model error that can be identified through the FSM, and the evolution of the sensitivity functions that are central to execution of the FSM. The duality between the FSM and 4D-Var based on adjoint equations is then proved. The step-by-step process of assimilating data by FSM is outlined, and we demonstrate its usefulness by application to a simplified air-sea interaction model.

2. Foundation Dynamics for the FSM

Table 1 lists the mathematical symbols and associated nomenclature used in this paper.

| Symbol | Nomenclature |
|---|---|
| n | Dimension of state vector |
| m | Dimension of observation vector |
| p | Dimension of parameter vector |
| q = n + p | Dimension of control vector |
| N | Number of observation vectors |
| t | Time |
|  | True model state vector |
| x(t) = (x1, x2, …, xn)T ∈ Rn | Model state vector |
| f : Rn × Rp × R → Rn | Vector field of the model |
| x(0) ∈ Rn | Initial condition for model state vector |
| α ∈ Rp | Parameter vector |
| c = (x(0), α) ∈ Rn × Rp | Control vector |
| z(t) ∈ Rm | Observation vector |
| h : Rn → Rm | Forward operator relating model state and observations |
| h(x(t)) ∈ Rm | Model counterpart of the observation |
| v(t) ∈ Rm | Observation error vector |
| R(t) ∈ Rm×m | Covariance of observation error vector v(t) |
| eF | Forecast error |
| b(t) | Systematic component of forecast error |
| Dx(f) = [∂fi/∂xj] ∈ Rn×n | Model Jacobian w.r.t. x |
| Dα(f) = [∂fi/∂αj] ∈ Rn×p | Model Jacobian w.r.t. α |
| Dα(x) = [∂xi/∂αj] ∈ Rn×p | Sensitivity matrix w.r.t. parameters |
| Dx(0)(x) = [∂xi/∂xj(0)] ∈ Rn×n | Sensitivity matrix w.r.t. initial condition |
| Dx(h) ∈ Rm×n | Jacobian of the forward operator w.r.t. x |
| H1(t) = Dx(h)Dx(0)(x) ∈ Rm×n | Sensitivity matrix accounting for h(x(t)) (w.r.t. initial condition) |
| H2(t) = Dx(h)Dα(x) ∈ Rm×p | Sensitivity matrix accounting for h(x(t)) (w.r.t. parameters) |
| 〈a, b〉 | Inner product |
| J(c), J1(c) | Objective or cost functions |
| δJ, δJ1, δx(t), δα | First variations |
| ∇J, ∇J1 | Gradients of cost functions |
| M(t, s) | Model state transition matrix (Appendix A) |
| L(t, s) | Matrix that determines particular solution (Appendix A) |

2.1. Prediction Equations

Let x(t) ∈ Rn denote the state and let α ∈ Rp denote the parameters of a deterministic dynamical system, where x(t) = (x1(t), x2(t), …, xn(t))T and α = (α1, α2, …, αp)T are column vectors, n and p are positive integers, t ≥ 0 denotes the time, and superscript T denotes the transpose of the vector or matrix. Let f : Rn × Rp × R → Rn be a mapping, where f(x, α, t) = (f1, f2, …, fn)T with fi = fi(x, α, t) for 1 ≤ i ≤ n. The vector spaces Rn and Rp are called the model space and parameter space, respectively.

Let z(t) ∈ Rm be the observation vector obtained from the field measurements at time t ≥ 0. Let h : Rn → Rm be the mapping from the model space Rn to the observation space Rm.
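For orientation, the definitions above can be collected in compact form. The block below is a sketch under stated assumptions rather than a reproduction of the paper's numbered equations: the true state is written as \bar{x}(t), and the observation noise v(t) is assumed to be additive with zero mean and covariance R(t), in line with the entries of Table 1.

```latex
\begin{aligned}
\dot{x}(t) &= f\bigl(x(t), \alpha, t\bigr), \qquad x(0) \in \mathbb{R}^{n},\ \alpha \in \mathbb{R}^{p}, \\
z(t) &= h\bigl(\bar{x}(t)\bigr) + v(t), \qquad
  \mathrm{E}[v(t)] = 0, \quad \mathrm{Cov}[v(t)] = R(t) \in \mathbb{R}^{m \times m}.
\end{aligned}
```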

2.2. A Classification of Forecast Errors

The following assumption is key to the analysis that follows: the model of choice is faithful to the phenomenon under study. The system is predicted with fidelity, in the sense that the forecasted state is credible and useful in understanding the dynamical processes that underpin the phenomenon. Certainly, the forecast will generally exhibit some error, but the primary physical processes are included; that is, the vector field f includes the pertinent physical processes. In this situation the forecast error stems from erroneously specified elements of control. Thus, in our study the forecast error assumes the form shown in (6). Dee [5] gives a very good discussion of the estimation of the bias b in (7) arising from errors in the model and/or observations.

2.3. Dynamics of First-Order Sensitivity Function Evolution

Since our approach is centered on sensitivity functions, we develop the dynamics of evolution of the forward sensitivities in this section.

The evolution of the sensitivities (the solutions to (9) and (13)) depends on the solution to the governing dynamical equations ((1a) and (1b)). Generally, these equations are solved numerically using the standard fourth-order Runge-Kutta method. Rabitz et al. [1] give more details relating to solutions of (9) and (13). In special cases, such as air quality modeling, the sensitivity equations (9) and (13) exhibit extreme stiffness, and special methods are needed to handle it. Seefeld and Stockwell [2] discuss these issues, and Gear [6] is a good reference for a general discussion of stiff equations.
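As an illustration of integrating a model together with its forward sensitivities, the following minimal sketch applies the classical fourth-order Runge-Kutta method to an augmented system. It assumes a hypothetical scalar relaxation model dx/dt = k(xs − x), chosen only because its sensitivities are known in closed form; the function names (`rk4_step`, `augmented_rhs`, `integrate`) and the numerical values are illustrative and not taken from the paper. The sensitivity equations used are the standard ones: du/dt = (∂f/∂x)u with u(0) = 1 for u = ∂x/∂x(0), and dw/dt = (∂f/∂x)w + ∂f/∂k with w(0) = 0 for w = ∂x/∂k.

```python
import math


def rk4_step(f, y, t, dt):
    """One classical fourth-order Runge-Kutta step for y' = f(y, t)."""
    k1 = f(y, t)
    k2 = f([yi + 0.5 * dt * ki for yi, ki in zip(y, k1)], t + 0.5 * dt)
    k3 = f([yi + 0.5 * dt * ki for yi, ki in zip(y, k2)], t + 0.5 * dt)
    k4 = f([yi + dt * ki for yi, ki in zip(y, k3)], t + dt)
    return [yi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def augmented_rhs(k, xs):
    """Right-hand side for the state x and two forward sensitivities.

    Assumed (hypothetical) model: dx/dt = f(x, k) = k * (xs - x).
    u = dx/dx(0) obeys du/dt = (df/dx) * u,          u(0) = 1.
    w = dx/dk    obeys dw/dt = (df/dx) * w + df/dk,  w(0) = 0.
    """
    def rhs(y, t):
        x, u, w = y
        dfdx = -k          # Jacobian of f with respect to the state
        dfdk = xs - x      # Jacobian of f with respect to the parameter
        return [k * (xs - x), dfdx * u, dfdx * w + dfdk]
    return rhs


def integrate(x0, k, xs, t_end, dt=0.01):
    """Integrate state and sensitivities from t = 0 to t = t_end."""
    y, t = [x0, 1.0, 0.0], 0.0
    rhs = augmented_rhs(k, xs)
    for _ in range(int(round(t_end / dt))):
        y = rk4_step(rhs, y, t, dt)
        t += dt
    return y


# Illustrative values only (assumptions, not from the paper).
x, u, w = integrate(x0=1.0, k=0.25, xs=11.0, t_end=5.0)
print("x(5)        =", x)
print("dx/dx(0)(5) =", u, "closed form:", math.exp(-1.25))
print("dx/dk(5)    =", w, "closed form:", 10.0 * 5.0 * math.exp(-1.25))
```

For this non-stiff scalar problem an explicit scheme is adequate; the stiff cases mentioned above would call for an implicit or otherwise specialized integrator.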

3. Duality between the FSM and 4D-Var Based on Adjoint Method

3.1. The Adjoint Method

Details on the recursive computation of (27) are given in Appendix C.

3.2. Sensitivity-Based Approach

The primary advantage of the sensitivity-based approach is that it provides a natural interpretation of the expression for the gradient in (31). Recall from (22) that η(t) ∈ Rn is the weighted version of the forecast error R−1(t)eF(t) ∈ Rm mapped onto the model space by the linear operator given by the transpose of Dx(h). Also, the ith column of the transposed sensitivity matrix of x(t) with respect to c is the sensitivity of the ith component xi(t) of the state vector x(t) ∈ Rn with respect to the control vector c ∈ Rq, given by (∂xi/∂c1, ∂xi/∂c2, …, ∂xi/∂cq)T. Thus, it follows from (31) that ∇cJ1 is a linear combination of these columns, which are the sensitivity vectors, where the coefficients of the linear combination are the components of η(t). Consequently, sensitivity vectors with large norms that are associated with large forecast errors will be dominant in determining the components of ∇J1(c). In other words, we gain some insight into the interplay between corrections to control and forecast errors, something that can be seen through a careful examination of the sensitivity vector at various times from initial state to forecast horizon. (The illustrative example in Section 5 further explores this diagnostic function.) Expression (31) also enables us to isolate the effect of different components xi of x(t) on the performance index J1(c).
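To make the linear-combination statement concrete, the gradient can be written out for a single observation time. The following is a sketch that assumes the usual least squares cost function with forecast error eF = z − h(x); here D_c(x) = [∂x_i/∂c_j] denotes the n × q sensitivity matrix with respect to control and s_i its ith row written as a column vector. The sign convention and notation are assumptions and may differ from those of (22) and (31).

```latex
\begin{aligned}
J_1(c) &= \tfrac{1}{2}\, e_F^{T}(t)\, R^{-1}(t)\, e_F(t),
  \qquad e_F(t) = z(t) - h\bigl(x(t)\bigr), \\
\eta(t) &= D_x^{T}(h)\, R^{-1}(t)\, e_F(t) \in \mathbb{R}^{n}, \\
\nabla_c J_1 &= -\,D_c^{T}(x)\, \eta(t)
  = -\sum_{i=1}^{n} \eta_i(t)\, s_i(t),
  \qquad s_i(t) = \Bigl(\tfrac{\partial x_i}{\partial c_1}, \ldots,
  \tfrac{\partial x_i}{\partial c_q}\Bigr)^{T}.
\end{aligned}
```

Written this way, each state component x_i contributes the term η_i(t) s_i(t), so a component matters to the gradient only when both its weighted forecast error and its sensitivity to control are appreciable.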

4. Data Assimilation Using Sensitivity

We seek to find the solution to the following problem using the FSM. Given f(·) and h(·), the control vector c, the observation z(t), and the error covariance of observations R(t), find a correction δc to the control vector such that the new model forecast starting from (c + δc) will render the forecast error eF(t) purely random; that is, the systematic forecast error is removed and accordingly E(eF(t)) = 0.

4.1. First-Order Analysis

4.1.1. Observations at One Time Only

4.1.2. Observations at Multiple Times

From the discussion relating to the classification of forecast errors, recall that the forecast error inherits its randomness from the (unobservable) observation noise. The vector eF on the right-hand side of (53) is random and hence the solution ς of (53) is also random.
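When the observation times supply more equations than unknowns, a linear system of the form Hς = eF is typically solved in the least squares sense. The sketch below states the standard weighted normal-equation solution, assuming that H stacks the sensitivity matrices from all observation times, eF stacks the corresponding forecast errors, and R is the block-diagonal observation error covariance. This stacking notation is an assumption and may not match (53) term by term.

```latex
\begin{aligned}
\hat{\varsigma} &= \arg\min_{\varsigma}\,
  \tfrac{1}{2}\,\bigl(e_F - H\varsigma\bigr)^{T} R^{-1} \bigl(e_F - H\varsigma\bigr)
  = \bigl(H^{T} R^{-1} H\bigr)^{-1} H^{T} R^{-1} e_F, \\
\hat{\varsigma} &= \bigl(H^{T} H\bigr)^{-1} H^{T} e_F
  \qquad \text{when } R = \sigma^{2} I .
\end{aligned}
```

Because the estimate is linear in eF, it inherits the randomness of eF directly, and its accuracy is governed by how well conditioned the matrix being inverted is.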

5. A Practical Example: Air/Sea Interaction

We choose a simple but nontrivial differential equation to demonstrate the applicability of the forward-sensitivity method to identification of error in a dynamical model. We break this discussion into three parts as follows: (1) the model, (2) discussion of the diagnostic value of FSM, and (3) numerical experiments with data assimilation using FSM.

5.1. The Model

- θ: temperature of the air column (°C),
- θs: temperature of the sea surface (°C),
- CT: turbulent heat exchange coefficient (nondimensional),
- V: speed of air column (m s−1),
- H: height of the column (mixed layer) (m),
- τ: time (h).

Equation (59) is nondimensionalized by the following scaling:

There are three elements of control: initial condition, x(0), boundary condition, xs, and parameter, k.

5.2. Diagnostic Aspects of FSM

| Time (hours) | t = 0 | t = 1 | t = 5 | t = 10 | t = 15 | t = 20 |

|---|---|---|---|---|---|---|

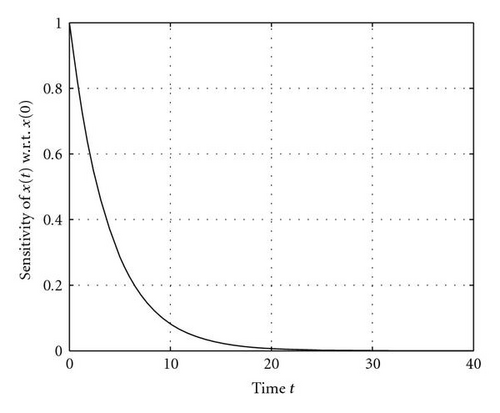

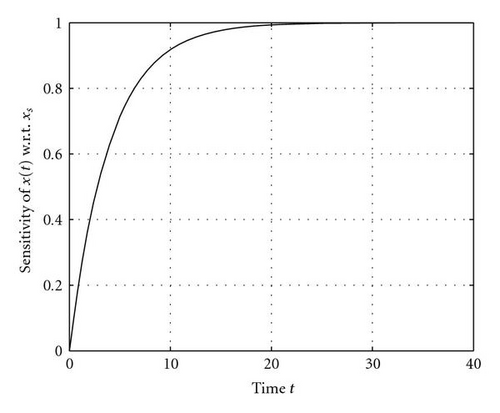

| ∂x(t)/∂x(0) | 1.0 | 0.7788 | 0.2865 | 0.0821 | 0.0235 | 0.0007 |

| ∂x(t)/∂xs | 0.0 | 0.2212 | 0.7135 | 0.9179 | 0.9765 | 0.9993 |

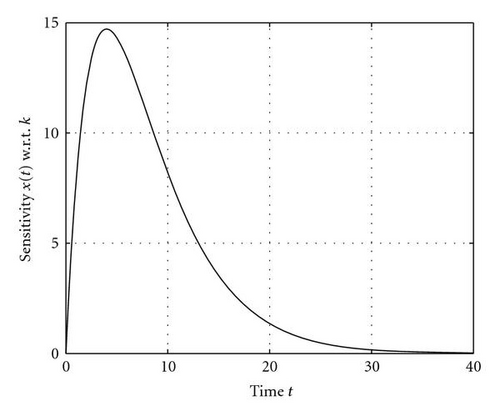

| ∂x(t)/∂k | 0.0 | 7.788 | 14.325 | 8.2100 | 3.525 | 0.1400 |
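The tabulated sensitivities can be checked against closed-form expressions if one assumes that the nondimensional model is the linear relaxation dx/dt = k(xs − x), with solution x(t) = xs + (x(0) − xs)exp(−kt). That model form and the control values x(0) = 1, xs = 11, k = 0.25 are assumptions made for this sketch; they were chosen because they reproduce the early-time entries of the table and the value x(24) = 10.975 quoted in Section 5.3. The function name `sensitivities` is hypothetical.

```python
import math


def sensitivities(t, x0, xs, k):
    """Closed-form sensitivities of x(t) = xs + (x0 - xs) * exp(-k * t)."""
    decay = math.exp(-k * t)
    return {
        "dx/dx(0)": decay,                  # sensitivity to initial condition
        "dx/dxs": 1.0 - decay,              # sensitivity to boundary condition
        "dx/dk": (xs - x0) * t * decay,     # sensitivity to the parameter k
    }


# Assumed control values (not stated explicitly in this section).
X0, XS, K = 1.0, 11.0, 0.25

table = {t: sensitivities(t, X0, XS, K) for t in (0, 1, 5, 10, 15)}
for t, row in table.items():
    print(t, {name: round(value, 4) for name, value in row.items()})
```

The output shows the pattern discussed in the text: the influence of the initial condition decays monotonically, the influence of the boundary condition grows toward 1, and the sensitivity to k rises to a maximum near t = 1/k before decaying.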

5.3. Numerical Experiments

We have explored both successful and failed recovery of control under two different scenarios, in which either 3 or 6 observations are used to recover the control vector. Since there are 3 unknowns, the case of 6 observations is an over-determined system.

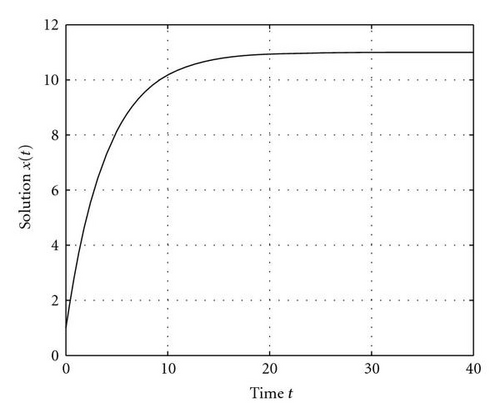

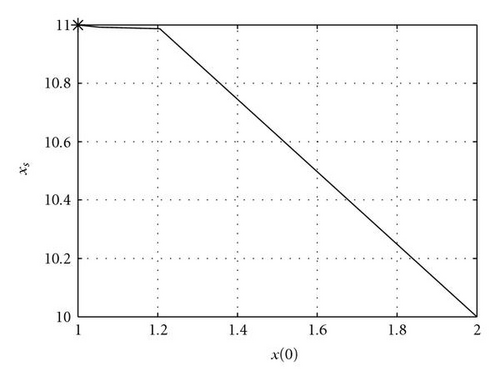

We execute numerical experiments where the observations are spread over different segments of the temporal range, generally divided into an “early stage” and a “saturated stage.” By saturated stage we refer to that segment where the solution becomes close to the asymptotic state, that is, x → xs. The dividing time between these segments is arbitrary; but generally, based on experimental results to follow, we divide the segments at t = 24, where x(24) = 10.975, which is 0.025 less than xs = 11.0 (see Figure 1).

The following general statement can be made. If more than one of the observations falls in the saturated zone, the matrix HTH becomes ill-conditioned. As can be seen from (39) and the plots of sensitivity functions in Figure 1, ∂x/∂xs → 1 while ∂x/∂x(0) → 0 and ∂x/∂k → 0 as t → ∞. Accordingly, if two of the observations are made in the saturated zone, the associated rows of the H matrix become nearly linearly dependent, which in turn leads to ill-conditioning. This ill-conditioning is exhibited by a large value of the condition number, the ratio of the largest to the smallest eigenvalue of the matrix HTH. The inversion of this matrix is central to the optimal adjustments of control (see (55)).

Ill-conditioning can also occur as a function of the observation spacing in certain temporal segments. This is linked to a lack of variability, or lack of change, in sensitivity from one observation time to another. As can be seen in Figure 1, the absolute value of the slope of the sensitivity function curves is generally large at the early stages of evolution and small at later stages. As an example, we find satisfactory recovery, δc = (−0.882, −0.067, +0.922), when the observations are located at t = 5.0, 5.1, and 5.2 (a uniform spacing of Δt = 0.1). Yet near the saturated state, at t = 20.0, 20.1, and 20.2, again with a spacing of 0.1, the recovery is poor, with the result δc = (+5.317, −0.142, +0.998). The associated condition numbers for these two experiments are 1.0 × 10^3 and 1.0 × 10^6, respectively. Similar results follow from the case where 6 observations are taken. In all of these cases, the key factor is the condition number of HTH. For our dynamical constraint, a condition number less than ~10^4 portends a good result.

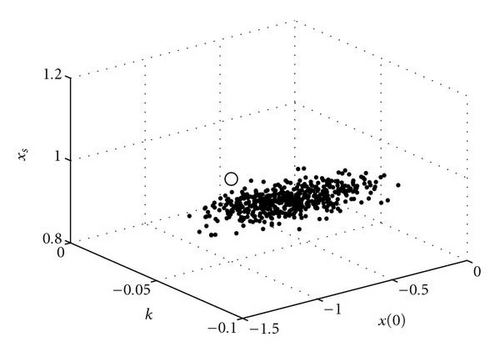

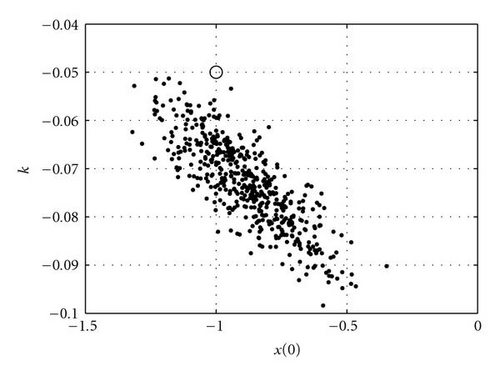

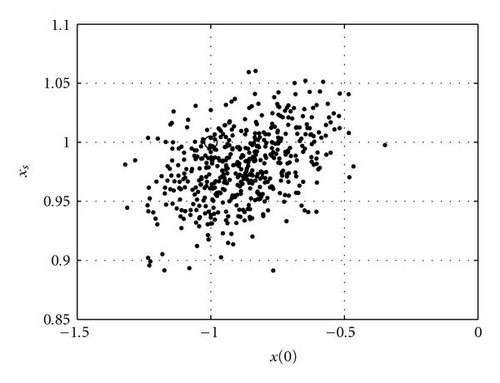

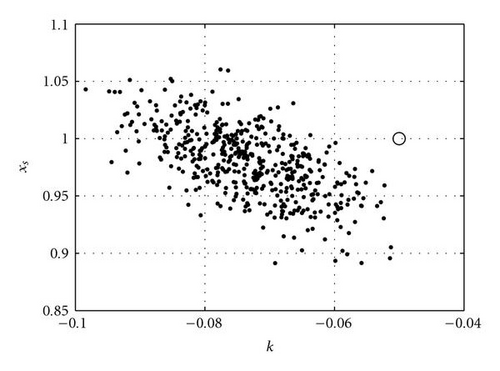

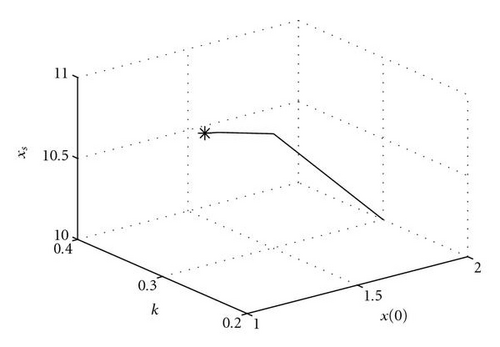

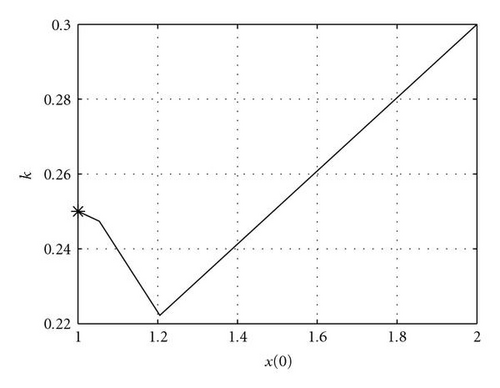

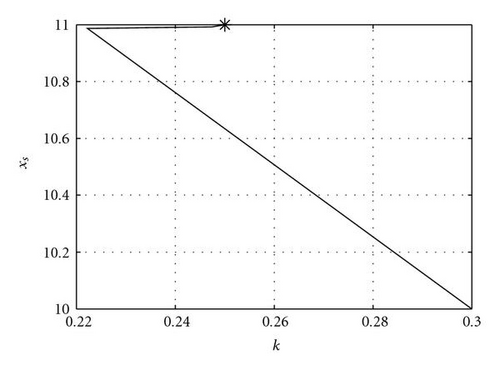

For the case where we have 6 observations at t = 2, 7, 12, 17, 22, and 27, with a random error of 0.01 (standard deviation), we have executed an ensemble experiment with 100 members to recover control. In this case, the condition number is 2.4 × 10^3. Results are plotted three dimensionally and in two-dimensional planes in the space of control, that is, plots of correction in the xs/x(0), xs/k, and x(0)/k planes. Results are shown in Figure 2.

Finally, we explore the iterative process of finding corrections to control. Here, the results from the 1st iteration are used to find the new control vector. This vector is then used to make another forecast and find a new set of sensitivity functions. The error of the forecast is obtained, and along with the new sensitivity functions, a second correction to control is found, and so forth. For the experiment with 6 observations that has been discussed in the previous paragraph, we apply this iterative methodology. As can be seen in Figure 3, the correct value of control is found in 3 iterations.
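The iterative procedure just described can be sketched in a few lines. The example below is a minimal illustration, not the authors' code: it assumes the relaxation model x(t) = xs + (x(0) − xs)exp(−kt), uses noise-free synthetic observations at the six times quoted above, takes unit observation weights, and starts from a hypothetical erroneous control. The "true" control (1, 11, 0.25), the first guess (2, 10, 0.30), and all function names are assumptions made for illustration.

```python
import math


def forecast(t, c):
    """Assumed model solution for control c = (x0, xs, k)."""
    x0, xs, k = c
    return xs + (x0 - xs) * math.exp(-k * t)


def sensitivity_row(t, c):
    """Row of H at time t: (dx/dx(0), dx/dxs, dx/dk)."""
    x0, xs, k = c
    decay = math.exp(-k * t)
    return [decay, 1.0 - decay, -(x0 - xs) * t * decay]


def solve(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for j in range(col, n + 1):
                m[r][j] -= factor * m[col][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fsm_correction(times, obs, c):
    """First-order correction: solve (H^T H) dc = H^T e_F for dc."""
    h = [sensitivity_row(t, c) for t in times]
    e = [z - forecast(t, c) for t, z in zip(times, obs)]
    q = len(c)
    hth = [[sum(r[i] * r[j] for r in h) for j in range(q)] for i in range(q)]
    hte = [sum(r[i] * ei for r, ei in zip(h, e)) for i in range(q)]
    return solve(hth, hte)


times = [2, 7, 12, 17, 22, 27]
c_true = [1.0, 11.0, 0.25]                  # hypothetical "true" control
obs = [forecast(t, c_true) for t in times]  # noise-free synthetic observations
c = [2.0, 10.0, 0.30]                       # hypothetical erroneous control

for iteration in range(1, 9):
    dc = fsm_correction(times, obs, c)
    c = [ci + di for ci, di in zip(c, dc)]  # update control, then re-forecast
    print(iteration, [round(ci, 6) for ci in c])
```

Each pass recomputes both the forecast error and the sensitivities at the updated control, which is what distinguishes the iteration from a single first-order correction. With noisy observations the iterates would settle near, rather than exactly at, the true control.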

6. Concluding Remarks

The basic contributions of this paper may be stated as follows.

(1) While 4D-Var has been the primary methodology for operational data assimilation in meteorology/oceanography (Lewis and Lakshmivarahan [11]), and while forward sensitivity has been a primary tool for reaction kinetics and chemistry (Rabitz et al. [1]) and air quality modeling (Russell et al. [3]), to our knowledge these two methodologies have not been linked. We have now shown that the method of computing the gradient of J(c) by these two approaches exhibits a duality hitherto unknown.

(2) By treating the forward sensitivity problem as an inverse problem in data assimilation, we are able to understand the fine structure of the forecast error. This is not possible with the standard 4D-Var formulation using adjoint equations.

(3) While it is true that computation of the evolution of the forward sensitivity involves computational demands beyond those required for solving the adjoint equations in the standard 4D-Var methodology, there is a richness or augmentation to the information that comes with this added computational demand. In essence, it allows us to make judicious decisions on placement of observations through understanding of the time dependence of correction to control.

Acknowledgments

At an early stage of formulating our ideas on this method of data assimilation, Fedor Mesinger and Qin Xu offered advice and encouragement. Qin Xu and Jim Purser carefully checked the mathematical development, and suggestions from the following reviewers went far to improve the presentation: Yoshi Sasaki, Bill Stockwell, and anonymous formal reviewers of the manuscript. S. Lakshmivarahan’s work is supported in part by NSF EPSCoR RII Track 2 Grant 105-155900 and by NSF Grant 105-15400, and J. Lewis acknowledges the Office of Naval Research (ONR), Grant No. N00014-08-1-0451, for research support on this project.

Appendices

A. Dynamics of Evolution of Perturbations

Let δc = (δxT(0), δαT)T ∈ Rn × Rp be the perturbation in the control vector c and δx(t) the resulting perturbation in the state x(t) induced by the dynamics (1a) and (1b). Our goal is to derive the dynamics of evolution of δx(t).

Case A. Let δα = 0, that is, the initial perturbations are confined only to the initial condition, x(0). Then setting B(t) ≡ 0, from (A.5) we see that L(t, 0) ≡ 0. From (A.4) we get

B. Computation of Sensitivity Functions

C. Computation of ∇αJ in (27)

Given η(ti), MT(ti, ti−1), LT(ti, ti−1) for 1 ≤ i ≤ N, the expression on the right-hand side of (27) can be efficiently computed as shown in Algorithm 1.

Algorithm 1:

    DO j = N to 1
        DO i = N to j
            η(ti) = MT(tj, tj−1)η(ti)
        END
    END
    μ(1) = L(t1, 0)
    DO i = 2 to N
        μ(i) = μ(i − 1) + L(ti, ti−1)
    END
    Grad = 0
    DO i = 1 to N
        Grad = Grad + μ(i)η(ti)
    END

Then ∇αJ = −Grad. Note that these operations involve only matrix-vector multiplications, not matrix-matrix multiplications.