Earnings and Earnings Inequality in South Africa: Evidence from Household Survey and Administrative Tax Microdata from 1993 to 2020

Note: This paper has been funded by the UNU-WIDER SA-TIED program. The tax microdata used in the paper has been made available through cooperation between the South African Revenue Service, the South African National Treasury, and UNU-WIDER. All output has been checked to ensure that it does not compromise confidentiality. I thank Martin Wittenberg, Andrew Donaldson, Amina Ebrahim, Aroop Chatterjee, Chris Axelson, Aalia Cassim, Jesse Naidoo, Sihle Khanyile, Nicola Branson, David Lam, Marlies Piek, the editor and three referees, participants at a 2021 SALDRU seminar and the 2022 IARIW-TNBS Conference on Measuring Income, Wealth and Well-being in Africa for very helpful comments. Martin Wittenberg's work creating harmonized earnings data in PALMS was extremely helpful in creating the GHS earnings data, and his broader research on and insight about data and survey quality has been fundamental in shaping this paper. I also thank Fadzayi Chingwere, Grace Bridgman, Singita Rikhotso, Michael Kilumelume, Dane Brink, and Michelle Place from UNU-WIDER for all their help in using the IRP5 administrative tax data. All errors are my own.

This paper was presented at the IARIW-Tanzania National Bureau of Statistics conference “Measuring Income, Wealth and Well-being in Africa” November 11-13, 2022 in Arusha, Tanzania. Lars Osberg and Hai-Anh H. Dang acted as guest editors of the conference papers submitted for publication in the ROIW.

Abstract

In this paper I use household survey and administrative tax microdata to describe earnings inequality in South Africa over the period 1993–2020. I find that earnings inequality is very high but has been stable, or has even declined by some measures, and that earnings increases have been largest at the bottom of the earnings distribution. Previous findings of increasing earnings or wage inequality in South Africa from 2010 onwards come from one set of household surveys. I show that the publicly available data from these surveys include poor-quality earnings imputations and that non-public data without these imputations provide more sensible trends in earnings and earnings inequality. Comparisons between tax and survey data also show that earnings inequality in the tax data is generally higher than in the more comparable household survey, and that the surveys under-capture earnings even far down the formal sector earnings distribution.

1 Introduction

Earnings and wage inequality within many rich countries have been increasing over the last 40 years (Acemoglu & Autor, 2011; Autor, 2019; OECD, 2011), which has been ascribed mainly to skill-biased technical change and, more recently, to wage and job polarization (Autor et al., 2006) resulting from the automation of routine tasks (Acemoglu & Restrepo, 2022). But in urban China, India and Latin America, wage inequality has declined in recent years (Khurana & Mahajan, 2020; Messina & Silva, 2021; Zhang, 2021). Traditionally, household surveys have been used to measure trends in earnings inequality, but researchers have increasingly turned to administrative tax or social security data to compare and complement household survey data, often because of concerns that surveys under-capture incomes at the top of the distribution (Piketty et al., 2022). Correcting the underreporting of “top incomes” can have a substantial impact on estimates of income inequality levels and trends (Jenkins, 2017).

In this paper I describe the levels and trends in earnings and earnings inequality in South Africa using two sets of household surveys over 28 years between 1993 and 2020 and 10 years of administrative tax data between 2010 and 2019. I investigate the impact of earnings imputations present in the Quarterly Labour Force Surveys (QLFS) from 2010 onwards on earnings inequality trends and levels. I also compare the earnings distributions and earnings inequality estimates in the survey and tax data.

There are two main contributions of the paper. The first is the comparison of earnings and earnings inequality estimates across two sets of household surveys and administrative tax data that is not limited to tax filers (a small proportion of the employed in any middle-income country) but includes almost every employee working in the formal sector, which is around 70 percent of all employees in South Africa, and around 35–39 percent of the entire working age population.

Previous research has highlighted that item non-response and imputation are important concerns when using survey data to measure earnings inequality, even in rich countries (Autor et al., 2008, p. 302; Bollinger et al., 2019). The second main contribution of the paper is to compare earnings inequality estimates from two different sources of publicly available South African household survey data and to interrogate why they produce quite different estimates of inequality levels and trends.

To undertake my analysis, I use microdata from 90 household surveys over 28 years and 10 years of administrative tax data with over 120 million individual-year observations covering almost all employment in the formal sector. I describe changes in the earnings distribution at various percentiles and measure inequality using the Gini coefficient and the variance of log earnings. Because single-index measures like the Gini can hide changes in opposite directions at different points in the distribution (Autor et al., 2008; Wittenberg, 2017a), I also estimate percentile ratios to examine changes at the top and bottom of the distribution.
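The three kinds of measure named above can be sketched in a few lines of Python. This is a minimal illustration, not the code used for the paper: it assumes earnings arrive as a list of strictly positive values with matching survey weights, takes the weighted percentile to be the lowest value whose cumulative weight share reaches q (survey software often interpolates instead), and computes the Gini from the area under the weighted Lorenz curve.

```python
import math


def weighted_quantile(values, weights, q):
    """Lowest value whose cumulative weight share reaches q (0 < q <= 1)."""
    pairs = sorted(zip(values, weights))
    total = sum(w for _, w in pairs)
    cum = 0.0
    for v, w in pairs:
        cum += w
        if cum / total >= q:
            return v
    return pairs[-1][0]  # guards against floating-point shortfall when q == 1


def percentile_ratio(values, weights, upper, lower):
    """For example, upper=0.9 and lower=0.1 give the 90/10 ratio."""
    return weighted_quantile(values, weights, upper) / weighted_quantile(values, weights, lower)


def weighted_gini(values, weights):
    """Gini = 1 - 2 * (area under the weighted Lorenz curve), using the trapezoid rule."""
    pairs = sorted(zip(values, weights))
    total_w = sum(w for _, w in pairs)
    total_y = sum(v * w for v, w in pairs)
    cum_y = 0.0
    area = 0.0
    for v, w in pairs:
        prev = cum_y
        cum_y += v * w
        # Strip of width w/total_w between Lorenz heights prev/total_y and cum_y/total_y.
        area += (w / total_w) * (prev + cum_y) / (2 * total_y)
    return 1 - 2 * area


def var_log(values, weights):
    """Weighted variance of log earnings; every value must be strictly positive."""
    logs = [math.log(v) for v in values]
    total = sum(weights)
    mean = sum(x * w for x, w in zip(logs, weights)) / total
    return sum(w * (x - mean) ** 2 for x, w in zip(logs, weights)) / total
```

The variance of log earnings is particularly sensitive to the bottom of the distribution, while the Gini is most sensitive to changes around the middle, so the two can move in opposite directions on the same data. Reporting percentile ratios such as 90/50 and 50/10 alongside them shows where in the distribution a change is happening.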

I show that earnings inequality in South Africa is very high, as many others have pointed out. But the first key finding of the paper is that earnings inequality in South Africa has not unambiguously and dramatically increased since 2010, as the publicly available QLFS data and several researchers using these data suggest (Bassier & Woolard, 2021; Bhorat et al., 2023). Rather, I find that inequality changes vary depending on the measure and dataset used. In the household surveys, the Gini coefficient has increased since the early 2000s but the variance of log earnings has declined, and in the tax data both have declined since 2010.

Linked to the first key finding, the second key finding of the paper is that the trends in and levels of earnings and earnings inequality in South Africa in the two sets of household surveys used in the paper differ dramatically from 2010 onwards. By comparing public and non-public earnings data in the Quarterly Labour Force Surveys (QLFSs) I find that the public data do not provide a reliable description of the earnings distribution or inequality since 2010, most likely because of the very poor quality of earnings imputations in the publicly available data. These imputations are neither flagged in the data nor discussed in the data release documents.

A third key finding is that, even in the reliable survey data, earnings are substantially underestimated across the entire distribution compared to administrative tax data, not just at the very top. This has implications beyond the measurement of inequality: for example, the fraction of individuals who are paid less than the minimum wage is likely much lower than the household surveys suggest.

In the next section I provide an overview of the literature on measuring earnings inequality in survey and administrative data. Section 3 discusses the data sources I use, and Section 4 describes the results of the analysis. Section 5 concludes and discusses suggestions for improving the publicly available survey data.

2 Literature review

2.1 Trends in wage and earnings inequality

Wage inequality in the rich world has generally increased over the last 30 years (Acemoglu & Autor, 2011; Autor, 2019; OECD, 2011). But wage inequality has recently declined in urban China (Zhang, 2021), in Latin America over the last 20 years (Messina & Silva, 2021) and in urban India in the 2010s (Khurana & Mahajan, 2020).

The key driver of increasing inequality in rich countries in the last 50 years was thought to be skill biased technological change (SBTC) (Acemoglu & Autor, 2011). But Autor et al. (2006), Goos and Manning (2007) and many subsequent papers have highlighted increasing employment and wage polarization as other drivers of increasing inequality. Polarization means rising employment and wages in low and high skilled jobs and declines in middle skilled jobs, which cannot be explained by the canonical SBTC model (Acemoglu & Autor, 2011). Institutional factors, such as declines in minimum wages and unionization rates, have also been found to be partially responsible for increases in wage inequality in rich countries (Farber et al., 2021; Fortin et al., 2021).

There are important links between wage and earnings inequality in rich countries and developing countries. Khurana and Mahajan (2020) note that job polarization in rich countries results from both automation in rich countries and off-shoring, meaning that some middle-skilled jobs are destroyed whilst others are shifted to developing countries, where they might actually be fairly high up the earnings distribution. Middle-skilled jobs could also be destroyed by automation in developing countries, although in low-income countries most jobs are unlikely to be automated (Maloney & Molina, 2019).

The recent literature on job polarization in developing countries finds little evidence that job polarization is occurring (Maloney & Molina, 2019; Martins-Neto et al., 2021). Thus, there is no reason to think that there should be wage or earnings polarization in most developing countries either. But evidence on wage polarization is more limited, due to a relative lack of comparable wage or earnings data across countries. Two recent papers do shed some light on this—Messina and Silva (2021) find no evidence of wage polarization in 13 Latin American countries between 1995 and 2015 and Khurana and Mahajan (2020) find no evidence of earnings polarization in urban, non-agricultural employment in India between 1983 and 2017.

Trends in earnings and wage inequality in South Africa

There are several studies describing trends in wage or earnings inequality in South Africa. Wittenberg (2017a, 2017b) showed that, using the Gini coefficient, Theil T index, and Atkinson indices, wage inequality rose in the 1990s but was roughly constant from 2000 to 2011. Wittenberg (2017b) showed that this constant inequality trend hid important differences between the bottom and top halves of the wage distribution: the 50/10 percentile ratio declined but the 90/50 percentile ratio increased, which is consistent with wage polarization. Bhorat et al. (2023) discussed similar results using data from only two years, 2000 and 2015, described this as wage polarization, and thus argued that South Africa's trends match those in rich countries.

Bassier and Woolard (2021) used a combination of survey data, tax tables, and microdata samples of tax filers to investigate patterns of income growth and inequality between 2003 and 2018. Using tax tables, they show that for the entire adult population, income growth in the top 5 income percentiles was between 4 and 5 percent per year over this period, though it was only 2.5 percent from 2010 to 2018. Using survey data from 2003 to 2015 they showed that earnings growth was highest at the top and bottom of the distribution and lowest in the middle. But for 2010–2015 earnings growth was negative for most of the bottom 95 percent of the distribution. I investigate this finding in my analysis below.
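Growth rates like those reported by Bassier and Woolard (2021) are annualized, and computing them at each percentile traces out a growth incidence curve. A minimal sketch, assuming real (inflation-adjusted) earnings at each percentile are already available for a start year and an end year; the function names are illustrative and not taken from any of the cited papers:

```python
def annualized_growth(start_value, end_value, years):
    """Compound annual growth rate between two levels observed `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def growth_incidence(pct_start, pct_end, years):
    """Annualized growth at each percentile.

    pct_start, pct_end: {percentile: real earnings at that percentile} for the two years.
    """
    return {p: annualized_growth(pct_start[p], pct_end[p], years) for p in sorted(pct_start)}
```

Percentile-by-percentile growth compares the earnings at a given rank in two years, not the earnings growth of the same individuals, because the people occupying each percentile can change between surveys.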

Chatterjee et al. (2023) used household survey data from 1993 to 2019 and tax tabulations from 2002 to 2019 to investigate changes in overall income inequality and labor income inequality. Adjusting the surveys using the tax data, they argue that the top 1 percent of the population obtained 15 percent of all labor income in 1993, and that this share had increased to 23 percent by 2019. This paper and the other research discussed in this section ignore the earnings imputation in the Quarterly Labour Force Surveys, which is undertaken to deal with item non-response. I discuss this and other challenges when measuring earnings inequality in the next section.
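A top income share of the kind reported by Chatterjee et al. (2023) can be computed from weighted microdata as below. This sketch assumes one row per individual with a survey or population weight, and splits the observation that straddles the cutoff in proportion to its weight; it does not attempt the survey-to-tax adjustment those authors describe.

```python
def top_share(values, weights, p):
    """Share of total earnings received by the top p fraction (e.g. 0.01) of the weighted population.

    The observation straddling the cutoff contributes only the part of its weight
    that still fits inside the top group.
    """
    pairs = sorted(zip(values, weights), reverse=True)  # richest first
    total_w = sum(w for _, w in pairs)
    total_y = sum(v * w for v, w in pairs)
    remaining = p * total_w  # population weight still to allocate to the top group
    top_y = 0.0
    for v, w in pairs:
        if remaining <= 0:
            break
        take = min(w, remaining)
        top_y += v * take
        remaining -= take
    return top_y / total_y
```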

2.2 Challenges when measuring earnings inequality

Researchers have identified several challenges that arise when measuring earnings or wage inequality in household surveys and have shown that these matter for the conclusions that can be drawn. These challenges include item and unit non-response, measurement error, and the under-sampling or under-representation of high-income individuals (Acemoglu & Autor, 2011; Bollinger et al., 2019; Lustig, 2020; Meyer et al., 2015; Ravallion, 2022). One check on, and possible solution to, some of these issues is to use administrative data from tax or social security records, which Meyer et al. (2015, p. 201) argue ‘provides one potentially fruitful avenue for improving the quality of survey data.’ Solutions to household survey non-response, including reweighting (for unit non-response) and imputation (for item non-response), are important, and their efficacy has been interrogated using administrative data. In this section I focus on item non-response, imputation and the use of administrative data as checks on survey results, because my analysis in Section 4 shows that these are especially relevant to measuring earnings inequality in South Africa using household survey and administrative data.

Item non-response, meaning that a respondent provides no information for a specific question, is a concern in household surveys. Bollinger et al. (2019) highlight that item non-response to the earnings questions in the US Current Population Survey (CPS), a household survey, doubled between the mid-1980s and mid-2010s to around 25 percent, and Campos-Vazquez and Lustig (2019) show that the item non-response rate for the earnings questions in the Mexican labor force survey rose from 5 percent in 2002 to 30 percent by 2017. Linking CPS responses to Social Security administrative data, Bollinger et al. (2019) show that item non-response to earnings questions in the CPS is not missing at random, i.e. that it is a function not only of individual observable characteristics but also of the level of earnings itself. They show that item non-response is highest in the tails of the distribution.

Bollinger et al. (2019) note that the usual solution to item non-response to earnings questions is to impute earnings for non-responders, which in the CPS is done using hot deck imputation. In hot deck imputation, the earnings of those who do respond are “donated” or “allocated” to observably similar individuals who do not report their earnings. Using the linked data, they find that the Census Bureau's imputation results in underestimates of inequality and that “between one-third and one-half the difference in inequality measures between the CPS and administrative data is accounted for by [item] nonresponse in the CPS” (Bollinger et al., 2019, p. 2148).
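A simplified random hot deck within adjustment cells makes the idea concrete. The sketch assumes hypothetical record fields (an `earnings` value that is `None` for non-responders, plus whatever characteristics define the cells) and draws donors uniformly at random. The Census Bureau's CPS procedure uses a more elaborate sequential matching scheme, and nothing here describes how Stats SA imputes QLFS earnings.

```python
import random
from collections import defaultdict


def hot_deck_impute(records, cell_keys, seed=0):
    """Fill missing 'earnings' by drawing a donor from respondents in the same cell.

    records: list of dicts with an 'earnings' key (None signals item non-response).
    cell_keys: names of the characteristics that define the adjustment cells.
    Returns new dicts; each one gains an 'imputed' flag. Non-responders in cells
    without any respondent are left missing.
    """
    rng = random.Random(seed)  # fixed seed makes the draws reproducible
    donors = defaultdict(list)
    for r in records:
        if r["earnings"] is not None:
            donors[tuple(r[k] for k in cell_keys)].append(r["earnings"])
    out = []
    for r in records:
        new = dict(r)
        cell = tuple(r[k] for k in cell_keys)
        if r["earnings"] is None and donors[cell]:
            new["earnings"] = rng.choice(donors[cell])
            new["imputed"] = True
        else:
            new["imputed"] = False
        out.append(new)
    return out
```

Because donors come only from respondents in the same cell, the imputed values follow the respondents' earnings distribution. If non-response is concentrated in the tails, as Bollinger et al. (2019) find, the imputation cannot reproduce that missing tail mass, which is one way hot deck imputation can understate inequality. The `imputed` flag written here is the kind of indicator that, as discussed below, the public QLFS does not provide.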

Bollinger et al. (2019) helpfully compare administrative and survey data to learn about the potential weaknesses of household survey data. However, Meyer et al. (2015, p. 216) note that “Comparing weighted microdata from surveys to administrative aggregates … also has some important limitations including possible differences between the survey and administrative populations.” One form of this problem is that tax data often include all individuals with earnings at any point in a tax year, whereas some household surveys only allow an estimate of the earnings distribution for those employed at the time of the survey, what Acemoglu and Autor (2011, p. 1049) call a “point-in-time” measure. I show below that this is an important issue in my comparisons of survey and administrative tax data in South Africa.1 This is because South Africa's low employment rate and high worker flows (Kerr, 2018) mean that far fewer individuals are counted as employed when asked about employment in the last week (as South African household surveys generally ask) than worked at any point during the year.

Using administrative and survey data in developing countries

It is now fairly common in high-income countries to have administrative data covering all or almost all employment and to compare household surveys with administrative earnings data. There is much less research using administrative data in developing countries that focuses specifically on earnings, and very little that extends survey and administrative data comparisons beyond the top few percentiles, where tax filers are located. Most developing countries also have sizable informal sectors, for which no administrative data are available to compare with household survey data.

One way to use administrative data is in aggregated form. Campos-Vazquez and Lustig (2019) obtained Mexican social security data on the number of formal sector workers in each of 25 earnings categories and used these to reweight the household surveys so that the number of individuals in each earnings category in the surveys matched the number in the social security data. This reweighting, together with hot deck imputation for item non-response, increased estimated earnings inequality in Mexico: rather than declining between 2000 and 2017, inequality was stable.
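Reweighting survey observations so that the weighted count in each earnings category matches an administrative count is a form of post-stratification. A minimal sketch, assuming records already restricted to formal sector workers, hypothetical `earnings` and `weight` fields, and half-open earnings brackets; the exact procedure used by Campos-Vazquez and Lustig (2019) may differ in detail.

```python
def reweight_to_admin_counts(records, brackets, admin_counts):
    """Scale weights so that weighted survey counts per earnings bracket match admin counts.

    brackets: list of (lower, upper) bounds, lower inclusive and upper exclusive.
    admin_counts: administrative number of workers in each bracket, same order.
    Records outside every bracket keep their original weight.
    """
    def bracket_of(earnings):
        for i, (lo, hi) in enumerate(brackets):
            if lo <= earnings < hi:
                return i
        return None

    survey_counts = [0.0] * len(brackets)
    for r in records:
        b = bracket_of(r["earnings"])
        if b is not None:
            survey_counts[b] += r["weight"]

    out = []
    for r in records:
        b = bracket_of(r["earnings"])
        factor = 1.0
        if b is not None and survey_counts[b] > 0:
            factor = admin_counts[b] / survey_counts[b]
        out.append({**r, "weight": r["weight"] * factor})
    return out
```

A bracket that has administrative workers but no survey respondents cannot be matched by reweighting alone, which is one reason why under-capture at the very top is hard to fix this way.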

Three papers in a recent issue of Quantitative Economics compare household survey data with administrative microdata covering the entire formal sector in Argentina (Blanco et al., 2022), Brazil (Engbom et al., 2022) and Mexico (Puggioni et al., 2022), covering 20, 35 and 14 years respectively across different periods between the 1980s and mid-2010s. All three papers find evidence of declining wage inequality, driven partly by larger wage increases at lower percentiles, which is consistent with the finding of Messina and Silva (2021), using household survey data, that wage or earnings inequality has declined across Latin America. But all three papers find that the tax data pick up stronger growth in the top half of the distribution than the surveys do. Puggioni et al. (2022) argue that in Mexico this is likely due to increasing item non-response rates (also noted by Campos-Vazquez & Lustig, 2019), although it is not clear whether they imputed earnings for non-responders in their analysis. All three papers find that growth in the bottom half of the wage distribution is more similar across the surveys and administrative data than growth in the top half, although in Argentina growth at the bottom is also much higher in the administrative data than in the surveys.

All three papers show only relative changes in earnings percentiles in the survey and tax data, normalizing to the first year of available data. This means that only the trends, not the actual levels of earnings, can be compared across the two sources of data. In my analysis below I compare both levels and trends, given that one of my aims is to examine the extent of under-capturing of earnings in the survey data, which I discuss for South Africa in the next section.

Earnings measurement challenges in South Africa

South African researchers investigating earnings from 2010 onwards have exclusively used the Quarterly Labour Force Surveys. Most of this research ignores that earnings data in the QLFS contain imputations by Statistics South Africa (Stats SA). This is partly because the public release documents neither discuss the imputation of earnings nor explain how the data are imputed. There are also no flags or other information in the data that allow researchers to know which individuals have imputed data and which do not.2 The lack of discussion about earnings imputation in South Africa is strange, because several researchers, including Ardington et al. (2006), von Fintel (2007) and Vermaak (2012), wrote about item non-response (and partial item non-response) to earnings or income questions in the 1996 and 2001 population censuses and in the Labour Force Surveys that ran from 2000 to 2007, which are used in my analysis below.

Kerr and Wittenberg (2021) showed the weaknesses of the 2011 and 2012 QLFS earnings imputations, using unimputed non-public earnings data for these years obtained from Stats SA to compare results with those from the publicly available imputed data. The imputed earnings data produced implausible trends in the variance of log wages, a measure of inequality that I use in the analysis below. But the imputation methods used by Stats SA seem to have changed after 2012, so it is important to check the more recent imputations, which I do below using non-public earnings data without imputations for 2011, 2012 and 2018–2020.

In South Africa, administrative tax data have been used as a check on the quality of household survey earnings information by Wittenberg (2017c), who used SARS tax microdata covering only tax filers, for 2010 to 2011, to compare the earnings distribution with the 2011 QLFS. Wittenberg (2017c) found that earnings in the survey data were on average 40 percent lower than in the tax data for the top 3 million employees, and even lower at the top of the distribution. As a result of this under-capturing of earnings in the surveys, Wittenberg (2017c) estimated that the Gini coefficient of earnings was understated by around three percentage points in the household surveys undertaken at the same time. I investigate this further using tax data for the entire formal sector over 10 years in my analysis below.

As well as making labor market research much more challenging, the QLFS earnings imputations were likely influential in the implementation of a key labor market policy, the national minimum wage, which was introduced in 2019 and debated for several years beforehand. The quantitative research used in these debates (Finn, 2015) and the final report of the panel set up to investigate the minimum wage (National Minimum Wage Panel, n.d.) used the public QLFS earnings data with imputations. The final report showed that around 50 percent of employees in 2014 were being paid less in real terms than the minimum wage that was eventually introduced in 2019. I show in my analysis below that this was likely a large overestimate, very likely driven by the use of the publicly available QLFS earnings data from 2010 onwards, which include poor-quality imputations by Stats SA.
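Estimating the share of workers paid below a minimum wage “in real terms” means deflating survey earnings to the prices of a base period and comparing them with the threshold. The sketch below assumes a single price index value per period and a threshold and earnings measured in the same units (for example, both monthly). The procedure actually used in the National Minimum Wage Panel report, including any conversion to hourly wages, is not described in the text, so the inputs here are placeholders.

```python
def share_below_real_threshold(earnings, weights, cpi_survey, cpi_base, threshold_base):
    """Weighted share of workers whose earnings, in base-period prices, fall below a threshold.

    cpi_survey: price index in the survey period.
    cpi_base: price index in the base period (e.g. when the minimum wage took effect).
    threshold_base: the minimum wage expressed in base-period prices.
    """
    factor = cpi_base / cpi_survey  # converts survey-period rand into base-period rand
    below = sum(w for e, w in zip(earnings, weights) if e * factor < threshold_base)
    return below / sum(weights)
```

The result depends entirely on how much weight falls in the lower tail, so imputed earnings that are too low in that region would mechanically raise the estimated share.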

3 Data sources

In this section I describe the sources of administrative data and household survey data that I use to estimate earnings and earnings inequality in South Africa in Section 4.

3.1 Household survey data

The household surveys used in this paper were all conducted by Statistics South Africa, except the 1993 Project for Statistics on Living Standards and Development (PSLSD), conducted by the Southern African Labour and Development Research Unit (SALDRU). The first source of survey microdata used is a version of the Post-Apartheid Labour Market Series (PALMS) (Kerr et al., 2019). PALMS is a publicly available compilation of nationally representative household surveys (the PSLSD, October Household Surveys, Labour Force Surveys and Quarterly Labour Force Surveys) with harmonized data on employment and earnings from 1993 to 2019.

For this paper I created my own version of PALMS using the publicly available code, but including non-public Quarterly Labour Force Survey earnings data for 2011, 2012 Quarter 1, 2018, 2019 and 2020 Quarter 1. These non-public earnings data are comparable to the 2007 and earlier surveys in PALMS and to the other source of household survey data I use, the General Household Surveys, because they do not have the earnings imputations present in the public data. I also analyze the public QLFS earnings with imputations separately and compare them with both the non-public QLFS in PALMS and the GHS.3

The other source of household survey data I use is the General Household Surveys (GHS) from 2002 to 2019 (Statistics South Africa, 2002–2019). Surprisingly, researchers have not used the GHS as a source of earnings data in South Africa. It allows comparisons with the potentially unreliable public QLFS: it has very similar questions about earnings to the LFS and QLFS, and in some years has the same or overlapping sample clusters as the QLFS. In Appendix S1 I describe the earnings questions asked in the PALMS and GHS surveys, give details about item non-response, and explain how I process the earnings data, take into account the sample design and impute for item non-response.

3.2 Administrative tax data

The administrative data I use come from the South African Revenue Service (SARS) for the 2011–2020 tax years (National Treasury and UNU-WIDER, 2022). The tax year runs from March in the previous calendar year until the end of February.4 Any individual earning more than R2,000 per year who works in a firm registered for Pay as You Earn (PAYE) tax should be issued an IRP5 certificate by each employer they worked for during the year, so individuals can have multiple certificates. The microdata contain information from every IRP5 certificate issued. There is no top coding of earnings and all individuals are included (i.e. there is no sampling in the data I use). In Appendix S1 I provide further details of how I process the tax data.
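Because individuals can hold several IRP5 certificates in one tax year, person-level earnings are the sum across certificates. The sketch below assumes hypothetical certificate fields (`person_id`, `period_end`, `earnings`) rather than the actual variable names in the SARS data, and labels each tax year by the calendar year in which it ends, so that the 2011 tax year runs from March 2010 to February 2011.

```python
from collections import defaultdict
from datetime import date


def tax_year(d: date) -> int:
    """Tax year N runs from 1 March of year N-1 to the end of February of year N."""
    return d.year + 1 if d.month >= 3 else d.year


def total_earnings_by_person(certificates):
    """Sum earnings over all IRP5 certificates (one per employer) for each person and tax year.

    Each certificate is a dict with hypothetical keys 'person_id', 'period_end' (a date)
    and 'earnings' (assumed here to already exclude pension contributions).
    """
    totals = defaultdict(float)
    for c in certificates:
        key = (c["person_id"], tax_year(c["period_end"]))
        totals[key] += c["earnings"]
    return dict(totals)
```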

I use two different groups of individuals in my analysis of the tax data. The first is those employed in the first 2 weeks of the tax year (the first 2 weeks of March). I use this group because it closely aligns with the survey definition of employment, which is derived from questions about employment in the week before the survey. The second group is all those employed at any point during the tax year, a much larger group. I show in my analysis that these two groups give quite different results about the extent of differences between the surveys and tax data, because one is a point-in-time measure similar to the household surveys and the other is not (Acemoglu & Autor, 2011).
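The two groups can be constructed from employment spell dates. A minimal sketch, assuming each person's spells are available as (start, end) date pairs (hypothetical inputs; the text does not describe the SARS field names) and that “the first 2 weeks” means 1 to 14 March:

```python
from datetime import date, timedelta


def overlaps(start, end, window_start, window_end):
    """True if the spell [start, end] shares at least one day with [window_start, window_end]."""
    return start <= window_end and end >= window_start


def employment_flags(spells, tax_year):
    """Return (employed_first_two_weeks, employed_any_time) for tax year N.

    spells: list of (start_date, end_date) pairs for one person (hypothetical input).
    Tax year N runs from 1 March of N-1 to the last day of February of N.
    """
    year_start = date(tax_year - 1, 3, 1)
    year_end = date(tax_year, 3, 1) - timedelta(days=1)  # 28 or 29 February
    window_end = date(tax_year - 1, 3, 14)  # assumed "first 2 weeks": 1-14 March
    first_two_weeks = any(overlaps(s, e, year_start, window_end) for s, e in spells)
    any_time = any(overlaps(s, e, year_start, year_end) for s, e in spells)
    return first_two_weeks, any_time
```

Anyone flagged in the first group is also flagged in the second, so with high worker flows the any-time count exceeds the point-in-time count, which is the gap the text describes.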

One important issue is that around two-thirds of all pension contributions were not included on IRP5 certificates before the 2017 tax year, as they were not tax deductible (Redonda & Axelson, 2021). Earnings measures that include pension contributions are therefore not comparable between tax years before 2017 and later tax years. For most of my analysis I thus use earnings that include all components except pension contributions. In Appendix S1 I provide some key results that include pension contributions.

3.3 IRP5 administrative tax data and household survey sample comparisons

To make comparisons between the household surveys and tax data one needs the same hypothetical population in both sources (Meyer et al., 2015). South African tax legislation requires that IRP5 tax certificates are issued by employers to all employees in all firms registered for Pay as You Earn (PAYE) tax, if the employee earns more than R2,000 a year (around $115 in 2022), a very small amount. There are no direct questions on employer tax registration in the QLFS, but there are enough questions to define a closely comparable population of employees: QLFS employees who (i) report that their employers contribute to the Unemployment Insurance Fund (UIF) on their behalf or who work in the public sector (public sector employers do not make UIF contributions), (ii) have a written contract, and (iii) are not domestic workers. All private companies are required to register all their employees for the UIF, so all employees who report that their employers make UIF contributions should also be issued tax certificates. I check this below.
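Under one reading of the definition above, the QLFS comparison group combines these conditions as follows. The field names are hypothetical stand-ins for the relevant QLFS questions, not actual variable names:

```python
from dataclasses import dataclass


@dataclass
class Employee:
    # Hypothetical flags standing in for the relevant QLFS responses.
    employer_contributes_uif: bool
    public_sector: bool
    written_contract: bool
    domestic_worker: bool


def in_formal_comparison_group(e: Employee) -> bool:
    """(UIF contribution or public sector) and written contract and not a domestic worker."""
    return (
        (e.employer_contributes_uif or e.public_sector)
        and e.written_contract
        and not e.domestic_worker
    )
```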

From 2010 to 2019, the period over which I undertake survey and administrative data comparisons, the GHS only asks a question about whether the organization an employee works for is “in the formal sector (registered to perform activity)”, so I am forced to use this somewhat broad definition.

Figure A1 in the Appendix shows the number of individuals in each source of data using these definitions of the formal sector. The IRP5 group including all individuals employed at any point in the year is about 20 percent larger than the group employed only in the first 2 weeks. The QLFS formal sector and the IRP5 population employed in the first 2 weeks of each tax year are similar in size from 2013 onwards, as they should be given the closely comparable hypothetical populations each is designed to capture.6 This means that comparisons of the earnings distributions in these two sources should validly compare the same population. The GHS formal sector is much larger than the QLFS formal sector, by around 20–30 percent, so comparisons between the GHS formal sector and either the QLFS formal sector or the tax data population employed in the first 2 weeks of the tax year do not compare the same populations. Unfortunately, this is the best I can do given the limited questions asked in the GHS. Tables A1 and A2 in the Appendix show the sex and age breakdowns in the QLFS, SARS and GHS data. All three show broadly similar levels and all show declines in the male share of employment. This suggests that the three groups are fairly similar, although, as noted, the GHS formal group is too large.

Once I undertake the data-cleaning discussed in the Appendix and use the QLFS formal sector definition, I should be left with a comparable population in the QLFS surveys and the IRP5 tax data population employed in the first 2 weeks of the tax year.

4 Analysis

To begin the analysis, I describe long-run changes in earnings and earnings inequality in the household survey data for all the employed from 1993 to the first quarter of 2020, just before the COVID-19 lockdowns and a shift from in-person to telephone surveying. I use PALMS (which includes the non-public QLFS earnings), the GHSs and the publicly available QLFSs. I highlight differences that emerge around 2012/2013 between the earnings distributions in the GHS and the non-public QLFS, on the one hand, and the publicly available QLFS, on the other. Next, I compare public and non-public earnings for the QLFS 2011, 2012 quarter 3, 2018, 2019 and 2020 quarter 1 to show that the imputations in the public data are very poor and are likely responsible for the differences between the two surveys. Finally, I use the administrative tax data to undertake comparisons with the household surveys for formal sector employees only. I use earnings rather than wages for most of the analysis because the GHS and the tax administrative data do not contain information on hours worked.

4.1 Earnings and earnings inequality in the household surveys

I first use the household surveys to describe trends in earnings and earnings inequality for all employed individuals (employees and self-employed, formal and informal) between 1993 and 2020 quarter 1, just before COVID-19 lockdowns and a shift from in-person to telephone surveying. The surveys conducted in the 1990s are not as reliable as those from 2001 onwards for several reasons. The sample frame used to select the sample until 1998 was from the (pre-democracy) 1991 census, where in some areas the census could not be conducted due to political violence (Christopher, 2001). The 1990s surveys also did not probe as carefully about informal employment and thus undercounted such employment (Casale et al., 2004), meaning that the earnings distribution is likely too optimistic in these surveys. The first two LFSs in 2000 and the third in 2001 counted too many workers in subsistence agriculture and informal self-employment, respectively (Kerr & Wittenberg, 2015).

The analysis includes earnings data from non-public versions of the QLFS from 2011, 2012 Q3, 2018, 2019 and Q1 2020. Because these non-public data include unimputed earnings, I can process them in a similar manner to the pre-2008 surveys and the GHS (see Appendix Section 6.1.3). The results using the non-public QLFS data are very similar to those from the GHSs conducted at the same time but very different from the public QLFS after 2012. I show in the next section that this is likely a result of poor-quality imputations undertaken by Stats SA.

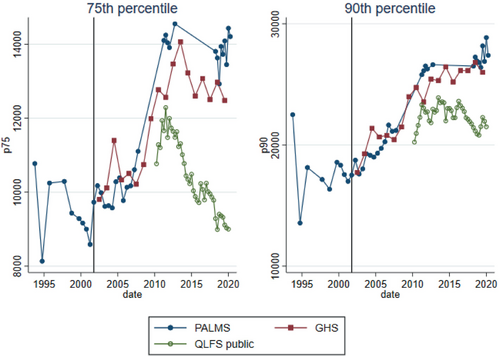

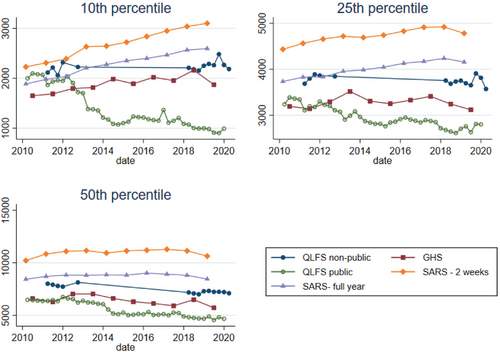

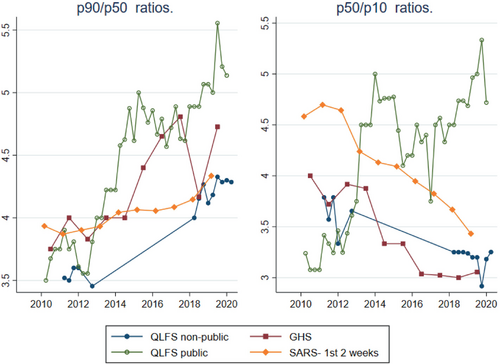

In my analysis I show changes in five percentiles (10, 25, 50, 75, 90) and then several measures of inequality: percentile ratios, Gini coefficients and the variance of log earnings.7 Because of the under-capturing of informal, low-paid work in the surveys conducted in the 1990s, I believe the trends from the early 2000s onwards in PALMS and the GHS are more reliable, and that the results from the 1990s are not comparable to those from the later surveys. Figure 1 shows that the 10th, 25th and 50th percentiles rose substantially from the early 2000s until the mid-2010s in PALMS and the GHS. Both data sources differ markedly from the public QLFS from 2012 onwards.8 Figure 2 shows that the 75th and 90th percentiles increased from the early 2000s until 2012 in the GHS and PALMS and were stagnant thereafter, whereas the public QLFS shows large declines after 2012.

Note: Black vertical line is at September 2001 — indicating that surveys on and after this date became more comparable.

Source: Own calculations from PALMS, public QLFS and GHSs.

Note: Black vertical line is at September 2001, indicating that surveys on and after this date became more comparable.

Source: Own calculations from PALMS, public QLFS and GHSs.
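The percentiles in Figures 1 and 2 are estimated from weighted survey data. As a minimal sketch (not the code used for the figures), the following Python computes the five percentiles under one common weighted-quantile convention: the smallest earnings value at which the cumulative weight share reaches the target. The convention, the function names and the example data are assumptions for illustration.

```python
def weighted_percentile(values, weights, p):
    """Smallest value whose cumulative weight share reaches p, for 0 < p <= 1.

    Assumed convention: no interpolation between observations.
    """
    if not values or len(values) != len(weights):
        raise ValueError("values and weights must be non-empty and equally long")
    if not 0 < p <= 1:
        raise ValueError("p must lie in (0, 1]")
    pairs = sorted(zip(values, weights))
    total = sum(weights)
    cumulative = 0.0
    for value, weight in pairs:
        cumulative += weight
        if cumulative / total >= p:
            return value
    return pairs[-1][0]  # guards against floating-point shortfall at p = 1


def five_percentiles(values, weights):
    """The 10th, 25th, 50th, 75th and 90th percentiles, as plotted in Figures 1 and 2."""
    return {q: weighted_percentile(values, weights, q / 100) for q in (10, 25, 50, 75, 90)}


# Hypothetical monthly earnings (rands) and survey weights, for illustration only.
earnings = [1500, 2500, 3200, 4000, 6000, 9000, 15000, 30000]
weights = [120, 80, 100, 90, 110, 70, 60, 40]
print(five_percentiles(earnings, weights))
```

Other conventions (for example, interpolating between adjacent observations) give slightly different values in small samples, but converge in samples the size of the household surveys.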

The relative changes for all five percentiles are shown in Figure A2 in the appendix, normalized to one in 2002 for all three data sources. From 2002 onwards the GHS and PALMS both clearly show the same pattern: the largest increases have been at the 10th and 25th percentiles, with smaller increases for the median, 75th and 90th percentiles. The GHS shows no growth or even declines in real earnings from around 2013 onwards, except for a little growth at the 10th percentile, and PALMS shows growth between 2012 and 2020 only for the 10th, 25th and 90th percentiles. The public QLFS data from 2010 onwards show a very different trend from the GHS and PALMS data: very large declines at all five percentiles, with the largest at the 10th percentile, where the GHS and PALMS show strong increases. Bassier and Woolard (2021) find negative growth in the QLFS for most of the bottom 95 percent of the distribution between 2010 and 2015, which Figures 1, 2 and A2 suggest is likely due to the public QLFS earnings imputations, since no such pattern is observed in the GHS and PALMS (which includes the non-public QLFS data).
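The normalization used in Figure A2 divides each percentile series by its 2002 value. A short sketch with hypothetical values (not estimates from PALMS, the GHS or the QLFS); the function names are illustrative:

```python
def rebase(series, base_year):
    """Divide every value in a {year: value} series by its value in base_year."""
    if base_year not in series:
        raise KeyError(f"base year {base_year} missing from series")
    base = series[base_year]
    return {year: value / base for year, value in sorted(series.items())}


def rebase_all(percentile_series, base_year=2002):
    """Apply rebase() to each percentile's series, e.g. {10: {...}, 50: {...}}."""
    return {p: rebase(s, base_year) for p, s in percentile_series.items()}


# Hypothetical real monthly earnings by percentile and year, for illustration only.
example = {
    10: {2002: 800.0, 2012: 1400.0},
    50: {2002: 3000.0, 2012: 3600.0},
}
print(rebase_all(example))
```

A rebased value of 1.75 for the 10th percentile in 2012 reads as a 75 percent real increase since 2002, which makes growth comparable across percentiles with very different levels.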

One important question is whether these trends provide any evidence of earnings or wage polarization. In rich countries polarization is generally taken to mean that earnings or wage growth was highest at the top, lower at the bottom and lowest in the middle (Autor et al., 2006). In PALMS since 2002 the largest increases in monthly earnings have been at the 10th and 25th percentiles and the smallest at the median, with growth at the 90th percentile higher than at the median and growth at the 75th percentile between the two. The GHS results are similar, although growth at the median and at the 75th percentile is about the same. I would thus argue that there is no strong evidence of earnings polarization since 2002. Figure A3 in the Appendix shows that broadly similar patterns hold when using PALMS data for monthly earnings for employees only and for hourly wages for employees only, implying that there is also no strong evidence of wage polarization between 2000 and 2012. This contradicts Bhorat et al. (2023), who use the public QLFS earnings data for 2000 and 2015 to argue for wage polarization and then look for explanations.

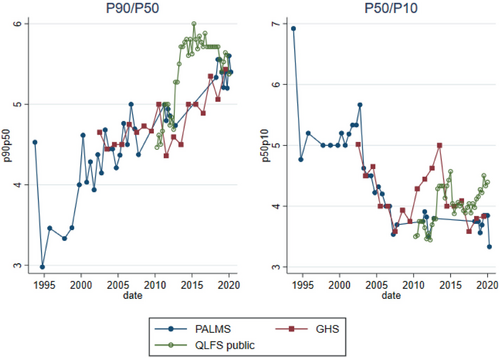

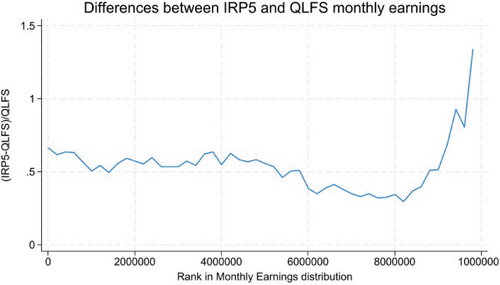

I now use the data shown in Figures 1 and 2 to describe inequality trends and levels using the p90/p50 and p50/p10 percentile ratios. Figure 3 shows that between the early 1990s and Q1 2020 the top of the distribution consistently moved further away from the middle, and the bottom consistently moved towards the middle. The changes are large in magnitude. Someone at the median in the early 2000s, when the surveys became more comparable, earned about five times as much as the person at the 10th percentile, but only around 3.3 times as much in Q1 2020, as a result of the much larger increases at the 10th percentile than at the median shown above. Someone at the 90th percentile earned around four times as much as someone at the median in the early 2000s, but around 5.5 times as much by 2020. Again, the public QLFS trends look implausible, whereas the GHS and PALMS trends look more reasonable. Figure A4 in the Appendix shows the p50/p25 and p75/p50 percentile ratios; the conclusions are broadly the same. The key result is that the bottom of the earnings distribution has moved closer to the middle throughout the post-Apartheid period, whilst the top of the distribution has moved away from the middle, a finding consistent with Wittenberg (2017b), who used household survey data up to 2011.

Source: Own calculations from PALMS, public QLFS and GHSs.
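The ratio changes described above can be translated into relative growth. For a ratio r = a/b, the change r_end/r_start equals the growth factor of a divided by that of b. So if p50/p10 falls from about 5 to about 3.3, the growth factor of the 10th percentile was about 5/3.3 ≈ 1.52 times that of the median, and if p90/p50 rises from about 4 to about 5.5, the growth factor of the 90th percentile was 5.5/4 = 1.375 times that of the median. A small Python sketch of this arithmetic, using the approximate ratios quoted in the text (function names are illustrative):

```python
def percentile_ratios(pct):
    """p50/p10 and p90/p50 from a {percentile: earnings} mapping."""
    return {"p50/p10": pct[50] / pct[10], "p90/p50": pct[90] / pct[50]}


def relative_growth_factor(ratio_start, ratio_end):
    """Growth factor of a ratio's numerator divided by that of its denominator.

    For r = a / b: (a_end / a_start) / (b_end / b_start) = r_end / r_start.
    """
    return ratio_end / ratio_start


# Approximate ratios quoted in the text for the early 2000s and Q1 2020.
p10_vs_p50 = 1 / relative_growth_factor(5.0, 3.3)  # p10 growth factor relative to the median
p90_vs_p50 = relative_growth_factor(4.0, 5.5)      # p90 growth factor relative to the median
print(f"{p10_vs_p50:.2f} {p90_vs_p50:.3f}")
```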

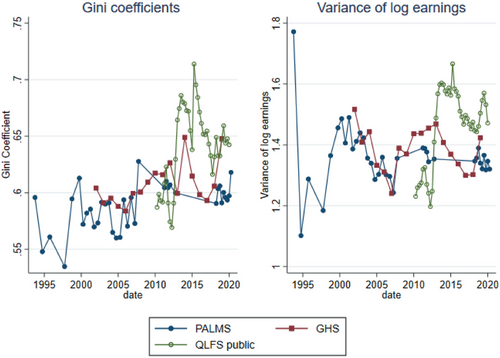

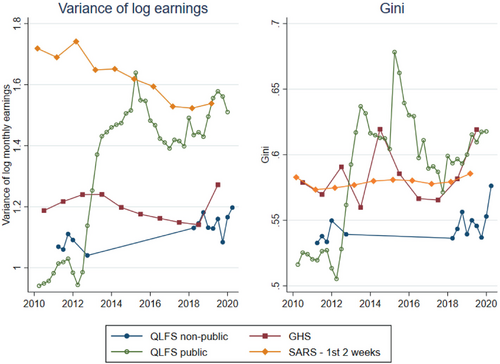

Figure 4 shows the Gini coefficient for earnings and the variance of log earnings from 1993 to 2020 using PALMS, the public QLFS and the GHS. Both measures show that earnings inequality is extremely high in South Africa. Between the early 2000s and 2019 PALMS shows an increase in the Gini of around two points. The GHS Gini is more erratic, perhaps because sparsity in top incomes can make estimates volatile over time (Jenkins, 2017), and its increase to 2019 is larger than in PALMS. The variance of log earnings in both PALMS and the GHS has declined since the early 2000s, when the surveys became more reliable. The public QLFS Gini coefficient and variance of log earnings both increase very dramatically between 2012 and 2013, and their subsequent trends are much more erratic and highly implausible.

Source: Own calculations from PALMS, public QLFS and GHSs.
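Both inequality measures in Figure 4 can be computed from weighted earnings. The following is a sketch under stated assumptions, not the paper's code: the Gini uses the trapezoid rule on the weighted Lorenz curve, and the variance of log earnings is the weighted population variance, which requires strictly positive earnings.

```python
import math


def weighted_gini(values, weights):
    """Gini coefficient via the trapezoid rule on the weighted Lorenz curve."""
    pairs = sorted(zip(values, weights))
    total_w = sum(w for _, w in pairs)
    total_y = sum(v * w for v, w in pairs)
    if total_w <= 0 or total_y <= 0:
        raise ValueError("weights and total earnings must be positive")
    cum_y = 0.0
    twice_area = 0.0  # twice the area under the Lorenz curve
    for v, w in pairs:
        prev_share = cum_y / total_y
        cum_y += v * w
        twice_area += (w / total_w) * (prev_share + cum_y / total_y)
    return 1.0 - twice_area


def weighted_var_log(values, weights):
    """Weighted (population) variance of log earnings; earnings must be positive."""
    if any(v <= 0 for v in values):
        raise ValueError("log earnings require positive earnings")
    total_w = sum(weights)
    logs = [math.log(v) for v in values]
    mean = sum(w * x for w, x in zip(weights, logs)) / total_w
    return sum(w * (x - mean) ** 2 for w, x in zip(weights, logs)) / total_w
```

It helps to keep a standard property in mind when the two measures diverge, as they do here: the variance of log earnings is comparatively sensitive to gaps at the bottom of the distribution, whereas the Gini is most sensitive to differences around the middle.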

Broadly speaking then, the analysis thus far has shown that earnings inequality in South Africa is still extremely high, making it one of the most unequal countries in the world. But the GHS and PALMS show that since the early 2000s, when the surveys became more comparable, inequality has declined when using the variance of log earnings and has increased only slightly when using the Gini coefficient (the GHS Gini is volatile, although not as much as in the public QLFS). During this period the bottom has caught up to the middle whilst the top has moved further away from the middle.

The implausible trends in earnings distributions, the Gini and variance of log earnings in the public QLFS, as well as the differences between the QLFS public data on the one hand, and the GHS and PALMS data on the other, suggest that the public QLFS earnings imputations may be to blame. In the next section I show further evidence that this is likely true, by merging the non-public and public QLFS earnings data for the same individuals in the years where I have non-public QLFS data.

4.2 QLFS earnings imputations

Earnings imputation is the usual solution to earnings item non-response. Bollinger et al. (2019) highlight that accounting for item non-response is a crucial part of measuring earnings inequality, and that not doing so can result in under-estimates of inequality. Public QLFS earnings data are imputed, but the imputation is never discussed in public documents. Kerr and Wittenberg (2021) obtained a non-public Stats SA document indicating that hotdeck imputation was used, with two sex, four race, seven education and three occupation categories used to find donors. They also obtained and used unimputed earnings data for 2011 and 2012 from Statistics South Africa and concluded that the imputations were of poor quality, because the estimated union wage premium was much lower in the public data and the variance of earnings in the union and non-union sectors in the public data was highly implausible. The analysis in the previous section showed that in 2010–2011 the earnings distribution of the public QLFS data was not too dissimilar to those of PALMS and the GHS, but that the sources diverged thereafter and were very different by 2020, suggesting that the imputation may have got worse over time. I investigate this further in this section.
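Stats SA's actual procedure is not publicly documented, so the following is only a generic sketch of a random hotdeck within the 2 × 4 × 7 × 3 = 168 cells implied by the categories listed above. The field names, the draw-with-replacement rule and leaving records in donor-less cells missing are assumptions for illustration.

```python
import random
from collections import defaultdict

# Donor-matching variables reported by Kerr and Wittenberg (2021):
# 2 sex x 4 race x 7 education x 3 occupation categories = 168 possible cells.
CELL_VARS = ("sex", "race", "education", "occupation")


def cell_of(record):
    return tuple(record[v] for v in CELL_VARS)


def hotdeck_impute(records, seed=0):
    """Random hotdeck within cells: each missing 'earnings' gets a random donor's value.

    Assumptions: donors are drawn with replacement, and records in cells
    without any donor are left missing. Input records are not modified.
    """
    rng = random.Random(seed)
    donors = defaultdict(list)
    for r in records:
        if r["earnings"] is not None:
            donors[cell_of(r)].append(r["earnings"])

    result = []
    for r in records:
        out = dict(r, imputed=False)
        if out["earnings"] is None:
            pool = donors.get(cell_of(r))
            if pool:
                out["earnings"] = rng.choice(pool)
                out["imputed"] = True
        result.append(out)
    return result


# Hypothetical records: one donor, one recipient in the same cell, one with no donor.
records = [
    {"sex": 1, "race": 1, "education": 3, "occupation": 2, "earnings": 5000},
    {"sex": 1, "race": 1, "education": 3, "occupation": 2, "earnings": None},
    {"sex": 2, "race": 4, "education": 7, "occupation": 1, "earnings": None},
]
print(hotdeck_impute(records))
```

As written, the sketch ignores any bracket a respondent reported; a bracket-aware variant would add the bracket to the cell definition or restrict donors to amounts inside it.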

In Tables 1 and 2 I show the earnings response types in the public and non-public microdata for 2011, 2012 Q3, 2018, 2019 and Q1 2020. According to the QLFS questionnaire, individuals can give an “amount,” a bracket, a “don't know” or a refusal. There are also some “other missings”: employed individuals who are in none of these four categories. Tables 1 and 2 show that the non-public data contain (as expected) “amounts,” brackets, “don't knows” and refusals, but also that between about 1 and 3.5 percent of the employed are “other missing.” In 2011 and Q3 2012 only around 56–57 percent of respondents gave an “amount,” 19 percent gave a bracket and 24 percent had no earnings information.

Table 1. Earnings response types in the non-public and public QLFS data, 2011 and Q3 2012 (percent of employed)

| Response type | 2011, non-public data | 2011, public data | Q3 2012, non-public data | Q3 2012, public data |
|---|---|---|---|---|
| Amount | 57.12 | 99.05 | 56.34 | 92.25 |
| Bracket only | 19.24 | 0.00 | 19.12 | 0.00 |
| Don't know | 9.38 | 0.00 | 10.61 | 0.00 |
| Refusal | 10.79 | 0.00 | 10.87 | 7.03 |
| Other missing | 3.48 | 0.95 | 3.06 | 0.72 |
| Total | 100 | 100 | 100 | 100 |

- Note: Data are not weighted.

- Source: Own calculations from public and non-public QLFS 2011 and Q3 2012 earnings data.

Table 2. Earnings response types in the non-public and public QLFS data, 2018/2019 and Q1 2020 (percent of employed)

| Response type | 2018/2019, non-public data | 2018/2019, public data | Q1 2020, non-public data | Q1 2020, public data |
|---|---|---|---|---|
| Amount | 49.51 | 83.39 | 49.69 | 82.85 |
| Bracket only | 20.79 | 0.00 | 21.10 | 0.00 |
| Don't know | 14.26 | 0.00 | 14.38 | 0.00 |
| Refusal | 12.61 | 16.09 | 13.60 | 16.68 |
| Other missing | 2.83 | 0.52 | 1.24 | 0.47 |
| Total | 100 | 100 | 100 | 100 |

- Note: Data are not weighted.

- Source: Own calculations from public and non-public QLFS 2018, 2019 and Q1 2020 earnings data.

In 2018, 2019 and Q1 2020 the “amount” response rate dropped to about 50 percent and roughly 20 percent gave a bracket, meaning that there was no earnings information for about 30 percent of the employed sample. Vermaak (2012) reports that there was no earnings information for around 6–10 percent of the employed in the LFSs that ran in the 2000s until 2007. This means that non-response roughly tripled between the early 2000s and 2012, and increased by a further 25 percent between 2012 and 2020. This increase is larger than the increase in earnings item non-response in the US CPS reported by Bollinger et al. (2019, p. 2145) over a nearly 30-year period, but similar to the increase in the Mexican labor force survey reported by Campos-Vazquez and Lustig (2019) between 2000 and 2017.
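The no-information shares and the growth in non-response can be recomputed directly from the non-public columns of Tables 1 and 2. With the unrounded table values, the increase is about 19 percent (Q3 2012 to Q1 2020) or 26 percent (2011 to 2018/2019), depending on the pairing, bracketing the roughly 25 percent implied by the rounded figures. Compared with Vermaak's 6–10 percent for the 2000s, the 2011 and Q3 2012 shares of around 24 percent are roughly 2.4 to 4 times as high.

```python
# Unweighted non-public QLFS shares (percent of employed) from Tables 1 and 2.
NON_PUBLIC = {
    "2011": {"amount": 57.12, "bracket": 19.24},
    "Q3 2012": {"amount": 56.34, "bracket": 19.12},
    "2018/2019": {"amount": 49.51, "bracket": 20.79},
    "Q1 2020": {"amount": 49.69, "bracket": 21.10},
}


def no_information(shares):
    """Percent of the employed with neither an amount nor a bracket."""
    return 100.0 - shares["amount"] - shares["bracket"]


missing = {period: round(no_information(s), 2) for period, s in NON_PUBLIC.items()}
print(missing)

# Relative increase in the no-information share, for two pairings of years.
print(round(missing["Q1 2020"] / missing["Q3 2012"] - 1, 2))
print(round(missing["2018/2019"] / missing["2011"] - 1, 2))
```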

Tables 1 and 2 show different patterns in the public data for the different years. In 2011 almost all responses are amounts, with no “bracket onlys,” “don't knows” or refusals, meaning that full imputation was undertaken for these categories; there is also a small group of “other missings.” In the Q3 2012 public data 7 percent of responses are indicated as refusals, a lower percentage than in the non-public data. In 2018, 2019 and Q1 2020 there are again no “don't knows” in the public data, while the share of refusals is higher than in the non-public data, which suggests that “don't knows” are imputed but refusals are not. But this conclusion is incorrect, as I show next.

To undertake further analysis, I merge the public and non-public earnings data for each respondent using the household ID and person number. In 2018–2020 the merge is perfect: all individuals are merged, and they have the same sex, race, age, industry, occupation and union status in the public and non-public datasets. In 2012 about 0.25 percent of employed individuals in the non-public data do not merge into the public data, but all those in the public data are merged, and all merged individuals have the same values for sex, race, age, industry, occupation and union status. In 2011 all observations in both the non-public and public data merge, but I was only provided with the earnings variables, the household ID and the person number, so I cannot check whether merged individuals have the same sex, race, etc. The imputed amounts in the public data are monthly earnings, so I create a monthly earnings value from the non-public data using the period over which the respondent reported earnings (monthly, weekly, etc.) and the amount reported for that period.9
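The conversion to monthly earnings uses the factors listed in footnote 9. A minimal Python sketch of that rule; the period labels are hypothetical codes, and treating monthly amounts as already monthly is an assumption (the footnote does not mention them):

```python
# Conversion factors from footnote 9: multiply the reported amount by these.
WEEKS_PER_MONTH = 4.3
FACTORS = {
    "daily": 22,               # 22 working days per month
    "weekly": WEEKS_PER_MONTH,
    "fortnightly": 2.15,
    "monthly": 1,              # assumed: monthly amounts are used as reported
    "annual": 1 / 12,
}


def to_monthly(amount, period, usual_weekly_hours=None):
    """Convert an earnings amount reported for `period` into a monthly amount."""
    if period == "hourly":
        # Hourly amounts: multiply by usual weekly hours, then by weeks per month.
        if usual_weekly_hours is None:
            raise ValueError("hourly amounts need usual weekly hours")
        return amount * usual_weekly_hours * WEEKS_PER_MONTH
    if period not in FACTORS:
        raise ValueError(f"unknown reporting period: {period!r}")
    return amount * FACTORS[period]


print(to_monthly(250, "daily"), to_monthly(30, "hourly", usual_weekly_hours=40))
```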

To explore whether the imputations are to blame for the differences between the public and non-public QLFS, I first rule out the possibility that the public earnings values are problematic for respondents who, according to the non-public data, reported actual amounts. Figure A5 in the Appendix shows that the levels and trends for the amount responses in the public data are very similar to those in the non-public data for 2011, 2012, 2018 and 2019. This implies that the differences between the public and non-public QLFS earnings distributions are driven by the imputations for bracket responders, refusals, “don't knows” and other missings.

Tables 3 and 4 also use the merged data to compare the response types for the same individuals in the public and non-public data. They show that the imputation methods are very poor.10 The main concerns are that in the public data most bracket responders are assigned imputed earnings outside the bracket they reported; that after 2011 many bracket responders are “imputed” as refusals; that a substantial share of “don't know” responses are also “imputed” as refusals; and that only some true refusals are imputed, while the others remain refusals in the public data. My conclusion from this analysis is that these poor-quality imputations, and the very large differences between the non-public and public earnings distributions and inequality measures that result from them, render the public data unusable for inequality analysis.
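The categories in Tables 3 and 4 follow mechanically from the merged records. A hedged Python sketch of that classification: the response-type labels, the tuple layout and the treatment of bracket bounds as inclusive are illustrative assumptions, not the paper's code.

```python
import math
from collections import Counter, defaultdict


def classify(nonpublic_type, bracket, public_value):
    """Public-data outcome for one merged respondent, using the Table 3/4 categories.

    nonpublic_type: 'amount', 'bracket', 'dont_know', 'refusal' or 'other_missing'
    bracket: (low, high) monthly bounds for bracket responders, else None
    public_value: imputed amount in the public file, 'refusal', or None (missing)
    """
    if public_value == "refusal":
        return "Refusal"
    if public_value is None:
        return "Other missing"
    if nonpublic_type == "amount":
        return "Reported amount"
    if nonpublic_type == "bracket":
        low, high = bracket
        inside = low <= public_value <= high  # assumed: bounds are inclusive
        return "Correct Amt imputed" if inside else "Incorrect Amt imputed"
    return "Amt imputed"  # don't know, refusal or other missing given an amount


def row_percentages(merged):
    """Row percentages by non-public response type, as in Tables 3 and 4."""
    counts = defaultdict(Counter)
    for nonpublic_type, bracket, public_value in merged:
        counts[nonpublic_type][classify(nonpublic_type, bracket, public_value)] += 1
    return {
        t: {cat: 100 * n / sum(c.values()) for cat, n in c.items()}
        for t, c in counts.items()
    }


# Hypothetical merged records: (non-public type, bracket, public value).
merged = [
    ("bracket", (2500, 3500), 3000),
    ("bracket", (2500, 3500), 8000),
    ("bracket", (2500, 3500), "refusal"),
    ("bracket", (3500, math.inf), 4200),  # open-ended top bracket
    ("dont_know", None, 4100),
]
print(row_percentages(merged))
```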

Table 3. Classification in the public data of each non-public QLFS response type, 2011 and Q3 2012 (row percentages; columns give the public-data category)

| Year | Non-public response type | Correct Amt imputed | Incorrect Amt imputed | Refusal | Amt imputed | Other missing | Row total |
|---|---|---|---|---|---|---|---|
| 2011 | Bracket only | 15.24 | 84.50 | 0.00 | 0.00 | 0.00 | 100 |
| 2011 | Don't know | 0.00 | 0.00 | 0.00 | 99.13 | 0.00 | 100 |
| 2011 | Refusal | 0.00 | 0.00 | 0.00 | 99.02 | 0.00 | 100 |
| 2011 | Other missing | 0.00 | 0.00 | 0.00 | 77.88 | 0.00 | 100 |
| Q3 2012 | Bracket only | 14.57 | 73.16 | 12.19 | 0.00 | 0.00 | 100 |
| Q3 2012 | Don't know | 0.00 | 0.00 | 21.03 | 77.68 | 0.00 | 100 |
| Q3 2012 | Refusal | 0.00 | 0.00 | 19.25 | 79.72 | 0.00 | 100 |
| Q3 2012 | Other missing | 0.00 | 0.00 | 11.94 | 65.39 | 0.00 | 100 |

- Note: Data are not weighted. Correct Amt Imputed means that the amount imputed was within the bracket the respondent gave. Incorrect Amt Imputed means that the amount imputed was not within the bracket the respondent gave. Amt Imputed means that an amount was imputed for a refusal, don't know or other missing.

- Source: Own calculations from public and non-public QLFS 2011 and Q3 2012 earnings data.

Table 4. Classification in the public data of each non-public QLFS response type, 2018/2019 and Q1 2020 (row percentages; columns give the public-data category)

| Year | Non-public response type | Correct Amt imputed | Incorrect Amt imputed | Refusal | Amt imputed | Other missing | Row total |
|---|---|---|---|---|---|---|---|
| 2018/2019 | Bracket only | 10.71 | 60.21 | 28.39 | 0.00 | 0.00 | 100 |
| 2018/2019 | Don't know | 0.00 | 0.00 | 35.20 | 64.73 | 0.00 | 100 |
| 2018/2019 | Refusal | 0.00 | 0.00 | 37.45 | 62.46 | 0.00 | 100 |
| 2018/2019 | Other missing | 0.00 | 0.00 | 14.79 | 66.98 | 18.24 | 100 |
| Q1 2020 | Bracket only | 10.40 | 59.91 | 28.44 | 0.00 | 0.00 | 100 |
| Q1 2020 | Don't know | 0.00 | 0.00 | 35.80 | 64.16 | 0.00 | 100 |
| Q1 2020 | Refusal | 0.00 | 0.00 | 39.60 | 60.31 | 0.00 | 100 |
| Q1 2020 | Other missing | 0.00 | 0.00 | 8.37 | 54.88 | 0.00 | 100 |

- Notes: Data are not weighted. Correct Amt Imputed means that the amount imputed was within the bracket the respondent gave. Incorrect Amt Imputed means that the amount imputed was not within the bracket the respondent gave. Amt Imputed means that an amount was imputed for a refusal, don't know or other missing.

- Source: Own calculations from public and non-public QLFS 2018, 2019 and Q1 2020 earnings data.

The imputation weaknesses matter for a range of other highly relevant policy issues. For example, the monthly earnings in a full-time job paying the national minimum wage (R20 an hour for most jobs) are at the 33rd percentile of the formal sector employee earnings distribution in the Q1 2020 public data, but at the 21st percentile when using the non-public data and my own hotdeck imputation. This massive difference translates to around 1.2 million formal sector workers incorrectly assumed to be earning less than the full-time monthly equivalent of the minimum wage.
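A sketch of this calculation in Python, assuming a 40-hour full-time week (a hypothetical value; the weekly hours that define a full-time job are not specified in this passage) and the 4.3 weeks-per-month factor from footnote 9. The population of 10 million is purely illustrative: a 12 percentage-point gap (33 minus 21) applied to it gives 1.2 million.

```python
WEEKS_PER_MONTH = 4.3  # as in footnote 9


def full_time_monthly_equivalent(hourly_rate, weekly_hours):
    """Monthly earnings of a full-time job at a given hourly rate."""
    return hourly_rate * weekly_hours * WEEKS_PER_MONTH


def weighted_share_below(earnings, weights, threshold):
    """Weighted share of workers earning strictly less than threshold."""
    total = sum(weights)
    return sum(w for e, w in zip(earnings, weights) if e < threshold) / total


def misclassified_headcount(share_public, share_corrected, population):
    """Workers placed below the threshold in one source but not in the other."""
    return (share_public - share_corrected) * population


# Hypothetical 40-hour week and illustrative population size.
threshold = full_time_monthly_equivalent(20, 40)
print(threshold)
print(misclassified_headcount(0.33, 0.21, 10_000_000))
```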

4.3 Earnings and earnings inequality in the IRP5 data and household surveys

Having described earnings and earnings inequality for all employed in the household surveys and documented the weaknesses of the public QLFS earnings data, I now use administrative tax data to estimate the levels of and trends in earnings and earnings inequality for employees. The tax data is available for the 2011 to 2020 tax years, so I limit the analysis of the General Household Surveys and QLFSs to this period. I include the public QLFS earnings data even though I have shown above that it is unreliable, because it has been used before to describe inequality, and to show again that its trends and levels differ dramatically from those in the tax data, the GHS and the non-public QLFS data.

To make the tax and household survey data more comparable I include only formal sector employees.11 As noted above, and shown in Figure A1, the number of employees in the tax data employed in the first 2 weeks of the tax year and the number of QLFS formal sector employees are very similar from 2013 onwards. This, together with the results in Tables A1 and A2, suggests that the hypothetical populations in the tax and QLFS formal sector data are similar. However, the number of GHS formal sector employees is too large, because the GHS has fewer questions that can be used to determine whether an employed individual would be in the hypothetical population issued a tax certificate. The QLFS and GHS “formal sector” populations are therefore not as comparable, which may explain why the differences between the two surveys are larger in this section than in Section 4.1.12

Figure A1 also shows that the number of employees in the tax data employed at any time in each tax year was much higher than the QLFS estimate of total formal sector employees, so the earnings distribution of this population is not directly comparable to the QLFS (or GHS) population estimates, which Acemoglu and Autor (2011) call “point in time” estimates. Nevertheless, I include the earnings distribution for the tax data population employed at any point in the year to demonstrate that it is very different from the distribution for those employed in the first 2 weeks of the tax year, and that comparing a point in time measure from a household survey to the population employed at any time of the year in the tax data is incorrect.
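A stylized sketch with hypothetical records shows why the two tax data populations differ: adding workers with short employment spells, who earn little over the tax year, pulls the any-time distribution down. Representing spells as day ranges within the tax year, treating days 0–13 as the first two weeks and counting any overlap with that window as "employed" are all assumptions for illustration.

```python
from statistics import median


def employed_in_window(spell, window=(0, 13)):
    """True if an employment spell (first_day, last_day) overlaps the window.

    Days are counted from the start of the tax year; the default window is
    the first two weeks. Counting any overlap as 'employed' is an assumption.
    """
    first, last = spell
    return first <= window[1] and last >= window[0]


def earnings_by_population(employees):
    """Split tax-year earnings into point-in-time and any-time populations."""
    any_time = [e for _, e in employees]
    point_in_time = [e for spell, e in employees if employed_in_window(spell)]
    return point_in_time, any_time


# Hypothetical employees: (employment spell in days, earnings over the tax year).
employees = [
    ((0, 364), 60_000),
    ((0, 364), 120_000),
    ((0, 364), 240_000),
    ((100, 130), 4_000),   # short spell, not employed at the start of the year
    ((200, 260), 8_000),
]
pit, anytime = earnings_by_population(employees)
print(median(pit), median(anytime))
```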

Figures 5 and 6 show the 10th, 25th, 50th, 75th and 90th percentiles in the surveys and the two different tax data populations. Figure A6 in the appendix shows the relative changes in these percentiles in the GHS and SARS data and Figure A7 shows the relative changes for the public and non-public QLFS data. Three key results stand out. The first is that for the comparable populations, namely the non-public QLFS formal sector employees and the SARS group employed in the first 2 weeks of the tax year, earnings at the 25th–90th percentiles are much lower in the QLFS than in the tax data, and the gap has been widening at all five percentiles. This suggests that even the household surveys with a comparable population and reliable earnings data are under-capturing earnings throughout the distribution, not just at the top, and that this has got worse over time. I previously compared the shares of formal sector employees earning less than the full-time monthly equivalent of the national minimum wage. The tax data imply that around 15 percent of employees working for tax-registered firms earned less than the full-time monthly equivalent of the hourly minimum wage in 2019, compared to 21 percent in the non-public QLFS with my imputations and 33 percent in the public QLFS in 2019.13

Notes: SARS full year is the group employed at any point in the year. SARS 2 weeks is the group employed only in the first two weeks of the tax year. Household surveys include only formal sector employees—see Appendix for formal sector definitions in each survey. Earnings expressed in December 2020 rands.

Source: Own calculations from IRP5, QLFS public, QLFS non-public and GHS data.

Notes: SARS full year is the group employed at any point in the year. SARS 2 weeks is the group employed only in the first two weeks of the tax year. Household surveys include only formal sector employees—see Appendix for formal sector definitions in each survey. Earnings expressed in December 2020 rands.

Source: Own calculations from IRP5, QLFS public, QLFS non-public and GHS data.

The tax data suggest that formal sector employees saw real earnings growth of less than 1 percent per year at the 25th and 50th percentiles, just over 1 percent per year at the 75th and 90th percentiles and around 3 percent per year at the 10th percentile. In the GHS and non-public QLFS there were declines in earnings of around 1 percent a year at the 50th percentile, roughly no growth at the 25th percentile and growth of less than 1 percent a year at the 10th, 75th and 90th percentiles. The public QLFS implies declines of between 1 and 6 percent per year, with the largest decline at the 10th percentile.
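The growth rates above are annual rates of real earnings growth. A sketch of the two steps involved, deflating to a reference price level (the figures use December 2020 rands) and annualizing, assuming compound (geometric) annualization; the CPI values and function names are hypothetical, and the paper's deflator series is not reproduced here.

```python
def deflate(nominal, cpi_period, cpi_reference):
    """Express a nominal amount in reference-period prices (e.g. December 2020 rands)."""
    return nominal * cpi_reference / cpi_period


def annualized_growth(start_value, end_value, years):
    """Compound annual growth rate between two real values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Compounding: 3 percent a year over the nine years from the 2011 to the 2020 tax year.
print(round(1.03 ** 9 - 1, 3))
```

Because rates compound, a 3 percent annual rate at the 10th percentile sustained over these nine years amounts to a cumulative real increase of roughly 30 percent.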

Bassier and Woolard (2021) showed much higher rates of income growth in tax data between 2003 and 2018 for the top percentiles, around 4–5 percent per year. There are several possible explanations for the differences between my results using tax data and theirs. The first is that for the period in which the tax data I use overlaps with theirs, they find growth rates of only around 2.5 percent per year. The second is that their data include all incomes, including capital income, which is more important at the top of the distribution: Bassier and Woolard (2021) show that share incomes increased by 15 percent per year between 2003 and 2015 for the top percentile and by 10 percent per year for the 95th percentile.14 The third is the difference in datasets: their data are derived from tax filings that include the self-employed, whereas mine include all employees issued an IRP5 certificate. Bassier and Woolard's percentiles are also calculated for the entire adult population, whereas mine are only for the employed in the formal sector, a much smaller population, which may also contribute to the differences in results.

The second key result is that the earnings percentiles in the tax data of the population employed in the first 2 weeks of the tax year, the population more comparable to the household surveys, are much higher than the distribution of those employed at any point in the year. This means that researchers using tax data on the population employed at any point in the year and comparing this to household survey “point in time” estimates (Acemoglu & Autor, 2011, p. 1049) are understating the differences between the survey and tax data.

The third key result is that the publicly available QLFS data again seems unreliable: the declines at the 10th percentile and the median are implausible. The non-public QLFS earnings data seems much better, although it still substantially under-captures earnings compared to the tax data, despite the two having very similar hypothetical populations.

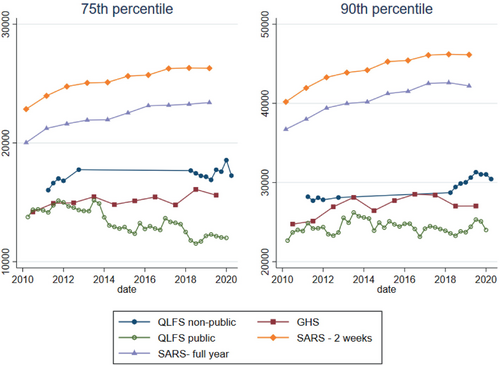

Figures 5 and 6 show only five percentiles of the earnings distribution. In Figure 7 I compare earnings throughout the distribution between the non-public QLFS Q1 2019 and the comparable group in the tax data: those employed in the first 2 weeks of the 2020 tax year (the first 2 weeks of March 2019).15 Following Wittenberg (2017c), I rank all individuals in the SARS data, create a rank for the QLFS respondents using the survey weights,16 and then compare earnings at each rank at which there is a survey respondent. The points are then joined using a local linear regression. A value of 1 means that the amount earned by the person at that rank in the tax data was 100 percent more than the amount earned by the person at the same rank in the survey. Figure 7 again shows that the differences between the QLFS and tax data are substantial. Under-capturing is highest at the top ranks, is roughly constant for the rest of the top 60 percent of earners and then declines over most of the rest of the distribution.17 Figure 7 thus again suggests that under-capturing of earnings is not limited to the top of the distribution.

Source: Own calculations for SARS and non-public QLFS.
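A hedged Python sketch of the rank-based comparison behind Figure 7. The weighted mid-point rank for survey respondents and the nearest-below lookup in the sorted tax data are assumed conventions, not necessarily those used for the figure, and the local linear smoothing step is omitted.

```python
def survey_mid_ranks(earnings, weights):
    """Weighted mid-point rank in (0, 1) for each respondent, keyed by index.

    Assumed convention: a respondent's rank is the weight share of everyone
    below them plus half of their own weight share.
    """
    order = sorted(range(len(earnings)), key=lambda i: earnings[i])
    total = sum(weights)
    cumulative = 0.0
    ranks = {}
    for i in order:
        ranks[i] = (cumulative + weights[i] / 2) / total
        cumulative += weights[i]
    return ranks


def tax_earnings_at_rank(tax_sorted, rank):
    """Earnings of the tax-data individual at a given rank (nearest-below index)."""
    index = min(int(rank * len(tax_sorted)), len(tax_sorted) - 1)
    return tax_sorted[index]


def gap_by_rank(survey_earnings, survey_weights, tax_earnings):
    """(rank, tax / survey - 1) at each survey respondent's rank, sorted by rank."""
    tax_sorted = sorted(tax_earnings)
    ranks = survey_mid_ranks(survey_earnings, survey_weights)
    return sorted(
        (r, tax_earnings_at_rank(tax_sorted, r) / survey_earnings[i] - 1)
        for i, r in ranks.items()
    )


# Hypothetical data: a gap of 1.0 means tax earnings are 100 percent higher.
print(gap_by_rank([1000, 2000], [1, 1], [1000, 2000, 3000, 4000]))
```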

Having described and compared trends and levels in earnings across the different data sources, I now turn to inequality. Figure 8 shows the p50/p10 and p90/p50 percentile ratios.18 The GHS, non-public QLFS and tax data show broadly similar trends: the bottom catching up to the middle and the top moving away from the middle. The public QLFS data produces the opposite trend in the p50/p10 ratio and larger increases in the p90/p50 ratio than the other data sources.

Source: Own calculations from IRP5, PALMS, QLFS non-public and GHS data.

Figure 9 shows the variance of log earnings and the Gini coefficient for formal sector employees in the surveys and tax data. The tax data and non-public QLFS should be the most comparable, but the variance of log earnings in the tax data is 80 percent higher than in the QLFS at the start of the period and around 40 percent higher at the end, because it declines in the tax data and rises slightly in the non-public QLFS. The Gini is around 3–5 points higher in the tax data, and this gap also narrows. The Gini in the GHS is higher and more erratic than in the non-public QLFS, whereas the GHS level and trend in the variance of log earnings are closer to those of the non-public QLFS. The public QLFS trends again seem implausible.

Source: Own calculations from IRP5, QLFS public, QLFS non-public and GHS data.

As discussed in Section 3, the tax data analysis thus far has excluded pension contributions from the definition of earnings because of a change in tax policy: before the 2017 tax year, roughly two thirds of total pension contributions were not included on the IRP5 certificates that are the source of data for the analysis. In Figures A9–A11 I redo some of the analysis including pension contributions in earnings, showing the 75th and 90th percentiles over time, a version of Figure 7 comparing SARS and QLFS across the entire earnings distribution, and the Gini coefficient and variance of log earnings. There is a clear upward shift in p75 and p90 in the 2017 tax year when all pension contributions are included, and this shows up as a larger difference between SARS and QLFS in Figure A10. Figure A11 shows that the changes in inequality due to including pensions are not large: from 2017 onwards, when all pension contributions are included on IRP5 certificates, the Gini increases by half a point and the variance of log earnings by about 5 percent.

5 Conclusion

In this paper I have used household survey and administrative tax microdata to shed light on trends in earnings and earnings inequality. The surveys in PALMS (including the non-public QLFS) and the GHS tell a broadly consistent story about changes in earnings and earnings inequality since 2002. Inequality is extremely high, but the largest increases in earnings have come at the 10th and 25th percentiles, with growth at the median much lower and growth at the 75th and 90th percentiles in between. This meant that the bottom of the distribution caught up to the middle whilst the top moved away from the middle, as Wittenberg (2017b) showed up to 2011 using OHS, LFS and public QLFS data. The variance of log earnings declined in both sets of surveys, whilst the Gini increased slightly in PALMS and more in the GHS (where it was also more erratic).

The broad consistency of PALMS and the GHS contrasts with the results from the public QLFS from 2010 onwards, which show very large declines in earnings at the bottom of the distribution and very large increases in the Gini coefficient and variance of log earnings. By comparing merged public and non-public QLFS data I have shown that the imputations in the public QLFS data appear to be extremely poor and are very likely the reason for the differences between the public QLFS on the one hand and the non-public QLFS and the public GHS on the other. This point is important for users of any source of microdata in which item non-response is a concern.

Administrative tax microdata can be used both as a check on household surveys and to improve them. My analysis of the SARS data from 2010 to 2019 showed that earnings in the household surveys are under-captured across the distribution, not just at the top as is often assumed, and that this under-capturing has increased over time. But the surveys and tax data show some similar trends: earnings growth was lowest in the middle and higher at the top and bottom, meaning that the bottom caught up to the middle and the top moved away from the middle. The tax data also show the limitations of the surveys: the Gini and variance of log earnings are higher in the tax data than in the non-public QLFS, whose population is the most comparable to the tax data population.

I also showed that there are very substantial differences between the tax data earnings distributions of those employed in a two-week window at the start of the tax year and those employed at any point in the year. Because those employed for short periods are more likely to be lower earners, the earnings distribution of those employed at any point in the year is much lower than that of those employed at the start of the tax year. This matters when comparing with household surveys, which in South Africa only ask about earnings for the currently employed. Prior research in South Africa comparing surveys that ask point in time employment and earnings questions with tax data covering anyone employed in the tax year made the surveys look closer to the tax data than they really are. This issue is also relevant in any country where household surveys ask point in time questions about earnings or incomes.

South Africa is an extremely unequal society, and this is driven partly by inequality in earnings in the labor market. Using tax data I have shown that the reliable South African household surveys underestimate earnings inequality. I have also shown that careful attention needs to be paid to the various sources of earnings data to obtain an accurate description of the trends and levels of earnings and earnings inequality, and that not doing so results in erroneous conclusions about the extent of and trends in earnings inequality in South Africa.

Notes

- 1 Jenkins and Rios-Avila (2023) note that the UK Family Resources Survey and the German Legitimation of Inequality over the Life Span survey also use point in time measures.

- 2 Lillard et al. (1986) note that a similar situation occurred in the US in the first years of the CPS data releases from 1968 to 1975, when imputation flags were not included.

- 3 The public QLFS earnings data is released with the title “Labour Market Dynamics” data, although it is the earnings data collected in the QLFS and released annually, rather than each quarter with the rest of the (non-earnings) QLFS microdata.

- 4 The 2020 tax year ended at the end of February 2020, a month before COVID-19-related lockdowns were implemented.

- 5 I have not been able to find out what words the acronym IRP5 represents.

- 6 It is not clear why the QLFS formal sector population is much lower than the tax data population in 2010–2012. One possible explanation is that, in the 2010 to 2012 tax years only, the tax data population erroneously included some individuals who reported pension income and no labor income, because SARS recorded pension and labor income under the same source code in these three years. This would affect the first two years of data I analyse, which start with the 2011 tax data. This issue is discussed in Kerr (2018: 147). The large increase in the number of QLFS formal sector workers between Q1 2012 and Q2 2012 shown in Figure A1 is also puzzling.

- 7 In the figures in Section 4.1 I include the non-public QLFS as part of PALMS, to emphasize that it can be processed in a very similar way and is comparable to the pre-QLFS surveys in PALMS. The public QLFS is shown separately.

- 8 There was no earnings data collected in the QLFS in the first two years of this survey, 2008 and 2009.

- 9 I multiply daily amounts by 22, weekly by 4.3 and fortnightly by 2.15. I divide annual amounts by 12. For hourly amounts I multiply by the reported number of usual hours worked in a week and then by 4.3.

- 10 Because the non-public QLFS data produce distributions similar to those from the GHS, because Kerr and Wittenberg (2021) show how poor the public earnings data is, and because the amount responses are so similar in the public and non-public data, I assume that the non-public data classifications (e.g. refusal, don't know) are correct, although I cannot prove this.

- 11 This means that the QLFS and GHS earnings distributions shown in this section are not comparable with those discussed in Section 4.1.

- 12 As noted in Section 3, Appendix Tables A1 and A2 show the age and sex composition of the three data sources. Although the GHS formal sector population is 20% larger than the QLFS formal sector population, the age and sex compositions are fairly similar.

- 13 These percentages differ from those discussed in Section 2 because they include only employees working in the formal sector, not all employees.

- 14 A large South African bank estimates that an investment in the Johannesburg Stock Exchange All Share Index in 2009 would have earned a real return of around 5% per year over the following 10 years (https://www.investec.com/en_za/focus/investing/donot-look-back-in-anger.html).

- 15 Figure A1 shows that these populations are almost the same size in 2019.

- 16 Again, this imputes earnings for those who refused, said they did not know, or gave an earnings bracket.

- 17 The pattern changes at the very bottom of the distribution, where the gap becomes larger again. This is because the SARS data contain around 200 000 more earners than the QLFS. The very last comparison is therefore between the lowest-ranked QLFS individual and an individual in the tax data who is 200 000 people above the lowest earner in the tax data earnings distribution.

- 18 Figure A8 in the Appendix shows the p50/p25 and p75/p50 percentile ratios.
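Note 9 lists the multipliers used to convert reported earnings to monthly amounts. The sketch below applies them. The helper name `to_monthly` and the period labels are hypothetical, as is leaving monthly amounts unchanged, which the note implies but does not state. The conversion factors themselves are the ones given in the note.

```python
from typing import Optional

# Multipliers from note 9 for converting a reported amount to a monthly amount.
# "monthly": 1.0 is an added assumption (monthly amounts are left unchanged).
MONTHLY_MULTIPLIERS = {
    "daily": 22.0,
    "weekly": 4.3,
    "fortnightly": 2.15,
    "monthly": 1.0,
}

def to_monthly(amount: float, period: str,
               usual_weekly_hours: Optional[float] = None) -> float:
    """Convert a reported earnings amount to a monthly amount (hypothetical helper)."""
    if period == "annual":
        return amount / 12
    if period == "hourly":
        # Hourly pay x usual weekly hours gives weekly pay; x 4.3 gives monthly pay.
        if usual_weekly_hours is None:
            raise ValueError("hourly amounts need usual weekly hours")
        return amount * usual_weekly_hours * 4.3
    try:
        return amount * MONTHLY_MULTIPLIERS[period]
    except KeyError:
        raise ValueError(f"unknown pay period: {period!r}") from None

print(to_monthly(500, "weekly"))      # a reported weekly amount of R500
print(to_monthly(30, "hourly", 40))   # R30 an hour, 40 usual hours a week
```

Hourly amounts are the only case that needs a second survey variable, so the sketch raises an error when usual hours are missing rather than guessing them.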
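Note 17 explains why the survey and tax data comparison behaves differently at the bottom of the distribution. The toy sketch below pairs earners by rank counted from the top. Small hypothetical lists stand in for the QLFS and the tax data, with the tax list holding two extra earners in place of the roughly 200 000 in the note. The sketch ignores survey weights, which a real comparison would need to account for, and the function name is hypothetical.

```python
def rank_aligned_pairs(survey, tax):
    """Pair earners at the same rank, counting down from the top earner.

    When the tax data has more earners than the survey, the unmatched tax
    earners are the lowest ones, so the last pair compares the lowest survey
    earner with a tax earner who is still some way above the tax minimum.
    """
    survey_desc = sorted(survey, reverse=True)
    tax_desc = sorted(tax, reverse=True)
    # zip stops at the shorter list, dropping the surplus bottom tax earners.
    return list(zip(survey_desc, tax_desc))

survey = [5, 10, 3, 8]        # hypothetical survey earnings
tax = [1, 12, 4, 9, 2, 6]     # hypothetical tax earnings with two extra earners
pairs = rank_aligned_pairs(survey, tax)
extra = len(tax) - len(survey)
for rank, (s, t) in enumerate(pairs, start=1):
    print(rank, s, t, t - s)
```

Here the lowest survey earner (3) is compared with a tax earner (4) who sits `extra` places above the lowest tax earner (1), which is the situation the note describes.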
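Note 18 refers to the p50/p25 and p75/p50 percentile ratios. A minimal sketch of how such ratios can be computed from a list of earnings follows, using the standard library's `statistics.quantiles` with linear interpolation (`method="inclusive"`). The earnings values and the interpolation method are assumptions for illustration. The sketch is unweighted, whereas survey estimates would normally apply sampling weights.

```python
from statistics import quantiles

def percentile_ratios(earnings):
    """Return the p50/p25 and p75/p50 ratios of an unweighted earnings list."""
    cuts = quantiles(earnings, n=100, method="inclusive")  # 99 cut points: p1..p99
    p25, p50, p75 = cuts[24], cuts[49], cuts[74]
    return {"p50/p25": p50 / p25, "p75/p50": p75 / p50}

# Hypothetical monthly earnings in rand (order does not matter; quantiles sorts them).
EARNINGS = [4000, 12000, 2000, 6000, 3000, 8000, 2500, 5000, 3500]
print(percentile_ratios(EARNINGS))
```

Ratios like these describe the lower and upper halves of the middle of the distribution separately, so they can move in different directions even when a summary measure such as the Gini is stable.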