Call for algorithmic fairness to mitigate amplification of racial biases in artificial intelligence models used in orthodontics and craniofacial health

Abstract

Machine Learning (ML), a subfield of Artificial Intelligence (AI), is increasingly being used in Orthodontics and craniofacial health to predict clinical outcomes. Current ML/AI models are prone to accentuating racial disparities. The objective of this narrative review is to provide an overview of how AI/ML models perpetuate racial biases and how this situation can be mitigated. A narrative review of articles published in the medical literature on racial biases and the use of AI/ML models was undertaken. Current AI/ML models are built on homogeneous clinical datasets in which historically disadvantaged demographic groups, especially ethno-racial minorities, are grossly underrepresented. As a consequence, such AI/ML models perform poorly when deployed on ethno-racial minorities, thus further amplifying racial biases. Healthcare providers, policymakers, AI developers and all other stakeholders should pay close attention to each step in the pipeline of building AI/ML models, and every effort must be made to establish algorithmic fairness to redress inequities.

1 CONTEXT

In recent years, Machine Learning (ML), a subfield of Artificial Intelligence (AI), has gained much popularity and is increasingly being used to develop clinical prediction models.1, 2 The landscape of AI has matured considerably, and the deployment of AI models in routine clinical practice is a major step towards realizing precision/personalized Orthodontics.3, 4 With large increases in computing power and the availability of data from a wide variety of sources, AI and ML applications have permeated our daily lives, and there has been much excitement about the human-level performance of some state-of-the-art ML models.5 While this is being seen as the next generational leap, we have to be cognizant of the ethical concerns stemming from the use of AI/ML models.5, 6 In the following sections, we provide an overview of the current state of Orthodontics research and AI/ML models, the ethno-racial concerns stemming from the use of current AI/ML algorithms, and potential solutions to mitigate the amplification of racial biases. We used a narrative review of the existing medical literature to achieve this objective.

2 ORTHODONTICS RESEARCH LANDSCAPE

In the field of Orthodontics, we are gaining access to large amounts of data from a wide range of sources.4 Traditionally, we have used diagnostic record sets (images, photographs and diagnostic models) and narratives in electronic health records to assess outcomes. Recent advances in genomic sequencing, access to omics data and the use of personal wearable devices have enabled us to obtain more data that are non-linear and multi-modal.4 Traditional analytical models are poorly suited to analysing such multi-modal data, whereas AI/ML models can analyse them. There have been tremendous developments in AI/ML, with some state-of-the-art deep learning models capable of combining data from different input types to develop robust non-linear outcome prediction models.3 Large language models are poised to exploit the free text in electronic health record datasets, giving us a more comprehensive understanding of our data and of how best to use them to facilitate high-quality processes of care and realize excellent patient-centered outcomes.3, 7 Multiple data sources, data types and advances in AI/ML models have opened up new opportunities for studying unconventional problems in Orthodontics.

Despite several advances and the excitement about AI and ML, Orthodontics research has typically been conducted in academic settings, and the topics studied have been driven by key determinants such as sponsors' funding priorities and the interests of researchers at a particular point in time. Very few avenues exist for conducting large-scale nationwide studies that yield generalizable findings.8 Significant disparities have been shown to exist in orthodontic care utilization based on insurance status, poverty and ethnicity/race.9 Data from the Medical Expenditure Panel Survey showed that children with public insurance had fewer orthodontic visits (9.41% of all dental service visits were orthodontic visits) than those covered by private insurance (16.42% of all dental service visits).9 Black Non-Hispanic children (9.26%) and Hispanic children (12.32%) had fewer orthodontic visits as a proportion of all dental service visits than White Non-Hispanic children (16.07%) and Asian children (16.07%). Orthodontic visits decreased with increasing poverty: children living at or below 100% of the Federal Poverty Level (FPL) had fewer orthodontic visits (9.31% of all dental service visits) than those above 400% of the FPL (16.43% of all dental service visits).9 Such pronounced disparities in access to orthodontic care have tremendous ramifications for research, because patients who do not receive care do not generate clinical records. The resulting selection biases in clinical datasets further exacerbate health disparities, setting off a vicious cycle in which underrepresentation in the data leads to findings and models that serve these groups poorly. We have tended to use convenience samples from our own clinics for clinical research, and ethno-racial minorities and marginalized groups tend to be underrepresented in current Orthodontics datasets.

3 TRAINING DATASETS USED FOR AI/MACHINE LEARNING MODELS

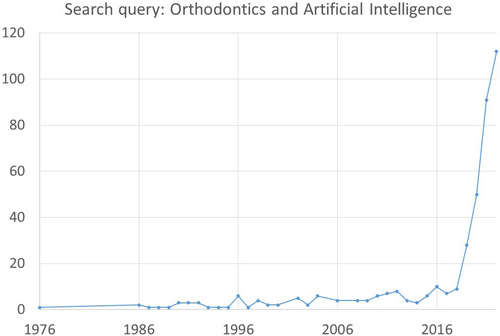

There has been a burgeoning interest in the use of AI and ML in Orthodontics research. A PubMed search using the term “Orthodontics and Artificial Intelligence” showed that 399 articles were published between 1976 and 2022, 112 of them in 2022 alone (see Figure 1). While this points to a healthy trend and shows that research enterprises in our speciality are adapting to rapidly evolving scientific priorities and interests, we have to keep in perspective that the underlying empirical framework of our speciality may not rest on healthy and unbiased grounds. In Figure 2, we provide a conceptual framework of an ML model that is typically used in Orthodontics to estimate growth potential from lateral cephalometric radiographs.10 The first step in model development is data collection. The lateral cephalometric radiographs should be readily available, accessible, interoperable with different software packages and safely stored. Related data such as subject demographics (including age, sex and race) should also be available. In the second step, the data are cleaned, curated and labelled; during this step, expert consensus is obtained to define a reference standard. The dataset is then divided into a training dataset and a test dataset. In the next step, the model is trained on the training dataset. During this stage, models are iteratively fitted with the available variables, and the combination of variables that yields the most parsimonious model is selected (feature selection stage). The model is then validated for accuracy and reproducibility in the test dataset. In the last stage, the model is deployed for real-world applications and continuously monitored, optimized and retrained as appropriate.
In practice, the steps of model development do not necessarily proceed in the linear order described above.10 Feedback loops are frequently built in, so that information from each stage can be used to refine the models continuously as more data become available.
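To make the data-splitting step more concrete, the following is a minimal Python sketch (not taken from the cited studies) of a train/test split stratified by a demographic variable, so that each race/ethnicity group appears in the test dataset in roughly the same proportion as in the full dataset. The record structure, the field name `race` and the group labels are hypothetical placeholders; in practice a library routine, such as the `stratify` argument of scikit-learn's `train_test_split`, would usually be preferred.

```python
import random
from collections import Counter, defaultdict


def stratified_split(records, key, test_fraction=0.2, seed=0):
    """Split records into training and test sets, stratified by `key`.

    Each subgroup (e.g. a race/ethnicity category) is shuffled and split
    separately, so both sets keep roughly the subgroup proportions of the
    full dataset.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible split
    by_group = defaultdict(list)
    for rec in records:
        by_group[rec[key]].append(rec)

    train, test = [], []
    for members in by_group.values():
        members = list(members)  # copy before shuffling
        rng.shuffle(members)
        n_test = round(len(members) * test_fraction)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test


def group_counts(records, key):
    """Count records per subgroup."""
    return Counter(rec[key] for rec in records)


# Hypothetical dataset: 100 radiographs from three placeholder groups.
records = (
    [{"id": i, "race": "group_A"} for i in range(60)]
    + [{"id": i, "race": "group_B"} for i in range(60, 90)]
    + [{"id": i, "race": "group_C"} for i in range(90, 100)]
)
train, test = stratified_split(records, "race", test_fraction=0.2, seed=1)
print(group_counts(train, "race"))
print(group_counts(test, "race"))
```

A stratified split only preserves the composition of the collected data; it cannot create representation that the data lack, which is why the data collection step matters most.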

AI/ML models are built on the presumption that current clinical practices are the “gold standards” and that the data on which these “gold standards” were built are free from biases and racial prejudices.7, 11-13 But this presumption is far from reality. AI/ML models are trained on datasets in which racial minorities and historically disadvantaged groups, who have been subject to poor access to care, discriminatory practices and biases, are grossly underrepresented.11-32 Furthermore, race and ethnicity variables have been poorly operationalized and represented in the Orthodontics literature. For example, the five most recent publications originating from the United States on “Machine Learning in Orthodontics” either reported race inconsistently or did not report it at all (Table 1).33-37 In two of the five studies, information on race was missing for over 50% of subjects and information on ethnicity was missing for over 70% of subjects. There was also wide variation in whether and how race was included in the ML models.33-37 Racial categories have historically been difficult to define, and the inherent diversity within racial groups has not been well delineated or accounted for in prior research.22 The effects of upstream factors, such as social, behavioural, economic, educational and structural determinants of health, which have a significant confounding effect on associations involving race, have not been systematically studied and parsed out.18-22

Table 1. Reporting of race and ethnicity in the five most recent publications from the United States on machine learning in Orthodontics.

| Authors | Title of study | Reporting of race | Categories of race reported | Reporting of ethnicity | Race and ethnicity accounted for in the models |
|---|---|---|---|---|---|
| Taraji et al. | Novel machine learning algorithms for prediction of treatment decisions in adult patients with class III malocclusion | Yes | White, Hispanic, Black or African American, Asian, Other | Yes | Yes |
| Maston et al. | A machine learning model for orthodontic extraction/non-extraction decision in a racially and ethnically diverse patient population | Yes | White, Black, Asian, Native, Other, Not available (59.5% of study subjects did not have information on race) | Yes (71.5% of study subjects did not have information on ethnicity) | Yes |
| Leavitt et al. | Can we predict orthodontic extraction patterns by using machine learning? | Yes | White, Black, Asian, Native, Other, Not available (77% of study subjects did not have information on race) | Yes (74.9% of study subjects did not have information on ethnicity) | Yes |
| Mackie et al. | Quantitative bone imaging biomarkers and joint space analysis of the articular Fossa in temporomandibular joint osteoarthritis using artificial intelligence models | No | None | No | No |
| Lee et al. | A novel machine learning model for class III surgery decision | No | None | No | No |

In recent years, there has been much progress towards mandating the reporting of more granular details on race and ethnicity, sex/gender and age in human studies. National Institutes of Health (NIH) awardees are now required to report individual-level study participant data in annual progress reports. The NIH provides extensive guidance on how race and national origin are to be reported at https://www.nih.gov/nih-style-guide/race-national-origin. The NIH racial and ethnic categories are: American Indian or Alaska Native; Asian; Black or African American; Hispanic or Latino; Native Hawaiian or Other Pacific Islander; and White.38
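Reporting against a fixed set of categories also makes missing information visible, which was the main problem in Table 1. The minimal Python sketch below tallies a dataset's race/ethnicity values against the six NIH categories listed above, counting missing and unmatched entries separately rather than silently dropping them. The exact category spellings a dataset uses, and the example values, are assumptions for illustration; real data would usually need an explicit mapping step first.

```python
from collections import Counter

# The six categories listed in the text; how a given dataset spells them is
# an assumption, so raw values may need mapping before this check.
NIH_CATEGORIES = (
    "American Indian or Alaska Native",
    "Asian",
    "Black or African American",
    "Hispanic or Latino",
    "Native Hawaiian or Other Pacific Islander",
    "White",
)


def summarize_race_reporting(values):
    """Tally recorded race/ethnicity values against the NIH categories.

    Missing values (None or blank) and values that match no category are
    counted separately instead of being silently dropped.
    """
    counts = Counter()
    unmapped = Counter()
    missing = 0
    for value in values:
        if value is None or not str(value).strip():
            missing += 1
        elif value in NIH_CATEGORIES:
            counts[value] += 1
        else:
            unmapped[value] += 1
    total = len(values)
    return {
        "counts": dict(counts),
        "unmapped": dict(unmapped),
        "missing": missing,
        "pct_missing": 100.0 * missing / total if total else 0.0,
    }


# Hypothetical column from a clinical dataset.
summary = summarize_race_reporting(
    ["White", "Asian", None, "", "native", "Black or African American"]
)
print(summary)
```

Here the unmatched value `"native"` is surfaced for review instead of being guessed into a category.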

4 ALGORITHMIC BIASES

Algorithmic biases can be introduced by the ML approaches currently being used to study Orthodontics-related questions, and these deserve closer attention. Typically, AI developers build models using a supervised ML approach, in which a training dataset with labelled outcomes is used.10 The outcomes are typically labelled by “content experts”, who may themselves have implicit biases owing to their backgrounds. A further challenge is that the training datasets lack racially and ethnically diverse subjects. When AI/ML models are built on training datasets without appropriate representation of traditionally disadvantaged groups and underrepresented ethno-racial minorities, they can scale up healthcare disparities and racial biases.21-26 Erroneous predictions may occur when an AI/ML model trained on a general population is inappropriately applied to a specific high-risk demographic or population subgroup (for example, Black or uninsured cohorts), because the data the model encounters in deployment differ from the data on which it was trained (“distribution shift”).23 AI/ML models that appear unbiased in the general population have been shown to exacerbate existing biases when applied in a different setting and context.21-26 The training datasets should therefore represent the population subgroups in which the AI/ML model will be used. Unfortunately, training datasets in Orthodontics lack representation of racial minorities, and hence there is a high risk of introducing algorithmic biases. Another major drawback of clinical datasets used in Orthodontics is that they are siloed (due to concerns about patient privacy, HIPAA privacy rules, etc.), and there are currently no broad standards for describing clinical datasets used to develop AI/ML models. Journals do not require any description of data standards either. Consequently, the clinical datasets used for research are not transparent and in most situations are extremely opaque.
The black-box nature of AI/ML algorithms further accentuates the problems stemming from the lack of dataset transparency and perpetuates healthcare disparities. There is considerable evidence that AI/ML models have performed poorly when applied to diverse populations and settings, and this is particularly pronounced in racial minorities, thus perpetuating racial bias and inequity.24-26
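Whether a training dataset represents the population in which a model will be used can be checked before any model is trained. The following minimal Python sketch compares subgroup shares in a training sample with shares in a target population, flags subgroups whose training share falls below a chosen fraction of their target share, and summarizes the overall mismatch as a total variation distance. The group labels, the counts and the 0.8 threshold are arbitrary illustrative assumptions, not recommendations from the cited literature.

```python
from collections import Counter


def proportions(labels):
    """Share of each subgroup in a list of labels."""
    total = len(labels)
    return {group: n / total for group, n in Counter(labels).items()}


def representation_gaps(train_labels, target_labels, min_ratio=0.8):
    """Compare subgroup shares in the training data with the target population.

    Returns a report {group: (train_share, target_share, ratio)} and the
    groups whose training share is below `min_ratio` times their target share.
    """
    p_train = proportions(train_labels)
    p_target = proportions(target_labels)
    report, flagged = {}, []
    for group in sorted(set(p_train) | set(p_target)):
        tr = p_train.get(group, 0.0)
        tg = p_target.get(group, 0.0)
        ratio = tr / tg if tg > 0 else float("inf")
        report[group] = (tr, tg, ratio)
        if tg > 0 and ratio < min_ratio:
            flagged.append(group)
    return report, flagged


def total_variation_distance(train_labels, target_labels):
    """Half the sum of absolute differences in subgroup shares (0 = identical)."""
    p = proportions(train_labels)
    q = proportions(target_labels)
    groups = set(p) | set(q)
    return 0.5 * sum(abs(p.get(g, 0.0) - q.get(g, 0.0)) for g in groups)


# Hypothetical training sample versus the population where the model will be used.
train_labels = ["group_A"] * 80 + ["group_B"] * 15 + ["group_C"] * 5
target_labels = ["group_A"] * 60 + ["group_B"] * 30 + ["group_C"] * 10
report, flagged = representation_gaps(train_labels, target_labels)
print(flagged)  # ['group_B', 'group_C']
print(round(total_variation_distance(train_labels, target_labels), 3))  # 0.2
```

A check like this addresses only representation; a well-represented group can still be served poorly if its labels or features are biased.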

5 ALGORITHMIC FAIRNESS

Healthcare providers, researchers, model developers, policymakers and other stakeholders must pay close attention to the AI/ML model-building pipeline, which includes several steps: identification of the problem; establishing study objectives; selection of the study population; collection of data; curating and cleaning datasets; feature/variable selection; defining outcomes; model training; model validation; deployment of the model in the real world; and model monitoring and optimization.10 Careful consideration must be given to each stage of the pipeline to prevent biases. Algorithmic fairness should be implemented to prevent discrimination on the basis of race and other socio-demographic constructs.12 However, Orthodontics is at a nascent stage when it comes to implementing algorithmic fairness principles, and we should ensure that AI/ML models are developed and implemented in a manner that upholds them.
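Algorithmic fairness can be operationalized in several ways, and the text does not prescribe a particular metric. As one illustration, using two widely used group-fairness definitions swapped in here, the Python sketch below computes each group's selection rate (compared across groups for demographic parity) and true positive rate (compared across groups for equal opportunity), then reports the largest between-group gap. The outcome labels, predictions and group names are hypothetical; which metric is appropriate depends on the clinical question.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate P(pred=1) and true positive rate P(pred=1 | true=1).

    y_true and y_pred are sequences of 0/1 labels; groups gives each
    subject's (hypothetical) group label.
    """
    tallies = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "true_pos": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = tallies[g]
        s["n"] += 1
        s["pred_pos"] += p
        if t == 1:
            s["pos"] += 1
            s["true_pos"] += p
    rates = {}
    for g, s in tallies.items():
        tpr = s["true_pos"] / s["pos"] if s["pos"] else None  # undefined without positives
        rates[g] = {"selection_rate": s["pred_pos"] / s["n"], "tpr": tpr}
    return rates


def max_gap(rates, metric):
    """Largest between-group difference in a metric (0 means parity)."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else None


# Hypothetical outcome labels (1 = outcome present) and model predictions.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["group_A"] * 4 + ["group_B"] * 4
rates = group_rates(y_true, y_pred, groups)
print("demographic parity gap:", max_gap(rates, "selection_rate"))  # 0.5
print("equal opportunity gap:", max_gap(rates, "tpr"))  # 0.5
```

In this toy example the model detects the outcome in every true case in group_A but in only half of the true cases in group_B, which is the kind of disparity an aggregate accuracy figure would hide.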

First, a diverse workforce that brings in different points of view is critical to mitigating implicit biases in the study team.28 The importance of diversity cannot be overemphasized, and there is a national reckoning with this issue. We should develop a pipeline of a diverse Orthodontics workforce that is sensitized to the importance and impact of race on a myriad of clinical outcomes. It is heartening that organizations such as the American Association of Orthodontists Foundation have issued calls for proposals to conduct research on racial biases. In recent years, an increasingly diverse student body has been admitted to residency programs, and the faculty workforce is also becoming more diverse.

As described in the above sections, a key determinant of algorithmic biases is the quality of the datasets used to train AI/ML models: AI/ML models can only be as good as the data from which they are built. Researchers should ensure that datasets are not homogeneous and have good representation of various socio-demographic groups. It could be advantageous to develop race-specific datasets that encompass traditionally underrepresented ethno-racial minorities. There is a need for race/ethnicity-specific models in Orthodontics, as these can help prevent representation bias, and race/ethnicity-specific AI/ML models (built on race/ethnicity-specific training datasets) are likely to predict outcomes in racial minorities more accurately than general AI/ML models (built on training datasets with subjects from all races). Race-specific AI/ML models have been shown to perform as well as or better than models in which race is used as a covariate.24, 29, 30

Orthodontic datasets are hampered by considerable amounts of missing data, especially on race and socio-economic factors.34-37 Researchers should consider using imputation strategies to account for missing information. Certain combinations of variables, such as insurance type, health status and socioeconomic status, might be predictive of race and ethnicity, and a two-stage model can be developed: in the first stage, a propensity scoring approach is used to obtain a continuous or categorical score for missing race; in the second stage, this score (predicted race and ethnicity) is used as a covariate in the model predicting a particular outcome. Other strategies, such as the Bayesian Improved Surname Geocoding (BISG) method and the missing indicator method, can also be used to account for missing information on race and ethnicity.39
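Two of the strategies named above can be sketched briefly. The Python block below shows (1) the Bayesian update at the core of BISG, which combines a surname-based probability P(race | surname) with a geography-based probability P(area | race), assuming surname and area of residence are conditionally independent given race; and (2) a missing-indicator encoding, which keeps records with missing race in the analysis by adding an explicit indicator column. The probabilities and group labels are hypothetical; real BISG implementations draw on census surname and geography tables that are not included here. A BISG posterior could serve as the first-stage score in the two-stage approach described above, although that substitutes a different first-stage predictor for the propensity model the text describes.

```python
def bisg_posterior(p_race_given_surname, p_geo_given_race):
    """Bayesian Improved Surname Geocoding (BISG) update.

    p_race_given_surname: {race: P(race | surname)}
    p_geo_given_race:     {race: P(area of residence | race)}
    Returns {race: P(race | surname, area)}, assuming surname and area are
    conditionally independent given race.
    """
    unnormalized = {
        race: p * p_geo_given_race.get(race, 0.0)
        for race, p in p_race_given_surname.items()
    }
    z = sum(unnormalized.values())
    if z == 0:
        raise ValueError("inputs assign zero probability to every category")
    return {race: v / z for race, v in unnormalized.items()}


def missing_indicator_encode(value, categories):
    """One-hot encode race/ethnicity with an explicit 'missing' indicator.

    Records with missing race are kept (all category columns 0, race_missing 1)
    instead of being dropped from the analysis.
    """
    is_missing = value is None or str(value).strip() == ""
    row = {f"race_{c}": int(not is_missing and value == c) for c in categories}
    row["race_missing"] = int(is_missing)
    return row


# Hypothetical probabilities; real BISG uses census surname and geography tables.
posterior = bisg_posterior(
    {"group_A": 0.5, "group_B": 0.5},      # P(race | surname)
    {"group_A": 0.001, "group_B": 0.003},  # P(area | race)
)
print(posterior)  # approximately {'group_A': 0.25, 'group_B': 0.75}
print(missing_indicator_encode(None, ["group_A", "group_B"]))
# {'race_group_A': 0, 'race_group_B': 0, 'race_missing': 1}
```

Imputed race is a probabilistic estimate, not self-reported identity, so its use and its uncertainty should be reported alongside any results.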

Our speciality should clearly establish patient-centered outcomes rather than emphasizing outcomes established by the healthcare system, and AI/ML algorithms should then be developed in concordance with these patient-centered outcomes. Such an approach could redress some of the racial inequities that have been historically perpetuated by the healthcare system and current AI/ML models.26, 31 Unfortunately, published research on traditionally disenfranchised cohorts, and on the outcomes that are in their best interest, is underrepresented. These groups have been hesitant to participate in research and contribute their data. One way to redress this issue is to conduct outreach to inform members of traditionally marginalized and disenfranchised groups about the importance of participating in research. These groups should be encouraged to serve as key stakeholders, and outcome metrics should be tailored to their specific needs. Without their participation, our research efforts and findings cannot be truly generalizable.

It is time for our speciality to develop standards for describing clinical datasets that are used to develop AI/ML models. At present, data standards that clearly describe how training data are collected, labelled, processed and curated do not exist.21 Data standards should be developed to ensure transparency of datasets and thereby promote algorithmic fairness.21, 32 Recently, there has been a push in certain specialties to establish reporting standards for ML analyses in clinical research.40 Such reporting standards can mitigate spurious or non-reproducible findings. With the burgeoning interest in ML methods in Orthodontics, our field stands to benefit greatly from journals mandating comprehensive and transparent reporting of ML methods. De-identified datasets should be made publicly available, along with a comprehensive description of the data elements in the training and validation datasets, so that they are amenable to external vetting and evaluation. AI/ML models must be externally validated before being used in clinical practice.24 Potential mismatches between the training datasets and the target datasets on which the AI/ML algorithms will eventually be deployed should be formally assessed and documented.32 Finally, AI/ML model developers should conduct and report a comprehensive assessment of all sources of algorithmic bias. Together, these recommendations can help ensure that AI/ML algorithms are developed, tested and implemented in a fair, transparent and equitable manner.
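Until such standards exist, a structured description can be kept alongside each dataset. The Python sketch below is a hypothetical, minimal record, not an established standard, whose fields mirror the elements named above: how the data were collected, labelled, processed and curated, the race/ethnicity composition including missing values, and the intended deployment population. It reports which descriptive fields were left empty and serializes the record so it could accompany a publication. All field names and example values are assumptions for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DatasetDescription:
    """Hypothetical minimal description of a training dataset.

    The fields mirror the elements the text says should be reported: how the
    data were collected, labelled, processed and curated, who is in them, and
    where the model is intended to be used. This is not an established standard.
    """
    name: str
    collection: str = ""
    labelling: str = ""
    processing: str = ""
    curation: str = ""
    intended_population: str = ""
    race_ethnicity_counts: dict = field(default_factory=dict)
    race_missing_count: int = 0

    def missing_fields(self):
        """Names of descriptive fields that were left empty."""
        text_fields = ["collection", "labelling", "processing", "curation", "intended_population"]
        return [f for f in text_fields if not getattr(self, f).strip()]

    def pct_race_missing(self):
        """Percentage of subjects with no recorded race/ethnicity."""
        total = sum(self.race_ethnicity_counts.values()) + self.race_missing_count
        return 100.0 * self.race_missing_count / total if total else 0.0

    def to_json(self):
        """Serialize the description so it can accompany a publication."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Hypothetical, deliberately incomplete description.
desc = DatasetDescription(
    name="example_cephalograms",
    collection="Retrospective records from a single university clinic",
    labelling="Landmarks traced by two orthodontists; disagreements resolved by consensus",
    race_ethnicity_counts={"White": 30, "Asian": 10},
    race_missing_count=60,
)
print(desc.missing_fields())  # ['processing', 'curation', 'intended_population']
print(desc.pct_race_missing())  # 60.0
```

Making gaps such as undocumented processing steps or 60% missing race explicit is the point of the exercise; a journal or reviewer could then ask for them to be addressed.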

6 CONCLUSIONS

As clinician scientists and educators, we have an obligation to ensure that science is done in a fair and transparent manner and that the care we provide to our patients is based on a solid empirical framework that is free from biases. In this context, we have to question the existing dogma about how race has historically been handled in our research. AI/ML models built on existing clinical datasets can exacerbate healthcare disparities, which are particularly pronounced in historically underrepresented ethno-racial groups. Future AI/ML models must be built to ensure algorithmic fairness and to unpack the biases surrounding race and associated upstream socio-economic constructs.

AUTHOR CONTRIBUTIONS

Study idea and conceptualization: Veerasathpurush Allareddy and Min Kyeong Lee. Obtaining funding: Veerasathpurush Allareddy, Min Kyeong Lee, Maysaa Oubaidin, and Mohammed H. Elnagar. Review and synthesis of literature: Veerasathpurush Allareddy, Maysaa Oubaidin, and Sankeerth Rampa. Drafting of the manuscript: Veerasathpurush Allareddy, Maysaa Oubaidin, Sankeerth Rampa, Shankar Rengasamy Venugopalan, Mohammed H. Elnagar, Sumit Yadav and Min Kyeong Lee. Final approval of manuscript: Veerasathpurush Allareddy, Maysaa Oubaidin, Sankeerth Rampa, Shankar Rengasamy Venugopalan, Mohammed H. Elnagar, Sumit Yadav and Min Kyeong Lee.

FUNDING INFORMATION

Dr. Allan G. Brodie Craniofacial Chair Endowment. Dr. Robert and Donna Litowitz Fund. American Association of Orthodontists Foundation.

CONFLICT OF INTEREST STATEMENT

None of the authors listed in the manuscript have any conflicts of interest (financial or otherwise).

ETHICAL APPROVAL

The present study is a review article and did not involve human subjects.

Open Research

DATA AVAILABILITY STATEMENT

No original data were generated; this study is a review of the existing literature.