AggregateNet: A deep learning model for automated classification of cervical vertebrae maturation stages

Abstract

Objective

A study of supervised automated classification of cervical vertebrae maturation (CVM) stages using a deep learning (DL) network is presented. We propose a parallel-structured deep convolutional neural network (CNN) with a pre-processing layer that takes X-ray images and age as inputs.

Methods

A total of 1018 cephalometric radiographs were labelled and classified according to the CVM stages. The images were separated by gender for better model fitting and were cropped automatically to the cervical vertebrae using an object detector. The cropped images and the age inputs were used to train the proposed DL model, AggregateNet, which includes a set of tunable directional edge enhancers. After the image features were extracted, the age input was concatenated to the output feature vector. Data augmentation was used to prevent the parallel network from overfitting. The performance of our CNN model was compared with that of other DL models: ResNet20, Xception, MobileNetV2 and a custom-designed CNN model with directional filters.

Results

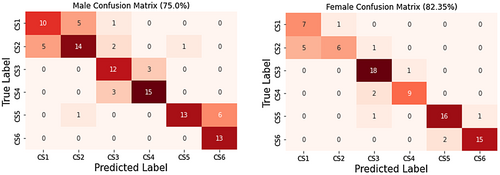

The proposed model, a parallel-structured network preceded by a pre-processing layer of edge-enhancement filters, achieved a validation accuracy of 82.35% in CVM stage classification on female subjects and 75.0% on male subjects, exceeding the accuracy achieved with the other DL models investigated. The improved performance reflects the effectiveness of the directional filters: if AggregateNet is used without them, the test accuracy decreases to 80.0% on female subjects and to 74.03% on male subjects.

Conclusion

AggregateNet together with the tunable directional edge filters produced higher accuracy than the other models we investigated for the fully automated determination of CVM stages.

1 INTRODUCTION

Assessment of skeletal maturity is crucial in treatment planning and in determining the optimal treatment timing1 (e.g. planning the appropriate time for dentofacial orthopaedics, orthognathic surgery and implants). Several methods have been used to determine skeletal maturity, including increase in body height,2 attainment of menarche or voice changes,2, 3 tooth formation and development stages,4 hand-wrist radiographic analysis5, 6 and cervical vertebrae maturation (CVM) stages.7 Hand-wrist radiographs have been proposed to estimate a patient's skeletal maturation stage with relatively high accuracy.7 However, the method has several drawbacks, including the additional radiation required to obtain hand-wrist radiographs.8 To address these limitations, CVM assessment was developed as an alternative method that assesses skeletal maturation from the lateral cephalograms used routinely for orthodontic diagnosis and treatment planning. The size and shape of the second, third and fourth cervical vertebrae can be used to estimate the maturational stage of the craniofacial region, eliminating the need for an additional radiograph.7 CVM has been classified into five maturation stages (CVM I–CVM V)9 or six maturation stages (CS1–CS6).7 Multiple studies have supported the validity and reliability of CVM staging, as well as its correlation with the hand-wrist method and with the peak of mandibular growth.8-10 However, CVM staging is usually assessed visually, which requires an experienced, well-trained radiologist or orthodontist; this dependence on expertise is the major limitation of the method. Poor reproducibility among non-expert examiners has been reported,11 although another group reported a satisfactory level of reliability after specific training in the visual assessment of CVM stages.12 Furthermore, CVM staging is not integrated into digital cephalometric software.

A fully automated diagnostic approach using machine learning (ML) has drawn attention because it could reduce human error as well as the time and effort required for the task.13 The use of ML techniques in medical imaging is evolving quickly.13 Recent research has focused on fully automated detection and categorization of CVM stages using pre-trained networks, with each study using a different data set.14, 15 The aim of the present study is to develop a fully automated ML system that detects and classifies CVM stages using a novel parallel-structured deep learning (DL) model. Our DL network has a parallel structure consisting of three sub-networks in each block, which are independently trained with different initialization parameters. The model is preceded by a set of directional filters that highlight the margins of the cervical vertebrae in X-ray images.

In this paper, we design AggregateNet, a model inspired by the aggregated residual connection. This connection, introduced in the network layers of ResNeXt, has been shown to perform well on large benchmark data sets.16 Instead of a linear connection between neurons, a non-linear function is computed by aggregated convolutional operations. AggregateNet shares two features with ResNeXt: (i) the input to a block is shared by parallel sub-networks; and (ii) the network has a multiheaded structure.

2 MATERIALS AND METHODS

2.1 Overview of the data set

Digitized X-ray images of scanned lateral cephalometric films from the American Association of Orthodontists Foundation (AAOF) Craniofacial Growth Legacy Collections, an open data source, were used in this study.17 The study was granted IRB approval (2021-0480) by the Office of Human Subjects Protection at the University of Illinois Chicago. One of the authors (M.H.E.), an experienced orthodontist and clinician scientist with more than 10 years of experience in CVM staging, examined and classified the images. The second (C2), third (C3) and fourth (C4) cervical vertebrae were clearly visible on all cephalometric radiographs used in this study, which were of adequate quality. The only exclusion criteria were poor image quality and abnormalities in the head and neck region. Cervical maturation was classified into six stages (CS1–CS6) following the recent consensus on CVM classification.7, 18 The stages are defined as follows:

- Cervical Stage 1 (CS1): the bodies of C3 and C4 are trapezoidal, and the inferior borders of C2–C4 are flat.
- Cervical Stage 2 (CS2): C2 has a noticeable notch along its inferior border, while the inferior borders of C3 and C4 are still flat and their bodies are still trapezoidal.
- Cervical Stage 3 (CS3): the inferior borders of C2 and C3 have obvious notches; the inferior border of C4 is flat, and the bodies of C3 and C4 are still trapezoidal.
- Cervical Stage 4 (CS4): the bodies of C3 and C4 are horizontal rectangles, and the inferior borders of C2–C4 show noticeable concavities.
- Cervical Stage 5 (CS5): distinguished from CS4 by a square body shape in C3 and/or C4; notches are present on all three vertebrae.
- Cervical Stage 6 (CS6): at least one of the C3 and C4 bodies has become a vertical rectangle, with an inferior border shorter than its posterior border. CS6 also appears to show greater definition of the cortical bone than CS5.

The principal evaluator (M.H.E.) repeated the classification 2 weeks later, and the intraexaminer reproducibility of the cervical vertebral stages was assessed with the weighted kappa (wk). Intraexaminer agreement was almost perfect (wk = 0.95). A second evaluator (O.S.) also classified the images, and interexaminer agreement was strong (wk = 0.90). The final data set used the classifications of the principal evaluator (M.H.E.), given M.H.E.'s greater experience.
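The weighted kappa used for the reproducibility analysis can be computed as in the minimal sketch below. The text does not state which weighting scheme was used, so the hypothetical helper `weighted_kappa` supports both linear and quadratic weights; stages CS1–CS6 are assumed to be encoded as the integers 0–5.

```python
from collections import Counter


def weighted_kappa(rater_a, rater_b, n_classes, weights="linear"):
    """Cohen's weighted kappa for two sets of ordinal labels in 0..n_classes-1.

    weights="linear" penalizes a disagreement by |i - j| / (k - 1);
    weights="quadratic" penalizes it by ((i - j) / (k - 1)) ** 2.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    if weights not in ("linear", "quadratic"):
        raise ValueError("weights must be 'linear' or 'quadratic'")
    n, k = len(rater_a), n_classes
    observed = Counter(zip(rater_a, rater_b))            # joint counts of (a, b) pairs
    marg_a, marg_b = Counter(rater_a), Counter(rater_b)  # per-rater class counts

    def penalty(i, j):
        d = abs(i - j) / (k - 1)
        return d if weights == "linear" else d * d

    pairs = [(i, j) for i in range(k) for j in range(k)]
    # Weighted disagreement actually observed between the two ratings.
    obs = sum(penalty(i, j) * observed[(i, j)] / n for i, j in pairs)
    # Weighted disagreement expected by chance if the ratings were independent.
    exp = sum(penalty(i, j) * marg_a[i] * marg_b[j] / n**2 for i, j in pairs)
    if exp == 0:
        raise ValueError("kappa is undefined when both raters use one identical class")
    return 1.0 - obs / exp


# Hypothetical ratings (not the study's data): one CS5 image re-rated as CS6.
first = [0, 1, 2, 3, 4, 5]
second = [0, 1, 2, 3, 5, 5]
kappa = weighted_kappa(first, second, n_classes=6)   # ≈ 0.919 with linear weights
```

Because the penalty grows with the distance between stages, a one-stage disagreement lowers kappa much less than a disagreement of several stages, which suits ordinal labels such as CS1–CS6.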

The size and shape of the C2, C3 and C4 vertebrae represent the fundamental distinction between the classes. Moreover, the distinction between two stages may involve only one vertebra, which can confuse a conventional CNN model. For example, CS1 and CS2 are separated only by the shape of the inferior border of the C2 vertebra.7, 12
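To make the ordinal structure of the stage definitions concrete, the sketch below encodes the textual CS1–CS6 criteria as a simplified rule over hypothetical, hand-annotated features: whether the inferior borders of C2, C3 and C4 are notched, and a coarse body-shape label for C3 and C4. It only illustrates how adjacent stages can differ in a single feature; it is not the method used by AggregateNet and not a clinical tool.

```python
def cvm_stage(notches, c3_shape, c4_shape):
    """Map simplified vertebral features to a CVM stage (illustrative only).

    notches: (C2, C3, C4) booleans, True if the inferior border is concave.
    c3_shape, c4_shape: one of the hypothetical labels
        "trapezoidal", "horizontal", "square", "vertical".
    Returns "CS1".."CS6", or None for combinations the definitions do not cover.
    """
    shapes = {c3_shape, c4_shape}
    if shapes == {"trapezoidal"}:
        if notches == (False, False, False):
            return "CS1"   # all inferior borders flat
        if notches == (True, False, False):
            return "CS2"   # only C2 notched
        if notches == (True, True, False):
            return "CS3"   # C2 and C3 notched, C4 flat
    if notches == (True, True, True):
        if "vertical" in shapes:
            return "CS6"   # at least one vertically elongated body
        if "square" in shapes:
            return "CS5"   # square C3 and/or C4
        if shapes == {"horizontal"}:
            return "CS4"   # both bodies horizontal rectangles
    return None


# CS1 and CS2 differ only in the C2 notch, as noted above.
stage_a = cvm_stage((False, False, False), "trapezoidal", "trapezoidal")
stage_b = cvm_stage((True, False, False), "trapezoidal", "trapezoidal")
```

A CNN has to learn such single-feature distinctions directly from pixels, which is one motivation for the edge-enhancing pre-processing described in Section 2.2.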

We evaluated model performance on the six-stage classification problem using the main data set, which was divided into six CVM stages (CS1–CS6). One of the authors (M.H.E.) served as the primary examiner for the entire data set of 1012 images, of which 534 were from male patients and 478 from female patients. The numbers of lateral cephalograms for stages CS1, CS2, CS3, CS4, CS5 and CS6 were 153, 182, 174, 159, 167 and 177, respectively. After a data set was formed for each gender, an approximately 4:1 ratio was used to create the training and testing sets, giving 430 training and 104 testing images for male subjects and 393 training and 85 testing images for female subjects. The images were segmented and cropped to extract the relevant region, and the final images were 77 × 35 pixels. To prevent the DL networks from overfitting the training data, various combinations of data augmentation techniques, including random translation, rotation and autocontrast, were used.
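The augmentation step can be sketched with NumPy alone, as below. The paper names the three operations but not their parameters, so the shift range, angle range, application probabilities and order used here (`max_shift`, `max_angle`, p = 0.5 each) are hypothetical, and the 77 × 35 crops are assumed to be stored as rows × columns.

```python
import numpy as np


def autocontrast(img, cutoff=0.0):
    """Linearly stretch intensities to [0, 1]; `cutoff` (percent) clips both tails."""
    lo, hi = np.percentile(img, [cutoff, 100.0 - cutoff])
    if hi <= lo:
        return np.zeros(img.shape)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)


def translate(img, dy, dx):
    """Shift img by integer (dy, dx) pixels; uncovered pixels are set to 0."""
    h, w = img.shape
    out = np.zeros((h, w))
    if abs(dy) >= h or abs(dx) >= w:
        return out
    src_rows = slice(max(0, -dy), h - max(0, dy))
    dst_rows = slice(max(0, dy), h - max(0, -dy))
    src_cols = slice(max(0, -dx), w - max(0, dx))
    dst_cols = slice(max(0, dx), w - max(0, -dx))
    out[dst_rows, dst_cols] = img[src_rows, src_cols]
    return out


def rotate(img, angle_deg):
    """Rotate about the image centre (nearest-neighbour, zero fill)."""
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up its source pixel.
    src_x = np.cos(theta) * (xx - cx) + np.sin(theta) * (yy - cy) + cx
    src_y = -np.sin(theta) * (xx - cx) + np.cos(theta) * (yy - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros((h, w))
    out[valid] = img[sy[valid], sx[valid]]
    return out


def augment(img, rng, max_shift=3, max_angle=10.0):
    """Apply a random combination of translation, rotation and autocontrast."""
    out = img.astype(float)
    if rng.random() < 0.5:
        dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
        out = translate(out, int(dy), int(dx))
    if rng.random() < 0.5:
        out = rotate(out, rng.uniform(-max_angle, max_angle))
    if rng.random() < 0.5:
        out = autocontrast(out)
    return out


rng = np.random.default_rng(0)
img = rng.random((77, 35))        # stand-in for one cropped cephalogram
augmented = augment(img, rng)     # same 77 x 35 shape, randomly perturbed
```

Drawing a fresh combination for every training image means the network rarely sees exactly the same crop twice, which is the intended defence against overfitting on a few hundred images per gender.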

Unlike traditional classification problems, the CVM classification task requires a DL model to distinguish between the shapes and sizes of the same anatomical structures in the image. We therefore designed a network tailored to CVM classification: by combining the three sub-networks of AggregateNet with directional edge filters, we aimed to enhance the differences between the classes. We used three sub-networks because each image contains three vertebrae, and directional edge filters are advantageous because the differences between stages occur in the vertebral bodies.

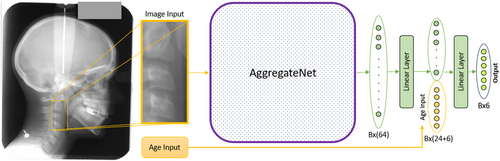

2.2 Structure of the AggregateNet

The aggregated residual connection was proposed to increase the non-linearity of a network, and it improved performance on benchmark data sets. The idea extends the residual connection by adding parallel convolutional layers to it. Inspired by this connection, we introduce AggregateNet to classify CVM stages. The model comprises two parts, feature extraction and classification, as shown in Figure 1. In feature extraction, the input image is fed to AggregateNet; in classification, the resulting feature vector is combined with the age input to obtain the result. Because the image feature vector is much larger than a single age value, the age input alone would contribute little to the decision. We therefore repeated the age input six times, adding Gaussian noise with zero mean and variance 0.1, and used an additional linear layer to reduce the dimension of the image feature vector before the output layer. The repeated age input was concatenated with the output of this first linear layer, and the combination was fed to the output layer, which uses a softmax activation to estimate the probability of each class (CS1–CS6).
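The classification head described above can be sketched as follows. The feature size (128), reduced size (16), ReLU activation, use of raw age in years, and applying the noise only during training are all assumptions for illustration; the paper specifies only the repetition count (6), the noise (zero mean, variance 0.1), the dimension-reducing linear layer and the softmax output.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D logit vector."""
    e = np.exp(z - z.max())
    return e / e.sum()


def classification_head(features, age, params, rng=None, n_repeats=6, noise_var=0.1):
    """Reduce image features, append a repeated (optionally noisy) age, and classify.

    If rng is given (training), zero-mean Gaussian noise with variance noise_var
    is added to each age copy; rng=None gives the deterministic evaluation path.
    """
    # First linear layer shrinks the image feature vector so that age is not swamped.
    reduced = np.maximum(params["W1"] @ features + params["b1"], 0.0)  # ReLU assumed
    age_vec = np.full(n_repeats, float(age))
    if rng is not None:
        # Generator.normal takes a standard deviation, so variance 0.1 -> sqrt(0.1).
        age_vec = age_vec + rng.normal(0.0, np.sqrt(noise_var), size=n_repeats)
    combined = np.concatenate([reduced, age_vec])
    logits = params["W2"] @ combined + params["b2"]
    return softmax(logits)  # probabilities for CS1..CS6


# Hypothetical sizes: 128 image features reduced to 16, plus 6 age copies.
rng = np.random.default_rng(0)
params = {
    "W1": rng.normal(0.0, 0.1, (16, 128)), "b1": np.zeros(16),
    "W2": rng.normal(0.0, 0.1, (6, 16 + 6)), "b2": np.zeros(6),
}
features = rng.random(128)
probs = classification_head(features, age=12.5, params=params, rng=rng)
```

After the reduction, age occupies 6 of the 22 inputs to the output layer instead of 6 of 134, which is the reasoning behind reducing the feature dimension before concatenation.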

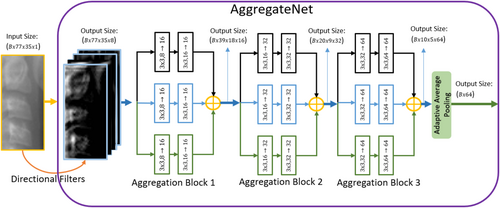

AggregateNet consists of three aggregation blocks, each containing three sub-networks, and each sub-network contains two convolutional blocks. The directional edge filters are applied as the pre-processing layer of AggregateNet. They are derived from a one-dimensional high-pass prototype filter obtained from a seventh-order half-band Lagrangian maximally flat lowpass filter; details of the filters and their derivation are given in Refs. [19, 20]. We postulated that the edge-highlighted images produced by the directional filters would aid classification. AggregateNet extracts the feature map, and the model uses two linear layers to produce the output.
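A minimal sketch of how such directional edge filters could be built and applied is shown below. It assumes the classic 7-tap maximally flat half-band Lagrange lowpass filter [-1, 0, 9, 16, 9, 0, -1]/32, a high-pass prototype obtained by modulation with (-1)^n, and directional 7 × 7 kernels formed by placing that 1-D filter along a row, column, diagonal or anti-diagonal. Refs. [19, 20] give the exact construction; in AggregateNet these kernels would only be initial values, since the filters are tunable.

```python
import numpy as np

# Assumed 7-tap maximally flat (Lagrange) half-band lowpass prototype.
H_LOW = np.array([-1.0, 0.0, 9.0, 16.0, 9.0, 0.0, -1.0]) / 32.0
# High-pass prototype by modulating with (-1)^n about the centre tap (n = -3..3).
H_HIGH = (-1.0) ** np.arange(-3, 4) * H_LOW   # = [1, 0, -9, 16, -9, 0, 1] / 32


def directional_kernels(h):
    """Place a 1-D filter along the row, column, diagonal and anti-diagonal of a k x k kernel."""
    k = len(h)
    c = k // 2
    horizontal = np.zeros((k, k))
    horizontal[c, :] = h
    vertical = np.zeros((k, k))
    vertical[:, c] = h
    return {
        "horizontal": horizontal,
        "vertical": vertical,
        "diagonal": np.diag(h),
        "anti_diagonal": np.fliplr(np.diag(h)),
    }


def filter2d(img, kernel):
    """'Same'-size 2-D correlation with zero padding (equals convolution for these kernels)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img.astype(float), ((ph, ph), (pw, pw)))
    out = np.zeros(img.shape)
    for i in range(kh):
        for j in range(kw):
            if kernel[i, j] != 0.0:
                out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


img = np.zeros((77, 35))
img[:, 18:] = 1.0                      # synthetic vertical step edge
kernels = directional_kernels(H_HIGH)
responses = {name: filter2d(img, k) for name, k in kernels.items()}
```

Because every kernel sums to zero, flat regions produce no response, while the horizontally oriented kernel reacts strongly to the vertical edge and the vertically oriented kernel ignores it; this orientation selectivity is what makes such filters useful for highlighting vertebral margins.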

Figure 2 shows the details of AggregateNet. We did not use a residual connection in AggregateNet, since the model is not deep enough for vanishing gradients to be a problem. A similar aggregated residual connection was first proposed in the ResNeXt model,16 which achieved state-of-the-art performance on a large benchmark data set, surpassing many other models in the ILSVRC classification task.21 We expected that a similar aggregation could extract the relevant features from cephalometric images, and we designed AggregateNet for the CVM classification task.
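One aggregation block can be sketched as a NumPy forward pass: three sub-networks receive the same input, each applies two convolution, batch normalization and ReLU stages, and their outputs are combined without a residual connection. Summation as the aggregation operator (as in ResNeXt), 3 × 3 kernels, eight channels and the conv, BN, ReLU ordering are assumptions; the paper does not state them, and any pooling between blocks is omitted.

```python
import numpy as np


def conv2d(x, w):
    """'Same' 2-D cross-correlation with zero padding.

    x: (N, C_in, H, W) batch; w: (C_out, C_in, k, k) with odd k. Returns (N, C_out, H, W).
    """
    n, _, h, wd = x.shape
    c_out, _, k, _ = w.shape
    p = k // 2
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))
    out = np.zeros((n, c_out, h, wd))
    for i in range(k):
        for j in range(k):
            # Contribution of kernel tap (i, j) across all input channels at once.
            out += np.einsum("oc,nchw->nohw", w[:, :, i, j], xp[:, :, i:i + h, j:j + wd])
    return out


def batch_norm(x, eps=1e-5):
    """Normalize each channel over batch and spatial axes (learned scale/shift omitted)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def sub_network(x, w1, w2):
    """Two convolutional blocks: conv -> batch norm -> ReLU, applied twice."""
    h = np.maximum(batch_norm(conv2d(x, w1)), 0.0)
    return np.maximum(batch_norm(conv2d(h, w2)), 0.0)


def aggregation_block(x, branch_weights):
    """Feed the same input to every sub-network and sum their outputs (no residual)."""
    return sum(sub_network(x, w1, w2) for w1, w2 in branch_weights)


# Hypothetical sizes: a batch of 2 single-channel 77 x 35 crops, 8 feature channels.
rng = np.random.default_rng(0)
x = rng.random((2, 1, 77, 35))
branches = [
    (rng.normal(0.0, 0.1, (8, 1, 3, 3)), rng.normal(0.0, 0.1, (8, 8, 3, 3)))
    for _ in range(3)  # three sub-networks, each with its own random initialization
]
y = aggregation_block(x, branches)
```

Since the branches start from different random weights, they tend to learn different filters from the same input, and summing them yields a richer non-linear function than a single branch of the same depth.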

In addition to AggregateNet, we evaluated ResNet20,22 MobileNetV2,23 and Xception.24 All three networks were designed for image classification and tested on large benchmark data sets. Besides these pre-trained networks, we also used the CNNDF model.25 CNNDF was designed for CVM classification on the same data collection, the AAOF Legacy Collection, and also uses directional filters as its pre-processing layer.

We used the best testing accuracy measured during the training as the evaluation metric. In Section 3, we report the accuracy results of each model and compare the different methods. Additionally, the performance of the proposed AggregateNet model is evaluated using the confusion matrix.

3 RESULTS AND COMPARISONS

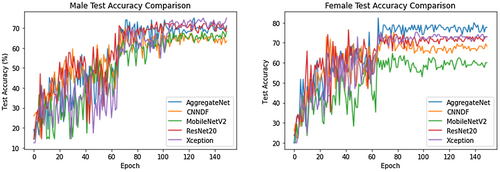

In this section, we present the results and compare the different methods and networks. Every model was trained for 150 epochs with the Adam optimizer and an initial learning rate of 0.01, which was reduced by a factor of 10 at the 62nd, 102nd and 124th epochs. With data augmentation, our AggregateNet model achieves 82.35% accuracy on female patients and 75.0% on male patients. To assess the contribution of each component, we performed ablation comparisons. Training the model without data augmentation shows the impact of augmentation, and AggregateNet without directional filters produces inferior results to the version with directional filters. Comparing the outcomes with and without the chronological age input shows the contribution of the age information. The comparison results are presented in Table 1.
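The step learning-rate schedule described above can be written as a small function. Treating the milestone numbers 62, 102 and 124 as 0-indexed epoch indices at which the reduced rate first applies is an assumption; the hypothetical helper `learning_rate` is not taken from the authors' code.

```python
def learning_rate(epoch, base_lr=0.01, milestones=(62, 102, 124), gamma=0.1):
    """Step schedule: multiply base_lr by gamma once for every milestone already reached."""
    n_drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** n_drops


# Learning rate for each of the 150 training epochs.
schedule = [learning_rate(e) for e in range(150)]
```

If PyTorch were used, `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[62, 102, 124], gamma=0.1)`, stepped once per epoch, produces the same sequence; the paper does not state which framework was used.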

| Data augmentation | Directional filters | Age input | Female accuracy | Male accuracy |
|---|---|---|---|---|
| | | | 68.23% | 58.65% |
| | | | 69.41% | 60.57% |
| ✓ | ✗ | ✓ | 80.0% | 74.03% |
| | | | 74.11% | 64.42% |
| ✓ | ✓ | ✓ | **82.35%** | **75.0%** |

- Bold indicates the highest accuracy.

The results in Table 1 show that, for both genders, the best performance is achieved when directional filters and age input are used together with data augmentation. Without data augmentation, the model overfits. The chronological age input also contributes substantially. Figure 3 compares the testing accuracy of AggregateNet with that of ResNet20, Xception, MobileNetV2 and CNNDF over the training epochs. The best result is obtained with AggregateNet. In male subjects, the second-best result is produced by Xception with 75.0%, while ResNet20 and CNNDF reach 73.07% and 70.19%, respectively. In female subjects, ResNet20, Xception and CNNDF reach 76.47%, 75.29% and 75.29%, respectively. MobileNetV2 shows the worst performance for both genders, with 68.27% on male subjects and 64.71% on female subjects.
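Because the test sets are small (85 female and 104 male images), each reported accuracy corresponds to a whole number of correctly classified images. The sketch below recovers those counts; the helper names are hypothetical, and only the test-set sizes and accuracies come from this paper.

```python
# Test-set sizes reported in Section 2.1.
N_TEST = {"female": 85, "male": 104}


def correct_count(accuracy_pct, n_test):
    """Number of correctly classified test images implied by a reported accuracy."""
    return round(accuracy_pct / 100.0 * n_test)


def points_per_image(n_test):
    """Accuracy change, in percentage points, caused by one test image."""
    return 100.0 / n_test


with_filters = (correct_count(82.35, N_TEST["female"]), correct_count(75.0, N_TEST["male"]))
without_filters = (correct_count(80.0, N_TEST["female"]), correct_count(74.03, N_TEST["male"]))
```

With these sizes, one image is worth about 1.18 percentage points on the female test set and about 0.96 on the male test set, so the gap between AggregateNet with and without directional filters corresponds to two female and one male test images.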

In addition to the accuracy comparison, we evaluated the model with confusion matrices, which show how a model's predictions are distributed across the classes and can reveal overfitting or bias toward particular classes. Figure 4 presents the confusion matrices for both genders. With one exception, the model's misclassifications occur only between adjacent stages, which is the most common type of error. If the model had performed flawlessly, we would have suspected that it had been overtrained and had memorized the data set. Instead, the error pattern indicates that AggregateNet was properly trained and performed well in testing.
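The adjacent-stage error analysis can be made explicit with a small sketch that builds a confusion matrix and splits errors by how many stages apart the true and predicted labels are. The function names and the toy labels are hypothetical and are not the study's data.

```python
def confusion_matrix(y_true, y_pred, n_classes=6):
    """cm[t][p] counts test images of true stage t predicted as stage p (stages 0..5)."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def error_breakdown(cm):
    """Split predictions into correct, adjacent-stage errors and errors of two or more stages."""
    correct = adjacent = distant = 0
    for t, row in enumerate(cm):
        for p, count in enumerate(row):
            if t == p:
                correct += count
            elif abs(t - p) == 1:
                adjacent += count
            else:
                distant += count
    return {"correct": correct, "adjacent": adjacent, "distant": distant}


# Hypothetical labels: CS1..CS6 encoded as 0..5.
y_true = [0, 1, 2, 3, 4, 5, 2]
y_pred = [0, 2, 2, 3, 3, 5, 4]
cm = confusion_matrix(y_true, y_pred)
summary = error_breakdown(cm)   # {'correct': 4, 'adjacent': 2, 'distant': 1}
```

A model whose errors fall almost entirely in the "adjacent" bucket, with very few "distant" errors, matches the pattern described for AggregateNet in Figure 4.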

4 DISCUSSION

Hand-wrist and CVM stages are the two most commonly used skeletal maturity indicators. In clinical practice, evaluation of CVM stages on the lateral cephalometric radiograph is recommended because it avoids additional radiation exposure.7 However, CVM staging depends on the examiner's experience and training. CVM classification techniques were developed using the AAOF collection, and the relationship between CVM stages and growth and development was investigated. It is important to note that no prior research has been published that focuses exclusively on using supervised AI to stage CVM development in the AAOF Legacy Collection. AI-based prediction of growth and development needs further investigation. The goal of this study was to create a fully automated pipeline for determining CVM stages.

There have been other attempts to develop a fully automated DL tool for CVM classification. Manoochehri et al. developed an attention-guided multi-scale CNN to improve performance and interpretability; the model distinguished more discriminative features, which improved performance.26 Kim et al. proposed a stepwise segmentation-based classification model, and their three-step model achieved 62.5% test accuracy.27 Atici et al. used the same data collection, and their model, CNNDF, achieves good accuracy without gender and age input: fed with the input image only, it reached a best accuracy of 75.11% on six-stage CVM classification.25 Seo et al. also compared different models,14 using several pre-trained networks to classify CVM stages and obtaining accuracies above 90% on their data set. Such divergent results are to be expected, because CVM classification performance depends heavily on the selection of the data set.

In this study, a custom CNN model was created for the CVM classification problem. AggregateNet uses aggregation blocks to increase the non-linearity of the model: instead of a linear connection between neurons, aggregated convolutional operations compute a non-linear function. Parallel networks have been shown to find the global optimum more effectively than conventional DL models.28 Since the differences among the classes arise in the vertebral bodies of C2, C3 and C4, we used three sub-networks in each aggregation block. We considered two convolutional layers followed by a batch normalization layer in each sub-network to be the best way to infer the features in the image. In addition to the input image, the chronological age was used as an input to improve the performance of the model. The data set was split by gender, since male patients may grow at a different rate than female patients; this split maximizes the usefulness of the chronological age input. The proposed AggregateNet achieves state-of-the-art performance on this data collection, with 82.35% accuracy on female patients and 75.0% on male patients. Although numerous other conventional CNN models could be used for comparison, our model outperformed the other models we investigated.

5 CONCLUSIONS

In this paper, a custom-designed CNN model is introduced to classify CVM into six maturation stages (CS1–CS6). The model is composed of two parts: feature extraction and classification. AggregateNet performs feature extraction, using directional filters as the pre-processing layer to enhance edge and shape information. A total of four aggregation blocks are then employed to extract the feature vector. Each block contains three sub-networks, and each sub-network uses two convolutional layers with batch normalization. The output feature vector of AggregateNet is concatenated with the chronological age input, and the model produces the final decision using a softmax activation function. To obtain unbiased results, the proposed model was trained and tested separately for each gender. For comparison, the performance of ResNet20, MobileNetV2, Xception and CNNDF was also investigated. Utilizing directional filters and age input is shown to improve the performance of our network.

AUTHOR CONTRIBUTIONS

Salih Furkan Atici conducted the experiment and wrote the manuscript. Rashid Ansari and Ahmet Enis Cetin conceptualized and contributed to the study design and edited the manuscript. Veerasathpurush Allareddy edited the manuscript and acquired the funding. Omar Suhaym labelled the data. Mohammed H. Elnagar contributed to the conception and study design, data labelling and wrote the manuscript.

FUNDING INFORMATION

MHE received funding support from the American Association of Orthodontists Foundation. VA received funding support from the Brodie Craniofacial Endowment. The funders had no role in study design, data collection and analysis, the decision to publish, or preparation of the manuscript.

CONFLICT OF INTEREST

The authors have no conflicts of interest to disclose.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the AAOF Craniofacial Growth Legacy Collections (https://www.aaoflegacycollection.org/aaof_home.html). Restrictions apply to the availability of these data, which were used under license for this study; the data are available with the permission of the AAOF Legacy Collection.