Geometric ergodicity and conditional self-weighted M-estimator of a GRCAR(p) model with heavy-tailed errors

Abstract

We establish geometric ergodicity for general stochastic functional autoregressive (linear and nonlinear) models with heavy-tailed errors. Stationarity conditions for a generalized random coefficient autoregressive model (GRCAR(p)) are obtained as a corollary. We then propose a conditional self-weighted M-estimator for the parameters of the GRCAR(p) model and establish its asymptotic normality while allowing innovations with infinite variance. Simulation experiments assess the finite-sample performance of the proposed methodology and theory, and a real heavy-tailed data example is given as an illustration.

1 INTRODUCTION

Model (1) with p = 1 (GRCAR(1)) was first introduced by Hwang and Basawa (1998); it includes the Markovian bilinear model and the random coefficient exponential autoregressive model as special cases. When , model (1) reduces to the ordinary autoregressive (AR(p)) model, for which a large body of theory is available. For example, Ling (2005) proposed a self-weighted least absolute deviation estimator and showed its asymptotic normality; the method has been used in many subsequent works, such as Pan et al. (2007), Pan and Chen (2013) and Pan et al. (2015). Wang and Hu (2017) proposed a self-weighted M-estimator for the AR(p) model and established its asymptotic normality. When with , and is independent of , model (1) becomes the first-order random coefficient autoregressive (RCAR(1)) model (see Nicholls and Quinn (1982)), which has been frequently used to describe random perturbations of dynamical systems in economics and biology (see Tong (1990), Yu et al. (2011), Zhang et al. (2015), Araveeporn (2017)). As a generalization of RCAR and AR models, the GRCAR model has become an important class of nonlinear time series models, since it allows dependence between the random errors and the random coefficients. Parameter estimation and asymptotic properties of GRCAR models have been studied in the literature. For instance, Hwang and Basawa (1997) established the local asymptotic normality of a class of GRCAR models. Zhao and Wang (2012) constructed confidence regions for the parameters using the empirical likelihood method. Zhao et al. (2013) considered testing the constancy of coefficients in a GRCAR model by the empirical likelihood method. Zhao et al. (2018) studied variable selection in GRCAR models. Zhao et al. (2019) proposed a weighted least squares estimator with empirical likelihood (EL) based weights that use auxiliary information for GRCAR models.
Moreover, time series models with heavy-tailed errors, even when is infinite, arise frequently in economic and financial modeling. Wu (2013) studied M-estimation for general ARMA processes with infinite variance. Yang and Ling (2017) investigated self-weighted least absolute deviation estimation for heavy-tailed threshold autoregressive models. Fu et al. (2021) studied the asymptotic properties of the conditional self-weighted M-estimator of the GRCAR(1) model with possibly heavy-tailed errors. However, general, easy-to-check stationarity conditions for GRCAR(p) models with heavy-tailed errors, and the limiting distributions of robust parameter estimators needed for statistical inference in these models, remain open problems.

This article has two aims. First, we establish the geometric ergodicity of general stochastic functional autoregressive (linear and nonlinear) models with possibly heavy-tailed error terms under a mild moment condition; the stationarity conditions for GRCAR(p) follow as a corollary of this general result. Second, motivated by Yang and Ling (2017), Ling (2005), Wang and Hu (2017) and Fu et al. (2021), we study a self-weighted M-estimator (SM-estimator) for GRCAR(p) with possibly infinite variance and show that it is asymptotically normal. Simulation results and a real data example are given to support our methodology.

The rest of this article is organized as follows. Section 2 presents the main results, Section 3 reports the simulation results, Section 4 analyzes a real data example, and Section 5 gives the proofs of the main results. Section 6 concludes.

2 MAIN RESULTS

2.1 Geometric Ergodicity

We first establish geometric ergodicity of general stochastic functional autoregressive models (linear and nonlinear) under a mild moment condition. Geometric ergodicity of model (1), which yields its stationarity conditions, is then obtained as a corollary of the main theorem.

Theorem 2.1. Suppose model (3) satisfies

Under the conditions of Theorem 2.1, we obtain the following more concrete corollary.

Corollary 2.2. Suppose model (2) satisfies

- (i)

There exists a constant vector and a satisfying (5), such that (6), and, for any , (7).

- (ii)

The density function of is continuous and positive everywhere, and for in (i).

Then, in model (2) is stationary and geometrically ergodic.

In Corollary 2.2, when , we obtain the stationarity conditions for GRCAR(p) as a further corollary of our general result.

Corollary 2.3. Suppose model (1) satisfies

- (C.1)

- (i)

, for ;

- (ii)

, for a constant ;

- (iii)

The density function of is continuous and positive everywhere, and for in (ii).

Then, in model (1) is stationary and geometrically ergodic.


Remark 1. Theorem 2.1 establishes the geometric ergodicity of general stochastic functional autoregressive (linear and nonlinear) models with possibly heavy-tailed error terms under a mild moment condition. The stationarity conditions for GRCAR(p) in Corollary 2.3 are a consequence of Theorem 2.1. The moment condition in Corollary 2.3 is very weak: we only require a finite moment of order of the error , a condition satisfied by the Cauchy distribution. The condition on the random coefficients keeps the model from departing too far from a linear AR model, which is a reasonable requirement for any (nonparametric or parametric) AR-type model.

Remark 2. The GRCAR model is a broad class of models for time series data, and some of its special cases can describe conditionally heteroscedastic structure. For example, consider the model defined as: , . The conditional mean and conditional variance of this model are

2.2 Conditional Self-weighted M-estimation

2.3 Asymptotic Normality of SM-estimation

- (C.2)

Let be a convex function on with left derivative and right derivative . Choose a function such that .

- (C.3)

Suppose that exists, has a derivative at and .

- (C.4)

and , as .

- (C.5)

is a measurable and positive function on such that , where denotes the Euclidean norm of a vector .

Theorem 2.4. Under (C.1)–(C.5), we have

Remark 3. Assumption (C.1) does not rule out the possibility that has infinite variance, or even that is infinite. Theorem 2.4 establishes the asymptotic normality of SM-estimators for the parameters of GRCAR(p) models with possibly heavy-tailed errors.

Remark 4. Assumptions (C.2)–(C.4) are traditional assumptions for M-estimation in a linear model and can be found in many references, for example, Bai et al. (1992), Wu (2007) and Wang and Zhu (2018). Examples of loss functions satisfying these assumptions include the squared loss , the absolute loss and the Huber loss, which correspond to the conditional self-weighted least-squares estimator, the conditional self-weighted least absolute deviation estimator and the conditional self-weighted Huber estimator, respectively. Assumption (C.5) is a standard condition on the weight for the self-weighted method in infinite-variance autoregressive (IVAR) models; with a properly chosen weight function , it allows to be infinite. First, the weight downweights the leverage points in , so that the covariance matrices and in Theorem 2.4 are finite. Second, the weights allow us to approximate by a quadratic form. In addition, Theorem 2.4 generalizes the results of Ling (2005), Wang and Hu (2017) and Fu et al. (2021).
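A minimal NumPy sketch of the self-weighted M-estimation idea in Remark 4 follows. It estimates only the fixed coefficient vector θ by minimizing Σ_t w_t ρ(y_t − θ'Y_{t−1}), treating the random-coefficient term as part of a composite error. Several pieces are assumptions rather than the article's definitions: the weight w_t = (1 + Σ_{i=1}^p |y_{t−i}|)^{−2} (chosen here only because it keeps w_t‖Y_{t−1}‖² ≤ 1), the Huber threshold c = 1.345 applied to unscaled residuals, and iteratively reweighted least squares (a standard solver for convex M-estimation) as the minimization algorithm. All function names are hypothetical.

```python
import numpy as np

# Loss functions from Remark 4; c is a (hypothetical) Huber threshold.
def rho_ls(r):
    return r ** 2

def rho_lad(r):
    return np.abs(r)

def rho_huber(r, c=1.345):
    a = np.abs(r)
    return np.where(a <= c, 0.5 * r ** 2, c * a - 0.5 * c ** 2)

def lagged_design(y, p):
    """Rows (y_{t-1}, ..., y_{t-p}) and targets y_t for t = p, ..., n-1."""
    n = len(y)
    X = np.column_stack([y[p - i - 1:n - i - 1] for i in range(p)])
    return X, y[p:]

def self_weights(X):
    # Hypothetical weight: guarantees w_t * ||Y_{t-1}||^2 <= 1 for every t.
    return 1.0 / (1.0 + np.abs(X).sum(axis=1)) ** 2

def objective(theta, y, p, rho):
    """Self-weighted M-objective sum_t w_t * rho(y_t - theta' Y_{t-1})."""
    X, target = lagged_design(np.asarray(y, dtype=float), p)
    return float(np.sum(self_weights(X) * rho(target - X @ theta)))

def sm_estimate(y, p, loss="huber", c=1.345, n_iter=500, tol=1e-10, eps=1e-8):
    """Minimize the objective above by iteratively reweighted least squares."""
    X, target = lagged_design(np.asarray(y, dtype=float), p)
    w = self_weights(X)

    def wls(weights):
        s = np.sqrt(weights)
        return np.linalg.lstsq(X * s[:, None], target * s, rcond=None)[0]

    theta = wls(w)  # self-weighted least squares; also the starting value
    if loss == "ls":
        return theta
    for _ in range(n_iter):
        a = np.maximum(np.abs(target - X @ theta), eps)
        if loss == "lad":
            u = 1.0 / a                  # psi(r)/r for rho(r) = |r|
        elif loss == "huber":
            u = np.minimum(1.0, c / a)   # psi(r)/r for the Huber loss
        else:
            raise ValueError(f"unknown loss: {loss!r}")
        new = wls(w * u)
        if np.max(np.abs(new - theta)) < tol:
            return new
        theta = new
    return theta
```

For instance, `sm_estimate(y, 3, loss="lad")` would give a self-weighted LAD fit of an order-3 model. In practice the Huber threshold is usually applied to residuals divided by a robust scale estimate, which this sketch omits.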

Remark 5. For the case and , take . Applying Theorem 2.4, we have

3 SIMULATION STUDIES

We conduct Monte Carlo experiments to study the finite-sample behavior of the proposed estimator, focusing on its accuracy and its sampling distribution. The results show that our method performs well.

Data are generated from the following GRCAR models:

Model A: . Consider and .

Model B: , where . Consider , and .

The true values of the parameters are in model A and , and in model B. In model A, the random coefficients are correlated with the error process, whereas in model B they are independent of it. Three error distributions are considered: has finite expectation and finite variance; has finite expectation but infinite variance; and Cauchy(0,1) has finite moments only of order . We set the sample sizes and . The number of replications is 2000.

| Bias | 0.000 | 0.000 | 0.001 | 0.001 | 0.004 | 0.005 | ||||

| SD | 0.103 | 0.084 | 0.093 | 0.077 | 0.123 | 0.118 | 0.066 | 0.065 | ||

| AD | 0.116 | 0.075 | 0.103 | 0.067 | 0.137 | 0.077 | 0.076 | 0.043 | ||

| Bias | 0.001 | 0.001 | 0.003 | 0.002 | 0.001 | 0.000 | 0.002 | 0.003 | ||

| SD | 0.072 | 0.059 | 0.065 | 0.053 | 0.086 | 0.082 | 0.044 | 0.043 | ||

| AD | 0.079 | 0.053 | 0.070 | 0.047 | 0.093 | 0.054 | 0.051 | 0.030 | ||

| Bias | 0.001 | 0.001 | 0.002 | 0.000 | 0.000 | 0.002 | 0.008 | 0.007 | ||

| SD | 0.111 | 0.090 | 0.090 | 0.111 | 0.132 | 0.125 | 0.105 | 0.099 | ||

| AD | 0.125 | 0.081 | 0.151 | 0.098 | 0.114 | 0.082 | 0.076 | 0.064 | ||

| Bias | 0.002 | 0.002 | 0.001 | 0.001 | 0.002 | 0.002 | 0.003 | 0.002 | ||

| SD | 0.078 | 0.064 | 0.064 | 0.076 | 0.092 | 0.086 | 0.044 | 0.065 | ||

| AD | 0.086 | 0.057 | 0.101 | 0.068 | 0.076 | 0.057 | 0.051 | 0.044 | ||

| Bias | 0.001 | 0.001 | 0.003 | 0.004 | 0.000 | 0.001 | 0.004 | 0.005 | ||

| SD | 0.110 | 0.089 | 0.095 | 0.078 | 0.141 | 0.134 | 0.078 | 0.075 | ||

| AD | 0.126 | 0.081 | 0.107 | 0.069 | 0.162 | 0.090 | 0.090 | 0.050 | ||

| Bias | 0.000 | 0.001 | 0.002 | 0.002 | 0.001 | 0.000 | 0.002 | 0.002 | ||

| SD | 0.078 | 0.064 | 0.067 | 0.055 | 0.099 | 0.093 | 0.053 | 0.052 | ||

| AD | 0.086 | 0.057 | 0.073 | 0.049 | 0.110 | 0.063 | 0.061 | 0.035 | ||

| Bias | 0.002 | 0.001 | 0.004 | 0.004 | 0.001 | 0.002 | 0.004 | 0.005 | ||

| SD | 0.118 | 0.096 | 0.094 | 0.078 | 0.152 | 0.144 | 0.072 | 0.070 | ||

| AD | 0.135 | 0.087 | 0.106 | 0.069 | 0.174 | 0.096 | 0.082 | 0.046 | ||

| Bias | 0.000 | 0.001 | 0.002 | 0.002 | 0.001 | 0.000 | 0.002 | 0.002 | ||

| SD | 0.083 | 0.068 | 0.066 | 0.054 | 0.106 | 0.100 | 0.048 | 0.048 | ||

| AD | 0.093 | 0.061 | 0.073 | 0.048 | 0.119 | 0.068 | 0.056 | 0.032 | ||

| Bias | 0.001 | 0.001 | 0.006 | 0.006 | 0.001 | 0.000 | 0.005 | 0.004 | 0.000 | 0.000 | 0.003 | 0.003 | ||

| SD | 0.095 | 0.075 | 0.092 | 0.076 | 0.110 | 0.106 | 0.068 | 0.064 | 0.127 | 0.133 | 0.045 | 0.044 | ||

| AD | 0.109 | 0.075 | 0.106 | 0.073 | 0.131 | 0.076 | 0.079 | 0.046 | 0.156 | 0.077 | 0.045 | 0.022 | ||

| Bias | 0.000 | 0.001 | 0.003 | 0.003 | 0.000 | 0.001 | 0.002 | 0.002 | 0.002 | 0.002 | 0.002 | 0.002 | ||

| SD | 0.066 | 0.053 | 0.065 | 0.053 | 0.078 | 0.075 | 0.048 | 0.045 | 0.090 | 0.095 | 0.031 | 0.031 | ||

| AD | 0.074 | 0.053 | 0.072 | 0.051 | 0.089 | 0.054 | 0.054 | 0.032 | 0.106 | 0.055 | 0.031 | 0.015 | ||

| Bias | 0.002 | 0.000 | 0.006 | 0.006 | 0.002 | 0.000 | 0.005 | 0.004 | 0.001 | 0.001 | 0.005 | 0.005 | ||

| SD | 0.102 | 0.081 | 0.136 | 0.110 | 0.117 | 0.112 | 0.097 | 0.093 | 0.133 | 0.138 | 0.064 | 0.064 | ||

| AD | 0.118 | 0.081 | 0.155 | 0.107 | 0.139 | 0.081 | 0.117 | 0.069 | 0.161 | 0.081 | 0.070 | 0.035 | ||

| Bias | 0.001 | 0.001 | 0.005 | 0.004 | 0.000 | 0.001 | 0.003 | 0.003 | 0.002 | 0.002 | 0.003 | 0.003 | ||

| SD | 0.071 | 0.058 | 0.095 | 0.076 | 0.083 | 0.079 | 0.070 | 0.067 | 0.093 | 0.100 | 0.044 | 0.045 | ||

| AD | 0.080 | 0.057 | 0.105 | 0.074 | 0.094 | 0.057 | 0.080 | 0.048 | 0.110 | 0.057 | 0.048 | 0.024 | ||

| Bias | 0.000 | 0.001 | 0.006 | 0.005 | 0.002 | 0.001 | 0.004 | 0.002 | 0.000 | 0.000 | 0.004 | 0.005 | ||

| SD | 0.101 | 0.079 | 0.094 | 0.077 | 0.125 | 0.121 | 0.079 | 0.073 | 0.164 | 0.175 | 0.076 | 0.073 | ||

| AD | 0.116 | 0.079 | 0.107 | 0.074 | 0.150 | 0.087 | 0.089 | 0.052 | 0.200 | 0.102 | 0.072 | 0.036 | ||

| Bias | 0.001 | 0.001 | 0.002 | 0.002 | 0.003 | 0.001 | 0.001 | 0.001 | 0.000 | 0.000 | 0.002 | 0.002 | ||

| SD | 0.070 | 0.057 | 0.065 | 0.053 | 0.090 | 0.086 | 0.055 | 0.051 | 0.115 | 0.124 | 0.051 | 0.050 | ||

| AD | 0.079 | 0.056 | 0.073 | 0.052 | 0.102 | 0.062 | 0.061 | 0.037 | 0.136 | 0.072 | 0.049 | 0.026 | ||

| Bias | 0.000 | 0.001 | 0.006 | 0.005 | 0.003 | 0.001 | 0.003 | 0.002 | 0.001 | 0.001 | 0.004 | 0.005 | ||

| SD | 0.108 | 0.085 | 0.094 | 0.077 | 0.136 | 0.131 | 0.074 | 0.068 | 0.176 | 0.188 | 0.066 | 0.062 | ||

| AD | 0.125 | 0.085 | 0.107 | 0.074 | 0.161 | 0.094 | 0.082 | 0.048 | 0.215 | 0.109 | 0.060 | 0.030 | ||

| Bias | 0.001 | 0.001 | 0.002 | 0.002 | 0.004 | 0.002 | 0.001 | 0.001 | 0.001 | 0.001 | 0.002 | 0.002 | ||

| SD | 0.075 | 0.061 | 0.065 | 0.053 | 0.097 | 0.092 | 0.051 | 0.048 | 0.125 | 0.134 | 0.044 | 0.043 | ||

| AD | 0.085 | 0.060 | 0.073 | 0.052 | 0.110 | 0.067 | 0.056 | 0.034 | 0.146 | 0.077 | 0.041 | 0.021 | ||
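The exact specifications of Models A and B are not reproduced in this text, so the following sketch only illustrates the kind of data-generating process described above, under a hypothetical design. In the correlated case every random coefficient equals κε_t/(1 + |ε_t|), which is bounded by κ and moves with ε_t; in the independent case the coefficients are drawn uniformly from (−κ, κ) independently of ε_t. Student's t with 2 degrees of freedom is used here as a stand-in for an error with finite mean and infinite variance. The function name, κ and the burn-in length are illustrative.

```python
import numpy as np

def simulate_grcar(n, phi, error="cauchy", correlated=True, kappa=0.2, burn=500, seed=None):
    """Simulate y_t = (phi + b_t)' (y_{t-1}, ..., y_{t-p}) + e_t (hypothetical design)."""
    rng = np.random.default_rng(seed)
    phi = np.asarray(phi, dtype=float)
    p, total = len(phi), n + burn
    if error == "normal":
        e = rng.standard_normal(total)
    elif error == "t2":
        e = rng.standard_t(2, total)        # finite mean, infinite variance
    elif error == "cauchy":
        e = rng.standard_cauchy(total)      # finite moments only of order < 1
    else:
        raise ValueError(f"unknown error distribution: {error!r}")
    if correlated:
        # Bounded coefficients that move together with the error term.
        b = kappa * (e / (1.0 + np.abs(e)))[:, None] * np.ones(p)
    else:
        # Coefficients independent of the error term.
        b = rng.uniform(-kappa, kappa, size=(total, p))
    y = np.zeros(total)
    for t in range(p, total):
        lags = y[t - p:t][::-1]             # (y_{t-1}, ..., y_{t-p})
        y[t] = (phi + b[t]) @ lags + e[t]
    return y[burn:]                         # drop the burn-in period
```

With coefficients such as φ = (0.3, 0.2) and κ = 0.2, Σ|φ_i| + pκ < 1, so every realized coefficient vector has absolute values summing to less than one and the simulated paths do not explode.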

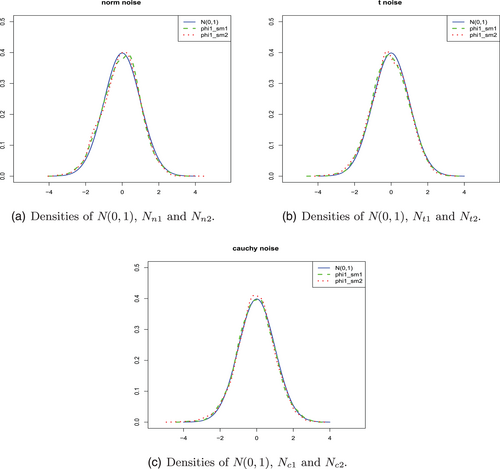

To get an overall view of the sampling distributions of the estimators and the estimators, we simulate 2000 replications for the case and when the error distributions are and for model A, and for the case and when the error distributions are , and Cauchy for model B. Denote ; , when the error distribution is , and , respectively, where and are the SDs of and , respectively. Figure 1 shows the density curves for model A, and Figure 2 shows those for model B. In both models, the density of is approximated reasonably well by those of , and .
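The standardization described in this paragraph can be reproduced on a small scale. The sketch below is not the article's experiment: it assumes a hypothetical first-order model y_t = (φ + b_t)y_{t−1} + ε_t with Cauchy errors and uniform coefficient noise independent of the errors, a hypothetical weight w_t = (1 + |y_{t−1}|)^{−2}, and 300 replications of length 500. For p = 1 the self-weighted LAD objective Σ_t w_t|y_t − φy_{t−1}| equals Σ_t w_t|y_{t−1}|·|y_t/y_{t−1} − φ|, so the estimate is a weighted median of the ratios y_t/y_{t−1}. The standardized estimates (φ̂ − φ)/SD are then compared with N(0, 1) through the Kolmogorov-Smirnov distance rather than a density plot.

```python
import math
import numpy as np

def sw_lad_ar1(y):
    """Self-weighted LAD estimate of phi in a first-order model, as a weighted median."""
    x, z = y[:-1], y[1:]
    keep = x != 0
    x, z = x[keep], z[keep]
    w = 1.0 / (1.0 + np.abs(x)) ** 2          # hypothetical self-weight
    ratios = z / x
    mass = w * np.abs(x)                      # w|z - phi x| = mass * |ratio - phi|
    order = np.argsort(ratios)
    cum = np.cumsum(mass[order])
    return ratios[order][np.searchsorted(cum, 0.5 * cum[-1])]

def simulate_rcar1(n, phi, kappa, rng, burn=200):
    """y_t = (phi + b_t) y_{t-1} + e_t, Cauchy e_t, b_t ~ U(-kappa, kappa) independent of e_t."""
    e = rng.standard_cauchy(n + burn)
    b = rng.uniform(-kappa, kappa, n + burn)
    y = np.zeros(n + burn)
    for t in range(1, n + burn):
        y[t] = (phi + b[t]) * y[t - 1] + e[t]
    return y[burn:]

def ks_to_std_normal(s):
    """Kolmogorov-Smirnov distance between the empirical CDF of s and N(0, 1)."""
    s = np.sort(np.asarray(s, dtype=float))
    m = len(s)
    cdf = np.array([0.5 * (1.0 + math.erf(v / math.sqrt(2.0))) for v in s])
    i = np.arange(1, m + 1)
    return float(max(np.max(i / m - cdf), np.max(cdf - (i - 1) / m)))

def standardized_estimates(n=500, phi=0.5, kappa=0.2, reps=300, seed=0):
    """(phi_hat - phi) / SD over Monte Carlo replications."""
    rng = np.random.default_rng(seed)
    est = np.array([sw_lad_ar1(simulate_rcar1(n, phi, kappa, rng)) for _ in range(reps)])
    return (est - phi) / est.std(ddof=1)
```

A small distance returned by `ks_to_std_normal(standardized_estimates())` plays the same role as the close agreement of the density curves in Figures 1 and 2.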

In conclusion, the numerical results show that the conditional self-weighted M-estimators perform well in finite samples, whether the error variance is finite or infinite.

4 REAL DATA ANALYSIS

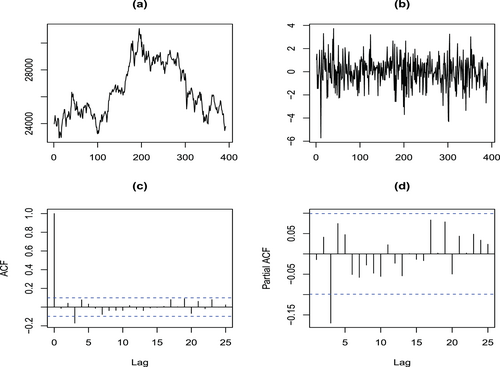


Step 1. Data transformation: The sample time plot of the data is shown in Figure 3(a). The series is not stationary, since its level changes over time. To obtain a stationary time series, let . The sample path of the transformed series is shown in Figure 3(b), which indicates that is close to stationary.


Step 2. Model identification: The sample autocorrelation function (ACF) and sample partial autocorrelation function (PACF) of are shown in Figure 3(c) and (d), respectively. These plots provide useful information for tentatively identifying the order of a stable AR model. Based on the sample ACF and PACF, it is reasonable to fit an AR(3) autocorrelation structure to . Since stock prices are affected by many factors, the autoregressive coefficients may also vary randomly over time and may even be correlated with the error, so we fit a GRCAR(3) model instead of an AR(3) model. However, the data may be heavy-tailed, and determining the autoregressive order of a time series with infinite variance is a problem that requires further study. Here we use the sample PACF only as a rough indication of the order of the GRCAR model to be fitted.
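The sample ACF and PACF used in Step 2 can be computed as in the following sketch, which obtains the partial autocorrelations from the sample autocorrelations through the Durbin-Levinson recursion. The function names are illustrative. The usual ±1.96/√n reference band assumes finite variance, so, as noted above, it should be read only as a rough guide for heavy-tailed data.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag (mean-corrected)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n, denom = len(x), x @ x
    return np.array([x[:n - k] @ x[k:] / denom for k in range(max_lag + 1)])

def sample_pacf(x, max_lag):
    """Sample partial autocorrelations via the Durbin-Levinson recursion."""
    r = sample_acf(x, max_lag)
    pacf = np.ones(max_lag + 1)
    phi = np.zeros(0)                       # coefficients phi_{k-1,1..k-1}
    for k in range(1, max_lag + 1):
        num = r[k] - phi @ r[k - 1:0:-1]    # r_k - sum_j phi_{k-1,j} r_{k-j}
        den = 1.0 - phi @ r[1:k]            # 1 - sum_j phi_{k-1,j} r_j
        a = num / den                       # phi_{k,k}, the lag-k partial autocorrelation
        phi = np.append(phi - a * phi[::-1], a)
        pacf[k] = a
    return pacf
```

A cut-off in the PACF after lag 3, with later values inside ±1.96/√n, is the pattern that would point to an order-3 autoregression.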


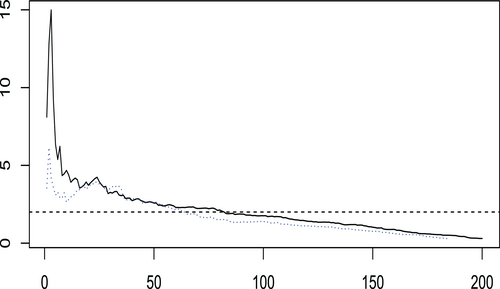

Step 3. Heavy-tail test: To test whether has a heavy-tailed distribution, we use Hill's estimator (see Drees et al. (2000) and Resnick (2000)) to estimate the tail index of . Let be the order statistics of . The estimators of the right-tail and left-tail indices are defined as

respectively. Figure 4 displays the Hill estimates of the right-tail and left-tail indices when . Figure 4 shows that both the right and left tail indices are most likely less than 2, so appears to be heavy-tailed. It may therefore be more appropriate to assume that these data are generated by a process with infinite variance than to assume that they have finite variance. Based on the above discussion, we fit a GRCAR(3) model to the data:

(15)
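Since the formulas for the right-tail and left-tail estimators in Step 3 are not reproduced above, the sketch below assumes the standard Hill form: with X_(1) ≥ X_(2) ≥ ... the decreasing order statistics of the positive observations in a tail, α̂_k = [k^{−1} Σ_{i=1}^k log(X_(i)/X_(k+1))]^{−1}, and the left-tail index is obtained by applying the same formula to −x. Evaluating it over a range of k gives a Hill plot of the kind shown in Figure 4. The function names are hypothetical.

```python
import numpy as np

def hill_tail_index(x, k, tail="right"):
    """Hill estimate of the tail index from the k largest observations in one tail."""
    if tail not in ("right", "left"):
        raise ValueError("tail must be 'right' or 'left'")
    x = np.asarray(x, dtype=float)
    z = x if tail == "right" else -x        # left tail: same formula applied to -x
    z = np.sort(z[z > 0])[::-1]             # positive values, in decreasing order
    if not 0 < k < len(z):
        raise ValueError("need 0 < k < number of positive observations in the tail")
    mean_log_excess = np.mean(np.log(z[:k]) - np.log(z[k]))
    return 1.0 / mean_log_excess

def hill_path(x, ks, tail="right"):
    """Hill estimates over a range of k, as displayed in a Hill plot."""
    return np.array([hill_tail_index(x, k, tail) for k in ks])
```

On simulated data, a Pareto sample with tail index 1.5 and a Cauchy sample (tail index 1 in both tails) should give estimates close to those values; estimates below 2 are consistent with infinite variance.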

Step 4. Parameter estimation: The unknown parameters are estimated by several methods on the training data. We computed the mean absolute errors (MAEs) of the predicted values for the transformed data based on 1000 repetitions; the results are shown in Table III. The self-weighted estimators perform better, especially , so we use for further analysis. The estimates are

whose corresponding asymptotic standard deviations are , respectively.

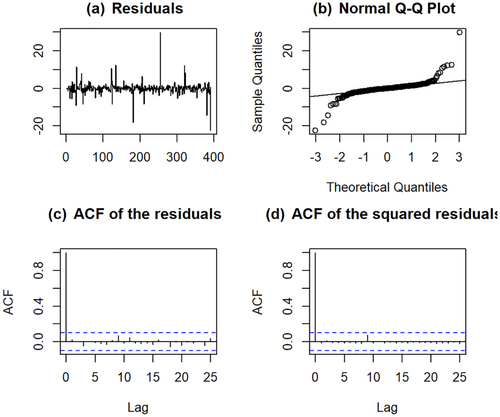

Step 5. Model diagnostics: The absolute values of the eigenvalues of the corresponding matrix in model (15) are 0.5549, 0.5383 and 0.5383, all less than one, so this model satisfies the stationarity conditions of Corollary 2.3. Figure 5 presents the residuals of the fitted model (15), the normal Q-Q plot of the residuals, the sample ACF of the residuals and the sample ACF of the squared residuals. These plots suggest that model (15) fits the data reasonably well.
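The eigenvalue check in Step 5 can be sketched as follows, assuming that "the corresponding matrix" is the companion matrix built from the fixed part of the autoregressive coefficients. The estimates for model (15) are not reproduced here, so the example uses made-up coefficients, and the function names are hypothetical. The sketch checks only this spectral-radius condition; any other requirements of Corollary 2.3, such as those on the random coefficients or on the error moments, are not examined.

```python
import numpy as np

def companion_matrix(phi):
    """Companion matrix of the AR polynomial with coefficients phi_1, ..., phi_p."""
    phi = np.asarray(phi, dtype=float)
    p = len(phi)
    A = np.zeros((p, p))
    A[0, :] = phi                # first row holds the coefficients
    A[1:, :-1] = np.eye(p - 1)   # sub-diagonal shifts the lagged values down
    return A

def eigen_moduli(phi):
    """Moduli of the companion-matrix eigenvalues, largest first."""
    return np.sort(np.abs(np.linalg.eigvals(companion_matrix(phi))))[::-1]

def fixed_part_is_stable(phi):
    """True when every eigenvalue modulus is strictly below one."""
    return bool(eigen_moduli(phi)[0] < 1.0)
```

For example, `eigen_moduli([0.5, 0.3])` returns approximately `[0.852, 0.352]`, so that fixed part passes the check, while coefficients `[1.2, 0.1]` give a modulus above one and fail it.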


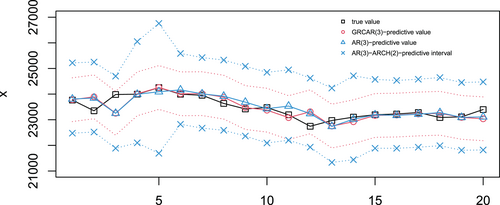

Step 6. Prediction: We use model (15) to predict in the test set. Because the coefficients of model (15) are random, the predicted values of the Hang Seng Index from 3 December 2021 to 31 December 2021, , were computed as averages over 1000 repetitions. We compare our GRCAR(3) model with the AR(3) and AR(3)-ARCH(2) models; their predictive performance is presented in Figure 6. The predicted values from model (15) capture the trend of the real values, and most of them are very close to the true values. Figure 6 and Table IV also show that the GRCAR(3) model performs better than the AR(3) and AR(3)-ARCH(2) models for this dataset.

| Methods | | | LAD | LS |
|---|---|---|---|---|
| MAE | 0.9691 | 0.9529 | 0.9800 | 0.9996 |

| Model | Mean of residual | SD of residual | MAE |
|---|---|---|---|
| AR(3) | | 282.93 | 200.98 |
| GRCAR(3) | 8.07 | 279.34 | 176.75 |
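Step 6 averages over 1000 repetitions because the coefficients of model (15) are random, but what is redrawn in each repetition is not spelled out. The sketch below assumes that only the random coefficient deviations b are drawn, from a user-supplied and purely hypothetical sampler, with the future error set to zero; each forecast is the average of (φ̂ + b)'Y_{t−1} over the draws, and accuracy is summarized by the mean absolute error reported in Tables III and IV. The function names are illustrative.

```python
import numpy as np

def mc_one_step_forecast(history, phi_hat, coef_sampler, n_rep=1000, seed=0):
    """Average of (phi_hat + b)' Y_{t-1} over n_rep draws of the coefficient deviation b."""
    rng = np.random.default_rng(seed)
    phi_hat = np.asarray(phi_hat, dtype=float)
    p = len(phi_hat)
    lags = np.asarray(history[-p:], dtype=float)[::-1]    # (y_{t-1}, ..., y_{t-p})
    b = coef_sampler(rng, (n_rep, p))                     # hypothetical coefficient draws
    return float(np.mean((phi_hat + b) @ lags))

def rolling_forecasts(series, n_test, phi_hat, coef_sampler, n_rep=1000):
    """One-step-ahead forecasts of the last n_test points, each using all earlier data."""
    series = np.asarray(series, dtype=float)
    start = len(series) - n_test
    preds = np.array([mc_one_step_forecast(series[:t], phi_hat, coef_sampler, n_rep, seed=t)
                      for t in range(start, len(series))])
    return preds, series[start:]

def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))
```

If the sampler has mean zero, the average simply converges to φ̂'Y_{t−1}. These forecasts are for the transformed series; comparing them with index levels, as in Figure 6, would presumably also require undoing the transformation of Step 1.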

In summary, the proposed model and estimation method perform well in analyzing and forecasting this heavy-tailed time series.

5 PROOFS OF THEORETICAL RESULTS

This section presents the proofs of our theoretical results.

Proof of Theorem 2.1. It is easy to see that defined by (3) is a homogeneous Markov chain. By the conditions of Theorem 2.1, this Markov chain is -irreducible and aperiodic, and all bounded sets with positive -measure in are small sets. Take the test function . It holds that

Proof of Corollary 2.2. We only need to verify condition (i) of Theorem 2.1. Denote

Proof of Corollary 2.3. We only need to verify condition (i) of Corollary 2.2.

Define

We now give two lemmas that are used repeatedly in the proof of Theorem 2.4. The first lemma is taken directly from Davis et al. (1992).

Lemma 5.1. Let and be stochastic processes on and suppose that on . Let minimize and minimize . If is convex for each and is unique with probability one, then on .

Proof. See Davis et al. (1992).

Lemma 5.2. Under conditions (C.1)–(C.5), we have, as ,

- (a)

, ;

- (b)

for any fixed vector such that ;

- (c)

.

Proof. Since is stationary and ergodic under condition (C.1), (a) and (b) follow easily, so we omit their proofs and only prove (c). Put . Then

Proof of Theorem 2.4. Denote and

6 CONCLUDING REMARKS

This article establishes the geometric ergodicity of general stochastic functional autoregressive models (linear and nonlinear) under mild conditions. Building on this result, it derives stationarity conditions for GRCAR(p) models with possibly heavy-tailed errors and proposes a conditional self-weighted M-estimator for their parameters, which is shown to be asymptotically normal. The simulation study and the real data example suggest that the theory and methodology perform well in practice. The methodology and results could be extended to other time series models, such as heavy-tailed GRCARMA models.

ACKNOWLEDGEMENTS

The authors thank the Editor, the Co-Editor and the Referee(s) for their insightful comments and suggestions, which helped us improve the article significantly. The second author's work was partially supported by the National Natural Science Foundation of China (Grant No. 12171161).

Open Research

DATA AVAILABILITY STATEMENT

Our dataset consists of the daily Hang Seng closing index from 7 May 2020 to 31 December 2021. The data were downloaded from https://cn.investing.com and are publicly available.