</Click to begin your digital interview>: Applicants' experiences with discrimination explain their reactions to algorithms in personnel selection

Abstract

Algorithms might prevent prejudices and increase objectivity in personnel selection decisions, but they have also been accused of being biased. We question whether algorithm-based decision-making or providing justifying information about the decision-maker (here: to prevent biases and prejudices and to make more objective decisions) helps organizations to attract a diverse workforce. In two experimental studies in which participants go through a digital interview, we find support for the overall negative effects of algorithms on fairness perceptions and organizational attractiveness. However, applicants with discrimination experiences tend to view algorithm-based decisions more positively than applicants without such experiences. We do not find evidence that providing justifying information affects applicants—regardless of whether they have experienced discrimination or not.

Practitioner points

- Algorithms evaluating digital interviews violate applicants' fairness perceptions and diminish organizational attractiveness

- Applicants with discrimination experiences tend to view algorithm-based decisions more positively

- Information about the use of algorithms in hiring could be detrimental

- The use of algorithms could be an alternative when hiring prior victims of discrimination

1 INTRODUCTION

The use of algorithms has emerged as a promising alternative to human decision-making, particularly as prior meta-analytic research indicates that algorithms outperform human experts across multiple outcome criteria, including employee-related decisions in the organizational context (Kuncel et al., 2013). Subsequently, an increasing number of organizations have started using algorithms for some employee-related decisions that traditionally were made by people (Cheng & Hackett, 2021). One area where organizations are beginning to rely on algorithm-based approaches is personnel selection (Stone et al., 2015). While algorithms may allow organizations to select employees out of their applicant pool in a more effective and efficient way, the question of how applicants perceive the use of algorithms is a different one. Addressing this question is important since some organizations have even started to proudly advertise the use of algorithms in selection (Booth, 2019). This question becomes all the more important with current attempts at new rules and legislation on AI that put great emphasis on transparency for applicants (European Union, 2022; New York City Department of Consumer and Worker Protection, 2022).

Prior research on the effects of algorithms on employees mostly revealed negative effects on applicants' justice and fairness perceptions, but some researchers also pointed toward positive aspects (Langer & Landers, 2021). These contradictory findings, as well as different lines of argumentation, hint at individual differences in perceptions of algorithms that have not been considered sufficiently (Mirowska & Mesnet, 2022). Applicants' prior experiences may significantly influence whether they perceive an algorithm, for example, as reductionistic and, in turn, as less fair; or whether they perceive the algorithm as less biased and, in turn, as more fair. We theorize that one of these critical individual differences is prior experiences of discrimination. Considering discrimination experiences is crucial for companies to generate a diverse and qualified applicant pool and for targeted recruiting (Avery & McKay, 2006).

The aim of our study is to consider the disadvantages that algorithms might have on applicants' perceptions, but also investigate one line of reasoning that reflects the perceived advantages of algorithms: reduction of biases and prejudices to arrive at more objective decisions. More specifically, we argue that algorithms can be seen as being more objective by some applicants, thereby preventing applicants from being discriminated against by human decision-makers. Drawing on organizational justice research in the selection context and empirical evidence on people's attitudes towards algorithms, we hypothesize that algorithm-based (compared to human-based) decisions in digital selection procedures negatively affect (a) fairness perceptions. Based on exposure and signaling theory, we further hypothesize that algorithms lower (b) organizational attractiveness. Moreover, we theorize that individuals who experienced discrimination at work might view algorithms more positively in terms of (a) fairness and (b) organizational attractiveness. In addition, we assume that justifying information about more objective decision-making is perceived more positively (a and b) when individuals experienced discrimination compared to when they did not experience discrimination in the work context before. In two experimental studies, we investigate these relationships in the context of digital interviews (i.e., asynchronous video interviews during which applicants record their answers to a standard set of questions).

This study makes several contributions. First, we further develop the nascent literature on algorithms in human resource (HR) management to assess when applicants find algorithms used in personnel selection to be more or less fair. Second, we contribute to the organizational justice and fairness literature by examining the perceived fairness of algorithms in selection while acknowledging different lines of reasoning and prior experiences of applicants. Third, we contribute to the discrimination literature by showing whether and when victims of perceived discrimination in the past have the capacity to believe that a new work situation will treat them fairly. This advances what we know about equal employment opportunities in the HR literature and has important implications for HR practice.

2 THEORETICAL BACKGROUND AND HYPOTHESES DEVELOPMENT

2.1 Algorithm-based decisions and applicants' fairness perceptions

Prior research on the influence of algorithmic decision-makers on employees has mainly focused on perceptions of justice or fairness (Langer & Landers, 2021). Justice is defined as the “perceived adherence to rules that reflect appropriateness in decision contexts” (Colquitt & Rodell, 2015, p. 188) and can be subdivided into procedural, interpersonal, informational, and distributive justice (Colquitt, 2001). Procedural justice represents the appropriateness in decision-making procedures and consists of seven rules (Leventhal, 1980; Thibaut & Walker, 1975): process control (provision of opportunities to voice one's viewpoint throughout the process), decision control (provision of opportunities to influence the outcome of the process), consistency (application of similar procedures to all applicants), bias suppression (neutral decision-making), accuracy (to base allocations on good information), correctability (provision of opportunities to modify decisions), and ethicality (compatibility with moral and ethical principles). Interpersonal justice covers treatment during the process and includes the rules of respect and propriety (Bies & Moag, 1986). Informational justice deals with the appropriateness of explanations offered for procedures and comprises the rules of truthfulness and justifications (as reasonable, timely, and specific; Bies & Moag, 1986; Shapiro et al., 1994). Distributive justice covers appropriateness in decision outcomes and includes the rules of equity, equality, and need (Leventhal, 1976). Fairness is defined as a global perception of appropriateness that lies theoretically downstream of justice (Colquitt & Rodell, 2015).

According to Gilliland's (1993) organizational justice framework, several characteristics of the selection procedure, such as HR policy, test type, or behavior of HR personnel, influence the extent to which job candidates perceive different justice rules to be satisfied or violated and, hence, contribute to perceptions of overall fairness of the selection process. Studies comparing algorithmic versus human decision-makers in the selection or broader HR context demonstrated mainly negative effects of algorithms on employees' fairness perceptions as an overall outcome of justice, but also provided evidence for positive or no significant effects (Langer & Landers, 2021). In an experimental study, Lee (2018) revealed that employees' reactions depend on the kind of decision. Her results showed that in tasks requiring human skills, such as in a hiring situation, human decision-makers are perceived to be fairer than algorithms. In additional open-ended questions, she further found that lower fairness evaluations were justified by algorithms' lack of intuition and subjective judgment skills. Newman et al. (2020) showed in four vignette studies across different HR decisions that algorithms are perceived as less fair and that this effect is mediated by perceptions of decontextualization and quantification (e.g., algorithms are perceived to reduce accurate information via quantification while neglecting qualitative characteristics). Based on this literature, we argue that the use of algorithms rather than human decision-makers to analyze video recordings lowers applicants' overall fairness perceptions. This is because subjective judgment skills and qualitative characteristics are important considerations in selection decisions, especially in job interviews that allow applicants to display different qualitative characteristics.

Furthermore, applicants may perceive that algorithms violate several justice rules. While empirical evidence to date is inconclusive and also affirms positive (Marcinkowski et al., 2020; Min et al., 2018) or no effects (Suen et al., 2019) of algorithms on applicants' justice perceptions in a selection context, most studies reveal negative effects (Acikgoz et al., 2020; Noble et al., 2021). More fine-grained analyses highlight that algorithms violate most of the procedural (except for consistency) and interpersonal justice rules (Acikgoz et al., 2020; Noble et al., 2021). Overall, we assume a negative effect on perceptions of fairness as an outcome of justice. Taken together, we hypothesize:

Hypothesis 1a. Algorithm-based compared to human-based decision-making in digital interviews lowers applicants' fairness perceptions.

2.2 Algorithm-based decisions and organizational attractiveness

We further expect that whether an algorithm or human recruiter makes the selection decision has an impact on organizational attractiveness. First, exposure theory (Zajonc, 1968) proposes that the more individuals are exposed to a certain stimulus, the more favorable their attitudes are toward the object. This theory has also been applied in the field of applicant attraction (Ehrhart & Ziegert, 2005), suggesting that the more familiar the applicants are with the environment, the stronger their attraction to the organization. Hence, applicants might be less attracted to organizations that use algorithms because they are commonly less used to being evaluated by algorithms.

Second, based on signaling theory (Spence, 1973), prior work suggested that applicants use all information available to them as signals about the organization as a potential employer (Turban, 2001). Particularly in the early stages of the selection process (e.g., before applicants have had personal contact with company representatives), it is likely that they will interpret the scarce information as an indicator of what working would be like at this organization (Turban, 2001). Prior meta-analytic findings underlined that characteristics of the selection process and recruiter behaviors, such as recruiters' personableness, informativeness, competence, and trustworthiness, are strong predictors of organizational attractiveness (Chapman et al., 2005). We argue that algorithms fall short in many of the positive recruiter behaviors. In addition, algorithms may send a signal about the low value the organization attaches to HR (Mirowska & Mesnet, 2022) and, thus, make the company seem like a less attractive employer. In sum, we hypothesize:

Hypothesis 1b. Applicants perceive organizations that rely on algorithm-based compared to human-based decision-making in digital interviews as less attractive.

2.3 Interaction effect of decision-maker and discrimination experience

Applicants' perceptions of selection procedures differ depending on their individual characteristics; this might particularly be the case for algorithm-based selection decisions (Mirowska & Mesnet, 2022). Initial research indicated that individuals' characteristics, such as their computer programming knowledge, influence their perceptions of algorithms in selection (Lee & Baykal, 2017). In the context of fairness perceptions, we propose that applicants' prior discrimination experiences are particularly relevant.

Discrimination is defined as denying individuals equality of treatment because of their demographic background (Allport, 1954). When employees believe they have been discriminated against at work, many negative outcomes follow, including lowered job attitudes, mental health, physical health, and career advancement (Pascoe & Smart Richman, 2009; Triana et al., 2015, 2019). Research also showed that victims of perceived discrimination do a lot of ruminating as they try to disentangle what happened to them and why (Byng, 1998; Crocker et al., 1998; Deitch et al., 2003). One thing is certain: victims of discrimination perceive injustice and will watch out for future violations (Dipboye & Colella, 2005; Feagin & Sikes, 1994).

We argue that applicants who have experienced some form of discrimination (e.g., due to their ethnic background, gender, age, or other characteristics) may see human and algorithmic decision-makers in a different light. First, it is likely that some applicants perceive algorithms used to screen their video recordings to be biased and less fair, while others perceive algorithms to be less biased and more fair (Köchling & Wehner, 2020). Gilliland (1993) proposed that applicants' experiences with prior selection processes affect the salience of procedural justice rules and applicants' fairness evaluations of subsequent selection processes. While these experiences comprise general types of tests, we extend this assumption to specific experiences at work. According to the model of justice expectations of Bell et al. (2004), applicants build justice expectations and perceptions based on personal experiences. Especially when applicants have no or little information about the hiring organization or organizational agent, past experiences made in similar situations guide their justice expectations, and, in turn, influence justice perceptions (Bell et al., 2004). Following this model, we propose that applicants' own experiences with discriminatory behavior by others at work guide their expectations about the hiring process with human decision-making agents. Victims of workplace discrimination may expect that human decision-makers in the new organization will also treat them unfairly and, hence, perceive the selection process as unfair. This can be further rationalized based on salient procedural justice rules. 
Applicants who had experienced relatively more workplace discrimination in the past are likely to expect that human representatives violate the rules of consistency (e.g., they may not treat all applicants equally), bias suppression (their judgments will not be neutral), accuracy (they may use irrelevant information such as applicants' gender, age, or ethnicity), and/or ethicality (they may not uphold moral and ethical principles) when making selection decisions. Taken together, we assume that the selection process will be expected and perceived to be less fair. In contrast, these applicants may even expect that they experience a fairer selection process if the decision-maker is nonhuman.

Second, we believe that algorithms have differential effects on organizational attractiveness depending on employees' prior discrimination experiences. In H1b, we argued that applicants, on average, see negative or at least less positive signals sent by the use of algorithms instead of human representatives. However, the use of algorithms might also signal that the organization is more focused on objective decision-making (Mirowska & Mesnet, 2022). We believe that applicants with discrimination experiences interpret the use of algorithms rather as a sign of objectivity or consider this signal as more important than other signals. Empirical evidence demonstrates that individuals with more (compared to less) discrimination experiences are more attracted to organizations having a specific affirmative action plan (Slaughter et al., 2002). Similarly, we believe that for those who experienced discrimination at the hands of others at work, algorithms can signal that the organization implements affirmative action. Furthermore, we argue that exposure to the “new” algorithmic process might be more negative for applicants who have had positive experiences in the work context before. However, if individuals experienced negative discriminatory treatment by other human beings and processes in the work context, they might be more open to technological advances. Consequently, we hypothesize:

Hypothesis 2. Discrimination experience at work weakens the negative effect of algorithm-based decision-making on applicants' fairness (H2a) and organizational attractiveness perceptions (H2b).

2.4 Interaction effect of justifying information about the decision-maker and discrimination experience

Providing applicants with explanations about the selection process could improve their reactions. First, Gilliland's (1993) justice framework proposes that explanations increase fairness perceptions and reactions toward the organization. Second, the model of social validity highlights that transparency about evaluation processes and the selection situation, including information about acting persons, makes selection processes socially acceptable for applicants (Schuler, 1993). This has also been supported by meta-analytical evidence (Truxillo et al., 2009) and shown to hold in the context of technology-based selection (Basch & Melchers, 2019). Basch and Melchers (2019) demonstrated that explanations emphasizing the advantages of digital interviews, such as higher standardization, improved applicants' fairness perceptions. Langer et al. (2018) found that higher levels of information about novel technologies for selection (an interview conducted with a virtual character), on the one hand, positively influenced organizational attractiveness through some procedural justice rules (open treatment and information known), but on the other hand had a direct negative effect on organizational attractiveness.

While these studies focused on general information about the selection process, we are interested in justifying information about the decision-maker. This is in line with Langer et al. (2021), who distinguished between process information and process justification and who defined process justification as providing a rationale for why a certain procedure is used. In particular, we take a closer look at a company's justification of objective decision-making because we believe that prevention of biases and prejudices in digital interviews is especially important for applicants who experienced discrimination in their work life before. Similar to our arguments leading to H2a and H2b, we assume that this justifying information is more salient and accessible or more important for individuals with higher levels of discrimination experiences. For instance, providing justification for selection procedures may reassure applicants that the process will meet the justice rules of consistency, bias suppression, accuracy, and ethicality (Leventhal, 1980) and may serve as a sign of the organization's interest in making objective decisions. As such, we hypothesize:

Hypothesis 3. Discrimination experience at work increases the positive relationship between justifying information about the decision-maker and procedural fairness (H3a) and organizational attractiveness perceptions (H3b).

3 OVERVIEW OF STUDIES

We conducted two experimental studies. In Study 1, we manipulated the decision-maker (algorithm vs. human) and tested the effects on fairness (H1a) and organizational attractiveness (H1b). We also measured discrimination experience to test H2a and H2b. In Study 2, we used a 2 (algorithm vs. human) × 2 (justifying information vs. control) design. In this study, we used an alternative measure for discrimination experience to replicate our findings from Study 1 and furthermore test H3a and H3b.

4 STUDY 1

4.1 Methods

4.1.1 Sample and procedure

We conducted an online experiment recruiting a sample of actual job-seekers via the platform Prolific, which is specifically geared for research and provides high-quality data from a diverse population (Palan & Schitter, 2018). We restricted the sample to participants with US nationality because we wanted to increase the likelihood of participants' proficiency in English (Feitosa et al., 2015). Overall, 234 participants responded. We had to omit three participants who wanted their data to be withdrawn after the debriefing. The experiment included two attention checks to enhance the data quality of the final sample (Oppenheimer et al., 2009). Specifically, we asked participants to “select strongly agree here, so that we know you are paying attention.” In addition, participants were instructed to ignore the list below about leadership experiences and instead select ‘Other, please specify:’. After the omission of 22 individuals who did not pass one or both of the attention checks, we were able to analyze the data of 209 participants. In our final sample, 54% are female, and the average age is 28 years (29.19% are between 18 and 20 years old, 38.27% between 21 and 30, 20.58% between 31 and 40, 6.22% between 41 and 50, and 5.74% between 51 and 62 years old). Education level is distributed as follows: 0.96% no degree, 36.84% high school degree (or similar), 6.70% professional degree, 36.36% bachelor's degree, 15.79% master's degree, and 3.35% a doctoral degree. We can also rely on a relatively diverse sample with regard to participants' ethnicity: 14.35% are Asian or Asian American, 11.96% are Black or African American, 9.09% are Hispanic or Latino, 56.94% are White, Caucasian, European, not Hispanic, 0.96% are American Indian, and 6.70% mixed.

Participation took, on average, about 20 min. All participants were compensated with £2.50. To create a realistic setting and increase participants' effort, we told participants that we were researchers from a German-based research institute, conducting a survey on behalf of one of our clients, a well-established corporation that plans to expand globally and is interested in feedback to find out if their current selection process needs to be adapted for other countries. We highlighted that it would be essential to go through the process as if they were applying for a job at this company and answer all questions thoroughly since their feedback would be important and used to redesign the selection process. Participants were then informed about the selection process. Next, participants had to complete a digital interview. We collaborated with an HR service provider who allowed us to adjust their tool to our demands. We directed participants to their interview platform on which participants had to use their camera and microphone and record their answers to three typical job interview questions that have also been used in previous research (Langer et al., 2017; Straus et al., 2001): (1) “There are times when stress is very high. Please remember a situation in which you had several deadlines at the same time; how did you handle this?”, (2) “Can you tell us about a time that you had a conflict with someone at work?”, and (3) “What sets you apart from your peers?” Only after the successful completion of the interview did participants receive a code to continue with the study. Please note that, unbeknownst to the participants, the videos were stored only on participants' computers and were not uploaded or transmitted to our partner, us, or anyone else, to protect participants' privacy. We then presented an email from our company client that notified applicants about the next steps. Next, participants were invited to evaluate the selection process and the company as an employer. 
Afterward, participants provided demographic information and answered questions that we used as manipulation checks. Finally, we debriefed participants.

4.1.2 Manipulation of the decision-maker

Participants were randomly assigned to one of two conditions and informed that their online application would be screened by either a machine-learning algorithm (coded with 1; 117 participants), or a company representative (coded with 0; 92 participants). We included this manipulation graphically both before the digital interview when we presented the selection process and after the digital interview in an email informing participants about the next steps. In this email, we also wrote that “A company representative [/an algorithm] will now use all the information gathered to evaluate the candidates [using statistical analyses] and decide if you are among the final candidates who will be considered for the position and be invited to a final interview.”

4.1.3 Measures

We used 5-point Likert scales with response options ranging from 1 = strongly disagree to 5 = strongly agree for all survey items (unless indicated otherwise).

Fairness perceptions

We used a three-item scale developed by Bauer et al. (2001) to measure fairness perceptions. Following the suggestions of Bauer et al. (2001), we slightly adapted the items to measure fairness in this specific selection context. A sample item is “Overall, the method of selecting used was fair.” Cronbach's α for this scale is .91.

Organizational attractiveness

We measured general organizational attractiveness with the five-item scale of Highhouse et al. (2003). A sample item for this scale is “This company is attractive to me as a place for employment.” Cronbach's α for this scale is .88.

Discrimination experience

To measure participants' discrimination experience at work, we used the three-item scale from Snape and Redman (2003). We modified the scale to capture not only age discrimination but also other kinds of discrimination at work, such as discrimination due to someone's ethnic background or gender. A (reverse-coded) sample item reads, “I personally have never experienced discrimination due to age/gender/ethnical background/any other reason in my job.” Cronbach's α of this scale is .72.

Manipulation checks

We asked all participants to indicate, “To which extent did the following entities play a role in the decision-making process?”, first for an algorithm (manipulation check algorithm) and second for a company representative (manipulation check human), on a 5-point scale from 1 = to no extent to 5 = to a large extent. We also gave participants the opportunity to give additional open feedback. The comments indicate that participants believed our cover story and that the selection process was convincing as an actual selection process of an organization.

Control variables

To test the interaction hypotheses (H2 and H3), we also used participants' demographic variables as controls because one's demographic background is a source of prior discrimination experiences (Allport, 1954; Triana et al., 2021), and we wanted to assess the moderating effect of perceived discrimination on the dependent variables beyond the effects of other personal characteristics. Women are consistently treated unequally compared to men around the world because of their relatively lower social status, and they report more discrimination than men (Benokraitis & Feagin, 1995; World Economic Forum, 2022), which can affect their reactions to algorithms in selection and the organizations that use such technology. Therefore, we asked participants about their gender and coded the variable female with 1 if they indicated that they are female, and 0 otherwise. Age has been shown to positively relate to organizational attachment and commitment (Mathieu & Zajac, 1990), which can influence applicant reactions to organizations, so we also controlled for participants' age measured as a continuous variable. In addition, education has been negatively associated with attachment to organizations, perhaps because more highly educated employees have more job alternatives (Mathieu & Zajac, 1990). Therefore, we controlled for participants' highest level of education. We further asked participants about their ethnicity, because minority racial/ethnic groups experience more discrimination than majority groups (Feagin & Sikes, 1994). In our analyses, we used the variable Non-White, which we coded with 1 if participants indicated that they are Asian or Asian American, Black or African American, Hispanic or Latino, American Indian, or of mixed racial background, and with 0 if participants indicated that they are White, Caucasian, European, not Hispanic.

4.2 Results

4.2.1 Manipulation checks and descriptive results

We tested whether our manipulation was effective using t-tests. For the manipulation check algorithm, we used Welch's (1947) approximation because variances were unequal; ratings were significantly higher under the algorithm condition than under the company representative condition (MA = 4.45, SDA = 0.77, and MH = 2.86, SDH = 1.24; t(145.69) = −10.82, d = −1.59, p < .001). For manipulation check human, ratings were significantly higher under the company representative condition than under the algorithm condition (MA = 2.41, SDA = 1.25 and MH = 3.68, SDH = 1.25; t(207) = 7.33, d = 1.02, p < .001), indicating that our manipulation worked. Table 1 displays the means, standard deviations, internal consistencies, and zero-order correlations for the main variables.

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Algorithm (1) vs. human (0) | 0.56 | 0.50 | - | | | | | | |
| 2. Fairness | 3.38 | 1.06 | −.15* | - | | | | | |
| 3. Organizational attractiveness | 3.36 | 0.84 | −.19** | .59*** | - | | | | |
| 4. Discrimination experience | 2.46 | 1.07 | −.03 | −.07 | −.02 | - | | | |
| 5. Female<sup>a</sup> | 0.54 | 0.50 | −.06 | .04 | .06 | .23*** | - | | |
| 6. Age | 28.07 | 10.42 | .02 | −.20** | −.08 | .15* | −.07 | - | |
| 7. Education<sup>b</sup> | 3.39 | 1.25 | −.01 | −.21** | −.13 | .13 | .07 | .40*** | - |
| 8. Non-White<sup>c</sup> | 0.43 | 0.50 | .01 | .07 | .01 | .00 | .04 | −.16* | −.07 |

- Note: N = 209.

- Abbreviations: M, mean; SD, standard deviation.

- <sup>a</sup> 0 = male; 1 = female.

- <sup>b</sup> Education: 1 = no degree, 2 = high school degree (or similar), 3 = professional degree, 4 = bachelor's degree, 5 = master's degree, 6 = doctorate degree.

- <sup>c</sup> Non-White: 0 = White, Caucasian, European, not Hispanic; 1 = all other ethnic groups.

- \* p < .05; \*\* p < .01; \*\*\* p < .001.
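As a rough plausibility check on the manipulation check for the algorithm reported in Section 4.2.1, Welch's t and the Welch-Satterthwaite degrees of freedom can be recomputed from the reported means, standard deviations, and group sizes (117 vs. 92). The sketch below is an illustration only, not the authors' analysis code: the function name `welch_t_from_summary` is hypothetical, the ordering of the groups is an assumption chosen to match the sign of the reported t, and because the inputs are rounded to two decimals the result only approximates the reported t(145.69) = −10.82.

```python
import math


def welch_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite df from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of the first group mean
    v2 = sd2 ** 2 / n2  # squared standard error of the second group mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Manipulation check "algorithm": human condition first, algorithm condition second
# (assumed ordering, so that the sign matches the reported statistic).
t, df = welch_t_from_summary(2.86, 1.24, 92, 4.45, 0.77, 117)
print(f"t({df:.2f}) = {t:.2f}")
```

With the rounded inputs, this gives a t of roughly −10.8 on about 144 degrees of freedom, which is close to the reported values; the small gap is what one would expect from rounding of the means and standard deviations.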

4.2.2 Hypothesis tests

To test H1a and H1b, we again used t-tests. In H1a, we suggested a lower mean value in the algorithm condition than in the human condition for fairness, which was supported in our data (MA = 3.25, SDA = 1.11 and MH = 3.56, SDH = 0.98; t(207) = 2.15, d = 0.30, p = .033). We also suggested a mean difference for organizational attractiveness in H1b, which was supported by our findings (MA = 3.21, SDA = 0.86, and MH = 3.54; SDH = 0.77; t(207) = 2.85, d = 0.40, p = .005).
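The t statistics and effect sizes for H1a and H1b can be approximately recovered from the reported group means, standard deviations, and cell sizes. The following sketch assumes a pooled-variance (Student) t-test with df = 207 and Cohen's d computed with the pooled standard deviation; the paper does not state which d formula was used, so this is an assumption, and the helper names are hypothetical. Because the inputs are rounded, the results differ slightly from the reported values.

```python
import math


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def student_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t statistic, Cohen's d (pooled SD), and degrees of freedom."""
    sp = pooled_sd(sd1, n1, sd2, n2)
    d = (m1 - m2) / sp
    t = (m1 - m2) / (sp * math.sqrt(1 / n1 + 1 / n2))
    return t, d, n1 + n2 - 2


N_HUMAN, N_ALGORITHM = 92, 117  # cell sizes from Section 4.1.2

# H1a: fairness (human condition minus algorithm condition)
t_fair, d_fair, df_fair = student_t_and_d(3.56, 0.98, N_HUMAN, 3.25, 1.11, N_ALGORITHM)
# H1b: organizational attractiveness
t_attr, d_attr, df_attr = student_t_and_d(3.54, 0.77, N_HUMAN, 3.21, 0.86, N_ALGORITHM)

print(f"Fairness: t({df_fair}) = {t_fair:.2f}, d = {d_fair:.2f}")
print(f"Attractiveness: t({df_attr}) = {t_attr:.2f}, d = {d_attr:.2f}")
```

Under these assumptions, the recomputed values land within a few hundredths of the reported t(207) = 2.15, d = 0.30 and t(207) = 2.85, d = 0.40.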

To test H2a and H2b, we conducted separate multiple regression analyses. In Table 2, we report two models for each dependent variable, with model 1 including all of the direct predictors (i.e., hypothesized direct effect of algorithm and discrimination experience) and controls and with model 2 adding the hypothesized interaction term (algorithm × discrimination experience). The interaction was significant for fairness (H2a; b = 0.31, p = .023) and general organizational attractiveness (H2b; b = 0.23, p = .032).

| Predictors | Fairness, Model 1 | Fairness, Model 2 | Organizational attractiveness, Model 1 | Organizational attractiveness, Model 2 |
|---|---|---|---|---|
| | b (SE) | b (SE) | b (SE) | b (SE) |
| (Intercept) | 4.27*** (0.29) | 4.22*** (0.29) | 3.81*** (0.23) | 3.77*** (0.23) |
| Female^a | 0.09 (0.15) | 0.11 (0.15) | 0.10 (0.12) | 0.11 (0.12) |
| Age | −0.01 (0.01) | −0.01 (0.01) | −0.00 (0.01) | −0.00 (0.00) |
| Education^b | −0.14* (0.06) | −0.14 (0.06) | −0.08 (0.05) | −0.08 (0.05) |
| Non-White^c | 0.09 (0.15) | 0.09 (0.15) | 0.00 (0.12) | 0.01 (0.12) |
| Algorithm (1) vs. human (0) | −0.32* (0.14) | −0.32* (0.14) | −0.33** (0.12) | −0.33** (0.11) |
| Discrimination experience | −0.05 (0.07) | −0.22* (0.10) | −0.01 (0.06) | −0.15 (0.08) |
| Algorithm × discrimination experience | | 0.31* (0.13) | | 0.23* (0.11) |
| R² | .09 | .11 | .06 | .08 |

- Note: N = 209.

- a 0 = male; 1 = female.

- b Education: 1 = no degree, 2 = high school degree (or similar), 3 = professional degree, 4 = bachelor's degree, 5 = master's degree, 6 = doctorate degree.

- c Non-White: 0 = White, Caucasian, European, not Hispanic; 1 = all other ethnic groups.

- * p < .05;

- ** p < .01;

- *** p < .001.

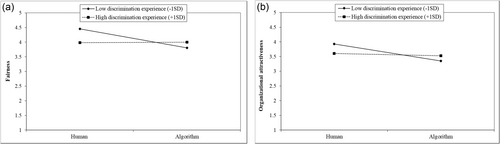

We conducted simple slope tests modeled at –1 SD below and +1 SD above the mean of the moderator to gain a better understanding of the significant interactions that are plotted in Figure 1. The simple slopes tests revealed that seeing the algorithm (instead of a human) was negatively related to fairness for applicants with lower levels of discrimination experiences (b = −0.65, t = −3.19, p = .002), but not significantly related to fairness for applicants with higher levels of discrimination experiences (b = 0.01, t = 0.05, p = .960). The effect of algorithms on organizational attractiveness was significantly negative for applicants with lower levels of discrimination experiences (b = −0.57, t = −3.53, p = .001) and close to zero for applicants with higher levels of discrimination experiences (b = −0.08, t = −0.49, p = .625). In sum, this supports H2a and H2b.
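The arithmetic behind these simple slopes can be sketched as follows. This is only an illustration, not the authors' analysis script: it assumes that discrimination experience was mean-centered before the interaction term was formed (the text does not state this), so that the algorithm coefficient in Model 2 is the effect at the mean of the moderator. The function name `simple_slopes` is hypothetical, and the inputs are the rounded coefficients from Table 2 and the SD from the correlation table, so small rounding differences from the reported slopes are expected.

```python
def simple_slopes(b_treatment, b_interaction, sd_moderator):
    """Return the treatment effect at -1 SD and +1 SD of a mean-centered moderator.

    Assumes the regression y = b0 + b1*treatment + b2*moderator
    + b3*treatment*moderator with a mean-centered moderator, so the
    conditional effect of the treatment is b1 + b3 * moderator.
    """
    low = b_treatment - b_interaction * sd_moderator
    high = b_treatment + b_interaction * sd_moderator
    return low, high


# Rounded values taken from the Study 1 tables (SD of discrimination experience = 1.07).
SD_DISCRIMINATION = 1.07
fairness_low, fairness_high = simple_slopes(-0.32, 0.31, SD_DISCRIMINATION)
attract_low, attract_high = simple_slopes(-0.33, 0.23, SD_DISCRIMINATION)

print(f"fairness: low = {fairness_low:.2f}, high = {fairness_high:.2f}")
print(f"attractiveness: low = {attract_low:.2f}, high = {attract_high:.2f}")
```

Under the centering assumption, the recomputed slopes land within rounding distance of the values reported above, which suggests the reported coefficients are internally consistent.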

4.2.3 Additional analyses

Please note that our analyses so far show the (moderation) effect of discrimination experience over and above the effect of factors that often lead to discrimination (gender, age, and ethnicity). The results were qualitatively unaffected if we did not include demographic variables as controls. We further tested whether gender, age, education, or ethnic background moderates the effect of algorithms on applicants' perceptions. We did not find any evidence for that in our data. The results of these moderation analyses are displayed in Supporting Information: Table S1.

5 STUDY 2

5.1 Methods

5.1.1 Sample and procedure

We conducted an online experiment on Prolific with 405 individuals. We restricted the sample to UK nationals. We had to omit three participants because they wanted their data to be withdrawn after the debriefing. We included five attention checks that were similar to those in Study 1. We omitted 100 individuals who did not pass one or more of these attention checks. This left 302 participants in the sample, with 61% females and an average age of 36 years (9.93% are between 18 and 20, 32.12% between 21 and 30, 25.50% between 31 and 40, 12.91% between 41 and 50, 13.25% between 51 and 60, and 6.29% between 61 and 73 years old). Education level is distributed as follows: 3.97% no degree, 25.17% high school degree (or similar), 6.95% professional degree, 41.72% bachelor's degree, 17.88% master's degree, and 4.30% a doctoral degree. Participants also have different ethnic backgrounds: 8.28% are Asian, 13.58% are Black, 67.22% are White, Caucasian, European, not Hispanic, 0.33% are Indigenous, and 10.60% are mixed.

Participation took about 20–25 min. All participants were compensated with £2.50. The procedure was the same as in Study 1 with the only exception that we used a 2 (algorithm vs. human) × 2 (justifying information vs. control) design and, thus, randomly assigned participants to one of four conditions.

5.1.2 Manipulation of the decision-maker

As in Study 1, participants were informed that their digital interview would be evaluated either by an algorithm (162 participants) or by a human (140 participants). This time, we chose a manager instead of a company representative. As in Study 1, we included this manipulation graphically both before and after the digital interview. In the email, the exact wording was, "A manager [/a machine-learning algorithm] will evaluate your video recording and decide if you are among the final candidates who will be considered for the position or not."

5.1.3 Manipulation of justifying information

In Study 2, we also manipulated whether the company provided a reason for its choice of decision-maker or not. Participants were randomly assigned to these two conditions. In the justifying information group, we included the following sentence right after the manipulation of the decision-maker, both before and after the digital interview: "We chose an experienced manager [algorithm] for this step because it has been shown that this [it] prevents biases and prejudices and allows to make objective decisions." This sentence was not included in the control condition.

5.1.4 Measures

For all scales, we again used Likert scales from 1 = strongly disagree to 5 = strongly agree, if not indicated otherwise.

Fairness perceptions

We used the same measure for fairness that we used in Study 1. Cronbach's α for this scale is .88.

Organizational attractiveness

Organizational attractiveness was measured with the same scale used in Study 1. Cronbach's α for this scale is .92.

Discrimination experience

We measured major employment experiences of discrimination with a scale developed by Williams et al. (2012) that specifically asks about unfairness experienced in the work context and has also been used in a longer version in recent experimental research (Nurmohamed et al., 2021). The scale consists of five items with dichotomous response options being yes (coded with 1) and no (coded with 0). A sample item is "For UNFAIR reasons, do you think you have ever not been hired for a job?" We summed the five answers into an overall score of discrimination experience ranging from 0 to 5.
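The scoring rule described above (yes = 1, no = 0, summed over five items) can be sketched in a few lines. The function name and the input format (a list of "yes"/"no" strings) are hypothetical choices made for illustration; the text does not describe how responses were stored.

```python
def discrimination_score(answers):
    """Sum five yes/no answers into a 0-5 discrimination experience score.

    `answers` is a list of five strings, each "yes" or "no"
    (an assumed input format, chosen only for this sketch).
    """
    if len(answers) != 5:
        raise ValueError("the scale has exactly five items")
    coded = []
    for answer in answers:
        normalized = answer.strip().lower()
        if normalized not in ("yes", "no"):
            raise ValueError(f"unexpected response: {answer!r}")
        coded.append(1 if normalized == "yes" else 0)  # yes = 1, no = 0
    return sum(coded)


# Hypothetical respondent who answered "yes" to two of the five items.
example_score = discrimination_score(["yes", "no", "no", "yes", "no"])
print(example_score)
```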

Manipulation checks

For the algorithm manipulation, we asked all participants, similar to Study 1, to indicate, "To which extent did the following entities play a role in evaluating the video recordings and deciding on whom to invite to a final interview?", first an algorithm (manipulation check algorithm), and second a manager (manipulation check human) on a five-point scale from 1 = to no extent to 5 = to a large extent. For the manipulation check justifying information, we developed a three-item scale that asked participants why the organization uses the presented screening process for selection: to prevent biases, to prevent prejudices, and to make objective decisions. Cronbach's α for this scale is .88.

As in Study 1, we asked participants open questions to provide the company with some more feedback. The answers indicate that participants believed our cover story and that the selection process was perceived as realistic.

Additional measures

For more fine-grained analyses, we included a scale by Colquitt (2001) that distinguishes between procedural justice (seven items, Cronbach's α is .75), interpersonal justice (four items, α is .90), and informational justice (five items, α is .74). We adapted the four-item scale of distributive justice perceptions by Colquitt (2001) to the selection context, following Bell et al. (2006), and to our specific setting, as recommended. A sample item is "The evaluation of my video recordings and the decision whether I will be among the final candidates… will reflect what I could contribute to Client 847 [alias name of the organization]." Cronbach's α for this scale is .86.
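For readers less familiar with the internal consistency coefficients reported for these scales, the following sketch shows the standard formula for Cronbach's α, α = k/(k − 1) × (1 − Σ item variances / variance of the sum score). The response matrix is invented purely for illustration and has no relation to the study data; the function name is likewise hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Compute Cronbach's alpha for rows of item responses.

    `responses` holds one row per participant and one column per item.
    Sample variances (n - 1 denominator) are used throughout.
    """
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = [
        variance([row[j] for row in responses]) for j in range(n_items)
    ]
    total_variance = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Invented ratings of five participants on a three-item, 5-point scale.
hypothetical_responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 1],
]
alpha = cronbach_alpha(hypothetical_responses)
print(f"alpha = {alpha:.3f}")
```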

Control variables

We used the same set and coding of control variables that we used in Study 1.

5.2 Results

5.2.1 Manipulation checks and descriptive results

We tested whether our manipulations were effective with analysis of variance (ANOVA) and planned contrast effects. For all three manipulation checks, ANOVA revealed significant differences across the four groups (F(3, 298) = 66.27, p < .001, η² = .40 for manipulation check algorithm; F(3, 298) = 61.95, p < .001, η² = .38 for manipulation check human; F(3, 298) = 8.50, p < .001, η² = .08 for manipulation check justifying information). For the manipulation check algorithm, ratings were significantly higher under the algorithm conditions than under the human conditions as expected (MA = 4.41, SDA = 0.85; MH = 2.71, SDH = 1.24; F(1, 298) = 198.10, d = −1.63, p < .001). For the manipulation check human, ratings were significantly higher under the human conditions than under the algorithm conditions (MA = 2.43, SDA = 1.21, and MH = 4.12, SDH = 0.92; F(1, 298) = 183.16, d = 1.56, p < .001). This indicates that our decision-maker manipulation was effective. For the manipulation check justifying information, the mean of the groups with a manipulated reason (M = 3.75, SD = 0.88) was significantly higher compared to the mean of the control groups without a given reason (M = 3.44, SD = 0.92; F(1, 298) = 9.00, d = −0.35, p = .003). Table 3 displays the means, standard deviations, internal consistencies, and zero-order correlations for the main variables.

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Algorithm (1) vs. human (0) | 0.54 | 0.50 | - | | | | | | | |
| 2. Reason (1) vs. control (0) | 0.50 | 0.50 | .01 | - | | | | | | |
| 3. Fairness | 3.33 | 0.95 | −.28*** | .03 | - | | | | | |
| 4. Organizational attractiveness | 3.32 | 0.89 | −.15* | −.01 | .66*** | - | | | | |
| 5. Discrimination experience | 1.95 | 1.47 | .09 | −.02 | −.09 | .03 | - | | | |
| 6. Female^a | 0.61 | 0.49 | −.00 | −.10 | .00 | .03 | −.04 | - | | |
| 7. Age | 36.12 | 13.45 | −.01 | .12* | −.01 | −.00 | .16** | −.10 | - | |
| 8. Education^b | 3.57 | 1.27 | .05 | .05 | −.07 | −.11 | .04 | −.00 | .08 | - |
| 9. Non-White^c | 0.33 | 0.47 | .03 | −.05 | −.00 | .10 | .01 | .07 | −.31*** | −.00 |

- Note: N = 302.

- Abbreviations: M, mean; SD, standard deviation.

- a 0 = male; 1 = female.

- b Education: 1 = no degree, 2 = high school degree (or similar), 3 = professional degree, 4 = bachelor's degree, 5 = master's degree, 6 = doctorate degree.

- c Non-White: 0 = White, Caucasian, European, not Hispanic; 1 = all other ethnic groups.

- * p < .05;

- ** p < .01;

- *** p < .001.

5.2.2 Hypothesis tests

We first tested whether algorithms had a direct effect on both outcomes with ANOVA and planned contrast effects. As expected in H1a, fairness perceptions differed significantly across groups (F(3, 298) = 9.72, p < .001, η² = .09) with significantly lower values in the algorithm groups (M = 3.08, SD = 0.92) than in the human groups (M = 3.61, SD = 0.92; F(1, 298) = 25.14, d = 0.58, p < .001). ANOVA also revealed significant differences in organizational attractiveness across groups (F(3, 298) = 2.91, p = .035, η² = .03). Results of planned contrasts showed that organizational attractiveness was significantly lower in the algorithm conditions (M = 3.20, SD = 0.86) than in the human conditions (M = 3.46, SD = 0.91; F(1, 298) = 6.49, d = 0.29, p = .011), as hypothesized in H1b.
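The effect sizes reported for these contrasts can be approximated from the group means and standard deviations alone. The sketch below assumes that d is the mean difference divided by the pooled standard deviation of the two groups (the text does not specify the exact formula used) and takes the group sizes of 140 (human) and 162 (algorithm) from Section 5.1.2. The function name is hypothetical.

```python
from math import sqrt


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Cohen's d using the pooled standard deviation of two independent groups."""
    pooled_variance = (
        (n_1 - 1) * sd_1**2 + (n_2 - 1) * sd_2**2
    ) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / sqrt(pooled_variance)


# Group sizes as reported for the decision-maker manipulation in Study 2.
N_HUMAN, N_ALGORITHM = 140, 162

# Human minus algorithm, using the rounded means and SDs reported above.
d_fairness = cohens_d(3.61, 0.92, N_HUMAN, 3.08, 0.92, N_ALGORITHM)
d_attractiveness = cohens_d(3.46, 0.91, N_HUMAN, 3.20, 0.86, N_ALGORITHM)

print(f"fairness d = {d_fairness:.2f}")
print(f"organizational attractiveness d = {d_attractiveness:.2f}")
```

With these inputs, the recomputed values round to the reported d = 0.58 and d = 0.29, so the pooled-SD assumption is at least consistent with the published figures.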

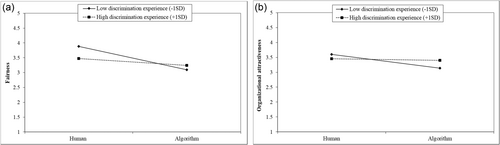

As in Study 1, we ran multiple regression analyses to test H2a and H2b. The results are displayed in Table 4. In the first model, we entered dummies for both manipulations, discrimination experience, and our control variables. In the second model, we also entered the interaction term of the algorithm manipulation and discrimination experience. The interaction was significant for fairness (H2a; b = 0.19, p = .012) and organizational attractiveness (H2b; b = 0.14, p = .048). Figure 2 displays the plots of these interactions modeled at –1 SD below and +1 SD above the mean of the moderator. Simple slope analyses revealed that the relationship between seeing the algorithm (instead of the human) and fairness was significantly negative for applicants with lower levels of discrimination experiences (b = −0.78, t = −5.22, p < .001), but not significant for applicants with higher levels of discrimination experiences (b = −0.24, t = −1.57, p = .118). The effect of algorithms on organizational attractiveness was significantly negative for applicants with lower levels of discrimination experiences (b = −0.46, t = −3.23, p = .001), and close to zero for applicants with higher levels of discrimination experiences (b = −0.05, t = −0.37, p = .709).

| Predictors | Fairness, Model 1 | Fairness, Model 2 | Fairness, Model 3 | Organizational attractiveness, Model 1 | Organizational attractiveness, Model 2 | Organizational attractiveness, Model 3 |
|---|---|---|---|---|---|---|
| | b (SE) | b (SE) | b (SE) | b (SE) | b (SE) | b (SE) |
| (Intercept) | 3.69*** (0.25) | 3.68*** (0.24) | 3.69*** (0.25) | 3.54*** (0.23) | 3.53*** (0.23) | 3.54*** (0.24) |
| Female^a | 0.00 (0.11) | −0.01 (0.11) | 0.00 (0.11) | 0.05 (0.11) | 0.04 (0.10) | 0.05 (0.11) |
| Age | 0.00 (0.00) | −0.00 (0.00) | 0.00 (0.00) | 0.00 (0.00) | 0.00 (0.00) | 0.00 (0.00) |
| Education^b | −0.04 (0.04) | −0.04 (0.04) | −0.04 (0.04) | −0.07 (0.04) | −0.07 (0.04) | −0.07 (0.04) |
| Non-White^c | 0.01 (0.12) | 0.02 (0.12) | 0.01 (0.12) | 0.21 (0.11) | 0.22 (0.11) | 0.21 (0.11) |
| Algorithm (1) vs. human (0) | −0.52*** (0.11) | −0.51*** (0.11) | −0.50*** (0.11) | −0.26* (0.10) | −0.26* (0.10) | −0.26* (0.10) |
| Reason (1) vs. control (0) | 0.07 (0.11) | 0.11 (0.11) | 0.07 (0.11) | −0.00 (0.10) | 0.02 (0.10) | −0.00 (0.10) |
| Discrimination experience | −0.04 (0.04) | −0.14** (0.05) | −0.07 (0.05) | 0.02 (0.04) | −0.05 (0.05) | 0.02 (0.05) |
| Algorithm × discrimination experience | | 0.19* (0.07) | | | 0.14* (0.07) | |
| Reason × discrimination experience | | | 0.06 (0.07) | | | 0.01 (0.07) |
| R² | .09 | .10 | .09 | .05 | .06 | .05 |

- Note: N = 302.

- a 0 = male; 1 = female.

- b Education: 1 = no degree, 2 = high school degree (or similar), 3 = professional degree, 4 = bachelor's degree, 5 = master's degree, 6 = doctorate degree.

- c Non-White: 0 = White, Caucasian, European, not Hispanic; 1 = all other ethnic groups.

- * p < .05;

- ** p < .01;

- *** p < .001.

Next, we also tested the moderation effects hypothesized in H3a and H3b with multiple regression analyses. In model 3, we added the interaction term of the justifying information manipulation and discrimination experience to the variables included in model 1. The interaction effect was significant neither for fairness (b = 0.06, p = .384) nor for organizational attractiveness (b = 0.01, p = .837). Thus, H3a and H3b are not supported.

5.2.3 Additional analyses

We also tested H2a, H2b, H3a, and H3b without including control variables. The results were robust to these changes. As in Study 1, we tested whether the effect of algorithms on fairness and organizational attractiveness varies with applicants' demographic backgrounds. Results are displayed in Supporting Information: Table S2. We did not find that reactions to algorithms differ significantly between individuals based on their gender, education, or ethnicity. We only found a significant moderation effect of age in such a way that the negative effect of algorithms on fairness perceptions was significantly stronger for younger (b = −0.77, p < .001) than for older applicants (b = −0.26, p = .089). Supporting Information: Figure S1 displays the plots of this interaction modeled at –1 SD below and +1 SD above the mean of the moderator.

In additional regression analyses, we also looked at different facets of justice, as suggested by Colquitt (2001). Results, displayed in Supporting Information: Table S3, showed significant and negative main effects of the algorithm (Models 1) on informational justice and distributive justice. The interaction effects between discrimination experience and algorithms (Models 2) were significant for all justice dimensions. Simple slope analyses further showed that the relationship between seeing the algorithm (instead of the human) and procedural justice was significantly negative for applicants with lower levels of discrimination experiences (b = −0.24, t = −2.47, p = .014), but not for applicants with higher levels of discrimination experiences (b = 0.03, t = 0.35, p = .727). The relationship between algorithm and interpersonal justice was negative, but not significant for applicants with lower levels of discrimination experiences (b = −0.16, t = −1.52, p = .129) and positive, but not significant for applicants with higher levels of discrimination experiences (b = 0.20, t = 1.76, p = .079). The effect of algorithms on informational justice was significantly negative for applicants with lower levels of discrimination experiences (b = −0.29, t = −3.17, p = .002), and close to zero for applicants with higher levels of discrimination experiences (b = 0.02, t = 0.24, p = .810). Algorithms had a negative effect on distributive justice that was only significant for applicants with lower levels of discrimination experiences (b = −0.56, t = −4.14, p < .001), but not for applicants with higher levels of discrimination experiences (b = −0.09, t = −0.68, p = .500). Supporting Information: Figure S2 displays the plots of these significant interactions modeled at –1 SD below and +1 SD above the mean of the moderator. 
Entering the interaction terms between the justifying information manipulation and discrimination experience (Models 3), we found a significant positive interaction effect on informational justice. Simple slope analyses showed that the effect of justifying information on informational justice was not significant for applicants with lower levels of discrimination experiences (b = −0.15, t = −1.63, p = .103) and also not significant for applicants with higher levels of discrimination experiences (b = 0.16, t = 1.78, p = .076). This interaction modeled at –1 SD below and +1 SD above the mean of the moderator is displayed in Supporting Information: Figure S3.

In our hypotheses development, we reasoned that discrimination experiences may moderate the effect of algorithms on fairness perceptions because applicants who experienced discrimination may expect that the rules of consistency, bias suppression, accuracy, and/or ethicality will be violated if humans make the selection decision. Thus, we further refined our analyses and ran multivariate regressions to test the effects on the procedural justice rules. In these analyses, the seven items (rules) of procedural justice were entered simultaneously as dependent variables. Results from these analyses are displayed in Supporting Information: Table S4. The analyses showed that the interaction between the algorithm and discrimination experience had a significant effect on consistency and ethicality. The interaction term was not significant for any of the other procedural justice rules. Supporting Information: Figure S4 displays the plots of the significant interactions. Simple slope analyses further showed that the effects of algorithms on consistency were significantly negative for applicants with lower levels of discrimination experiences (b = −0.31, t = −2.83, p = .005), but not for applicants with higher levels of discrimination experiences (b = 0.01, t = 0.07, p = .947). The relationship between algorithms and the ethicality item was negative and significant for individuals with low levels of discrimination experience (b = −0.39, t = −2.75, p = .006) and not significant for individuals with higher levels of discrimination experiences (b = 0.02, t = 0.12, p = .908).

6 DISCUSSION

Our findings from two experimental studies indicate that applicants perceive selection procedures as less fair and organizations as less attractive when organizations communicate that they use algorithmic compared to human decision-makers. However, our results also reveal that applicants' prior discrimination experiences at work explain differences in perceptions and influence whether they see algorithms as either a boon or bane for their own chances of being treated fairly in the selection process. In both experiments, discrimination experiences lowered the negative effects of algorithms on the perceptions of fairness and organizational attractiveness in such a way that victims of discrimination are indifferent between algorithmic and human decision-makers. It seems that those who have not experienced much discrimination crave human interaction, while those who have experienced discrimination place a bit of hope in algorithms.

Post hoc analyses revealed that applicants with low or no levels of discrimination experiences at work perceive more procedural, interpersonal, informational, and distributive injustice when they are informed that algorithms make selection decisions. The procedural justice rules, especially the rules of consistency and ethicality, seem to be violated for these individuals. However, applicants with high levels of discrimination experiences rated algorithms as positively as human decision-makers in terms of these justice dimensions and individual justice rules. We also assumed that algorithms are perceived to be less biased and more accurate by those who experienced discrimination. However, our results did not support this. It might be that some applicants, irrespective of their discrimination experience, believe that algorithms can generate as much or more discrimination when they are trained on biased data, for example, due to input data from nonrepresentative samples (Köchling & Wehner, 2020; Tippins et al., 2021).

We further hypothesized that individuals who have experienced more discrimination in their past are more receptive (in terms of fairness and organizational attractiveness) to an organization's justifying information of using a more objective decision-maker in selection. However, the results from our Study 2 did not support these hypotheses. There are several potential reasons for this. It is possible that justifying information is interpreted as a hollow promise and that no real action will be taken by the company. Employees are used to seeing equal employment opportunity statements at the bottom of application forms and may not pay attention to such wording if they believe it is a line included in every job posting template. Interestingly, we found a slightly stronger effect of the algorithmic manipulation (d = −0.43, p < .001) than of the justifying information manipulation (d = −0.35, p = .003) on the manipulation check justifying information. Applicants may think that using an algorithm is more objective than just telling applicants that the decision-maker in the selection process will prevent biases and prejudices. The small effect of our justifying information manipulation on the respective manipulation check could also indicate that the justifying information manipulation was too weak in our study or that applicants, in general, do not believe that experience of managers leads to better or more objective decision-making (Highhouse, 2008). Furthermore, this kind of justifying information during the selection process might be unusual for all applicants, irrespective of their past experience with discrimination. Therefore, perhaps applicants were unsure how to process this information, resulting in similar effects across all individuals.

6.1 Theoretical contributions

The present study makes three main contributions to the literature. First, we advance the emerging literature on algorithms in HR management. In line with most prior research, we show that, on the whole, (prospective) employees perceive algorithm-based compared to human-based HR decisions more negatively. However, we make an important extension to the literature, because we demonstrate that the negative effect is diminished among applicants with prior experiences of discrimination. The fact that individuals' reactions to algorithms vary with their prior experiences might explain why previous research is inconclusive and provided evidence for opposing (or insignificant) effects. It is likely that other (so far unnoticed) experiences also matter when people are exposed to algorithms. Our study reveals that it is important to consider and further examine employees' prior experiences and backgrounds. Taken together, we respond to the recent call for research explaining how new technologies in HR management are perceived and why different individuals react differently to their usage (Kim et al., 2021).

Second, the present study also has consequences for the organizational justice and fairness literature. Our findings highlight the importance of looking at the perceived fairness of algorithms in the selection and of considering different lines of argumentation and interindividual differences. In accordance with previous work (Ötting & Maier, 2018; Schlicker et al., 2021), our findings underline that nonhuman characteristics of the decision-making agent play a role in building fairness perceptions (Marques et al., 2017). Because new technologies such as more complex machine-learning algorithms provide mounting opportunities for selection decisions, we want to emphasize that it is essential to add nonhuman representatives to existing theoretical models. Moreover, the findings from our post hoc analyses demonstrate that it is not only important to look at overall fairness perceptions but also at different facets of justice and single justice rules. Considering different lines of argumentation based on different facets of justice and justice rules (e.g., consistency and ethicality vs. correctability) is particularly relevant since this explains why some people perceive algorithms as less fair than others (e.g., applicants with low vs. high experiences with discrimination).

Third, we make an important contribution to the discrimination literature by showing that victims of perceived work-related discrimination are more open towards algorithmic decision-makers and believe that this new technology will treat them just as fairly as a traditional human decision-maker. Only recently have researchers highlighted the need for more research on how HR practices can help to diminish discrimination (Triana et al., 2021). Our study adds to our understanding of how a specific HR practice alters candidate reactions to a selection tool. Individuals who experienced prior discrimination at work seem willing to take their chances with an algorithm. Their previous bad experiences of perceived discrimination in a work setting mitigate the fear or doubt that many people have when they encounter an algorithm as a decision-maker in a selection process. Even though prior research on reactions to algorithms shows that people find them to be impersonal, people who have experienced discrimination in the past rated the fairness of the algorithm to be equally high compared to a human decision-maker.

6.2 Practical implications

The results suggest that practitioners should be cautious when informing applicants about the use of algorithms in the selection process, as this information could potentially harm fairness perceptions and organizational attractiveness. However, our findings further reveal that people who have experienced discrimination at the hands of others will accept an algorithmic decision-maker in selection just as much (i.e., perceived fairness and organizational attraction are just as high) as a human decision-maker. Research on perceived discrimination at work shows that employee reactions to organizations vary depending on their racial/ethnic backgrounds and their own experiences with discrimination (Triana et al., 2010). Thus, advertising the use of algorithms in selection might be beneficial for targeted recruiting of under-represented groups in organizations who may be attracted to algorithmic decision-makers as a way of creating a fair and level playing field for them to be treated the same as social majority group members.

Our results also imply that only telling applicants that the organization uses a certain selection procedure to arrive at more objective decisions may not be enough to appeal to applicants with prior experiences of discrimination. Rather, it seems important that companies take action and change traditional procedures and patterns of biases in selection. Equal employment opportunity statements have been used so ubiquitously in job postings that applicants may ignore that language today if the words seem hollow. Therefore, to be perceived favorably, our findings suggest that organizations must use actions and implement fair selection procedures to be compelling and to be rated as fair and attractive employers.

These implications will gain importance in the near future because current attempts at new rules and legislation on AI tend to put great emphasis on transparency for stakeholders, including applicants in employee selection. Examples include the proposed Artificial Intelligence Act in the European Union (European Union, 2022) or initiatives by the New York City Department of Consumer and Worker Protection for new legislation related to the use of automated employment decision tools (New York City Department of Consumer and Worker Protection, 2022).

6.3 Limitations and future directions

We acknowledge that the present work has limitations. Our results might be affected by a sample selection bias. It is likely that participants who agreed to be video-recorded might be more open to new technologies and have an open mind about algorithms. Consequently, our results are quite conservative, and the negative effect of algorithms might be even stronger among all applicants. Furthermore, our experiments are based on hypothetical hiring situations. Although we used job-seekers as participants in Study 1 and participants went through a whole digital interview in both studies, participants did not apply for a real job. When applicants think about real organizations with an established image and reputation, the characteristics of the selection process could be less influential on the applicants' perceptions of the organization as a whole. Consequently, the relationship between algorithm-based decisions and general organizational attractiveness might be overestimated in our experiments. In contrast, it is also likely that the effects are even stronger in the field when applicants have to bear the consequences of the selection decision. Hence, we encourage future research to replicate our findings in field experiments or with a broader population of potential candidates.

Future research might look at boundary conditions that affect the relationship between algorithmic versus human decisions and fairness perceptions or organizational attractiveness. One possibility would be to study different conditions and interventions that might affect the strength of these relationships (e.g., communication medium, transparency). Furthermore, it might be interesting to alter the justifying information that we provided in Study 2 to further explore the reasons for our nonfindings.

In addition, our analysis illustrated high variances in the perceptions of applicants in the algorithmic treatment group, suggesting that some individuals may value the use of algorithms in selection, while others may have a strong aversion to algorithms. In our studies, discrimination experiences explained some of the variance. While our measure covered different types of discrimination (e.g., due to gender, age, and ethnicity), post hoc analyses showed that the demographic background of applicants alone does not explain much variance. We only find a significant moderation effect of age in such a way that the negative effect of algorithms on fairness perceptions is significantly lower for older applicants. This pattern of results is consistent with two studies concluding that overall older workers experience more discrimination than younger workers (Gordon & Arvey, 1986; Posthuma & Campion, 2009). Future research might try to further disentangle whether some types of discrimination experiences are more relevant than others. Investigating which other interindividual differences (e.g., trust in technology, experiences with algorithms) account for dissimilar perceptions would be another fruitful avenue for future studies.

ACKNOWLEDGMENTS

This work was supported by the Julius Paul Stiegler Memorial Foundation (grant number €1000 for conference participation). Open Access funding enabled and organized by Projekt DEAL.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.