Framework and operationalisation challenges for quantitative comparative research in higher education

Abstract

The increasing availability of data on higher education systems, institutions and their members creates new opportunities for comparative research adopting a quantitative approach. The value of future studies crucially depends on the capability to recognise and address some major methodological challenges existing in quantitative comparative research in higher education. The higher education context in fact presents specific features that can hinder comparisons, and the political and social processes of recent decades have further increased its complexity. This article discusses key challenges currently met in quantitative comparative research in higher education when developing the conceptual framework and operationalising the research. It also discusses possible solutions and the value of configurational and multilevel analytical approaches for identifying meaningful objects of comparison, taking into account the complexity of the higher education context, and identifying causal relationships.

Summary

The increasing availability of data concerning higher education systems, institutions and their staff provides new opportunities for comparative research with a quantitative approach. The value of future studies will, to a large extent, depend on their ability to recognise and address the important methodological challenges found in quantitative comparative research on higher education. The higher education context can have characteristics that hinder comparison, which has been further reinforced by political and social processes in recent decades. This article discusses central challenges met in quantitative comparative research on higher education during the development of the methodological conceptual framework and in the operationalisation of research, in order to discuss possible solutions and the value of configurational and multilevel analytical approaches for identifying meaningful objects of comparison, given the complexity of the higher education field, and for identifying causal relationships.

1 INTRODUCTION

In recent decades, technological, political and managerial transformations have changed the landscape for comparative research in higher education, unveiling new opportunities and new challenges.

Technological progress with respect to software, computing power, digitalisation, connectivity and communication has made it easier to retrieve, store and analyse data. Moreover, the global competition for prestige, growing demands for accountability and efficient use of resources, as well as the introduction of new steering instruments and the professionalisation of administrative staff, have increased the need and capability of higher education institutions (HEIs) to collect information (Chirikov, 2013; Mathies & Välimaa, 2013). In parallel, university associations increasingly gather information on their members, and national datasets have become more accessible thanks to the Internet, and more comparable through the standardisation of indicators. International organisations such as the Organisation for Economic Co-operation and Development (OECD) and the European Union (EU) have also played important roles in creating and widely disseminating multinational datasets. As a result of these processes, scholars now have at their disposal an unprecedented wealth of data on higher education systems, institutions, scientists and students, as well as computing instruments and power to analyse them.

While the greater availability of data¹ and analytical tools creates new opportunities for quantitative comparative research and favours a shift from a ‘case-oriented’ towards a ‘variable-oriented’ approach (Bleiklie, 2014), each higher education system still possesses peculiar features which can hinder comparisons between countries, as well as between HEIs and individuals in different systems. Moreover, the political and social processes of recent decades have made the higher education context more complex to analyse. Namely, globalisation, modernisation policies and multilevel governance have spurred new competitive and institutional forces that operate beyond and within the traditional national boundaries, forcing comparative thinkers to adopt more sophisticated frameworks.

The goal of this article is therefore to address some key challenges currently met in quantitative comparative research in higher education. The structure of the article reflects key phases in developing a quantitative study: (a) setting the framework, (b) operationalisation and (c) analysis. Quantitative research and comparative research in the social sciences each display distinctive challenges, e.g., of validity and reliability vis-à-vis conceptual equivalence. This article focuses on the distinct challenges emerging in higher education research when quantitative and comparative approaches are combined. The first section examines common issues related to the development of a conceptual framework for a quantitative comparative study in higher education, namely in identifying meaningful objects of comparison and taking into account the complexity of the higher education context. The second section elaborates on the key challenges emerging in research operationalisation, whereas the third section discusses the usefulness of two analytical approaches (configurational and multilevel) in addressing the challenges discussed as well as in detecting causal relationships.

2 THE FRAMEWORK OF A QUANTITATIVE COMPARATIVE STUDY IN HIGHER EDUCATION

Developing the conceptual framework of a quantitative comparative study raises specific challenges for the higher education researcher when it comes to defining the objects of comparison² and the factors that matter for the phenomenon under examination.

While comparative research explores a given phenomenon in different macrosocial contexts, like countries, societies, nations or regions (Teichler, 1996), and uses attributes of the macrosocial contexts as potential explanatory variables (Ragin, 2014), the object of comparison is not necessarily a macro-level phenomenon. It may well be, for instance, a phenomenon at the meso level (organisational level) or micro level (individual level) (Bleiklie, 2014), such as HEIs’ or departments’ strategies, or students’ satisfaction in different countries. In addition, variables at the macro level often derive from the aggregation of organisational and individual data, like a country’s number of HEIs or total scientific production. Therefore, a comparative higher education study entails not only defining the macrosocial units being compared (e.g., which higher education systems or countries), but also deciding which organisations and/or individuals must be considered, and ascertaining the implications of different sampling decisions.

Decisions regarding which HEIs must be considered for the comparative analysis, namely the definition of the institutional perimeter, bear great importance and should be carefully pondered (Huisman, Lepori, Seeber, Frølich, & Scordato, 2015). HEIs are commonly defined as institutions awarding degrees at least at the bachelor level. However, systems greatly differ in the types of organisations they comprise and their relative proportions, for instance according to their legal status (public or private), type (universities and universities of applied sciences), and functions (teaching only vs. teaching and research). For example, Spain is a unitary system including only universities that all have research activity as a core task, whereas Belgium is a binary system including universities of applied sciences, for which research is not a core task, as well as universities, which are strongly research-oriented. Hence, a study comparing the research quality of HEIs in the two countries will provide very different results depending on whether both kinds of institutions or only universities are considered for Belgium. Moreover, a comparative study may wish to consider not only HEIs that formally belong to the national higher education system, but all institutions located in a country, including for example private HEIs that do not award state-recognised degrees. Comparability issues may also emerge due to outliers, namely institutions that display peculiar traits and whose inclusion or exclusion can strongly impact the results (Huisman et al., 2015).

Systems may also differ regarding the definition of the individual members. For example, PhD students employed as teaching assistants or research assistants are included among academic staff in some systems and not in others (Lepori et al., 2018). Thus, among the HEIs included in the European Tertiary Education Register (ETER),³ British and German HEIs exclude PhD students from the academic staff count, whereas Dutch universities include them (Lepori et al., 2018). Such differences can distort comparisons, for example on gender balance or the internationalisation of the academic staff, since the share of female and foreign staff tends to be much larger among PhD students than among professorial staff. The researcher should therefore ascertain the attributes held by the same units in different systems and, where necessary, remedy the differences that may distort the comparison (in the case of comparing the internationalisation of academic staff, for instance, by excluding PhD students from the pool of the academic staff).

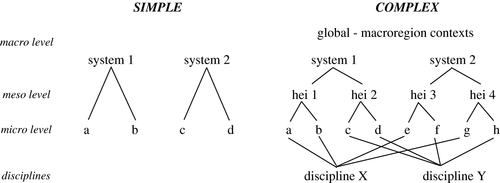

Beyond clarifying the objects of comparison, a second pivotal step is to identify the factors that matter for the phenomenon of interest, for example by focusing on relationships between macro-level constructs (e.g., investment in the higher education system and the system’s educational output), macro-level and meso-level constructs (e.g., system policies and universities’ performance, or vice versa), as well as between macro-level and micro-level constructs (e.g., systems’ policies and teachers’ behaviours, or vice versa). In this regard, comparative higher education research presents specific and increasing challenges due to the existence of multiple contexts in which individuals, organisations and systems are embedded and intertwined. First, higher education has long been regarded as a peculiar social setting, characterised by strong national differences and the prominence of scientific disciplines’ systems of beliefs, goals and social structures, which differ from each other and cut across national systems (Clark, 1983; Whitley, 2000). Second, while HEIs have long been known to operate as loosely coupled structures with little ability to influence their members’ behaviour and shape their environment, global competition and modernisation policies have spurred universities to actively steer their members, and develop clear boundaries and strategies (Brunsson & Sahlin-Andersson, 2000; Krücken & Meier, 2006; Seeber et al., 2015). Third, globalisation processes and the emergence of multilevel governance approaches have created new institutional and competitive forces beyond and within the national level (Enders, 2004; Jones & Oleksiyenko, 2011; Maassen & Olsen, 2007).

In turn, comparative higher education studies that focus primarily on system differences, or system and individual level variables and their relationships, are no longer sufficient (Kosmützky, 2015). Comparative higher education research must now take into account the multiple embeddedness of individuals, within disciplines and HEIs that possess distinctive values and objectives, and the multiple embeddedness and interactions of HEIs with local, national and supranational contexts conveying different competitive and institutional pressures (Frølich, Huisman, Slipersæter, Stensaker, & Bótas, 2013; Hüther & Krücken, 2016; Marginson, 2006; Seeber, Cattaneo, Huisman, & Paleari, 2016). Neglecting such complexity may threaten the validity of the comparative study.

By way of example, Figure 1 juxtaposes a simple conceptualisation of the higher education context (left side) and a complex one (right side). The former only considers that micro-level units (like students or scientists, represented by letters) are embedded into different higher education systems. The latter also considers that individuals are simultaneously embedded into different HEIs and disciplines, with distinct norms and pressures, and that HEIs are themselves embedded into different systems and supranational contexts, such as European universities in the European Higher Education Area (EHEA).

3 OPERATIONALISATION: CHALLENGES FOR COMPARATIVE HIGHER EDUCATION

Quantitative social science aims to test hypotheses on relationships (correlational or causal) between variables (Ragin, 2014). Testing these relationships requires a process of operationalisation, whereby abstract phenomena are represented via concepts and operational definitions (i.e., indicators) that allow them to be measured and quantified.

Operationalisation’s main challenge is to provide suitable representations of the phenomena being investigated. At the same time, comparative research requires that concepts and indicators can be meaningfully employed across different contexts.

3.1 Concepts

The phenomena of central interest to higher education researchers are often multidimensional and abstract (like student satisfaction, teaching quality, research productivity), and are therefore more complex to operationalise than unidimensional and concrete phenomena (such as the number of students). Defining concepts, their attributes and meanings is not straightforward for multidimensional and abstract objects of analysis. This problem is heightened by the fact that the field of higher education research hosts scholars with different research backgrounds, who may have different ideas about how a given construct should be conceptualised. For instance, scholars comparing universities’ efficiency in education often conceptualise educational output quantitatively, in terms of the number of students enrolled and/or degrees produced (e.g., Abbott & Doucouliagos, 2003; Johnes, 2006). Some researchers may find this conceptualisation insufficient, because it ignores that the cost of producing a degree differs greatly across disciplines and does not consider the quality of the educational output or the students’ improvement vis-à-vis their initial capabilities. More detailed conceptualisations may then include the cost and quality of education, with operational definitions consisting of the standard cost for producing each graduate in different disciplines or students’ test scores (e.g., Witte & López-Torres, 2017).

In choosing among possible conceptualisations, researchers should take into consideration not only which one better represents the underlying phenomenon, but also whether it is suitable for comparing systems. In this respect, a major challenge is that the key attributes of concepts employed in higher education research often differ across systems. This is well illustrated by the previously mentioned example of academic staff. Namely, while ETER defines academic staff through combinations of a role (staff, PhD students) and activities (research, teaching, public service),⁴ variations exist across systems in PhD students’ teaching and research duties that affect whether or not they are included among the academic staff.

A related challenge emerges when concepts developed in one context are used in new contexts, where the original definition may be unsuitable. Higher education scholars will meet this problem more frequently in the future, as data availability extends the possibility of comparative studies to new systems. It is then important to review this problem and possible solutions with an example.

In a study of the internationalisation activities (IA) of European HEIs, Seeber, Meoli, and Cattaneo (2018) conceptualised three types of HEIs’ internationalisation profile: basic, academic and entrepreneurial. Entrepreneurial HEIs, for example, develop both IA aimed at improving research and teaching and IA aimed at attracting resources. Let us suppose a researcher aims to investigate HEIs’ IA in African countries by using the conceptualisations extrapolated from the analysis of European HEIs. This is potentially problematic, as all HEIs in the new context may belong to one type (in which case the conceptualisation is not helpful in discerning differences), or HEIs may display portfolios of IA that do not fit the existing conceptualisations, such as a hypothetical portfolio comprising only IA aimed at attracting resources. One option is to run the clustering analysis with the new sample and identify new concepts and their defining attributes. However, the risk of a ‘sample-dependent’ approach (Cooper & Glaesser, 2011; Kent, 2015) is that the emerging concepts may have little in common with the original ones, meaning comparisons with the European context are unfeasible. A second option is to start from the defining attributes of the original concepts and adapt them, resulting in the typical challenge of comparative studies of managing the trade-off between specification and abstraction, between system-specific and system-inclusive concepts (Smelser, 1976). In pursuing abstraction, the researcher will relax the constraints of the original definition, for example by including new attributes or by considering units that only possess a few of the defining attributes as members. The potential downside is conceptual stretching (Sartori, 1970), namely that the boundaries of the concept become so blurred that it loses its original meaning. An alternative solution is to add new ‘defining attributes’ (such as recruiting international research talent) and include both the original and the new defining attributes.

In any case, decisions upon a concept’s attributes and meaning (its intension) are intertwined with decisions regarding the set of entities to which the concept should apply (its extension; namely, what is to be considered an entrepreneurial HEI in internationalisation?). Given the set of defining attributes, different approaches exist to determine the extension. For example, in the family resemblance approach, the members are those units that have at least one or some attributes in common with every other unit. In the radial approach, a prototype member is defined—e.g., by having all attributes—and real members must possess a certain number of attributes of the prototype (Collier & Mahon, 1993).
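The two ways of determining a concept's extension described above can be illustrated with a minimal sketch. The HEI names, attribute labels and thresholds are hypothetical and chosen only for illustration, and the family resemblance check is a literal, one-pass reading of the description given here rather than a definitive implementation of Collier and Mahon's approach.

```python
from typing import Dict, Set

# Hypothetical HEIs and the defining attributes each one displays.
UNITS: Dict[str, Set[str]] = {
    "hei_a": {"research_ia", "teaching_ia", "resource_ia"},
    "hei_b": {"research_ia", "teaching_ia"},
    "hei_c": {"resource_ia"},
    "hei_d": {"teaching_ia", "resource_ia"},
}

# Hypothetical prototype member holding all defining attributes.
PROTOTYPE: Set[str] = {"research_ia", "teaching_ia", "resource_ia"}


def radial_members(units: Dict[str, Set[str]], prototype: Set[str],
                   min_shared: int) -> Set[str]:
    """Units sharing at least `min_shared` attributes with the prototype."""
    return {name for name, attrs in units.items()
            if len(attrs & prototype) >= min_shared}


def family_resemblance_members(units: Dict[str, Set[str]],
                               min_shared: int = 1) -> Set[str]:
    """Units sharing at least `min_shared` attributes with every other unit."""
    members = set()
    for name, attrs in units.items():
        others = [a for other, a in units.items() if other != name]
        if others and all(len(attrs & o) >= min_shared for o in others):
            members.add(name)
    return members


radial = radial_members(UNITS, PROTOTYPE, min_shared=2)
family = family_resemblance_members(UNITS)
print(sorted(radial), sorted(family))
```

With these invented data the two rules yield different extensions for the same set of defining attributes, which is the point made in the text: the choice of approach is itself a decision that shapes the comparison.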

In sum, applying a concept in a new context should achieve a suitable balance between generalisation and ability to represent local differences. No single approach is always preferable to the others in this regard. Rather, the researcher should weigh the pros and cons in terms of the resemblance of the new concept vs. the original concept, its usefulness in describing the new context, and for developing broader comparisons.

3.2 Indicators

Indicators represent operational definitions of a concept, which allow its measurement. Comparative researchers should develop or rely on indicators—and related measures—that are not biased and that are equivalent (He & van de Vijver, 2012).

Indicators are biased when affected by systematic measurement anomalies. An important precondition for meaningful comparisons is in fact that indicators and related measures are the same in different contexts. For example, data on personnel may be expressed in terms of head counts or full-time equivalents; financial data should be converted into the same currency or, given that exchange rates can vary rapidly, into purchasing power standards. In some cases, data need to be rearranged to allow for comparability. For example, some systems encompass three tenured academic ranks (assistant, associate and full professors, e.g., in the Netherlands), while others encompass two (e.g., in Norway, associate and full professors), so that researchers may need to re-elaborate classifications in order to make them comparable.⁵
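As a minimal sketch of this kind of harmonisation, the snippet below converts budgets into purchasing power standards (PPS) and head counts into full-time equivalents (FTE). The country labels, conversion factors, budget figures and the average employment fraction are all hypothetical; real factors would have to be taken from an official statistical source.

```python
from typing import Dict

# Hypothetical conversion factors: units of national currency per one PPS.
PPS_FACTORS: Dict[str, float] = {"country_a": 1.0, "country_b": 10.0}


def to_pps(amount_local: float, country: str) -> float:
    """Express an amount in national currency in PPS."""
    return amount_local / PPS_FACTORS[country]


def to_fte(head_count: int, average_fraction: float) -> float:
    """Approximate FTE from a head count and an average employment fraction."""
    return head_count * average_fraction


# Invented budgets in national currency: they look very different
# until they are expressed in the same unit.
budget_a = to_pps(500.0, "country_a")
budget_b = to_pps(4000.0, "country_b")
print(budget_a, budget_b)
```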

Indicators are equivalent when the resulting scores’ relationship to the whole construct that is measured is the same across systems. This property can be illustrated with an example. Only a small part of an iceberg is observable, while 90 per cent lies beneath sea level; yet, we can compare the size (phenomenon-construct) of icebergs (objects compared) through the small part that is visible (proxy-measure), because this remains in constant proportion to the whole for all icebergs.⁶

However, how can researchers design indicators that satisfy such properties and that can be safely employed for comparative research?

In many cases, the finer the operationalisation, the better the representation of the whole phenomenon, and the better the equivalence. For instance, a researcher aiming to compare systems’ research output can rely, as a proxy, on the number of articles included in the Web of Science (WOS) database. Yet in many countries monographs are still very common, or incentives reward publications indexed in the Scopus database rather than in WOS, so relying on WOS only will implicitly overestimate the production of systems that focus on that database compared to other systems. Instead, using a more comprehensive proxy that includes both types of publication archives will provide more meaningful comparisons.
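The effect of a narrow versus a more comprehensive proxy can be shown with a small sketch. The publication identifiers and the two systems are invented for illustration, and the de-duplication by a shared identifier is an assumption about how records from the two databases would be matched.

```python
from typing import Iterable


def output_count(*sources: Iterable[str]) -> int:
    """Count unique publications across one or more databases."""
    unique = set()
    for source in sources:
        unique.update(source)
    return len(unique)


# Hypothetical publication identifiers indexed for two systems.
system_a_wos = {"a1", "a2", "a3", "a4"}
system_a_scopus = {"a3", "a4", "a5"}
system_b_wos = {"b1"}
system_b_scopus = {"b1", "b2", "b3", "b4", "b5"}

wos_only = (output_count(system_a_wos), output_count(system_b_wos))
combined = (output_count(system_a_wos, system_a_scopus),
            output_count(system_b_wos, system_b_scopus))
print(wos_only, combined)
```

In this invented case, the WOS-only proxy suggests that system A produces four times as much as system B, whereas the combined proxy shows equal output.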

In other cases, increasing the precision/comprehensiveness of the indicator may not be necessary or desirable. This is true even for seemingly simple concepts that encompass a single and concrete dimension. A well-known example is the coastline paradox, formulated by the mathematician Benoît Mandelbrot (1967) as the puzzle ‘how long is the coast of Britain?’. This problem appears to be rather straightforward, but the length of the coastline depends on the scale of measurement: the shorter the yardstick, the longer the measured length, as the ruler is laid along a more curvilinear route. Hence, if the coast of Britain is measured with a yardstick of 200 km, it measures 2,400 km; if a 50-km yardstick is used, the coast measures 3,400 km, and so forth. However, as the yardstick becomes more and more precise and its length decreases towards zero, the measured length increases ad infinitum, leading to a measure that is of little empirical value. In higher education research this is a very common problem. For example, in order to measure the social impact of an HEI’s activities, a researcher can think of a very large number of indicators covering the many ways in which HEIs’ activities impact society. Yet one should wonder whether the increased precision also delivers measures that are superior for comparison and whether the gain is worth the cost and time needed for more data collection. In some cases, increased precision may even lead to measures that fare worse for comparative purposes. For instance, a researcher comparing systems’ performance in third mission activities can rely on the number of patents produced and the amount of resources attracted from third parties. However, HEIs in some countries may be accurate in collecting information about such revenues, while others may not be. In such cases, an indicator that is less representative of the whole, but based on more homogeneously collected data, will guarantee a stronger degree of equivalence.
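The dependence of measured length on the yardstick can be made concrete with a short sketch. It assumes the power-law form usually associated with the coastline paradox, L(e) = M * e**(1 - D), where e is the yardstick length and D a dimension parameter; this functional form is an assumption brought in for illustration, while the two data points are the ones quoted above.

```python
import math


def dimension_from_two_measures(step_a: float, length_a: float,
                                step_b: float, length_b: float) -> float:
    """Solve L(e) = M * e**(1 - D) for D given two (yardstick, length) pairs."""
    slope = math.log(length_a / length_b) / math.log(step_a / step_b)
    return 1.0 - slope


def predicted_length(step: float, ref_step: float, ref_length: float,
                     dimension: float) -> float:
    """Extrapolate the measured length to another yardstick length."""
    return ref_length * (step / ref_step) ** (1.0 - dimension)


# Figures quoted in the text: 2,400 km at 200 km and 3,400 km at 50 km.
d = dimension_from_two_measures(200.0, 2400.0, 50.0, 3400.0)

# Under the assumed power law, a yardstick four times shorter again
# (12.5 km) lengthens the coast by the same factor of 3400/2400.
length_12_5 = predicted_length(12.5, 50.0, 3400.0, d)
print(round(d, 3), round(length_12_5, 1))
```

Under this assumption the measured length keeps growing by a constant factor each time the yardstick is divided by four, which is why ever finer measurement does not converge on a single comparable value.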

It is often the case that full equivalence cannot be achieved, and the choice of the indicator will affect the comparison in favour of one system or another. In other words, indicators are rarely ‘neutral’ to the comparison. Once more, the example of the coast length is illustrative: because the coastlines of Russia and Norway are jagged to a different extent, the former may be longer or shorter than the latter depending on the yardstick’s length. In any case, researchers should avoid operationalisations that engender blatant comparative biases and acknowledge the consequences of indicators’ choices on comparison.

Decisions on whether and how to normalise the indicators are also very relevant for meaningful comparisons. For example, the number of universities from a country listed in university rankings has been used to compare the quality of higher education systems’ governance and funding policy. Since US universities dominate such rankings, it is frequently deduced that US governance and funding structures are the best and should be imitated by other systems (e.g., Aghion, Dewatripont, Hoxby, Mas-Colell, & Sapir, 2007). However, the number of HEIs in the ranking is largely affected by the sheer size of a country’s higher education system, for instance in terms of investment in research. Hence, when the number of HEIs in a university ranking is used to infer the quality of higher education governance, this number should at least be normalised according to proxies of a system’s size.⁷
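A minimal sketch of such a normalisation follows. The country labels, counts of ranked HEIs and research investment figures are invented, and research investment is used as the size proxy only because the text mentions it; other proxies could be equally defensible.

```python
from typing import Dict, List, Tuple

# Hypothetical data: (ranked HEIs, research investment in billions).
SYSTEMS: Dict[str, Tuple[int, float]] = {
    "country_x": (50, 100.0),
    "country_y": (10, 10.0),
}


def ranked_per_unit_of_size(systems: Dict[str, Tuple[int, float]]
                            ) -> Dict[str, float]:
    """Number of ranked HEIs divided by the chosen proxy of system size."""
    return {name: ranked / size for name, (ranked, size) in systems.items()}


def order_by(values: Dict[str, float]) -> List[str]:
    """Country names sorted from highest to lowest value."""
    return sorted(values, key=values.get, reverse=True)


raw_order = order_by({name: float(r) for name, (r, _) in SYSTEMS.items()})
normalised = ranked_per_unit_of_size(SYSTEMS)
normalised_order = order_by(normalised)
print(raw_order, normalised_order)
```

With these invented figures the raw count favours the larger system, while the normalised indicator reverses the order.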

Specific issues of comparability exist for indicators based on the judgement of experts and the perceptions of non-experts. Experts’ judgements or individual perceptions may in fact be consciously or unconsciously affected by the individual characteristics or role of the respondent, and by the context in which the respondent is embedded.

Expert-based indicators rely on judgements from people who have better knowledge or hold positions that allow them to give an accurate evaluation and information. Experts’ judgements are particularly valuable when it is impossible to observe, measure or experiment on the phenomenon in question, and when indicators based on concrete dimensions provide incomplete or questionable proxies (Benoit & Wiesehomeier, 2009). For example, the numbers of publications and citations provide a proxy of scientific output, yet the value of scientific production cannot be fully captured by numbers alone and judgements from reputed academics can provide valuable, complementary information. Indicators for measuring the decision-making power of different academic bodies provide another example. Proxies can be constructed based on universities’ statutes, which specify academic bodies’ formal powers. Yet some bodies or persons can have little formal decision-making power, but strong decision-making power in practice, thanks, for instance, to a favourable network position (Padgett & Ansell, 1993). In such a case, a survey of people holding insider positions, such as employees in the central administration, can provide more valid information on actual power than the information derived from official documents. In the case of experts’ judgement, comparability is at stake when respondents in different units display systematic differences in traits or roles. For instance, when asked if internationalisation is important for their institution, rectors may give systematically higher scores than deans. If systems differ in the proportion of respondents in the two roles, comparisons will be distorted. Therefore, experts should have similar characteristics in different systems, and/or the inferential analysis should include specific variables to take such effects into account.

For some constructs, there are no real experts with privileged information. This is the case, for example, for concepts that pertain to cognitive dimensions or dimensions which are socially constructed, such as an organisation’s culture, identity or quality of the working environment. These attributes are diffused through the organisation, possibly heterogeneously, so there is no privileged point of view from which to observe them. In this case, scholars develop surveys to collect perceptions from a variety of members of the units analysed (i.e., ‘non-experts’). For indicators derived from perceptions, a major threat to comparability occurs when the measure is biased towards the point of view of a specific subgroup. To be comparable, samples should be representative of the whole (system or organisation) population, along the individuals’ traits (such as rank, role, gender, age) and the variety of the relevant contexts in which they are embedded (such as department/discipline, and the type of HEIs in which they work). For example, academics working in a technical university/department may hold different views on the purpose of education than respondents from a university/department specialised in the humanities. When the sample is not representative of the whole population, the researcher can weight responses from different groups differently in order to rebalance their over- or under-representation in the sample (so-called ‘post-stratification’; Davern, 2008).
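The reweighting idea can be sketched as follows. The group labels, population shares and survey scores are hypothetical, and the weight used (population share divided by sample share) is the simplest form of post-stratification, shown here only as an illustration.

```python
from collections import Counter
from typing import Dict, List, Tuple


def post_stratified_mean(responses: List[Tuple[str, float]],
                         population_shares: Dict[str, float]) -> float:
    """Mean score with each group weighted by population share / sample share."""
    counts = Counter(group for group, _ in responses)
    n = len(responses)
    weights = {g: population_shares[g] / (counts[g] / n) for g in counts}
    weighted_sum = sum(weights[g] * score for g, score in responses)
    total_weight = sum(weights[g] for g, _ in responses)
    return weighted_sum / total_weight


# Hypothetical survey: technical staff are over-represented in the sample
# (3 of 4 respondents) but make up half of the population.
RESPONSES = [("technical", 4.0), ("technical", 4.0), ("technical", 4.0),
             ("humanities", 2.0)]
SHARES = {"technical": 0.5, "humanities": 0.5}

unweighted = sum(score for _, score in RESPONSES) / len(RESPONSES)
weighted = post_stratified_mean(RESPONSES, SHARES)
print(unweighted, round(weighted, 2))
```

In this invented sample the unweighted mean overstates the view of the over-represented group, and the weighted mean moves back to the midpoint between the two groups.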

Unwarranted response variations can also be triggered by country differences in contextual pressures, such as on what is socially desirable and what is not (Kreuter, Presser, & Tourangeau, 2008). The same question or measure may even hold different meanings in different countries or cultures (Välimaa & Nokkala, 2014) or, more subtly, items translated into different languages may convey different meanings (Brady, 1985). An extensive literature has explored comparability of survey items in cross-cultural and cross-national survey research (Holland & Wainer, 1993; Peng, Peterson, & Shyi, 1991; Strauss, 1969), and several types of equivalence have been identified, which can be grouped into two fundamental domains (Johnson, 1998). Interpretive equivalence concerns similarities in how abstract or latent concepts are interpreted across cultures, including, for instance, conceptual equivalence, when the construct has similar meanings within the contexts compared (Okazaki & Sue, 1995), and functional equivalence, which exists to the extent that the concept serves similar functions within each society being investigated (Singh, 1995). Procedural equivalence instead regards the measures and procedures used to make comparisons, and emphasises the applicability of identical procedures, including among others stimulus equivalence (Anderson, 1967) and text equivalence (Alwin, Braun, Harkness, & Scott, 1994).

Several techniques are also available for evaluating the comparability of survey measures across nations or cultures (Braun & Johnson, 2010; Johnson, 1998). Equivalence across contexts can be pursued, for instance, by improving response anchors, deleting the more problematic answers, correcting for respondents’ differential interpretations (King, Murray, Salomon, & Tandon, 2004), and developing several indicators and retaining those that are correlated across countries, as such correlation signals that they relate to the same underlying concept (Przeworski & Teune, 1966).

For example, surveys exploring questionable research practices can solely inquire about perceptions, for instance: ‘Do you think that there is a problem of questionable research practices in your university?’ However, respondents in different countries may hold different ideas of what a questionable research practice is, so conceptual equivalence should be ascertained in advance, for example through a vignette design. At the same time, procedural equivalence may be at stake because responses to such a question can be affected by contextual factors, such as how much time the mass media in the country dedicate to scandals in science, which might be decoupled from the ‘true’ level of questionable research practices. Hence, researchers can opt for operationalisations that are less vulnerable to cultural and contextual biases, such as questions about objective facts, like: ‘Do you know of any colleague ever fabricating data?’,⁸ although this measure may also have limitations in terms of procedural equivalence, because the proportion of questionable practices in research consisting of ‘fabricating data’ may vary across countries.⁹

In short, operationalisation via survey items presents trade-offs in terms of comparability, which should be carefully assessed.

4 PROMISING APPROACHES TO COMPARATIVE ANALYSIS

This section discusses the value of configurational and multilevel research approaches in addressing some of the challenges discussed so far, namely in setting an appropriate institutional perimeter, taking account of the complex social structure of higher education systems and identifying causal relationships, which is often a prime goal of comparative research (Allardt, 1990; Ragin, 2014; Reale, 2014; Verba, 1967).10

Configurational research aims to identify groups of organisations that resemble each other along important dimensions, through statistical techniques such as cluster analysis. This approach assumes that organisations can be better understood by identifying groups of organisations with distinct, internally consistent traits, rather than by seeking to uncover causal relationships that hold true for all organisations (Ketchen, Thomas, & Snow, 1993; Short, Payne, & Ketchen, 2008).

The configurational approach is valuable for defining meaningful institutional perimeters, i.e., which HEIs are comparable across different systems and should therefore be considered in the analysis. In fact, country variation in HEIs’ organisational taxonomies may hinder functional equivalence, and hide differences or similarities that threaten the validity of the comparison: HEIs with similar traits and functions may be named differently (such as Fachhochschulen in Germany and Hogescholen in the Netherlands), or HEIs with substantial differences may have the same official category (the label ‘university’ can be used by ‘world-class’ research-intensive institutions as well as by regional, teaching-oriented ones). Thus, researchers should take advantage of configurational approaches to identify in the first place which HEIs belong to the same population and can be rightfully compared.
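As an illustration of how such a configurational step might precede the comparison, the sketch below groups HEIs by their traits rather than by their official labels. It uses a bare-bones k-means routine, which is only one of many clustering techniques and is not necessarily the one used in the studies cited. The two traits (research intensity and share of doctoral students), the institutions, the function name `kmeans` and all values are invented for illustration.

```python
def kmeans(points, k, iterations=20):
    """Very small k-means: returns a cluster label for each point.
    Starts from the first k points (a naive, deterministic choice)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

# Invented HEIs: (name, official label, research intensity, share of PhD students)
heis = [
    ("U1", "university", 0.90, 0.80),
    ("U2", "university", 0.20, 0.10),
    ("F1", "Fachhochschule", 0.15, 0.05),
    ("H1", "Hogeschool", 0.10, 0.10),
    ("U3", "university", 0.85, 0.90),
]
labels = kmeans([(r, d) for _, _, r, d in heis], k=2)
for (name, official, _, _), lab in zip(heis, labels):
    print(name, official, "-> cluster", lab)
```

In this toy data, the teaching-oriented ‘university’ U2 falls in the same cluster as the Fachhochschule and the Hogeschool, which is the kind of pattern that an official taxonomy would hide.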

The configurational approach can also be valuable to identify causal relationships between constructs, particularly when the relationship between an independent and a dependent variable is not the same for the whole sample—for instance, when the relationship is positive for a subsample of cases and negative for another one. Hence, once internally coherent groups are identified, the researcher can explore what organisational traits and contextual conditions relate to specific configurations. The configurational approach can also be used in combination with the method of difference (Goedegebuure & van Vught, 1996; Mill, 1967) to explore causal relationships. Namely, by identifying cases that are classified in different groups and display similar traits and environmental conditions except one, this one trait or condition can be regarded as the cause for the different classification. For instance, in Seeber et al.’s (2018) study, Spanish and Italian HEIs display very similar contextual conditions and traits, namely they are all universities of unitary systems, with a Napoleonic administrative tradition, endowed with similar resources and for which tuition fees represent between 10 per cent and 20 per cent of the total revenues. Yet Spanish HEIs are much more often entrepreneurial in internationalisation than Italian HEIs. A major difference between the two systems is their language, which could explain the difference. In fact, around 500 million people speak Spanish outside Spain, whereas far fewer Italian (native) speakers live outside Italy. Hence, Spanish HEIs have a much greater potential to attract students from abroad than Italian HEIs by developing entrepreneurial IA (Seeber et al., 2018).
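The point about relationships that differ in sign across subsamples can be made concrete with a small numerical sketch. The data below are invented, and the group labels `A` and `B` stand for two hypothetical configurations. The code compares the correlation computed on the pooled sample with the correlations computed within each configuration.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def correlation_by_group(cases):
    """Compute the x-y correlation separately for each configuration.
    `cases` is a list of (configuration, x, y) tuples."""
    groups = {}
    for g, x, y in cases:
        xs, ys = groups.setdefault(g, ([], []))
        xs.append(x)
        ys.append(y)
    return {g: pearson(xs, ys) for g, (xs, ys) in groups.items()}

# Invented cases: the relationship is positive in A and negative in B.
cases = [("A", 1, 1), ("A", 2, 2), ("A", 3, 3), ("A", 4, 4),
         ("B", 1, 4), ("B", 2, 3), ("B", 3, 2), ("B", 4, 1)]

pooled = pearson([x for _, x, _ in cases], [y for _, _, y in cases])
by_group = correlation_by_group(cases)
print("pooled:", pooled)
print("by configuration:", by_group)
```

In this deliberately extreme example, the pooled sample suggests no relationship at all, whereas each configuration shows a perfect one; an analysis that ignores the configurations would miss both.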

A second important analytical approach is represented by multilevel models, which are particularly valuable to represent and address the complexity of higher education settings, where individuals and organisations are embedded in multiple social environments at the same time (see Figure 1). Multilevel regression models take into account that observations (like individuals or HEIs) are not independent of each other, as they share the same environment and affect each other (Snijders & Bosker, 2012). Multilevel models split the variance of the dependent variable (such as individual performance) between levels (like systems, HEIs, disciplines), hence diagnosing the extent to which a phenomenon is affected by factors at each level (Jones & Subramanian, 2012; Snijders & Bosker, 2012). For example, the more universities in the same country behave similarly to each other and differently from universities in other countries, the larger the share of variance at the country level will be, and the more likely it is to be statistically significant, thus signalling that the causes of the behaviour should be sought at the country level. Conversely, if less than 5 per cent of the variance is accounted for by between-country variation, it can be inferred that system policies and country traits have a minor impact. For instance, by adopting a multilevel approach to explore HEIs’ rationales to internationalise, Seeber et al. (2016) found that country-level factors matter only for a few rationales and systems, and that HEIs’ internationalisation rationales are also affected by the extent to which they are embedded in the global competitive arena.
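The logic of splitting variance between levels can be sketched in a few lines. The code below is not a multilevel regression of the kind described by Snijders and Bosker (2012); it swaps in the simpler one-way ANOVA estimator of the intraclass correlation, and assumes a balanced design with the same number of HEIs in each country. The function name `between_country_share` and all values are invented for illustration.

```python
def between_country_share(groups):
    """Estimate the share of total variance lying between countries
    (the intraclass correlation) with the one-way ANOVA estimator.
    `groups` maps a country to the values observed for its HEIs.
    Assumes at least two countries and the same number (>= 2) of HEIs
    per country."""
    sizes = {len(values) for values in groups.values()}
    if len(sizes) != 1:
        raise ValueError("this sketch assumes a balanced design")
    n = sizes.pop()          # HEIs per country
    k = len(groups)          # number of countries
    grand_mean = sum(sum(v) for v in groups.values()) / (n * k)
    means = {c: sum(v) / n for c, v in groups.items()}
    ms_between = n * sum((m - grand_mean) ** 2 for m in means.values()) / (k - 1)
    ms_within = sum((x - means[c]) ** 2
                    for c, v in groups.items() for x in v) / (k * (n - 1))
    # Negative estimates are truncated at zero, as is conventional.
    var_between = max((ms_between - ms_within) / n, 0.0)
    total = var_between + ms_within
    return var_between / total if total > 0 else 0.0

# Invented scores: HEIs resemble each other within countries X, Y and Z.
strong = {"X": [10, 11, 12], "Y": [20, 21, 22], "Z": [30, 31, 32]}
# Invented scores: countries are indistinguishable from one another.
none = {"X": [1, 2, 3], "Y": [1, 2, 3], "Z": [1, 2, 3]}

print(round(between_country_share(strong), 2))
print(round(between_country_share(none), 2))
```

In the first invented data set almost all of the variance lies between countries, which would point towards country-level explanations; in the second the between-country share is zero, so explanations would have to be sought at the level of the HEIs. A full multilevel model would additionally allow unbalanced data, more than two levels and explanatory variables at each level.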

Multilevel models are also valuable to overcome some notable challenges of comparative studies in detecting causal relationships. When comparisons occur between macro-level constructs, the fact that macro-level social units (higher education systems) are often limited in number may lead to what is known as the ‘many variables, small N’ problem, namely that too many explanatory variables may exist for relatively few cases (Lijphart, 1971). The increased availability of data at the meso and micro levels, combined with multilevel models, can often overcome this obstacle, because the number of observations for each country becomes much larger and it is possible to control for alternative explanatory factors at the meso and micro levels. For example, the number of universities in a country listed in university rankings may be affected not only by the system’s governance, funding structure, language, size and investment in research (Li, Shankar, & Tang, 2011), but also by its HEIs’ characteristics, like their internal governance or their size.

5 CONCLUSIONS

This article discusses some key challenges that currently characterise quantitative comparative research in higher education as well as possible solutions. Some choices and limitations that underpin this work should be considered. First, the article focuses on challenges that are relevant for a vast array of comparative studies (like the definition of the objects of comparison) and that most frequently undermine the validity of quantitative comparative studies in higher education. The list of challenges examined is far from exhaustive, and future studies should explore other important methodological aspects. Second, the article focuses on methodological challenges that are relevant for the kind of data and analytical tools that are currently available to most higher education researchers, such as surveys, institutional research and databases developed by large organisations. These are data that are (a) structured and (b) of a size that is manageable by common hardware and software tools. The article does not discuss challenges related to the rise of so-called ‘big data’, which are typically (a) unstructured and (b) of a size beyond the processing capability of common hardware and software tools. These kinds of data require distinct analytical approaches, such as machine learning aimed at pattern recognition, and imply a new array of methodological challenges for comparative higher education scholars.

Given today’s rapid pace of social, political and technological transformation, some reflections are warranted upon the future opportunities and challenges of (quantitative) comparative higher education research. On the one hand, despite a long-standing policy pressure aimed at blending the peculiar features of the higher education sector, by transforming universities from loosely coupled to complete organisations (Brunsson & Sahlin-Andersson, 2000) and increasing external guidance and standardisation of research and education activities (Hood, James, Peters, & Scott, 2004; Musselin, 2007; Paradeise & Thoenig, 2013), academic tasks, structures and identities have often proven resilient towards attempts at steering and homogenisation (Musselin, 2007; Seeber et al., 2015; Thoenig & Paradeise, 2014). Thus, future research needs to consider the lasting complexity of the higher education context. On the other hand, more recent political and technological processes may have durable effects on the opportunities and modes of quantitative comparative higher education research. First, the scientific, economic and political hegemony of Western liberal democracies, which eased the spread of institutions and models of Western systems and organisations (Mounk & Foa, 2018), is increasingly contested. For example, the number of scientific articles from China as a proportion of those from the US grew from 9 per cent to 88 per cent between 1996 and 2018 (source: Scimago). In 2003 no Chinese university could be found among the top 200 positions of the Academic Ranking of World Universities (ARWU, the Shanghai ranking), whereas 19 were found in 2019, second only to US universities. This process weakens the isomorphic pressure towards a single template, as other systems can claim to be role models or assert their distinctiveness (Marginson, 2017). This shift can possibly spur diversity of practices and governance arrangements in higher education systems and institutions around the globe, or even reverse the direction of isomorphic forces, creating opportunities and a need for broader international comparisons. At the same time, broader comparative studies heighten methodological challenges, because the more the compared systems differ in terms of their cultural and political background, the more likely they are to differ in terms of understandings and assumptions about key constructs. Moreover, shifts in global economic and political influence are also resulting in geopolitical frictions, which may threaten the scientific collaboration needed for large-scale comparative studies.

A second major process looming over the quantitative social sciences is the rise of ‘big data’ and the explosion in computing power. It is estimated, for example, that 90 per cent of all digital data in the world was created in the last two years (McAfee & Brynjolfsson, 2017). Access to a much larger and deeper set of information on systems, organisations and individuals creates huge opportunities for research, but also new methodological and ethical challenges for comparative higher education studies (Foster, Ghani, Jarmin, Kreuter, & Lane, 2016). Future research should address both foreseeable challenges, such as operationalisation under differing privacy regulations and ethical standards, and challenges that will emerge with the diffusion of new research approaches.

ACKNOWLEDGEMENTS

I am grateful to the reviewers for their insightful comments, to Anna Kosmützky, Tehri Nokkala and my former colleagues Jef Vlegels, Stijn Daenekindt, Jeroen Huisman and Thomas Koch for their very useful and constructive feedback, and to Kari Nordstoga Hanssen for her kind support in translating the abstract into Norwegian.

ENDNOTES

- 1 The article focuses on the kinds of data currently available to most higher education (HE) researchers. So-called ‘big data’ are not addressed here but are discussed in the conclusion.

- 2 Regarding the challenges of selecting units for comparison in quantitative studies, see also Ebbinghaus (2005).

- 3 ETER is a European Commission initiative consisting of a database that collects information on the basic characteristics, geographical position, educational activities, staff, finances and research activities of HEIs in Europe. ETER provides data at the level of individual institutions, which complement the educational statistics at the country and regional level provided by EUROSTAT (the EU’s statistical office); see also: www.eter-project.com.

- 4 ETER defines as academic staff: (a) staff whose primary assignment is instruction, research or public service, (b) staff who hold an academic rank, like professor, assistant professor, lecturer or an equivalent title, (c) staff with other titles (like dean, head of department, etc.) if their principal activity is instruction or research, and (d) PhD students employed for teaching assistance or research.

- 5 The development of the Changing Academic Profession survey implied a similar challenge, and academic ranks were grouped into junior and senior positions (Rostan et al., 2014).

- 6 I am grateful for this metaphor to Daniele Archibugi, who used it during a project meeting.

- 7 A country’s number of HEIs in the ranking is also affected by factors unrelated to the quality of its governance and funding structure, such as whether it is an English-speaking country (Li et al., 2011). Most popular rankings also consider indicators related to past scientific performance (such as the number of Nobel prize winners from the university; ARWU, 2018), which implicitly favours systems with an established scientific tradition.

- 8 For a meta-analysis on research malpractices see, e.g., Fanelli (2009).

- 9 A similar issue is illustrated by surveys exploring countries’ level of corruption, which employ both questions based on perceptions of corruption, such as ‘Do you think that corruption is a problem in this country?’, and questions about objective facts, such as ‘Have you paid a bribe in the past 12 months?’ Countries may rank very differently according to the two indicators. For instance, Italy ranks among the fairly corrupt countries according to the first question (75th position), and among the least corrupt according to a fact-related question (15th position) (Transparency International, 2013).

- 10 By comparing different systems, a researcher can in fact deduce which policies, for instance, are more effective. Increased capability to retrieve data at the organisational and individual levels opens up the possibility for comparative studies to explore not only relationships between macro-level constructs (e.g., system policy → system efficiency), but also relationships between macro- and micro-/meso-level constructs (e.g., system policy → individual/organisational behaviour) and between micro-/meso- and macro-level constructs (e.g., individual/organisational behaviour → system efficiency).