FLAMES—Federated Learning for Advanced MEdical Segmentation

Funding: This work has been also supported by PNRR project FAIR - Future AI Research (PE00000013), Spoke 3, under the NRRP MUR program funded by the NextGenerationEU, and G.A.N.D.A.L.F. - Gan Approaches for Non-iiD Aiding Learning in Federations, CUP: E53D23008290006, PNRR - Missione 4 “Istruzione e Ricerca” - Componente C2 Investimento 1.1 “Fondo per il Programma Nazionale di Ricerca e Progetti di Rilevante Interesse Nazionale (PRIN).”

ABSTRACT

Federated learning (FL) is gaining traction across numerous fields for its ability to foster collaboration among multiple participants while preserving data privacy. In the medical domain, FL enables institutions to share knowledge while maintaining control over their data, which often vary in modality, source, and quantity. Institutions are often specialised in treating one or a few types of tumours, typically focusing on a specific organ. Hence, different institutions may contribute with distinct types of medical imaging data of various organs, originating from diverse machines. Collaboration among these institutions enhances performance on shared tasks across different areas of the body. The framework employs modality-specific models hosted on the server, each designed for a particular imaging modality and designed to predict the presence of tumours in scans from its respective modality, regardless of the organ being imaged. Clients focus on their specific imaging modality, utilising knowledge derived from images contributed by institutions employing the same modality. This approach facilitates broader collaboration, extending beyond institutions specialising in the same organ to include those working within the same imaging modality. This approach also helps avoid the introduction of potential noise from clients with images of different modalities, which might hinder the model's ability to effectively specialise and adapt to the data specific to each institution. Experiments showed that FLAMES achieves strong performance on server data, even when tested across different organs, demonstrating its ability to generalise effectively across diverse medical imaging datasets. Our code is available at https://github.com/MODAL-UNINA/FLAMES.

Abbreviations

- FL: federated learning

1 Introduction

In the healthcare domain, multimodal data refers to information collected from different sources and modalities, such as Electronic Health Records, medical imaging, genomic data, environmental data, behavioural data, sensor data, or data from wearable devices (Di Cola et al. 2023; Shaik et al. 2024). For example, Qi et al. (2023) run fall detection experiments on the UP Fall dataset, which records the same activities with cameras, wearable sensors, and infrared sensors. Hospitals and specialised centres often generate large amounts of valuable data, which are critical for building accurate and robust Machine Learning (ML) models. By integrating data from different modalities, healthcare professionals can achieve a comprehensive understanding of a patient's health, leading to personalised care and informed decision making (Acosta et al. 2022). However, sharing sensitive medical data across institutions can be restricted by privacy regulations. Federated Learning (FL) offers a solution by allowing multiple institutions to collaboratively train models without sharing sensitive data. This decentralised approach can even be implemented on tiny devices (Qi, Chiaro, and Piccialli 2024), and is particularly advantageous in the healthcare domain, where data privacy and security are paramount. When managing multiple clients, carefully selecting which ones participate in the aggregation process can help reduce energy consumption while maintaining good performance (Savoia et al. 2024). Using FL, institutions can contribute to the development of global models while ensuring that patient data remain localised. When dealing with multimodal data, such as different imaging techniques from various sources or machines, specific adaptations for each modality may be necessary to ensure effective model training; that is, personalised models can be built by modifying the FL model aggregation process (Tan et al. 2022).

In this work, we introduce the FL framework FLAMES (Federated Learning for Advanced MEdical Segmentation), designed to handle multimodal medical data efficiently. Each modality present in the FL system is assigned a dedicated model, managed by the server, which aggregates, for a given model, the parameters received from the clients operating with the same modality and sends the updated weights back to those clients. Hence, clients collaborate only with others handling the same data modality, maximising collaboration efficiency among institutions working with the same modality. In this way, each model on the server specialises in one modality, by customising the client training phase. Thus, clients are assisted in specialising in the modality they require, preventing potential noise from clients with different data types. Meanwhile, the server maintains the ability to generalise across images of various organs, relying solely on the specific imaging modality.

The main contributions of this work are as follows:

- A novel FL framework, FLAMES, tailored for multimodal medical data, ensuring both modality-specific specialisation and cross-organ generalisation.

- An adaptive model aggregation strategy that assigns a higher weight to the local model while retaining information from other clients.

- Generalised models, enhancing the framework's ability to handle diverse data modalities, independently of the organ type.

The remainder of this paper is organised as follows. Section 2 reviews related work, and Section 3 introduces concepts relevant to our work. Section 4 presents the FLAMES framework, and Section 5 describes the employed datasets and their pre-processing, the baseline method, the initialization of hyperparameters, and the environment settings. Section 6 reports the FLAMES results and a comparison with the baseline method. Lastly, in Section 7 we summarise the contributions of our study and outline potential directions to extend our research.

2 Related Work

2.1 Data Fusion and Medical Imaging Modalities

In areas such as medical analysis, data can be of different modalities (Di Cola et al. 2023; Shaik et al. 2024), can come from multiple devices and institutions and may include missing or incomplete information. Integrating these diverse sources efficiently, while maintaining data security and privacy, presents significant challenges.

Medical images can be of different types and each medical imaging modality has distinct characteristics and properties, making them suitable for examining specific organs, diagnosing diseases, and monitoring therapeutic results (Saleh et al. 2022). For instance, Magnetic Resonance Imaging (MRI) utilises magnetic fields to generate detailed cross-sectional images, especially useful for visualising soft tissues and detecting abnormalities. X-rays are commonly employed to identify bone fractures and structural anomalies. Computed Tomography (CT) provides high-resolution images of dense structures such as bones, with the ability to detect subtle differences in tissue composition. Ultrasound (US) relies on low-frequency sound waves to produce real-time images, often used to examine soft tissues and monitor fetal development. Positron Emission Tomography (PET) is widely utilised in clinical settings to assess metabolic processes by detecting gamma rays emitted by a radioactive tracer. Advanced imaging modalities such as PET-CT, MRI-PET, MRI-CT-PET, and MRI-SPECT-PET leverage image fusion techniques to integrate anatomical and functional data, enhancing diagnostic accuracy. Finally, Single Photon Emission Computed Tomography (SPECT) is used to evaluate organ functionality by detecting gamma radiation.

As data can be of various types, multimodal data fusion has become crucial for ML applications, and numerous techniques have been developed for managing data fusion. Two fusion approaches that can be utilised to combine the results from several modalities are early fusion and late fusion (Zhang, Sidibé, et al. 2021).

In early fusion, data from multiple modalities are combined at an initial stage, typically before any decision-making occurs. The raw features extracted from each modality are integrated into a unified representation, allowing the model to capture correlations between modalities at a low level. This approach can lead to a more comprehensive and informative representation of the input data.

Late fusion involves processing data from each modality independently, with fusion occurring at a later stage, often after each modality has been analysed. This approach allows each modality to contribute to the final decision based on its individual analysis. In late fusion, the multimodal data are processed separately through distinct branches, with the outputs subsequently merged into a common feature space via fusion operations during the decoding stage. This method is particularly useful for cases where the modalities have significantly different characteristics or representations.
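The difference between the two strategies can be illustrated with a minimal NumPy sketch. The toy feature vectors, the linear scorers, and the averaging rule used for late fusion are hypothetical placeholders chosen for illustration; they are not components of any of the cited methods, and real late-fusion models may merge branch outputs in a learned feature space rather than by averaging scores.

```python
import numpy as np

def early_fusion(features_a, features_b, weights):
    """Concatenate per-modality features, then apply one joint linear scorer."""
    fused = np.concatenate([features_a, features_b])
    return float(fused @ weights)

def late_fusion(features_a, features_b, weights_a, weights_b):
    """Score each modality with its own linear scorer, then average the scores."""
    score_a = float(features_a @ weights_a)
    score_b = float(features_b @ weights_b)
    return 0.5 * (score_a + score_b)

# Toy descriptors standing in for two modalities (hypothetical values).
ct = np.array([1.0, 2.0])
mri = np.array([3.0, 4.0, 5.0])

early_score = early_fusion(ct, mri, weights=np.ones(5))
late_score = late_fusion(ct, mri, weights_a=np.ones(2), weights_b=np.ones(3))
```

In the early-fusion branch a single scorer sees all features at once, so it can model cross-modality interactions; in the late-fusion branch each modality is analysed on its own and only the outputs are combined.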

Zhai et al. (2023) propose a dual-branch generative adversarial network (DBGAN) to fuse CT and MRI images while retaining the salient features and complementary information of the source images. Chen et al. (2024) present the Deep Spatial Prior Interaction (DSPI) framework, which fuses visual, textual, and spatial information about the objects in each image. DSPI utilises Grounding DINO to extract spatial priors, providing a precise object location based on textual prompts. A meta adapter transforms textual inputs into structured object queries, aligning them with visual features. Lastly, the model employs attention mechanisms to fuse features of different modalities, ensuring that the combined representation captures both semantic meaning and spatial context. The RAQNet model (Zhai et al. 2024) improves crowd counting by fusing local and global features through a structured network composed of a feature extractor, object region awareness (ORA) modules, quantum-driven calibration (QDC) modules, and a decoder. Local features are captured using ORA modules with regional attention, which focus on crowd areas and suppress background noise. Global features are extracted using QDC modules that apply quantum attention mechanisms. These modules are arranged to mutually enhance each other, allowing the model to integrate both local and global contexts effectively. Finally, the decoder generates a density map from the fused features using transposed convolutions.

2.2 Medical Segmentation

One of the most common challenges in dealing with medical images is the identification and delineation of regions of interest such as organs, tissues, and tumours. In this direction, many works explore methodologies that perform well in these tasks. DoDNet, introduced in Zhang, Xie, et al. (2021), uses an encoder, a task encoding module, a dynamic filter generation module, and a dynamic segmentation head conditioned on the input image and assigned task, to segment multiple organs and tumours. Authors in Ma et al. (2024) present MedSAM, a model designed to enable universal medical image segmentation using bounding boxes, covering 10 imaging modalities and more than 30 cancer types.

In He et al. (2023), the authors treat tumour segmentation from whole-body PET/CT images as a cascade of object detection and segmentation problems. They build a two-stage architecture that separates the complex task of whole-body tumour segmentation into two simpler tasks: tumour detection, and tumour segmentation restricted to the slices that actually contain the tumour. Cinar et al. (2022) propose a hybrid DenseNet121-UNet model with MRI pre-processing and post-processing on the BraTS2019 dataset (Menze et al. 2014). To overcome data imbalance and increase the model's accuracy, they divide the image into 1, 2, or 4 pieces of size 64 × 64 according to the tumour size, based on the tumour centre coordinates. In Chen et al. (2023), a ResU-Net network is developed for brain tumour segmentation in MRI; it employs residual and neural units in conjunction with the U-Net framework. nnU-Net (Isensee et al. 2021) is a deep learning-based segmentation method that automatically configures itself, including pre-processing, network architecture, training, and post-processing, for organ and tumour segmentation in the biomedical domain. This network is used in various segmentation studies, such as Nishio et al. (2021) for lung tumour segmentation using different datasets. Andrearczyk et al. (2020) propose an automatic segmentation of head and neck tumours and nodal metastases from FDG-PET and CT images using 3D and 2D V-Net models.

2.3 Medical Segmentation in FL

FL (Konečný et al. 2015) is a decentralised approach in which models are trained directly on local devices, reducing the need for centralised data storage and helping to preserve data privacy. FL in medicine is widely applied to various tasks, including medical image segmentation. To perform organ segmentation in FL using CT images from diverse sources, Kanhere et al. (2024) use a 3D UNet architecture; their SegViz framework can aggregate knowledge from heterogeneous medical imaging datasets into a single multi-organ segmentation model. In Borazjani et al. (2024), clients can have multimodal and non-IID data, and the model eliminates the need for clients to possess identical sets of data modalities by employing a versatile distributed encoder-decoder architecture. It separately aggregates each modality's encoder and the classifiers of the different modality combinations that exist across the institutions.

Attention-based transformers and FL algorithms are integrated into Shiri et al. (2023) for PET/CT image segmentation in patients with head and neck cancers.

The authors in Dai et al. (2024) propose an FL framework with federated modality-specific encoders and multimodal anchors (FedMEMA) to simultaneously address two problems: some FL participants only possess a subset of the complete imaging modalities and each participant would expect to obtain a personalised model tailored for its local data characteristics. FedMEMA is validated on the BraTS2020 (Menze et al. 2014) benchmark for multimodal brain tumour segmentation. It employs an encoder for each modality to allow a great extent of parameter specialisation. While the encoders are shared between the server and the clients, the decoders are personalised to cater to individual participants.

Although existing works have made significant progress in applying FL to medical image segmentation (Table 1), particularly in handling multimodal data, to the best of our knowledge, FLAMES is the first framework to address both multi-organ and multi-modality segmentation in an FL setting. Most prior approaches either focus on a single organ across multiple modalities or on multiple organs within a single modality, and often do not even adopt an FL setting. Such designs break down when client data differ significantly in both anatomy and modality. In FLAMES, clients are supported in tailoring their models to the specific imaging modality they need, avoiding interference from clients with differing data types. At the same time, the server is able to generalise across images of various organs, relying solely on the imaging modality information. A significant advancement of FLAMES is its capacity to concurrently manage several models on the server side, a functionality achieved by altering the foundational FL framework, Flower (Beutel et al. 2022), in a manner not previously investigated in the existing literature. This design allows for more flexible and efficient training, as the server can orchestrate heterogeneous learning tasks in parallel.

| Paper | FL | Multimodal imaging data | Multi-organ tumour segmentation |
|---|---|---|---|
| Zhang, Xie, et al. (2021) | ✘ | ✓ | ✓ |
| Ma et al. (2024) | ✘ | ✓ | ✓ |
| He et al. (2023) | ✘ | ✓ | ✓ |
| Cinar et al. (2022) | ✘ | ✓ | ✘ |
| Chen et al. (2023) | ✘ | ✘ | ✘ |
| Nishio et al. (2021) | ✘ | ✓ | ✘ |
| Andrearczyk et al. (2020) | ✘ | ✓ | ✓ |
| Kanhere et al. (2024) | ✓ | ✘ | ✓ |
| Borazjani et al. (2024) | ✓ | ✘ | ✓ |
| Shiri et al. (2023) | ✓ | ✓ | ✘ |
| Dai et al. (2024) | ✓ | ✓ | ✘ |
| FLAMES | ✓ | ✓ | ✓ |

3 Background

3.1 Federated Learning

When a federated system contains at least two different data types (i.e., modalities) among all local datasets, it can be defined as a multimodal FL system (Pan et al. 2024).

4 Methodology

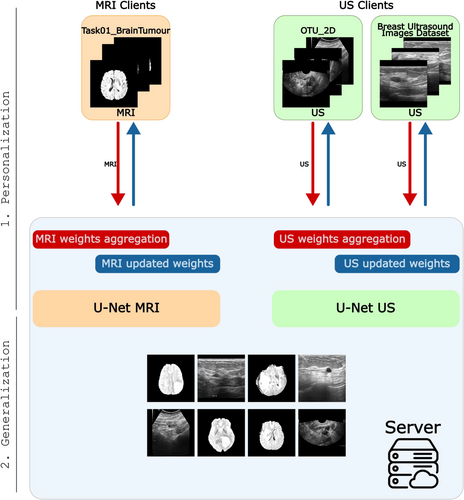

Although existing works have made significant strides in medical segmentation, to the best of our knowledge, no existing FL framework independently handles different data modalities from various organs while enabling predictions on images without dependence on the organ type. FLAMES enables clients to specialise in their respective data modalities while facilitating the creation of generalised models on the server that can process input images without requiring prior knowledge of the specific organ (Figure 1).

Let $\mathcal{M}$ be a set of medical image modalities. Without loss of generality, each dataset corresponding to a specific organ and modality can be considered as a single client in this framework. Therefore, each client holds images of a unique combination of organ and modality within $\mathcal{M}$. The central server can access different sets of data $\{D_m\}_{m \in \mathcal{M}}$, each corresponding to a modality in $\mathcal{M}$ irrespective of the organ type.

FLAMES operates in two phases:

- Client personalisation: In this phase, the server maintains separate models, each dedicated to a specific data modality. FL is performed independently for each modality, ensuring that the global model for each data type is updated using only the corresponding clients. A novel weight aggregation strategy has been developed to address the multimodal nature of the datasets and the inherent class imbalance. The focus of this phase is the personalisation of the clients.

- Generalisation: The server can detect tumours in medical images, using the corresponding modality-specific model.

In detail, the FL process starts with a global model initialized on the central server. This model is distributed to all participating clients and serves as the starting point for local training. Each client loads its local dataset and applies data augmentation during each local epoch to enhance the robustness of the training process. Clients train the global model locally for a fixed number of epochs. After completion of local training, each client sends only the updated model weights back to the server. The server collects model updates from all clients and aggregates the model updates separately for each data modality.

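The aggregation strategy assigns a higher weight to each client's local model while retaining information from the other clients of the same modality. This ensures that each client retains more influence from its own data, which could be particularly beneficial in scenarios involving numerous clients with heterogeneous data, where it prevents the dilution of the local model's learning.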
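The idea of favouring the local model while still retaining information from other clients can be sketched as follows. This is only an illustration under stated assumptions: the mixing coefficient `local_weight`, its value of 0.7, the equal sharing of the remaining mass among the other clients, and the function name `personalised_aggregate` are all hypothetical and are not taken from the FLAMES implementation.

```python
import numpy as np

def personalised_aggregate(client_weights, local_weight=0.7):
    """Return one mixed parameter vector per client of a single modality.

    client_weights: dict mapping client id -> 1-D parameter array.
    local_weight:   hypothetical mixing coefficient; the remaining mass
                    (1 - local_weight) goes to the mean of the other clients.
    """
    if len(client_weights) == 1:
        # A lone client has nobody to mix with and keeps its own parameters.
        return {cid: w.copy() for cid, w in client_weights.items()}
    mixed = {}
    for cid, own in client_weights.items():
        others = [w for other_id, w in client_weights.items() if other_id != cid]
        mixed[cid] = local_weight * own + (1.0 - local_weight) * np.mean(others, axis=0)
    return mixed

# Two toy ultrasound clients with two parameters each (hypothetical values).
us_updates = {"client_4": np.array([1.0, 1.0]), "client_5": np.array([3.0, 5.0])}
mixed = personalised_aggregate(us_updates, local_weight=0.7)
```

With `local_weight` above 0.5, each client's result stays closer to its own parameters than to those of its peers, which is the dilution-avoiding behaviour described above.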

After aggregation, the server redistributes updated modality-specific models to clients corresponding to the same modality for subsequent rounds. Meanwhile, the server can predict across all modalities of various organs. Due to privacy constraints, models are tested on server-side datasets, each comprising images from a specific modality. This approach enables the server to generalise effectively across diverse data sources using modality-specific models.
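A minimal sketch of the server-side routing described in this section is given below: client updates are grouped by modality, and one model per modality is produced. The tuple layout of `updates` and the example-weighted mean used inside each group are assumptions made for illustration; the sketch does not use the Flower API and does not reproduce the FLAMES aggregation rule.

```python
from collections import defaultdict

import numpy as np

def aggregate_by_modality(updates):
    """Average client parameters separately for each modality.

    updates: list of (modality, num_examples, parameters) tuples.
    Returns a dict modality -> example-weighted mean of the parameters.
    """
    grouped = defaultdict(list)
    for modality, num_examples, params in updates:
        grouped[modality].append((num_examples, params))

    models = {}
    for modality, items in grouped.items():
        total = sum(n for n, _ in items)
        models[modality] = sum((n / total) * p for n, p in items)
    return models

# Toy round with two MRI clients and two US clients (hypothetical values).
updates = [
    ("MRI", 2, np.array([1.0, 1.0])),
    ("MRI", 2, np.array([3.0, 3.0])),
    ("US", 1, np.array([10.0, 0.0])),
    ("US", 3, np.array([2.0, 4.0])),
]
models = aggregate_by_modality(updates)
```

Because the groups never mix, an MRI update cannot influence the US model, and each resulting model is sent back only to the clients of its own modality.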

On the client side, FLAMES spares each client from participating in an FL system with many clients that, because they hold different data modalities, may not contribute effectively to its modality-specific personalisation. This reduces noise and improves overall learning efficiency. It also lowers the round overhead in synchronous FL compared to traditional FL systems, as fewer clients need to complete their local epochs before aggregation. On the server side, FLAMES enables the training of generalised models capable of performing segmentation tasks across various data types on various organs.

The proposed framework has been implemented and trained using the Flower framework (Beutel et al. 2022), which is detailed in Section 5.5.

5 Experimental Setting

5.1 Datasets and Preprocessing

- Task01_BrainTumour: This dataset contains 750 4D MRI volumes of brain tumours, a subset of the 2016 and 2017 Brain Tumour Image Segmentation (BraTS) challenges (Menze et al. 2014; Bakas et al. 2017) and part of the Medical Segmentation Decathlon (MSD) challenge (Antonelli et al. 2022). Data include multi-parametric MRI sequences: native T1, post-Gadolinium (T1-Gd), T2-weighted (T2), and T2 Fluid Attenuated Inversion Recovery (T2-FLAIR) scans, acquired using various protocols and scanners from 19 institutions. Brain tumour sub-regions are classified as edema, enhancing, and non-enhancing tumour (Simpson et al. 2019). For this study, only 484 training volumes (with segmentation masks) are used, excluding the 266 test images.

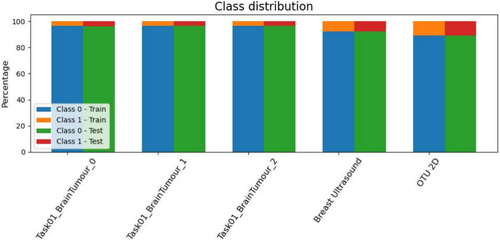

- Breast Ultrasound Images Dataset: Breast ultrasound images from 600 women aged 25–75 are included in this data collection. The dataset consists of 780 images, classified as normal, benign, or malignant (Al-Dhabyani et al. 2020). We select only benign and malignant cases. Each image has a binary mask that identifies the tumour.

- OTU 2D: OTU 2D and OTU CEUS are the two subsets that make up the MMOTU dataset (Li 2024). In this study only 2D ultrasound images from the OTU 2D subgroup are used. Each image has a binary mask that identifies the tumour.

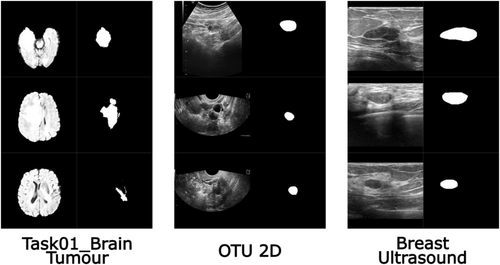

Non-square images were padded with black borders to create square dimensions. Images were resized to 240 × 240 pixels and scaled to [0, 1].

All images were in grayscale. Note that, due to the large amount of data, Task01_BrainTumour was split into 3 parts, according to patient IDs, and assigned to different clients. Hence, each client works with a manageable data volume. The datasets reflect a range of imaging modalities, anatomical regions, and acquisition protocols, making them ideal for evaluating the performance of FLAMES across diverse data sources.
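The pre-processing steps above (black padding to a square, resizing to 240 × 240, scaling to [0, 1]) can be sketched in NumPy. The centred placement of the image inside the padding, the nearest-neighbour resampling, and the 8-bit input range are assumptions made for this sketch; the original pipeline may use a different interpolation or intensity normalisation.

```python
import numpy as np

def pad_to_square(image):
    """Pad a 2-D grayscale image with zeros (black) so height equals width."""
    h, w = image.shape
    side = max(h, w)
    top = (side - h) // 2
    left = (side - w) // 2
    padded = np.zeros((side, side), dtype=image.dtype)
    padded[top:top + h, left:left + w] = image
    return padded

def resize_nearest(image, size=240):
    """Resize a square image to size x size with nearest-neighbour sampling."""
    side = image.shape[0]
    idx = np.arange(size) * side // size
    return image[np.ix_(idx, idx)]

def preprocess(image, size=240):
    """Pad, resize, and scale 8-bit intensities to [0, 1]."""
    squared = pad_to_square(image)
    resized = resize_nearest(squared, size)
    return resized.astype(np.float32) / 255.0

# A white 100 x 300 test image: the padding adds black bands above and below.
example = np.full((100, 300), 255, dtype=np.uint8)
out = preprocess(example)
```

Padding before resizing preserves the aspect ratio of the anatomy, at the cost of some black border pixels that add to the background class.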

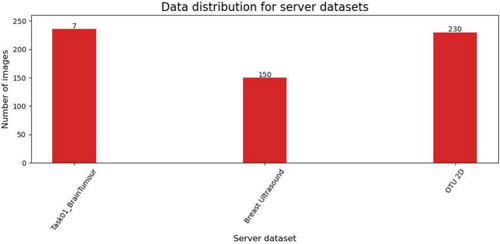

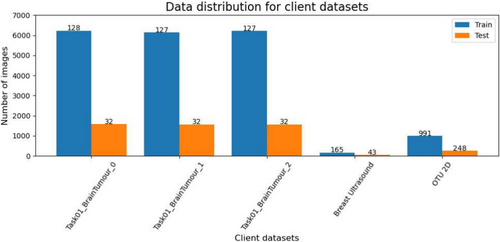

The pre-processing ensured uniformity and compatibility across all datasets for further analysis. Examples of client images after pre-processing are reported in Figure 2. Each dataset was divided into three distinct subsets: the client's training and test sets and the server's test set. There is no overlap between patients in these sets, as the data is partitioned based on patient IDs. The server dataset obtained, along with the number of patients in each subset is shown in Figure 3.
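A patient-level partition of this kind can be sketched as follows. The 70/20/10 percentages, the seed, and the function name `split_by_patient` are hypothetical; the text does not state the actual proportions. The essential point is that the split is made on patient IDs rather than on individual images, so no patient appears in more than one subset.

```python
import random

def split_by_patient(patient_ids, train_pct=70, test_pct=20, seed=0):
    """Partition unique patient IDs into client-train, client-test, server-test."""
    unique_ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(unique_ids)
    n_train = len(unique_ids) * train_pct // 100
    n_test = len(unique_ids) * test_pct // 100
    return {
        "client_train": set(unique_ids[:n_train]),
        "client_test": set(unique_ids[n_train:n_train + n_test]),
        "server_test": set(unique_ids[n_train + n_test:]),
    }

# Several slices can share one patient ID: here 20 patients with 3 slices each.
slice_patients = [f"patient_{i // 3:03d}" for i in range(60)]
splits = split_by_patient(slice_patients)
```

Each image is then assigned to the subset that contains its patient ID.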

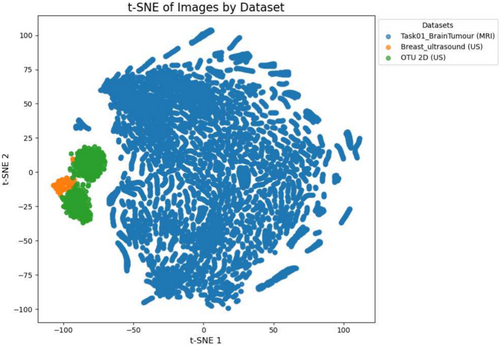

Figure 6 shows the result of applying the dimensionality reduction technique t-Distributed Stochastic Neighbour Embedding (t-SNE) (Van der Maaten and Hinton 2008) to the clients' training data. The embedding exhibits a clear separation between the MRI and US modalities. This distinction underscores the challenge of applying a single, generalised model across heterogeneous datasets. FLAMES addresses this by first training modality-specific models with the same architecture on separate clients' data, allowing each client to specialise in its respective modality.

5.2 Baseline

The baseline employed in this study is a traditional FL approach, in which a single global model is collaboratively trained among all clients. Each client contributes with its local dataset to the training process, and the server iteratively aggregates updates from these clients in a global model, using the FedAvg strategy. The datasets and augmented images used by both the clients and the server are identical to those in the FLAMES framework. Similarly to FLAMES, we fixed the number of rounds at 50. Both the model architecture and hyperparameters are consistent with those of the modality-specific models. With this approach, all clients share a unified model without specialisation or adaptation to specific modalities or data distributions.

5.3 Models and Hyperparameters

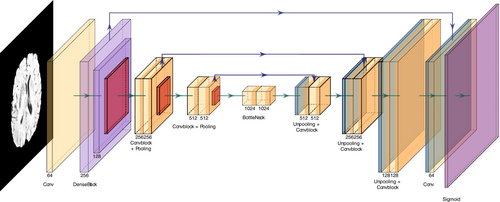

For each modality, we employed a UNet-based architecture (Ronneberger et al. 2015) suitable for segmentation tasks. Each modality uses the same model design, as shown in Figure 7.

The architecture is a customised UNet that integrates a pre-trained DenseNet121 as part of its encoder to leverage transfer learning. A 2D convolution layer with a single input channel is followed by the first dense block and transition layer from DenseNet121. Then, three convolutional blocks are employed, each comprising two convolutional layers followed by batch normalisation and ReLU activation. To reduce overfitting, a Dropout of 0.3 is applied after each convolutional block. The input image, of size $240 \times 240$ after pre-processing, is progressively downsampled through max-pooling layers applied before each convolutional block.

The decoder employs transpose convolution layers for upsampling, concatenating the corresponding encoder outputs to recover spatial details through skip connections. The final layer is a convolution, which maps the output to a single channel, representing the predicted segmentation mask. A sigmoid activation function is applied to produce probabilities for binary segmentation tasks.

In our experiments, we tuned the model hyperparameters to optimise performance. Training relies on two loss terms, the Dice loss and the Focal loss. The Dice loss is designed to maximise the overlap between the predicted segmentation and the ground truth, which makes it particularly effective for addressing class imbalance in segmentation tasks. The Focal loss is an extension of the Binary Cross-Entropy loss that addresses class imbalance by emphasising difficult-to-classify examples, enhancing the model's discriminative ability. Data in the client's training set were augmented through random flips, rotations, zooms, stretches, and shifts on original images. The augmented images varied between epochs and between different clients.
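For illustration, the two loss terms can be written in NumPy as below. This is a sketch under stated assumptions, not the training code: the soft (probability-based) Dice formulation, the focusing parameter `gamma=2.0`, the smoothing constant `eps`, and the equal weighting in `combined_loss` are placeholder choices, since the tuned values are not reproduced here.

```python
import numpy as np

def dice_loss(probs, target, eps=1e-6):
    """Soft Dice loss: one minus the Dice overlap computed on probabilities."""
    intersection = np.sum(probs * target)
    return 1.0 - (2.0 * intersection + eps) / (np.sum(probs) + np.sum(target) + eps)

def focal_loss(probs, target, gamma=2.0, eps=1e-6):
    """Binary focal loss: cross-entropy scaled by (1 - p_t) ** gamma."""
    probs = np.clip(probs, eps, 1.0 - eps)
    # p_t is the probability the model assigns to the correct class of each pixel.
    p_t = np.where(target == 1, probs, 1.0 - probs)
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))

def combined_loss(probs, target, dice_weight=0.5):
    """Convex combination of the two losses; the weight is a placeholder."""
    return dice_weight * dice_loss(probs, target) + (1.0 - dice_weight) * focal_loss(probs, target)

# Toy 2 x 2 mask: a perfect, an uninformative, and a fully wrong prediction.
target = np.array([[0.0, 1.0], [1.0, 0.0]])
loss_perfect = combined_loss(target.copy(), target)
loss_uniform = combined_loss(np.full((2, 2), 0.5), target)
loss_inverted = combined_loss(1.0 - target, target)
```

The factor `(1 - p_t) ** gamma` shrinks the contribution of pixels that are already classified confidently, so the abundant easy background pixels weigh less than the hard tumour pixels.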

5.4 Evaluation Metrics

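- Intersection over Union (IoU) (Jaccard 1901), also known as the Jaccard Index, measures the overlap between the predicted mask $P$ and the ground-truth mask $G$, defined as:

$$\mathrm{IoU}(P, G) = \frac{|P \cap G|}{|P \cup G| + \epsilon} \tag{3}$$

where $\epsilon$ is a small constant added to avoid division by zero.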

- Dice Similarity Coefficient (DSC) (Dice 1945) measures the similarity between two sets, here the predicted mask $P$ and the ground-truth mask $G$, and is particularly useful to handle class imbalances in segmentation tasks. It is defined as:

$$\mathrm{DSC}(P, G) = \frac{2\,|P \cap G|}{|P| + |G|} \tag{4}$$

where $|P|$ and $|G|$ are the numbers of pixels labelled as tumour in the predicted and ground truth masks, respectively.

- Pixel Accuracy measures the ratio of correctly predicted pixels to the total number of pixels in the ground truth mask. It provides a straightforward evaluation of the overall accuracy of the prediction. Pixel Accuracy is defined as:

$$\mathrm{PA} = \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}(p_i = g_i) \tag{5}$$

where $\mathbb{1}(\cdot)$ is an indicator function that equals 1 if the predicted pixel $p_i$ matches the ground truth pixel $g_i$ and 0 otherwise, and $N$ is the total number of pixels. Pixel accuracy may not necessarily indicate good segmentation performance, especially when dealing with unbalanced classes. In such cases, it should ideally be higher than the accuracy reached by predicting only the majority class.
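The three metrics can be computed on binary masks as in the sketch below. The function names and the value of `eps` are illustrative choices; `eps` is also added to the DSC denominator here purely as a numerical safeguard for empty masks. The toy example shows why pixel accuracy alone can be misleading: a prediction that labels every pixel as background reaches high accuracy while its overlap with the tumour is zero.

```python
import numpy as np

def iou(pred, truth, eps=1e-7):
    """Intersection over Union of two binary masks."""
    inter = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    return inter / (union + eps)

def dsc(pred, truth, eps=1e-7):
    """Dice Similarity Coefficient of two binary masks."""
    inter = np.logical_and(pred, truth).sum()
    return 2.0 * inter / (pred.sum() + truth.sum() + eps)

def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float(np.mean(pred == truth))

# 10 x 10 ground truth with a 2 x 2 tumour, i.e. 4% foreground.
truth = np.zeros((10, 10), dtype=int)
truth[4:6, 4:6] = 1

all_background = np.zeros_like(truth)   # predicts no tumour at all
half_tumour = np.zeros_like(truth)
half_tumour[4:6, 4:5] = 1               # finds 2 of the 4 tumour pixels

acc_background = pixel_accuracy(all_background, truth)
iou_background = iou(all_background, truth)
dsc_background = dsc(all_background, truth)
```

Here the all-background prediction matches 96 of the 100 pixels, which is exactly the majority-class accuracy that a useful model should exceed.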

5.5 Flower Framework

The Flower framework (Beutel et al. 2022) manages the FL process and coordinates the collaboration between a central server and multiple participating institutions. Flower is particularly well-suited for extending FL to diverse client environments, including mobile and wireless devices. Furthermore, Flower's flexibility allows for the seamless integration of new algorithms, training strategies, and communication protocols, making it an ideal choice for medical applications of FL where continuous adaptation and improvement are required. The current server and strategy implementations assume synchronous FL.

5.6 Hardware Infrastructure

The experiments took place on a multi-node server configuration. We used the Infrastructure for Big Data and Scientific Computing (I.BI.S.CO) at the S.Co.P.E. Data Center, University of Naples Federico II (Barone et al. 2022). The system includes 36 nodes, 32 dedicated to computation, and 4 reserved for storage, meeting our requirements for simulating an FL environment. Each computational node features a DELL C4140 server equipped with 4 NVIDIA Tesla V100 GPUs, totaling 128 GPUs across the entire system.

6 Results and Discussion

We ran our experiments on I.BI.S.CO (see Section 5.6). The evaluation metrics are DSC, IoU, and Pixel Accuracy (see Section 5.4). Datasets are distributed among clients as described in Table 2, and the performance results for each client, comparing the baseline and FLAMES approaches, are reported in Table 3.

| Client | Dataset | Modality | Train images | Test images |
|---|---|---|---|---|
| 1 | Task01_BrainTumour_0 | MRI | 6223 | 1574 |
| 2 | Task01_BrainTumour_1 | MRI | 6130 | 1567 |
| 3 | Task01_BrainTumour_2 | MRI | 6217 | 1568 |
| 4 | Breast ultrasound | US | 165 | 43 |
| 5 | OTU_2d | US | 991 | 248 |

- Note: The “_0”, “_1” and “_2” in client datasets indicate the division of the Task01_BrainTumour dataset into three parts based on patient IDs.

| Client | Baseline IoU | Baseline DSC | Baseline pixel accuracy | FLAMES IoU | FLAMES DSC | FLAMES pixel accuracy |
|---|---|---|---|---|---|---|
| 1 | 0.70 | 0.78 | 99.23% | 0.71 | 0.78 | 99.22% |
| 2 | 0.70 | 0.77 | 99.22% | 0.68 | 0.76 | 99.22% |
| 3 | 0.70 | 0.79 | 99.22% | 0.70 | 0.78 | 99.20% |
| 4 | 0.00 | 0.00 | 91.94% | 0.42 | 0.51 | 94.66% |
| 5 | 0.00 | 0.00 | 90.30% | 0.59 | 0.69 | 95.90% |

As shown in Table 3, the baseline approach fails to generalise across all clients, particularly for ultrasound (US) data. Clients with larger datasets, such as client 1 (6223 train images) and client 2 (6130 train images), generally show better segmentation metrics (IoU and DSC) than those with smaller datasets (e.g., client 4 with 165 train images). US clients (clients 4–5) report IoU and DSC values of 0.00 on the test set, suggesting that the baseline model struggles to segment these data effectively within the fixed number of rounds. These results may be influenced by the use of FedAvg for aggregation, which weights each client's model by its number of images. Clients with more images, here the MRI clients, are therefore given greater weight in the aggregation and dominate the model updates. Hence, the non-IID nature of the datasets and the number of images strongly influence performance. As a result, this approach may prevent clients from specialising in their own modality and could introduce noise. The high pixel accuracy observed for the US clients despite zero overlap can be attributed to the model predicting most pixels as background, which is the majority class in the datasets.
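The extent of this imbalance can be checked with a short calculation on the training-set sizes of Table 2. The sketch assumes, as stated above, that the FedAvg weight of a client is proportional to its number of training images; the variable names are illustrative.

```python
# Training images per client, taken from Table 2.
train_images = {1: 6223, 2: 6130, 3: 6217, 4: 165, 5: 991}
modality = {1: "MRI", 2: "MRI", 3: "MRI", 4: "US", 5: "US"}

total = sum(train_images.values())
fedavg_weight = {cid: n / total for cid, n in train_images.items()}

# Sum the per-client weights within each modality.
share = {"MRI": 0.0, "US": 0.0}
for cid, weight in fedavg_weight.items():
    share[modality[cid]] += weight

for name, value in share.items():
    print(f"{name}: {value:.1%}")
# prints:
# MRI: 94.1%
# US: 5.9%
```

Under this assumption, the three MRI clients account for about 94% of the aggregation weight, and client 4 alone for less than 1%, so the single global model of the baseline is shaped almost entirely by MRI data.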

In contrast, FLAMES shows clear advantages, especially for US clients. While maintaining comparable performance for MRI clients (with changes of at most 0.02 in IoU and 0.01 in DSC), FLAMES raises the DSC of the US clients from 0.00 to 0.51 (client 4) and 0.69 (client 5). Client 4, despite having access to a limited number of images, benefits from collaboration with the other US dataset (client 5), even though the images originate from different organs and institutions. Hence, this approach is particularly advantageous for clients with limited data availability.

To assess the generalisation capability of the modality-specific models trained by the server, we tested them on a separate test set containing unseen images from multiple datasets and organ types. Results in Table 4 demonstrate that the model performs well on unseen test data, where images from various datasets and organs are mixed.

| Model | IoU | Dice | Accuracy |
|---|---|---|---|
| MRI | 0.57 | 0.67 | 0.99 |
| US | 0.53 | 0.62 | 0.95 |

7 Conclusions

FLAMES offers a robust solution for segmentation across diverse medical imaging datasets, addressing the challenges posed by non-IID and multimodal data in multi-organ tumour segmentation. Using a unified architecture can improve the collaboration between institutions handling the same modalities. The ability to manage multiple modality-specific models makes FLAMES more adaptable to real-world federated scenarios, where data heterogeneity is the norm. Moreover, the server can segment tumours in all the modalities and organs covered by the clients. Future studies could explore several directions: (i) implementing an asynchronous framework that allows clients of the same modality to continue training independently without waiting for others; (ii) scaling the framework to larger, more heterogeneous client pools; (iii) testing the server models on modalities and organs not present in the current clients' datasets; and (iv) exploring a decentralised, server-less federated architecture in which clients coordinate model updates directly, to reduce central bottlenecks and enhance robustness in environments without stable server access.

Acknowledgements

The authors thank the IBiSco project (Infrastructure for BIg data and Scientific COmputing), PON R&I 2014-2020 under Call 424-2018—Action II.1, for the support and use of the HPC Cluster. The authors extend special thanks to Dr. Luisa Carraciuolo and Eng. Davide Bottalico for their constant and continuous support in utilising the IBiSco HPC Cluster.

This work has been also supported by PNRR project FAIR - Future AI Research (PE00000013), under the NRRP MUR program funded by the NextGenerationEU.

This work has been also supported by G.A.N.D.A.L.F.—Gan Approaches for Non-IID Aiding Learning in Federations, CUP: E53D23008290006, PNRR—Missione 4 “Istruzione e Ricerca”–Componente C2 Investimento 1.1 “Fondo per il Programma Nazionale di Ricerca e Progetti di Rilevante Interesse Nazionale (PRIN)”. Open access publishing facilitated by Universita degli Studi di Napoli Federico II, as part of the Wiley - CRUI-CARE agreement.

Disclosure

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

- Task01_BrainTumour: http://medicaldecathlon.com/.

- Breast Ultrasound Images Dataset: https://www.kaggle.com/datasets/aryashah2k/breast-ultrasound-images-dataset.

- OTU_2D: https://figshare.com/articles/dataset/_zip/25058690?file=44222642.