Single Word Change Is All You Need: Using LLMs to Create Synthetic Training Examples for Text Classifiers

Funding: Prof. Alfredo Cuesta-Infante was supported by R&D project TED2021-129162B-C22, funded by MICIU/AEI/10.13039/501100011033/ and the European Union NextGenerationEU/PRTR, and R&D project PID2021-128362OB-I00, funded by MICIU/AEI/10.13039/501100011033/ and FEDER/UE.

ABSTRACT

In text classification, creating an adversarial example means subtly perturbing a few words in a sentence without changing its meaning, causing it to be misclassified by a classifier. A concerning observation is that a significant portion of adversarial examples generated by existing methods change only one word. This single-word perturbation vulnerability represents a significant weakness in classifiers, which malicious users can exploit to efficiently create a multitude of adversarial examples. This paper studies this problem and makes the following key contributions: (1) We introduce a novel metric $\rho$ to quantitatively assess a classifier's robustness against single-word perturbation. (2) We present SP-Attack, designed to exploit the single-word perturbation vulnerability, which achieves a higher attack success rate and better preserves sentence meaning while reducing computation costs compared to state-of-the-art adversarial methods. (3) We propose SP-Defence, which aims to improve $\rho$ by applying data augmentation in learning. Experimental results on 4 datasets and 2 masked language models show that SP-Defence improves $\rho$ by 14.6% and 13.9% and decreases the attack success rate of SP-Attack by 30.4% and 21.2% on the two classifiers, respectively, and also decreases the attack success rate of existing attack methods that involve multiple-word perturbations.

1 Introduction

With the proliferation of generative-AI-based applications, text classifiers have become mission-critical. These classifiers serve as guardrails for the outputs of applications built on top of large language models. A text classifier can be used to detect whether a chatbot is accidentally giving financial advice or leaking sensitive information; text classifiers have also long been used in security-critical applications such as misinformation detection (Wu et al. 2019; Torabi Asr and Taboada 2019; Zhou et al. 2019). At the same time, a steady stream of work has shown that these classifiers are vulnerable to perturbations: subtly modified sentences that, while practically indistinguishable from the original sentences, nonetheless change the output label of a classifier, exposing its failure modes. Deploying these classifiers alongside generative AI applications has raised the question of whether such failure modes can be identified before the classifiers are deployed and, if so, whether the classifiers can be updated to overcome them. One promising solution is to synthetically construct these “subtly modified” sentences, both to test the classifiers and to add to the training data so that the classifiers are more robust in the first place. Not surprisingly, such synthetic data can also be generated with large language models. In this paper, we propose and demonstrate the use of large language models to construct synthetic sentences that are practically indistinguishable from real sentences. In the classical literature, sentences that can trick these classifiers are known as “adversarial examples”. The process of finding these examples for a given classifier (or in general) is called an “adversarial attack”, and training the classifier to be robust to these attacks is called “defence”.
We use these terms here because they enable us to compare our method with other well-known methods using similar notation and language. A common metric for assessing the vulnerability of a classifier to such attacks is the Attack Success Rate (ASR), defined as the percentage of labelled sentences for which an adversarial sentence could be successfully designed. Recently proposed methods can achieve an ASR of over 80% (Jin et al. 2020; Li, Zhang, et al. 2021), making adversarial vulnerability a severe issue.

These attacks are black-box methods that access only the output of a classifier. They tweak sentences iteratively, changing one or more words and then querying the classifier for its output until an adversarial example is found. When we examined the adversarial sentences generated by these methods, we were surprised to find that in a significant portion of them, just one word had been changed. Table 1 shows, for three existing methods and for SP-Attack, the attack success rate and the percentage of generated adversarial examples that had only a single-word change (SP%). For example, 66% of adversarial examples generated by CLARE attacks (Li, Zhang, et al. 2021) on a movie review (MR) dataset (Pang and Lee 2005) changed only one word. Furthermore, many different adversarial sentences used the same word, albeit placed in different positions. For example, inserting the word “online” into a sentence repeatedly caused business news articles to be misclassified as technology news articles.

| Dataset | TextFooler ASR | TextFooler SP% | BAE ASR | BAE SP% | CLARE ASR | CLARE SP% | SP-Attack ASR | SP-Attack SP% |
|---|---|---|---|---|---|---|---|---|
| AG | 65.2 | 17.0 | 19.3 | 35.8 | 84.4 | 38.6 | 82.7 | 100 |
| MR | 72.4 | 49.2 | 41.3 | 66.6 | 90.0 | 66.2 | 93.5 | 100 |

Directly modelling this vulnerability is crucial, because both of these properties benefit attackers. First, the fewer words that are changed, the more semantically similar the adversarial example will be to the original, making it look more “innocent” and less likely to be detected by other methods. Second, knowing that a particular word can reliably change the classification enables an attacker to reduce the number of queries to the classifier in a black-box attack, which usually requires $O(l)$ queries, where $l$ is the sentence length.

Despite the attractive properties of direct methods for attackers, to the best of our knowledge, no existing work has designed a single-word perturbation-based attack, nor is there a specific metric to quantify the robustness of a classifier against single-word perturbations. We approach this problem in a comprehensive way.

First, we define a measure, the single-word flip capability, denoted as $\kappa$, for each word in the classifier's vocabulary. This measure, presented in Section 2, is the percentage of sentences whose classification can be successfully changed by substituting that word at one of the positions. The subset of such sentences that are fluent enough and similar enough to the original sentence would constitute legitimate attacks. $\kappa$ allows us to measure a text classifier's robustness against single-word perturbations, denoted as $\rho$: the percentage of sentence-word pairs in the Cartesian product of the dataset and the vocabulary for which the classifier's prediction does not flip regardless of the word's position, meaning a successful attack is not possible.

The vocabularies of the classifiers and the datasets we work with are too large for us to compute these metrics by brute force (30k words in the classifier's vocabulary, and datasets with 10k sentences of 30 words on average, i.e., roughly 30k × 10k × 30 = 9 × 10^9 candidate substitutions). Therefore, in Section 3, we propose an efficient upper bound algorithm (EUBA) for $\rho$, which works by finding as many successful attacks as possible within a given time budget. We use a first-order Taylor approximation to estimate the potential of each single-word substitution to lead to a successful attack. By prioritising substitutions with high potential, we can find most of the successful attacks by verifying only a subset of word substitutions rather than all of them, reducing the number of queries to the classifier.

With these two measures, we next design an efficient algorithm, SP-Attack, to attack a classifier using single-word perturbations while still maintaining fluency and semantic similarity (Section 4). SP-Attack pre-computes $\kappa$ for all words using EUBA, then performs an attack by changing only one word in a sentence, replacing it with a word of high flip capability (i.e., high $\kappa$) to trigger a misclassification. We show that SP-Attack is more efficient than existing attack methods because it pre-computes $\kappa$ and flips only one word.

To overcome this type of attack, in Section 5, we design SP-Defence, which leverages the first-order approximation to find single-word perturbations and augment the training set. We retrain the classifier to become more robust—not only against single-word perturbation attacks, but also against attacks involving multiple word changes.

We also carry out extensive experiments on masked language models, specifically BERT classifiers, in Section 6 to show that: (1) $\kappa$ and $\rho$ are necessary robustness metrics; (2) EUBA is an efficient way to avoid brute force when computing the robustness metric $\rho$; (3) SP-Attack can match or improve ASR compared to existing attacks that change multiple words, and better preserves semantic meaning; (4) SP-Defence is effective in defending against baseline attacks as well as against SP-Attack, thus improving classifier robustness.

2 Quantifying Classifier Robustness Against Single-Word Perturbations

In this section, we formulate the single-word perturbation adversarial attack problem and define two novel and useful metrics: $\kappa$, which represents how capable a particular word is of flipping a prediction, and $\rho$, which represents the robustness of a text classifier against single-word perturbation. We also refer the reader to Table 2 for other notation used throughout the paper.

| Notation | Description |
|---|---|
| $f$ | A text classifier |
| $V$ | The vocabulary used by $f$ |
| $D$ | A text classification training set |
| $D_f$ | The subset of $D$ that $f$ classifies correctly |
| $Q$ | The Cartesian product of $D_f$ and $V$ |
| $P(x, w)$ | The set of sentences constructed by replacing one word in $x$ with $w$ |
| $\kappa(w)$ | The single-word flip capability of $w$ |
| $\rho_{tr}$ | The robustness against single-word perturbation measured on the training set |
| $\rho_{te}$ | The robustness against single-word perturbation measured on the test set |
| ASR | Attack success rate |

| ASR | Attack success rate |

2.1 Single-Word Perturbation Attack Setup

We consider a restricted adversarial attack scenario in which the attacker can substitute only one word. Given a sentence $x$ with label $y$ that $f$ classifies correctly, the attacker replaces one word of $x$ to obtain $x'$. The attack is considered successful if:

- the prediction is flipped, i.e., $f(x') \neq y$;

- $x'$ is a fluent sentence;

- $x'$ is semantically similar to $x$.

2.2 Two Useful Metrics for Robustness

2.3 Definitions and Theorem

We then give formal definitions and a theorem.

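Definition 1. The single-word flip capability of word $w \in V$ on a classifier $f$ is

$$\kappa(w) = \frac{1}{|D_f|} \sum_{(x, y) \in D_f} \mathbb{1}\left[\exists\, x' \in P(x, w) : f(x') \neq y\right].$$

Definition 2. The robustness against single-word perturbation is

$$\rho = \frac{1}{|Q|} \sum_{((x, y), w) \in Q} \mathbb{1}\left[\forall\, x' \in P(x, w) : f(x') = y\right].$$

$\rho$ can be interpreted as the accuracy of $f$ on $Q$, where $f$ is considered correct on $((x, y), w)$ if all sentences in $P(x, w)$ are predicted as $y$.

Theorem 1. $\kappa$ and $\rho$ have the following relation:

$$\rho = 1 - \frac{1}{|V|} \sum_{w \in V} \kappa(w).$$

Proof. Since $Q = D_f \times V$, we have $|Q| = |D_f|\,|V|$, and for every $((x, y), w)$ the indicator in Definition 2 is the complement of the indicator in Definition 1. Hence

$$\rho = \frac{1}{|D_f|\,|V|} \sum_{w \in V} \sum_{(x, y) \in D_f} \left(1 - \mathbb{1}\left[\exists\, x' \in P(x, w) : f(x') \neq y\right]\right) = 1 - \frac{1}{|V|} \sum_{w \in V} \kappa(w). \qquad \blacksquare$$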
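To make Definitions 1 and 2 and Theorem 1 concrete, the following is a minimal brute-force sketch. It assumes sentences are lists of tokens and that the classifier is any callable returning a label; the keyword classifier, toy data and vocabulary are hypothetical illustrations, not the BERT classifiers used in our experiments. Brute force is only feasible at this toy scale, which is what motivates EUBA in Section 3.

```python
from typing import Callable, List, Sequence, Tuple

Sentence = List[str]
Classifier = Callable[[Sentence], int]


def perturbations(x: Sentence, w: str) -> List[Sentence]:
    """P(x, w): all sentences obtained by replacing exactly one position of x with w."""
    return [x[:i] + [w] + x[i + 1:] for i in range(len(x))]


def correctly_classified(f: Classifier, data: Sequence[Tuple[Sentence, int]]):
    """D_f: the examples that f already classifies correctly."""
    return [(x, y) for x, y in data if f(x) == y]


def flip_capability(f: Classifier, d_f, w: str) -> float:
    """kappa(w): fraction of (x, y) in D_f for which some x' in P(x, w) has f(x') != y."""
    flipped = sum(any(f(xp) != y for xp in perturbations(x, w)) for x, y in d_f)
    return flipped / len(d_f)


def robustness(f: Classifier, d_f, vocab: Sequence[str]) -> float:
    """rho: fraction of ((x, y), w) in Q = D_f x V where no x' in P(x, w) flips f."""
    robust = sum(
        all(f(xp) == y for xp in perturbations(x, w))
        for x, y in d_f
        for w in vocab
    )
    return robust / (len(d_f) * len(vocab))


# Hypothetical toy classifier: label 1 ("technology") iff the token "online" appears.
def toy_classifier(x: Sentence) -> int:
    return int("online" in x)


toy_data = [(["stocks", "rise", "today"], 0),
            (["markets", "fall", "sharply"], 0),
            (["new", "online", "store"], 1)]
toy_vocab = ["online", "bank", "stocks"]

d_f = correctly_classified(toy_classifier, toy_data)
kappa = {w: flip_capability(toy_classifier, d_f, w) for w in toy_vocab}
rho = robustness(toy_classifier, d_f, toy_vocab)
# Theorem 1: rho equals 1 minus the mean flip capability over the vocabulary.
assert abs(rho - (1 - sum(kappa.values()) / len(toy_vocab))) < 1e-12
```

Here “online” flips both business sentences ($\kappa = 2/3$), while “bank” and “stocks” only flip the third sentence by overwriting “online” ($\kappa = 1/3$ each), so $\rho = 1 - 4/9 = 5/9$.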

3 Efficient Estimation of the Metrics

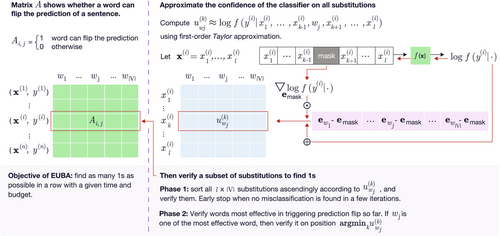

Directly computing the $\kappa$ and $\rho$ metrics is time-consuming, because each word in the vocabulary needs to be placed in every sentence at every position and then verified on the classifier. To overcome this issue, we instead estimate a lower bound of $\kappa$ (and thus, by Theorem 1, an upper bound of $\rho$). In this section, we first give an overview of the efficient upper bound algorithm (EUBA), then detail our first-order approximation. Figure 1 depicts the algorithm, and the pseudocode is presented in Appendix B.

3.1 Overview

To find a lower bound of $\kappa(w)$, we want to find as many $(x, y) \in D_f$ as possible such that $w$ can successfully flip the prediction. Since we want to compute $\kappa$ for all words, we can convert this into the symmetric task of finding, for each $(x, y) \in D_f$, as many words $w$ as possible such that the sentence can be successfully flipped by the word. This conversion enables a more efficient algorithm.

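Phase 1: We sort all substitutions in ascending order by their first-order approximated score (Section 3.2), so that the substitutions most likely to flip the prediction are verified on the classifier first. We stop the verification after $\beta$ consecutive unsuccessful attempts and assume all remaining substitutions are unsuccessful, where $\beta$ is a hyper-parameter.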

Phase 2: If a word can successfully flip many other sentences in class $y$, it is more likely to succeed on $x$. Therefore, we keep track of how many successful flips each word triggers in each class. We sort all words in descending order by their number of successful flips, and verify them. For each word $w$, we only verify the position where it is most likely to succeed (i.e., the position with the lowest approximated score). Similarly, Phase 2 stops after $\beta$ consecutive unsuccessful attempts.

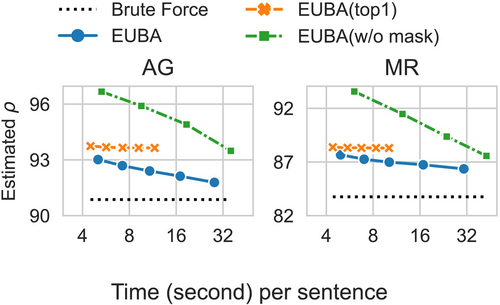

By using the first-order approximation, we can skip many substitutions that are unlikely to succeed, improving efficiency. The hyper-parameter $\beta$ controls the trade-off between efficiency and the gap between the lower bound and the exact $\kappa$. When setting $\beta = \infty$, EUBA finds the exact $\kappa$ and $\rho$. In Section 6.5, we show that efficiency can be improved substantially with a relatively small $\beta$, at the small cost of missing some successful flips. We also compare EUBA with two alternative designs.
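The two phases can be sketched as follows. This is a simplified, hypothetical reading of the procedure, not the pseudocode of Appendix B: `score(k, i, w)` stands for the first-order approximated score of placing word `w` at position `i` of the `k`-th sentence (lower meaning more likely to flip, as implied by the ascending sort), Phase 2 ranks the remaining sentence-word pairs by the per-class success counts collected in Phase 1 (kept static here), and `beta` is the number of consecutive unsuccessful verifications after which each phase stops.

```python
from collections import Counter
from typing import Callable, Dict, List, Sequence, Set, Tuple

Sentence = List[str]


def euba(f: Callable[[Sentence], int],
         d_f: Sequence[Tuple[Sentence, int]],
         vocab: Sequence[str],
         score: Callable[[int, int, str], float],
         beta: float) -> Dict[str, float]:
    """Return a lower bound on kappa(w) for every w in vocab (sketch)."""
    found: Set[Tuple[int, str]] = set()  # (sentence index, word) pairs verified to flip

    def flips(k: int, i: int, w: str) -> bool:
        x, y = d_f[k]
        return f(x[:i] + [w] + x[i + 1:]) != y

    # Phase 1: verify substitutions in ascending order of the approximated score.
    subs = sorted((score(k, i, w), k, i, w)
                  for k, (x, _) in enumerate(d_f)
                  for i in range(len(x)) for w in vocab)
    misses = 0
    for _, k, i, w in subs:
        if misses >= beta:
            break  # assume all remaining substitutions are unsuccessful
        if (k, w) in found:
            continue  # this pair already counts towards kappa(w)
        if flips(k, i, w):
            found.add((k, w))
            misses = 0
        else:
            misses += 1

    # Phase 2: words that flipped many sentences of class y are tried first on the
    # remaining sentences of class y, only at their single most promising position.
    per_class = Counter((w, d_f[k][1]) for k, w in found)
    remaining = [(k, w) for k in range(len(d_f)) for w in vocab if (k, w) not in found]
    remaining.sort(key=lambda kw: -per_class[(kw[1], d_f[kw[0]][1])])
    misses = 0
    for k, w in remaining:
        if misses >= beta:
            break
        best_i = min(range(len(d_f[k][0])), key=lambda i: score(k, i, w))
        if flips(k, best_i, w):
            found.add((k, w))
            misses = 0
        else:
            misses += 1

    return {w: sum(1 for _, v in found if v == w) / len(d_f) for w in vocab}
```

With `beta=float("inf")`, Phase 1 verifies every substitution and the result equals the brute-force $\kappa$; smaller values trade the tightness of the bound for fewer classifier queries.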

3.2 First-Order Approximation

4 Single-Word Perturbation Attack

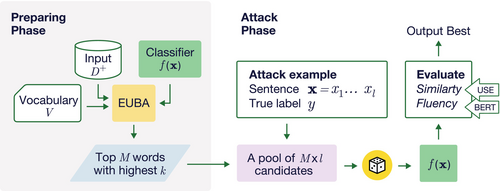

For attackers who try to find fluent and semantically similar adversarial examples, words with high $\kappa$ provide the potential for low-cost attacks. We propose SP-Attack, which first uses EUBA to estimate $\kappa$, then uses the top-$k$ words with the highest $\kappa$ to craft an attack. Figure 2 shows the attack pipeline.

There are two differences between SP-Attack and conventional black-box adversarial attacks. (1) SP-Attack substitutes only one word, using one of the top-$k$ words, whereas conventional methods can change multiple words and can use any word. As a result, SP-Attack is much more efficient. (2) Estimating $\kappa$ using EUBA requires computing the gradient of the classifier, whereas black-box adversarial attack methods do not. Even so, our attack method can be applied in real-world scenarios, for example, an insider attack in which someone with access to the classifier calculates the flip capability and leaks high-$\kappa$ words to other malicious users, who then use them to conduct attacks.
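A minimal sketch of the attack step, assuming $\kappa$ has already been estimated (for instance by EUBA) and stored in a dictionary. The `similarity` callable and the `min_sim` threshold are hypothetical stand-ins for the semantic-similarity (and, in practice, fluency) checks of Section 2.1. Among all successful single-word substitutions that pass the check, this sketch returns the one most similar to the original; that selection rule is an assumption, not necessarily the one in our pipeline.

```python
from typing import Callable, Dict, List, Optional

Sentence = List[str]


def top_k_words(kappa: Dict[str, float], k: int) -> List[str]:
    """The k words with the highest estimated flip capability."""
    return sorted(kappa, key=kappa.get, reverse=True)[:k]


def sp_attack(f: Callable[[Sentence], int], x: Sentence, y: int,
              words: List[str],
              similarity: Callable[[Sentence, Sentence], float],
              min_sim: float) -> Optional[Sentence]:
    """Try every single-word substitution with the given high-kappa words.

    Uses at most len(words) * len(x) classifier queries.
    """
    best, best_sim = None, -1.0
    for w in words:
        for i, token in enumerate(x):
            if token == w:
                continue  # not a perturbation
            candidate = x[:i] + [w] + x[i + 1:]
            if f(candidate) == y:
                continue  # prediction not flipped
            sim = similarity(x, candidate)
            if sim >= min_sim and sim > best_sim:
                best, best_sim = candidate, sim  # keep the most similar success
    return best
```

Because the candidate words are fixed in advance, the query budget is bounded by the number of top words times the sentence length, which is the source of the efficiency gain described above.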

5 Single-Word Perturbation Defence

We present a data augmentation strategy, SP-Defence, to improve the robustness of the classifier in a single-word perturbation scenario. Specifically, we design three augmentations.

Random augmentation randomly picks one word in a sentence, then replaces it with another random word in $V$. Since, according to our experimental results (Section 6.2), 95% of words have $\kappa$ of at least 6.5%, even pure randomness can sometimes alter the classifier's prediction.

Then we apply the substitution with the minimum first-order approximated score. Equation (5) is more efficient because it needs only one forward and backward pass on $x$ to compute the gradient, compared with $l$ forward and backward passes (one per masked position, where $l$ is the sentence length) in Equation (3). It is therefore more suitable for data augmentation, which usually involves large-scale training data.
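The contrast between the two approximations can be sketched with NumPy on a hypothetical bag-of-embeddings linear classifier, chosen only because its gradient has a closed form (a BERT classifier would need automatic differentiation). We assume the score is a HotFlip-style first-order estimate of the true-class log-probability after putting word $w$ at position $i$, $\log p_y(x) + (e_w - e_{x_i})^\top \nabla_{e_i} \log p_y(x)$, so that lower scores mark more promising substitutions. The masked variant instead expands around the sentence with $x_i$ replaced by a mask token, costing one pass per position. These forms are our assumption and may differ in detail from Equations (3) and (5).

```python
import numpy as np


def logprob_and_grad(ids, y, E, W, b):
    """log p_y and its gradient w.r.t. each token embedding for the toy model.

    Toy model (hypothetical): logits = (sum_i E[ids[i]]) @ W + b.
    Because embeddings are summed, every position shares the same gradient.
    """
    h = E[ids].sum(axis=0)
    z = h @ W + b
    z = z - z.max()  # numerical stability
    logp = z - np.log(np.exp(z).sum())
    p = np.exp(logp)
    grad = W[:, y] - W @ p  # d log p_y / d h
    return logp[y], grad


def scores_unmasked(ids, y, E, W, b):
    """One forward/backward pass on x; scores[i, w] ~ log p_y after w replaces x_i."""
    logp, g = logprob_and_grad(ids, y, E, W, b)
    return logp + (E @ g)[None, :] - (E[ids] @ g)[:, None]


def scores_masked(ids, y, E, W, b, mask_id):
    """One pass per position: mask x_i, then expand around the masked sentence."""
    rows = []
    for i in range(len(ids)):
        masked = list(ids)
        masked[i] = mask_id
        logp, g = logprob_and_grad(masked, y, E, W, b)
        rows.append(logp + E @ g - E[mask_id] @ g)
    return np.stack(rows)


def gradient_augment(ids, y, E, W, b):
    """Apply the single substitution with the minimum unmasked score."""
    s = scores_unmasked(ids, y, E, W, b)
    s[np.arange(len(ids)), ids] = np.inf  # exclude no-op substitutions
    i, w = np.unravel_index(np.argmin(s), s.shape)
    out = list(ids)
    out[i] = int(w)
    return out
```

Both functions return a (sentence length × vocabulary size) score matrix; the unmasked version reuses a single gradient, which is why it scales better to augmentation over a whole training set.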

In each training iteration, we apply gradient-based augmentation on half of the batch. For the other half, we randomly choose from original training data, random augmentation, or special word augmentation.
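A minimal sketch of random augmentation and the per-batch mixing, assuming tokenised sentences and a batch that is already shuffled. Because the special-word augmentation is not detailed here, `special_augment` is a hypothetical placeholder, as is `gradient_augment` (which could wrap the first-order selection of Equation (5)). Choosing uniformly, per example, among the three options for the second half of the batch is also our assumption.

```python
import random
from typing import Callable, List, Sequence, Tuple

Sentence = List[str]
Example = Tuple[Sentence, int]


def random_augment(x: Sentence, vocab: Sequence[str], rng: random.Random) -> Sentence:
    """Replace one randomly chosen word with a different, randomly chosen word."""
    i = rng.randrange(len(x))
    out = list(x)
    out[i] = rng.choice([w for w in vocab if w != x[i]])
    return out


def mix_batch(batch: Sequence[Example], vocab: Sequence[str],
              gradient_augment: Callable[[Sentence, int], Sentence],
              special_augment: Callable[[Sentence, int], Sentence],
              rng: random.Random) -> List[Example]:
    """Gradient-based augmentation on half the batch; the other half gets a random option."""
    half = len(batch) // 2
    mixed = [(gradient_augment(x, y), y) for x, y in batch[:half]]
    for x, y in batch[half:]:
        option = rng.choice(("original", "random", "special"))
        if option == "original":
            mixed.append((x, y))
        elif option == "random":
            mixed.append((random_augment(x, vocab, rng), y))
        else:
            mixed.append((special_augment(x, y), y))
    return mixed  # labels are kept unchanged by every augmentation
```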

6 Experiments

In this section, we conduct comprehensive experiments to support the following claims: the $\kappa$ and $\rho$ metrics are necessary for showing classifier robustness against single-word perturbation (Section 6.2); SP-Attack is as effective as conventional adversarial attacks that change multiple words (Section 6.3); SP-Defence improves the robustness of the evaluated classifiers in both single-word and multiple-word perturbation setups (Section 6.4); and EUBA is well designed and configured to achieve a tighter bound than alternative designs (Section 6.5).

6.1 Setup

Datasets. We conduct our experiments on four datasets: (1) AG, news topic classification; (2) MR, movie review sentiment classification (Pang and Lee 2005); (3) SST2, binary sentiment classification on the Stanford Sentiment Treebank (Wang et al. 2019); and (4) HATE, a hate speech detection dataset (Kurita et al. 2020). Dataset details are shown in Table 3.

Classifiers. We evaluate our method on two classifiers: the BERT-base classifier (Devlin et al. 2019) and the distilBERT-base classifier (Sanh et al. 2019). We select BERT models, which are masked language models, because they are trained to predict masked tokens. The vanilla classifiers are trained on the full training set for 20k batches with batch size 32, using the AdamW optimizer (Loshchilov and Hutter 2019) with a learning rate of 2e-5. We also include results for the RoBERTa-base classifier (Liu et al. 2019) in Appendix E.3.

Metrics. We use the following metrics:

- CAcc ↑: clean accuracy, the accuracy of the classifier measured on the original test set.

- $\rho$ ↑: quantifies classifier robustness in a single-word perturbation scenario.

  - $\rho_{tr}$ ↑ is measured on 1000 examples sampled from the training set.

  - $\rho_{te}$ ↑ is measured on the test set.

- ASR ↓: attack success rate, which shows how robust the classifier is against an adversarial attack method. It is defined as
$$\mathrm{ASR} = \frac{\#\,\text{successfully attacked examples}}{\#\,\text{examples correctly classified before the attack}}.$$

- ASR1 ↓: since we are interested in single-word perturbations, we constrain other attack methods, keep only their adversarial examples with exactly one perturbed word, and evaluate ASR on those.

Note that ASR also shows the efficacy of attack methods: a higher ASR means the attack method is more effective. We therefore use ASR ↑, and similarly ASR1 ↑, when comparing attacks.
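The relation between ASR, ASR1 and the SP% column of Table 1 can be sketched as follows, assuming each record describes the attack on one correctly classified example: whether it succeeded and how many words the returned adversarial example changed. The `AttackRecord` type is hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class AttackRecord:
    success: bool       # an adversarial example was found
    words_changed: int  # number of perturbed words in that example (0 if none)


def asr(records: Sequence[AttackRecord]) -> float:
    """Fraction of attacked examples with any successful adversarial example."""
    return sum(r.success for r in records) / len(records)


def asr1(records: Sequence[AttackRecord]) -> float:
    """Only successes that change exactly one word count."""
    return sum(r.success and r.words_changed == 1 for r in records) / len(records)


def sp_percent(records: Sequence[AttackRecord]) -> float:
    """Share (in %) of successful adversarial examples that change a single word."""
    return 100 * asr1(records) / asr(records)  # undefined if no attack succeeded
```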

Human evaluation. We collect human annotations to further verify the similarity and fluency of adversarial sentences. We ask human annotators to rate sentence similarity and sentence fluency on a 5-point Likert scale. In addition, we ask them to check whether the adversarial sentence preserves the original label. See Appendix E.2 for detailed settings.

Adversarial attack SOTA. We compare SP-Attack against three state-of-the-art methods: TextFooler (Jin et al. 2020), BAE (Garg and Ramakrishnan 2020) and CLARE (Li, Zhang, et al. 2021). When reporting ASR, any adversarial example generated with one or more word changes is considered a successful attack, whereas for ASR1, only adversarial sentences with a single-word change count as successful attacks.

Defence baselines. We compare SP-Defence with two baselines:

- Rand: We apply random perturbations to augment the training data at every step of training.

- A2T (Yoo and Qi 2021) is an efficient gradient-based adversarial attack method designed for adversarial training.

For Rand and SP-Defence, we fine-tune the classifier for another 20k batches. For A2T, we fine-tune the classifier for 3 additional epochs, as recommended by the authors.

6.2 Necessity of $\kappa$ and $\rho$ Metrics

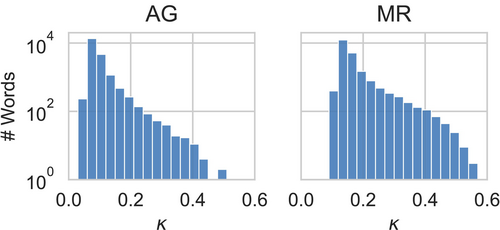

We measure the exact $\kappa$ by brute force for the BERT-base classifier on AG and MR. The measurement takes 197 and 115 GPU hours, respectively, on a single Nvidia V100 GPU. Figure 3 shows the histogram of $\kappa$. We find that 95% of words in the vocabulary can successfully attack at least 6.5% and 12.4% of examples, while the top 0.1% of words can change as many as 38.7% and 49.4% of examples on the two datasets, respectively. This shows that classifiers are extremely vulnerable to single-word perturbations. The distributions of $\kappa$ differ between the two tasks, showing that $\kappa$ is task-dependent: words have a lower average $\kappa$ on AG than on MR. We compute $\rho$ as  and  for the two datasets, respectively. See additional analysis of words with high $\kappa$ in Appendix D.

We highlight the necessity of the $\kappa$ and $\rho$ metrics. $\kappa$ shows the flip capability of each individual word, precisely revealing all vulnerabilities of the classifier under single-word perturbation. $\rho$ is derived from $\kappa$ and accounts for the number of different single-word perturbations that can successfully attack each sentence. It therefore quantifies classifier robustness in single-word perturbation scenarios more effectively than ASR, which only shows whether each sentence could be successfully attacked.

6.3 SP-Attack Performance

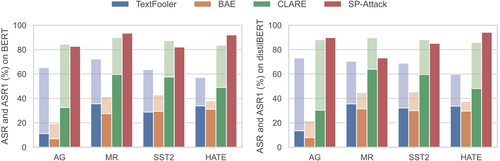

Figure 4 shows the ASR and ASR1 on all datasets for two different classifiers (left and right) and multiple attack methods. We make a few noteworthy observations. First, SP-Attack is the most effective method for finding adversarial examples based on single-word perturbations: its ASR1 is at least 73% in all cases and over 80% in 7 out of 8 cases, demonstrating the significant vulnerability of these classifiers. Second, other methods, even those that produce a notable number of single-word adversarial examples, have significantly lower ASR1 (where only single-word adversarial examples are counted) than ASR (where multi-word adversarial examples are also counted). For TextFooler, the difference between ASR and ASR1 is 38.3% on average across the 8 experiments. An obvious next question is whether multi-word substitutions are essential for achieving higher attack success rates. Our experiments show that SP-Attack achieves comparable ASR with single-word perturbations, and even beats the best multi-word attack in 4 out of 8 cases.

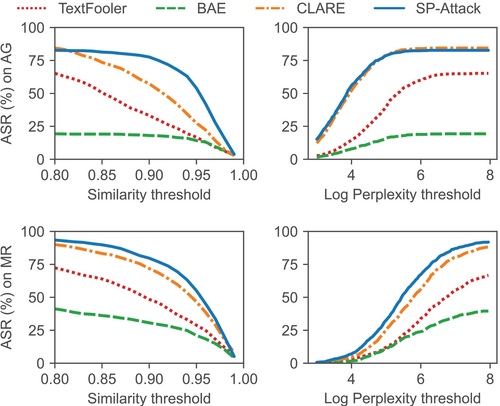

Single-word perturbations may cause poor fluency and/or low semantic similarity. To show that SP-Attack is comparable with multi-word attacks in terms of fluency and similarity, we plot the ASR under different similarity and perplexity thresholds. We measure similarity using the Universal Sentence Encoder (USE) and perplexity using a BERT language model fine-tuned on the dataset. Figure 5 shows the results on the AG and MR datasets: at the same threshold, SP-Attack achieves comparable or better ASR than the attack baselines. Table 4 shows the human evaluation results. The similarity and fluency scores are similar for all methods, but SP-Attack outperforms the baselines by 5 and 10 percentage points in preserving the original label on the AG and MR datasets, respectively. We report inter-annotator agreement in Appendix E.2.

| Dataset | Attack | Similarity | Fluency | Preserve label |
|---|---|---|---|---|
| AG | TextFooler | 3.40 | 3.41 | 80% |
|  | CLARE | 3.37 | 3.33 | 82% |
|  | SP-Attack | 3.47 | 3.39 | 87% |
| MR | TextFooler | 3.93 | 3.47 | 69% |
|  | CLARE | 3.96 | 3.41 | 83% |
|  | SP-Attack | 4.02 | 3.48 | 93% |
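The threshold curves in Figure 5 can be computed along the following lines, assuming each attacked example keeps the similarity (or perplexity) of its adversarial example, with `None` for failed attacks. An attack then counts as successful only if its adversarial example passes the threshold; the function name and data layout are hypothetical.

```python
from typing import Optional, Sequence


def asr_at_threshold(values: Sequence[Optional[float]], threshold: float,
                     higher_is_better: bool = True) -> float:
    """ASR when only adversarial examples passing the threshold count as successes.

    Use higher_is_better=True for similarity and False for perplexity.
    """
    def passes(v: Optional[float]) -> bool:
        if v is None:  # the attack failed on this example
            return False
        return v >= threshold if higher_is_better else v <= threshold

    return sum(passes(v) for v in values) / len(values)
```

Sweeping `threshold` over a grid and plotting the result for each attack yields one curve per method, so methods can be compared at equal similarity or perplexity.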

6.4 SP-Defence Performance

6.4.1 Can Our SP-Defence Overcome Our Attack?

We measure the accuracy and robustness of the vanilla and improved classifiers. The complete results are available in Appendix E.3.

Table 5 shows the CAcc, $\rho_{tr}$, $\rho_{te}$ and ASR of SP-Attack. We observe that all data augmentation and adversarial training methods have a small impact on CAcc; in most cases, the decrease is less than 1%. However, $\rho_{tr}$ and $\rho_{te}$ differ substantially, showing that classifiers with similar accuracy can differ greatly in their robustness to single-word attacks (on both training and test data). SP-Defence outperforms Rand and A2T on $\rho_{tr}$ and $\rho_{te}$ in all cases, showing that it effectively improves a classifier's robustness in a single-word perturbation scenario. Averaged over the 4 datasets, SP-Defence increases $\rho_{tr}$ by 14.6 and 13.9 percentage points and $\rho_{te}$ by 8.7 and 8.4 points, and decreases the ASR of SP-Attack by 30.4 and 21.2 points, on the BERT and distilBERT classifiers respectively.

| Model | Defence | AG CAcc ↑ | AG $\rho_{tr}$ ↑ | AG $\rho_{te}$ ↑ | AG ASR ↓ | MR CAcc ↑ | MR $\rho_{tr}$ ↑ | MR $\rho_{te}$ ↑ | MR ASR ↓ | SST2 CAcc ↑ | SST2 $\rho_{tr}$ ↑ | SST2 $\rho_{te}$ ↑ | SST2 ASR ↓ | HATE CAcc ↑ | HATE $\rho_{tr}$ ↑ | HATE $\rho_{te}$ ↑ | HATE ASR ↓ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BERT | NA | 93.7 | 91.5 | 90.1 | 82.7 | 87.7 | 86.4 | 77.2 | 93.5 | 80.6 | 74.7 | 76.6 | 82.1 | 94.5 | 72.6 | 71.4 | 92.1 |
|  | Rand | 92.0 | 96.3 | 93.1 | 66.2 | 87.5 | 98.2 | 80.7 | 84.2 | 79.8 | 82.1 | 79.7 | 77.9 | 94.3 | 86.3 | 83.2 | 89.2 |
|  | A2T | 93.4 | 96.2 | 92.9 | 62.7 | 88.3 | 90.4 | 76.2 | 69.3 | 80.4 | 76.5 | 74.9 | 69.5 | 93.4 | 87.1 | 82.1 | 87.3 |
|  | SP-D | 94.3 | 97.4 | 94.1 | 33.7 | 87.5 | 99.8 | 83.1 | 61.8 | 79.3 | 90.0 | 80.5 | 68.8 | 93.7 | 96.6 | 92.5 | 64.4 |
| distilBERT | NA | 94.0 | 91.7 | 91.6 | 89.9 | 85.9 | 85.6 | 72.7 | 73.2 | 78.7 | 75.8 | 74.3 | 85.1 | 93.3 | 73.8 | 72.9 | 94.2 |
|  | Rand | 94.2 | 95.4 | 92.0 | 69.5 | 86.0 | 96.5 | 78.1 | 92.2 | 78.0 | 81.1 | 78.3 | 87.2 | 93.1 | 85.6 | 82.6 | 91.3 |
|  | A2T | 94.0 | 95.3 | 92.6 | 64.8 | 85.0 | 85.1 | 73.4 | 88.8 | 78.3 | 77.7 | 76.1 | 80.5 | 93.2 | 86.1 | 82.0 | 89.5 |
|  | SP-D | 94.3 | 97.6 | 93.6 | 43.1 | 85.1 | 99.8 | 79.6 | 73.4 | 78.2 | 88.3 | 80.2 | 73.0 | 92.0 | 96.9 | 91.6 | 68.4 |

- Note: NA denotes the vanilla classifier. Bold values are the best results of each column according to the up/down arrows in the caption.

- Abbreviation: SP-D, SP-defence.
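The averages quoted in Section 6.4.1 can be re-derived from Table 5 as mean per-dataset differences between SP-Defence and the vanilla classifier, in percentage points. The short check below does this for the BERT rows and the distilBERT $\rho$ columns, using values copied from the table.

```python
# Values copied from Table 5, in dataset order AG, MR, SST2, HATE.
bert_na = {"rho_tr": [91.5, 86.4, 74.7, 72.6], "rho_te": [90.1, 77.2, 76.6, 71.4],
           "asr": [82.7, 93.5, 82.1, 92.1]}
bert_spd = {"rho_tr": [97.4, 99.8, 90.0, 96.6], "rho_te": [94.1, 83.1, 80.5, 92.5],
            "asr": [33.7, 61.8, 68.8, 64.4]}
distil_na = {"rho_tr": [91.7, 85.6, 75.8, 73.8], "rho_te": [91.6, 72.7, 74.3, 72.9]}
distil_spd = {"rho_tr": [97.6, 99.8, 88.3, 96.9], "rho_te": [93.6, 79.6, 80.2, 91.6]}


def mean_change(before, after):
    """Average of (after - before) over the four datasets, in percentage points."""
    return sum(a - b for a, b in zip(after, before)) / len(before)


bert_rho_tr_gain = mean_change(bert_na["rho_tr"], bert_spd["rho_tr"])        # ~14.65
bert_rho_te_gain = mean_change(bert_na["rho_te"], bert_spd["rho_te"])        # ~8.725
bert_asr_drop = -mean_change(bert_na["asr"], bert_spd["asr"])                # ~30.425
distil_rho_tr_gain = mean_change(distil_na["rho_tr"], distil_spd["rho_tr"])  # ~13.925
distil_rho_te_gain = mean_change(distil_na["rho_te"], distil_spd["rho_te"])  # ~8.375
```

These agree with the reported 14.6, 8.7, 30.4, 13.9 and 8.4 to within 0.05 percentage points.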

6.4.2 Can Our SP-Defence Overcome Other SOTA Attacks Constrained to Single-Word Adversarial Examples?

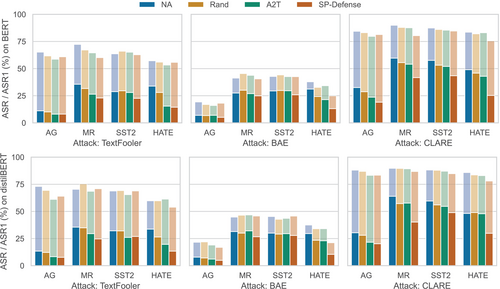

Figure 6 shows the ASR and ASR1 on vanilla and improved classifiers for different SOTA attacks (left to right). The ASR1 decreases by a large margin after the application of SP-Defence, which is consistent with the improvement on . The ASR also decreases in 20 out of 24 cases, showing the improvement of classifier robustness against a conventional multi-word adversarial attack setup.

6.5 Justifying Design Decisions of EUBA

- EUBA (top 1): We use the same gradient-based approximation, but for each word we only verify the top position, that is, the position with the lowest approximated score.

- EUBA (w/o mask): We do not replace each position with masks. Instead, we use the original sentence to directly compute a first-order approximation like Equation (5).

7 Related Work

The widespread deployment of text classifiers has exposed several vulnerabilities. Backdoors can be injected during training and later used to manipulate the classifier output (Li, Song, et al. 2021; Zhang, Xiao, et al. 2023; Jia et al. 2022; Yan et al. 2023; You et al. 2023; Du et al. 2024). The use cases of backdoor attacks are limited because they require interfering with training. Universal adversarial triggers can be found for classifiers (Wallace et al. 2019); these triggers, which usually involve multiple-word perturbations, change the classifier prediction for most sentences. Adversarial attack methods (Jin et al. 2020; Garg and Ramakrishnan 2020; Li et al. 2020; Li, Zhang, et al. 2021; Zhan et al. 2024; Zhan et al. 2023; Zhang, Huang, et al. 2023; Li et al. 2023; Ye et al. 2022) change a classifier's prediction by perturbing a few words or characters in the sentence. They maintain high semantic similarity and fluency, but are computationally expensive. SP-Attack attacks the classifier by trying just a few single-word perturbations, making it an efficient attack that maintains similarity and fluency comparable to those of conventional adversarial attacks.

Adversarial training is often applied to help classifiers resist the types of attacks described above. A2T (Yoo and Qi 2021) is an efficient attack designed specifically for adversarial training. Other defence methods, such as perplexity filtering (Qi et al. 2021) and synonym substitution (Wang et al. 2021), increase the inference-time complexity of the classifier. In contrast, SP-Defence adds no inference overhead and can effectively defend against adversarial attacks.

8 Conclusion

In this paper, we comprehensively analyse a restricted adversarial attack setup with single-word perturbation. We define two useful metrics, $\kappa$, the flip capability of a word, and $\rho$, the robustness of a text classifier against single-word perturbation attacks, and show that these metrics are useful for measuring classifier robustness. Furthermore, we propose SP-Attack, a single-word perturbation attack that achieves a comparable or better attack success rate than existing, more complicated attack methods on vanilla classifiers, while significantly reducing the effort needed to construct adversarial examples. Finally, we propose SP-Defence to improve classifier robustness in a single-word perturbation scenario, and show that it also improves robustness against multi-word perturbation attacks.

Acknowledgements

Prof. Cuesta-Infante was supported by R&D project TED2021-129162B-C22, funded by MICIU/AEI/10.13039/501100011033/ and the European Union NextGenerationEU/PRTR, and R&D project PID2021-128362OB-I00, funded by MICIU/AEI/10.13039/501100011033/ and FEDER/UE.

Open Research

Data Availability Statement

The data that support the findings of this study are openly available in Movie Review Data at https://www.cs.cornell.edu/people/pabo/movie-review-data/.