RETRACTED: ELSTM: An improved long short-term memory network language model for sequence learning

Abstract

The gated structure of the long short-term memory (LSTM) network alleviates the vanishing and exploding gradient problems of the recurrent neural network (RNN), and LSTM has received widespread attention in sequence learning tasks such as text analysis. Although LSTM handles long-range dependencies well, information is often lost when it is transmitted over long distances. We propose a new model, ELSTM, that addresses the computational complexity and gradient dispersion of the traditional LSTM model. The model simplifies the input gate of LSTM, lowers time complexity by removing some components, and improves the output gate. Introducing an exponential linear unit (ELU) activation layer alleviates the gradient dispersion problem. In a comparison with multiple existing models on language sequence prediction, the new model greatly reduces training time and lowers perplexity, showing good performance.

1 INTRODUCTION

Although traditional language models are good at capturing the statistical co-occurrence of entities, their ability to handle factual knowledge is often limited, they generalize poorly, and they have difficulty learning rare and unknown words (Ahn et al., 2016). Recurrent neural networks (RNNs) can map input sequences to output or prediction sequences in tasks such as speech recognition and machine translation. They perform well in natural language processing (NLP) and are widely used in language modelling tasks (Bappy et al., 2019; Bianchi et al., 2017; Ha et al., 2016). Because of vanishing and exploding gradients, when the gradient of the error function is propagated back through the network units, the contribution of long-distance inputs changes greatly and the gradient can no longer be detected. By examining text data, word embeddings can be used to estimate word likelihoods. They interpret the data by passing it through a system that derives rules for linguistic context, and the system then applies these rules in language tasks to predict or generate new phrases accurately.

Because gradients vanish in an RNN, the network cannot learn long-distance information well, and information is lost during transmission (Dinarelli & Tellier, 2016; Farsad & Goldsmith, 2018). LSTM is trained with stochastic gradient descent and handles long-range dependencies well. Stochastic gradient descent is an optimization procedure widely used in machine learning to find the parameters that best match predicted and observed outcomes; it is a crude yet effective method. LSTM has become an effective model for solving many learning problems related to sequential data (Greff et al., 2016; Hefron et al., 2017; Hochreiter, 1998).

As dimensions vanish with increasing sequence length, the traditional LSTM cannot capture the spatial correlation that may exist in the data, which increases the prediction error. When the information flow is high, the transition function between two hidden states of the LSTM is too shallow to capture the underlying structure in the sequence (Huangkang & Ying, 2018; Kim & Park, 2018). It is also challenging to use dropout to address overfitting in LSTMs. Dropout is a regularization technique in which input and recurrent connections to LSTM units are probabilistically excluded from activation and weight updates during training.

This paper proposes a new model called ELSTM, which eliminates the input cell state processing in the input gate, reduces the number of parameters, and lowers the complexity of the model. We also introduce an exponential linear unit (ELU) activation layer. In sentence sequence prediction experiments, the ELSTM model achieved lower perplexity when predicting unseen language sequences and effectively reduced the training time.

This paper is structured as follows. Section 2 presents existing work and its limitations. Section 3 presents the methodological framework and discusses the implementation of LSTM and ELSTM. Section 4 describes the training procedure, and Section 5 presents the experimental results. Finally, Section 6 concludes the paper.

2 RELATED WORKS

Since the LSTM architecture was first proposed, research has made many improvements to it. Krause et al. (2016) used a depth gate to connect the memory units of adjacent layers, introducing a gated linear relationship between the lower and upper recurrent units. They proposed a new architecture based on LSTM and showed that this structure can improve performance in machine translation and language modelling. To better handle word-level language modelling, Merity et al. used DropConnect as a form of recurrent regularization and proposed the weight-dropped LSTM (Lam et al., 2019). The mLSTM (Li et al., 2018) combines the traditional LSTM with the multiplicative recurrent neural network. In sequence modelling problems, it can apply a different recurrent transition function for each possible input. Sentiment analysis on Twitter is an automated method of text data analysis that establishes the sentiment or opinion of public tweets from different domains. Deep learning algorithms show promising test accuracy in assessing review tweets and in recognizing movie reviews (Gandhi et al., 2021).

The LSTM model performs well in multiple areas of natural language processing (Merity et al., 2017; Mohan & Gaitonde, 2018; Neil et al., 2016). As noted above, LSTM allows a whole phrase rather than a single word to be used as the input for prediction, which is considerably more practical and effective in NLP. LSTM-based text categorization has also been implemented with Python and the Keras framework. A latent relational language model (LRLM) has been proposed, which parameterizes the joint distribution of words and entities in a document based on a knowledge graph. It not only improves the performance of the language model but can also obtain the posterior of the entities in a given span of text through relation probabilities. Salem (2018) fed spatio-temporal features obtained from traffic data into the LSTM network and proposed an ST-LSTM model that meets real-time requirements and has higher accuracy. Strobelt et al. (2017) proposed the RLSTM model, which combines a residual framework with the traditional LSTM and constructs an attention network for sequential data by learning the changes in spatial input. Yukun Ma et al. extended the LSTM network with a hierarchical attention mechanism composed of target-level and sentence-level attention, and successfully incorporated emotion-related information into emotion classification.

LSTM is prone to accumulating errors during iterative training, requires a large amount of computation, and takes a long time to train. Backpropagation uses a gradient-based procedure such as stochastic gradient descent to search for the minimum of the error function in weight space. The weights that minimize the error function are then taken as the solution to the training problem. New methods for improving network convergence speed use time gates to strengthen the long-term dependence learning ability of LSTM, and attention-based methods are increasingly used in multivariate time series prediction models. Yu et al. designed a spatiotemporal convolutional neural network with fewer parameters and faster training speed (Tang et al., 2019). The STCN combines the benefits of the STI equations with the temporal convolutional network (TCN), predicting the target variables by mapping more information to their predicted temporal components.

3 MODELS

3.1 Long short-term memory

Unlike other deep learning architectures, an LSTM network is built from multiple LSTM units. The core of its architecture is a memory cell that can adjust its state with incoming information, together with non-linear gating units that regulate the inflow and outflow of information (Trinh et al., 2018). Because there may be delays of unknown length between significant events in a time series, LSTM networks are well suited to classifying, analysing, and forecasting time series data. LSTMs were created to solve the vanishing gradient problem that can arise when training conventional RNNs. Non-linearity is crucial because it lets successive layers build on one another: two consecutive linear layers have the same expressive power as a single linear layer (both can express exactly the same set of functions). LSTM can also learn from the data and exploit sequential dependencies to make predictions based on the latest context in the input sequence, alleviating the problem of vanishing gradients.

LSTM contains an input gate, an output gate, and a forget gate. Its gate structure allows LSTM to store and access information over time (Vermaak & Botha, 1998). A standard RNN is fed one input at a time and produces a single output. In backpropagation through time, however, both the current and the previous inputs are used. Each such group is called a timestep, and one timestep consists of several time series data points entering the RNN at the same time. LSTM uses gate structures to control information selectively, thereby alleviating the vanishing gradient defect of the traditional RNN (Wang et al., 2020). The input gate and output gate control the input and output of the unit respectively, and the forget gate controls the state of the unit.
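The gate interactions described above can be sketched for a single scalar unit. This is a minimal illustration of the standard LSTM update, not the authors' implementation. The parameter names (`wf`, `uf`, `bf`, and so on) and their values are hypothetical and chosen only for the example.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, squashing z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a standard scalar LSTM unit.

    p is a dict of hypothetical scalar weights: w* act on the input x,
    u* act on the previous hidden state h_prev, b* are biases.
    """
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate cell state
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    c = f * c_prev + i * g   # keep part of the old state, add new information
    h = o * math.tanh(c)     # expose part of the cell state as the hidden state
    return h, c


# Illustrative parameters only (assumed, not taken from the paper).
params = {k: 0.5 for k in ("wf", "uf", "wi", "ui", "wg", "ug", "wo", "uo")}
params.update({"bf": 1.0, "bi": 0.0, "bg": 0.0, "bo": 0.0})

h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:  # a toy input sequence
    h, c = lstm_step(x, h, c, params)
```

Because the cell state `c` is updated additively (`f * c_prev + i * g`), information can persist across many steps when the forget gate stays close to 1.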

3.2 Exponential linear unit

Compared with units that use other activation functions, ELU has improved learning characteristics, and its mean activation close to zero can make learning faster. When the input is small, ELU reduces the variation in forward propagation and in the information transmitted to the next layer. In this paper, introducing the ELU layer in place of the original activation function gives the model both robustness and low complexity, which simplifies the operation and alleviates the vanishing gradient problem.
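For reference, the ELU activation and its derivative can be written as follows. This sketch assumes the standard definition with scale parameter `alpha` (set to 1.0 here as an assumption; the paper does not state the value it uses).

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential linear unit: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def elu_grad(x: float, alpha: float = 1.0) -> float:
    """Derivative of ELU: 1 for x > 0, alpha * exp(x) otherwise."""
    return 1.0 if x > 0 else alpha * math.exp(x)


# Positive inputs pass through unchanged; negative inputs saturate towards -alpha.
values = [elu(x) for x in (-3.0, -1.0, 0.0, 1.0, 3.0)]
```

For positive inputs the derivative is exactly 1, so the gradient is not shrunk on that side. The negative branch allows negative outputs, which is what pushes the mean activation towards zero.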

3.3 ELSTM

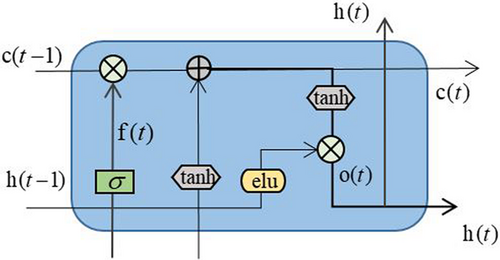

Although LSTM often performs well on data sequences with evenly distributed information, it does not perform well on sequences whose information flow is uneven between steps. This paper improves the input gate of LSTM by removing components from it, which simplifies the operation, and adds an ELU activation layer. The output gate determines the next hidden state, while the input gate determines what relevant information may be added from the current step. The model can selectively forget learned knowledge or learn new information by adjusting the forget gate and output gate. The forget gate effectively alleviates overfitting by selectively forgetting the information from the previous time step (Figure 1).
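The effect of swapping the output-gate activation can be illustrated in isolation. The sketch below covers only the substitution the paper states, ELU in place of the sigmoid in the output gate. It does not model the simplified input gate, because the text gives no equations for it. The function `output_step` and its scalar weights are hypothetical names introduced for this example.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def elu(z: float, alpha: float = 1.0) -> float:
    return z if z > 0 else alpha * (math.exp(z) - 1.0)


def output_step(x, h_prev, c, w_x, w_h, b, activation):
    """Hidden state from a scalar output gate: activation(w_x*x + w_h*h_prev + b) * tanh(c).

    `activation` is sigmoid for a standard LSTM output gate; passing elu
    instead is one possible reading of the ELSTM output gate (an assumption).
    """
    gate = activation(w_x * x + w_h * h_prev + b)
    return gate * math.tanh(c)


# Same inputs and (hypothetical) weights, two different gate activations.
h_sigmoid = output_step(2.0, 0.0, 1.0, 1.0, 1.0, 0.0, sigmoid)
h_elu = output_step(2.0, 0.0, 1.0, 1.0, 1.0, 0.0, elu)
```

Unlike a sigmoid gate, whose value lies in (0, 1), an ELU gate ranges over (-alpha, ∞), so under this reading it can amplify or invert the cell output rather than only attenuate it.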

As the time step advances, information in the ELSTM model is loaded and transmitted, and the past cell state or new information is selectively discarded. Saving the output information to the memory cell at a given point in time effectively prevents the loss of information over long time spans, and using longer context information allows natural language to be represented better.

4 TRAINING PROCEDURE

The experiment compares the traditional LSTM with several LSTM variants on a sequence task, next-word prediction, to assess the effectiveness of each model. At time step t, the model being trained predicts the input at time step t + 1. The model takes one-hot encoded words as input, converts them to embedding vectors, and processes one word vector at a time. The output layer is a softmax layer: the output vector is passed to the softmax function to generate the predicted distribution over the next value, in which each symbol has an associated probability and all probabilities sum to 1. Given a sequence of words already seen, the network is trained to predict the next word. N-gram modelling is among the earliest techniques for estimating the likelihood that a certain word follows a given phrase. Using the (n − 1) words that precede it, an n-gram model tries to predict the next word in a phrase.
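The softmax output step described above can be sketched as follows. The vocabulary and the scores (`logits`) are made-up values for illustration; in the actual model the scores would come from the recurrent layers.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)                        # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["the", "cat", "sat"]              # hypothetical three-word vocabulary
logits = [2.0, 1.0, 0.1]                   # hypothetical output-layer scores
probs = softmax(logits)

# The predicted next word is the symbol with the highest probability.
predicted = vocab[probs.index(max(probs))]
```

Subtracting the maximum score before exponentiating does not change the result but avoids overflow for large scores.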

5 EXPERIMENTS

5.1 Data

The value of the likelihood function is obtained by summing the probability density function. This is useful because it gives the probability that an event occurs within a given interval. A probability distribution, as used in maximum likelihood estimation, is a statistical function that gives the likelihood of each possible outcome of a process.

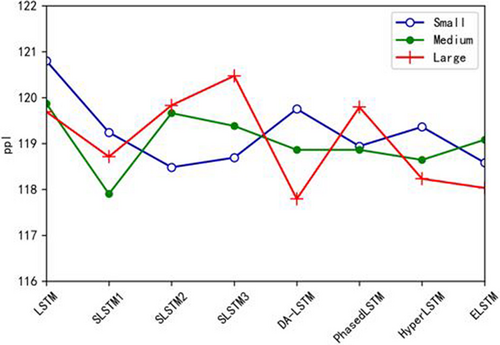

Perplexity (ppl) measures how well a probability distribution model predicts a sample, and it is commonly used as a criterion for judging the quality of a language model. The smaller the ppl, the higher the accuracy and the better the language model.
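As a sketch, perplexity can be computed from the probabilities a model assigns to the correct next words. This assumes the standard definition, the exponential of the average negative log-likelihood per token. The paper's own formula appears only as an image, so the correspondence is an assumption.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp(-(1/N) * sum(log p_i)) over the N predicted tokens."""
    n = len(token_probs)
    avg_neg_log_likelihood = -sum(math.log(p) for p in token_probs) / n
    return math.exp(avg_neg_log_likelihood)


# Hypothetical probabilities assigned to the correct next word at each step.
confident_model = [0.5, 0.4, 0.6, 0.5]
uncertain_model = [0.1, 0.05, 0.2, 0.1]

ppl_confident = perplexity(confident_model)
ppl_uncertain = perplexity(uncertain_model)   # larger than ppl_confident
```

A model that assigns probability 1/k to every correct word has perplexity k, so the value can be read as the effective number of equally likely choices the model faces at each step.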

5.2 Experiments and results

Three experimental configurations were used, with the model size increasing from one configuration to the next, as listed in Table 1.

| Parameter | Small | Medium | Large |
|---|---|---|---|
| Num layers | 2 | 2 | 2 |
| Batch size | 20 | 20 | 20 |
| Hidden size | 200 | 650 | 1500 |
| Num steps | 20 | 35 | 35 |
| Init scale | 0.1 | 0.05 | 0.04 |
| Max grad_norm | 5.0 | 5.0 | 10.0 |
| Epoch start_decay | 4 | 6 | 14 |
| Max epoch | 13 | 39 | 55 |
| Dropout | 0.0 | 0.5 | 0.65 |
| Lr decay | 0.5 | 0.8 | 1.0/1.15 |
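
The three configurations can be written down as plain data, which also makes it possible to sketch how "Epoch start_decay" and "Lr decay" might interact. The decay rule below is an assumption: the paper does not state the schedule, so this follows the common convention of multiplying the learning rate by the decay factor once per epoch after the start epoch. The base learning rate `base_lr` is hypothetical, and the entry "1.0/1.15" is read as a quotient.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    num_layers: int
    batch_size: int
    hidden_size: int
    num_steps: int
    init_scale: float
    max_grad_norm: float
    epoch_start_decay: int
    max_epoch: int
    dropout: float
    lr_decay: float


# Values copied from Table 1; "1.0/1.15" is interpreted as a division (assumption).
SMALL = Config(2, 20, 200, 20, 0.1, 5.0, 4, 13, 0.0, 0.5)
MEDIUM = Config(2, 20, 650, 35, 0.05, 5.0, 6, 39, 0.5, 0.8)
LARGE = Config(2, 20, 1500, 35, 0.04, 10.0, 14, 55, 0.65, 1.0 / 1.15)


def learning_rate(cfg: Config, epoch: int, base_lr: float = 1.0) -> float:
    """Assumed schedule: constant until epoch_start_decay, then exponential decay.

    epoch is 1-based; base_lr is a hypothetical starting value.
    """
    return base_lr * cfg.lr_decay ** max(epoch - cfg.epoch_start_decay, 0)


# Learning rate per epoch for the small configuration under this assumed rule.
small_schedule = [learning_rate(SMALL, e) for e in range(1, SMALL.max_epoch + 1)]
```

Under this reading, the small configuration trains at the base rate for four epochs and then halves the rate every epoch until epoch 13.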

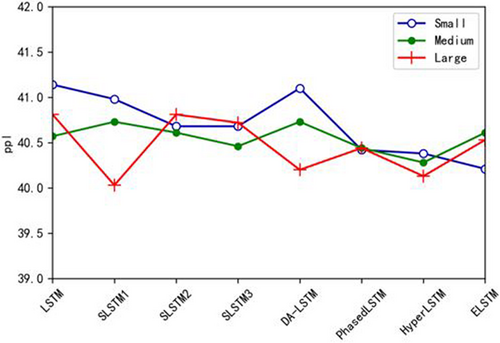

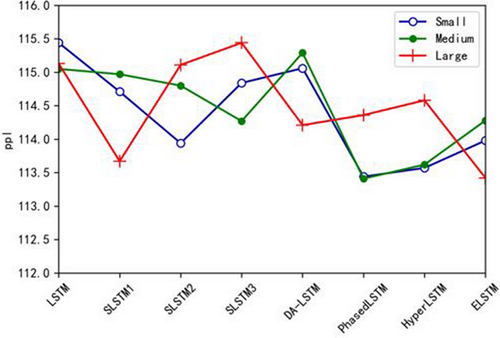

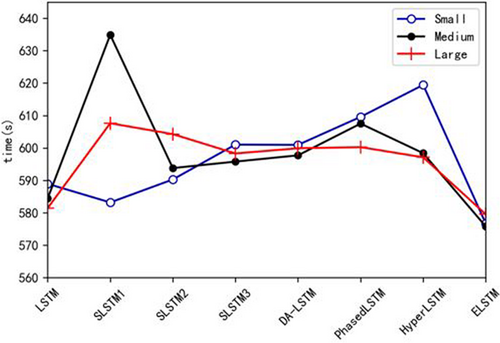

Yu et al. (2017) proposed three simplified LSTM variants, and their experiments show that when the LSTM layer is simplified in different ways, the parameter configuration that achieves the best results often differs. The Phased LSTM model extends the LSTM network with an additional time gate, k_t. Updates to the cell state c_t and the hidden state h_t are permitted only when the gate is open, and the gate is controlled by an independent rhythmic oscillation specified by three parameters. Zhang et al. (2019) showed that Phasedlstm accelerates the convergence of LSTM by reducing the amount of computation by several orders of magnitude at run time. David proposed the Hyperlstm model, which generates non-shared weights for the traditional LSTM. LSTMs help preserve the error that is backpropagated through time and layers. LSTM is an artificial RNN architecture with feedback connections that allow it to process entire sequences of data in addition to single data points. Only a few parameters are needed in the calculation, and good training results are obtained in sequence tasks. The DA-LSTM can effectively process sequence data with uneven information distribution and reduce the amount of computation.

In this experiment, we first reduce the components of the input gate, then replace the activation function of the output gate with various activation functions and compare the results. We compare the new model with the latest LSTM variants to demonstrate the advantages of the ELSTM model. We obtained different experimental results by successively increasing the size of the model and the number of training epochs, and by analysing the language sequences predicted by the different models (Figures 2 and 3).

Among the three simplified LSTM variants, SLSTM1 performs well in the large configuration, but its performance in the other configurations is not prominent. With different configurations, the final performance of SLIM1, SLIM2 and SLIM3 differs considerably and is strongly affected by the parameters. Compared with other LSTM variants, these models are not very stable (Figures 4 and 5).

DA-LSTM performs well during training, especially in the large configuration, but it did not perform as well as other variants during testing. Phasedlstm and Hyperlstm are stable as the experimental configuration changes and perform well in all configurations. In the experiment, if only the gate is updated without changing the activation function, the result obtained is not optimal. The performance of ELSTM across the three configurations is more stable than that of the other LSTM variants, and the ppl obtained in the experiment is generally low.

As shown in Figure 5, compared with other LSTM variants (Gated Recurrent Units (GRUs), Depth Gated RNNs, and Clockwork RNNs), ELSTM has more stable performance and effectively reduces time consumption. The simplified gate structure of ELSTM reduces the amount of computation and greatly shortens the convergence time. In a comparison of many different activation functions, the ELU activation function shows the advantage of fast convergence.

6 CONCLUSION

To address problems of the traditional LSTM model such as too many parameters, a large amount of computation, and vanishing gradients, we propose a new model called ELSTM. ELSTM simplifies the components of the original LSTM input gate and uses the ELU function to replace the sigmoid in the original LSTM output gate. Experiments show that ELSTM performs better and more stably than the traditional LSTM in sequence prediction. It adjusts the information flow in the gate structure, chooses whether to delete or add information, and fully integrates new information.

ACKNOWLEDGEMENTS

This work was supported by the National Key R&D Program of China (Grant No. 2018YFC0809700, 2018YFF0301000, and 2018YFC0704800), the National Natural Science Foundation of China (Grant No. NSF91746207), the Beijing Postdoctoral Research Foundation (Grant No. ZZ-2019-76), the China Postdoctoral Science Foundation (Grant No. 2019M660540), and the Key R&D Program of Xinjiang Province (Grant No. 2020B03001-2).

CONFLICT OF INTEREST

The authors declare no conflict of interest.

Biographies

Zhi Li is a postgraduate working in the Criminal Investigation Corps of the Public Security Department of Xinjiang Uygur Autonomous Region, where he is currently the deputy leader of the Serious Crimes Detachment. He has long been engaged in the investigation of major criminal cases such as homicide and firearms cases. He graduated from the Law Department of Southwest University of Political Science and Law in 2001 with a BS degree in law, and from the Law Department of Xinjiang University in 2010 with an MS degree in law. His research interests include crime mechanism, data mining, and artificial intelligence.

Qing Wang was born in Linyi, Shandong Province, China in 1998. In 2021, she received her BS degree from Shandong University of Technology. Currently, she is a postgraduate in Artificial Intelligence at Zhejiang Gongshang University. Her research interests include machine learning, data mining, and natural language processing.

Jia-Qiang Wang received his MS and PhD degrees from Shandong University of Technology (SDUT) and Hebei University of Technology (Hebut) in 2013 and 2018, respectively. Currently, he is the Chief Expert of Smart City in China Unicom Xiongan Industrial Internet Co., Ltd. His research interests include biometrics, machine learning, and data mining.

Han-Bing Qu holds a doctorate and is a researcher at the Beijing Institute of New Technology Application. He is currently the director of the Artificial Intelligence and Big Data Research Center of Beijing Academy of Science and Technology and the director of Beijing Artificial Intelligence Society. He graduated from Harbin University of Technology in 2003 with an MS degree in control theory and control engineering, and received a PhD degree in pattern recognition and intelligent systems from the Institute of Automation, Chinese Academy of Sciences, in 2007. His main research interests are machine learning, data mining, and computer vision.

Ji-Chang Dong holds a doctorate and is a professor in the School of Economics and Management of the University of Chinese Academy of Sciences. He is currently the Vice President of the University of Chinese Academy of Sciences, a member of the Management Science and Engineering Discipline Review Group of the Academic Degrees Committee of the State Council, and a standing director of the China Management Modernization Research Association. In July 2003, he received a PhD degree from the Academy of Mathematics and Systems Science, Chinese Academy of Sciences. His research interests include decision analysis and decision support systems, and industrial economy and management.

Zhi Dong holds a doctorate and is a lecturer in the School of Innovation and Entrepreneurship, University of Chinese Academy of Sciences. In July 2017, he received a PhD degree from the School of Economics and Management, University of Chinese Academy of Sciences. His research interests include decision-making analysis, risk management, and policy research.

Open Research

DATA AVAILABILITY STATEMENT

No datasets were generated or analyzed during the current study.