The effect of reward expectation on the time course of perceptual decisions

Abstract

Perceptual discriminations can be strongly biased by the expected reward for a correct decision, but the neural mechanisms underlying this influence are still only partially understood. Using functional magnetic resonance imaging (fMRI) during a task requiring subjects to arbitrarily associate a visual stimulus with a specific action, we have recently shown that perceptual decisions are encoded within the same sensory-motor regions responsible for planning and executing specific motor actions. Here we examined whether these regions additionally encode the amount of expected reward for a perceptual decision. Using a task requiring subjects to associate a gradually unmasked female vs. male picture with a spatially directed hand pointing or saccadic eye movement, we examined whether the fMRI time course of effector-selective regions was modulated by the amount of expected reward. In both the pointing-selective parietal reach region (PRR) and the saccade-selective posterior intraparietal sulcus region (pIPS), reward-related modulations were observed only after the onset of the stimulus, during decision formation. However, while in the PRR these modulations were specific for the preferred pointing response, the pIPS showed greater activity relative to neutral conditions when either a saccadic or a pointing movement was associated with a greater reward. Interestingly, the fusiform face area showed a similar reward-related but response-independent modulation, consistent with a general motivational signal rather than with a mechanism for biasing specific sensory or motor representations. Together, our results support an account of perception as a process of probabilistic inference in which top-down and bottom-up information are integrated at every level of the cortical hierarchy.

Introduction

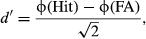

The categorization process during perceptual decision-making is not only guided by the sensory information provided by the stimulus, that is, sensory evidence, but is also strongly biased by prior expectations about the probability of its occurrence or the reward associated with its correct discrimination. A traditional account of the combined influence of these factors on perceptual decision-making is provided by signal detection theory (SDT), which postulates a sensitivity parameter (d′) quantifying the effect of sensory evidence and a criterion (C) parameter quantifying the effect of prior knowledge about stimulus probability or payoff (Swets et al., 1961; Green & Swets, 1966).
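For reference, in the standard equal-variance Gaussian version of SDT both parameters are computed from the hit rate H and the false-alarm rate F, with z denoting the inverse of the standard normal cumulative distribution function:

```latex
d' = z(H) - z(F), \qquad C = -\tfrac{1}{2}\,\bigl[z(H) + z(F)\bigr]
```

With this convention, C = 0 indicates no response bias, and negative values indicate a liberal bias toward whichever response the analysis treats as "signal" (for example, the pointing response when conditions are coded by response effector).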

More recent conceptualizations of the decision process, such as the drift diffusion model, which accounts for the variability in response times in addition to choice probabilities, have elegantly reinterpreted the original SDT idea of a combined influence of prior knowledge and sensory evidence as a dynamic process in which sensory information is accumulated over time from a starting point to a decision bound (Smith & Ratcliff, 2004; Ratcliff & McKoon, 2008). Within this framework, the amount of sensory evidence in the stimulus is expected to determine the rate of accumulation (i.e., the drift rate), whereas factors such as stimulus probability or payoff have been associated with a shift of the starting point of the accumulation process toward the bound of the more likely or more highly rewarded response option (Ratcliff et al., 1999; Bogacz et al., 2006; Diederich & Busemeyer, 2006).
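The distinction between the two mechanisms can be illustrated with a minimal simulation of a symmetric two-bound diffusion process. This is a sketch under assumed parameter values (bounds at ±1, unit noise, a 5 ms time step), not a model fitted in this study, which relies on SDT parameters: shifting the starting point toward the upper bound raises the proportion of upper-bound choices even with zero drift, whereas a positive drift rate raises it by changing the rate of accumulation.

```python
import random


def simulate_ddm(drift, start, bound=1.0, noise=1.0, dt=0.005, max_t=5.0, rng=None):
    """Simulate one trial of a two-bound drift diffusion process.

    Evidence x starts at `start` (between -bound and +bound) and on each step
    changes by drift*dt plus Gaussian noise with SD noise*sqrt(dt), until it
    reaches +bound (upper choice) or -bound (lower choice).
    Returns (choice, decision_time); choice is +1, -1, or 0 if max_t elapses.
    """
    rng = rng or random.Random()
    x, t = start, 0.0
    sd = noise * dt ** 0.5
    while t < max_t:
        x += drift * dt + rng.gauss(0.0, sd)
        t += dt
        if x >= bound:
            return 1, t
        if x <= -bound:
            return -1, t
    return 0, t


def p_upper(drift, start, n=1000, seed=0):
    """Proportion of n simulated trials ending at the upper bound."""
    rng = random.Random(seed)
    choices = [simulate_ddm(drift, start, rng=rng)[0] for _ in range(n)]
    return sum(c == 1 for c in choices) / n


print("no bias, no drift:   ", p_upper(0.0, 0.0))
print("starting-point shift:", p_upper(0.0, 0.3))
print("drift toward upper:  ", p_upper(1.0, 0.0))
```

With zero drift, the analytical probability of reaching the upper bound is (start + 1)/2, i.e., 0.65 for a starting point of 0.3, so the simulated starting-point condition should return a value close to that.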

At the neural level, however, the mechanisms underlying the integration of these two sources of information are still under active investigation and not yet fully understood. According to dominant neurobiological models of decision-making, the process of evidence accumulation during perceptual decision-making is not localized within a specialized higher cognitive center independent of the sensory-motor system, but is intrinsically represented within the same sensory-motor structures that guide body movements (Gold & Shadlen, 2007; Shadlen et al., 2008; Cisek & Kalaska, 2010). Consistent with this ‘action-based’ or ‘intentional’ framework, human studies on perceptual decision-making have shown that decision-related activity, in terms of modulations by the amount/strength of sensory evidence for a certain response, can be observed within the same sensory-motor regions that govern the planning and execution of the actions used to report the decision (Ploran et al., 2007; Tosoni et al., 2008; Donner et al., 2009; Oliveira et al., 2010; Selen et al., 2012; Erickson & Kayser, 2013). For example, by recording functional magnetic resonance imaging (fMRI) activity during a task requiring human subjects to arbitrarily associate a visual stimulus (a place or a face picture) with a specific action (a hand pointing or a saccadic eye movement), we have shown that activity in pointing- and saccade-selective regions of the posterior parietal cortex reflects the strength of the sensory evidence toward the preferred response (Tosoni et al., 2008).

Importantly, by using a paradigm in which the sensory stimulus was gradually unmasked to maximize the temporal separation between evidence accumulation and action planning, we subsequently showed that activity related to evidence accumulation was functionally dissociated in the pointing- vs. saccade-selective regions of the posterior parietal cortex (Tosoni et al., 2014). Indeed, while sensory evidence modulations in the parietal reach region (PRR) were specific for the stimulus category associated with the preferred pointing response, they were effector-independent in the saccade-selective posterior intraparietal sulcus region (pIPS) (i.e., they occurred in an eye movement region during decisions that called for either a saccadic eye movement or a hand pointing response). Interestingly, using a perceptual decision task in which monkeys indicated the direction of a random-dot motion stimulus by making an eye or a hand movement toward a left or a right choice target, a recent neurophysiological study described an analogous functional dissociation between evidence accumulation signals in the saccade-selective lateral intraparietal (LIP) region and the reach-related medial intraparietal (MIP) region. While LIP accumulated evidence during both eye- and hand-movement trials, such modulations were specific for hand-movement trials in MIP (de Lafuente et al., 2015).

However, although these studies have suggested a tight association between decision-related signals and motor-related regions, the observation of effector-independent decision modulations in a saccade-selective region is partially at odds with a strict interpretation of the ‘action-based’ framework and instead suggests a more abstract view of decision signals/mechanisms in the brain (Freedman & Assad, 2011).

The neural mechanisms underlying modulations by prior knowledge, and in particular the effect of reward expectation on perceptual decisions, however, remain much more elusive and largely unaddressed. One intriguing possibility is that reward information interacts with sensory evidence in the same sensory-motor regions implicated in the evidence accumulation process during perceptual decision-making. A recent study showed that, when monkeys were trained to select between two visual targets based on their desirability by executing an eye or a hand movement, LIP and MIP also differentially encoded action-specific signals during reward-based decisions (Kubanek & Snyder, 2015). In particular, while LIP activity appeared to be modulated by the value or desirability of a target in the receptive field both in trials in which it was selected via an eye movement and in trials in which it was selected via a hand movement, MIP appeared to encode target desirability only during hand movement trials.

Here, we examined whether the pointing- and saccade-selective regions exhibiting evidence accumulation signals in our previous studies were additionally modulated by the amount of expected reward associated with a decision executed with the preferred/non-preferred response effector. As in our previous study, the task required subjects to discriminate a noisy sensory stimulus (a male or a female picture) that was gradually unmasked over time at one of two levels (an evidence level, at which the stimulus was classified with ~ 85% accuracy, and a noise level, at which the stimulus remained substantially noisy) and to arbitrarily associate this stimulus with a specific action (a hand pointing or a saccadic eye movement). In the current study, however, the visual stimulus was preceded by a reward cue indicating the reward context of the trial, that is, whether one of the two movements/stimulus categories would be associated with a higher reward or whether both would be equally rewarded.

We first tested whether these reward-biased conditions modulated the subjects’ behavioral performance by examining the effect of sensory evidence (evidence vs. noise trials) and of reward bias (biased vs. unbiased reward conditions, with separate coding for conditions with a congruent/incongruent association between the reward bias and the stimulus evidence) on discrimination accuracy and on the SDT parameters, with sensory evidence expected to affect the d′ parameter and reward the C parameter. We next measured modulations by reward expectation of the activity of pointing- and saccade-selective regions by testing (i) whether reward modulations were specifically observed during evidence accumulation (i.e., during the stimulus unmasking period) or already before it, during the presentation of the reward cue indicating the reward context of the trial; (ii) whether reward modulations were comparable across trials with different levels of sensory evidence (evidence vs. noise) or were stronger during either evidence or noise trials; and (iii) whether the functional association between reward- and action-related signals in pointing- and saccade-selective regions was similar to that observed for sensory evidence modulations, that is, action-dependent in the pointing-selective regions and action-independent in the saccade-selective regions.

Notably, since previous studies have shown that the interaction between prior expectation and sensory signals can be observed both at the integration stage in sensory-motor structures (Rorie et al., 2010; Summerfield & Koechlin, 2010; Hanks et al., 2011; Mulder et al., 2012; Rao et al., 2012; de Lange et al., 2013) and at the sensory representation stage in sensory-related regions (Kok et al., 2012; Cicmil et al., 2015), we also investigated the effect of reward expectation on the time course of perceptual decisions in the face-selective fusiform face area (FFA).

Materials and methods

Subjects

The study is a follow-up of our recent work on perceptual decision-making (Tosoni et al., 2014) and was conducted on the same group of fifteen right-handed subjects (nine females, mean age 25 ± 4 years) who participated in the original experiment. Participants gave their written informed consent, and the experimental protocol was approved by the Human Studies Committee of G. D'Annunzio Chieti University.

Experimental paradigm

The experimental paradigm closely followed our previous work on perceptual decision (Tosoni et al., 2014) with the addition of a cue signal, informing subjects about the expected reward associated with the possible responses. Notably, our previous paper (Tosoni et al., 2014) only included the neutral condition, which in the current work was used as a contrast baseline for the reward conditions.

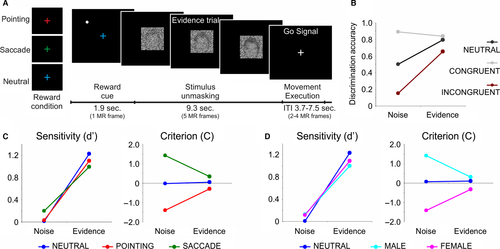

The original paradigm (Fig. 1A) involved the presentation, on each trial, of a noise-occluded face picture (male or female) that was gradually unmasked for 9.345 s at one of two rates: a high unmasking rate (evidence trials), selected to yield a correct discrimination in about 85% of the trials (see below), and a low unmasking rate (noise trials), in which the picture remained substantially noisy for the entire interval, reaching 10% of sensory evidence at the end of the unmasking period. Following the offset of the noisy picture (go signal), subjects indicated whether they had seen a male or a female picture by making either a saccadic eye movement or a pointing movement, according to an arbitrary stimulus-response mapping counterbalanced across subjects (see below), to the remembered location of a visual target presented at the beginning of the trial on the right or left side of the screen. During the stimulus unmasking period, subjects were required to hold down a button with their right hand while maintaining central fixation. Only after the image had disappeared were they instructed either to release the button and rotate their wrist to point in the direction of the target while keeping central fixation (pointing decisions), or to move their eyes in the direction of the target while continuing to hold the button (saccade decisions), and then to return immediately to the starting position.

Here, in addition to perceptual evidence, we also manipulated the expected reward associated with the decision by changing the color of the fixation cross presented at the beginning of each trial along with the peripheral target (Fig. 1A). More specifically, each trial began with the presentation of a visual cue for 1.869 s, which consisted of a colored central fixation cross indicating the reward context of the upcoming perceptual decision, that is, whether one of the two movements/stimulus categories would be associated with a higher reward or whether both would be equally rewarded. In particular, a blue cross indicated a symmetric reward context in which the two choices were equally rewarded with three points each (neutral reward condition), whereas a red or green cross indicated a greater reward for one choice over the other in a ratio of 5 : 1 points (biased reward condition). Reward points had motivational significance for the subjects, as they were informed before the beginning of the experiment that the participant obtaining the highest score would receive a financial bonus in addition to the standard compensation for participating in the study. Importantly, participants were told that only correct discriminations would be rewarded, and they received no feedback during the experiment. At the end of the experiment they were informed of their total score.
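To see why a 5 : 1 payoff should bias choices most strongly when evidence is weak, consider a hypothetical ideal observer who maximizes expected points, given a posterior probability p that the stimulus belongs to the category mapped to the reward-favored response A (only correct choices are rewarded, as in the task). The sketch below illustrates this normative argument only; it is not a model of the subjects' actual behavior, and the function names are our own.

```python
def expected_points(p_a, points_a, points_b):
    """Expected points for choosing A or B, given the posterior probability p_a
    that the stimulus calls for response A. Errors earn nothing (no penalty)."""
    return p_a * points_a, (1.0 - p_a) * points_b


def choose(p_a, points_a, points_b):
    """Ideal-observer choice: the response with the larger expected payoff."""
    ev_a, ev_b = expected_points(p_a, points_a, points_b)
    return "A" if ev_a > ev_b else "B"


def indifference_point(points_a, points_b):
    """Posterior p_a at which both choices pay the same on average:
    p_a * points_a = (1 - p_a) * points_b  ->  p_a = points_b / (points_a + points_b)."""
    return points_b / (points_a + points_b)


print(indifference_point(3, 3))  # neutral cue (3 : 3 points) -> 0.5
print(indifference_point(5, 1))  # biased cue (5 : 1 points) -> about 0.167
print(choose(0.3, 3, 3), choose(0.3, 5, 1))  # same weak evidence, different choice
```

With equal payoffs the observer chooses A whenever p > 0.5, whereas with the 5 : 1 payoff it chooses A whenever p > 1/6. The two rules disagree only for 1/6 < p < 1/2, that is, when the sensory evidence is weak or only mildly favors B, which is why reward effects are normatively expected to be largest when evidence is poor.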

Notably, in order to separately estimate the BOLD responses associated with the processing of the reward cue and the stimulus unmasking intervals (see below for details on the method), on a subset of the trials (23%) the cue interval was not followed by the stimulus unmasking period (catch trials) but by an inter-trial interval (ITI) of variable duration (3.7, 5.6, or 7.5 s).

Pointing movements were associated with male pictures in half of the subjects and with female pictures in the other half. The association between color and reward points in the biased reward condition followed this counterbalancing, so that in half of the subjects the conditions associated with more points were those in which pointing movements were executed to male pictures preceded by a red fixation cross and those in which saccadic eye movements were executed to female pictures preceded by a green fixation cross. In the other half, instead, the biased reward conditions were those in which pointing movements were executed to female pictures preceded by a green fixation cross and those in which saccadic eye movements were executed to male pictures preceded by a red fixation cross. Based on these stimulus-response associations, conditions could be classified either by perceptual category (stimulus evidence or reward favoring a male/female response across response effectors) or by response effector (stimulus evidence or reward favoring a saccadic/pointing response across stimulus categories).

The level of sensory evidence for evidence trials was selected individually for each subject during a psychophysical session performed before the fMRI experiment, in which a staircase procedure was used to stretch the average decision times over a relatively long interval (~ 9 s), compatible with the temporal resolution of fMRI, while maintaining a relatively accurate level of performance (~ 85%). The sensory stimulus was initially unmasked up to a level of 100% evidence (i.e., the original picture without noise). Participants were instructed to respond as fast and as accurately as possible (i.e., as soon as they were able to discriminate the face stimulus, even if it was not yet fully unmasked). In successive trials, the final level of sensory evidence was progressively reduced until a stable accuracy of ~ 85% with an average reaction time of ~ 9 s was reached. Specifically, as the level of available sensory evidence decreased, reaction times progressively lengthened, because subjects needed to use the entire unmasking period to gather the maximum available sensory information and accurately discriminate the face stimulus. This procedure ensured that neural signals during the stimulus unmasking period mostly reflected decision formation rather than post-decision components such as the maintenance of the selected action plan in working memory.
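The text does not specify the staircase rule. As a hedged illustration only, the sketch below uses a weighted up-down rule, a standard adaptive procedure that converges on a chosen accuracy (here 85%), applied to the final evidence level of a simulated observer. The psychometric function, step sizes and starting level are hypothetical, and the additional reaction-time constraint (~ 9 s) described above is not modeled.

```python
import math
import random


def p_correct(level, alpha=0.3, beta=2.0):
    """Hypothetical Weibull psychometric function for a two-choice task:
    0.5 (chance) at zero evidence, approaching 1.0 at high evidence."""
    return 0.5 + 0.5 * (1.0 - math.exp(-((level / alpha) ** beta)))


def weighted_staircase(n_trials=2000, target=0.85, step_down=0.01, start=1.0, seed=1):
    """Weighted up-down staircase on the final evidence level (0-1).

    A correct response lowers the level by step_down; an error raises it by
    step_up = step_down * target / (1 - target). The expected change is zero
    where the proportion correct equals `target`, so the level settles there.
    """
    rng = random.Random(seed)
    step_up = step_down * target / (1.0 - target)
    level, history = start, []
    for _ in range(n_trials):
        correct = rng.random() < p_correct(level)  # simulated observer
        history.append((level, correct))
        level += -step_down if correct else step_up
        level = min(1.0, max(0.0, level))  # evidence is bounded to 0-100%
    return history


history = weighted_staircase()
accuracy = sum(correct for _, correct in history) / len(history)
final_levels = [level for level, _ in history[-500:]]
print(f"accuracy: {accuracy:.3f}")
print(f"mean final level: {sum(final_levels) / len(final_levels):.3f}")
```

Under these assumptions the proportion correct settles close to 85%, and the final evidence level hovers around the point where the hypothetical psychometric function equals 0.85 (about 0.33 here).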

In contrast, the level of available sensory evidence during noise trials was fixed across participants at a final level of 10% (i.e., the face stimulus was gradually unmasked from 0% to 10% of sensory evidence). Whereas the psychophysical session outside the scanner used a reaction time (RT) version of the task, the main fMRI session used a delayed version in which participants were instructed to withhold the response during the stimulus unmasking period (9.345 s) and to execute the selected movement after the offset of the picture (go signal). All subjects were trained on the fMRI paradigm before scanning to ensure that they fully understood the instructions and could perform the task.

Images were 240 × 240 pixel gray-scale digitized photographs of faces selected from a larger set developed by Neal Cohen and used in our previous experiments (Tosoni et al., 2008, 2014). Noisy pictures were generated using a function that adds a weighted amount of white noise to the images in the form of 4 × 4 mm noise pixels. Images were gradually unmasked according to a linear function of time (y = t), so that the amount of noise removed (y, normalized from 0 to 1) increased at a constant rate over the normalized duration of the unmasking interval (t, from 0 to 1). Visual stimuli were generated using an in-house toolbox for MATLAB (The MathWorks). For the psychophysical session before scanning, images were presented on a 17″ LCD computer monitor (1280 × 796 pixels, refresh rate: 60 Hz). Subjects viewed the display from a distance of 60 cm, with their head stabilized by a chin-rest. In the scanner, images were projected onto an LCD screen positioned at the back of the magnet bore and were visible to the subjects through a mirror attached to the head coil.
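The text specifies a linear unmasking schedule but not the exact mixing rule. The sketch below assumes that each displayed frame is a weighted mixture of the face image and block-structured white noise, with the image weight equal to the current evidence level; the 4-pixel block size, the mixing rule and all function names are assumptions for illustration. Evidence trials would use the subject-specific final level from the staircase, and noise trials a final level of 0.10.

```python
import random


def evidence_level(t, duration=9.345, final_level=1.0):
    """Linear unmasking: evidence rises from 0 at t = 0 to final_level at t = duration (s)."""
    t = min(max(t, 0.0), duration)
    return final_level * t / duration


def block_noise(height, width, block=4, rng=None):
    """White noise in [0, 1] that is constant within block x block pixel squares
    (block size in pixels is an assumption)."""
    rng = rng or random.Random()
    n_rows = -(-height // block)  # ceiling division
    n_cols = -(-width // block)
    values = [[rng.random() for _ in range(n_cols)] for _ in range(n_rows)]
    return [[values[r // block][c // block] for c in range(width)] for r in range(height)]


def masked_frame(image, noise, level):
    """Assumed mixing rule: level * image + (1 - level) * noise, pixel by pixel."""
    return [
        [level * p + (1.0 - level) * n for p, n in zip(img_row, noise_row)]
        for img_row, noise_row in zip(image, noise)
    ]


# Example: a noise trial (final level 0.10) halfway through the unmasking period
rng = random.Random(0)
face = [[rng.random() for _ in range(240)] for _ in range(240)]  # stand-in for a face image
noise = block_noise(240, 240, rng=rng)
frame = masked_frame(face, noise, evidence_level(9.345 / 2, final_level=0.10))
```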

Localizer scans and regions of interest (ROIs)

In order to define a set of effector- and stimulus-selective regions of interest (ROIs), before the main experiment each participant underwent two localizer sessions: a localizer session of delayed pointing and saccadic eye movements to spatial targets and a localizer session of passive viewing of unmasked scene/place and face images.

The pointing/saccade localizer session alternated 18-s blocks of delayed hand pointing or saccadic eye movements with blocks of visual fixation of variable duration (mean duration = 13 s). Each block started with a written instruction (FIX, EYE, HAND) and contained four trials. Each trial began with observers maintaining central fixation while holding down a button on a response box with their right hand. On saccade or pointing trials, a peripheral target indicating the location of the upcoming movement appeared for 300 ms at one of four radial locations (π/4, 3π/4, 5π/4 or 7π/4) at an eccentricity of 8° of visual angle. The targets were filled white circles 0.9° in diameter. After a variable delay (1.5, 2.5, 3.5 or 4.5 s), the fixation point turned red, and participants either released the button and rotated their wrist (without moving the shoulder or the arm) to point with their right hand in the direction of the target while keeping central fixation (pointing blocks), or moved their eyes in the direction of the target while continuing to hold the button (saccade blocks), and then immediately returned to the starting position. The visual parameters for the presentation of peripheral target stimuli were the same in the localizer and the decision scans.

The face/place localizer alternated 16-s blocks of passive viewing of unmasked images of scenes (indoor and outdoor) or faces (male and female) with blocks of visual fixation of variable duration (mean duration = 13.6 s). Within each block, each image was presented centrally for 300 ms, and a new image appeared every 500 ms.

The BOLD signals during these localizer sessions were then used to define, in each subject, a set of ROIs showing a preference for the planning/execution of pointing vs. saccadic eye movements and for the viewing of face vs. place images. The ROI set included the pointing-selective PRR, sensory-motor cortex (SMC) and frontal reach region (FRR); the saccade-selective pIPS and frontal eye fields (FEF); and the face-selective FFA. Our previous paper (Tosoni et al., 2014) describes in detail the procedures and methods used to obtain these ROIs in each individual subject from the localizer scans.

fMRI acquisition and analysis

Functional T2*-weighted images were collected on a Philips Achieva 3T scanner using a gradient-echo EPI sequence to measure the BOLD contrast over the whole brain (TR = 1869 ms, TE = 25 ms, 39 slices acquired in ascending interleaved order, voxel size = 3.59 × 3.59 × 3.59 mm, 64 × 64 matrix, flip angle = 80°). Structural images were collected using a sagittal M-PRAGE T1-weighted sequence (TR = 8.14 ms, TE = 3.7 ms, flip angle = 8°, voxel size = 1 × 1 × 1 mm).

A total of 26 scans were collected in each subject, each lasting 388 s and including six catch trials (cue only), 12 evidence trials (four for each reward condition) and eight noise trials (four for the neutral reward conditions and four for the biased reward conditions) for a total of 676 trials.
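The trial counts above can be checked with a few lines of arithmetic; the same numbers give the 23% proportion of catch trials mentioned earlier.

```python
# Trial composition of one fMRI scan, as described in the text
per_scan = {"catch (cue only)": 6, "evidence": 12, "noise": 8}
n_scans = 26

trials_per_scan = sum(per_scan.values())
total_trials = n_scans * trials_per_scan
totals_by_type = {kind: n * n_scans for kind, n in per_scan.items()}
catch_fraction = per_scan["catch (cue only)"] / trials_per_scan

print(trials_per_scan, total_trials)  # 26 676
print(totals_by_type)  # {'catch (cue only)': 156, 'evidence': 312, 'noise': 208}
print(f"catch trials: {catch_fraction:.0%}")  # catch trials: 23%
```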

Differences in the acquisition time of each slice in an MR frame were compensated by sinc interpolation so that all slices were aligned to the start of the frame. Functional data were realigned within and across scans to correct for head movement using six-parameter rigid-body realignment. A whole-brain normalization factor was uniformly applied to all frames within a scan in order to equate signal intensity across scans. Images were resampled into 3 mm isotropic voxels and warped into 711-2C space, a standardized atlas space (Talairach & Tournoux, 1988; Van Essen, 2005). Movement correction and atlas transformation were accomplished in a single resampling step to minimize sampling noise.

Hemodynamic responses during decision trials were estimated at the voxel level without any assumption about their shape using the general linear model (GLM), and were averaged across all the voxels of each ROI to generate regional time series. In particular, the separate contributions to the BOLD response of the closely spaced cue and stimulus unmasking intervals were obtained following the method introduced and validated by Ollinger et al. (2001a,b), which is based on the presentation of both ‘compound’ trials, which elicit two or more distinct responses with onsets separated by fixed intervals, and ‘partial’ or ‘catch’ trials, in which only the first BOLD response is present (see also Shulman et al., 1999; Sestieri et al., 2010 for other methodological details). Since the only requirements of the method are that partial trials be cognitively equivalent to the initial stage of the compound trials and that trials be separated by a random ITI, we interleaved trials in which the reward cue was followed by the stimulus unmasking period with catch trials in which the reward cue was identical to that presented in the compound trials (both physically and cognitively) but was not followed by the stimulus unmasking period. Crucially, these trials were randomly interleaved and separated by a variable ITI (3.7, 5.6, or 7.5 s).
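The logic of the partial-trial approach can be illustrated with a small simulation. In this sketch (our own simplification, not the authors' analysis code), times are counted in frames (TRs), the stimulus always starts one TR after the cue, a compound trial is assumed to occupy seven frames, and ITIs of 2, 3 or 4 frames approximate the 3.7, 5.6 and 7.5 s intervals. Without catch trials, every cue column at lag k + 1 coincides with the stimulus column at lag k, so the design matrix is rank-deficient; interleaving cue-only trials breaks this collinearity and the delta-function coefficients become estimable.

```python
import random

import numpy as np


def make_schedule(n_compound, n_catch, seed=0, iti_frames=(2, 3, 4), trial_frames=7):
    """Randomly interleave compound trials (cue, then stimulus one frame later)
    and catch trials (cue only), each followed by a random ITI.
    Times are in frames (TRs); returns cue onsets, stimulus onsets, run length."""
    rng = random.Random(seed)
    kinds = ["compound"] * n_compound + ["catch"] * n_catch
    rng.shuffle(kinds)
    t, cue_onsets, stim_onsets = 5, [], []
    for kind in kinds:
        cue_onsets.append(t)
        if kind == "compound":
            stim_onsets.append(t + 1)  # unmasking starts one TR after cue onset
            t += trial_frames  # cue + unmasking + response (assumed length)
        else:
            t += 1  # cue only
        t += rng.choice(iti_frames)  # 2, 3 or 4 TRs, roughly 3.7, 5.6 or 7.5 s
    return cue_onsets, stim_onsets, t + 20  # padding so every FIR window fits


def fir_design(n_frames, cue_onsets, stim_onsets, n_cue=10, n_stim=14):
    """Delta-function (FIR) columns for each post-onset frame of the cue and
    stimulus responses, plus an intercept and a linear-trend column."""
    X = np.zeros((n_frames, n_cue + n_stim + 2))
    for onset in cue_onsets:
        for k in range(n_cue):
            X[onset + k, k] += 1.0
    for onset in stim_onsets:
        for k in range(n_stim):
            X[onset + k, n_cue + k] += 1.0
    X[:, -2] = 1.0
    X[:, -1] = np.linspace(-1.0, 1.0, n_frames)
    return X


def design(n_compound, n_catch, seed=0):
    cues, stims, n_frames = make_schedule(n_compound, n_catch, seed)
    return fir_design(n_frames, cues, stims)


X_catch = design(20, 6)  # 20 compound + 6 catch trials, as in one scan
X_nocatch = design(26, 0)  # every cue followed by a stimulus

print("with catch trials:", np.linalg.matrix_rank(X_catch), "of", X_catch.shape[1])
print("without catch trials:", np.linalg.matrix_rank(X_nocatch), "of", X_nocatch.shape[1])

# With a full-rank design, noise-free data recover the simulated responses exactly.
beta_true = np.random.default_rng(1).normal(size=X_catch.shape[1])
beta_hat, *_ = np.linalg.lstsq(X_catch, X_catch @ beta_true, rcond=None)
print("recovered:", np.allclose(beta_hat, beta_true))
```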

The hemodynamic responses associated with these trials were then analyzed using two GLMs: a between-trial model, estimating a separate time course of the BOLD response initiated by each trial type (reward cue only; reward cue + stimulus unmasking), which yielded a single time course over the entire trial time-locked to trial onset; and a within-trial model, yielding separate time courses for the reward cue and the stimulus unmasking intervals, which takes advantage of the cue period common to catch and compound trials to obtain distinct estimates of the BOLD responses initiated at the onset of the reward cue and at the onset of the stimulus unmasking interval. More specifically, in addition to terms for the intercept and the linear trend of each scan, both models included three cue-related regressors associated with the three reward conditions (neutral reward; reward bias for a pointing/saccadic or male/female response, depending on the type of condition classification), modeling cue-related activity in the within-trial model and cue-only activity in the between-trial model, and 18 stimulus-related regressors resulting from the interaction between stimulus evidence, reward expectation and selected response (three reward conditions × three evidence levels [noise; evidence for a pointing/saccadic or male/female response, depending on the type of condition classification] × two responses [pointing, saccade]). Cue-related regressors were modeled by a set of 10 delta functions covering 18.69 s following the onset of the cue in both the within- and the between-trial models, while stimulus-related regressors were modeled by a set of 14 delta functions in the within-trial model (covering 26.16 s following the onset of the stimulus unmasking interval) and by a set of 15 delta functions in the between-trial model (covering 28.03 s following the onset of the trial, that is, the onset of the reward cue).
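The regressor structure described above can be enumerated explicitly. The condition labels below are illustrative names for the effector-based classification (for the category-based classification, the pointing/saccade labels would be replaced by male/female), and the window lengths are simply the number of delta functions times the TR of 1.869 s.

```python
from itertools import product

TR = 1.869  # seconds per frame

# Illustrative labels for the effector-based condition classification
reward_conditions = ["neutral", "reward_pointing", "reward_saccade"]
evidence_levels = ["noise", "evidence_pointing", "evidence_saccade"]
responses = ["pointing", "saccade"]

# 3 cue-related regressors, one per reward condition
cue_regressors = [f"cue_{r}" for r in reward_conditions]

# 3 x 3 x 2 = 18 stimulus-related regressors
stim_regressors = [
    f"stim_{r}_{e}_{resp}"
    for r, e, resp in product(reward_conditions, evidence_levels, responses)
]


def window_s(n_deltas, tr=TR):
    """Time span (s) covered by n_deltas delta functions, one per TR."""
    return n_deltas * tr


windows = {
    "cue, both models": window_s(10),  # 18.69 s
    "stimulus, within-trial model": window_s(14),  # about 26.17 s from unmasking onset
    "stimulus, between-trial model": window_s(15),  # about 28.04 s from cue onset, one TR earlier
}

print(len(cue_regressors), len(stim_regressors))
for name, span in windows.items():
    print(f"{name}: {span:.3f} s")
```

The spans computed here (26.166 and 28.035 s) correspond to the truncated values of 26.16 and 28.03 s given in the text; the between-trial window needs one extra delta function because it is time-locked to the cue, one TR before the stimulus.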

Notably, as depicted in Fig. S1, which compares the between- and within-trial models for an example condition, the accuracy of the estimates of cue- and stimulus-related activity obtained from the within-trial model was validated by the estimates of cue-only and whole-trial activity obtained from the between-trial model.

Since we were particularly interested in examining the effect of reward expectation on decision-related activity, and in the current paradigm decisions were formed between the onset of the stimulus unmasking interval and the go signal for the execution of the response, we focused on GLM estimates of the first seven time points following the onset of the stimulus unmasking period (time points 0–6 of the stimulus-related regressors of the within-trial model), as these time points were likely unaffected, or only minimally affected, by signals reflecting action planning/execution.

Individual time point estimates were then entered into group analyses conducted through random-effects anovas in which the experimental factors, including reward condition, sensory evidence and response effector, were crossed with the time factor.

Eye movement recording and analysis

During the training session, subjects were carefully trained to maintain fixation during the unmasking period and to execute either a pointing or a saccadic movement toward the peripheral visual target only after the go signal. Subjects were also trained to maintain fixation during the execution of a pointing movement. Eye position was monitored using an infrared eye-tracking system (RK-826PCI pupil/corneal tracking system; ISCAN, Burlington, MA) recording the gaze position of the right eye at 120 Hz, whereas pointing movements were monitored online through a video camera and recorded by button releases on a keypad. During the fMRI scans, eye position was recorded through an infrared eye-tracking system (ISCAN ETL-400 pupil/corneal tracking system, 120 Hz sampling rate; ISCAN, Burlington, MA). Due to technical problems in the eye-tracker software, eye traces from two experimental subjects were not recorded.

At the beginning of each session, gaze position was calibrated using a standard nine-point calibration procedure. Subjects were asked to fixate each of the calibration points distributed over a 3 × 3 grid that covered 10° of visual angle, corresponding to the horizontal projection of the four radial target locations in the training session (+5° right, −5° left). The spatial transformation of calibration data was then used to estimate the changes in eye position in degrees of visual angle in the horizontal axis.

A linear detrending and a blink-removal algorithm were applied in order to obtain an artifact-free eye position time course. The resulting eye position time course was then analyzed as a function of the selected motor response (presence/absence of a button release indicating a pointing/saccadic response) and peripheral target location (left, right; see Tosoni et al. (2014) for other methodological details). The eye position in the 500 ms before trial onset was used to estimate the relative change in eye position during the following stimulus unmasking (38 time bins of 250 ms each) and action execution (16 time bins of 250 ms each) periods.
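A minimal sketch of the detrending and binning steps, assuming a horizontal eye-position trace in degrees sampled at 120 Hz (so a 250 ms bin is 30 samples and the 500 ms baseline is 60 samples), with blink samples already replaced by NaN. The NaN convention, the least-squares detrending and the function names are our assumptions, not the original pipeline.

```python
import math

FS = 120  # sampling rate (Hz)
BIN = 30  # samples per 250 ms bin at 120 Hz
BASELINE = 60  # samples in the 500 ms before trial onset


def nanmean(values):
    """Mean of the non-NaN values, or NaN if there are none."""
    valid = [v for v in values if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else float("nan")


def linear_detrend(trace):
    """Subtract the least-squares line fitted to the valid (non-NaN) samples."""
    pts = [(i, v) for i, v in enumerate(trace) if not math.isnan(v)]
    mean_i = sum(i for i, _ in pts) / len(pts)
    mean_v = sum(v for _, v in pts) / len(pts)
    sxx = sum((i - mean_i) ** 2 for i, _ in pts)
    slope = sum((i - mean_i) * (v - mean_v) for i, v in pts) / sxx if sxx else 0.0
    return [v if math.isnan(v) else v - (mean_v + slope * (i - mean_i))
            for i, v in enumerate(trace)]


def binned_change(trace, onset, n_bins):
    """Mean eye position in consecutive 250 ms bins after `onset` (sample index),
    relative to the mean position in the 500 ms before onset."""
    if onset < BASELINE:
        raise ValueError("onset must leave 500 ms of baseline before it")
    base = nanmean(trace[onset - BASELINE:onset])
    return [nanmean(trace[onset + b * BIN:onset + (b + 1) * BIN]) - base
            for b in range(n_bins)]


# Unmasking period: 38 bins (9.5 s); action execution: 16 bins (4 s)
trace = [0.0] * BASELINE + [0.0] * (38 * BIN) + [5.0] * (16 * BIN)
unmasking = binned_change(trace, BASELINE, 38)
execution = binned_change(trace, BASELINE + 38 * BIN, 16)
```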

Eye movement data collected during fMRI scanning were noisier than those collected during the training session. In particular, due to the tilted head position, the shallow viewing angle and instrumental noise, eye traces were contaminated by prominent non-saccadic spike artifacts. Thus, in order to discriminate saccades from non-saccadic spikes, an in-house algorithm was developed to automatically classify fixation breaks as saccades or spikes, based on the amplitude and duration of the signal deviation. Eye position traces were then visually inspected by a condition-blind experimenter who accepted or rejected each proposed automated classification.
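The in-house algorithm is not described beyond its use of amplitude and duration. As a hypothetical sketch of that kind of rule, a deviation of horizontal eye position beyond an amplitude threshold can be labeled a saccade if it is sustained and a spike if it is brief. The thresholds (2° and 12 samples, i.e., 100 ms at 120 Hz) are assumptions chosen for illustration; in the study, each automated label was further checked by a condition-blind experimenter.

```python
def find_deviations(trace, amp_thresh=2.0):
    """Return (start, end) sample ranges where |position| exceeds amp_thresh (deg).
    NaN samples compare as False and therefore end a deviation."""
    events, start = [], None
    for i, v in enumerate(trace):
        above = abs(v) > amp_thresh
        if above and start is None:
            start = i
        elif not above and start is not None:
            events.append((start, i))
            start = None
    if start is not None:
        events.append((start, len(trace)))
    return events


def classify(trace, amp_thresh=2.0, min_dur=12):
    """Label each deviation 'saccade' if it lasts at least min_dur samples,
    otherwise 'spike'; also report its peak absolute amplitude."""
    labeled = []
    for start, end in find_deviations(trace, amp_thresh):
        peak = max(abs(v) for v in trace[start:end])
        label = "saccade" if end - start >= min_dur else "spike"
        labeled.append((start, end, peak, label))
    return labeled


# A 2-sample artifact followed by a sustained 5-degree deviation
trace = [0.0] * 20 + [8.0] * 2 + [0.0] * 20 + [5.0] * 30 + [0.0] * 10
for event in classify(trace):
    print(event)
```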

The number of detected saccades during the unmasking and action execution periods, the peak amplitude of eye movements and the trial-by-trial time course of eye position were then computed as a function of stimulus evidence (evidence, noise) and reward condition (neutral reward, reward bias for a pointing/saccadic response).

Results

Behavior

Before conducting the fMRI analysis, we verified that the reward manipulation had a significant effect on the subjects’ behavioral performance. In particular, we first examined whether decision accuracy was modulated by the perceptual difficulty of the discrimination, that is, the strength of the sensory evidence in the face stimulus, and whether this effect differed between conditions with a reward bias (coded as a function of the consistency between the reward bias and the stimulus evidence) and neutral conditions. In addition, we examined the effect of our manipulations on the SDT sensitivity (d′) and criterion (C) parameters. Notably, since the task did not require speeded decisions and subjects provided their response after a fixed interval of ~ 9 s, reaction times were not meaningful measures of performance and were therefore not considered in the analyses.

To examine the effect of sensory evidence and reward bias on discrimination accuracy, all trials with a reward bias were classified according to the congruency between the reward bias and the stimulus evidence, that is, whether the reward bias and the stimulus evidence favored the same or different responses. We then compared classification accuracy in these trials with that observed in the neutral reward conditions (note that accuracy was also calculated for noise trials, based on the 10% of sensory evidence they contained).
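A minimal sketch of this congruency coding, assuming each trial is stored as a dictionary (the field names are hypothetical). The correct response is the one mapped to the presented stimulus category, which for noise trials is the category shown at 10% evidence.

```python
from collections import defaultdict


def congruency(reward_favors, evidence_favors):
    """Classify a trial by the relation between the reward bias and the stimulus.

    reward_favors: response favored by the reward cue ('pointing' or 'saccade'),
                   or None for the neutral (equal-reward) cue.
    evidence_favors: response mapped to the presented stimulus category.
    """
    if reward_favors is None:
        return "neutral"
    return "congruent" if reward_favors == evidence_favors else "incongruent"


def accuracy_by_condition(trials):
    """Accuracy per (evidence level, congruency) cell.

    Each trial is a dict with keys 'evidence' ('evidence' or 'noise'),
    'reward_favors', 'evidence_favors' and 'response'.
    """
    n_trials = defaultdict(int)
    n_correct = defaultdict(int)
    for trial in trials:
        key = (trial["evidence"], congruency(trial["reward_favors"], trial["evidence_favors"]))
        n_trials[key] += 1
        n_correct[key] += trial["response"] == trial["evidence_favors"]
    return {key: n_correct[key] / n_trials[key] for key in n_trials}


trials = [
    {"evidence": "noise", "reward_favors": "pointing", "evidence_favors": "pointing", "response": "pointing"},
    {"evidence": "noise", "reward_favors": "pointing", "evidence_favors": "pointing", "response": "saccade"},
    {"evidence": "noise", "reward_favors": "saccade", "evidence_favors": "pointing", "response": "saccade"},
    {"evidence": "evidence", "reward_favors": None, "evidence_favors": "saccade", "response": "saccade"},
]
print(accuracy_by_condition(trials))
```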

Consistent with our predictions, classification performance was strongly modulated by stimulus evidence (i.e., greater accuracy for evidence than noise trials). It was also significantly affected by the reward context of the trial, especially under low perceptual evidence, that is, in noise trials (Fig. 1B). In particular, a repeated-measures anova with congruency (congruent, incongruent, neutral) and stimulus evidence (evidence, noise) as factors was run on discrimination accuracy. Accuracy was significantly higher for congruent than incongruent trials in the evidence condition, and for congruent than both incongruent and neutral trials in the noise condition (anova interaction: F2,28 = 61.7, P < 0.001).

Each SDT parameter was calculated as a function of both stimulus category (male, female) and response effector (pointing, saccade). As depicted in Fig. 1 (panels C and D), stimulus evidence selectively modulated the sensitivity (d′) parameter, whereas reward selectively modulated the criterion (C) parameter. The sensitivity parameter was strongly modulated by stimulus evidence (higher d′ for evidence than noise trials) independently of the reward condition (dotted lines). In contrast, the C parameter was modulated by reward condition during noise but not evidence trials (solid lines). Statistically, this was supported by a significant anova main effect of stimulus evidence on the sensitivity parameter (d′) (coding based on response effector: F1,14 = 434.1, P < 0.001, Fig. 1C; coding based on stimulus category: F1,14 = 428.5, P < 0.001, Fig. 1D). There was also a significant anova interaction between stimulus evidence and reward condition on the criterion parameter (C) (coding based on response effector: F2,28 = 39.3, P < 0.001, Fig. 1C; coding based on stimulus category: F2,28 = 39.6, P < 0.001, Fig. 1D). These results suggest that the higher discrimination accuracy for evidence vs. noise trials was explained by a change in sensitivity. The effect of the reward bias, by contrast, was captured by a shift in the decision criterion, especially during noise trials.
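The two SDT parameters follow the standard formulas d′ = z(H) − z(FA) and C = −[z(H) + z(FA)]/2, where H and FA are the hit and false-alarm rates and z is the inverse standard normal CDF. The sketch below makes two assumptions. First, for a given coding, one response (e.g., pointing, or "male") is treated as the "signal" response. Second, it adds a log-linear correction of 0.5 per cell to avoid infinite z-scores; the study does not necessarily use this correction. A negative C indicates a liberal bias toward the signal response.

```python
from statistics import NormalDist


def sdt_parameters(hits: int, misses: int, false_alarms: int, correct_rejections: int):
    """Return (d_prime, criterion) for one subject and condition.

    Assumed log-linear correction: add 0.5 to each count (1 to each total)
    so that rates never reach exactly 0 or 1.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion


# Unbiased observer: C is ~0. An observer who favours the signal response: C < 0.
print(sdt_parameters(40, 10, 10, 40))
print(sdt_parameters(45, 5, 20, 30))
```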

Eye movements

The eye movement data collected during fMRI scanning showed that subjects maintained accurate fixation during the stimulus unmasking period. The mean change in horizontal eye position across time bins and experimental conditions was −0.06° (for detailed means across conditions see Table 1A), and saccades were present in only 6% of the trials. There was no significant difference across reward conditions (neutral reward, reward bias for a pointing/saccadic response; P = 0.43) or stimulus evidence (evidence, noise; P = 0.64). During response execution, only 4% of the trials in which a button release was recorded contained a concomitant saccadic eye movement. Again, there was no significant difference across reward conditions (P = 0.18) or stimulus evidence (P = 0.30). During the action execution period, in trials in which no button release was recorded (saccade trials), eye position peaked on average (across time bins and conditions) at +5° and −8° for right and left targets. In pointing trials, it peaked at +0.6° and −0.8° for right and left targets (for detailed peaks across conditions see Table 1B). The event-related time course of eye position for the fMRI subjects with the best available calibration data is shown in Fig. S2. For the time course of the mean eye position during the behavioral training session, see Fig. 7A,B of Tosoni et al. (2014).

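Table 1. Horizontal eye position (degrees) by reward condition and stimulus evidence. (A) Mean change during the stimulus unmasking period. (B) Peak amplitude during the action execution period, for saccade and pointing trials.

| Section | Side | Pointing reward bias, evidence | Pointing reward bias, noise | Saccadic reward bias, evidence | Saccadic reward bias, noise | Reward neutral, evidence | Reward neutral, noise |
|---|---|---|---|---|---|---|---|
| (A) Unmasking | Left | −0.09 | −0.40 | −0.24 | −0.27 | −0.39 | −0.01 |
| (A) Unmasking | Right | 0.09 | 0.09 | 0.21 | 0.03 | 0.06 | 0.21 |
| (B) Saccade (effector) | Left | −7.37 | −7.20 | −9.74 | −8.26 | −8.07 | −7.47 |
| (B) Saccade (effector) | Right | 5.01 | 5.68 | 4.40 | 5.07 | 3.81 | 4.91 |
| (B) Pointing (effector) | Left | −0.85 | −0.87 | −0.87 | −0.85 | −0.87 | −0.68 |
| (B) Pointing (effector) | Right | 0.49 | 0.69 | 0.45 | 0.92 | 0.45 | 0.60 |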

Reward modulations on the decision time course

We next examined the effect of reward expectation on the fMRI activity time course of the effector- and stimulus-selective ROIs defined in our previous decision study (Tosoni et al., 2014). In particular, we examined whether activity in the pointing- and saccade-selective regions of the fronto-parietal cortex and in the face-selective FFA region of the inferior temporal cortex was modulated by the expected reward for a decision. If so, we asked two further questions. (i) Did this modulation occur early after the presentation of the reward cue indicating the reward context of the trial, that is, during the initial cue interval? Or did it occur only after the onset of the sensory stimulus, when the perceptual decision was formed, that is, during the stimulus unmasking interval? (ii) Was it comparable across trials with different levels of sensory evidence (evidence vs. noise), or stronger in either evidence or noise trials?

Reward modulations on the decision time course were examined by conducting separate analyses on the cue period before stimulus onset (cue-related activity) and on the stimulus unmasking period in which the perceptual decision was formed (activity related to evidence accumulation). Cue-related analyses were conducted on all trials as a function of cue type (neutral reward, pointing/saccadic reward bias). The analyses of reward modulations during the stimulus unmasking period (decision-related activity) were conducted on: (i) evidence trials associated with a correct response, in all three reward conditions; (ii) noise trials of the reward-biased conditions in which the response followed the reward bias; and (iii) noise trials of the neutral condition, regardless of the response.
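The three selection rules for the unmasking-period analyses can be expressed as a single predicate. The function name and the string coding of its arguments are hypothetical.

```python
from typing import Optional


def include_in_unmasking_analysis(stimulus_level: str, reward_bias: Optional[str],
                                  response: str, correct: bool) -> bool:
    """Hypothetical predicate implementing rules (i)-(iii).

    stimulus_level: 'evidence' or 'noise'
    reward_bias:    'pointing', 'saccade', or None for the neutral cue
    response:       effector actually used ('pointing' or 'saccade')
    """
    if stimulus_level == "evidence":
        # (i) evidence trials: correct responses only, in all three reward conditions
        return correct
    if reward_bias is None:
        # (iii) neutral noise trials: kept regardless of the response
        return True
    # (ii) reward-biased noise trials: kept only if the response followed the bias
    return response == reward_bias
```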

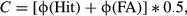

Cue-related reward modulations in effector-selective regions

We first examined how reward expectation modulated the activity of effector-selective regions early in the decision trial, before the onset of the sensory stimulus. To do so, we analyzed their activity time course during the reward cue period, in which the color cue indicated the reward context of the upcoming perceptual decision. As displayed in the time course plots in Fig. 2, no significant reward modulation was observed in the cue-related BOLD time course of the effector-specific ROIs examined in our study. This was confirmed by an anova with cue type (neutral reward, pointing/saccadic reward bias) and time (10 time points) as factors (cue type, PRR, SMC: F2,28 = 2.3; pIPS: F2,28 = 0.72; FRR: F2,26 = 0.29; FEF: F2,16 = 0.99; cue type by time, PRR: F18,252 = 0.84; pIPS: F18,252 = 1.21; FRR: F18,234 = 0.90; FEF: F18,144 = 1.36; SMC: F18,252 = 0.79, all P > 0.05).
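For reference, the cue-type test can be illustrated with a one-way repeated-measures anova. This pure-Python sketch is not the authors' analysis pipeline. It omits the time factor and applies no sphericity correction. In a fully within-subject design, running it on each subject's responses averaged over time points gives the same F as the cue-type main effect of the cue type × time analysis. With 15 subjects and 3 cue types, it yields the F2,28 degrees of freedom reported above for PRR, pIPS and SMC.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures anova.

    data: one list per subject, each holding one value per condition
          (same condition order for every subject).
    Returns (F, df_conditions, df_error).
    """
    n = len(data)       # number of subjects
    k = len(data[0])    # number of conditions (e.g., 3 cue types)
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # between-subject variance is removed
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj                   # condition-by-subject residual
    df_cond = k - 1
    df_error = (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error
```

For example, `rm_anova_oneway([[1, 2, 3], [2, 4, 6], [3, 3, 3]])` returns `(3.0, 2, 4)`.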

Reward modulations on evidence accumulation in the saccade-selective pIPS region

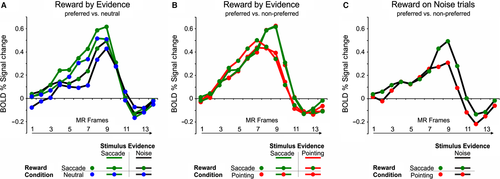

We then examined whether reward expectation modulated the activity related to evidence accumulation in the saccade-selective pIPS region. We analyzed the activity time course of this region during the stimulus unmasking period as a function of two factors. The first was stimulus evidence, that is, whether the sensory stimulus was gradually revealed (evidence trials) or remained substantially noisy (noise trials). The second was expected reward (neutral reward, pointing/saccadic reward bias). To this end, we first compared evidence and noise trials in which the reward cue induced a bias toward a saccadic response with the same trials in the neutral reward condition. We ran an anova with reward condition (saccadic reward bias, neutral) and stimulus evidence (evidence for saccades, noise) as factors on the first seven time points of the stimulus unmasking period (time points 0–6, see Materials and Methods for details). As displayed in Fig. 3A, the decision time course of this region was significantly modulated by both stimulus evidence (i.e., greater activity for evidence vs. noise trials: F1,14 = 23.16, P < 0.001) and reward condition (i.e., greater activity during trials with a saccadic reward bias vs. neutral: F1,14 = 6.35, P = 0.02). However, there was also a significant interaction between reward condition, stimulus evidence and time (F6,84 = 3.43, P = 0.004). In evidence trials, the reward modulation seemed to specifically involve the central part of the decision delay, when the decision was presumably being formed (time points: 4, 5, 7). In noise trials, it appeared more homogeneously distributed over the unmasking period (time points: 1, 2, 3, 6, 7).

We next examined whether reward modulations in this region were specific to the preferred response effector, that is, the eye, or independent of the selected response. To this end, we compared the activity time course during evidence trials in which a correct saccadic vs. pointing response was selected, as a function of the reward bias (pointing/saccadic reward bias). As shown in the time course plot of Fig. 3B, this region showed no differential activity between trials in which the stimulus evidence or the reward bias favored the preferred saccadic vs. the non-preferred pointing response. Statistically, an anova with stimulus evidence (evidence for pointing, evidence for saccade), reward condition (pointing/saccadic reward bias) and time (time points 0–6) as factors showed no significant effects of sensory evidence or reward bias (stimulus evidence by time: F6,84 = 1.7; reward condition by time: F6,84 = 0.6; interaction: F6,84 = 2.1, all P > 0.05). This result extends our previous findings on sensory evidence modulations (Tosoni et al., 2014), indicating an effector-independent coding of decision variables in this region.

We finally examined whether this region showed the same pattern of reward-related, effector-independent activity in trials in which the sensory stimulus provided no valid sensory evidence. To this end, we compared the activity time course during noise trials with a saccadic vs. a pointing response bias. As shown in the time course plot in Fig. 3C and by an anova with condition (rewarded/selected pointing vs. saccadic response) and time (time points 0–6) as factors, no reward modulation was observed in these trials (reward condition: F1,14 = 0.4; reward condition by time: F6,84 = 1.3, all P > 0.05).

Reward modulations on evidence accumulation in the pointing-selective PRR region

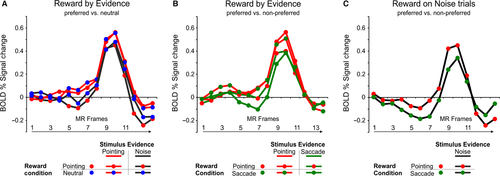

We next followed the same analysis pipeline to examine the effect of reward expectation on the perceptual decision time course of the pointing-selective PRR region. We first examined whether the activity time course of this region was modulated by reward expectation for the preferred pointing response relative to neutral trials, and whether these modulations differed between evidence and noise trials. We ran an anova with reward condition (pointing reward bias, neutral), stimulus evidence (evidence for pointing, noise) and time (time points 0–6) as factors. As shown in the time course plot in Fig. 4A and by this anova, the PRR region showed significantly greater activity during evidence than noise trials (stimulus evidence by time: F6,84 = 5.53, P < 0.001). However, activity did not differ between trials in which the reward cue favored the preferred pointing response and neutral trials in the same conditions (reward condition by time: F6,84 = 0.64; reward condition by stimulus evidence by time: F6,84 = 0.55, all P > 0.05).

Based on our previous findings of effector-specific modulations by sensory evidence in this region, however, we hypothesized that the effect of reward expectation in the PRR region might only be evident when the comparison was between a reward bias for the preferred effector and a reward bias for the non-preferred effector, rather than with neutral trials. As for the pIPS region, we therefore tested the effector selectivity of decision-related signals. We compared activity during evidence trials with a reward bias for the preferred pointing response vs. the same trials with a reward bias for the non-preferred saccadic response. Indeed, as illustrated in Fig. 4B, the effect of reward expectation seemed to interact with the effect of stimulus evidence. Conditions in which either the stimulus evidence or the reward bias favored a (preferred) pointing response were associated with a slightly higher BOLD modulation than conditions in which both the stimulus evidence and the reward bias favored a (non-preferred) saccadic response. This impression was statistically supported by an anova with stimulus evidence (evidence for pointing, evidence for saccade), reward condition (pointing/saccadic reward bias) and time (time points 0–6) as factors. The anova confirmed the effector-selective sensory evidence modulation (i.e., evidence for a pointing response > evidence for a saccadic response) shown in our previous perceptual decision study (Tosoni et al., 2014). It also indicated a marginally significant interaction between stimulus evidence and reward condition (stimulus evidence by time: F6,84 = 2.3, P = 0.04; stimulus evidence by reward condition: F1,14 = 3.6, P = 0.07). Importantly, this pattern of pointing-selective reward modulation in the PRR region was further supported by greater BOLD activity during noise trials with a pointing vs. a saccadic reward bias, as shown in the time course plot of Fig. 4C. As in the previous analysis, however, the effect of reward expectation in this region was only marginally significant [anova with condition (rewarded/selected pointing vs. saccadic response) and time (time points 0–6) as factors: F1,14 = 3.83, P = 0.07]. This suggests that reward modulations in this region were specifically associated with the preferred response effector but were also statistically weaker than in the saccade-selective pIPS.

Reward modulations on evidence accumulation in the frontal pointing- and saccade-selective regions

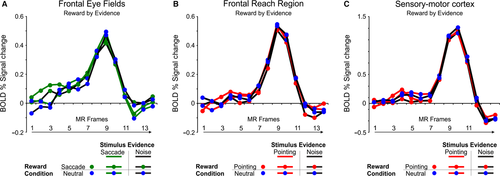

As for the effector-selective regions of the posterior parietal cortex, decision-related reward modulations in the pointing- and saccade-selective regions of the frontal cortex were first examined. We compared the activity time course during evidence and noise trials in which the reward bias favored the preferred response effector vs. the same trials in the neutral reward condition.

As shown in Fig. 5, the activity time courses of these regions suggested a BOLD increase during evidence trials with a reward bias for the preferred response effector. This was tested with an anova with reward condition (reward bias for the preferred response effector, neutral), sensory evidence (evidence for the preferred response effector, noise) and time (time points 0–6) as factors. The reward-related increase during evidence trials produced a statistically significant three-way interaction in the pointing-selective SMC and in the saccade-selective FEF region, but not in the pointing-selective FRR region [reward condition by stimulus evidence by time: SMC: F6,84 = 3.09, P = 0.008; FEF: F6,84 = 2.48, P = 0.03; FRR: F6,78 = 0.49, P > 0.05; post hoc tests on the interaction: SMC: reward bias/evidence for pointing > all at time point 3; > all noise trials (reward bias and neutral) at time point 4; FEF: reward bias/evidence for saccades > all at time point 2; > all neutral trials (evidence and noise) at time point 3; > neutral/evidence and reward bias/noise at time point 4; P < 0.05].

Reward modulations in the face-selective FFA region

We finally examined whether reward expectation also affected the activity of cortical regions associated with the perceptual representation of the sensory stimulus. To this end, we analyzed the activity time course of the face-selective FFA region during the cue and stimulus unmasking periods. In particular, we first examined whether the activity of this region was modulated by reward expectation before the onset of the sensory stimulus (male or female image). We compared the BOLD time course in conditions in which the reward cue indicated that a correct male or female discrimination would be associated with a greater reward vs. the neutral reward conditions.

As shown in Fig. 6A, no reward modulation was observed at the peak of the BOLD response. An anova with cue type (neutral reward, reward bias for a male/female response) and time (10 time points) as factors indicated a significant cue type by time interaction in this region (F18,252 = 2.62, P < 0.005). However, post hoc tests confirmed the visual impression that this interaction was driven by significantly higher BOLD activity during neutral vs. reward-biased conditions in the final part of the cue interval (time points 8, 9, 10). This activity increase could be interpreted as a sensory-level biasing mechanism in the FFA region, with both male and female representations boosted during the neutral conditions. However, it was observed after the response had returned to baseline, so any functional interpretation would be unwarranted.

We next examined whether reward expectation also modulated the time course of BOLD activity in the FFA during the stimulus unmasking period (activity related to evidence accumulation). To this end, we conducted two types of anova. The first compared evidence and noise trials in the neutral vs. the reward-biased conditions and was run separately for male and female conditions. The second compared evidence conditions with a congruent or incongruent association between the reward bias and the stimulus evidence (i.e., in which the reward bias and the stimulus evidence favored the same or opposite responses). The first anova had reward condition (male reward bias, neutral) and stimulus evidence (male evidence, noise) as factors. It showed an interaction between reward condition, stimulus evidence and time (F6,84 = 3.79, P = 0.002) in addition to a main effect of stimulus evidence (evidence by time: F6,84 = 59.7, P < 0.0001). As shown by the time course plot in Fig. 6B and by post hoc comparisons, this interaction was explained by a stronger reward modulation (reward bias > neutral) during evidence than noise trials (evidence trials, time points: 3, 4, 5, 6, 7; noise trials, time points: 3, 7). The same anova conducted on trials with a reward bias for a female response showed a significant effect of stimulus evidence (F6,84 = 58.3, P < 0.0001). It also showed a marginally significant interaction between reward condition, stimulus evidence and time (F6,84 = 1.95, P = 0.08). As for the first anova, inspection of the activity time courses (see Fig. 6C) and post hoc tests indicated that the interaction was explained by a selective effect of reward expectation during evidence trials (reward bias > neutral: time points 3, 4, 7 for evidence trials; time point 7 for noise trials).
The third anova was run on evidence trials, with stimulus evidence (evidence for a male or female response), reward condition (reward bias for a male or female response) and time (time points 0–6) as factors. It showed a statistically significant three-way interaction (F6,84 = 6.84, P < 0.001). As shown in Fig. 6D, however, this interaction was not explained by a greater BOLD response during congruent vs. incongruent conditions. The current univariate approach does not allow us to differentiate the BOLD signals associated with the sensory representations of the two categories within the FFA. Nevertheless, this finding suggests that the effect of reward expectation in the FFA reflected a general activity boost for rewarded vs. neutral conditions (motivational signal) rather than a specific enhancement of the sensory representations associated with a greater reward (sensory-level biasing).

Discussion

Decision-making is traditionally viewed as an integrative process in which bottom-up information about the identity of a stimulus, that is, sensory evidence, is combined with prior knowledge, that is, top-down information, about the likelihood of its occurrence or the reward associated with its correct discrimination.

Although the neural bases of this interaction are still unclear, it has been proposed that prior knowledge may bias the decision process by increasing the baseline activity of neurons involved in perceptual decisions. According to this interpretation, prior knowledge would shift the baseline firing rate of fronto-parietal neurons/cortical regions involved in the perceptual decision process toward the threshold associated with the response that is more likely to be correct or more profitable (Rorie et al., 2010; Summerfield & Koechlin, 2010; Hanks et al., 2011; Mulder et al., 2012; Rao et al., 2012; de Lange et al., 2013). Importantly, however, recent studies have shown that prior knowledge is reflected not only at the integration stage of visual perceptual decisions, represented in fronto-parietal regions, but also at the sensory representation stage, in cortical regions associated with the perceptual representation of the sensory stimulus (Kok et al., 2012; Cicmil et al., 2015).
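The baseline-shift account can be illustrated with a simple bounded accumulator, a discrete-time random walk of the kind used in drift-diffusion models. This is a hypothetical illustration, not a model fitted in the study, and all parameter values are arbitrary. With zero drift, as in a noise trial, moving the starting point toward the bound of the rewarded response raises the probability that this bound is reached first, without any change in the evidence itself. For continuous, driftless diffusion, this probability is (start + bound) / (2·bound), i.e. 0.65 for a start of 3 and a bound of 10. The simulation should land near that value.

```python
import random
from typing import Optional


def simulate_choice(start: float, drift: float = 0.0, noise_sd: float = 1.0,
                    bound: float = 10.0, rng: Optional[random.Random] = None) -> int:
    """Random walk from `start` until it crosses +bound or -bound.

    Returns +1 if the upper bound (here, the rewarded response) is reached first, else -1.
    """
    if rng is None:
        rng = random.Random()
    x = start
    while abs(x) < bound:
        x += drift + rng.gauss(0.0, noise_sd)  # one step of noisy evidence
    return 1 if x >= bound else -1


def p_upper(start: float, n_trials: int = 2000, seed: int = 1, **kwargs) -> float:
    """Fraction of simulated trials ending at the upper (rewarded) bound."""
    rng = random.Random(seed)
    n_upper = sum(simulate_choice(start, rng=rng, **kwargs) == 1 for _ in range(n_trials))
    return n_upper / n_trials


# Noise trial (zero drift): unbiased start vs. baseline shifted toward the rewarded bound.
print(p_upper(0.0), p_upper(3.0))
```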

Here we showed that information about the expected reward for a perceptual decision modulated the activity of two kinds of region. The first were sensory-motor regions of the fronto-parietal cortex associated with the planning/execution of the decision response. The second were sensory regions of the inferior temporal cortex associated with the perceptual representation of the sensory stimulus. We recorded fMRI activity during a task requiring an arbitrary association between a face picture (female vs. male) that was gradually unmasked over time and a spatially directed action (a hand pointing or saccadic eye movement). Reward expectation modulated the activity of both the pointing- and saccade-selective regions of the fronto-parietal cortex and the face-selective FFA region. Reward modulations in these regions (i) were observed specifically during the stimulus unmasking interval, in which the decision was formed, and not during the prior cue period, in which a colored fixation cross indicated the reward context of the upcoming perceptual decision; (ii) were generally stronger during easy than difficult decisions; and (iii) showed a different degree of association with the preferred response effector across motor-related regions, being effector-specific in PRR but effector-independent in pIPS. Interestingly, a reward-related but response-independent modulation was also observed in the FFA.

As to the first point, reward modulations were not apparent during the initial cue interval but only after the onset of the sensory stimulus, when the perceptual decision was being formed. This observation seems at odds with the idea that prior knowledge modulates perceptual decision-making by boosting the baseline activity of decision-related neurons. It must be noted, however, that our design was more sophisticated than those of previous studies on this issue. It was specifically intended to separate the BOLD responses associated with the cueing phase from those reflecting the formation of a perceptual decision and, more importantly, to manipulate reward expectation and sensory evidence simultaneously. Hence, although our findings suggest that reward expectation specifically modulates activity during the evidence accumulation phase in effector-selective regions, it is very likely that methodological differences between studies have significantly affected the probability of observing specific modulations (i.e., baseline vs. decision-related).

We additionally found that reward modulations during the stimulus unmasking period interacted to some degree with the effect of sensory evidence. In particular, we ran anovas with reward condition (reward bias toward the preferred response effector/stimulus category, neutral) and stimulus evidence (evidence, noise) as factors on the time courses of the pIPS, FEF, SMC and FFA regions. In these anovas, reward modulations (reward bias > neutral) were typically stronger during evidence than noise trials and/or more specifically observed during the central part of the stimulus unmasking interval, when the decision was presumably being formed (see post hoc tests). This result seems at odds with our behavioral finding of a stronger reward modulation during noise trials, where decisions were guided only by the reward bias. In our view, however, it provides a critical demonstration that reward expectation does not simply bias the output of the decision process. Instead, it actively modulates the process of decision formation (i.e., the activity of neural populations whose spiking activity integrates sensory evidence toward a decision bound).

Our most important result, however, was that reward modulations were differently linked to the preferred response effector in the pointing- vs. saccade-selective regions of the posterior parietal cortex. In the pointing-selective PRR region, reward modulations were selective for the preferred pointing response (i.e., effector-specific reward modulations). In contrast, the saccade-selective pIPS showed greater activity when either a saccadic or a pointing movement was associated with a greater reward relative to a neutral condition. We believe that these findings are particularly relevant because they complement a growing body of neurophysiological and neuroimaging evidence on functional differences between decision signals in these two regions. First, we have previously shown that sensory evidence modulations were effector-specific in the PRR (i.e., they were only observed for decisions associated with the preferred pointing response). In the pIPS region, by contrast, they were effector-independent (i.e., they occurred in an eye movement region during decisions that called for a pointing response; Tosoni et al., 2014). The present findings extend these results to reward-related modulations. Second, recent neurophysiological studies have shown that decision-related modulations are not equivalent in the monkey LIP and PRR regions, suggesting an analogous dissociation between effector-independent and effector-specific signals for decision-making (Kubanek & Snyder, 2015; de Lafuente et al., 2015). For example, Kubanek and colleagues used a reward-based decision task in which monkeys selected a target based on its desirability. They recently showed that decision-related signals were significantly less specific for saccadic choices in the LIP region than for reaching choices in the PRR region (Kubanek & Snyder, 2015).
Our findings extend these results to human arbitrary visual decisions, suggesting that, as in the monkey brain, these regions likely serve different functions beyond their effector specificity. The PRR region has been primarily associated with the planning and execution of pointing movements and, at most, with behavioral variables that potentially instruct such movements. In contrast, several lines of evidence suggest that the pIPS region and its likely monkey homologue, LIP, can encode information about sensory evidence, expected reward or stimulus color. They do so not only when these variables are relevant to the execution of an eye movement, but also when sensory stimuli indicate a categorical decision that is not directly linked to a motor response. This suggests that the region plays multiple and independent roles in saccade-related motor activity and in spatial and object selection (Freedman & Assad, 2011).

Thus, at a more theoretical level, reward-related effects were mainly observed in effector-specific regions, which further supports the view that parietal cortex essentially subserves decision-making to select targets of particular actions (Shadlen et al., 2008; Andersen & Cui, 2009; Kable & Glimcher, 2009; Cisek & Kalaska, 2010). Nevertheless, our results of effector-independent modulations in the pIPS and FFA regions partially contrast with a strict interpretation of the ‘action-based’ or ‘intentional’ framework. This view predicts that sensory-motor regions specifically represent the decision variable associated with the execution of the motor choice for which they are selective. On this view, the neural correlates of putative decision variables (such as payoff) are expressed by the same neurons that encode the attributes (such as direction) of the potential motor responses used to report the decision. The present data only partially support this idea. Interestingly, reward modulations in the FFA were similar to those observed in the pIPS. This suggests that reward expectation in these regions did not bias specific representations (i.e., those of the response alternative associated with greater reward vs. those of the response alternative associated with lower reward). Instead, it simply increased the general level of activity during biased vs. neutral reward conditions.

Finally, expected reward modulated the activity of both effector-specific and sensory-related regions, so our data are consistent with the idea that prior knowledge shapes perception and action in parallel. This linkage agrees with a recent psychophysical study on brightness selection, which showed that an induced motor bias was correlated with an amplification of both the sensory signal and the internal noise, without a systematic change in perceived overall brightness (Liston & Stone, 2008). It also agrees with imaging studies showing that prior knowledge modulates multiple stages of information processing (Rahnev et al., 2011; White et al., 2012; Chen et al., 2015). These stages include not only the activity of associative regions involved in decision or action planning but also the response of sensory regions specific for the stimulus and their functional connectivity.

In conclusion, in line with the proposal of Kok et al. (2013), our findings support an account of perception as a process of probabilistic inference. In this account, top-down and bottom-up information are integrated at every level of the cortical hierarchy through a mechanism of predictive coding. Under this framework, specific prior information about a perceptual stimulus/decision, such as that provided by associating a specific perceptual response with a greater payoff, generates conditional expectations at multiple hierarchical levels of the sensory-motor system. Backward (or ‘reentrant’) connections within the ventral stream then allow this expectation-related signal to flow back to visual regions and guide object decisions as they unfold (Friston, 2003, 2005; Summerfield & Koechlin, 2008).
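One textbook way to make this integration of top-down and bottom-up information concrete is an ideal observer choosing between "male" and "female" given sensory data x. This is offered as an illustration only, not as a model tested in the study. Assume a reward R for each correct response and no reward for errors, a simplification not taken from the study's design. Choosing "male" maximizes expected reward when P(male | x) R_male > P(female | x) R_female, which by Bayes' rule becomes:

$$
\log\frac{P(x \mid \text{male})}{P(x \mid \text{female})} \;>\; \log\frac{P(\text{female})}{P(\text{male})} + \log\frac{R_{\text{female}}}{R_{\text{male}}}
$$

The bottom-up likelihood ratio on the left is compared with a criterion set jointly by prior probabilities and payoffs on the right. This is one way to read the reward-related criterion shifts observed in the behavioral data.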

Acknowledgement

We are grateful to Carlo Sestieri for helpful discussions and technical help.

Conflict of interest

The authors have no conflict of interest to declare.

Author contributions

A.T. designed study, analyzed data, drafted paper; G.C. drafted paper; C.C. analyzed data; G.G. designed study, drafted paper.

Data accessibility

Data are available upon request. Please contact Dr. Annalisa Tosoni.