Comparison of AI-Powered Tools for CBCT-Based Mandibular Incisive Canal Segmentation: A Validation Study

Funding: This research was financed in part by Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) – Finance Code 001; Agentschap Innoveren en Ondernemen; Conselho Nacional de Desenvolvimento Científico e Tecnológico.

ABSTRACT

Objective

Identification of the mandibular incisive canal (MIC) prior to anterior implant placement is often challenging. The present study aimed to validate an enhanced artificial intelligence (AI)-driven model dedicated to automated segmentation of MIC on cone beam computed tomography (CBCT) scans and to compare its accuracy and time efficiency with simultaneous segmentation of both mandibular canal (MC) and MIC by either human experts or a previously trained AI model.

Materials and Methods

An enhanced AI model was developed based on 100 CBCT scans using expert-optimized MIC segmentation within the Virtual Patient Creator platform. The performance of the enhanced AI model was tested against human experts and a previously trained AI model using another 40 CBCT scans. Performance metrics included intersection over union (IoU), dice similarity coefficient (DSC), recall, precision, accuracy, and root mean square error (RMSE). Time efficiency was also evaluated.

Results

The enhanced AI model had IoU of 93%, DSC of 93%, recall of 94%, precision of 93%, accuracy of 99%, and RMSE of 0.23 mm. These values were significantly higher than those of the previously trained AI model for all metrics, and for manual segmentation for IoU, DSC, recall, and accuracy (p < 0.0001). The enhanced AI model demonstrated significant time efficiency, completing segmentation in 17.6 s (125 times faster than manual segmentation) (p < 0.0001).

Conclusion

The enhanced AI model enabled dedicated automated MIC segmentation with high accuracy and time efficiency. Moreover, its performance was superior to both human expert segmentation and a previously trained AI model.

1 Introduction

Prior to dental implant placement in the anterior mandible, accurate visualization of the mandibular incisive canal (MIC) on cone-beam computed tomography (CBCT) scans is often challenging due to canal branching with decreased mineralization (De Andrade et al. 2001; Jacobs et al. 2002, 2007, 2014; Makris et al. 2010; Mardinger et al. 2000). The neurovascular bundle within the MIC gradually decreases in diameter toward the symphyseal midline, with branching and loss of canal corticalization (Jacobs et al. 2004, 2007; Mardinger et al. 2000; Mraiwa et al. 2003; Romanos et al. 2012). Additionally, imaging artifacts may further hinder the MIC visualization (Pereira-Maciel et al. 2015; Schulze et al. 2011). Consequently, the complex anatomy and high degree of human variability of this neurovascular plexus-like canal require a high level of expertise, training, and time during presurgical implant planning in the mandibular symphysis region (De Andrade et al. 2001; Jacobs et al. 2007, 2014).

Avoiding damage to the mandibular incisive nerve is crucial during dental and surgical procedures, given the severity of potential complications that can result in severe trauma, chronic pain, and loss of sensation in this region, leading to a substantial decrease in patient quality of life (De Andrade et al. 2001; Jacobs et al. 2014). Artificial intelligence (AI)-assisted visualization and segmentation play an essential role in minimizing these risks by improving preoperative planning, enhancing the predictability of surgical outcomes, and ultimately prioritizing patient safety and well-being (Elgarba, Fontenele, Mangano, et al. 2024; Elgarba, Fontenele, Tarce et al. 2024; Macrì et al. 2024).

AI, particularly convolutional neural networks (CNN), has demonstrated the ability to accurately segment three-dimensional (3D) images, offering significant improvements in accuracy, time efficiency, and consistency compared to conventional methods (Hung et al. 2020). This advancement is especially useful for identifying critical and complex neural structures such as the mandibular canal (MC) and the MIC (Jindanil et al. 2023; Lahoud et al. 2022; Oliveira-Santos et al. 2023).

Although previous studies have explored the simultaneous segmentation of MIC and MC, the complexity and variability of the MIC required enhanced segmentation of the AI model. This improvement is necessary for more comprehensive preoperative implant planning, improving accuracy and reliability for the surgical procedure. The CNN's learning capacity increases segmentation precision as it is introduced to complex morphological cases with different presentations, helping to prevent neurovascular damage during surgery while considering anatomical variations across populations (Yamashita et al. 2018). Additionally, the learning potential of a CNN architecture benefits from curricular learning in a cloud-based environment, enabling it to process large datasets efficiently, adapt to diverse imaging conditions, and consequently improve robustness and generalizability in clinical practice (Fontenele and Jacobs 2025). This enhancement applies not only to anatomical segmentation but also to implant planning and prosthetic crown design (Elgarba et al. 2023). Therefore, this study aimed to validate an enhanced AI model for dedicated automated segmentation of the MIC on CBCT scans and compare its performance with human-expert segmentation and previously trained AI model segmentation.

2 Materials and Methods

2.1 Ethical Criteria

This validation study, based on retrospectively collected data, utilized CBCT scans from patients referred to the dentomaxillofacial radiology center for presurgical planning of third molar extractions and implant placements. The study protocol was preapproved by the local Medical Ethics Committee under protocol number S65708, in accordance with the World Medical Association's Declaration of Helsinki guidelines on medical research. Additionally, this study adhered to the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) guidelines. All patient information was anonymized.

2.2 Sample Selection

A total of 140 CBCT scans were selected from the radiology database of the UZ Leuven Hospital, Leuven, Belgium. The scans were acquired using four different CBCT units: 3D Accuitomo 170 (J. Morita, Kyoto, Japan), NewTom VGI evo (QR, Verona, Italy), Scanora 3Dx (Soredex, Tuusula, Finland), and Planmeca ProMax 3D (Planmeca, Helsinki, Finland) (Table 1). Patient age ranged from 10 to 86 years, with a mean age of 48 ± 19 years; 51% of patients were female and 49% were male. All CBCT scans were evaluated against the eligibility criteria using Xero viewer (version 8.1.4.160, Agfa HealthCare NV, Mortsel, Belgium). Inclusion criteria required CBCT scans with a voxel size of 0.3 mm³ or less to ensure sufficient sharpness and detail, covering the anterior mandible with bilateral visibility of the MIC at least up to the canine region. Exclusion criteria included CBCT scans with metal or motion artifacts compromising the mandibular anterior region, as well as patients with bone fractures, abnormalities, lesions, or developing teeth. All CBCT scans were randomized and anonymized.

| CBCT device | kVp | mA | FOV (cm) | Voxel size (mm³) |
|---|---|---|---|---|
| Accuitomo 170 | 90 | 5 | 8 × 8, 10 × 10, 14 × 10 | 0.125–0.25 |
| NewTom VGI evo | 110 | 3–21 | 8 × 8, 10 × 10, 12 × 8, 15 × 12, 24 × 19 | 0.125–0.3 |
| Planmeca ProMax 3D | 96 | 6 | 13 × 9 | 0.2 |
| Scanora 3Dx | 90 | 8 | 10 × 7.5, 14.5 × 7.5, 14.5 × 13.2 | 0.125–0.25 |

- Abbreviations: CBCT, cone beam computed tomography; cm, centimeters; FOV, field of view; kVp, kilovoltage peak; mA, milliamperage; mm³, cubic millimeters.

To avoid the risk of overfitting the enhanced AI model, the selection of the training sample was carefully conducted through a cross-checking process to assure that all patients included in the present dataset differed from those in the Jindanil et al. (2023) study. Additionally, the training sample size and data augmentation techniques were optimized to improve the model's generalization, serving as effective solutions to reduce overfitting.

2.3 AI Model Enhancement

One hundred CBCT scans in Digital Imaging and Communications in Medicine (DICOM) format were uploaded to the Virtual Patient Creator (version 2.2.0, ReLU, Leuven, Belgium), an online, user-interactive, cloud-based platform previously developed and validated to enable automatic segmentation of the MC and MIC simultaneously (Lahoud et al. 2022; Oliveira-Santos et al. 2023; Jindanil et al. 2023). Two observers (M.F.S.A.B. and T.J.), each with 4 years of experience in oral and dentomaxillofacial radiology, evaluated the AI-based segmentation of MC and MIC. Since under-segmentation was observed in most cases, manual refinement was performed using the brush tool, allowing observers to add or remove voxels to the segmentation maps. Subsequently, an experienced oral and maxillofacial radiologist with 10 years of experience (R.C.F.) rechecked the labeling to assess the segmentation quality. In cases of uncertainty regarding the MIC path, an oral and maxillofacial radiologist (R.J.) with over 30 years of experience in 3D imaging was consulted.

All segmentations were then exported as Standard Triangle Language (STL) files and imported into Mimics software (version 23.0, Materialise, Leuven, Belgium) to separate the MC and the MIC. Cropping planes were created based on the CBCT scans to standardize the cropped STL files across the different scans. Then, the cropped STL files were re-uploaded to the online platform for model enhancement.

Subsequently, 40 CBCT scans were used to externally validate the predictions of the enhanced AI model. These same scans were also employed to compare the segmentations produced by the enhanced AI model with those generated by the previously trained AI model, as well as with manual segmentations performed by a human expert. External validation involved comparing each AI-generated segmentation to the refined AI (R-AI) segmentation, which consisted of expert-reviewed manual adjustments. To evaluate human segmentation performance, 30% of this dataset (n = 12 CBCT scans, 24 MICs) was manually segmented by an oral and maxillofacial radiologist with 4 years of experience in 3D imaging (M.F.S.A.B.) twice, using the “Virtual Patient Creator” platform, with a 2-week interval between the segmentation sessions. Human segmentation was carried out using the brush tool available on the platform, which was labeled in a circular pattern. First, the MIC was identified and labeled in the sagittal reconstruction to the extent it was visible. Next, the axial reconstruction was examined to refine the initial segmentation by adding voxels corresponding to the MIC and removing those that did not. This process was repeated in the coronal reconstruction for further refinement. Finally, all three CBCT reconstructions were reviewed for final adjustments. A 3D model was then generated and evaluated to ensure that only the MIC was segmented, with corrections made for any inclusion of other structures.

2.4 CNN Model

Data augmentation techniques, such as padding, blurring, Gaussian noise, salt-and-pepper noise, cylindrical cutouts, flipping, affine transformations, elastic deformations, and cropping, were used to improve the model's robustness and generalizability.
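As an illustration only, three of the augmentations listed above (flipping, Gaussian noise, and salt-and-pepper noise) can be sketched on a toy volume stored as nested lists. This is a minimal sketch using the Python standard library; it is not the pipeline used in the Virtual Patient Creator, and the function names, noise levels, and intensity bounds are hypothetical. In a segmentation setting, any geometric transform such as a flip would also have to be applied to the label map, whereas intensity noise is applied to the image only.

```python
import random

def flip_left_right(volume):
    """Mirror every slice along its last axis (hypothetical helper)."""
    return [[row[::-1] for row in sl] for sl in volume]

def add_gaussian_noise(volume, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to each voxel."""
    return [[[v + rng.gauss(0.0, sigma) for v in row] for row in sl]
            for sl in volume]

def add_salt_and_pepper(volume, fraction, low, high, rng):
    """Replace roughly `fraction` of the voxels with the extreme values low or high."""
    out = []
    for sl in volume:
        new_slice = []
        for row in sl:
            new_row = []
            for v in row:
                if rng.random() < fraction:
                    new_row.append(rng.choice((low, high)))
                else:
                    new_row.append(v)
            new_slice.append(new_row)
        out.append(new_slice)
    return out

# Toy 2 x 2 x 2 volume; the values are arbitrary and not real CBCT intensities.
rng = random.Random(0)
volume = [[[0.0, 1.0], [2.0, 3.0]], [[4.0, 5.0], [6.0, 7.0]]]
augmented = add_salt_and_pepper(
    add_gaussian_noise(flip_left_right(volume), sigma=0.1, rng=rng),
    fraction=0.05, low=-1000.0, high=3000.0, rng=rng,
)
```

Passing a seeded `random.Random` instance keeps the augmentation reproducible, which is convenient when comparing training runs.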

2.5 Analysis Metrics

The performance of MIC segmentation for both the enhanced AI model and the previously trained AI model was evaluated through voxel-wise comparisons. For each model (enhanced and previously trained), the STL files generated automatically by the AI-driven segmentation were compared with the STL files obtained after expert refinement of that model's output (R-AI), which served as the clinical reference.

To assess the reliability of human segmentation, the STL of the first session of human segmentation was compared with the STL of the second session of human segmentation, also based on a voxel-wise comparison approach.

- False positive (FP): Voxels incorrectly included in the AI segmentation but later excluded by experts during refinement.

- False negative (FN): Voxels that belong to the MIC but were initially missed by the AI segmentation and subsequently added by experts.

- True positive (TP): Voxels correctly identified by the AI as belonging to the MIC.

- True negative (TN): Voxels correctly excluded from the segmentation because they do not belong to the MIC.

- Dice similarity coefficient (DSC): Measures the overlap between AI segmentation and R-AI segmentation (clinical reference). The DSC is calculated as:

  $$\mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN}$$

- Intersection over Union (IoU): Evaluates the similarity between AI segmentation and R-AI segmentation (clinical reference) by assessing the ratio of the intersection to the union of the segmented regions. The IoU is defined as:

  $$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

- Precision: Represents the proportion of correctly identified voxels among all voxels predicted as part of the MIC by the AI. It is calculated as:

  $$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- Recall (sensitivity): Measures the fraction of relevant voxels (those that truly belong to the MIC) that were correctly identified by the AI. The formula is:

  $$\mathrm{Recall} = \frac{TP}{TP + FN}$$

- Accuracy: Indicates the overall correctness of the segmentation by AI, considering both true positives and true negatives. The accuracy is given by:

  $$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Root mean square error (RMSE): Measures, in millimeters, the discrepancies between the AI-generated surface segmentation map and the R-AI segmentation map (clinical reference). The RMSE is computed as:

  $$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} d_i^{2}}$$

  where $d_i$ is the distance in millimeters between the $i$-th point on the AI-generated surface and its corresponding point on the R-AI surface, and $n$ is the number of point pairs.

This formula assesses the average distance between corresponding points on the AI and manually segmented surfaces, offering insight into the segmentation's accuracy. An RMSE of 0 mm represents the perfect alignment between the predicted and reference segmentations.
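The metrics described above can be sketched in a few lines of Python using their standard textbook definitions. This is a minimal illustration, assuming that the AI and reference segmentations are available as flattened binary voxel masks of equal length and that surface distances have already been computed; the function names and toy values are hypothetical, and this is not the implementation used by the platform.

```python
from math import sqrt

def confusion_counts(pred, ref):
    """Count TP, FP, FN, TN between two equal-length binary voxel masks."""
    if len(pred) != len(ref):
        raise ValueError("masks must contain the same number of voxels")
    tp = sum(1 for p, r in zip(pred, ref) if p and r)
    fp = sum(1 for p, r in zip(pred, ref) if p and not r)
    fn = sum(1 for p, r in zip(pred, ref) if not p and r)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn

def overlap_metrics(pred, ref):
    """Voxel-wise DSC, IoU, precision, recall, and accuracy.

    Assumes both masks contain at least one foreground voxel; otherwise
    some denominators are zero and would need special handling.
    """
    tp, fp, fn, tn = confusion_counts(pred, ref)
    return {
        "dsc": 2 * tp / (2 * tp + fp + fn),
        "iou": tp / (tp + fp + fn),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

def rmse(distances_mm):
    """Root mean square of point-to-surface distances given in millimeters."""
    return sqrt(sum(d * d for d in distances_mm) / len(distances_mm))

# Toy example with 10 voxels: TP = 2, FP = 1, FN = 1, TN = 6.
ai_mask = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
reference_mask = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
metrics = overlap_metrics(ai_mask, reference_mask)
# IoU = 2/4 = 0.5, DSC = 4/6, precision = recall = 2/3, accuracy = 8/10
```

Because the MIC occupies a very small fraction of the scanned volume, TN dominates the accuracy term, which is one reason overlap metrics such as IoU and DSC are usually more informative than accuracy for small structures.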

2.6 Time Efficiency

One observer (M.F.S.A.B.) recorded the time in seconds for the enhanced AI model segmentation, R-AI segmentation, and human segmentation using the same dataset that was used to validate the enhanced model (n = 40). For the enhanced AI model segmentation, the recorded time started from the moment the DICOM file was uploaded to the platform until the algorithm completed the automatic segmentation and generated the MIC 3D model. For R-AI segmentation, the recorded time combined both the initial AI segmentation time and the additional time spent on manual refinement. For the human segmentation, the time was recorded from when the DICOM file was opened in the cloud-based platform until the MIC 3D model was generated.

2.7 Statistical Analysis

All statistical analyses were carried out using SPSS software version 25.0 (IBM, Armonk, NY) with a significance level of 5%. A power analysis indicated that the current sample size provided a statistical power of 95%. In addition to descriptive statistics, the accuracy metrics and time efficiency of the three segmentation approaches (enhanced AI model, previously trained AI model, and human segmentation) were compared using one-way analysis of variance (ANOVA).
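For readers unfamiliar with the test, the F statistic of a one-way analysis of variance can be sketched as follows. This is a minimal standard-library illustration with hypothetical toy data, not the SPSS procedure used in the study; it returns only the F statistic and its degrees of freedom, and the p-value would still have to be obtained from the F distribution with a statistics package.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of measurements."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of the observations around their own group mean.
    ss_within = sum(
        sum((x - m) ** 2 for x in g) for g, m in zip(groups, group_means)
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical toy data for three groups; not values from the study.
toy_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_b, df_w = one_way_anova_f(toy_groups)
# Here ss_between = 26 and ss_within = 6, so F = (26 / 2) / (6 / 6) = 13
```

A large F indicates that the differences between group means are large relative to the spread within groups; pairwise differences between approaches still require a post hoc comparison.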

3 Results

3.1 Accuracy Metrics

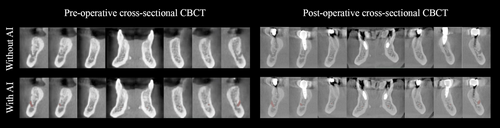

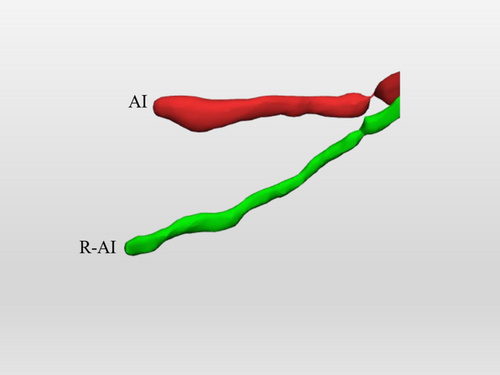

Table 2 shows the evaluation of accuracy metrics for the enhanced AI model, the previously trained AI model, and human segmentation. The enhanced AI model demonstrated robust performance across all segmentation metrics in terms of accuracy. The model achieved a mean IoU of 93% ± 12%, indicating a high overlap between AI and R-AI 3D models, and a DSC of 93% ± 17%. For voxel-wise evaluation, the recall was 94% ± 17%, the precision was 93% ± 17%, and the accuracy was remarkably high at 99% ± 1%. The RMSE was measured at 0.23 ± 0.83 mm, showing minimal deviation between predicted and clinical reference segmentations. Figure 1 presents example cases of highly accurate AI-based MIC segmentation.

| Accuracy metric, mean (SD) | Enhanced AI model | Previously trained AI model | Human segmentation |
|---|---|---|---|
| IoU (%) | 93 (12) A | 60 (15) C | 68 (13) B |
| DSC (%) | 93 (17) A | 71 (17) C | 80 (11) B |
| Recall (%) | 94 (17) A | 70 (20) B | 76 (13) B |
| Precision (%) | 93 (17) A | 75 (19) B | 86 (11) A |
| Accuracy (%) | 99 (1) A | 98 (1) B | 99 (1) B |
| RMSE (mm) | 0.23 (0.83) A | 1.32 (1.86) B | 0.10 (0.04) A |

- Note: Different uppercase letters indicate a statistically significant difference between the segmentation approaches (horizontally), in which A was significantly higher than B and C, and B was significantly higher than C (p < 0.0001).

- Abbreviations: AI, artificial intelligence; DSC, dice similarity coefficient; IoU, intersection over union; mm, millimeters; RMSE, root mean square error; SD, standard deviation.

When comparing the accuracy metrics between the two AI models, the enhanced AI model significantly outperformed the previously trained AI model across all accuracy metrics (p < 0.001).

The enhanced AI model showed better performance than human segmentation (p < 0.0001). Human segmentation yielded lower values for IoU (68% ± 13%), DSC (80% ± 11%), recall (76% ± 13%), and accuracy (99% ± 1%) compared to the enhanced AI model. Precision and RMSE for human segmentation were 86% ± 11% and 0.10 ± 0.04 mm, respectively, and were comparable to those of the enhanced AI model (p > 0.05). In contrast, human segmentation exhibited significantly higher IoU, DSC, and precision values, as well as significantly lower RMSE values, compared to the previously trained AI model (p < 0.0001).

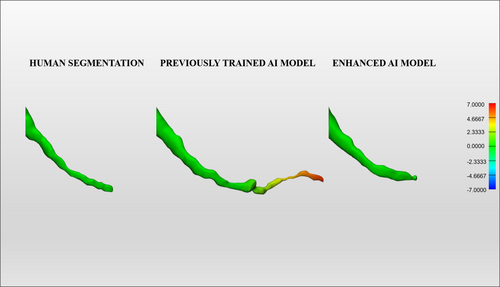

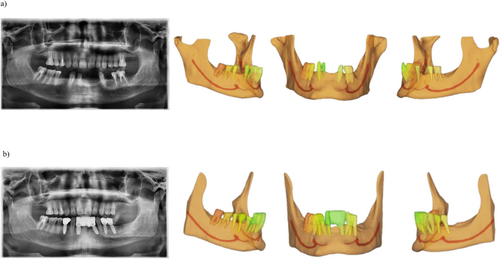

Figure 2 shows color maps of the human segmentation, the previously trained AI segmentation, and the enhanced AI segmentation of the same patient's MIC. For human segmentation, the first session is compared with the second; for both AI models, the automated AI-driven segmentation is compared with the refined segmentation. Additionally, Figure 3 demonstrates the accurate performance of the enhanced AI model in segmenting the MIC in both preoperative (Figure 3a) and postoperative (Figure 3b) scenarios for implant placement in the anterior mandible.

3.2 Time Efficiency

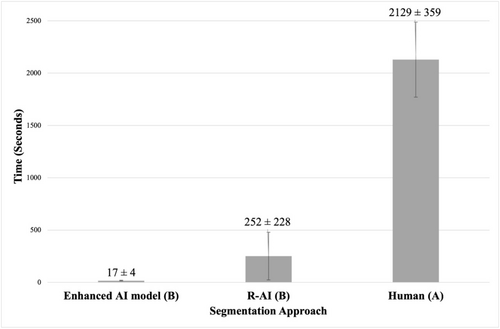

Figure 4 presents the results regarding the time-efficiency analysis comparing the time spent performing MIC segmentation using the different segmentation approaches tested. The segmentation times were 17 ± 4 s for the enhanced AI model, 252 ± 228 s for R-AI, and 2129 ± 359 s for human segmentation. Both the enhanced AI model and R-AI were significantly faster than human segmentation, with the AI-based method achieving a 125-fold reduction in time compared to human segmentation (p < 0.0001).
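The fold reduction can be checked directly from the rounded mean times reported above. The ratios involving R-AI are not stated in the text and are derived here only from those same means:

$$\frac{t_{\text{human}}}{t_{\text{AI}}} = \frac{2129\ \text{s}}{17\ \text{s}} \approx 125, \qquad \frac{t_{\text{human}}}{t_{\text{R-AI}}} = \frac{2129\ \text{s}}{252\ \text{s}} \approx 8.4, \qquad \frac{t_{\text{R-AI}}}{t_{\text{AI}}} = \frac{252\ \text{s}}{17\ \text{s}} \approx 14.8$$

On these figures, expert refinement of the AI output is still roughly eight times faster than fully manual segmentation, although the large standard deviation of the R-AI time (228 s) means the gain varies considerably from case to case.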

4 Discussion

Accurate identification of critical anatomical landmarks, such as the MIC, is essential for surgical planning in the anterior mandible. Given the high prevalence of MIC, it is important to minimize intra- and postoperative complications, which occur in 60%–87% of cases, to ensure patient safety and improve overall treatment outcomes (Soni et al. 2024; Taşdemir et al. 2023). This study highlights the significant impact of the enhanced AI model in optimizing MIC segmentation when compared to the previously trained AI model. When comparing their accuracy metrics (e.g., RMSE), the enhanced AI model presented a considerably lower value than the previously trained AI model, approaching the optimal value at 0.23 mm. The RMSE measures the mean error distance between the AI and refined segmentation, providing a clinically relevant measure of anatomical accuracy. By ensuring the precise spatial location of the canal, it helps reduce the risk of implant mispositioning. Furthermore, precision and recall values were significantly higher for the enhanced AI model compared to the previously trained AI model. High precision prevents over-segmentation, while high recall minimizes under-segmentation, ensuring that the full extent of the canal is identified, reducing its inadvertent perforation during drilling. The IoU and DSC values were also optimized; they measure the segmentation overlap between the AI and the reference standard, reflecting the global quality of the labeling and ensuring that the AI model provides a cohesive and anatomically accurate representation of the MIC (Mohammad-Rahimi et al. 2024).

The model represents a significant breakthrough in surgical planning by providing precise spatial localization of the MIC, a delicate structure that is often difficult to detect even with CBCT scans. This enhanced capability addresses a critical challenge in implant placement as well as oral and maxillofacial surgery. The MIC's complex anatomy and subtle radiographic appearance often lead to it being overlooked, which can result in nerve injuries and compromised surgical outcomes (Mardinger et al. 2000; Mraiwa et al. 2003; Jacobs et al. 2004). Integrating this AI model into surgical workflows has the potential to prevent such injuries and improve the standard of care.

In comparison to the previous studies, which simultaneously segmented the MC, anterior loop, and MIC, discrepancies may arise due to differences in the size and complexity of these structures (Jindanil et al. 2023; Lahoud et al. 2022; Oliveira-Santos et al. 2023). The MC, being longer and larger, may introduce additional challenges that affect segmentation accuracy. The designated AI model for MIC segmentation was specifically trained to improve accuracy in identifying this critical structure by training on a large set of CBCT scans and fine-tuning to address challenges, such as the identification of small structures. To enhance its robustness, techniques like image rotation and noise addition were applied, enabling the model to adapt to various image types and provide more accurate and reliable segmentation. In addition, the ReLU activation function used in this study was designed to facilitate continuous learning, enabling the model to enhance its segmentation capabilities as it processes more MC data. This adaptability not only improves current performance, but also lays the foundation for future advancements, potentially achieving even greater accuracy and efficiency in segmenting complex anatomical structures such as the MC and MIC.

The difference in accuracy values between the previously trained and enhanced AI models can be attributed to the following factors. First, in the previously trained AI model, the MC and the MIC were treated as a single structure and segmented simultaneously by the same algorithm. Accordingly, that model was trained with one STL file containing both the MC and the MIC segmentation. However, the MC and the MIC differ anatomically and have distinct radiographic features. Therefore, the training strategy for the enhanced AI model differed from that of the previously trained AI model. In the present study, separate algorithms were developed within the same AI model, one for MC segmentation and another for MIC segmentation, to account for the distinct characteristics of each structure. This specific training had the potential to increase the accuracy metrics of both the MC and the MIC segmentation, as each algorithm could contribute its learned patterns for that task, such as anatomical characteristics and location. Moreover, the cases selected for external validation of the previously trained AI model likely included canals with reduced caliber, branching patterns, or poorly defined cortical boundaries, which posed additional challenges for the segmentation process. In addition, differences in image resolution, field of view, and other acquisition parameters may also help explain the model's performance in this context. These factors are known to complicate the segmentation of structures in CBCT scans, particularly when the anatomical features are less clearly defined. Notwithstanding, the improved accuracy metrics in the present study can also be attributed to the additional dataset used for training the enhanced AI model, which builds upon the previously trained model based on 160 CBCT scans (Jindanil et al. 2023). Moreover, methodological refinements, including improved data preprocessing and optimized data augmentation techniques, significantly contributed to improving the model's performance. As mentioned above, this algorithm is CNN based and continues to learn and improve its performance as it receives new data.

The metrics achieved by the enhanced AI model were significantly superior to those obtained by human segmentation, highlighting the model's greater reliability compared with traditional methods. Human segmentation is inherently less reliable, particularly for delicate and hard-to-visualize structures like the MIC. This can explain the difference in metrics, as humans are more prone to inconsistencies when segmenting the same delicate structure at different time points. In contrast, the AI model is programmed to consistently apply the same method for every case. This result was also observed in previous studies that segmented fine structures, such as the pulp cavity and root canals, in which AI-driven segmentation exceeded the human approach in accuracy metrics (Santos-Junior et al. 2025; Slim et al. 2024).

Concerning the RMSE results for the enhanced AI model and human segmentation, no statistically significant difference was found, and both were considered excellent. For a segmentation to be clinically reliable, its RMSE is preferably below the standard voxel size of 0.2 mm. An RMSE value smaller than the voxel size indicates that the segmentation error is minimal, remaining within a range that does not exceed the dimension of a voxel in a standard protocol commonly used for implant planning, thereby reducing the likelihood of clinical impact (Fontenele et al. 2023). Moreover, the RMSE difference of 0.13 mm between the enhanced AI model and manual segmentation is unlikely to affect surgical outcomes or, for example, the accuracy of AI-driven automated implant planning, as a safety distance of 3 mm between the implant apex and the inferior alveolar nerve is generally recommended (Elgarba, Fontenele, Mangano, et al. 2024; Elgarba, Fontenele, Tarce et al. 2024; Mistry et al. 2021).
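The margin argument above can be made explicit using the mean RMSE values reported in Table 2. This is a simple illustration of scale, not a clinical threshold:

$$0.23\ \text{mm} - 0.10\ \text{mm} = 0.13\ \text{mm}, \qquad \frac{0.13\ \text{mm}}{3\ \text{mm}} \approx 0.043$$

In other words, the difference in mean RMSE between the enhanced AI model and human segmentation corresponds to roughly 4% of the generally recommended 3 mm safety distance.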

Moreover, human segmentation is considerably more time-consuming compared to AI-driven segmentation, as demonstrated in several studies that segmented anatomical structures in CBCT images using AI approaches (Elsonbaty et al. 2025; Fontenele et al. 2023; Jindanil et al. 2023; Lahoud et al. 2022; Santos-Junior et al. 2025; Slim et al. 2024). This increased efficiency not only improves digital workflow in dental practice but also reduces the potential for human error, ultimately optimizing surgical planning and safety in anterior mandibular surgical procedures.

Despite promising results, CBCT scans with bone lesions, a high level of artifacts, or developing permanent teeth in the anterior mandibular region were excluded from this study. Furthermore, the current sample did not include any CBCT scans with a voxel size higher than 0.3 mm. Those factors influence AI performance and impact MIC segmentation. Figure 5 illustrates an example of segmentation inaccuracy by the enhanced AI model in delineating the MIC. In this case, the AI labeling was incorrect, creating a different path for the canal, which was located above the actual anatomical position. To develop a more generalized and accurate AI model for clinical use in MIC segmentation, it is recommended to continue training with greater variability in terms of patient conditions, CBCT devices, and acquisition parameters. Besides, future studies should be conducted to investigate differences in segmentation accuracy between the different CBCT scanners under controlled conditions.

5 Conclusion

The present enhanced clinical AI model demonstrated highly accurate segmentation of the MIC. This AI model outperforms both human expert segmentation and the previous AI segmentation model. Additionally, it offers improved time efficiency compared to human-based segmentation methods. This enhanced AI model supports clinicians in producing accurate and consistent segmentation of the MIC.

Author Contributions

Maria Fernanda Silva da Andrade-Bortoletto: investigation, writing – original draft, methodology, visualization, validation, software, data curation. Thanatchaporn Jindanil: investigation, methodology, validation, visualization, writing – review and editing, software, data curation. Rocharles Cavalcante Fontenele: conceptualization, writing – review and editing, supervision, formal analysis. Reinhilde Jacobs: conceptualization, writing – review and editing, supervision. Deborah Queiroz Freitas: formal analysis, supervision, writing – review and editing.

Acknowledgments

This research was financed in part by Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) – Finance Code 001, and by the National Council for Scientific and Technological Development – Brazil (CNPq, process #312046/2021-9). Part of this research was conducted within the project entitled AIPLANT funded by VLAIO (Flanders Innovation & Entrepreneurship). The authors would like to acknowledge the AI engineers at Relu for their useful advice during the validation process.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.