Endogeneity in Accounting and Finance Research: Natural Experiments as a State-of-the-Art Solution

Abstract

This paper discusses endogeneity as it relates to finance and accounting research. We discuss the textbook solutions, namely two-stage least squares, instrumental variables, differenced generalized method of moments (GMM), and system GMM, and provide a unifying framework showing how they are related. We consider the limitations of these techniques and then detail a state-of-the-art solution: using a natural experiment to mitigate endogeneity and build stronger theory.

Endogeneity is more than a problem of econometrics; it is also a problem of theory. The issue for researchers is that the variables included in the structural equations are often simultaneously determined or mismeasured, or the theory is incomplete so that important variables are omitted. More sophisticated econometric techniques are only part of the solution. Better theory is also necessary to help researchers build more complete and accurately specified models.

In finance and accounting research it is important that empirical work is underpinned by theory. Accordingly, a well-designed study must be clear about how and why variables influence one another, and the logic and direction of the relationship must be specified (Larcker and Rusticus, 2007). However, much of the empirical accounting and finance literature is plagued by endogeneity, particularly corporate governance studies (Roberts and Whited, 2012). A spate of recent articles addressing endogeneity is putting the issue firmly on the table: in accounting, Chenhall and Moers (2007), Larcker and Rusticus (2007), and Van Lent (2007); in finance, Roberts and Whited (2012) and Brown et al. (2011). Endogeneity has always been present and recognized as a problem that undermines causal inference. However, researchers often either do not address it at all or simply note in passing that it exists. Table 1 lists the papers with endogeneity issues published in 2013 and 2014 in Asia-Pacific journals: Australian Journal of Management (9), Accounting and Finance (11), Abacus (1), Pacific Basin Finance Journal (2), and International Review of Finance (1). Of the 24 papers listed, only two performed all three specification tests and more than half (13) performed none.

| Author/Year | Endogeneity Issue | Specification Tests (1), (2), (3) | Method |
|---|---|---|---|
| Hoang, Faff, and Haq (2014) | Endogenous explanatory variables: market discipline, bank capital, and charter value to explain bank risks | (1), (2), (3) | Two-step system GMM |
| Koerniadi, Krishnamurti, and Tourani-Rad (2014) | Return volatility and corporate governance variables, e.g., board size, block holding | (1) (No endogeneity found) | Pooled OLS regression, fixed effects, and GMM: control for three types of endogeneity: dynamic, simultaneity, and unobserved heterogeneity |
| Arqawi, Bertin, and Prather (2014) | The relationship between leverage and warranty provisions, e.g., unobservable characteristics of a manager who makes both decisions (simultaneity) | (1) (No endogeneity found) | GLS panel regression |
| Xu, Liu, and Huang (2014) | Cost of capital effects of CSR may be driven by omitted variables that are correlated with both CSR and the cost of equity capital (corporate governance) | | 2SLS (IV) |
| Choe, Dey, and Mishra (2014) | Diversification decisions and firm performance; and self-selection issue of diversification choices | | Lewbel's (2012) instrumental variable estimation, Heckman's two-stage model |
| Khan, Mather, and Balachandran (2014) | Managerial ownership and performance and investment | (1) | Simultaneous equation 3SLS (IV) |
| Tan and Cam (2014) | Independent trustees may endogenously restrict their voice because they rely on other trustees for nomination and election | | Cross-sectional regression (fund costs and governance) |
| Amir, Kallunki, and Nilsson (2014) | Stock returns to earnings per share (scaled by stock price, as the dependent variable) | | Firm fixed-effects panel regression model |
| Dichev and Li (2013) | Growth rate in PPE and accounting choices (e.g., lease, depreciation life decisions) at the cross-sectional level | | Use changes in growth rates rather than levels using Fama-MacBeth regression with Newey-West adjusted standard errors |
| Chu, Liu, and Tian (2014) | Control-ownership divergence and stock liquidity (omitted variable problem) | | Use a natural experiment of the non-tradable share reform |
| Magee (2013) | Ratios of total FX derivatives to assets and total liabilities to assets | (1), (2), (3) | 2SLS, GMM IV |
| Chiang, Chung, and Huang (2015) | Ownership structures, board characteristics and default risks | | Dynamic GMM |
| D'Espallier, Huybrechts, and Schoubben (2014) | Cash flow resulting from potential correlation between cash flow and the idiosyncratic component of the error | (2) | System GMM |
| Al-Maskati, Bate, and Bhabra (2014) | Diversification decision and choice of governance structure | (3) | GMM |
| Choi and Lee (2014) | Choice of (high-quality) auditors is not random | (3) | Heckman's two-stage self-selection model |
| Fargher, Jiang, and Yu (2014) | CEO equity wealth and audit fees jointly determine firm risks | (1) | 2SLS |
| Martínez-Sola, García-Teruel, and Martínez-Solano (2013) | Trade credit decisions (e.g., high level of sales would lead to higher profits and also to more trade credit given) | (3) | GMM |
| Sultana and Van der Zahn (2015) | Governance variables and other contracting mechanisms | | 2SLS |
| Steijvers and Niskanen (2013) | Leverage and cash ratios | (1) | 2SLS (IV) |
| Hutchinson, Mack, and Plastow (2014) | Board gender diversity and the nomination committee gender diversity | | 2SLS, 3SLS, Heckman's two-stage selection model |
| Choi, Kwak, and Choe (2014) | CEO turnover and corporate performance; potential selection bias that a firm's CEO turnover decision is not random | | 2SLS, Heckman's two-stage model |
| Kouwenberg and Phunnarungsi (2013) | Corporate governance and firm value | | Regression analysis on the market reaction (CAAR) to announcements of violations of listing rules by SET and SEC |
| Cho and Lee (2013) | R&D investment and the degree of IPO underpricing | | 2SLS |
| Starks and Wei (2013) | Self-selection of bidders in merger transactions | | Heckman's two-stage self-selection model |

- Note: Specification tests: (1) Hausman-Wu endogeneity test; (2) first-stage F-test; (3) Hansen's over-identifying chi-squared test.

- * Journals surveyed: Australian Journal of Management, Abacus, Accounting and Finance, Pacific Basin Finance Journal, and International Review of Finance.

Ignoring the problem, however, does not mitigate its implications: endogeneity limits the validity of empirical tests of models. We do not suggest that finance and accounting research to date should be disregarded. Rather, we argue that endogeneity has become more of a concern as we model increasingly complex relationships, and that the power of our theories is accordingly more keenly scrutinized and tested.

While all agree the problem is pervasive, there is some disagreement about how best to deal with it. Chenhall and Moers (2007) and Larcker and Rusticus (2007) argue that theory development is critical. Van Lent (2007), on the other hand, argues that theory is never likely to be complete and good instruments are hard to find, so there is little a researcher can do to mitigate endogeneity. He argues that good research should be judged by how stimulating the research question is and that it is a mistake to pretend that models are fully specified. Researchers should instead defend why they chose a particular misspecification and articulate how the results would change had they chosen another. Roberts and Whited (2012) emphasize the importance of discussing endogeneity alongside good empirical design, high-quality data, and robust testing of empirical predictions. Researchers should not be discouraged from empirical testing, because even when endogeneity is known to be present, such studies provide the foundation for future work (Roberts and Whited, 2012).

Where researchers recognize endogeneity as a limitation of their research, how they deal with it is constrained by current thinking on appropriate techniques. As Table 1 shows, commonly used research designs to deal with endogeneity include two-stage least squares (2SLS), instrumental variables (IV), differenced GMM (generalized method of moments), and system GMM. In the next section we provide a unifying framework that encompasses all of these approaches.

Looking beyond the textbook solutions, one research design that helps mitigate endogeneity is the natural experiment. A natural experiment gives the researcher leverage over the commonly used textbook solutions because it exploits a plausibly exogenous source of variation in the independent variables of interest (Meyer, 1995). If the naturally occurring event is convincingly exogenous and the study is well implemented, researchers can mitigate endogeneity and so isolate causal links, build new theory, and clarify (confirm or disconfirm) existing theory. In this paper, we provide researchers with a road map for designing research based on natural experiments, and in this way we contribute to the evolution of more rigorous research techniques in finance and accounting.

Endogeneity and Current Textbook Solutions

The current textbook solutions to endogeneity include 2SLS, IV, differenced GMM, and system GMM.1 In the section that follows, we work through these methods and provide a unified framework showing how these approaches are related.

What is Endogeneity?

In econometric terms, endogeneity has several dimensions. First, it is a problem of omitted variables: variables other than those specified provide an alternative or additional explanation for the relationship modelled. For example, the relationship between executive compensation and firm value intuitively depends on executives' abilities, which are difficult to quantify (Roberts and Whited, 2012). Similarly, in studying the value of independent directors, the directors' ability matters, and independence itself is difficult to measure because it is surely a function of director and CEO networks, which are not easy to establish.

Second, endogeneity is a problem of simultaneity, that is, the dependent variable and one or more of the explanatory variables are jointly determined. For example, more police officers might reduce crime, but cities with higher crime rates might demand more police officers; more diffuse firm ownership might affect performance, but firms with strong performance might attract diffuse ownership; banks with adequate capital reserves might perform well, but well-performing banks might build stronger capital reserves. In all such cases, regression results may confound these relationships because causality most likely runs in both directions.

A third dimension is measurement error, which arises when proxies are used for unobservable or difficult-to-measure independent or dependent variables (Roberts and Whited, 2012). For example, in examining the relationship between firm value and board independence, researchers must proxy for the degree of the board's independence from the CEO.

The following works through the current textbook approaches to endogeneity, beginning with the errors-in-variables problem, which is familiar to most students and researchers in finance.

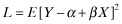

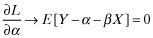

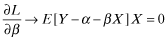

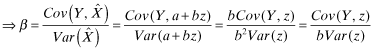

Errors in Variables Problem

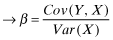

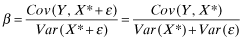

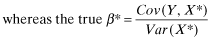

i.e., X* is the true value and X measures X* with error.

This implies that the measured X is not independent of the error term. The same problem arises with omitted variables, whether they are variables we are unaware of or variables that simply cannot be quantified: when they are correlated with X, they are absorbed into the error term and make X correlated with it.


Hence, the calculated Beta is a biased and inconsistent estimator of the true Beta. This comes about because the X variable is not independent of the error term.
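A quick simulation makes the bias concrete. The sketch below assumes the classical errors-in-variables setup, which the text does not spell out: Y = α + βX* + ε and X = X* + u, with the measurement error u independent of X* and ε. Under these assumptions the OLS slope converges to β·Var(X*)/(Var(X*) + Var(u)), so it is biased toward zero however large the sample. All parameter values are hypothetical.

```python
import numpy as np

# Assumed classical errors-in-variables model (hypothetical parameters):
#   Y = alpha + beta * X_star + eps,   X = X_star + u,   u independent of X_star and eps
rng = np.random.default_rng(0)
n = 200_000
alpha, beta = 1.0, 2.0
var_xstar, var_u = 1.0, 1.0

x_star = rng.normal(0.0, np.sqrt(var_xstar), n)  # true, unobserved regressor
u = rng.normal(0.0, np.sqrt(var_u), n)           # measurement error
eps = rng.normal(0.0, 1.0, n)                    # structural error
y = alpha + beta * x_star + eps
x = x_star + u                                   # the proxy the researcher actually observes


def ols_slope(x, y):
    """Slope coefficient from an OLS regression of y on x with an intercept."""
    return np.cov(x, y)[0, 1] / np.var(x, ddof=1)


beta_ols = ols_slope(x, y)
beta_plim = beta * var_xstar / (var_xstar + var_u)  # theoretical probability limit

print(f"true beta = {beta:.2f}, OLS on mismeasured X = {beta_ols:.3f}, plim = {beta_plim:.3f}")
```

With equal variances for X* and u, the estimate is roughly halved; increasing `n` does not remove the bias, which is what "inconsistent" means here.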

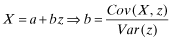

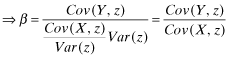

Two-stage Least Squares (2SLS), Instrumental Variable (IV)

The standard solution to the errors-in-variables problem is to use an instrumental variable z that is (1) uncorrelated with the error term ε (i.e., exogenous) and (2) correlated with X* (i.e., relevant, with non-zero correlation).

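Stage 1: Run the regression of X on the instrument z:

$$X_i = \pi_0 + \pi_1 z_i + v_i$$

Now define the instrument to be the fitted value from this regression:

$$\hat{X}_i = \hat{\pi}_0 + \hat{\pi}_1 z_i$$

Stage 2: Run the regression

$$Y_i = \alpha + \beta \hat{X}_i + e_i$$

Now substitute $\hat{X}_i$ from the first stage regression, using $\hat{\pi}_1 = \widehat{\mathrm{Cov}}(z, X)/\widehat{\mathrm{Var}}(z)$, and we have:

$$\hat{\beta} = \frac{\widehat{\mathrm{Cov}}(\hat{X}, Y)}{\widehat{\mathrm{Var}}(\hat{X})} = \frac{\hat{\pi}_1\,\widehat{\mathrm{Cov}}(z, Y)}{\hat{\pi}_1^{2}\,\widehat{\mathrm{Var}}(z)} = \frac{\widehat{\mathrm{Cov}}(z, Y)}{\widehat{\mathrm{Cov}}(z, X)}$$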

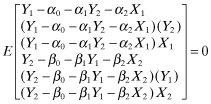

This implies that the two-stage least squares estimator is the instrumental variable estimator. We will show how the GMM moment conditions based on the normal equations of OLS, that is, equations 1 and 2, nest all of the endogeneity estimators.
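The equivalence between two-stage least squares and the IV estimator can be checked numerically. The sketch below simulates a hypothetical endogenous regressor X (correlated with an unobserved factor that also enters Y) and a valid instrument z, then computes the slope three ways: by running the two stages explicitly, by the ratio Cov(z, Y)/Cov(z, X), and by solving the sample moment conditions E[z(Y − α − βX)] = 0 directly, which anticipates the GMM view. The data-generating values are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100_000
beta = 1.5

z = rng.normal(size=n)                       # instrument: relevant and exogenous by construction
common = rng.normal(size=n)                  # unobserved factor driving both X and Y
x = 0.8 * z + common + rng.normal(size=n)    # endogenous regressor
y = 0.5 + beta * x + 2.0 * common + rng.normal(size=n)

const = np.ones(n)
Z = np.column_stack([const, z])
X = np.column_stack([const, x])


def ols(y, A):
    """OLS coefficients from regressing y on the columns of A."""
    return np.linalg.lstsq(A, y, rcond=None)[0]


# Stage 1: regress X on the instrument and form fitted values X_hat
x_hat = Z @ ols(x, Z)
# Stage 2: regress Y on X_hat (the coefficient is 2SLS; naive stage-2 std. errors would be wrong)
beta_2sls = ols(y, np.column_stack([const, x_hat]))[1]

# IV ratio estimator
beta_iv = np.cov(z, y)[0, 1] / np.cov(z, x)[0, 1]

# Method of moments: solve the sample analogue of E[Z'(y - X b)] = 0
beta_mm = np.linalg.solve(Z.T @ X, Z.T @ y)[1]

beta_ols = ols(y, X)[1]                      # biased upward because Cov(x, common) > 0
print(beta_ols, beta_2sls, beta_iv, beta_mm)
```

The three IV-type numbers agree to floating-point precision, while OLS overstates β.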

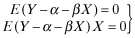

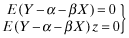

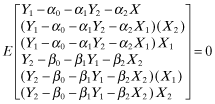

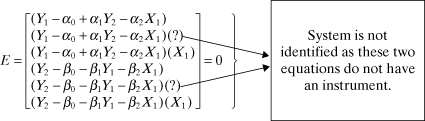

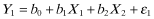

The GMM moment conditions based on the normal equations of OLS give us another way to think of the IV estimator. Take the following example:

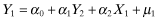

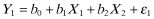

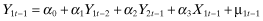


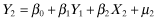

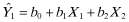

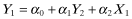

1st stage:

Regress Y2 on all the exogenous variables in the system.

2nd stage:

In the regression for Y1, use the fitted value of Y2 from the first stage as the instrument instead of Y2, as follows:

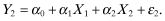

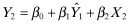

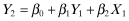

1st stage:

Regress Y1 on all the exogenous variables in the system.

2nd stage:

In the regression for Y2, use the fitted value of Y1 from the first stage as the instrument instead of Y1.

In this way we can see that the system is identified. Textbooks spend many pages on rank and order conditions, but in practice a simple check is to write out the IV system as above and confirm that it yields a unique equation for every parameter.
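A minimal sketch of the two-equation case, under assumptions not taken from the text: the hypothetical system Y1 = a1 + b1·Y2 + c1·X1 + e1 and Y2 = a2 + b2·Y1 + c2·X2 + e2, where X1 and X2 are exogenous and each is excluded from the other equation. That exclusion gives each equation one instrument for its one endogenous regressor, so every parameter has its own equation. The helper `two_sls` is illustrative, not a library function.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 100_000
a1, b1, c1 = 1.0, 0.5, 1.0    # hypothetical parameters of the Y1 equation
a2, b2, c2 = 2.0, -0.4, 1.0   # hypothetical parameters of the Y2 equation

x1, x2 = rng.normal(size=n), rng.normal(size=n)   # exogenous variables
e1, e2 = rng.normal(size=n), rng.normal(size=n)   # structural errors

# Solve the two structural equations jointly (reduced form) to generate Y1 and Y2
r1 = a1 + c1 * x1 + e1
r2 = a2 + c2 * x2 + e2
det = 1.0 - b1 * b2
y1 = (r1 + b1 * r2) / det
y2 = (b2 * r1 + r2) / det

const = np.ones(n)
all_exog = np.column_stack([const, x1, x2])  # every exogenous variable in the system


def two_sls(y, endog, included_exog, instruments):
    """2SLS coefficients, ordered [endogenous coef, included exogenous coefs...]."""
    # 1st stage: fitted values of the endogenous regressor from all exogenous variables
    endog_hat = instruments @ np.linalg.lstsq(instruments, endog, rcond=None)[0]
    # 2nd stage: replace the endogenous regressor by its fitted value
    second = np.column_stack([endog_hat, included_exog])
    return np.linalg.lstsq(second, y, rcond=None)[0]


coef_y1 = two_sls(y1, y2, np.column_stack([const, x1]), all_exog)  # approx. [b1, a1, c1]
coef_y2 = two_sls(y2, y1, np.column_stack([const, x2]), all_exog)  # approx. [b2, a2, c2]
print(coef_y1, coef_y2)
```

If X2 also appeared in the Y1 equation, the Y1 equation would have no excluded instrument left and its first-stage fitted value would be collinear with the included regressors, which is the practical face of under-identification.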

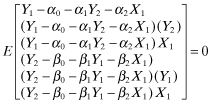

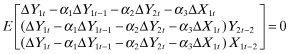

Differenced Generalized Method of Moments (GMM)

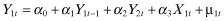

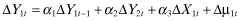


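The instruments are dated t-2 (or earlier) because the differenced X variables span t-1 to t: the change from t-1 to t is correlated with the differenced error, whereas values dated t-2 are not, provided the original errors are not serially correlated.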
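To see why the t-2 instruments matter, consider a hypothetical dynamic panel y_it = ρ·y_i,t-1 + η_i + ε_it with an unobserved firm effect η_i. The sketch below is a simple Anderson-Hsiao-style IV estimator rather than the full Arellano-Bond GMM (which uses all available lags as instruments): it first-differences away η_i and instruments Δy_i,t-1 with the level y_i,t-2. The panel dimensions, ρ, and the burn-in length are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
N, T, burn = 20_000, 8, 50        # firms, retained periods, burn-in periods (hypothetical)
rho = 0.5                         # true persistence parameter

eta = rng.normal(size=N)          # unobserved, time-invariant firm effect
y = np.zeros((N, T + burn))
for t in range(1, T + burn):
    y[:, t] = rho * y[:, t - 1] + eta + rng.normal(size=N)
y = y[:, burn:]                   # drop burn-in so the process is close to its steady state

# (a) OLS in levels ignores eta; y_{t-1} contains eta, so the estimate is biased upward
rho_levels = np.polyfit(y[:, :-1].ravel(), y[:, 1:].ravel(), 1)[0]

# (b) First differences remove eta, but dy_{t-1} is correlated with the differenced error
dy = (y[:, 2:] - y[:, 1:-1]).ravel()        # dy_t     = y_t - y_{t-1}
dy_lag = (y[:, 1:-1] - y[:, :-2]).ravel()   # dy_{t-1} = y_{t-1} - y_{t-2}
rho_fd_ols = (dy_lag @ dy) / (dy_lag @ dy_lag)

# (c) Instrument dy_{t-1} with the level y_{t-2}, uncorrelated with eps_t - eps_{t-1}
z = y[:, :-2].ravel()
rho_iv = (z @ dy) / (z @ dy_lag)

print(f"levels OLS {rho_levels:.3f}, differenced OLS {rho_fd_ols:.3f}, differenced IV {rho_iv:.3f}")
```

Levels OLS overstates ρ, OLS on differences understates it, and only the t-2 instrument recovers it; adding further lags (t-3, t-4, ...) as extra moment conditions is what the Arellano-Bond estimator does.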

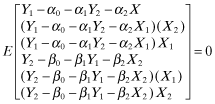

System GMM

The above are known as Arellano and Bond estimators.3 An excellent example of their application in corporate governance is Wintoki et al. (2012). Note, however, that in the GMM approach you can write your own GMM program and use whatever instruments you can justify; it is not necessary to use the lagged values that Stata employs by default.
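Writing your own GMM program is less daunting than it sounds for linear models. The sketch below implements the textbook two-step efficient GMM estimator for y = Xb + e with moment conditions E[Z'e] = 0, where Z can hold any instruments the researcher can defend, not only lags. The first step uses the 2SLS weight (Z'Z)^-1; the second re-weights by the inverse of a heteroskedasticity-robust estimate of the moment covariance. The simulated data and the function name `two_step_gmm` are hypothetical.

```python
import numpy as np


def two_step_gmm(y, X, Z):
    """Two-step efficient GMM for the linear model y = X b + e with E[Z'e] = 0."""
    n = len(y)
    XZ = X.T @ Z

    def estimate(W):
        # b = (X'Z W Z'X)^{-1} X'Z W Z'y minimizes the weighted distance of the moments
        return np.linalg.solve(XZ @ W @ XZ.T, XZ @ W @ (Z.T @ y))

    b1 = estimate(np.linalg.inv(Z.T @ Z / n))        # step 1: 2SLS weighting
    Ze = Z * (y - X @ b1)[:, None]                   # moment contributions z_i * e_i
    S = Ze.T @ Ze / n                                # robust moment covariance
    return estimate(np.linalg.inv(S))                # step 2: efficient weighting


rng = np.random.default_rng(5)
n = 100_000
z1, z2, common = rng.normal(size=(3, n))
x = 0.6 * z1 + 0.4 * z2 + common + rng.normal(size=n)          # endogenous regressor
e = (common + rng.normal(size=n)) * (1.0 + 0.5 * np.abs(z1))   # heteroskedastic error
y = 1.0 + 2.0 * x + e

X = np.column_stack([np.ones(n), x])
Z = np.column_stack([np.ones(n), z1, z2])                       # two excluded instruments
b_gmm = two_step_gmm(y, X, Z)
print(b_gmm)
```

Swapping in different columns of `Z` is all it takes to change the instrument set, which is the flexibility the paragraph above refers to.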

Specification Tests

There are several important specification tests related to endogeneity. Here we cover three of the most important. The first tests whether endogeneity is indeed a concern for your research: the Hausman-Wu test for endogeneity. The second and third tests deal with the quality of your instruments. The second examines whether your instrument is correlated with your endogenous variable, and the third examines whether your instrument is uncorrelated with the error.

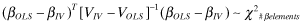

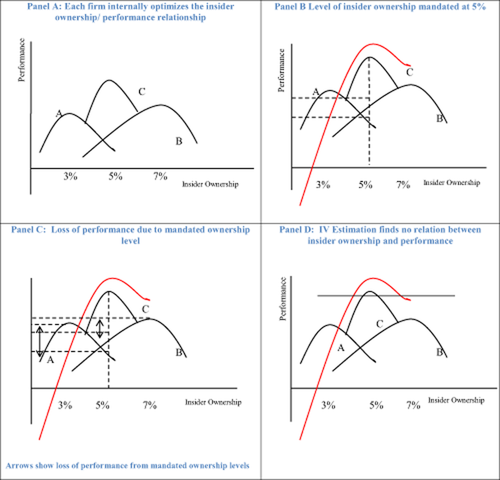

Testing for Endogeneity: The Hausman-Wu Test

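The Hausman-Wu test of endogeneity is as follows:

Test: H0: βOLS is consistent and efficient (the suspect regressor is exogenous);

H1: βOLS is inconsistent, while βIV remains consistent.

The test statistic compares the two estimators and is asymptotically χ² with degrees of freedom equal to the number of coefficients compared:

$$H = (\hat{\beta}_{IV} - \hat{\beta}_{OLS})' \left[\mathrm{Var}(\hat{\beta}_{OLS} - \hat{\beta}_{IV})\right]^{-1} (\hat{\beta}_{IV} - \hat{\beta}_{OLS}), \qquad \mathrm{Var}(\hat{\beta}_{OLS} - \hat{\beta}_{IV}) = V_{OLS} + V_{IV} - 2\,\mathrm{Cov}(\hat{\beta}_{OLS}, \hat{\beta}_{IV})$$

The covariance term looks difficult to estimate. And it is. However, under H0 OLS is an efficient estimator (it coincides with the maximum likelihood estimator when the errors are normal), meaning that it has minimum variance. We know from Markowitz's mean-variance frontier analysis that the covariance of the global minimum variance portfolio with any other portfolio equals the variance of the minimum variance portfolio, and the same rule applies to efficient estimators. So here the Cov(βOLS, βIV) term equals VOLS because the OLS estimator has the minimum variance property. The resulting cancellations lead to Var(βOLS − βIV) = VIV − VOLS.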
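For a single suspect regressor, the Hausman-Wu statistic reduces to H = (βIV − βOLS)² / (VIV − VOLS), compared with a χ²(1) critical value (3.84 at the 5% level). The sketch below assumes homoskedastic errors and uses the OLS residual variance in both V terms, which guarantees VIV − VOLS ≥ 0; the simulated data and the helpers `simulate` and `hausman_single` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 5_000


def simulate(endogeneity):
    """Hypothetical data: x loads on an unobserved factor that also enters y."""
    z = rng.normal(size=n)
    common = rng.normal(size=n)
    x = z + endogeneity * common + rng.normal(size=n)
    y = 1.0 + 2.0 * x + common + rng.normal(size=n)
    return y, x, z


def hausman_single(y, x, z):
    """Hausman-Wu statistic for one regressor x instrumented by z (homoskedastic errors)."""
    xd, yd, zd = x - x.mean(), y - y.mean(), z - z.mean()
    sxx, szz, szx = xd @ xd, zd @ zd, zd @ xd
    b_ols = (xd @ yd) / sxx
    b_iv = (zd @ yd) / szx
    resid = yd - b_ols * xd                      # OLS residuals, consistent under H0
    s2 = resid @ resid / (len(y) - 2)
    v_ols = s2 / sxx
    v_iv = s2 * szz / szx**2                     # same s2, so v_iv - v_ols >= 0
    return (b_iv - b_ols) ** 2 / (v_iv - v_ols)


H_exog = hausman_single(*simulate(endogeneity=0.0))   # H0 true: H behaves like chi2(1)
H_endog = hausman_single(*simulate(endogeneity=1.0))  # H0 false: H is large
print(f"H (exogenous x) = {H_exog:.2f}, H (endogenous x) = {H_endog:.2f}, 5% critical value = 3.84")
```

Because VOLS is subtracted rather than estimated through the covariance term, the only inputs needed are the two coefficient estimates and their usual variances.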

Testing that Instruments are Correlated with Endogenous Variables: F Test

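To verify that the instruments you employ are relevant, that is, correlated with the endogenous variable, you conduct an F test as follows. Regress the endogenous variable on the instruments and the other exogenous variables (the first-stage regression) and test the joint significance of the excluded instruments.

Check the R² and the F statistic of this regression. The rule of thumb is F > 10 (Staiger and Stock, 1997). More formal tests are given in Stock et al. (2002) and Kleibergen and Paap (2006).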
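The first-stage F statistic for the excluded instruments can be computed by comparing the residual sum of squares of the first-stage regression with and without them. A minimal sketch, assuming homoskedastic errors (the Kleibergen-Paap statistic cited above relaxes this); the data and the helper `first_stage_f` are hypothetical.

```python
import numpy as np


def first_stage_f(x, excluded, included):
    """F statistic for the joint significance of the excluded instruments in the first stage."""
    def rss(A):
        coef = np.linalg.lstsq(A, x, rcond=None)[0]
        resid = x - A @ coef
        return resid @ resid

    full = np.column_stack([included, excluded])
    n, k = full.shape
    q = excluded.shape[1]                 # number of excluded instruments being tested
    rss_restricted, rss_full = rss(included), rss(full)
    return ((rss_restricted - rss_full) / q) / (rss_full / (n - k))


rng = np.random.default_rng(6)
n = 2_000
w = rng.normal(size=n)                                 # included exogenous control
Zex = rng.normal(size=(n, 2))                          # two candidate instruments
included = np.column_stack([np.ones(n), w])

x_strong = 0.5 * Zex[:, 0] + 0.5 * Zex[:, 1] + w + rng.normal(size=n)
x_irrelevant = w + rng.normal(size=n)                  # instruments play no role

F_strong = first_stage_f(x_strong, Zex, included)
F_irrelevant = first_stage_f(x_irrelevant, Zex, included)
print(f"F (strong) = {F_strong:.1f}, F (irrelevant) = {F_irrelevant:.2f}; rule of thumb: F > 10")
```

Only the excluded instruments are tested; the included controls appear in both the restricted and unrestricted regressions.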

Testing that Instruments are Uncorrelated with the Error Term: Hansen's Over-identifying Chi-squared Test

In using IVs, the researcher must first identify a suitable instrument. Economically meaningful instruments are the best choice, but they are much more difficult to find than lagged values of the variables in the system. As well as being economically meaningful, such instruments should be correlated with the endogenous predictor variable but uncorrelated with the error term. The researcher should provide justification for the use of any particular instrument (Larcker and Rusticus, 2010). In investigating potential and valid instruments, Roberts and Whited (2012) suggest asking: ‘Does the instrument affect the outcome only via its effect on the endogenous regressor?’ (p. 27). Researchers in finance and accounting have used a variety of creative instruments; some examples from the finance, accounting, and economics literature are shown in Table 2.

| Study | Focus | Instrumental variable |
|---|---|---|
| Angrist (1990) | Future earnings prospects | Vietnam War random lottery draw |
| Molina (2005) | Leverage and default probability | Marginal tax rate |
| Bennedsen, Nielsen, Perez-Gonzalez, and Wolfenzon (2006) | Family succession and firm value | Gender of first child |
| Bennedsen, Kongsted, and Nielsen (2008) | Board size on the performance of small to medium firms | Number of children of the CEO |
| Bamber, Jiang, and Wang (2010) | Disclosure policy | Top managers' prior military service |
| Bouwman (2013) | Executive compensation | |
| King and Williams (2013) | Executive compensation | Local sports star salaries |

There are many instrumental variables available, perhaps limited only by the researcher's imagination. Other creative instruments include local sports stars' salaries as an IV for CEO salaries (as in King and Williams, 2013) and, potentially, women on local councils as an IV for women on corporate boards. However, IVs have limitations; for example, they can refocus the argument away from the presence of endogeneity to the appropriateness of the IV chosen (Brown et al., 2011).

Again referring to the 24 papers in Table 1, only two conduct all three specification tests, the most common method is 2SLS, and only one uses a natural experiment as the research design.
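Hansen's over-identifying test, the third specification test, is only available when there are more instruments than endogenous regressors. It evaluates the minimized GMM criterion J = n·g'S⁻¹g, where g is the vector of sample moments Z'e/n at the two-step estimate and S is the robust moment covariance; under the null that all instruments are valid, J is asymptotically χ² with degrees of freedom equal to the number of over-identifying restrictions. The sketch below, with hypothetical data and a hypothetical helper `hansen_j`, contrasts a valid instrument set with one in which the second instrument also affects the outcome directly, the violation that Roberts and Whited's question is designed to catch.

```python
import numpy as np


def hansen_j(y, X, Z):
    """Two-step GMM estimate and Hansen's J statistic for y = X b + e with E[Z'e] = 0."""
    n = len(y)
    XZ = X.T @ Z

    def estimate(W):
        return np.linalg.solve(XZ @ W @ XZ.T, XZ @ W @ (Z.T @ y))

    b1 = estimate(np.linalg.inv(Z.T @ Z / n))          # step 1: 2SLS
    Ze = Z * (y - X @ b1)[:, None]
    W = np.linalg.inv(Ze.T @ Ze / n)                    # inverse robust moment covariance
    b2 = estimate(W)                                    # step 2: efficient GMM
    g = Z.T @ (y - X @ b2) / n                          # sample moments at the estimate
    return b2, n * g @ W @ g                            # J ~ chi2(L - K) under H0


rng = np.random.default_rng(8)
n = 5_000
z1, z2, common = rng.normal(size=(3, n))
x = 0.7 * z1 + 0.7 * z2 + common + rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
Z = np.column_stack([np.ones(n), z1, z2])              # L = 3 instruments, K = 2 parameters

y_valid = 1.0 + 2.0 * x + common + rng.normal(size=n)
y_invalid = y_valid + 0.5 * z2                          # z2 now enters the outcome directly

_, J_valid = hansen_j(y_valid, X, Z)
_, J_invalid = hansen_j(y_invalid, X, Z)
print(f"J valid = {J_valid:.2f}, J invalid = {J_invalid:.2f}; chi2(1) 5% critical value = 3.84")
```

Note that the test can only flag inconsistency among instruments; if every instrument is invalid in the same direction, J may stay small, which is why economic justification remains essential.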

Endogeneity in Finance and Accounting

Much of the empirical corporate governance literature lacks formal theory (Hermalin and Weisbach, 2003), and there is little consensus in this literature on the relation between governance and performance (Schultz et al., 2010). Yet the many corporate governance studies showing a relationship between governance and firm value have influenced policy, for example, the provisions on board composition and executive compensation included in the Sarbanes-Oxley Act (SOX).4 Are such regulations justified in terms of the costs imposed on firms that must adjust their governance structures to comply? Krishnan et al. (2008) estimate that $1.4 trillion has been spent on compliance with SOX, and the Financial Executives Institute (2007) reports an average firm compliance cost of over $3 million. These figures reflect the high cost of restructuring corporate governance mechanisms, which involves forming committees and hiring additional directors. Such costs may well be justified, but only if we have compelling causal evidence to support legislated governance restructuring.

Many studies show corporate governance structure to be a largely endogenous characteristic of the firm (Coles et al., 2012; Demsetz, 1983; Demsetz and Lehn, 1985; Hoang et al., 2014; Wintoki et al., 2012). It is therefore safe to say that the vast number of corporate governance studies showing a relation between governance and performance, however rigorously designed, are fraught with endogeneity, because the optimal level of corporate governance is endogenously determined at the firm level (Demsetz, 1983). If firms are at an internal equilibrium, a forced change in governance structure may be detrimental to performance, because it moves firms away from the structure that maximizes their value.

The Hausman-Wu test for endogeneity will be significant when the OLS estimates differ significantly from the instrumental variables estimates. In one such study, the authors find that the test is significant, indicating a significant difference between the OLS and IV coefficients, and then estimate the governance/performance relationship using differenced GMM and system GMM. Under both approaches, they no longer find a significant relationship between governance and performance.

Arguably, it is much better to model the ownership–performance relationship at the firm level; for example, to model the pay–performance relationship at the firm level and observe the pay–performance sensitivity that maximizes performance. Coles et al. (2012) model the relationship at the firm level by estimating the productivity parameters for managerial input and investment that would give rise to the observed levels of ownership and investment. Their results seem intuitive. They find that the productivity of managerial input is high in personal and business services and equipment industries such as education, software, networking, and computers, and low in metal mining and utilities. They also model Tobin's Q values and find that these are highly correlated with observed Q.

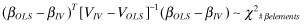

Graphic Example of the Detrimental Effects of Mandating Insider Ownership

If firms are at an internal equilibrium, then a forced change in governance structure will not improve firm performance. In fact, not only is restructuring costly to implement, but it may also be directly detrimental to firm performance. This is because the optimal level of ownership is endogenously determined at the firm level (Demsetz, 1983). Figure 1 graphically illustrates the cost to firms when forced to adopt a sub-optimal ownership structure; a similar illustration appears in Larcker and Rusticus (2007). In Panel A, each firm internally optimizes its insider ownership–performance relationship: for Firm A the optimum is 3%, for Firm B it is 7%, and for Firm C it is 5%.

Optimal Insider Ownership/Performance Relation

Assume now that a Senate committee commissions a study to examine the corporate governance and performance relationship. Based on this study, all firms are required (forced by regulation) to adopt the ‘optimal level’ of insider ownership. In Panel B, 5% ownership is considered the optimal ownership level. Firm A is forced to increase its insider ownership beyond its optimal 3%. Firm B is forced to reduce its insider ownership below its optimal 7% and so is also no longer at its optimal level. Only Firm C is at its optimal structure post-regulation. Panel C shows the loss in performance to Firms A and B at 5% ownership. In contrast, an instrumental variables estimation approach tends to find no relationship, as illustrated in Panel D of Figure 1. Because the value-maximizing governance choices of one firm may be very different from those of another (Demsetz, 1983), a forced change in governance structure will not improve the performance of firms at an internal equilibrium and may even be detrimental to it. Policy prescriptions based on conflicting research will inevitably prove suboptimal in many cases. Developing better theory and more compelling evidence to fully describe the relationship between insider ownership and performance is accordingly part of the solution, and this is where natural experiments can be valuable.
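The logic of Figure 1 can be reproduced with a few lines of arithmetic. The quadratic performance function, its peak, and its curvature below are purely hypothetical; only the optimal ownership levels (3%, 7%, and 5%) and the 5% mandate come from the example above. The sketch also shows, in the spirit of Demsetz (1983), why a cross-section of firms observed at their own optima can reveal no ownership–performance relation even though the mandate harms two of the three firms.

```python
import numpy as np

OPTIMAL = {"A": 3.0, "B": 7.0, "C": 5.0}   # optimal insider ownership (%) from the example
PEAK = 10.0                                 # hypothetical maximum performance (same for all firms)
CURVATURE = 0.5                             # hypothetical loss per squared percentage point


def performance(ownership, optimum):
    """Hypothetical performance: peaks at the firm's own optimum, falls off quadratically."""
    return PEAK - CURVATURE * (ownership - optimum) ** 2


MANDATE = 5.0
losses = {firm: performance(opt, opt) - performance(MANDATE, opt) for firm, opt in OPTIMAL.items()}
print(losses)   # {'A': 2.0, 'B': 2.0, 'C': 0.0}

# In equilibrium every firm sits at its own optimum, so the observed cross-section is flat
own = np.array(list(OPTIMAL.values()))
perf = np.array([performance(o, o) for o in own])
slope = np.polyfit(own, perf, 1)[0]
print(f"cross-sectional slope of performance on ownership: {slope:.6f}")
```

The regulator sees no relation in the data, yet imposing any single ownership level destroys value for every firm whose optimum differs from it.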

Natural Experiments: State-of-the-Art Solutions to Endogeneity

A natural experiment can be one of two things: (1) a randomized controlled trial (RCT) set up by the researcher in a natural setting5 to induce controlled variance; or (2) a naturally occurring state (event) resulting from a social or political situation and thus not intentionally set up by the researcher. This second type is often referred to as a quasi-experiment (Meyer, 1995). Proponents of experimental research argue that such designs avoid a criticism often levelled at econometric studies, namely, that they are based on questionable economic theories.

A randomized trial in a natural setting is set up and controlled by the researcher. Economists regularly use randomized trials to study a multitude of issues. These studies are designed to measure outcomes based on observations of a treatment group and a control (or comparison) group that have been randomly assigned. If the trial is implemented correctly, the researcher creates two (or more) groups that are on average probabilistically similar, so that any observed differences are due to the treatment and not to other differences between the groups (Shadish et al., 2002). Angrist and Pischke (2010) detail some influential randomized experiments in economics, for example, the ‘Moving to Opportunity’ program,6 or the Progresa7 program in Mexico.

The advantage of randomized trials is that, when the research design is robust, they can provide more convincing evidence. For example, randomized clinical trials provide Grade I evidence in medicine (Leigh, 2010). Leigh (2010) argues that policy makers in Australia should make more use of randomized trials to better target policies and improve outcomes. He gives several questions that would lend themselves to this type of study: for example, are job training or wage subsidies better for the long-term youth unemployed? And would more generous post-release payments reduce criminal relapse?

Not everyone agrees that randomized controlled experiments necessarily provide better evidence than econometric studies. Deaton (2010), for example, in the context of economic development and foreign aid, examines the validity of results from RCTs in detail. One inherent problem is that RCTs estimate the population mean of the treatment effects. Policies based on population means can be misconceived if the average is skewed by a small number of very positive outcomes when in fact most of the population is negatively affected. Deaton further addresses problems of external validity, that is, generalizing from largely local results, and argues that RCTs should focus not just on program evaluation but also on developing theory. Further, not all policy questions lend themselves to randomized experiments, as ethical issues are paramount. Apart from ethical issues, randomized trials with human subjects come with a multitude of practical difficulties: randomizing group assignments and gaining access to subjects can be virtually impossible, and such trials are expensive and time-consuming to implement.
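A toy calculation illustrates Deaton's concern about averages. The individual treatment effects below are invented for illustration: a small group benefits greatly while most units are mildly harmed, yet the average treatment effect, which is what a well-run RCT estimates, is positive.

```python
import numpy as np

# Hypothetical individual treatment effects: 90 units lose 1, 10 units gain 20
effects = np.array([-1.0] * 90 + [20.0] * 10)

ate = effects.mean()                  # average treatment effect
share_harmed = np.mean(effects < 0)   # fraction of units made worse off
median_effect = np.median(effects)

print(f"ATE = {ate:.2f}, share harmed = {share_harmed:.0%}, median effect = {median_effect:.1f}")
```

A policy adopted because the average effect is positive would, in this example, make nine in ten participants worse off; an RCT by itself reveals only the mean, not this distribution.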

In questions of finance, randomized experiments are difficult to implement. For example, it is neither feasible nor ethical to conduct randomized trials of bank failures, corporate governance changes, or tax changes. Thus there are relatively few examples of randomized trials in a natural setting in the finance literature. One notable exception is Kaplan et al. (2013), who examine the impact of short selling in stock markets. Specifically, they investigate whether short selling makes markets more efficient by improving price discovery, or if short selling distorts markets and adversely affects prices. The authors, with the cooperation of a large money manager, randomly made available or withheld stocks from the lending market. While such experiments provide novel results they require the cooperation of practitioners and investors and such cooperation may be difficult to attain.

Arguably, the next tier of evidence involves natural experiments in naturally occurring states or events (Leigh, 2010). This type of experiment provides more opportunities for finance and accounting researchers. A naturally occurring state often comes about from a social or political situation (Dunning, 2007) such as a government policy change. Natural experiments are not ‘true’ experiments and are sometimes referred to as ‘quasi’ experiments (Meyer, 1995). This is because the so-called naturally occurring state is not intentionally set up by the researcher and so the treatment group is not randomly assigned. Such experiments are more like observational studies where the researcher cannot manipulate the environment, although the researcher must choose the comparison or control group. In these types of research design, control groups and treatment groups may differ in systematic ways other than in regard to the treatment. The researcher therefore has to be concerned about ruling out such effects.
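One common way to deal with systematic differences between treatment and control groups in such designs, though not one the text prescribes, is a difference-in-differences comparison: the change in the treated group minus the change in the control group. This nets out time-invariant level differences and common shocks, provided the two groups would have followed parallel trends absent the event. The sketch below uses hypothetical data in which treated firms start from a higher level.

```python
import numpy as np

rng = np.random.default_rng(7)
n_firms = 20_000
true_effect = 0.3            # hypothetical effect of the event on treated firms
common_shock = 0.2           # hypothetical shock hitting all firms after the event

treated = rng.random(n_firms) < 0.5
firm_level = rng.normal(size=n_firms) + 0.5 * treated   # treated firms differ systematically
pre = firm_level + rng.normal(scale=0.5, size=n_firms)
post = firm_level + common_shock + true_effect * treated + rng.normal(scale=0.5, size=n_firms)

# Naive post-event comparison picks up the pre-existing level difference
naive = post[treated].mean() - post[~treated].mean()

# Difference-in-differences: change for treated firms minus change for control firms
change = post - pre
did = change[treated].mean() - change[~treated].mean()

print(f"naive difference = {naive:.3f}, difference-in-differences = {did:.3f}, truth = {true_effect}")
```

The naive comparison attributes the treated firms' higher starting level to the event; differencing over time removes it, but only under the parallel-trends assumption, which is exactly what the researcher must argue when choosing the control group.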

Some examples of naturally occurring events of interest to finance and accounting researchers include legislated corporate governance changes (e.g., SOX) or tax changes across jurisdictions that can justifiably be seen as exogenous sources of variation in the explanatory variables of interest. Meyer (1995) gives the example of the Vietnam-era draft, in which draft eligibility depended on date of birth, used as an exogenous source of variation to study the effects of military service on earnings. Research using natural experiments is growing in economics (Dunning, 2007), although it is not very common in finance and accounting.

Finance and accounting researchers may see an obvious roadblock to designing research around a natural event: how often will issues lend themselves to the kind of actual randomization that Heider and Ljungqvist (2014), for example, exploit? Researchers, though, may not be aware of the number of naturally occurring events that could be used in natural experimental research, and many more natural experiments may be available than they realize. Studies using natural experiments are generally motivated by existing evidence and thus address issues fundamental to a discipline. Table 3 provides a sample of finance and accounting research that uses natural experiments; all address fundamental issues in the disciplines. Using such events as exogenous factors in a model helps researchers provide a constructive link between the real world and econometric methodology (Angrist and Pischke, 2010).

| Study | Focus | Source of natural experiment |
|---|---|---|
| Haselmann, Pistor, and Vig (2010) | Creditor rights and the size of creditor markets | Reforms of creditor rights across 12 transition economies |
| Kisgen and Strahan (2010) | Credit ratings and firms' cost of capital | Dominion Bond Rating Service becoming a recognized rating agency in 2003 |
| Iyer and Peydro (2011) | Financial contagion due to interbank linkages | Large bank failure outside of financial crisis |
| Nguyen and Nielsen (2010) | Corporate governance and firm value | Sudden death of CEO |
| Puri, Rocholl, and Steffen (2011) | Credit crises and supply of credit to retail customers | German retail banks pre- and post-GFC |
| Heider and Ljungqvist (2014) | Tax changes on capital structure | 25 US states: 67 corporate tax decreases and 35 corporate tax increases 1990–2011 |
| Kaplan, Moskowitz, and Sensoy (2013) | Effects of stock lending on security prices | Randomized stock lending experiment |
| Kelly and Ljungqvist (2012) | Information asymmetry and asset prices | Brokerage closures: 4,429 coverage terminations due to 43 US brokerage firms closing research departments between Q1 2000 and Q1 2008, and 356 coverage terminations due to the September 11 terrorist attacks |
| Gropp, Gruendl, and Guettler (2014) | Public guarantees on bank risk-taking | EU court ruling to remove government guarantee for savings banks |
| Gormley, Matsa, and Milbourn (2013) | CEO compensation and corporate left-tail risk | Workplace exposures to carcinogens and newly classified carcinogens |

- Note: This table is not exhaustive and is intended to provide the reader with examples of the types of events used as natural experiments in recent finance and accounting research.

Quasi-natural experiments have some advantages over randomized trials. First, they minimize the ethical dilemmas involved in using human subjects. Second, they minimize problems that stem from the researcher's interaction with participants when setting up a randomized trial, for example, paternalism, inequity, and deception; even when unintentional, these are difficult to avoid completely. Third, natural experiments remove the problem of gaining access to practitioners, which can be particularly difficult in financial markets. More generally, they are useful when random assignment is neither possible nor ethical.

The inferences drawn from natural experiments are often criticized as being limited to a particular time and place. However, this is not necessarily the case. Angrist and Pischke (2010) cite several examples where natural experiments have been used to address broad issues, including some that affect the entire world or the march of history. For example, Nunn (2008) uses a wide range of historical evidence, including sailing distances on common trade routes, to estimate the long-term growth effects of the African slave trade. Deschênes and Greenstone (2007) use random year-to-year fluctuations in temperature to estimate the effects of climate change on energy use and mortality. In a study of the effects of foreign aid on growth, Rajan and Subramanian (2008) construct instruments for foreign aid from the historical origins of donor–recipient relations. Natural experiments have also provided the basis for significant policy choices for centuries; Dunning (2007) gives the example of cholera outbreaks in 19th-century London. These examples speak eloquently for the wide applicability of a design-based approach built on natural experiments.

The advantage of a well-chosen natural experiment is that it provides an exogenous event, or at least one the researcher can convince her audience is exogenous. Studies using such events make a strong case for a causal interpretation of their results. Meyer (1995) argues that the main contribution of such research designs lies in providing an understanding of the source of the causal relationship. One disadvantage is the time spent collecting data, particularly as there are often many events. Another problem is validating the ‘as if’ random assignment of units to the treatment and control (comparison) groups (Dunning, 2007).
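One way to probe the ‘as if’ random assignment is to check whether the treatment and control groups look alike on pre-event characteristics. The sketch below is a minimal illustration, not a procedure taken from the studies discussed here: it computes the standardized difference in means for each covariate, and the covariate names (`roa`, `log_assets`) and data are hypothetical.

```python
import math
from statistics import mean, variance


def standardized_difference(treated, control):
    """Difference in group means scaled by the pooled standard deviation.

    Needs at least two observations per group (sample variance).
    """
    pooled_sd = math.sqrt((variance(treated) + variance(control)) / 2)
    if pooled_sd == 0:
        raise ValueError("covariate has no variation in either group")
    return (mean(treated) - mean(control)) / pooled_sd


def balance_table(treated_rows, control_rows, covariates):
    """Standardized difference for each pre-event covariate.

    Rows are dicts mapping covariate names to values (a hypothetical layout).
    """
    return {
        name: standardized_difference(
            [row[name] for row in treated_rows],
            [row[name] for row in control_rows],
        )
        for name in covariates
    }


if __name__ == "__main__":
    # Hypothetical pre-event firm characteristics for the two groups.
    treated = [{"roa": 0.05, "log_assets": 6.1}, {"roa": 0.07, "log_assets": 6.4},
               {"roa": 0.06, "log_assets": 5.9}]
    control = [{"roa": 0.06, "log_assets": 6.0}, {"roa": 0.05, "log_assets": 6.3},
               {"roa": 0.07, "log_assets": 6.2}]
    for name, d in balance_table(treated, control, ["roa", "log_assets"]).items():
        print(f"{name}: {d:+.2f}")
```

A frequently used rule of thumb treats absolute standardized differences above roughly 0.1 as a sign of imbalance. It is a convention rather than a formal test, and balance on observable characteristics says nothing about unobservable ones, which is why the argument for exogeneity must still rest on the institutional setting.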

Designing Research Around a Natural Experiment

To lay the foundation, or road map, for researchers wanting to utilize a natural experiment, we now detail two concrete applications. Both studies, which we offer as exemplars, make use of a natural experiment that provides an exogenous source of variation in the main explanatory variable. The first study is that of Heider and Ljungqvist (2014). These authors exploit jurisdictional variation in tax changes across the United States to estimate the tax sensitivity of leverage from exogenous state tax changes. As the authors note, in an ideal world the researcher could set up a truly randomized experiment. In this case that would involve ‘randomly assigning different tax rates to firms and then comparing their debt policies to see if higher tax rates lead to higher leverage’. Random assignment would ensure that ‘observed differences in leverage could not be caused by unobserved firm-level heterogeneity’ (Heider and Ljungqvist, 2014, p. 2). However, we do not live in an ideal world, and such truly randomized experiments with a treatment and a control group are not possible. The next best method is to seek tax changes that are plausibly exogenous. The second study we offer as an exemplar of utilizing a naturally occurring event is that of Nguyen and Nielsen (2010), who use the sudden death of independent directors as an exogenous event affecting firm value. Their focus is on the contribution of independent directors to shareholder value.

To date, research on these two fundamental issues has been plagued by problems of endogeneity, including a lack of solid theory. For example, do high-profit firms borrow more because debt offers valuable tax shields or because their marginal cost of debt is lower? The perplexing issue of endogeneity in the corporate governance literature has been discussed above.

Road Map Illustrated with Two Recent Studies

- Lay a solid foundation for the research design/methodology. As for any research design, first outline the current thinking or theory related to the research question and, in this case, establish the existence and possible sources of endogeneity. The goal is to substantiate how a natural experiment could overcome such problems. In Heider and Ljungqvist (2014), we know from previous literature that taxes matter for capital structure, but to what degree? The sources of endogeneity noted by the authors are that high-profit firms are in higher tax brackets and may borrow more to take advantage of tax shields, or that high-profit firms may borrow more because their default risk is lower. ‘A simple comparison cannot tell if high-profit firms borrow more because debt offers valuable tax shields or because their marginal cost of debt is lower. Unobserved differences across firms would impart a positive bias in the estimated tax benefit’ (p. 3).

- Identify the natural event(s). This step determines the treatment group, that is, the group affected by the event. Here it is important to use as many data points as are available. Having multiple treatment groups, affected by the event in different time periods, in different locales, or with different intensities, strengthens the external validity of the results. Heider and Ljungqvist (2014) exploit the natural event of staggered changes in US state corporate income tax rates. They use state rather than federal tax changes because state changes occur more frequently and at different times. Their data include 38 corporate tax increases in 25 states affecting 1,824 firms over 1990–2011, and 67 corporate tax decreases in 29 states affecting 7,021 firms. Nguyen and Nielsen (2010) identify sudden deaths of independent directors of companies listed on the Amex, NASDAQ, and NYSE from 1994 to 2007. Of 772 director deaths, 229 satisfy their strict definition of sudden death, and of these 229, 108 involve independent directors.

- Verify that the natural event is plausibly exogenous. The researcher is obliged to provide the reader with clearly identified and understood exogenous events and thereby rule out other explanations. This part of the research is critical to the internal validity of the results. As Heider and Ljungqvist (2014) note, state tax changes occur for a reason, and this could make them endogenous (simultaneously determined) with capital structure. For instance, tax changes could result from economic downturns, and firms could also borrow more in economic downturns. The authors therefore control for various confounds, such as unobserved variation in local business conditions or investment opportunities, union power, and states' political leanings.

- Comparison of the treatment group pre and post event. As a preliminary analysis, the research design may include a simple comparison of the treatment group before and after the event. By itself this is unlikely to support strong inferences, because it is difficult to establish that the treatment group would have remained the same over time in the absence of the event. If this is the extent of the research design, the researcher needs to provide strong evidence for that counterfactual (Meyer, 1995). This is essentially the approach taken by Nguyen and Nielsen (2010), who use an event study to examine cumulative abnormal returns (CARs) before and after the sudden death of an independent director.

- Determine the control or comparison group. The control or comparison group should not be affected by the exogenous change in the explanatory variable. The study is strengthened by identifying a comparison group that is similar to the treatment group in relevant dimensions and differs from it only randomly in the variables under study. Heider and Ljungqvist (2014) use firms in neighbouring states not affected by the tax changes; for example, firms in North Carolina affected by a tax rise are compared to firms in the same industry in neighbouring South Carolina. Nguyen and Nielsen (2010) compare stock price reactions across director types, that is, inside and gray directors as well as independent directors.

- Comparison of the treatment and control groups. These groups may be compared using a number of approaches, for example, a difference-in-differences technique or an event study. Heider and Ljungqvist (2014) corroborate the exogenous nature of the tax changes by looking at what happens to firms in the same industry in neighbouring states where there are no tax changes. Nguyen and Nielsen (2010) use an event study to examine stock returns in the window from day −5 to day +5 around the sudden death of an independent director. They report pre- and post-event returns for the overall sample and separately for independent, gray, and inside directors.

- Control for standard variables found in the literature. Including control variables is a standard way to allow for observable differences between the treatment and control groups. Heider and Ljungqvist (2014) control for standard variables in the debt literature: profitability (return on assets), firm size (total assets), tangibility (the ratio of fixed to total assets), and investment opportunities (market-to-book). In our second study, Nguyen and Nielsen (2010) use a multivariate approach to control for director and firm characteristics, including director age, firm size, firm age, market-to-book, and board size. The study also applies a fixed effects approach to isolate and control for independent director ability and skill.

- Determine whether the magnitude of the effects is economically meaningful. Conclusions about economic magnitude are only as strong as the evidence that confounding influences have been ruled out. Heider and Ljungqvist (2014) include industry-year effects to eliminate time-varying industry shocks. Robustness tests also show that the results hold within firms, ruling out that they are driven by time-invariant firm heterogeneity. Further tests show that the results are robust to the inclusion of state fixed effects and to changes in local economic conditions, local labour market conditions, and a state's political leanings. Nguyen and Nielsen (2010) investigate alternative specifications, such as excluding firms that may have been affected by confounding news at the time of the director's death and excluding cases where the director's age may have made death more likely.

- Reversal of the initial event. Findings are strengthened by investigating the effects of a reversal in the treatment (Meyer, 1995). For example, Heider and Ljungqvist (2014) look at reversals of tax increases and find that leverage remains permanently higher. Not all events lend themselves to reversal. The Nguyen and Nielsen (2010) study does not investigate the situation where independent board members are later replaced with inside directors.
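The gap between the preliminary pre/post comparison and a comparison against a control group can be sketched with group means. This is a minimal 2x2 difference-in-differences illustration under stated assumptions: the leverage figures are made up, and the studies above estimate regressions with controls and fixed effects rather than raw differences in means.

```python
from statistics import mean


def pre_post_change(pre, post):
    """Naive before/after change in the mean outcome for one group.

    Applied to the treatment group alone, this attributes any common
    time trend (e.g. an economy-wide shift in leverage) to the event.
    """
    return mean(post) - mean(pre)


def diff_in_diff(treat_pre, treat_post, control_pre, control_post):
    """2x2 difference-in-differences estimate.

    Subtracting the control group's change nets out shocks common to both
    groups, assuming the groups would otherwise have followed parallel trends.
    """
    return (pre_post_change(treat_pre, treat_post)
            - pre_post_change(control_pre, control_post))


# Hypothetical leverage ratios: treated firms in a state that raised its tax
# rate, control firms in the same industry across the state border.
treat_pre, treat_post = [0.20, 0.22, 0.24], [0.25, 0.27, 0.29]
control_pre, control_post = [0.21, 0.23], [0.23, 0.25]

naive = pre_post_change(treat_pre, treat_post)  # about 0.05
did = diff_in_diff(treat_pre, treat_post, control_pre, control_post)  # about 0.03
print(f"pre/post change: {naive:.3f}, difference-in-differences: {did:.3f}")
```

In this made-up example, two of the five percentage points of the naive change would have occurred anyway, as the control group shows; the remaining three are attributable to the event only if the parallel-trends assumption holds.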

The true test of research designs using natural experiments as sources of exogenous variation rests partly on the significance of the results. Heider and Ljungqvist (2014) find a positive relation between tax increases and leverage: firms increase leverage by 114 basis points on average in response to a rise in their home state's taxes. The effect is asymmetric, as firms do not decrease their leverage in response to tax cuts. The paper provides compelling evidence that taxes are a first-order determinant of capital structure choices. Importantly, it contributes to capital structure theory by providing strong evidence that the tax sensitivity of leverage is asymmetric, contrary to prevailing belief.
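An estimate such as the 114 basis point effect is typically the coefficient on a treatment indicator in a difference-in-differences regression. The specification below is a generic sketch for illustration only; it is not the exact model estimated by Heider and Ljungqvist (2014), and the notation is ours.

$$
\text{Leverage}_{it} = \alpha_i + \gamma_{jt} + \beta\, D_{st} + X_{it}'\delta + \varepsilon_{it}
$$

Here $i$ indexes firms, $t$ years, $j$ industries, and $s$ the firm's home state; $D_{st}$ equals 1 in the years after state $s$ raises its corporate tax rate and 0 otherwise; $\alpha_i$ are firm fixed effects, $\gamma_{jt}$ are industry-year effects absorbing time-varying industry shocks, and $X_{it}$ collects controls such as profitability, size, tangibility, and market-to-book. The coefficient $\beta$ measures the change in leverage of treated firms relative to comparison firms; with leverage measured as a ratio, an effect of 114 basis points corresponds to $\beta = 0.0114$.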

Nguyen and Nielsen (2010) find that stock prices fall by 0.85% on the sudden death of an independent director, which translates into an average decrease in firm value of $35 million. More specifically, the study shows that the value of an independent director is affected by the presence of powerful CEOs and by the number of independent directors on the board. It also shows that independent board members add value when they perform particular board functions, such as serving as chairman or as a member of the audit committee. Previous studies on corporate governance and firm value have failed to reach convincing and meaningful conclusions because corporate governance structure is endogenously determined. This study deepens our understanding of the effects of corporate governance on firm value.
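The event-study logic behind results like these can be sketched as follows. We assume a standard market model estimated over a pre-event window, which may differ from the benchmark Nguyen and Nielsen (2010) actually use; the function names and return series are hypothetical.

```python
from statistics import mean


def market_model(stock, market):
    """OLS estimates (alpha, beta) of stock returns on market returns
    over a pre-event estimation window."""
    m_bar, s_bar = mean(market), mean(stock)
    cov = sum((m - m_bar) * (s - s_bar) for m, s in zip(market, stock))
    var = sum((m - m_bar) ** 2 for m in market)
    beta = cov / var
    return s_bar - beta * m_bar, beta


def cumulative_abnormal_return(est_stock, est_market, evt_stock, evt_market):
    """Sum of abnormal returns (actual minus market-model prediction)
    over the event window, e.g. the trading days from -5 to +5."""
    alpha, beta = market_model(est_stock, est_market)
    return sum(r - (alpha + beta * m) for r, m in zip(evt_stock, evt_market))


def mean_car(events):
    """Average CAR across events; each event is a tuple
    (est_stock, est_market, evt_stock, evt_market)."""
    return mean(cumulative_abnormal_return(*e) for e in events)


# Hypothetical data: the stock moves twice as much as the market.
est_market = [0.01, 0.02, 0.03, 0.00]
est_stock = [0.001 + 2 * m for m in est_market]
# A two-day event window, kept short for brevity.
car = cumulative_abnormal_return(est_stock, est_market, [0.021, -0.009], [0.01, 0.0])
print(f"CAR: {car:.4f}")  # about -0.01
```

Testing whether the mean CAR differs from zero would additionally use the cross-sectional dispersion of event-level CARs; that step is omitted from this sketch.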

Limitations of Natural (Quasi) Experiments

In any research design, researchers need to be aware of issues that undermine the causal inferences. A natural experiment that is plausibly exogenous may diminish problems of omitted variable bias and simultaneity. However, in utilizing natural experiments researchers face other threats to internal and external validity.

External validity

Evidence provided by natural experiments is most convincing with more complex research designs, for example, designs using multiple exogenous changes in corporate governance. Using one event leaves the results open to the criticism that they are local, derived from a particular time and place. The response is to look for more evidence. It is possible, for example, to exploit national or jurisdictional borders, since a policy shift on one side of a border may not affect groups on the other side. Jurisdictional boundaries provide the most convenient basis for natural experiments (Dunning, 2007). Using multiple treatment and comparison groups allows further checks on hypotheses and strengthens the validity of the inferences (Meyer, 1995).
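When several events are available, each with its own treatment group and a comparison group across a jurisdictional border, one simple way to combine them is to estimate a difference-in-differences for each event and then average. The sketch below is illustrative only: it assumes balanced groups and unique event names, and weights each event by its number of treated post-event observations. Keeping the per-event estimates lets the researcher check whether the effect is consistent across times and places.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class EventCohort:
    """One hypothetical natural event: outcomes for the treated group and for
    its comparison group (e.g. same-industry firms across a state border),
    before and after the event."""
    name: str
    treat_pre: list[float]
    treat_post: list[float]
    control_pre: list[float]
    control_post: list[float]

    def did(self) -> float:
        """2x2 difference-in-differences for this event alone."""
        return ((mean(self.treat_post) - mean(self.treat_pre))
                - (mean(self.control_post) - mean(self.control_pre)))


def pooled_did(cohorts: list[EventCohort]) -> tuple[float, dict[str, float]]:
    """Weighted average of event-level estimates (weights = number of treated
    post-event observations), plus the per-event estimates for inspection."""
    per_event = {c.name: c.did() for c in cohorts}
    weights = {c.name: len(c.treat_post) for c in cohorts}
    total = sum(weights.values())
    pooled = sum(per_event[name] * weights[name] / total for name in per_event)
    return pooled, per_event


# Hypothetical leverage data for two staggered state tax increases.
events = [
    EventCohort("state A", [0.20, 0.22], [0.25, 0.26], [0.21, 0.21], [0.22, 0.23]),
    EventCohort("state B", [0.30], [0.33], [0.28], [0.29]),
]
pooled, per_event = pooled_did(events)
print(f"pooled: {pooled:.4f}", {k: round(v, 4) for k, v in per_event.items()})
```

A large spread in the per-event estimates would suggest that a single pooled number hides heterogeneity across jurisdictions or periods.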

Internal validity

The researcher must provide a convincing argument that the source of the natural experiment is truly exogenous, for example, that policy changes are not in response to changes in the variables under study. Also, the researcher must perform exhaustive robustness tests to convince the reader of the validity of the results.

Conclusion

This paper has discussed endogeneity in empirical finance and accounting research: the problem, its scope, and current textbook solutions. We note that endogeneity is increasingly seen as a concern and that it is insufficient for researchers to label some variables endogenous and others exogenous without providing strong ‘institutional or empirical support for these identifying assumptions’ (Angrist and Pischke, 2010, p. 17). We provide a road map for research design using a state-of-the-art solution, a natural experiment, which exploits naturally occurring exogenous events. Researchers can utilize such experiments to mitigate the problem of endogeneity and, in doing so, build better theory to justify their models.

However, there are two caveats. First, not all regulatory or policy changes are sources of exogenous variation; calling a policy change a natural experiment does not make it an exogenous source of variation (Meyer, 1995). Second, researchers looking for and using natural experimental events should retain a clear focus on research design to improve empirical work, and should avoid favouring good answers over good questions. There is a risk that researchers will seek out good experiments regardless of the importance of the questions they ask. On a positive note, well-designed studies built around natural experiments will advance theory, and such studies will therefore carry the most weight when evidence is compiled, while other evidence, which we know is subject to endogeneity, is likely to be treated as more provisional (Angrist and Pischke, 2010).