DeepPlayer: An open-source SignalPlant plugin for deep learning inference

Funding information: Czech Academy of Sciences, Grant/Award Numbers: RVO 68081731; Strategy AV21

Abstract

Background and Objective

Machine learning has become a powerful tool in several computational domains. Deep learning, its most rapidly advancing branch, has already surpassed several algorithms designed by human experts, including in the field of biomedical signal processing. However, although many experts produce deep learning models, no signal processing software platform allows convenient use of pre-trained deep learning models and their evaluation on any inspected signal. This gap may also hinder understanding, interpretation, and explanation of results. For these reasons, we designed DeepPlayer, a plugin for the free signal processing software SignalPlant. The plugin allows loading deep learning models saved in the Open Neural Network Exchange (ONNX) file format and evaluating them on any given signal.

Methods

The DeepPlayer plugin and its graphical user interface were developed in the C# programming language on the .NET framework. We used the inference library OnnxRuntime, which supports graphics card acceleration. Inference is executed in asynchronous tasks to provide a live preview and evaluation of the signals. Model outputs can be exported back to SignalPlant for further processing, such as peak detection or thresholding.

Results

We developed the DeepPlayer plugin to evaluate deep learning models in SignalPlant. The plugin follows SignalPlant's interactive way of working with signals, including live preview and easy selection of associated signals. The plugin can load classification or regression models and offers standard pre-processing and post-processing methods. We prepared several deep learning models to test the plugin. Additionally, we provide a tutorial training script that outputs an ONNX model with correctly set metadata. These models, the script, and the source code of the DeepPlayer plugin are publicly accessible via GitHub and the Google Colab service.

Conclusion

The DeepPlayer plugin allows running deep learning models easily and interactively. Therefore, experts and non-AI experts alike can explore and apply deep learning models for (biomedical) signal processing. Its ease of use and interactivity might also contribute to a better understanding and acceptance of AI methods in biomedicine.

Abbreviations

- ABP: arterial blood pressure
- AFIB: atrial fibrillation
- AI: artificial intelligence
- AVB: atrioventricular block
- BP: blood pressure
- BPM: beats per minute
- CPU: central processing unit
- CUDA: compute unified device architecture
- CUDNN: deep neural network library for CUDA
- ECG: electrocardiogram
- EEG: electroencephalogram
- GPU: graphics processing unit
- LSTM: long short-term memory
- MKL-DNN: math kernel library for deep neural networks
- ONNX: open neural network exchange
- PPV: positive predictive values
- QRS: ECG graphoelement consisting of Q, R, and S waves
- RMSE: root mean square error
- ReLU: rectified linear activation function
- SR: sinus rhythm
- UNet: u-shaped encoder-decoder network architecture
- XAI: explainable or interpretable artificial intelligence

1 INTRODUCTION

Signals, including biomedical signals such as ECG or EEG, are commonly processed by combining various transformations and detection approaches into a final model or program. However, rapid developments in machine learning and, more specifically, deep learning have extended signal processing methods to an unprecedented scale. This is demonstrated by submissions to the Computing in Cardiology/PhysioNet challenge, where 2015 was the last year in which a non-machine-learning algorithm won.1 Furthermore, well-designed deep learning approaches usually occupy the first rank in the competition,2 and they are a preferred option since they do not need hand-crafted features.

Deep learning models are often prepared using free frameworks such as PyTorch,3 TensorFlow,4 Keras,5 and others. There is also a growing body of work focusing on practical implementation aspects, for example, using reinforcement learning to optimize graphics processing unit (GPU) allocation in deep learning research6 or visualization of model structures via tools such as NN-SVG,7 NETRON,8 or TensorBoard in the TensorFlow framework.4 However, the exchange of models, or just the application of models prepared by a third party, is not straightforward in practice. Even if the new user knows the base programming language, these frameworks are not equipped for agile signal inspection or processing. If the model user wants to know “how this model processes this piece of new, independent data,” the pathway may be quite convoluted. The user has to write scripts to import and pre-process new signals, convert signals to tensors of the proper dimensions, check compliance with model libraries, extract and process outputs, and interpret the results from exported, static images. This can hinder explainable or interpretable artificial intelligence (XAI), where one wants to understand how or why a model generated its outputs. Thus, particularly for XAI in biomedical signal processing, an easy-to-use interactive environment, as exists in other domains,9 would be extremely beneficial10 (Figure 1).

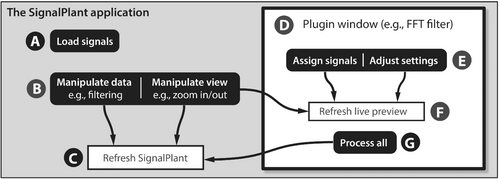

SignalPlant is a free tool to inspect, post-process, and annotate multimodal (biomedical) signals and is usually used for electrocardiography (ECG),11-13 electroencephalography (EEG),14-16 pressure signals,17 breathing signals,18 and others. Most SignalPlant plugins are interactive. For example, to apply a filter, the user opens the filter user interface, sets the filter properties, links the source signal, and sees the output preview in real time. The filter output preview updates whenever the user changes the displayed area of the source signal or the filter properties. The user thus quickly sees what happens when a signal is processed with specific filter settings and how the filter settings affect the output.

Since deep learning models have become an essential part of biomedical signal processing, we aim to make working with them in SignalPlant as easy as applying a low-pass filter. This paper presents DeepPlayer, a publicly available plugin for the free SignalPlant software that allows agile use of deep learning models with minimal burden on the user.

2 METHOD

DeepPlayer was designed as a plugin for SignalPlant. Therefore, we used the same framework (.NET) and the same programming language (C#). We used the Microsoft.ML.OnnxRuntime libraries featuring Compute Unified Device Architecture (CUDA) capabilities for model inference.

2.1 Loading deep learning models

Deep learning models may be imported in the Open Neural Network Exchange (ONNX) file format, support for which is built into the OnnxRuntime library used (ver. 4.0.30319). However, models vary in input/output dimensions, required sampling frequency, task (classification or regression), and kind of output (single class vs. multiclass). Therefore, we use model metadata to access basic information about the model. Table 1 presents the metadata (key-value pairs) recognized by the DeepPlayer plugin. While “core” metadata are automatically used to set tensors to the proper shape, the optional custom metadata simplify use of the model. For example, the sampling frequency from the metadata is compared to the sampling frequency of the loaded signals, and the user is notified if they differ. Similarly, the names of output signals may be defined, which is extremely useful when the model has many output signals (as in the multi-label classification problem of the PhysioNet/CinC Challenge 2021). The inference window is automatically set to the size of the largest item in the “InputDimension” array. We provide example code that produces a deep learning model including metadata in the manuscript supplement (also online at https://colab.research.google.com/drive/1q-05mb_f8BvKxN4UrlbHoWwXLVIqIguD).

| Key | Metadata type | Explanation |
|---|---|---|
| InputDimension | Core | Dimension of input vector (e.g., [−1, 1, 15000]) |
| OutputDimension | Core | Dimension of output vector (e.g., [−1, 4]) |
| fs | Custom | Preferred sampling frequency in Hz (e.g., 500) |
| Output_names | Custom | Names for outputs (e.g., ["AFIB", "SR", "AVB"]) |

- Note: Core metadata are mandatory, and they are automatically included in ONNX models. Custom metadata are defined by the model author. A value of −1 means “any.”
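
The handling of the metadata in Table 1 can be illustrated with a minimal Python sketch. It is not the plugin's C# code: the function names `parse_metadata` and `sampling_mismatch` are hypothetical, and the sketch assumes that array-valued metadata are stored as JSON-like text (e.g., "[-1, 1, 15000]"), which the text above does not specify.

```python
import json


def parse_metadata(props):
    """Parse metadata given as a dict of string key-value pairs (see Table 1).

    Assumption: array values are stored as JSON-like text.
    """
    input_dim = json.loads(props["InputDimension"])    # core, mandatory
    output_dim = json.loads(props["OutputDimension"])  # core, mandatory
    # Custom metadata are optional, so missing keys map to None.
    fs = float(props["fs"]) if "fs" in props else None
    names = json.loads(props["Output_names"]) if "Output_names" in props else None
    return {
        "input_dim": input_dim,
        "output_dim": output_dim,
        "fs": fs,
        "output_names": names,
        # The inference window is the largest item of InputDimension (in samples).
        "window": max(input_dim),
    }


def sampling_mismatch(model_fs, signal_fs):
    """Return True when the model declares a sampling frequency that differs
    from the one of the loaded signal (the case in which the user is notified)."""
    return model_fs is not None and model_fs != signal_fs
```

For the example values in Table 1, `parse_metadata` yields an inference window of 15000 samples, and `sampling_mismatch(500.0, 250.0)` indicates that the user should be notified.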

2.2 Plugin structure allowing interactive preview

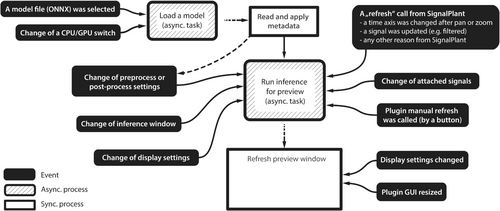

Because the plugin should remain responsive and offer an interactive preview, we used asynchronous tasks to compute the output preview in the background. Figure 2 shows a flowchart of plugin events and the linked tasks, both synchronous and asynchronous.

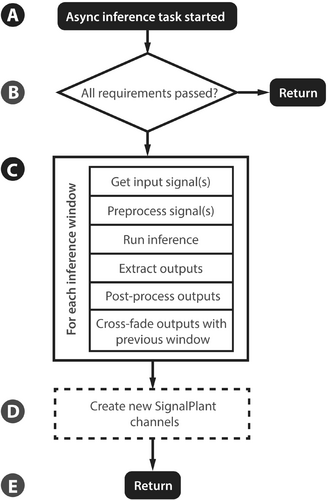

The asynchronous model inference task usually requires the most computing power. Therefore, inference can be computed on either the CPU or the GPU. A flowchart of the asynchronous inference task is shown in Figure 3.

2.3 Implemented methods to pre-process signals

Signals are usually not passed to the inference engine in their original form. Neural networks typically expect zero-mean input data that are further normalized or standardized. However, specific models may need other forms of normalization or may require pre-processing to be switched off entirely. The plugin therefore supports all of these options. Pre-processing is done for each inference window separately. The mean and standard deviation of each inference window are preserved for the later inverse standardization (de-standardization) required in regression tasks. Note that it is the user's responsibility to check that the signals have the proper sampling frequency and are filtered per model requirements.
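
The per-window standardization and its inverse can be sketched in a few lines of Python. This is an illustration only: the function names are hypothetical, and the use of the population standard deviation and the guard for constant windows are assumptions rather than documented plugin behavior.

```python
from statistics import fmean, pstdev


def standardize(window):
    """Standardize one inference window to zero mean and unit variance.

    Returns the transformed samples together with the mean and scale,
    which are kept for later de-standardization.
    """
    mean = fmean(window)
    std = pstdev(window, mu=mean)
    # Assumption: a constant window is only centered to avoid division by zero.
    scale = std if std > 0 else 1.0
    return [(x - mean) / scale for x in window], mean, scale


def destandardize(values, mean, scale):
    """Map values from the standardized domain back to the original units."""
    return [v * scale + mean for v in values]
```

Applying `destandardize` to the output of `standardize` with the stored mean and scale recovers the original samples up to floating-point error.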

2.4 Inference

The Microsoft.ML.OnnxRuntime library performs inference on the pre-processed data. Inference runs on the CPU or GPU, depending on user settings and device capabilities. After inference, outputs are extracted from tensors into regular C# arrays.

2.5 Output postprocessing

2.6 Crossfade outputs

In our experience, model outputs may be inaccurate very close to the borders of the inference window. Therefore, we allow inference windows to overlap; the maximum overlap is half the window size. When an overlap is used, the outputs in the overlapping areas are linearly crossfaded (blended), as shown in Figure 4J.
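
A linear crossfade of overlapping window outputs might look as follows in Python. The function `crossfade_windows` is a hypothetical illustration; in particular, the exact blending weights used by the plugin are not stated here, so the ramp below is an assumption.

```python
def crossfade_windows(outputs, overlap):
    """Join equally sized per-window outputs, blending `overlap` samples
    between each pair of neighbouring windows with linear weights."""
    if not outputs:
        return []
    size = len(outputs[0])
    # The maximum overlap is half the window size.
    if not 0 <= overlap <= size // 2:
        raise ValueError("overlap must be between 0 and half the window size")
    result = list(outputs[0])
    for nxt in outputs[1:]:
        start = len(result) - overlap  # first sample of the overlapping area
        for i in range(overlap):
            # Weight of the newer window rises linearly across the overlap.
            w = (i + 1) / (overlap + 1)
            result[start + i] = (1 - w) * result[start + i] + w * nxt[i]
        # Samples after the overlap come from the newer window only.
        result.extend(nxt[overlap:])
    return result
```

With two windows of four samples and an overlap of two, the result has six samples, and the two overlapping samples move gradually from the first window's values toward the second's.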

2.7 Further processing of model outputs

When the user is satisfied with the inference settings, model outputs can be extracted and appear as new signals in SignalPlant. The user may then work with them as with any other SignalPlant signals, including measurement, filtering, peak detection, exporting to a file, and so forth.

3 RESULTS

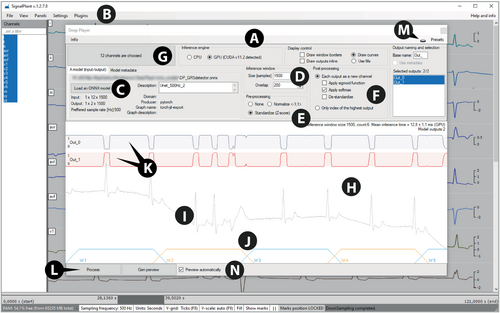

We developed the DeepPlayer plugin for the inference of deep learning models (Figure 4). Its design allows interactive behavior, as usual for SignalPlant plugins.

3.1 Built-in example models

To demonstrate DeepPlayer's capabilities, we prepared several deep learning models as examples. They cover several model architectures and use cases (Table 2). Models are free to download via http://www.signalplant.org/downloads.html.

| Filename (*.onnx) | Task | Input type | Sampling (Hz) | Inference window (s) | Output channels |
|---|---|---|---|---|---|
| iEEGnoise | Class. | EEG (1-lead) | 5,000 | 3 | 4 |
| ECGclassification12 | Class. | ECG (12-lead) | 500 | 30 | 30 |
| Lstm_hr | Reg. | ABP (1-lead) | 25 | 4 | 1 |
| QRSdet_on_off | Class. | ECG (12-lead) | 500 | 3 | 2 |

- Note: A more detailed description is presented in the text below. Class. refers to classification tasks, and Reg. refers to regression tasks.

3.1.1 EEG artifact classification model (iEEGNoise)

This deep learning model classifies intracranial EEG signals. It distinguishes power grid noise, pathological activity, regular activity, and artifacts, producing one value per class per inference window. The model was proposed previously19 and was built using publicly available multicentric measurements.20

3.1.2 ECG classification model (ECGclassification12)

The model classifies the standard 12-lead ECG signal into 30 rhythm types and is derived from our winning submission to the CinC/PhysioNet 2021 challenge.21 However, it does not behave precisely the same way since the original solution consisted of three separately trained models, forming an ensemble. The model uses the ResNet architecture with an attention mechanism.

3.1.3 Heart rate estimation from blood pressure-type signals (lstm_hr)

The model is intended to showcase the setup, training, and export of a straightforward regression model for DeepPlayer using a public database. The complete workflow, from obtaining data to model evaluation and export, is available as a Jupyter notebook in the paper supplement or via the Google Colab service.22 The model estimates the pulse rate (in beats per minute) from blood pressure-type (BP) signals of 4 s duration. The Fantasia database23 from PhysioNet24 was used to train the model. It contains data from 40 “rigorously screened healthy subjects,” of which 20 are younger (21–34 years) and 20 are older (68–85 years). In this work, we used a subset of 10 older and 10 younger subjects. The data contained non-calibrated, non-invasive BP signals. The BP data were downsampled to 25 Hz, and reference beat annotations from the ECG were used to calculate the median heart rate of the window of interest. For data augmentation, copies of the signals were squeezed and stretched, thereby virtually increasing and decreasing the heart rate.
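
The reference label described above, a median heart rate per 4 s window derived from beat annotations, can be sketched as follows. The function name is hypothetical, and computing the rate as 60 divided by the median inter-beat interval is an assumption; the notebook may derive the median differently.

```python
from statistics import median


def median_heart_rate(beat_times_s, start_s, duration_s=4.0):
    """Median heart rate in BPM from beat annotation times (in seconds)
    that fall inside the window [start_s, start_s + duration_s)."""
    beats = [t for t in beat_times_s if start_s <= t < start_s + duration_s]
    if len(beats) < 2:
        return None  # at least two beats are needed for one interval
    intervals = [b - a for a, b in zip(beats, beats[1:])]
    # Assumption: the rate is taken from the median inter-beat interval.
    return 60.0 / median(intervals)
```

Stretching the time axis of a record, as in the augmentation described above, lengthens the inter-beat intervals and therefore lowers the rate returned by this function; squeezing has the opposite effect.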

The model's architecture is similar to the approaches presented in References 25, 26. The network comprises four convolutional layers with rectified linear units (ReLU) as activation, combined with batch normalization and dropout. The convolutions are followed by a long short-term memory (LSTM) network and three fully connected layers. All layers except the last use ReLU activations; the output of the last layer is processed with a sigmoid function to limit the model output to pulse rates within the range of the training data.

The notebook also contains an evaluation of the network, including a Bland-Altman analysis. We excluded four subjects from the training process. The network achieved an RMSE of approximately 6 BPM with limits of agreement of approximately ±10 BPM on these unseen data. No systematic error was observed. Finally, the notebook contains code that exports the model in the ONNX format with the appropriate metadata for use in DeepPlayer and can serve as a template for the interested reader.

3.1.4 QRS detector with onset-offset capability (QRSdet_on_off)

The model detects beats in ECG signals. It was trained to recognize QRS onset and offset, thus delivering QRS duration. The model, which uses the UNet architecture, was trained on 2,250 records and validated on 450 records, both from St. Anne's University Hospital Brno (Czechia), recorded at 5,000 Hz for an ultra-high frequency study.27 The sampling frequency of the data was decreased to 500 Hz, which is more practical for general usage. The model exhibited a sensitivity of 97.73%, a PPV of 99.31%, and an F-score of 98.51% on the validation dataset. For the QRS duration prediction task, the model achieved a mean absolute error of 10.31 ms with a standard deviation of 5.15 ms. For testing the QRS detection model, an independent dataset from University Hospital Kralovske Vinohrady, Prague (Czechia)28, 29 was used. We obtained a sensitivity of 89.76%, a PPV of 97.22%, and an F-score of 95.29%.
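
For orientation, the relation between sensitivity, PPV, and the F-score can be written as a short Python function. It assumes that the reported F-score is the F1 score, that is, the harmonic mean of sensitivity and PPV; the text does not state which variant was used.

```python
def f_score(sensitivity, ppv):
    """F1 score as the harmonic mean of sensitivity (recall) and PPV (precision).

    Both inputs and the result share the same unit (fractions or percent).
    """
    return 2 * sensitivity * ppv / (sensitivity + ppv)


# Validation-set values reported above, in percent.
validation_f = f_score(97.73, 99.31)
```

Under this assumption, the validation-set sensitivity and PPV reproduce the reported validation F-score of 98.51% after rounding to two decimals.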

3.2 Code organization

The plugin source code is organized as a standard Visual Studio C# WinForms project, where the file “plugins_deepplayer.csproj” describes the project contents. The object-oriented code implementing the plugin functionality is defined in a single class (file “SVP_deep.cs”) that extends the .NET class <System.Windows.Forms.UserControl>. This is the usual way to write SignalPlant plugins: when a user selects a plugin to run, a new instance of a frame (class <signalViewer.standardPluginForm>) is created, and an instance of the specific plugin is deployed inside. The project also contains the file “SVP_deep.designer.cs” describing the plugin user interface, the file “SVP_deep.resx” defining project resources, and the file “packages.config” listing the libraries used and their versions. The file “AssemblyInfo.cs” in the folder “Properties” describes project assembly information.

Asynchronous tasks are provided by three <System.ComponentModel.BackgroundWorker> object instances: one for loading the ONNX model and preparing the inference session, the second for preparing live previews, and the last one for producing results over the full data length.

4 DISCUSSION

The DeepPlayer plugin is designed as an addon (a dynamically-linked library, compiled as a *.dll file) to the SignalPlant software. Below, we discuss its features, limitations, and plans for plugin development.

4.1 Features

DeepPlayer supports GPU acceleration: it automatically detects whether the computer is equipped with a graphics card supporting CUDA with Deep Neural Network Library (CUDNN) drivers.

Like most existing SignalPlant plugins, DeepPlayer is interactive. It also uses the same scheme for attaching signals (drag & drop or selection via a dialog window supporting masks). The user can therefore load the model, attach the input signal, and observe the model output in the preview panel. The preview is computed for the time range of the SignalPlant main window, so the plugin automatically recomputes the preview whenever the user changes the SignalPlant time axis. This capability is demonstrated in the DeepPlayer video tutorial.30

The DeepPlayer plugin allows the processing of both classification and regression model types. The number of input and output channels depends solely on the model itself.

4.2 Limitations

Although many acceleration mechanisms for deep learning exist, such as DirectX, MKL-DNN, and others, the presented plugin supports only CUDA, which is limited to graphics cards with NVIDIA chipsets. This limitation is due to the architecture of machine learning libraries, which are built for a specific type of acceleration.

Deep learning models are usually trained in batches, where each batch consists of training “examples” passed to the training procedure simultaneously. For inference, however, the number of “examples” that will be evaluated simultaneously is not known in advance. Therefore, models used in DeepPlayer must have the batch size set to 1 or, preferably, to −1 (i.e., a dynamic setting).
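
The batch-size requirement can be illustrated by a small Python check on the model's input dimension. The function `resolve_shape` is a hypothetical helper, not part of DeepPlayer, and it assumes that the batch size is the first item of the dimension array, as in the examples in Table 1.

```python
def resolve_shape(input_dimension, batch_size=1):
    """Return a concrete tensor shape for inference on single windows.

    A dynamic batch dimension (-1) is replaced by `batch_size`; a fixed
    batch dimension must already equal `batch_size`.
    """
    shape = list(input_dimension)
    if shape[0] == -1:
        shape[0] = batch_size  # dynamic setting: any batch size is accepted
    elif shape[0] != batch_size:
        raise ValueError(
            f"model has a fixed batch size of {shape[0]}, expected {batch_size}"
        )
    return shape
```

A model exported with a fixed batch size other than 1, for instance the batch size used during training, would be rejected by this check, which mirrors the requirement stated above.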

Deep learning models may contain multiple input and output heads, which is useful in scenarios when, for example, we need to detect QRS complexes from an ECG (as a classification task) and, at the same time, predict the patient's age (as a regression task). Although DeepPlayer allows switching between multiple output heads, as in the example above, it does not let the user associate specific signals with multiple input heads. If a model has multiple inputs, only the first one is used.

4.3 Future roadmap

While CUDA acceleration is by far the most common in deep learning, we would like to implement other acceleration techniques in the future (e.g., DirectX or support for tensor processing units, TPUs). It should also be considered whether internal resampling (inside each inference window) should be implemented for cases where the model uses a different sampling frequency than the loaded signal. In addition, some existing SignalPlant plugins can be called in batch processing; this functionality would allow DeepPlayer to process multiple files using a script. Finally, we plan to implement standard types of y-axis scaling in the preview window, as in other SignalPlant plugins.

5 CONCLUSION

We presented DeepPlayer version 1.0.1.4, a plugin for the free SignalPlant software. It has been included as a standard part of SignalPlant since version 1.2.7.7, available for download at www.signalplant.org. The plugin allows interactive exploration of deep learning model behavior on given signals and thus helps users understand the behavior of examined models even if they are not deep learning specialists. The plugin source code is available at https://github.com/fplesinger/DeepPlayer under the MIT license. In addition, we supplied the plugin with four example deep learning models for several biomedical signal processing tasks (separate downloads at the same website). Example code to produce a model readable by this plugin is available as a Google Colab notebook22 or as a supplement to this manuscript. We also provided a short video tutorial30 describing the basic usage of DeepPlayer.

ACKNOWLEDGMENTS

This work was supported by the Czech Academy of Sciences, projects RVO 68081731, and Strategy AV21: “Breakthrough Technologies for the future - Sensing, Digitisation, Artificial Intelligence, and Quantum Technologies”.

AUTHOR CONTRIBUTIONS

The DeepPlayer plugin was designed, developed, and tested by FP, IV, and AI. The included deep learning models and their descriptions were prepared as follows: EEG artifact and ECG classification models by PN, heart rate estimation from blood pressure-type signals (including the public Jupyter Notebook example) by MR and CA, and QRS detector with onset-offset capability by ZK. KC and PL prepared the data required for the QRS detector model training. The manuscript was written by FP, RS, IV, ZK, PN, MR, and CA.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article, as no datasets were generated or analysed during the current study.