Design of input assignment and feedback gain for re-stabilizing undirected networks with High-Dimension Low-Sample-Size data

Abstract

A critical transition precedes the dramatic deterioration of a complex dynamical system. Recently, a method to predict such shifts based on High-Dimension Low-Sample-Size (HDLSS) data has been developed. Based on this prediction, it is important to make the system more stable by feedback control just before the critical transition, which we call re-stabilization. However, re-stabilization cannot be achieved by traditional stabilization methods such as the pole placement method, because the available HDLSS data are not sufficient to obtain a mathematical model of the system by system identification. In this article, a model-free pole placement method for re-stabilization is proposed to design the optimal input assignment and feedback gain for undirected network systems using only HDLSS data. The proposed method is validated by numerical simulations.

1 INTRODUCTION

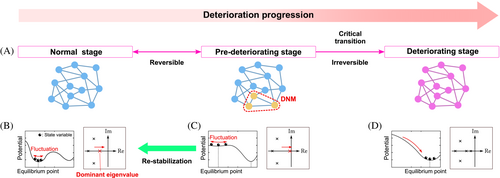

Abrupt critical transitions precede system deterioration in various complex network systems, such as ecological, climate, power, and biological systems.1 Critical transition detection methods have been increasingly studied in recent years.2-5 Chen et al.5 proposed a method, called Dynamical Network Biomarker (DNB) theory, for predicting a critical transition from a healthy stage to a disease stage of a dynamical network in a living organism using only a very small number of samples, referred to as High-Dimension Low-Sample-Size (HDLSS) data. In DNB theory, the progression of a disease is modeled as a parameter shift in a nonlinear dynamical system, and the critical transition is regarded as a bifurcation; however, no information about the system model is required. When a bifurcation is about to occur in the biological network system, the states of the nodes that constitute a particular subnetwork, called DNB nodes, show large fluctuations. These fluctuations can be captured with HDLSS data alone, which allows us to detect the stage just before the bifurcation occurs. DNB theory has been applied to fields other than systems biology to predict the deterioration of network systems; in this general sense, it is called Dynamical Network Marker (DNM) theory.6-9

The realization of critical transition detection has stimulated research on methods to avoid the critical transition once it has been detected. From a control-theoretic viewpoint, preventing a network system in a pre-deteriorating stage, that is, the stage just before the critical transition occurs, from falling into a deteriorating stage can be regarded as designing a controller that improves the degree of stability by pole placement; we call this re-stabilization. If a mathematical model of the system is available, or can be identified from measured data, conventional control methods can be employed to enhance the degree of stability. However, it is difficult to obtain the entire model of a large-scale complex network system. Moreover, the available data are sometimes HDLSS, which are not sufficient to identify the dynamics.10, 11 For example, for gene network systems, the dimension of gene expression microarray data is on the order of tens of thousands, whereas the sample size is at most a dozen or so.12

Only a few studies on re-stabilization exist. A data-driven method for finding the input assignment that maximizes the controllability Gramian, which is related to minimum-energy control, has been proposed.13 However, this method requires abundant data whose sample size is equal to the dimension of the state variable, and therefore it cannot be applied to HDLSS data. More recently, an approximate pole placement method for gene network systems with HDLSS data, based on Brauer's theorem,15 has been proposed.14 Although this method is suitable for HDLSS data, no theoretical analysis has been presented that exactly characterizes the solution of the pole placement problem. In addition, a single-input assignment design for undirected network systems with HDLSS data has been proposed,16 in which the input assignment is optimal with respect to minimizing the input energy. That study also provided an approximate control method, but no theoretical analysis of the approximation was given.

In this article, for undirected network systems, we propose a model-free method to design the optimal feedback gain and the optimal input assignment for feedback control that makes the system more stable in the pre-deteriorating stage, that is, for re-stabilization. The method adopts two different criteria: minimizing the input energy and minimizing the Frobenius norm of the feedback gain. Minimizing the input energy is a typical criterion in control problems, since the control energy corresponds to the effort needed to control a network system.17 Minimizing the Frobenius norm of the feedback gain is also significant in multi-input control, because small gains help to reduce energy consumption and noise amplification, or to improve the transient response; the Frobenius norm is often used as a measure of the size of a gain.18-20 For each criterion, we show that, provided the system is in the pre-deteriorating stage, the optimal feedback gain and the optimal input assignment that shift the dominant eigenvalue of the Jacobian matrix away from the imaginary axis can be designed using only information derived from the HDLSS data of the state. Furthermore, we prove that the solution minimizing the input energy and the solution minimizing the Frobenius norm of the feedback gain are equivalent; that is, the two problems can be solved in a unified manner. We also propose a practical design method in which the optimal feedback gain is approximated by a sparse gain, together with an analytic evaluation of the error caused by the approximation. In addition, we provide a specific algorithm for designing the input assignment and feedback gain from HDLSS time-series data of the system.

The rest of the article is organized as follows. In Section 2, we describe the system, briefly review pre-deteriorating stage detection with HDLSS data, and present the design criterion together with the formal assumptions. Section 3 presents the proposed method for minimizing the input energy and for minimizing the Frobenius norm of the feedback gain, the error evaluation of the practical design method, and the design algorithm. In Section 4, we demonstrate the effectiveness of the proposed method by numerical simulations. Finally, Section 5 concludes the article.

2 PROBLEM DESCRIPTION

2.1 System description

2.2 Pre-deteriorating stage detection with HDLSS data

Methods for detecting the pre-deteriorating stage have been proposed.5, 8, 9 According to the theoretical analysis by Chen et al.,5 a system in a pre-deteriorating stage shows the following generic properties: there exists a group of nodes whose standard deviations increase drastically, and the covariance between every pair of nodes within the group also increases drastically in absolute value. This group represents the dynamical features of the network system, and its nodes are expected to form a subnetwork. This subnetwork is regarded as a DNM, and a node in the subnetwork is called a DNM node. The existence of DNM nodes implies that the system is in the pre-deteriorating stage.

We now describe the details of the theoretical analysis, based on the Lyapunov equation, of the properties of a system in a pre-deteriorating stage. If a network system is in a pre-deteriorating stage, the following assumption holds:

Assumption 1. The system matrix is stable and the dominant eigenvalue satisfies .

Here, denotes the set of complex numbers. In addition, this article focuses on undirected networks; that is, the system matrix is symmetric and all its eigenvalues are real, as described by the following technical assumption:

Assumption 2. The system matrix is symmetric and diagonalizable, and the eigenvalues satisfy .

From the above discussion, node such that shows large fluctuations in the pre-deteriorating stage. Note that is the th element of the eigenvector of corresponding to the dominant eigenvalue . Here, we define the dominant eigenvector as follows:

Definition 1. The vector is called the dominant eigenvector of if for the dominant eigenvalue of .

Hence, the nodes corresponding to the non-zero elements of the dominant eigenvector show large fluctuations in the pre-deteriorating stage. We can capture these large fluctuations and identify the dominant eigenvector using only HDLSS data, following the procedure explained in Section 3.1.
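To illustrate why the dominant eigenvector can be read off from the fluctuations, consider a minimal sketch that assumes the linearized dynamics near the equilibrium are driven by independent white noise of equal intensity sigma on every node, that is, dx = A x dt + sigma dW. This noise model and all numerical values are assumptions for illustration, not the model of Section 4. The stationary covariance Sigma then solves the Lyapunov equation A Sigma + Sigma A^T + sigma^2 I = 0, and for a symmetric, stable A its solution is Sigma = -(sigma^2 / 2) A^{-1}. Hence Sigma shares the eigenvectors of A, with eigenvalues -sigma^2 / (2 lambda_i), and the largest of these belongs to the eigenvalue lambda_1 closest to zero. The NumPy snippet below checks this numerically.

```python
import numpy as np

rng = np.random.default_rng(0)

def stationary_covariance(A: np.ndarray, sigma: float) -> np.ndarray:
    """Stationary covariance of dx = A x dt + sigma dW for symmetric, stable A.

    The Lyapunov equation A S + S A^T + sigma^2 I = 0 then has the
    closed-form solution S = -(sigma^2 / 2) A^{-1}.
    """
    return -(sigma ** 2 / 2.0) * np.linalg.inv(A)

# Hypothetical symmetric system matrix whose dominant eigenvalue is close to zero.
n = 8
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))   # orthonormal eigenvectors
lam = np.array([-0.01, -0.8, -1.0, -1.2, -1.5, -2.0, -2.5, -3.0])
A = Q @ np.diag(lam) @ Q.T
A = (A + A.T) / 2                                    # remove rounding asymmetry
v1 = Q[:, 0]                                         # dominant eigenvector of A

sigma = 0.1
S = stationary_covariance(A, sigma)
residual = A @ S + S @ A.T + sigma ** 2 * np.eye(n)

# Eigenvector of the largest covariance eigenvalue (eigh sorts eigenvalues ascending).
_, U = np.linalg.eigh(S)
u1 = U[:, -1]

print(np.abs(residual).max())   # close to machine precision
print(abs(v1 @ u1))             # close to 1: same direction up to sign
```

Because the eigenvalues of Sigma are -sigma^2 / (2 lambda_i), the gap between the largest and second-largest covariance eigenvalues widens as lambda_1 approaches zero, which is what makes the dominant direction identifiable from a small sample.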

2.3 Design criterion

After detecting a pre-deteriorating stage, the next goal is to prevent the system from shifting to a deteriorating stage and to bring it back to the normal stage, which we refer to as re-stabilization in this article. When the system is in a pre-deteriorating stage, the real part of the dominant eigenvalue of the system matrix, which corresponds to the linearization around , is close to zero. If the dominant eigenvalue of the system matrix is shifted away from zero, the system matrix becomes more stable. The original nonlinear system then also becomes more stable, with its equilibrium point remaining close to ; that is, re-stabilization is achieved. Therefore, we consider the problem of shifting the dominant eigenvalue away from zero based on the pole placement method.

We formulate the re-stabilization problem assuming that some parameters of the system matrix can be adjusted. For example, in gene regulatory networks, gene knockdown and gene overexpression can be implemented to adjust the system parameters.22 Naturally, two questions arise: which nodes should be targeted, and how much should the corresponding parameters be adjusted? By regarding the perturbation matrix as the product of the system input matrix and a feedback gain matrix, we address these questions as a problem of designing these two matrices so that the following conditions hold:

- The dominant eigenvalue is shifted to ;

- The other eigenvalues and the corresponding eigenvectors do not change.
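When A is symmetric, the two conditions above admit a simple closed-form construction. The following is a sketch derived here independently; it is not claimed to coincide with the article's expressions (9) and (10). If B has full column rank, keeping every eigenpair (lambda_i, v_i), i >= 2, unchanged requires K v_i = 0, so K = k v1^T with a unit-norm v1. Then v1^T (A + B K) = (lambda_1 + v1^T B k) v1^T, so the dominant eigenvalue moves to lambda_1 + v1^T B k while the other eigenpairs stay put. Requiring a shift by a hypothetical amount delta > 0 and minimizing ||K||_F = ||k|| gives k = -delta B^T v1 / ||B^T v1||^2. The helper name `min_norm_shift_gain` and all numbers are illustrative.

```python
import numpy as np

def min_norm_shift_gain(v1: np.ndarray, B: np.ndarray, delta: float) -> np.ndarray:
    """Hypothetical helper: rank-one gain K = k v1^T such that A + B K moves the
    dominant eigenvalue of a symmetric A by -delta and keeps the other eigenpairs.

    Among gains of this form, k = -delta B^T v1 / ||B^T v1||^2 minimizes ||K||_F.
    """
    v1 = v1 / np.linalg.norm(v1)
    g = B.T @ v1
    gg = float(g @ g)
    if gg == 0.0:
        raise ValueError("B^T v1 = 0: the chosen inputs cannot move lambda_1")
    k = -delta * g / gg
    return np.outer(k, v1)

# Hypothetical symmetric system matrix with a dominant eigenvalue close to zero.
rng = np.random.default_rng(1)
n = 8
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = np.array([-0.01, -0.8, -1.0, -1.2, -1.5, -2.0, -2.5, -3.0])
A = Q @ np.diag(lam) @ Q.T
v1 = Q[:, 0]

# Two input ports on the nodes where |v1| is largest (columns of the identity).
ports = np.argsort(-np.abs(v1))[:2]
B = np.eye(n)[:, ports]

delta = 0.5
K = min_norm_shift_gain(v1, B, delta)
closed = A + B @ K

new_eigs = np.sort(np.linalg.eigvals(closed).real)
target = np.sort(np.concatenate(([lam[0] - delta], lam[1:])))
print(new_eigs)
print(target)
print(np.linalg.norm(K), delta / np.linalg.norm(B.T @ v1))  # equal
```

In this sketch ||K||_F = delta / ||B^T v1||, so when B is built from identity columns the gain is smallest when the selected nodes carry the largest |v1_j|, which is consistent with the statement of Corollary 1.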

The data available for the design of and are the time-series data of all the nodes at the steady state with sample size , expressed as . For some systems, such as power systems and biological systems, the sample size is much smaller than the dimension of .10-12 Data with this property are called HDLSS data, defined as follows:

Definition 2. Data is called HDLSS data if the sample size and the dimension of satisfy .

Since system identification requires measured data with a sample size greater than or equal to the dimension of , we cannot identify the system matrix from HDLSS data. On the other hand, we can obtain HDLSS data of the state, since the equilibrium point can be estimated from the steady-state data of .
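The identifiability argument can be made concrete with a discrete-time least-squares fit x_{t+1} = Phi x_t, used here only as an illustrative surrogate for system identification (it is not the article's setting, and the data are random placeholders). Stacking the N - 1 transitions gives Phi X0 = X1 with X0 of size n x (N - 1). When N - 1 < n, X0 has rank at most N - 1, so any matrix Z whose rows lie in the left null space of X0 can be added to Phi without changing the fit: the data cannot single out one system matrix.

```python
import numpy as np

rng = np.random.default_rng(5)
n, N = 12, 6                                  # hypothetical HDLSS sizes, N < n
X = rng.standard_normal((n, N))               # columns are snapshots x_1, ..., x_N
X0, X1 = X[:, :-1], X[:, 1:]                  # transition pairs (x_t, x_{t+1})

# Minimum-norm least-squares fit of Phi X0 = X1, written as X0^T Phi^T = X1^T.
Phi_T, _, rank, _ = np.linalg.lstsq(X0.T, X1.T, rcond=None)
Phi = Phi_T.T

# A nonzero Z with Z X0 = 0: its rows lie in the left null space of X0.
U, _, _ = np.linalg.svd(X0)                   # full U is n x n
null_basis = U[:, rank:]                      # orthonormal basis of that null space
Z = rng.standard_normal((n, null_basis.shape[1])) @ null_basis.T
Phi_alt = Phi + Z

print(rank)                                   # N - 1 = 5, smaller than n = 12
print(np.allclose(Phi @ X0, X1), np.allclose(Phi_alt @ X0, X1))  # both fit exactly
```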

Summarizing the above discussion, the problem we address here is to design an appropriate input assignment and an appropriate feedback gain that minimize or , under Assumptions 1 and 2.

3 PROPOSED METHOD

In this section, we present a method for designing an appropriate input assignment and an appropriate feedback gain that requires only the HDLSS data of .

3.1 Available information

3.2 Problem formulation

From the above discussion, under Assumptions 1 and 2, we assume that is available. Therefore, for minimizing , the problem we address is formulated as follows:

Problem 1. For the system (4), suppose Assumptions 1 and 2 hold and the dominant eigenvector of is available. Then, derive the optimal solution of the following optimization problem (P1) by using :

Similarly, we refer to the problem obtained by replacing “ for all ” with “” in Problem 1 as Problem 2.

3.3 Optimal design with the dominant eigenvector of the system matrix

For an arbitrary feasible set , Problems 1 and 2 can be solved by using the following theorem:

Theorem 1. For the system (4), suppose Assumptions 1 and 2 hold. Then, the optimization problem (P1) is equivalent to the optimization problem in Problem 2, and its solution is given as follows:

Proof of Theorem 1. Because is a real symmetric matrix, there exists an orthogonal matrix such that

On the other hand, because the eigenvalues of are all real and distinct, there exists a non-singular matrix such that

Now, for the optimization problem (P2), we fix the input assignment and consider the following subproblem:

On the other hand, we similarly consider the following subproblem of the optimization problem (P3) for given :

Theorem 1 shows that Problems 1 and 2 can be solved in the same way using only the dominant eigenvector . Note that Theorem 1 holds only in the ideal case where is obtained accurately. For implementation, we instead use the dominant eigenvector of the sample covariance matrix of the HDLSS data of , which is an estimate of when the system is in a pre-deteriorating stage. Namely, we implement the estimated optimal re-stabilization with the estimated optimal input assignment and the estimated optimal feedback gain, which are obtained by replacing with in (9) and (10). The effectiveness of this estimated optimal design is supported by the numerical simulations in Section 4.

Corollary 1. For the system (4), suppose Assumptions 1 and 2 hold. If the feasible set is given as (51), the optimal solution of the optimization problem (P1) is given as follows:

Corollary 1 implies that, under the constraint (51), the optimal input ports are the nodes corresponding to the indices of the top elements in absolute value of the dominant eigenvector .
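Under the reading that the constraint (51) restricts the input matrix to m distinct columns of the identity matrix (an assumption, since (51) is not reproduced here), the selection rule of Corollary 1 reduces to a sort. The helper `top_m_input_assignment` and the vector `v` below are hypothetical, and indices are 0-based, so index 1 denotes the 2nd node. Because an eigenvector is determined only up to sign, the rule depends only on |v_j| and is unaffected by that ambiguity.

```python
import numpy as np

def top_m_input_assignment(v: np.ndarray, m: int):
    """Return the indices of the m largest |v_j| (in decreasing order) and the
    n x m input matrix whose columns are the corresponding unit vectors."""
    if not 1 <= m <= v.size:
        raise ValueError("m must satisfy 1 <= m <= len(v)")
    idx = np.argsort(-np.abs(v), kind="stable")[:m]
    B = np.eye(v.size)[:, idx]
    return idx, B

# Made-up dominant eigenvector, for illustration only.
v = np.array([0.10, -0.62, 0.05, 0.48, -0.20, 0.30])
idx, B = top_m_input_assignment(v, m=2)
print(idx)      # [1 3]
print(B.shape)  # (6, 2)
```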

Proof of Corollary 1. Let be the index of input port designated by , that is, . Then, by using (9) in Theorem 1, the optimal input assignment is given as follows:

3.4 Error evaluation of the practical design

In practice, it is not easy to control the interactions of many nodes of a complex network system at once. Thus, not only but also should be designed to be sparse. can be made sparse by choosing an appropriate feasible set, such as (51), whereas is not always sparse because the dominant eigenvector is not always sparse. We therefore simplify the optimal feedback gain by setting some elements with small absolute values to zero. This introduces an error between and the simplified feedback gain , which in turn causes an error in the shift of the dominant eigenvalue.
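The simplification described above can be sketched as follows: keep the r largest-magnitude entries of each row of the gain and zero the rest (the simulations in Section 4 keep the top 2 entries per row). The function name `sparsify_rows` and the example gain are hypothetical. The Frobenius norm of the discarded part quantifies the gain error introduced by the simplification.

```python
import numpy as np

def sparsify_rows(K: np.ndarray, r: int) -> np.ndarray:
    """Keep the r largest-magnitude entries in each row of K and zero the rest."""
    K = np.asarray(K, dtype=float)
    keep = np.argsort(-np.abs(K), axis=1, kind="stable")[:, :r]
    rows = np.arange(K.shape[0])[:, None]      # broadcast row indices against keep
    K_s = np.zeros_like(K)
    K_s[rows, keep] = K[rows, keep]
    return K_s

# Made-up 2 x 4 gain (two inputs), for illustration only.
K = np.array([[0.10, -0.90, 0.30, 0.05],
              [0.20, 0.01, -0.40, 0.60]])
K_s = sparsify_rows(K, r=2)
err = np.linalg.norm(K - K_s)                  # Frobenius norm of the discarded entries
print(K_s)
print(err)
```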

Theorem 2. Let be the dominant eigenvalue of and be the first-order approximation of with respect to . Then, under the constraint (51), the following holds:

Theorem 2 implies that the difference between and is less than or equal to if is sufficiently small. In addition, becomes small as or increases.

Proof of Theorem 2. The system matrix of the closed-loop system with is written as follows:

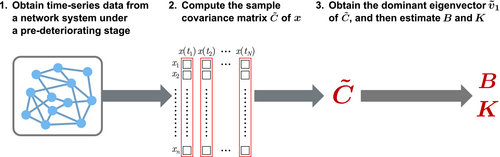

3.5 Proposed algorithm

- Obtain HDLSS data from a network system under a pre-deteriorating stage;

- Compute the sample covariance matrix of from the HDLSS data;

- Obtain the dominant eigenvector of ;

- Estimate the input assignment and the feedback gain according to Theorem 1, Corollary 1, or Theorem 2, where the dominant eigenvector of is replaced with .
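Steps 1 to 3 of the algorithm, followed by the port selection of Corollary 1, can be sketched in Python with NumPy as below. The synthetic data are an assumption for illustration: samples are drawn from a Gaussian whose covariance has the form -(sigma^2 / 2) A^{-1} for a hypothetical symmetric A, with n = 20 nodes and N = 10 samples. Because N < n, the sample covariance has rank at most N - 1 and cannot be inverted; its leading eigenvector is nevertheless well defined, and it can be obtained either from `numpy.linalg.eigh` on the n x n covariance or, more cheaply, from the first right singular vector of the centered N x n data matrix.

```python
import numpy as np

rng = np.random.default_rng(2)
n, N, m, sigma = 20, 10, 2, 0.1          # hypothetical sizes: N < n (HDLSS)

# Hypothetical symmetric A whose dominant eigenvalue is close to zero.
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
lam = -np.linspace(0.8, 3.0, n)
lam[0] = -0.001
v1 = Q[:, 0]                              # true dominant eigenvector

# Step 1: synthetic HDLSS data around an equilibrium x_eq, with covariance
# Q diag(-sigma^2 / (2 lam)) Q^T (stationary covariance under isotropic noise).
x_eq = np.ones(n)
scales = np.sqrt(-sigma ** 2 / (2.0 * lam))
X = x_eq + (rng.standard_normal((N, n)) * scales) @ Q.T

# Step 2: sample covariance (rank <= N - 1 after centering).
Xc = X - X.mean(axis=0)
S = Xc.T @ Xc / (N - 1)

# Step 3: dominant eigenvector, via eigh and via the SVD of the centered data.
_, U = np.linalg.eigh(S)
u_eig = U[:, -1]                          # eigh sorts eigenvalues ascending
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
u_svd = Vt[0]

# Step 4 (Corollary 1): input ports at the m largest |entries| of the estimate.
ports = np.argsort(-np.abs(u_svd))[:m]

print(np.linalg.matrix_rank(S, tol=1e-10))  # N - 1
print(abs(u_eig @ u_svd))                   # 1 up to rounding (same vector up to sign)
print(abs(u_svd @ v1))                      # alignment with the true dominant eigenvector
print(ports)
```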

4 NUMERICAL SIMULATIONS

In this section, we present numerical simulations to examine the effectiveness of the proposed method for the re-stabilization of complex network systems. We use a mathematical model whose true parameter values are known, so that we can compare the results of re-stabilization using the true dominant eigenvector with those using the dominant eigenvector estimated from the observed HDLSS data of the state.

4.1 Simulation model

4.2 Parameter setting

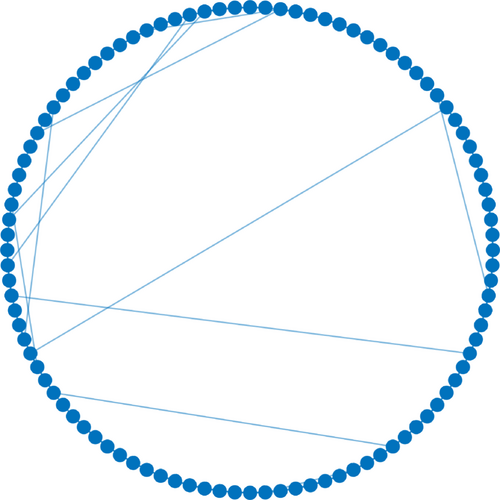

We use the Watts-Strogatz model24 to generate the network structure, which determines the value of , with nodes, average degree , and edge rewiring probability . Figure 3 shows the resulting network structure.
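For readers who want to generate a network of this type, the following is a minimal NumPy sketch of the standard Watts–Strogatz construction: start from a ring lattice in which each node is linked to its k/2 nearest neighbors on each side, then rewire each lattice edge with probability p to a uniformly chosen node, avoiding self-loops and duplicate edges. The values of n, k, and p used in the article are not reproduced here; those below are hypothetical, the function name is illustrative, and how the adjacency matrix enters the system matrix depends on the model (69), which is not shown. NetworkX offers an equivalent generator, `networkx.watts_strogatz_graph(n, k, p, seed=None)`.

```python
import numpy as np

def watts_strogatz_adjacency(n: int, k: int, p: float, rng: np.random.Generator) -> np.ndarray:
    """Symmetric 0/1 adjacency matrix of a Watts-Strogatz small-world graph.

    Hypothetical helper: k must be even with 0 < k < n.
    """
    if k % 2 != 0 or not 0 < k < n:
        raise ValueError("k must be even with 0 < k < n")
    W = np.zeros((n, n), dtype=int)
    # Ring lattice: node i is linked to its k/2 nearest neighbors on each side.
    for i in range(n):
        for j in range(1, k // 2 + 1):
            t = (i + j) % n
            W[i, t] = W[t, i] = 1
    # Rewire each lattice edge (i, i + j) with probability p.
    for j in range(1, k // 2 + 1):
        for i in range(n):
            t = (i + j) % n
            if W[i, t] == 1 and rng.random() < p:
                # Candidate endpoints: not i itself and not already linked to i.
                candidates = np.flatnonzero((W[i] == 0) & (np.arange(n) != i))
                if candidates.size > 0:
                    new = rng.choice(candidates)
                    W[i, t] = W[t, i] = 0
                    W[i, new] = W[new, i] = 1
    return W

rng = np.random.default_rng(3)
W = watts_strogatz_adjacency(n=30, k=4, p=0.1, rng=rng)  # hypothetical values
print(W.sum() // 2)          # number of edges, n * k / 2 = 60
print(W.sum(axis=1).mean())  # average degree k = 4.0
```

Each rewiring removes one existing edge and adds one absent edge, so the number of edges, and hence the average degree k, is preserved.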

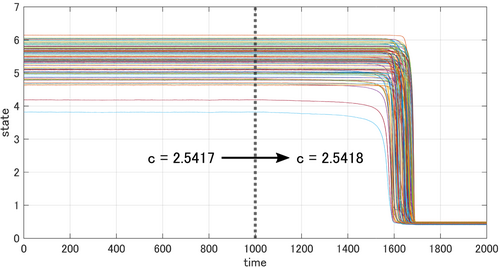

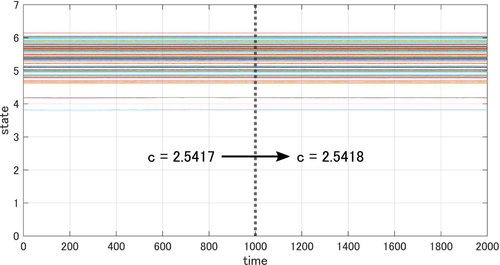

The constant parameters are given as , , , , , . We solve the model (69) using the Euler–Maruyama method.25 Here, the time step width is , the number of steps is , and the initial state is one of the stable equilibrium points. We use the converged value of the noiseless system (69) without input as the equilibrium point. With these settings and a random parameter , the bifurcation occurs when is at (see Figure 4). Thus, we set so that the network system is in a pre-deteriorating stage and satisfies Assumptions 1 and 2. The observation data for the sample covariance matrix are sampled from the simulation results at intervals of steps, where is the sample size. This calculation is repeated 10 times with different realizations of the random noise. The system matrix is obtained by linearizing the model (69) in the neighborhood of the equilibrium point. The constraint on the input assignment is (51) with , that is, the number of input ports is 2. In addition, we set , which is used for the design of the feedback gain.
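The Euler–Maruyama scheme advances dx = f(x) dt + sigma dW by x_{t+1} = x_t + f(x_t) dt + sigma sqrt(dt) xi_t, with xi_t standard normal. The sketch below applies it to a hypothetical linear drift f(x) = A (x - x_eq), since the model (69) itself is not reproduced here, and mirrors the procedure described above: a noiseless run whose converged value serves as the equilibrium, followed by a noisy run sampled every `interval` steps to form N observations. All parameter values are hypothetical.

```python
import numpy as np

def euler_maruyama(f, x0, sigma, dt, n_steps, rng):
    """Simulate dx = f(x) dt + sigma dW and return the trajectory (n_steps + 1 rows)."""
    x = np.array(x0, dtype=float)
    traj = np.empty((n_steps + 1, x.size))
    traj[0] = x
    sqrt_dt = np.sqrt(dt)
    for step in range(n_steps):
        x = x + f(x) * dt + sigma * sqrt_dt * rng.standard_normal(x.size)
        traj[step + 1] = x
    return traj

# Hypothetical symmetric, stable linear drift around x_eq (stand-in for (69)).
A = np.array([[-1.0, 0.2, 0.0],
              [0.2, -2.0, 0.1],
              [0.0, 0.1, -3.0]])
x_eq = np.ones(3)

def drift(x):
    return A @ (x - x_eq)

rng = np.random.default_rng(4)
dt, n_steps = 0.01, 5000

# Noiseless run without input: its final value estimates the equilibrium.
x_hat = euler_maruyama(drift, np.zeros(3), 0.0, dt, n_steps, rng)[-1]

# Noisy run started at the estimated equilibrium, sampled every `interval` steps.
burn_in, interval, N = 1000, 400, 10
traj = euler_maruyama(drift, x_hat, 0.05, dt, n_steps, rng)
samples = traj[burn_in::interval][:N]
print(samples.shape)   # (10, 3)
```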

4.3 Simulation results

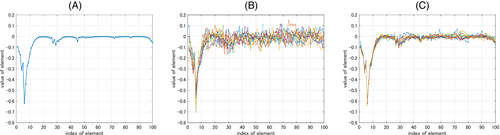

Figure 5 shows the dominant eigenvector of the system matrix and the dominant eigenvector of the sample covariance matrix with the sample sizes and , respectively. According to Corollary 1, the optimal input assignment is since the 6th and 7th elements are the top 2 elements in absolute value of (see Figure 5A). In the case of with , the 6th and 7th elements are the top 2 elements in absolute value for 9 of the 10 samples, while the 6th and 4th elements are the top 2 for the remaining sample (see Figure 5B). Thus, the estimated optimal input assignment coincides with for 9 of the 10 samples. Although the assignment differs for the remaining sample, it is still considered effective, because the 4th element has the third largest absolute value in . In the case of with , the 6th and 7th elements are the top 2 elements in absolute value for all 10 samples (see Figure 5C), and then

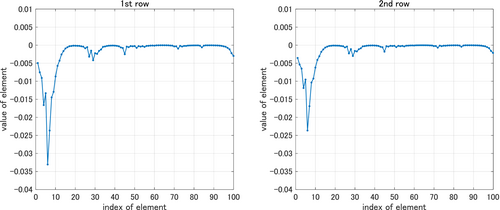

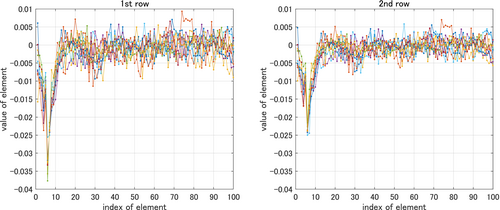

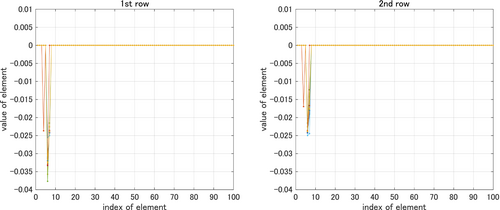

Figure 6 shows each row of the optimal feedback gain , which is obtained by using based on Corollary 1. Note that the feedback gain has two rows, since the system has 2 inputs. Figure 7 shows each row of the estimated optimal feedback gain obtained by using with the sample size for 10 samples. Figure 8 shows each row of the simplified feedback gain , which is obtained by setting all but the top 2 elements in absolute value to zero in each row of with the sample size for 10 samples. Hence, each row of has only two non-zero elements. For 9 of the 10 samples, the 6th and 7th elements are the non-zero elements in both rows of ; that is, the indices of the non-zero elements coincide with the indices of the top 2 elements in absolute value of each row of . For the remaining sample, the 4th and 6th elements are the non-zero elements in each row.

Figure 9 shows the dominant eigenvalue of the system matrix for four cases: just before the bifurcation without any input, with the optimal state feedback using , with the estimated optimal state feedback using with the sample size for 10 samples, and with the simplified state feedback using with the sample size for 10 samples. With any of these state feedbacks, the dominant eigenvalue is shifted away from zero; that is, the system is re-stabilized. Note that, with the simplified state feedback, the dominant eigenvalue closest to zero corresponds to the sample for which .

Figure 10 shows the state trajectory of the system with the simplified state feedback for the case in which the dominant eigenvalue is closest to zero. Here, the bifurcation parameter changes slightly after . Even though changes, the equilibrium point does not change; that is, no bifurcation occurs. Therefore, the network system is prevented from falling into a deteriorating stage.

These results indicate that the simplified design of the input assignment and feedback gain using the dominant eigenvector of the sample covariance matrix is effective in practice, although the changes in the eigenvalues other than the dominant eigenvalue caused by the simplification remain to be analyzed theoretically.

5 CONCLUSIONS

In this article, we have proposed a method for designing the optimal input assignment and feedback gain for re-stabilizing an undirected network system based on pole placement, under the condition that the system matrix is unknown and only HDLSS data of the state are available. An error evaluation of the simplification of the optimal design has also been presented. Furthermore, the numerical simulations have shown the effectiveness of the simplified design method.

This article assumes that the network of the system is undirected and that the eigenvalues of the system matrix are distinct. Since a complex network system is not always undirected and its eigenvalues are not always distinct, our method needs to be extended to the case where the network is directed and some eigenvalues are repeated. In addition, a more detailed error analysis of the eigenvalue shift should be conducted.

CONFLICT OF INTEREST STATEMENT

The authors declare that they have no conflicts of interest.

FUNDING INFORMATION

This research was supported by the Moonshot Research and Development Program JPMJMS2021.

Open Research

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available from the corresponding author upon reasonable request.