Protein structure accuracy estimation using geometry-complete perceptron networks

Review Editor: Nir Ben-Tal

Abstract

Estimating the accuracy of protein structural models is a critical task in protein bioinformatics. Robust methods for the estimation of protein model accuracy (EMA) are especially needed in protein structure prediction, where computationally predicted structures must be rapidly screened both for the reliability of the positions predicted for each of their amino acid residues and for their overall quality. Current EMA methods are either tightly coupled to existing protein structure prediction methods or evaluate protein structures without sufficiently leveraging the rich geometric information available in such structures. In this work, we propose a geometric message passing neural network, the geometry-complete perceptron network for protein structure EMA (GCPNet-EMA), and demonstrate through rigorous computational benchmarks that GCPNet-EMA's accuracy estimations are more than 10% (6%) more correlated with ground-truth measures of per-residue (per-target) structural accuracy than those of baseline state-of-the-art methods for tertiary (multimer) structure EMA, including AlphaFold 2, while requiring 47% less prediction time than the state-of-the-art EnQA-MSA method. The source code and data for GCPNet-EMA are available on GitHub, and a public web server implementation is freely available.

1 INTRODUCTION

Proteins are ubiquitous throughout the natural world, performing a plethora of crucial biological processes. Composed of chains of amino acids, proteins carry out complex tasks throughout the bodies of living organisms, such as digestion, muscle growth, and hormone signaling. As a central notion in protein biology, the amino acid sequence of each protein uniquely determines its structure and, thereby, its function (Sadowski & Jones, 2009). However, understanding how an amino acid sequence folds into a specific 3D protein structure has long been considered a fundamental challenge in protein biophysics (Dill & MacCallum, 2012).

Fortunately, in recent years, computational approaches to predicting the final state of protein folding (i.e., protein structure prediction) have advanced considerably (Jumper et al., 2021), to the degree that many consider the problem of static protein tertiary structure prediction largely addressed (Al-Janabi, 2022). However, relying on computational structure predictions for protein sequence inputs raises a new problem in quality assessment (Kryshtafovych et al., 2019): how is one to estimate the accuracy of a predicted protein structure? Many computational approaches that aim to answer this question have previously been proposed (e.g., Siew et al., 2000; Wallner & Elofsson, 2003; Shehu & Olson, 2010; Uziela et al., 2016; Cao et al., 2016; Olechnovič & Venclovas, 2017; Cheng et al., 2019; Maghrabi & McGuffin, 2020; Yang et al., 2020; Alshammari & He, 2020; Hiranuma et al., 2021; Baldassarre et al., 2021; McGuffin et al., 2021; Lensink et al., 2021; Akdel et al., 2022; Edmunds et al., 2023; Maghrabi et al., 2023). Nonetheless, previous methods for the estimation of protein structural model accuracy (EMA) do not sufficiently utilize, as a direct methodological component, the rich geometric information provided by 3D protein structure inputs. This suggests that EMA methods able to learn expressive geometric representations of 3D protein structures may offer a faster and more effective means of estimating the accuracy of predicted protein tertiary structures.

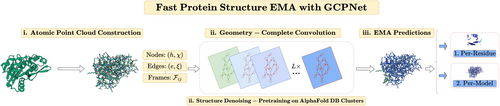

In this work, we introduce a geometric neural network, the geometry-complete perceptron network (GCPNet), for estimating the accuracy of 3D protein structures (an approach we call GCPNet-EMA). As illustrated in Figure 1, GCPNet-EMA receives as its primary input a 3D point cloud, a representation naturally suited to 3D protein structures when they are modeled as graphs whose nodes (i.e., residues represented by their Cα atoms) are positioned in 3D Euclidean space (Morehead et al., 2023). GCPNet-EMA then featurizes such 3D graph inputs as a combination of scalar and vector-valued features, such as the type of a residue and the unit vector pointing from residue $i$ to residue $j$, respectively. Following pretraining on Gaussian-noised cluster representatives from the AlphaFold Protein Structure Database (Jumper et al., 2021; Varadi et al., 2021), GCPNet-EMA applies several layers of geometry-complete graph convolution (i.e., GCPConv) using a collection of node-specific and edge-specific geometry-complete perceptron (GCP) modules to learn an expressive scalar and vector-geometric representation of each 3D graph input (Morehead & Cheng, 2023a). Lastly, using the representations learned during finetuning, GCPNet-EMA predicts for each node (i.e., residue) a scalar structural accuracy value corresponding to its predicted lDDT score (Mariani et al., 2013). A protein structure's global (i.e., per-model) accuracy can then be estimated as the average of its residues' predicted lDDT scores, following previous conventions for EMA (Chen et al., 2023).
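To make the prediction target concrete, the following minimal sketch computes a simplified, Cα-only per-residue lDDT and then averages it into a per-model score, as described above. It assumes the standard 15 Å inclusion radius and the 0.5, 1, 2, and 4 Å tolerances of Mariani et al. (2013); the function names are hypothetical, and this is neither the reference lDDT implementation (which also scores all atoms and applies stereochemical checks) nor GCPNet-EMA's code.

```python
import math
from typing import List, Sequence, Tuple

Coord = Tuple[float, float, float]


def ca_lddt_per_residue(
    model: Sequence[Coord],
    reference: Sequence[Coord],
    inclusion_radius: float = 15.0,
    thresholds: Sequence[float] = (0.5, 1.0, 2.0, 4.0),
) -> List[float]:
    """Simplified Cα-only per-residue lDDT (hypothetical helper, no stereochemistry checks)."""
    if len(model) != len(reference):
        raise ValueError("model and reference must have the same number of residues")
    scores = []
    for i in range(len(reference)):
        total, preserved = 0, 0.0
        for j in range(len(reference)):
            d_ref = math.dist(reference[i], reference[j])
            # Only pairs within the inclusion radius in the reference structure are scored.
            if i == j or d_ref >= inclusion_radius:
                continue
            deviation = abs(math.dist(model[i], model[j]) - d_ref)
            # Fraction of tolerance thresholds under which this distance counts as preserved.
            preserved += sum(deviation < t for t in thresholds) / len(thresholds)
            total += 1
        # Residues with no scored neighbours get NaN rather than an arbitrary value.
        scores.append(preserved / total if total else float("nan"))
    return scores


def per_model_score(per_residue: Sequence[float]) -> float:
    """Global (per-model) score as the mean of the per-residue scores."""
    return sum(per_residue) / len(per_residue)


reference = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
model = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (9.1, 0.0, 0.0)]  # last residue shifted by 1.5 Å
per_residue = ca_lddt_per_residue(model, reference)
print([round(s, 3) for s in per_residue])  # [0.75, 0.75, 0.5]
print(round(per_model_score(per_residue), 3))  # 0.667
```

Shifting one residue by 1.5 Å preserves each affected distance only under the 2 Å and 4 Å tolerances, which is why the shifted residue scores 0.5 while its neighbours keep partial credit from their unaffected pairs.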

2 RESULTS AND DISCUSSIONS

As shown in Table 1, for tertiary structure EMA, GCPNet-EMA without ESM embedding inputs (Lin et al., 2023) outperforms all baseline methods (Jumper et al., 2021; Olechnovič & Venclovas, 2017; Hiranuma et al., 2021; Chen et al., 2023) in terms of per-residue MAE and Pearson's correlation for lDDT prediction. It also achieves per-residue MSE and per-model MAE values competitive with those of EnQA-MSA (Chen et al., 2023), the most recent state-of-the-art method for protein structure EMA. Taken together, GCPNet-EMA offers state-of-the-art lDDT predictions for each residue in a predicted protein structure and competitive per-model predictions overall, with more than 10% greater correlation to ground-truth per-residue lDDT scores than EnQA-MSA. Notably, GCPNet-EMA also outperforms the lDDT estimates produced by AlphaFold 2 (Jumper et al., 2021) in the form of its predicted lDDT (plDDT) scores. These results suggest that GCPNet-EMA should be broadly applicable to a variety of tasks in protein bioinformatics related to local and global tertiary structure EMA. In Figure 2, we show an example protein from the tertiary structure EMA test dataset for which AlphaFold overestimates the accuracy of its predicted structure, whereas GCPNet-EMA's plDDT scores are quantitatively and qualitatively much closer to the ground-truth lDDT values. This improvement is likely due to GCPNet-EMA's large-scale, structure-denoising-based pretraining on the afdb_rep_v4 dataset (Jamasb et al., 2024), a redundancy-reduced, label-free subset of the AlphaFold Protein Structure Database (AFDB) (Jumper et al., 2021; Varadi et al., 2021).

| Method | Per-residue MSE | Per-residue MAE | Per-residue Cor | Per-model MSE | Per-model MAE | Per-model Cor |
|---|---|---|---|---|---|---|
| AF2-plDDT | 0.0173 | 0.0888 | 0.6351 | 0.0105 | 0.0802 | 0.8376 |
| DeepAccNet | 0.0353 | 0.1359 | 0.3039 | 0.0249 | 0.1331 | 0.4966 |
| VoroMQA | 0.2031 | 0.4094 | 0.3566 | 0.1788 | 0.4071 | 0.3400 |
| EnQA | 0.0093 | 0.0723 | 0.6691 | 0.0031 | 0.0462 | 0.8984 |
| EnQA-SE(3) | 0.0102 | 0.0708 | 0.6224 | 0.0034 | 0.0434 | 0.8926 |
| EnQA-MSA | 0.0090 | 0.0653 | 0.6778 | 0.0027 | 0.0386 | 0.9001 |
| GCPNet-EMA (pretraining, plDDT, and ESM) | 0.0106 | 0.0724 | 0.7058 | 0.0031 | 0.0427 | 0.8687 |
| GCPNet-EMA w/o pretraining | 0.0107 | 0.0725 | 0.7048 | 0.0041 | 0.0482 | 0.8097 |
| GCPNet-EMA w/ null plDDT | 0.6672 | 0.8022 | 0.2633 | 0.6305 | 0.7877 | 0.4131 |
| GCPNet-EMA w/ null plDDT and w/o ESM | 0.3342 | 0.5603 | 0.2139 | 0.3207 | 0.5548 | 0.2790 |
| GCPNet-EMA w/o AF2 plDDT | 0.0120 | 0.0759 | 0.6588 | 0.0051 | 0.0514 | 0.7633 |
| GCPNet-EMA w/o pretraining or plDDT | 0.0134 | 0.0803 | 0.6043 | 0.0066 | 0.0606 | 0.6744 |
| GCPNet-EMA w/o ESM embeddingsᵃ,ᵇ | 0.0092 | 0.0648 | 0.7482 | 0.0038 | 0.0420 | 0.8382 |
| GCPNet-EMA w/o plDDT or ESMᵃ | 0.0105 | 0.0707 | 0.7123 | 0.0042 | 0.0461 | 0.8076 |

- Note: Results for methods performing best are listed in bold, and results for methods performing second-best are underlined. Pretraining indicates that a method was pretrained on the 2.3 million tertiary structural cluster representatives of the AFDB (i.e., the afdb_rep_v4 dataset (Jamasb et al., 2024)) via a 3D residue structural denoising objective, in which small Gaussian noise is added to residue positions and a method is tasked with predicting the added noise.

- Abbreviations: Cor, Pearson's correlation coefficient; MAE, mean absolute error; MSE, mean squared error.

- ᵃ A method that was selected for deployment via our publicly available protein model quality assessment server.

- ᵇ A method that is specialized for estimating the quality of AlphaFold-predicted protein structures.
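The error and correlation metrics reported in Table 1 can be computed as in the following sketch. The text does not state how residues are aggregated for the per-residue metrics, so pooling residues across all test decoys is an assumption here, as is the hypothetical `decoys` input format; per-model values compare each decoy's mean predicted lDDT with its mean true lDDT.

```python
import math
from typing import Dict, List, Sequence


def mse(pred: Sequence[float], true: Sequence[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)  # undefined (ZeroDivisionError) for constant inputs


def evaluate(decoys: List[Dict[str, List[float]]]) -> Dict[str, float]:
    """Each decoy holds per-residue 'pred' and 'true' lDDT lists (hypothetical format)."""
    # Per-residue metrics: residues pooled across all decoys (an assumption).
    pred_res = [p for d in decoys for p in d["pred"]]
    true_res = [t for d in decoys for t in d["true"]]
    # Per-model metrics: mean predicted vs. mean true lDDT for each decoy.
    pred_mod = [sum(d["pred"]) / len(d["pred"]) for d in decoys]
    true_mod = [sum(d["true"]) / len(d["true"]) for d in decoys]
    return {
        "res_mse": mse(pred_res, true_res),
        "res_mae": mae(pred_res, true_res),
        "res_cor": pearson(pred_res, true_res),
        "mod_mse": mse(pred_mod, true_mod),
        "mod_mae": mae(pred_mod, true_mod),
        "mod_cor": pearson(pred_mod, true_mod),
    }


decoys = [  # hypothetical predictions that match the ground truth exactly
    {"pred": [0.9, 0.8], "true": [0.9, 0.8]},
    {"pred": [0.5, 0.7], "true": [0.5, 0.7]},
    {"pred": [0.3, 0.6], "true": [0.3, 0.6]},
]
print(evaluate(decoys))
```

Computing both granularities from the same per-residue predictions mirrors the convention above, where the per-model estimate is simply the average of the per-residue estimates.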

Concerning CASP15 multimer structure EMA, Table 2 shows that, compared to four single-model baseline methods (Jumper et al., 2021; Chen et al., 2023, 2023; Olechnovič & Venclovas, 2023), GCPNet-EMA provides the most balanced performance across per-target Pearson's and Spearman's correlation and ranking loss. Its ranking loss is lower than that of AlphaFold 2 plDDT (i.e., AlphaFold-Multimer for multimeric benchmarking) and only marginally higher than that of VoroMQA-dark, whose scores are nonetheless largely uncorrelated with the quality of individual decoys. Note that, in contrast to tertiary structure EMA, for multimer structure EMA we instead assess a method's ability to predict (a quantity correlated with) the TM-score of each decoy of a given protein target. Overall, these results demonstrate that, compared to state-of-the-art single-model multimer EMA methods, GCPNet-EMA offers robust, balanced multimer EMA performance on contemporary real-world EMA benchmarks such as CASP15.

| Method | Cor | SpearCor | Loss |
|---|---|---|---|
| AF2-plDDT | 0.3402 | 0.2641 | 0.1106 |
| DProQA | 0.0795 | 0.0545 | 0.1199 |
| EnQA-MSA | 0.2550 | 0.2378 | 0.1036 |
| VoroIF-GNN-score | 0.0639 | 0.0873 | 0.1342 |
| Average-VoroIF-GNN-residue-pCAD-score | −0.0156 | −0.0326 | 0.1499 |
| VoroMQA-dark | −0.0872 | −0.0119 | 0.0860 |
| GCPNet-EMA | 0.3056 | 0.2567 | 0.0970 |
| GCPNet-EMA w/o ESM embeddings | 0.2592 | 0.1969 | 0.1292 |
| GCPNet-EMA w/o plDDT | 0.0853 | 0.0450 | 0.1337 |

- Note: Results for methods performing best are listed in bold, and results for methods performing second-best are underlined. Note that all versions of GCPNet-EMA benchmarked for multimer structure EMA were pretrained using the AFDB, using the same structural denoising objective investigated in Table 1.

- Abbreviations: Cor, Pearson's correlation coefficient; Loss, ranking loss defined as the target-averaged difference between the TM-score of a method's top-ranked decoy structure and that of the ground-truth top-ranked decoy structure for all decoys corresponding to a given target; SpearCor, Spearman's rank correlation coefficient.
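The per-target metrics defined above can be sketched as follows. The ranking loss follows the definition in the abbreviations note; averaging each target's Pearson and Spearman correlation over targets is our reading of "per-target" correlation and should be treated as an assumption, as should the hypothetical input format and example values.

```python
import math
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    # Spearman's rho is Pearson's r computed on tie-averaged ranks.
    return pearson(average_ranks(x), average_ranks(y))


def ranking_loss(scores: Sequence[float], tm_scores: Sequence[float]) -> float:
    """TM-score of the truly best decoy minus that of the method's top-ranked decoy."""
    top = max(range(len(scores)), key=lambda k: scores[k])
    return max(tm_scores) - tm_scores[top]


def evaluate_targets(targets: List[Dict[str, List[float]]]) -> Dict[str, float]:
    """Average each metric over targets; each target holds decoy 'scores' and true 'tm'."""
    n = len(targets)
    return {
        "cor": sum(pearson(t["scores"], t["tm"]) for t in targets) / n,
        "spear_cor": sum(spearman(t["scores"], t["tm"]) for t in targets) / n,
        "loss": sum(ranking_loss(t["scores"], t["tm"]) for t in targets) / n,
    }


targets = [  # hypothetical predicted scores and true TM-scores for two targets
    {"scores": [0.9, 0.5, 0.7], "tm": [0.6, 0.8, 0.7]},
    {"scores": [0.1, 0.4, 0.3], "tm": [0.5, 0.9, 0.7]},
]
print(evaluate_targets(targets))
```

In this toy example, the first target's top-ranked decoy (TM-score 0.6) misses the best decoy (0.8), contributing a loss of 0.2, while the second target is ranked perfectly, so the target-averaged ranking loss is 0.1. The ranking loss only looks at the top-ranked decoy, which is how a method can achieve a low loss while its scores remain weakly correlated with decoy quality overall.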

For general PDB multimer structure EMA, Table 3 shows that GCPNet-EMA outperforms four single-model baseline methods (Jumper et al., 2021; Chen et al., 2023, 2023; Olechnovič & Venclovas, 2023) in terms of per-target Pearson's and Spearman's correlation and achieves state-of-the-art ranking loss, on which it is tied only with AlphaFold-Multimer plDDT. Notably, even without plDDT as an input feature, GCPNet-EMA still surpasses the Pearson's and Spearman's correlation of DProQA, a recent state-of-the-art method for protein multimer structure EMA. Overall, GCPNet-EMA achieves 6% greater Spearman's correlation with the ground-truth TM-scores of each multimer target's decoys than AlphaFold-Multimer, the second-best-performing method. Given that GCPNet-EMA generalizes from being trained for tertiary structure EMA to being evaluated for multimer structure EMA, these results suggest that it should be useful for a variety of tasks related to the accuracy estimation of multimeric structures.

| Method | Cor | SpearCor | Loss |
|---|---|---|---|
| AF2-plDDT | 0.3654 | 0.2799 | 0.0563 |
| DProQA | 0.1403 | 0.1563 | 0.0816 |
| EnQA-MSA | 0.3303 | 0.2395 | 0.0577 |
| VoroIF-GNN-score | 0.1017 | 0.1213 | 0.0715 |
| Average-VoroIF-GNN-residue-pCAD-score | 0.0483 | 0.0355 | 0.1198 |
| VoroMQA-dark | 0.0099 | 0.1036 | 0.0835 |
| GCPNet-EMA | 0.3756 | 0.2971 | 0.0563 |
| GCPNet-EMA w/o ESM embeddings | 0.2920 | 0.2387 | 0.0799 |
| GCPNet-EMA w/o plDDT | 0.2176 | 0.1973 | 0.1082 |

- Note: Results for methods performing best are listed in bold, and results for methods performing second-best are underlined. Note that all versions of GCPNet-EMA benchmarked for multimer structure EMA were pretrained using the AFDB, using the same structural denoising objective investigated in Table 1.

- Abbreviations: Cor, Pearson's correlation coefficient; Loss, ranking loss defined as the target-averaged difference between the TM-score of a method's top-ranked decoy structure and that of the ground-truth top-ranked decoy structure for all decoys corresponding to a given target; SpearCor, Spearman's rank correlation coefficient.

Lastly, in Table 4, we compare the runtime of GCPNet-EMA with that of EnQA-MSA on the 56 decoys comprising the tertiary structure EMA test dataset referenced in Table 1. GCPNet-EMA reduces the average prediction time by 47% relative to EnQA-MSA (8.1 s vs. 15.3 s), highlighting its real-world utility for integration into modern protein structure prediction pipelines.

| Method | Average prediction time |
|---|---|
| EnQA-MSA | 15.3 s |
| GCPNet-EMA | 8.1 s |

- Note: Results for the fastest method are listed in bold.
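The 47% figure follows from the average prediction times in Table 4 when read as a reduction in runtime; expressed instead as a throughput ratio, the same two numbers correspond to roughly 1.9 times as many predictions per unit time:

```latex
\[
1 - \frac{8.1\,\text{s}}{15.3\,\text{s}} \approx 1 - 0.529 = 0.471 \approx 47\%,
\qquad
\frac{15.3\,\text{s}}{8.1\,\text{s}} \approx 1.89
\]
```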

3 CONCLUSIONS

In this work, we introduced GCPNet-EMA for fast protein structure EMA. Our experimental results demonstrate that GCPNet-EMA offers state-of-the-art (competitive) estimation performance for per-residue (per-model) tertiary structural accuracy measures such as lDDT, while offering fast prediction runtimes through a publicly available web server. Moreover, GCPNet-EMA achieves state-of-the-art PDB multimer structure EMA performance across all metrics (tying AlphaFold-Multimer plDDT for ranking loss) and performs competitively for CASP15 multimer EMA. Consequently, as an open-source software utility, GCPNet-EMA should be widely applicable within protein bioinformatics for understanding the relationship between predicted protein structures and their native counterparts. In future work, we believe it would be worthwhile to explore applications of GCPNet-EMA's accuracy predictions to better understand the presence (or absence) of disordered regions in protein structures and to better characterize the underlying protein dynamics.

4 MATERIALS AND METHODS

In this section, we describe our proposed method, GCPNet-EMA, in greater detail, explaining how it learns geometric representations of protein structure inputs for downstream tasks.

- Property: GCPNets are SE(3)-equivariant, in that 3D rotations and translations applied to their inputs are correspondingly reflected in their vector-valued outputs.

- Property: GCPNets are geometry self-consistent, in that their scalar features remain invariant to 3D rotations.

- Property: GCPNets are geometry-complete, in that they encode direction-robust local reference frames for each node.
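The first two properties can be illustrated numerically on simple edge features of the kind listed in Table 5. This sketch only checks the symmetry definitions on a Euclidean distance (a scalar) and a unit direction vector (a vector) under a rotation about the z-axis followed by a translation; it is not GCPNet's implementation, and all helper names are hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def rotate_z(v: Vec, theta: float) -> Vec:
    """Rotate a 3D vector by angle theta (radians) about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1], v[2])


def rigid_motion(v: Vec, theta: float, shift: Vec) -> Vec:
    """Rotate about z, then translate by `shift` (an element of SE(3))."""
    r = rotate_z(v, theta)
    return (r[0] + shift[0], r[1] + shift[1], r[2] + shift[2])


def edge_features(xi: Vec, xj: Vec) -> Tuple[float, Vec]:
    """Scalar distance and unit direction vector pointing from node i to node j."""
    d = (xj[0] - xi[0], xj[1] - xi[1], xj[2] - xi[2])
    dist = math.sqrt(sum(c * c for c in d))
    return dist, (d[0] / dist, d[1] / dist, d[2] / dist)


xi, xj = (1.0, 2.0, 0.5), (-0.5, 0.3, 2.0)
theta, shift = 0.7, (5.0, -3.0, 1.0)
dist, unit = edge_features(xi, xj)
dist_g, unit_g = edge_features(rigid_motion(xi, theta, shift), rigid_motion(xj, theta, shift))

# Invariance: the scalar feature is unchanged by the rigid motion.
assert math.isclose(dist, dist_g)
# Equivariance: the vector feature rotates with the inputs and ignores the translation.
assert all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(rotate_z(unit, theta), unit_g))
```

Difference vectors are unaffected by translations, which is why the direction feature only needs to track the rotation.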

ALGORITHM 1. GCPNet for estimation of protein structure model accuracy

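1: Input: $(\mathbf{h}_i, \chi_i)$, $(\mathbf{e}_{ij}, \xi_{ij})$, $\mathbf{x}_i$, graph $\mathcal{G}$

2: Initialize $\mathbf{x}_i \leftarrow \mathrm{Centralize}(\mathbf{x}_i)$

3: $\mathcal{F}_{ij} \leftarrow \mathrm{Localize}(\mathbf{x}_i, \mathbf{x}_j)$

4: Project $(\mathbf{h}_i, \chi_i) \leftarrow \mathrm{GCP}((\mathbf{h}_i, \chi_i), \mathcal{F}_{ij})$, $(\mathbf{e}_{ij}, \xi_{ij}) \leftarrow \mathrm{GCP}((\mathbf{e}_{ij}, \xi_{ij}), \mathcal{F}_{ij})$

5: for $l = 1$ to $L$ do

6: $(\mathbf{h}_i, \chi_i), \mathbf{x}_i \leftarrow \mathrm{GCPConv}^{(l)}((\mathbf{h}_i, \chi_i), (\mathbf{e}_{ij}, \xi_{ij}), \mathbf{x}_i, \mathcal{F}_{ij})$

7: end for

8: Project $(\mathbf{h}_i, \chi_i) \leftarrow \mathrm{GCP}((\mathbf{h}_i, \chi_i), \mathcal{F}_{ij})$

9: Output: $\mathbf{h}_i$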

4.1 The geometry-complete perceptron module

GCPNet, as illustrated in Figure 1 and shown in Algorithm 1, represents the features of nodes in its protein graph inputs as a tuple $(\mathbf{h}_i, \chi_i)$ to learn scalar features $\mathbf{h}_i$ jointly with vector-valued features $\chi_i$. Likewise, GCPNet represents the features of edges in its protein graph inputs as a tuple $(\mathbf{e}_{ij}, \xi_{ij})$ to learn scalar features $\mathbf{e}_{ij}$ jointly with vector-valued features $\xi_{ij}$. Hereafter, for brevity, we refer to both node and edge feature tuples as $(\mathbf{s}, \mathbf{V})$. Lastly, GCPNet denotes each node's position in 3D space as a dedicated, translation-equivariant vector feature $\mathbf{x}_i \in \mathbb{R}^{3}$.

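Defining notation for the GCP module. Let $r$ represent an integer downscaling hyperparameter (e.g., 3), and let $\mathcal{F}_{ij} \in \mathbb{R}^{3 \times 3}$ denote the SO(3)-equivariant (i.e., 3D rotation-equivariant) frames constructed using the Localize operation in Algorithm 1, as previously described by Morehead and Cheng (2023a). We then use these local frames to define the GCP encoding process for 3D graph inputs. Specifically, for an optional time index $t$, we define these frame encodings as $\mathcal{F}_{ij}^{t} = (\mathbf{a}_{ij}^{t}, \mathbf{b}_{ij}^{t}, \mathbf{a}_{ij}^{t} \times \mathbf{b}_{ij}^{t})$, with $\mathbf{a}_{ij}^{t} = \frac{\mathbf{x}_i^{t} - \mathbf{x}_j^{t}}{\lVert \mathbf{x}_i^{t} - \mathbf{x}_j^{t} \rVert}$ and $\mathbf{b}_{ij}^{t} = \frac{\mathbf{x}_i^{t} \times \mathbf{x}_j^{t}}{\lVert \mathbf{x}_i^{t} \times \mathbf{x}_j^{t} \rVert}$, respectively. Notably, Morehead and Cheng (2023a, 2023b) show that these frame encodings allow networks that incorporate them to effectively detect, and leverage for downstream tasks, the potential effects of molecular chirality on protein structure.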
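A minimal sketch of such frames, assuming the form $\mathcal{F}_{ij} = (\mathbf{a}_{ij}, \mathbf{b}_{ij}, \mathbf{a}_{ij} \times \mathbf{b}_{ij})$ with $\mathbf{a}_{ij}$ the unit vector along $\mathbf{x}_i - \mathbf{x}_j$ and $\mathbf{b}_{ij}$ the unit vector along $\mathbf{x}_i \times \mathbf{x}_j$. It assumes positions have already been centred, which is what makes the cross product translation-invariant; the `eps` guard for (anti)parallel positions and all helper names are our own assumptions, not details of the GCPNet code. The checks show that the frame rotates with its inputs, whereas a mirror reflection flips the sign of $\mathbf{b}_{ij}$ relative to the reflected frame, which is the sense in which these encodings are chirality-sensitive.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def sub(u: Vec, v: Vec) -> Vec:
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])


def cross(u: Vec, v: Vec) -> Vec:
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def normalize(v: Vec, eps: float = 1e-8) -> Vec:
    # The eps guard returns a (near-)zero vector instead of dividing by zero.
    n = max(math.sqrt(sum(c * c for c in v)), eps)
    return (v[0] / n, v[1] / n, v[2] / n)


def localize(xi: Vec, xj: Vec) -> Tuple[Vec, Vec, Vec]:
    """Frame (a, b, a x b) for edge (i, j); xi and xj are assumed to be centred positions."""
    a = normalize(sub(xi, xj))
    b = normalize(cross(xi, xj))
    return a, b, cross(a, b)


def rotate_z(v: Vec, theta: float) -> Vec:
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1], v[2])


def mirror_x(v: Vec) -> Vec:
    """Reflection through the yz-plane (an improper transformation)."""
    return (-v[0], v[1], v[2])


def close(u: Vec, v: Vec) -> bool:
    return all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(u, v))


xi, xj = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)
a, b, c = localize(xi, xj)
# Rotation: every frame vector rotates with the inputs (SO(3) equivariance).
ar, br, cr = localize(rotate_z(xi, 0.4), rotate_z(xj, 0.4))
assert close(ar, rotate_z(a, 0.4)) and close(br, rotate_z(b, 0.4)) and close(cr, rotate_z(c, 0.4))
# Reflection: a follows the mirror, but b flips sign, so mirror images get distinct frames.
am, bm, _ = localize(mirror_x(xi), mirror_x(xj))
assert close(am, mirror_x(a))
assert close(bm, tuple(-x for x in mirror_x(b)))
```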

To summarize, the GCP module updates tuples of scalar and vector features $(\mathbf{s}, \mathbf{V})$ over a fixed number of iterations to derive rich scalar and vector-valued features, blending both feature types iteratively with the local geometric information provided by the chirality-sensitive frame encodings $\mathcal{F}_{ij}$.

4.2 Learning from 3D protein graphs with GCPNet

In this section, we describe how the GCP module can be used to perform 3D graph convolution with protein graph inputs, as illustrated in Algorithm 1.

4.2.1 A geometry-complete graph convolution layer

4.2.2 Designing GCPNet for estimation of protein structure model accuracy

In the remainder of this section, we discuss GCPNet-EMA, the overall GCPNet-based protein structure EMA algorithm (Algorithm 1).

Line 2 of Algorithm 1 uses the Centralize operation to subtract the center of mass from each node (i.e., Cα atom) position in a protein graph input, ensuring that these positions are invariant to 3D translations for the remainder of the algorithm's execution.
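The Centralize step amounts to subtracting the centroid of the node positions, as in the following sketch. Treating every Cα node as equally weighted (an unweighted mean rather than a mass-weighted one) is an assumption, and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def centralize(positions: Sequence[Vec]) -> List[Vec]:
    """Subtract the (unweighted) centroid so that positions are translation-invariant."""
    n = len(positions)
    centroid = tuple(sum(p[k] for p in positions) / n for k in range(3))
    return [tuple(p[k] - centroid[k] for k in range(3)) for p in positions]


ca = [(1.0, 2.0, 3.0), (3.0, 4.0, 5.0), (5.0, 0.0, 1.0)]
shifted = [(x + 10.0, y - 7.0, z + 0.5) for x, y, z in ca]
# Both inputs map to the same centred coordinates: [(-2, 0, 0), (0, 2, 2), (2, -2, -2)].
print(centralize(ca))
print(centralize(shifted))
```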

Subsequently, the Localize operation on Line 3 constructs translation-invariant and SO(3)-equivariant frame encodings $\mathcal{F}_{ij}$. As described in more detail by Morehead and Cheng (2023a), these frame encodings are chirality-sensitive and direction-robust for edges, making it easier for networks that incorporate them to detect the influence of molecular chirality on protein structure.

Line 4 then uses a GCP module to embed the node and edge feature inputs into initial scalar and vector-valued representations, conditioned on the geometric frame encodings $\mathcal{F}_{ij}$. Thereafter, Lines 5–7 apply $L$ layers of graph convolution iteratively via GCPConv, starting from these initial node and edge embeddings. Importantly, the frame encodings are supplied to every layer, so information derived from the local geometric frames remains directly available to the network throughout, simplifying its synthesis of this geometric information in each layer.

Finally, Lines 8 and 9 conclude the forward pass of GCPNet-EMA by projecting the learned features with a final GCP module and returning the resulting node-specific scalar outputs.

4.2.3 Network outputs

To summarize, GCPNet-EMA receives a 3D graph input with node positions $\mathbf{x}_i$, scalar node and edge features $\mathbf{h}_i$ and $\mathbf{e}_{ij}$, and vector-valued node and edge features $\chi_i$ and $\xi_{ij}$, all of which are listed in Table 5. GCPNet-EMA then predicts SE(3)-invariant scalar node-level properties to estimate the per-residue and per-model accuracy of a given protein structure, thereby avoiding the imposition of an arbitrary 3D reference frame on the model's final predictions.

| Type | Symmetry | Feature name |
|---|---|---|
| Node | Invariant | Residue type |
| Node | Invariant | Positional encoding |
| Node | Invariant | Virtual dihedral and bond angles over the Cα trace |
| Node | Invariant | Residue backbone dihedral angles |
| Node | Invariant | (Optional) residue-wise ESM embeddings |
| Node | Invariant | (Optional) residue-wise AlphaFold 2 plDDT |
| Node | Equivariant | Residue-sequential forward and backward (orientation) vectors |
| Edge | Invariant | Euclidean distance between connected Cα atoms |
| Edge | Equivariant | Directional vector between connected Cα atoms |
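Several of the geometric features in Table 5 can be derived from the Cα trace alone, as in the following sketch. It assumes the virtual bond angle at residue $i$ uses Cα atoms $i-1$, $i$, $i+1$, the virtual dihedral uses four consecutive Cα atoms, and angles are returned in raw radians; how GCPNet-EMA actually indexes or encodes these angles (e.g., via sines and cosines) is not specified above, and all function names are hypothetical. The same dihedral routine applies to backbone N, Cα, and C atoms for the residue backbone dihedral angles.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec = Tuple[float, float, float]


def sub(u: Vec, v: Vec) -> Vec:
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])


def dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def cross(u: Vec, v: Vec) -> Vec:
    return (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])


def unit(v: Vec) -> Vec:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def virtual_bond_angle(p0: Vec, p1: Vec, p2: Vec) -> float:
    """Angle (radians) at p1 between the virtual bonds p1->p0 and p1->p2."""
    cos_angle = dot(unit(sub(p0, p1)), unit(sub(p2, p1)))
    return math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp against rounding error


def virtual_dihedral(p0: Vec, p1: Vec, p2: Vec, p3: Vec) -> float:
    """Signed dihedral angle (radians, in [-pi, pi]) about the p1-p2 axis."""
    b0 = sub(p0, p1)
    b1 = unit(sub(p2, p1))
    b2 = sub(p3, p2)
    # Project the outer bonds onto the plane perpendicular to the central bond.
    v = sub(b0, tuple(dot(b0, b1) * c for c in b1))
    w = sub(b2, tuple(dot(b2, b1) * c for c in b1))
    return math.atan2(dot(cross(b1, v), w), dot(v, w))


def orientation_vectors(ca: Sequence[Vec]) -> List[Tuple[Optional[Vec], Optional[Vec]]]:
    """Per-residue unit vectors to the next (forward) and previous (backward) Cα atom."""
    out = []
    for i in range(len(ca)):
        fwd = unit(sub(ca[i + 1], ca[i])) if i + 1 < len(ca) else None
        bwd = unit(sub(ca[i - 1], ca[i])) if i > 0 else None
        out.append((fwd, bwd))
    return out


trace = [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, -1.0, 0.0)]  # planar zig-zag
print(virtual_bond_angle(*trace[:3]))  # pi / 2
print(virtual_dihedral(*trace))        # pi (trans)
```

Chain termini have no previous or next residue, so this sketch returns `None` for the missing orientation vector; a real featurizer would need some padding convention there.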

4.2.4 Training, evaluating, and optimizing the network

As referenced in Table 6, we trained each GCPNet-EMA model on the tertiary structure EMA cross-validation dataset described in Section 4.3, using its 80%–20% training and validation splits, respectively. For finetuning the afdb_rep_v4-pretrained GCPNet model weights, we performed a grid search for the hyperparameters that optimize a model's performance on the EMA validation dataset, searching over four candidate learning rates and three candidate weight decay rates. The epoch checkpoint that yielded a model's lowest lDDT L1 loss on the tertiary structure EMA validation dataset was then evaluated on the tertiary and multimer structure EMA test datasets described in Section 4.3 for fair comparison with prior methods. Note that we used the same training and evaluation procedure, as well as the same hyperparameters, in our ablation experiments with GCPNet-EMA. Moreover, pretraining was performed using Gaussian-noised afdb_rep_v4 structures, with noised residue atom coordinates defined as $\tilde{\mathbf{x}}_i = \mathbf{x}_i + \sigma \boldsymbol{\epsilon}_i$, where $\boldsymbol{\epsilon}_i \sim \mathcal{N}(\mathbf{0}, \mathbf{I}_3)$ and $\sigma$ denotes the noise scale. Notably, as shown by Zaidi et al. (2023), this corresponds to approximating the Boltzmann distribution with a mixture of Gaussian distributions.
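The denoising pretraining objective (perturb residue coordinates with Gaussian noise, then regress the added noise, as summarized in the note to Table 1) can be sketched as follows. The noise scale `sigma=0.1` is a hypothetical placeholder, regressing the standard-normal noise $\boldsymbol{\epsilon}_i$ rather than $\sigma\boldsymbol{\epsilon}_i$ is an assumption, and the network that would produce the predicted noise is omitted.

```python
import random
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]


def noise_structure(coords: Sequence[Vec], sigma: float, rng: random.Random) -> Tuple[List[Vec], List[Vec]]:
    """Return (noised coordinates, standard-normal noise) with x_tilde = x + sigma * eps."""
    eps = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in coords]
    noised = [tuple(x + sigma * e for x, e in zip(p, n)) for p, n in zip(coords, eps)]
    return noised, eps


def denoising_loss(pred_eps: Sequence[Vec], true_eps: Sequence[Vec]) -> float:
    """Mean squared error between predicted and true noise over all coordinates."""
    sq = [(p - t) ** 2 for pv, tv in zip(pred_eps, true_eps) for p, t in zip(pv, tv)]
    return sum(sq) / len(sq)


rng = random.Random(0)
coords = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
noised, eps = noise_structure(coords, sigma=0.1, rng=rng)  # hypothetical noise scale
# A model would see `noised` and be trained to output `eps`; a perfect prediction gives zero loss.
print(denoising_loss(eps, eps))  # 0.0
```

Because the targets are derived from the structures themselves, this objective needs no accuracy labels, which is what allows pretraining on the label-free afdb_rep_v4 dataset.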

| Specification | Value |
|---|---|
| Number of GCP layers | 6 |
| Number of GCPConv operations per GCP layer | 4 |
| Hidden dimensionality of each GCP/GCPConv layer's embeddings | 128 |
| Optimizer | Adam |
| Learning rate | |
| Weight decay rate | |
| Batch size | 16 |
| Number of trainable parameters | 4 M |
| Pretraining runtime on afdb_rep_v4 (using four 80 GB NVIDIA GPUs) | 5 days |
| Finetuning runtime on the tertiary structure EMA dataset (using one 24 GB NVIDIA GPU) | 8 h |

4.3 Datasets

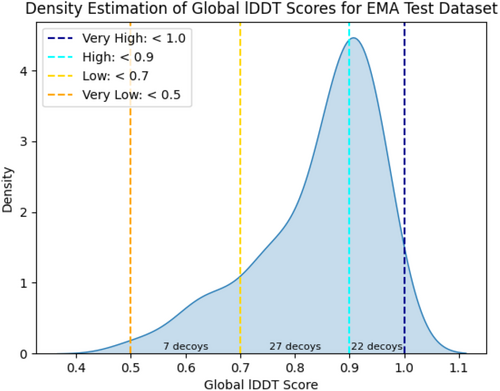

To evaluate the effectiveness of our proposed GCPNet-based EMA method (i.e., GCPNet-EMA) compared to baseline state-of-the-art methods for EMA, we adopted the experimental configuration of Chen et al. (2023). This configuration includes a standardized tertiary structure EMA cross-validation dataset for the training, validation, and testing of machine learning models, a dataset that we make publicly available at https://zenodo.org/record/8150859. As described by Chen et al. (2023), this cross-validation dataset comprises 4940 decoys (3906 targets) for training, 1236 decoys (1166 targets) for validation, and 56 decoys (49 targets) for testing, where the splits are constructed so that no decoy (target) in the training or validation dataset belongs to the same SCOP family (Andreeva et al., 2014) as any decoy in the test dataset. Decoy structures were generated for each protein target using AlphaFold 2 for structure prediction (Jumper et al., 2021). We evaluate each method on the same 56 decoys (49 targets) contained in the test dataset to ensure a fair comparison. As illustrated in Figure 3, these test decoys are predominantly of “high” and “very high” quality (i.e., with lDDT values in the ranges [0.7, 0.9] and [0.9, 1.0], respectively (Jumper et al., 2021; Varadi et al., 2021)), with the seven remaining decoys being of “low” structural accuracy, as determined by an lDDT value in the range [0.5, 0.7]. We argue that evaluating methods in such a test setting is reasonable given that (1) the Continuous Automated Model EvaluatiOn (CAMEO) quality assessment category (Robin et al., 2021) employs a decoy quality distribution similar to that of the EMA test dataset; and (2) most protein structural decoys generated today are produced using high-accuracy methods such as AlphaFold 2.
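The quality bands used to characterize the test decoys can be expressed as a small binning rule. Because the ranges above share their endpoints, assigning each boundary value to the higher band is an assumption, as is the "very low" label for values below 0.5; the example lDDT values are hypothetical, not the actual test set.

```python
from collections import Counter
from typing import Iterable


def quality_band(lddt: float) -> str:
    """Map an lDDT value in [0, 1] to a quality band (lower bounds inclusive, by assumption)."""
    if lddt >= 0.9:
        return "very high"
    if lddt >= 0.7:
        return "high"
    if lddt >= 0.5:
        return "low"
    return "very low"  # below the ranges discussed in the text (assumed label)


def band_counts(values: Iterable[float]) -> Counter:
    """Count how many decoys fall into each quality band."""
    return Counter(quality_band(v) for v in values)


print(band_counts([0.95, 0.91, 0.8, 0.72, 0.6]))  # hypothetical decoy lDDT values
```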

- Sequence length: residues.

- Resolution: Å.

- Number of chains: .

- Hetero-multimer definition: Sequence identity between chains .

- Inter-chain contacts: At least 10 inter-chain residue-residue pairs with a minimum heavy atom distance of Å.

- Sequence similarity to known structures: sequence identity with monomer chains in the PDB prior to April 1, 2022 and no significant template hits (e.g., e-value >1) in the MULTICOM monomer template database (Liu et al., 2023a).

- Redundancy reduction: Clustering of subunits using MMseqs2 with a 0.3 sequence identity threshold, assignment of each hetero-multimer's cluster ID as the combination of its subunits' cluster IDs, and selection of the highest-resolution structure within each hetero-multimer cluster ID.
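The cluster-ID combination and representative selection in the last criterion can be sketched as follows. The chain-to-cluster mapping is assumed to have been obtained beforehand from MMseqs2 clustering at a 0.3 sequence identity threshold (parsing MMseqs2 output is not shown); combining subunit clusters as an order-independent set, which ignores stoichiometry, is an assumption; and the entry IDs are hypothetical placeholders rather than real PDB entries.

```python
from typing import Dict, List, Sequence, Tuple


def complex_cluster_id(chains: Sequence[str], chain_to_cluster: Dict[str, str]) -> Tuple[str, ...]:
    """Combine subunit cluster IDs into one order-independent hetero-multimer cluster ID."""
    return tuple(sorted({chain_to_cluster[c] for c in chains}))


def select_representatives(entries: List[dict], chain_to_cluster: Dict[str, str]) -> Dict[Tuple[str, ...], str]:
    """Keep the highest-resolution (lowest Å value) entry within each hetero-multimer cluster ID."""
    best: Dict[Tuple[str, ...], dict] = {}
    for entry in entries:
        cid = complex_cluster_id(entry["chains"], chain_to_cluster)
        if cid not in best or entry["resolution"] < best[cid]["resolution"]:
            best[cid] = entry
    return {cid: e["id"] for cid, e in best.items()}


entries = [  # hypothetical entries; resolution in Å
    {"id": "entry1", "chains": ["entry1_A", "entry1_B"], "resolution": 2.5},
    {"id": "entry2", "chains": ["entry2_A", "entry2_B"], "resolution": 1.8},
    {"id": "entry3", "chains": ["entry3_A", "entry3_B"], "resolution": 3.0},
]
chain_to_cluster = {  # hypothetical subunit cluster assignments
    "entry1_A": "c1", "entry1_B": "c2",
    "entry2_A": "c2", "entry2_B": "c1",
    "entry3_A": "c1", "entry3_B": "c3",
}
print(select_representatives(entries, chain_to_cluster))
# entry1 and entry2 share cluster ID ("c1", "c2"), so only the higher-resolution entry2 is kept.
```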

This general PDB multimer EMA dataset, characterized by its stringent filtering and focus on recently released hetero-multimers, provides a valuable benchmark for assessing multimer structure EMA methods, particularly for challenging hetero-multimeric complexes. Furthermore, by construction, it minimizes potential overlap with the tertiary structure EMA training and test datasets, allowing for a meaningful assessment of each method's multimer structure EMA performance. Note that the average TM-score of a decoy structure in this dataset is 0.7522, which, as one might expect, is slightly lower than that of the tertiary structure EMA dataset.

To complement the PDB multimer EMA dataset, we compiled a CASP15 multimer EMA test dataset from decoy structures generated by MULTICOM for the CASP15 assembly targets (Liu et al., 2023b). Ten assembly targets (i.e., H1111, H1114, H1135, H1137, H1171, H1172, H1185, T1115o, T1176o, and T1192o) were excluded due to factors such as computational resource limitations, unavailable native structures, or the presence of multiple conformations in the native structures. As a result, this CASP15 MULTICOM multimer EMA dataset comprises 31 assembly targets with an average of 254 decoy structures per target, all generated by AlphaFold-Multimer.

AUTHOR CONTRIBUTIONS

AM and JC conceived the project. AM designed the experiments. AM developed the source code. AM performed the primary experiments and data collection for tertiary structure quality assessment, and JL performed the experiments and data collection for multimer structure quality assessment. AM and JL analyzed the data. JC acquired the funding. AM, JL, and JC wrote the manuscript. AM, JL, and JC reviewed and edited the manuscript.

ACKNOWLEDGMENTS

This work was supported by one U.S. NSF grant (DBI2308699) and two U.S. NIH grants (R01GM093123 and R01GM146340).

CONFLICT OF INTEREST STATEMENT

The authors declare no conflicts of interest.

Open Research

DATA AVAILABILITY STATEMENT

The source code, data, and instructions to train GCPNet-EMA, reproduce our results, or estimate the accuracy of predicted protein structures are freely available at https://github.com/BioinfoMachineLearning/GCPNet-EMA, and a public web server implementation is freely available at http://gcpnet-ema.missouri.edu.