Categorical learning revealed in activity pattern of left fusiform cortex

Abstract

The brain is organized such that it encodes and maintains category information about thousands of objects. However, how learning shapes these neural representations of object categories is unknown. The present study focuses on faces, examining whether (1) enhanced categorical discrimination or (2) feature analysis enhances face/non-face categorization in the brain. Stimuli ranged from non-faces to faces, with two-toned Mooney images used for testing and gray-scale images used for training. The stimulus set was specifically chosen because it has a true categorical boundary between faces and non-faces, but the stimuli surrounding that boundary have very similar features, making the boundary harder to learn. Brain responses were measured using functional magnetic resonance imaging while participants categorized the stimuli before and after training. Participants were trained either with a categorization task or with a non-categorical semblance analysis task. Interestingly, when participants were categorically trained, the neural activity pattern in the left fusiform gyrus shifted from a graded representation of the stimuli to a categorical representation. This corresponded with categorical face/non-face discrimination, critically including both an increase in selectivity to faces and a decrease in false alarm response to non-faces. By contrast, while the activity pattern in the right fusiform cortex correlated with face/non-face categorization prior to training, it was not affected by learning. Our results reveal the key role of the left fusiform cortex in learning face categorization. Given the known right hemisphere dominance for face-selective responses, our results suggest a rethink of the relationship between the two hemispheres in face/non-face categorization. Hum Brain Mapp 38:3648–3658, 2017. © 2017 Wiley Periodicals, Inc.

INTRODUCTION

We do not perceive a “blooming, buzzing confusion,” but an orderly world composed of discrete objects [James, 1890]. The human visual system's ability to organize the world manifests itself in categorical, perceptual judgments (e.g., two patterns with very similar low-level attributes might nevertheless be perceived as members of different categories and hence as very distinct objects) [see Goldstone, 1994]. These judgments happen very quickly and accurately [Thorpe et al., 1996]. Moreover, category information (e.g., a scene containing an animal) is sufficient for participants to correctly identify a target image presented for only 125 ms in a succession of images, demonstrating how object categorization facilitates the instantaneous extraction of scene meaning [Potter, 1975]. Corresponding to this remarkable behavioral ability, multiple regions in the human ventral temporal cortex have been identified as selectively responsive to object categories [e.g., Kanwisher, 2010; Op de Beeck et al., 2006]. Interestingly, whereas averaged responses in these areas are positive to individual categories, the more intricate, multi-voxel patterns encode both preferential and non-preferential category information [Connolly et al., 2012; Guo and Meng, 2015; Haxby et al., 2001].

Learning shapes how the brain responds to different object categories [Folstein et al., 2013; Freedman et al., 2001; Jiang et al., 2007; Op de Beeck et al., 2006]. For example, functional magnetic resonance imaging (fMRI) of the brain before and after learning a novel object revealed changes in the spatial distribution of activity across the entire visual cortex [Op de Beeck et al., 2006]. Moreover, averaged fMRI activity in the lateral occipital cortex is modulated by categorical learning of car morphs regardless of task relevance [Jiang et al., 2007]. Interestingly, another study using car morphs found plasticity in averaged fMRI activity in the hippocampus, prefrontal cortex, and superior temporal gyrus after learning, suggesting that plasticity in these brain regions may underlie learning of visual features important for discriminating car types [Folstein et al., 2013]. Conversely, converging evidence from fMRI and single-unit recording suggests that the involvement of the prefrontal cortex in learning is task specific [Freedman et al., 2001; Jiang et al., 2007]. In sum, it remains unclear what mechanisms may underlie how learning shapes brain areas selective for natural object categories.

Most notably, an area in the lateral fusiform gyrus has been hypothesized to be selectively responsive to images of faces, a domain that is critical for human social behaviors (the fusiform face area, FFA) [see Kanwisher et al., 1997]. Moreover, the left and right FFA encode faces differently [Kanwisher and Yovel, 2006; Meng et al., 2012; Rangarajan et al., 2014; Rossion et al., 2000]. When participants passively view a set of stimuli ranging from non-faces to faces, the multi-voxel pattern in the right FFA (rFFA) encodes the stimulus category while the pattern in the left FFA (lFFA) encodes presence of facial features (i.e., the more features present, the higher the response) [Meng et al., 2012]. Thus, it is possible that learning can shape the multi-voxel patterns in the left and right FFAs to improve the categorization of face/non-face through categorical learning (rFFA representation) and/or face feature learning (lFFA representation). Categorical learning involves actively learning to differentiate categorical differences in stimuli with similar low-level features [see Folstein et al., 2013; Jiang et al., 2007; Liberman et al., 1957]. Face feature learning, conversely, involves attending to the presence of face features in each stimulus and assessing how face-like they are. The present study aims to test these possibilities of how the left and right FFA activity may be shaped by categorical learning and/or face feature learning.

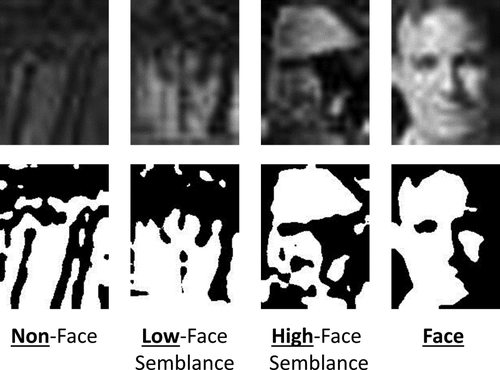

Human adults are so adept at face processing that detecting any neural effects of improvement for face categorization has been difficult. To overcome this challenge, we used the stimulus set from Meng et al. [2012]. This set contains natural pictures that range in facial likeness from non-faces to faces (the stimuli were separated into four groups: no face semblance non-face stimuli, low-face semblance non-face stimuli, high-face semblance non-face stimuli, and genuine faces; see Fig. 1). Moreover, this graded increase in facial semblance does not rely on morphing [see Folstein et al., 2013; Freedman et al., 2001, 2010; Jiang et al., 2007], providing a naturally occurring categorical boundary between faces and non-faces. To make categorization of the stimuli more difficult, the set was further manipulated into degraded, Mooney images [Mooney, 1957]. Mooney images are two-toned images with low-level diagnostic features completely removed, hindering recognition of object content. Mooney images have an advantage in learning paradigms because increased signal change and facilitated pattern recognition have been shown to occur in the processing of Mooney images only after participants learn the object content of the images [Dolan et al., 1997; Gorlin et al., 2012; Latinus and Taylor, 2005]. In the present study, participants were trained with the original stimulus set and tested with the degraded, Mooney pictures to assess learning without the influence of low-level visual information. We hypothesize that if categorical learning underlies improved face/non-face categorization, we would find a change in multi-voxel activity after categorical learning. As the rFFA already encodes the face/non-face categorical boundary [Meng et al., 2012], we hypothesize that this effect of learning/change would be found in the lFFA. However, if face feature learning underlies improved face categorization, then we hypothesize that the change in multi-voxel activity would occur in the rFFA, for all participants who were trained with face features.

Figure 1. Examples of the different stimulus conditions in both gray-scale (top row) and two-toned, Mooney images (bottom row). Note that only the face condition contains actual human faces.

METHODS

Participants

Twenty-four participants (11 females, 2 left-handed, median age of 25) volunteered for the experiment. All participants had normal or corrected-to-normal visual acuity. Participants were compensated for their time. All participants gave written, informed consent and were debriefed on the purpose of the study immediately following completion. These procedures were approved by the Committee for the Protection of Human Subjects at Dartmouth College and conducted in accordance with the 1964 Declaration of Helsinki.

Materials and Procedure

A set of 300 gray-scale images was used in this study. These images were compiled previously [see Meng et al., 2012] and contained four types of images: 60 non-face images with no facial semblance, 90 non-face images with low facial semblance, 90 non-face images with high facial semblance, and 60 faces. The 180 non-face images with some face semblance were falsely categorized as faces by a face detection system (Pittsburgh Pattern Recognition System). Next, these images were rated for how face-like they were: participants were shown two random images from the set and asked to choose the one that was more face-like. With these behavioral data, Elo ratings were calculated, and the 180 non-face images with some face semblance were split into low face semblance and high face semblance groups accordingly. The set was manipulated into two-toned, Mooney images using MATLAB with the SHINE toolbox [Willenbockel et al., 2010]. To accomplish this, a median luminance value was calculated for each image. Next, all pixels of the image with the median luminance value or higher were changed to white, and all other pixels were changed to black. The Mooney set was evenly divided into two subsets with equal numbers of no face-semblance, low face-semblance, high face-semblance, and genuine face images. Participants were tested with one subset (150 Mooney images) in the MRI scanner before learning and the other subset (the other 150 Mooney images) in the MRI scanner after learning. Which subset was tested first was counterbalanced across participants.
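The median-luminance thresholding described above can be sketched in a few lines. This is only an illustration in plain Python, not the MATLAB/SHINE code used in the study; the function name `to_mooney` and the nested-list image format are assumptions made for the example.

```python
from statistics import median


def to_mooney(image):
    """Binarize a gray-scale image at its own median luminance.

    `image` is a list of rows of luminance values (hypothetical format).
    Pixels at or above the median become white (255); all others black (0).
    """
    threshold = median(p for row in image for p in row)
    return [[255 if p >= threshold else 0 for p in row] for row in image]


# Toy 3 x 3 "image"; its median luminance is 50.
toy = [[10, 20, 30],
       [40, 50, 60],
       [70, 80, 90]]
mooney = to_mooney(toy)
for row in mooney:
    print(row)
```

Because the threshold is each image's own median, roughly half of the pixels end up white regardless of the image's overall brightness.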

Experimental fMRI scan runs consisted of a slow, event-related design. Each trial contained a Mooney image presented for 2 s followed by 12 s of a fixation cross to allow the blood oxygen level dependent (BOLD) response to return to baseline. Each run started with a 12 s fixation-only period and consisted of 30 trials (in total 432 s long), such that five experimental runs were used to show participants all 150 of the images in a randomized order.
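As a quick check of the run timing, the arithmetic can be written out explicitly. The constant names below are illustrative; the values come from the design described above and the 2 s TR listed in the acquisition parameters.

```python
TR_S = 2                 # repetition time in seconds (acquisition parameters)
INITIAL_FIXATION_S = 12  # fixation-only period at the start of each run
STIMULUS_S = 2           # Mooney image duration per trial
FIXATION_S = 12          # fixation cross after each image
TRIALS_PER_RUN = 30
N_RUNS = 5

trial_s = STIMULUS_S + FIXATION_S
run_s = INITIAL_FIXATION_S + TRIALS_PER_RUN * trial_s
volumes_per_run = run_s // TR_S
images_shown = TRIALS_PER_RUN * N_RUNS

print(run_s, volumes_per_run, images_shown)  # 432 216 150
```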

In total, the experimental procedure contained three parts. Participants were randomly assigned to one of the two groups (categorical learning or face feature learning). First, participants viewed a subset of 150 Mooney images in the scanner. These images were completely novel to the participants. Participants were instructed to use their right hand to press the left button of an MRI-compatible response button-box if the image was a face and the right button if the image was not a face. Next, outside of the MRI scanner, participants completed five days of behavioral training with the gray-scale images. During training, gray-scale images were shown for 300 ms followed by a noise mask. The categorical group was instructed to categorize each image as a face or not a face, corresponding to how, hypothetically, the rFFA encodes these images [Kanwisher et al., 1997; Meng et al., 2012], and received feedback for incorrect responses (a non-face response to a face stimulus or a face response to any of the three levels of face-like, non-face images). The face-feature group was instructed to assess the amount of facial features contained in each image and report this on a Likert scale of 1–5, with no feedback. The feature task was used to ensure the participants were attending to the facial aspects of each image, corresponding to how, hypothetically, the lFFA encodes these images [Meng et al., 2012]. Each day, subjects went through the entire stimulus set three times. Lastly, the second MRI scan was conducted with the same procedure as the first, using the remaining subset of Mooney images.

Additionally, separate fMRI runs were conducted to localize the left and right FFAs of each participant [Kanwisher et al., 1997]. These were block-designed runs, consisting of gray-scale face and house images independent of any stimuli used in the experimental runs. Blocks contained faces or houses shown every second for 16 s, with one or two images repeated consecutively during the block. Participants were instructed to press a button when this repetition occurred to make sure they were attending to the stimuli. The face and house blocks alternated. In each run, five face blocks and five house blocks were presented interleaved with 16 s fixation-only periods. Each MRI session contained two of the ROI localizer runs.

Data Acquisitions

MRI data were acquired at the Dartmouth Brain Imaging Center with a 3.0 T Philips Achieva Intera scanner using a 32-channel head coil. The BOLD signal was collected using an echo-planar imaging (EPI) sequence (TR = 2,000 ms, TE = 35 ms, flip angle = 90°, FOV = 240 mm, voxel size = 3 × 3 × 3 mm, 35 slices). A high-resolution magnetization-prepared rapid acquisition gradient echo anatomical scan was acquired for each subject (TR = 8.2 ms, TE = 3.8 ms, flip angle = 8°, FOV = 240 mm, voxel size = 1 × 1 × 1 mm, 222 slices). Stimuli for the EPI scans were presented on a screen located at the back of the scanner via an LCD projector (Panasonic PT-D4000U, 1,024 × 768 pixel resolution) using MATLAB 2011b with Psychtoolbox [Brainard, 1997]. Participants viewed the screen through a mirror placed within the head coil.

Data Analysis

Analyses of variance (ANOVA) were performed on behavioral accuracy data and reaction times from the MRI sessions with stimulus type (face, high-face semblance, low-face semblance, and no-face semblance) and session as within-subject factors and type of learning (categorical or feature) as the between-subjects factor. Moreover, reaction times and accuracy from categorical training sessions as well as reaction times and ratings from feature training sessions were analyzed using 2-way ANOVAs with stimulus type and session as factors, to investigate how responses to each stimulus type changed across training sessions outside the MRI scanner.

SPM 8 (http://www.fil.ion.ucl.ac.uk/spm/) was used for preprocessing the MRI data. EPIs were motion corrected based on the image acquired directly before the anatomical scan, and the slice timing of each acquisition was corrected. For the univariate analysis of averaged BOLD responses, the data were also spatially smoothed with a 5-mm full width at half maximum filter. The EPI runs were then aligned to the anatomical scan and normalized into the Montreal Neurological Institute template, changing the voxel size to 2 × 2 × 2 mm³.

Data from the ROI localizer scans underwent a General Linear Model analysis, which calculated the beta values associated with each stimulus condition (face vs. house). The FFAs were localized and defined as contiguous voxels in the left and right fusiform gyri that were more responsive to faces than to houses using statistical contrasts (maximum P < 10⁻⁴, uncorrected). To control for any potential confounding effects of ROI size, the left and right FFAs were localized with roughly the same number of voxels (∼80 voxels) for each participant. Thus, the right FFA was identified in 23 participants, and the left FFA was identified in 19 participants. Using this contrast, the left and right occipital face areas (OFAs) were also localized. Moreover, by contrasting houses versus faces, the left and right parahippocampal place areas (PPAs) and the left and right lateral occipital complex (LOC) were localized to test effects of learning in these areas (see Table 1 for ROI information).

| Region of interest | # of subjects (n = 24) | X | Y | Z |
|---|---|---|---|---|
| Right FFA | 23 | 41.5 ± 3.3 | −52.9 ± 6.8 | −18.9 ± 3.3 |
| Left FFA | 19 | −39.9 ± 2.7 | −52.9 ± 6.8 | −18.8 ± 2.9 |
| Right OFA | 15 | 46.7 ± 5.6 | −71.4 ± 4.8 | −3.5 ± 6.5 |
| Left OFA | 15 | −45.7 ± 7.1 | −74 ± 8 | −5.7 ± 7.9 |
| Right PPA | 16 | 29.1 ± 3.4 | −46.1 ± 3.5 | −9 ± 3.1 |
| Left PPA | 16 | −28 ± 3.6 | −47.4 ± 2.9 | −7.8 ± 2.3 |
| Right LOC | 16 | 36.6 ± 5.9 | −65.3 ± 4.6 | −11.1 ± 4.7 |
| Left LOC | 16 | −39.3 ± 6.4 | −66.8 ± 6.1 | −8.3 ± 4.8 |
| Searchlight | 24 | 42 | −56 | −18 |

- In the last row, results from the searchlight analysis are reported. The searchlight analysis was conducted with MVPA using voxels extracted from a spherical searchlight with a three-voxel radius (123 voxels in each searchlight, including the central voxel). The searchlight moved throughout each participant's gray matter-masked data using PyMVPA [Hanke et al., 2009]. Classification accuracy maps for faces versus non-faces with no facial semblance were created from the searchlight for both before and after learning. In a paired t-test between the accuracy maps from the two sessions, the ROI reported in the last row was the only area that survived at P < 0.0001, uncorrected.

For the analysis of the experimental runs, fMRI activity in the left and right FFAs was extracted. Both averaged fMRI activity and multi-voxel activation patterns within the left and right FFAs were sorted by stimulus type (i.e., non-face, low-face semblance, high-face semblance, and real faces). Consistent with previous reports, the average fMRI response peaked at around 2 TRs (4 s) after stimulus onset, so we chose this TR for all further analyses [Aguirre et al., 1998; Kwong et al., 1992; Miezin et al., 2000].

First, multi-voxel pattern analysis (MVPA) was performed using PyMVPA [Hanke et al., 2009]. MRI activity from each voxel in each ROI was extracted and normalized (z-score) to remove any effects of overall signal intensity while maintaining multivariate response patterns. Voxel patterns from the face and no-face semblance conditions in the left and right FFAs of each participant for each session were used to train support vector machines (SVMs) using a leave-one-run-out cross-validation procedure. Next, the trained SVMs were tested on the voxel patterns from the low and high-face semblance conditions to evaluate how frequently those ambiguous conditions would be falsely categorized as faces. The percentage of each condition categorized as a face was used for subsequent statistical analyses. Specifically, a 4-way mixed ANOVA was conducted using stimulus type, session, and hemisphere as within-subject factors and learning type as the between-subject factor. Next, the data were sorted by learning type (categorical vs. feature), and 3-way ANOVAs were conducted using stimulus type, session, and hemisphere as factors. These ANOVAs were performed using the two critical conditions that sit across the categorical boundary to differentiate face and non-face (high-face semblance vs. face) in both hemispheres.
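The train-on-extremes, test-on-all-conditions logic above can be illustrated with a small sketch. It makes several loud assumptions: the data are synthetic, NumPy is used instead of PyMVPA, and a nearest-centroid rule stands in for the SVM purely to keep the example dependency-light. The sizes, condition labels, and the choice to score every condition in the held-out run are hypothetical and are not taken from the study.

```python
import numpy as np

rng = np.random.default_rng(0)
N_RUNS, N_VOXELS, TRIALS = 5, 30, 6          # hypothetical sizes
CONDITIONS = ["none", "low", "high", "face"]

# Synthetic data only: each condition mixes a fixed template with noise.
template = np.tile([1.0, -1.0], N_VOXELS // 2)
strength = {"none": -1.0, "low": -0.5, "high": 0.5, "face": 1.0}


def zscore(patterns):
    """Z-score each trial pattern across voxels (rows are trials)."""
    mean = patterns.mean(axis=1, keepdims=True)
    std = patterns.std(axis=1, keepdims=True)
    return (patterns - mean) / std


# data[condition][run] is a (trials, voxels) array of z-scored patterns.
data = {
    c: [zscore(strength[c] * template
               + rng.normal(0.0, 0.3, (TRIALS, N_VOXELS)))
        for _ in range(N_RUNS)]
    for c in CONDITIONS
}


def fraction_called_face(data):
    """Leave-one-run-out: fit on face vs. no-semblance trials from the
    training runs, then label every condition in the held-out run."""
    calls = {c: [] for c in CONDITIONS}
    for held_out in range(N_RUNS):
        train = [r for r in range(N_RUNS) if r != held_out]
        face_c = np.vstack([data["face"][r] for r in train]).mean(axis=0)
        none_c = np.vstack([data["none"][r] for r in train]).mean(axis=0)
        for c in CONDITIONS:
            test = data[c][held_out]
            d_face = np.linalg.norm(test - face_c, axis=1)
            d_none = np.linalg.norm(test - none_c, axis=1)
            calls[c].extend(d_face < d_none)   # True = labeled "face"
    return {c: float(np.mean(calls[c])) for c in CONDITIONS}


result = fraction_called_face(data)
print(result)
```

The quantity of interest is the per-condition fraction of trials labeled as faces; in the study this was computed per ROI, session, and participant and then entered into the ANOVAs.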

For comparison, a univariate analysis of averaged BOLD contrasts was also conducted. The percent signal change across all voxels in the right and left FFA for each condition was calculated for sessions one and two of every participant, using the averaged activity of the two TRs before stimulus onset (−4 and −2 s) for each trial as the baseline. We focused on the high-face semblance non-face stimuli and face stimuli in this analysis as well. A 4-way ANOVA was conducted using stimulus type, session, and hemisphere as within-subject factors and learning type as the between-subject factor. Next, the data were sorted by learning type, and 3-way ANOVAs were conducted using stimulus type, session, and hemisphere as within-subject factors.
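The baseline-relative percent signal change can be sketched as follows. The formula used here, 100 × (peak − baseline) / baseline, is the conventional definition and is an assumption, since the text does not spell it out; the function name and toy time course are hypothetical.

```python
from statistics import mean


def percent_signal_change(timecourse, onset, peak_lag=2, n_baseline=2):
    """Percent signal change at `onset + peak_lag` TRs, relative to the
    mean of the `n_baseline` TRs immediately before stimulus onset."""
    baseline = mean(timecourse[onset - n_baseline:onset])
    peak = timecourse[onset + peak_lag]
    return 100.0 * (peak - baseline) / baseline


# Toy ROI-averaged time course (arbitrary units); stimulus onset at index 2.
roi_signal = [1000.0, 1000.0, 1002.0, 1008.0, 1015.0, 1009.0, 1003.0]
psc = percent_signal_change(roi_signal, onset=2)
print(round(psc, 2))  # 1.5
```

With a 2 s TR, `n_baseline=2` corresponds to the samples at −4 and −2 s, and `peak_lag=2` to the sample 4 s after onset.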

To further examine whether Mooney face categorical learning may have occurred in any other brain areas, we also conducted a whole brain searchlight analysis [Kriegeskorte et al., 2006]. MVPA was performed using voxels extracted from a spherical searchlight with a three-voxel radius (123 voxels in each searchlight, including the central voxel). The SVM was trained and tested on the face stimuli and the non-face stimuli with no facial semblance. The searchlight moved throughout each participant's gray matter-masked data using PyMVPA [Hanke et al., 2009]. Whole brain classification accuracy maps for faces versus non-faces with no facial semblance were created for both before and after learning. Using a paired t-test between the accuracy maps from the two sessions, no areas except the right FFA were found to be significantly different at P < 0.0001, uncorrected (see Table 1 for coordinates). Note that the searchlight analysis was based on comparing the face and no-facial-semblance non-face conditions to localize potential additional ROIs. Learning effects were not compared between the categorical group and the feature group at this step of the analysis. Because the right FFA had already been localized through univariate GLM analysis, the searchlight analysis did not yield any extra regions to add to our current ROIs.
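The figure of 123 voxels per searchlight follows from counting the grid points that lie within three voxels of the center. A minimal sketch of that count in plain Python (the helper name `sphere_offsets` is made up for the example and is not PyMVPA's API):

```python
from itertools import product


def sphere_offsets(radius):
    """Integer voxel offsets whose Euclidean distance from the center
    is at most `radius` (in voxel units)."""
    r = range(-radius, radius + 1)
    return [(x, y, z) for x, y, z in product(r, repeat=3)
            if x * x + y * y + z * z <= radius * radius]


offsets = sphere_offsets(3)
print(len(offsets))  # 123
```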

RESULTS

Behavioral Results

Accuracy ranged from 79% to 99% correct before learning and 73% to 100% correct after learning. The 3-way ANOVA on the behavioral accuracy data revealed a significant main effect of stimulus type (F(3,66) = 24.73, P < 8.7 × 10⁻¹¹, ηp² = 0.53) as well as significant interactions between session and group (F(1,22) = 5.21, P = 0.032, ηp² = 0.19), stimulus type and group (F(3,66) = 8.04, P = 1.0 × 10⁻⁴, ηp² = 0.27), and session, stimulus type, and group (F(3,66) = 17.04, P = 3.7 × 10⁻⁸, ηp² = 0.44). The 3-way ANOVA on reaction time data revealed significant main effects of session (F(1,22) = 4.53, P = 0.04, ηp² = 0.17) and stimulus type (F(3,66) = 7.6, P = 0.0002, ηp² = 0.26) as well as significant interactions between session and group (F(1,22) = 12.03, P = 0.002, ηp² = 0.35), session and stimulus type (F(3,66) = 2.92, P = 0.04, ηp² = 0.12), and session, stimulus type, and group (F(3,66) = 7.36, P = 0.0003, ηp² = 0.25).
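The effect sizes reported next to each F statistic are numerically consistent with partial eta squared, which can be recovered from F and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The short check below applies this to the accuracy ANOVA values quoted above; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported effect size) from the accuracy ANOVA.
reported = [
    (24.73, 3, 66, 0.53),   # stimulus type
    (5.21, 1, 22, 0.19),    # session x group
    (8.04, 3, 66, 0.27),    # stimulus type x group
    (17.04, 3, 66, 0.44),   # session x stimulus type x group
]
for f_value, df1, df2, value in reported:
    computed = round(partial_eta_squared(f_value, df1, df2), 2)
    print(f_value, computed, value)
```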

For the categorical group, a further 2-way ANOVA revealed that reaction times became significantly faster for the non-face conditions (session: F(1,11) = 18.09, P = 0.001, ηp² = 0.62; stimulus type: F(3,33) = 6.09, P = 0.002, ηp² = 0.36; session × stimulus interaction: F(3,33) = 8.41, P = 0.0003, ηp² = 0.43), but not for the face condition. By contrast, for the feature group, behavioral reaction times did not change between sessions and no significant results were found (F < 2.2, P > 0.1).

For the training trials with gray-scale pictures outside of the scanner, analysis of the categorical training accuracy revealed an improvement in categorizing faces and non-faces with low and high facial semblance (session: F(4,44) = 13.64, P = 2.6 × 10⁻⁷, ηp² = 0.55; stimulus type: F(3,33) = 74.528, P = 9.0 × 10⁻¹⁵, ηp² = 0.87; session × stimulus interaction: F(12,132) = 2.873, P = 0.002, ηp² = 0.21). However, accuracy for each stimulus type was above 95% even in the first training session, and all non-face stimuli were categorized with over 99% accuracy by the second training session, suggesting a possible ceiling effect. Analysis of feature training showed that ratings of the stimuli did not significantly change across sessions (session: F(4,44) = 0.172, P = 0.95, ηp² = 0.02; stimulus type: F(3,33) = 343.41, P < 1.0 × 10⁻¹⁵, ηp² = 0.97).

Reaction times during categorical training decreased for all stimulus types across the five sessions (session: F(4,44) = 16.18, P = 3.0 × 10⁻⁸, ηp² = 0.60), with reaction time for faces being slightly longer than for non-faces during each session (stimulus type: F(3,33) = 3.5, P = 0.026, ηp² = 0.24). Also, reaction times for feature ratings became significantly faster across sessions (session: F(4,44) = 33.33, P = 8.4 × 10⁻¹³, ηp² = 0.75).

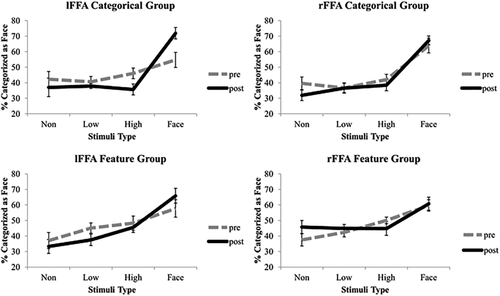

Changes of Multivoxel Activity Patterns in the Left FFA Underlie Categorical Learning

Multivoxel patterns in the left and right FFAs were used, respectively, to decode whether a face or non-face was seen in each trial. Percentages of trials that were classified as faces by the MVPA are plotted as a function of face-semblance levels (non-face, low face-semblance, high face-semblance, and face) in Figure 2. The 4-way ANOVA revealed significant interactions between session and stimulus type (F(1,17) = 15.93, P = 0.001, ηp² = 0.484), session, stimulus type, and hemisphere (F(1,17) = 7.32, P = 0.015, ηp² = 0.301), and session, stimulus type, hemisphere, and group (F(1,17) = 8.15, P = 0.011, ηp² = 0.324). Moreover, the 3-way ANOVA revealed a significant hemispheric asymmetry in the categorical group (hemisphere × session × stimulus type: F(1,9) = 15.73, P = 0.003, ηp² = 0.636), but not in the feature group (F(1,8) = 0.011, P = 0.918). To further investigate how the representation of the stimuli was changed in the left and right FFAs, post hoc t-tests were performed between face stimuli and all three non-face stimulus types. For the lFFA, in session 1, t-tests revealed that categorization of the face stimuli was not significantly different from that of any of the non-face stimuli. However, in session 2, the face condition was significantly different from all non-face stimulus categories (Face vs. high face semblance t(9) = 10.54, P < 0.001, Cohen's d = 3.36; Face vs. low face semblance t(9) = 7.87, P < 0.001, Cohen's d = 4.03; Face vs. no face semblance t(9) = 4.15, P = 0.003, Cohen's d = 2.37; Bonferroni adjusted alpha = 0.008), while the non-face stimulus categories were not significantly different from each other (t < 0.2, P > 0.49). For the rFFA, both session 1 and 2 t-tests revealed categorization of face stimuli to be significantly better than non-face stimuli (t > 4, P < 0.008). These results reveal a significant effect of learning in the left FFA activation patterns but not in the right FFA activation patterns for face/non-face categorization, suggesting an important role of left FFA plasticity in face category learning.
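The adjusted alpha of 0.008 quoted above is what a Bonferroni correction yields if one assumes a family-wise level of 0.05 spread over all six pairwise comparisons among the four stimulus conditions. Both the 0.05 level and the count of six are assumptions for this check, since neither is stated explicitly in the text.

```python
from math import comb

FAMILY_ALPHA = 0.05                      # assumed conventional level
n_conditions = 4                         # none, low, high, face
n_comparisons = comb(n_conditions, 2)    # all pairwise comparisons
adjusted_alpha = FAMILY_ALPHA / n_comparisons

print(n_comparisons, round(adjusted_alpha, 3))  # 6 0.008
```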

Figure 2. Results of MVPA. Percentage of trials in each stimulus condition that were categorized as faces using SVMs trained on activation patterns corresponding to the face condition and the non-face condition is shown for the left FFA (left side) and right FFA (right side) for both the categorical group (top) and the face feature group (bottom) for pre and post learning. Error bars = ± SE across subjects.

The results did not differ when the two left-handed subjects were excluded from the analysis. Categorical learning effects in other face-selective areas cannot be fully ruled out because only Mooney face images were examined. However, consistent with the results of a whole brain searchlight analysis (see Methods), none of the other ROIs tested (OFA, PPA, LOC) showed any significant effects of learning in the present study.

Changes of Averaged Cortical Response

For comparisons, the univariate-averaged fMRI responses are shown in Figure 3. Both the left and right FFAs responded increasingly to the Mooney stimuli from non-faces to genuine faces in a similar manner. A 4-way ANOVA revealed a main effect of hemisphere (F(1,17) = 6.18, P = 0.024, $\eta_p^2$ = 0.267) as well as a marginally significant interaction between hemisphere and stimulus type (F(1,17) = 4.13, P = 0.058, $\eta_p^2$ = 0.196). However, when the data were broken down by group, the effects of hemisphere were not significant. A 3-way ANOVA on the categorical group data revealed a significant interaction between session and stimulus type (F(1,9) = 13.77, P = 0.005, $\eta_p^2$ = 0.605), showing that both the right and left FFAs suppressed responses to non-face stimuli with high facial semblance and increased responses to face stimuli. By contrast, the 3-way ANOVA performed on the data from the feature group did not show any significant effects of session. These results suggest that what we found using MVPA was not merely driven by the overall averaged BOLD response. MVPA has been shown to provide better statistical sensitivity than the univariate analysis of averaged BOLD responses for examining object category representations [Coutanche, 2013; Haxby et al., 2001; Mur et al., 2008]. Moreover, these results are consistent with the previous report that the asymmetry between the left and right FFAs was revealed more evidently through MVPA than through univariate analysis [Meng et al., 2012].
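The effect-size values that accompany the F statistics above (0.267, 0.196, 0.605) are consistent with partial eta squared, which for a single effect can be recovered from the F value and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The following minimal sketch illustrates that relationship; it assumes the reported values are partial eta squared, and the function name is hypothetical rather than part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, effect size as reported in the text)
reported = [
    ("hemisphere main effect", 6.18, 1, 17, 0.267),
    ("hemisphere x stimulus type", 4.13, 1, 17, 0.196),
    ("session x stimulus type, categorical group", 13.77, 1, 9, 0.605),
]

for label, f_value, df_effect, df_error, eta in reported:
    computed = partial_eta_squared(f_value, df_effect, df_error)
    # Small discrepancies are expected because the F values are rounded to two decimals.
    print(f"{label}: computed {computed:.3f}, reported {eta:.3f}")
```

The computed values agree with the reported ones to within about 0.001, the precision allowed by the rounded F statistics.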

Results of the univariate analysis of averaged BOLD activity changes. Average percent signal change for each condition in the left FFA (left side) and right FFA (right side) for both the categorical group (top) and the feature group (bottom), pre- and post-learning. Error bars = ±SE across subjects.

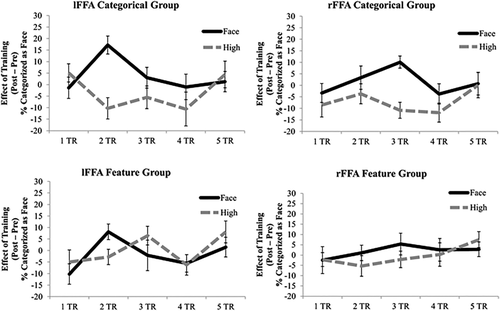

Asymmetry Between the Left and Right FFAs as a Function of Time

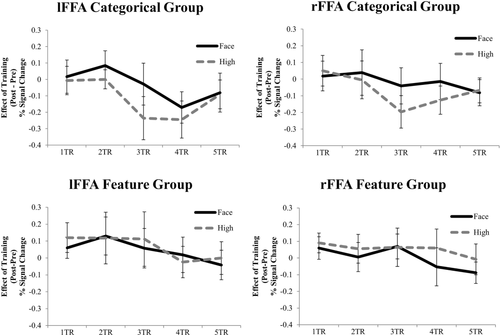

It has been postulated that, following the image-level analysis of face semblance, which correlates with activation patterns in the left FFA, activation patterns (but not averaged BOLD activity) in the right FFA correlate with categorical face/non-face perceptual judgments [Meng et al., 2012]. Thus, face/non-face categorization may already have been encoded in the activation patterns of the right FFA before learning. It is also possible that the effects found in the right FFA were less robust than previously reported categorization results because we used Mooney images in the present study. Alternatively, learning of face categorization may occur in the left FFA, as a modulator, and any learning effects observed in the right FFA could have been indirectly induced by signals from the left FFA. To test this possibility, MVPA-measured learning effects (session 2 minus session 1) for the critical high-face semblance non-face stimuli and face stimuli were calculated and plotted as a function of time after stimulus onset for the left and right FFAs in Figure 4.

Effects of learning (post minus pre) on the MVPA results of face conditions being correctly classified as faces (solid lines) and non-face, high-face semblance conditions being falsely classified as faces (dashed lines) as a function of time (TR) in the left FFA (left) and right FFA (right) for the categorical group (top) and the feature group (bottom). Error bars = ±SE across subjects.

Paired t-tests revealed significant effects of learning for the high-face semblance stimuli vs. the face stimuli in the left FFA 2 TRs (4 s) after stimulus onset (t(9) = 4.63, P = 0.001, Cohen's d = 2.14) for the categorical group. In the left FFA of the feature group, the t-test was marginally significant at 2 TRs (4 s) after stimulus onset (t(9) = 2.24, P = 0.052, Cohen's d = 1.14). In the right FFA, however, this learning difference was found at a later time point, 3 TRs (6 s) after stimulus onset, for the categorical group (t(11) = 5.08, P < 0.001, Cohen's d = 2.04). This learning difference was not found for the feature group at 3 TRs (6 s) after stimulus onset (t(10) = 1.77, P = 0.107, Cohen's d = 0.68). For both groups, no other time points showed a significant categorical learning effect (t < 1.62, P > 0.14). Note, however, that even allowing for hemodynamic delays, 4 and 6 s after stimulus onset is a long time relative to the speed of neuronal firing. Further studies using neuroimaging tools with better temporal resolution, such as EEG/MEG, are needed.
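The time-resolved comparison described above can be sketched as follows: for each TR, a per-subject learning effect (post minus pre) is computed for the face and the high-face semblance conditions, and the two sets of learning effects are compared with a paired t-test across subjects. This is only an illustrative sketch under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the numbers are made-up toy values, and the TR duration of 2 s is inferred from the correspondence of 2 TRs to 4 s.

```python
import math
import statistics

TR_SECONDS = 2.0  # inferred from the text: 2 TRs = 4 s, 3 TRs = 6 s


def learning_effect(pre, post):
    """Per-subject learning effect: post-training minus pre-training."""
    return [b - a for a, b in zip(pre, post)]


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


# Hypothetical toy values for four subjects at one TR (NOT data from the study):
# proportion of trials that a pattern classifier labels as "face".
face_pre, face_post = [0.60, 0.55, 0.65, 0.58], [0.75, 0.70, 0.72, 0.74]
semb_pre, semb_post = [0.50, 0.52, 0.48, 0.55], [0.35, 0.40, 0.38, 0.36]

tr_index = 2
t_value, df = paired_t(learning_effect(face_pre, face_post),
                       learning_effect(semb_pre, semb_post))
print(f"TR {tr_index} ({tr_index * TR_SECONDS:.0f} s): t({df}) = {t_value:.2f}")
```

Repeating the test at every TR yields the kind of time course summarized in the text; with many time points, some correction for multiple comparisons would normally be considered.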

For comparison, learning effects (post-training minus pre-training) for the univariate activation corresponding to the critical high-face semblance non-face stimuli and the face stimuli were plotted as a function of time after stimulus onset in Figure 5. No significant differences in learning effects were found between stimulus conditions at any time point (t < 1.67, P > 0.12). Taken together, these results suggest that changes in activation patterns in the left FFA underlie learning about the face/non-face categorical boundary (i.e., rather than responses to faces simply increasing, discriminability increases for both which stimuli belong to the face category and which stimuli do not). These effects cannot be accounted for by changes in the magnitude of the activity in the left FFA, or by modulations from changes in the right FFA.

Effects of learning (post minus pre) on the univariate averaged percent MR signal changes corresponding to face conditions (solid lines) and non-face high-face semblance conditions (dashed lines) as a function of time (TR) in the left FFA (left) and right FFA (right) for the categorical group (top) and the feature group (bottom). Error bars = ±SE across subjects.

DISCUSSION

Our results reveal that categorical learning, rather than face feature learning, drives neural improvement in differentiating faces from non-faces. Specifically, the effects of learning include increased activity corresponding to faces and suppressed activity corresponding to non-faces in the right and left FFA, as well as refined multi-voxel activation patterns that differentiate face from non-face in the left FFA of the categorical learning group.

Previous studies have shown that experience shapes cortical object representations [Baker et al., 2002; Freedman et al., 2001; Kourtzi et al., 2005; Op de Beeck et al., 2006]. However, infants are known to have some crude, initial abilities to distinguish faces from other categories [McKone et al., 2009; Morton and Johnson, 1991; Pascalis et al., 2005]. This suggests that sensitivity to the face/non-face categorical boundary is evolutionarily conserved in the human visual system and possibly bootstraps the learning of this category. Using Mooney images generated from a spectrum of face-like stimuli, our results further demonstrate plasticity in the adult human brain, in that it can still learn to refine the face/non-face categorical boundary. These results are unlikely to have been caused by a general increase in visual familiarity with our stimuli because effects of learning for face/non-face categorization were found only in the categorical group. Additionally, participants were trained with gray-scale pictures but tested with Mooney pictures. What we have found, therefore, reflects the learning of face/non-face categorization independent of local visual features that are unavailable in the Mooney images.

Interestingly, the effects of learning are most evident in the left FFA, where activation patterns were refined to differentiate face from non-face. In a recent study, the activity pattern in the left FFA could discriminate between learned and unlearned face views [Bi et al., 2014]. Perhaps the left FFA is a moderator for all types of face learning, sending information to the rest of the distributed face processing network when new information about faces is acquired. Our study also examined how learning may change FFA activity corresponding to non-faces. Our results suggest that the FFA response to non-faces is not inconsequential, as suppression of this response correlates with the learning of face/non-face categorization and complements enhanced activity corresponding to faces. While past studies indicated that the FFA may not be entirely face-selective [Yue et al., 2010], learning about face/non-face categorization increases the face selectivity of the FFA, especially the left FFA, demonstrating the plasticity of face selectivity in this brain region.

Previous studies suggested that image-level face processing may rely on the left FFA more than the right FFA [Meng et al., 2012; Rossion et al., 2000]. Interestingly, the present study suggests that the left FFA may also be more important than the right FFA for the learning of face/non-face categorization, independently of local image-level visual properties (i.e., with Mooney images). Although neurophysiological studies with non-human primates found less hemispheric asymmetry for face processing than in humans [Ku et al., 2011], hemispheric asymmetry for face processing in humans is well known [e.g., Turk et al., 2002; Wolford et al., 2004]. Moreover, a recent study combining electrocorticography and electrical brain stimulation in human observers revealed strikingly different roles of the left and right fusiform gyri in visual processing of faces [Rangarajan et al., 2014]. Whereas human neuroimaging studies of face perception over nearly the past 20 years often focused on the right hemisphere because the right FFA was thought to be more critical than the left FFA [Barton, 2008; Behrmann and Plaut, 2013], our results highlight the importance of the left FFA in face category learning.

The asymmetry of function and plasticity between the left and right FFAs suggests that redundant and duplicated processing of faces in both hemispheres is unlikely. According to studies with patients who had undergone corpus callosotomy, whereas both the left and right hemispheres are capable of independently processing faces to a large degree, they process faces differently [Wolford et al., 2004]. Given that our conscious experience is normally unitary, we hypothesize that the left and right FFAs should be coordinated to process faces. Consistent with this notion, effects of learning in the multi-voxel pattern were present earlier in the left FFA than in the right FFA (see Fig. 4), suggesting a possible sequentially dependent relationship between the left and right FFAs. Moreover, the sequential difference of learning in the left and right FFAs was not observed with univariate averaged BOLD activity changes. As attention is well known to modulate averaged BOLD activity [Kastner and Ungerleider, 2000], our results cannot be easily explained as simply an effect of attention. However, future studies using high temporal resolution techniques, possibly in combination with causal modeling, will be needed to further investigate the possible dynamic relationships between the left and right FFAs.

In sum, the theoretical contributions of the present study are threefold. First, learning may shape face/non-face categorization even in adults. The neural plasticity underlying such effects of learning was found in the left FFA with Mooney images, showing that categorization did not rely on low-level local visual features. Therefore, our findings extend beyond previously reported perceptual learning with image-level visual features [e.g., Dosher and Lu, 1998; Gilbert and Li, 2012; Sasaki et al., 2009; Yan et al., 2014], suggesting a novel neural plasticity mechanism in holistic visual cognition. Second, since human observers have adapted to detect faces so efficiently, one may expect, at least in adults, a highly robust neural mechanism to differentiate faces and non-faces. However, while learning is known to improve object recognition, the present study is the first to investigate neural plasticity for encoding and maintaining what is not a face. Note that our results show not only categorical improvements through increased activity corresponding to faces and suppressed activity corresponding to non-faces but also, as revealed using MVPA, refined activation patterns in the left FFA that differentiate face from non-face in both directions. This suggests that knowing what does not belong to a category may be just as essential for making sense of the “blooming, buzzing confusion” [James, 1890]. Finally, abnormal brain lateralization is often found to be associated with neuropsychiatric conditions and developmental disorders [e.g., Holmes et al., 2001; Nielsen et al., 2014; Ross and Monnot, 2008]. In our study, effects of learning for face/non-face categorization were found with the univariate averaged BOLD results for the categorical group in both the left and right FFAs. Nevertheless, the asymmetry between the left and right FFAs was found with MVPA, and this asymmetry may be caused by a sequential relationship between the left and right FFAs. These results provide insight into how the two hemispheres may coordinate to enable a unified conscious experience. However, given the relatively poor temporal resolution of fMRI, future studies that combine fMRI with methods such as EEG/MEG are needed to further investigate how the left and right FFAs might dynamically interact to process faces. Besides shedding light on the organization of face processing in the normal human brain, results of these studies will provide a reference against which to compare brain activation patterns in patients with abnormal lateralization of brain function.

ACKNOWLEDGMENT

We would like to thank Bingbing Guo and Zhengang Lu for help collecting data and thoughtful comments throughout the experimental and writing process.