Machine learning in computational histopathology: Challenges and opportunities

Michael Cooper, Zongliang Ji, and Rahul G. Krishnan contributed equally to this study.

Abstract

Digital histopathological images, high-resolution images of stained tissue samples, are a vital tool for clinicians to diagnose and stage cancers. The visual analysis of patient state based on these images is an important part of the oncology workflow. Although pathology workflows have historically been conducted in laboratories under a microscope, the increasing digitization of histopathological images has led to their analysis on computers in the clinic. The last decade has seen the emergence of machine learning, and deep learning in particular, as a powerful set of tools for the analysis of histopathological images. Machine learning models trained on large datasets of digitized histopathology slides have resulted in automated models for prediction and stratification of patient risk. In this review, we provide context for the rise of such models in computational histopathology, highlight the clinical tasks they have found success in automating, discuss the various machine learning techniques that have been applied to this domain, and underscore open problems and opportunities.

1 INTRODUCTION

Anatomic pathology has undergone many important evolutions over the past centuries, from manual examination with bright-field microscopes to whole-slide imaging (WSI), computer vision and image analysis techniques, high-throughput molecular sequencing technologies and now artificial intelligence (AI). Since the development of the first microscope by Hans and Zacharias Janssen in 1590,1 the microscope has been an important driving force for many discoveries in pathology, with the first few microscopic analyses of human tissue by Marcello Malpighi,2 Anton van Leeuwenhoek,3 and Johannes Muller4 in the 16th and 17th centuries, the theory of cell biology and cancer origin by Rudolf Virchow in 1855,5, 6 and the concept of recording histopathological characteristics (e.g., features) to make diagnoses such as the Reed–Sternberg cell for Hodgkin lymphoma in 1898/1902.7-10 Computer-based analyses did not emerge until 1966, when Prewitt and Mendelson used image analysis algorithms to extract quantitative features for cell subtyping in blood smears.11 The 1990s and following decade also marked a pivotal period in which commercial slide scanners were developed that could digitize histology slides into high-resolution WSIs,12-16 as well as computer-aided diagnosis (CAD) systems that implemented early machine learning (ML) techniques using handcrafted cell and tissue features from WSIs.17-24 In 2010, Dundar et al. presented the first formulation of multiple instance learning (MIL) for cancer subtyping in WSIs based on Haralick texture features, a framework that is still widely utilized today in performing weakly-supervised cancer diagnosis at scale without needing detailed pathologist annotations.25

Over the past half decade, and continuing today, the emergence of AI and ML, via deep learning, has been the latest driving force for advancements in pathology.26 Following the 2012 success of convolutional neural networks (CNNs) in the ImageNet Large Scale Visual Recognition Challenge,27-29 similar open challenges such as the CAMELYON30, 31 and PANDA32 challenges were created for lymph node metastasis detection and Gleason grading in prostate cancer, respectively, which led to important breakthroughs showing that CNN- and MIL-based classification systems could surpass pathologist-level performance on these diagnostic tasks.33 Given a large enough repository of diagnostic WSIs (n > 100),34 deep learning can be used to formulate and solve new clinical tasks beyond human pathologist capabilities, such as metastatic origin of cancer prediction,35 cancer prognostication,36-40 and microsatellite instability (MSI) prediction.41-43 Looking beyond supervised learning applications via CNNs and MIL, the development of other techniques such as generative AI modeling,44-46 geometric deep learning,47, 48 unsupervised learning,49, 50 and multimodal deep learning51 may soon enable new clinical capabilities that could enter pathology and laboratory medicine workflows, such as virtual staining,52-54 elucidating cell and tissue interactions,55 untargeted biomarker discovery,56, 57 and data fusion with genomics and other rich biomedical data streams.58-61

Though direct application of many deep learning techniques may appear to work “out-of-the-box” and have emergent capabilities in computational pathology (CPATH), there exist a variety of technical challenges that limit their adoption in clinical translation, deployment, and commercialization. Compared to natural images, WSI as a computer vision domain can be much more challenging due to the multi-pyramidal, gigapixel image resolutions of the WSI digital format, which can add cost in annotating regions of interest, finding data storage, and running image analysis pipelines. For slide-level cancer diagnosis tasks, established approaches can overcome these limitations, such as employing a pretrained CNN to pre-extract features from nonoverlapping tissue patches in the WSI (e.g., 256 × 256 pixels at 20× or 40× magnification), and then inputting the pre-extracted features into a downstream MIL framework.34 However, depending on the task, choices regarding patch image resolution, patch image magnification and spatial ordering of patch features can strongly influence model performance. Many slide-level tasks in computational pathology also suffer from small support sizes, especially in rare diseases, which constrains approaches to also be light-weight and data-efficient. How genomics, ancestry, self-reported race, and other environmental factors manifest as biases within pathology data is understudied and may have more important implications when such models are deployed across diverse populations.62 Lastly, as many of these advances in computational pathology stem originally from advances made elsewhere in ML, computer vision and deep learning, limitations of these previous methods may still exist in their current application in pathology.

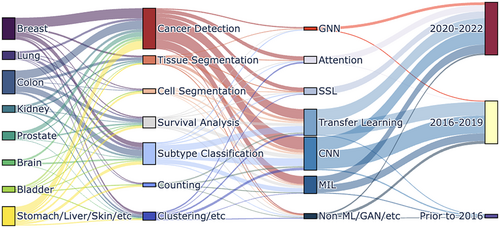

Computational pathology has received significant coverage from previous reviews and perspectives. References 7, 12, 63-66 cover early and historical overviews of digital and computational pathology. References 67-70 provide comprehensive overviews of current progress made in computational pathology. References 71-74 provide clinical perspectives on how AI developments can be translated to the clinic, with other views focusing on specific aspects such as MIL architectures,75 fairness,62 and individual cancer types.75 In this review, we organize a technical overview of current deep learning applications to pathology, disentangling the clinical tasks from the key methodological tools in ML used to solve them. We highlight several open opportunities for CPATH in the context of surrounding discussions in fairness, equity, interpretability, and the rise of large language models (LLMs). Figure 1 presents a visual overview of this review enumerating the models surveyed spanning cancer types, different ML models, learning strategies and their trends across time.

2 CLINICAL TASKS IN COMPUTATIONAL HISTOPATHOLOGY

Digitized WSIs are routinely read by pathologists to extract insights about the current and future state of a patient. When scanned with a digital microscope, even small biopsy or surgical specimens typically yield mega- or gigapixel whole-slide histopathological images. With the development of digital scanners, high performance computers, graphical processing units (GPUs) and larger hard-drive storage, there has been research studying how computation can aid the analysis of digitized images using tools from ML. Following the success of deep learning for classifying natural images,29 there have been numerous methods developed to tackle problems like image classification, object detection and image segmentation.29, 76-80 Drawing insights from these methods, new deep learning approaches have been designed for automating tasks of clinical interest using histopathological images. Here, we highlight some of the representative tasks for which ML has been leveraged.

For many of these studies, the data used to train models were curated and, in some cases, made available to the public. Many studies also leveraged The Cancer Genome Atlas (TCGA)81 for training and validation of ML models. Challenge datasets, publicly available WSIs and labels released with the goal of encouraging researchers to build and study ML models, have also played an important role in the advancement of computer vision in CPATH. Some prominent examples of challenges based on WSIs include the CAMELYON dataset82 to identify breast cancer metastases in sentinel lymph nodes, the TUPAC dataset83 to predict tumor proliferation, and the PANDA challenge32 for Gleason grading of prostate cancer. At the patch level, the CRC-100K dataset for colorectal cancer,84 and the BreastPathQ85 and ICIAR-BACH86 datasets for breast cancer, have proved valuable for the study of different ML methods in CPATH.

2.1 Classification

The winning solution29 of ImageNet 201228 showed the capability of deep neural networks to accurately classify natural images given a large, labeled dataset. Given histopathological WSIs, the most common task that an ML model can help with is determining whether the scanned tissue possesses abnormalities of interest. According to cancer data released by the World Health Organization, the five most common cancer types are breast cancer, lung cancer, colon cancer, prostate cancer, and stomach cancer.87 Prior work in classification uses the word “detection” for certain diseases or disease subtypes. The task of detection in CPATH is different from the task of object detection in computer vision, where images are significantly smaller and often contain a small number of objects. In CPATH, because bounding-box or position-level annotations are scarce, only a few studies88, 89, 83, 90, 91 perform fine-grained detection of mitotic events. Consequently, the vast majority of research methods focus on predicting the prevalence of clinically relevant patient- or slide-level outcomes, posed as a classification task. The intended goal of such predictive systems can range from automation of prediction in low-resource settings to risk stratification for organizing clinical workflows.

For breast cancer, a key task is understanding whether the WSI contains mitotic or non-mitotic cells,89, 92-102 or tumorous cells.103, 102 Studies have explored the use of ML to classify breast cancer WSIs as normal, benign, carcinoma in situ (CIS) or breast invasive carcinoma (BIC).104 Classification has also been used to identify specific subtypes of immunostaining for estrogen, progesterone, and Her2 receptors (ER/PR/Her2),105, 106 Ductal or Lobular, Basal-like or non-Basal-like, and different tumor grades.105 Two-stage classification, first predicting if a given WSI tile contains a tumor, and then identifying whether the tumor image patch contains tumor-infiltrating lymphocytes (TIL), has also been explored.103 For lung cancer, an important goal is cancer subtyping into adenocarcinoma (LUAD) or squamous cell carcinoma (LUSC)38, 107 and distinguishing genetic mutations within subtypes.38 Research has studied the identification of histologic subtypes like lepidic, acinar, papillary, micropapillary, and solid108-110 and categorizing WSIs into PDL1-positive and PDL1-negative.111 For colon cancer, in addition to cancer or non-cancer WSI classification,36, 43, 112-115 researchers have developed methods for classifying colon cancer cell subtypes into a 4-class categorization112, 116, 117 and a 9-class (adipose, background, debris, lymphocytes, mucus, smooth muscle, normal colon mucosa, cancer-associated stroma, and COAD epithelium) categorization.84, 118 For prostate cancer, research has focused on automating Gleason grading and checking whether the image represents a benign or malignant prostate biopsy sample.119-121 For stomach cancer, normally studied in conjunction with colon cancer, deep learning methods categorize patches into tumor versus non-tumor and determine whether each WSI tile belongs to an adenocarcinoma, adenoma, or nonneoplastic type.122-124 Finally, for kidney cancer, some studies attempt to distinguish whether a given WSI tile contains kidney cancer cells or not125, 126 and categorize kidney tissue into 10 different classes including glomeruli, sclerotic glomeruli, empty Bowman's capsules, proximal tubuli, distal tubuli, atrophic tubuli, undefined tubuli, capsule, arteries, and interstitium.127

CPATH has made inroads into predictive problems for diseases in the brain, liver and skin. For brain gliomas, researchers have used ML for grading WSIs and identifying if the tissue morphology indicates an IDH1 mutation.128-130 For liver cancers,131 research has studied classification in the context of 2-class ballooning, 3-class inflammation, 4-class steatosis, and 5-class fibrosis,132 has discriminated between two subtypes of primary liver cancer, hepatocellular carcinoma and cholangiocarcinoma, and has built tools to assist real clinical workflows.133 For bladder cancer, studies have focused on grading cancers using WSIs.134, 135 For skin cancer, a key task is identifying whether a given melanoma will recur based on a WSI.136, 137 Mesothelioma tissue WSIs have been used to build classifiers that distinguish transitional mesothelioma (TM) from non-TM tissue.138, 139 Finally, research has studied tumor versus non-tumor and TIL classification across different cancer types.33-35, 140-143

2.2 Segmentation

While classification of clinical outcomes can provide utility at a slide level, ML has also been used to highlight the exact location and boundary of an abnormality in the WSI. This problem is typically posed as one of image segmentation. Pixel-level labels are required for models to perform segmentation tasks on histopathological images. However, pixel-level labels are hard to obtain since they require pathologists to use software to draw boundaries around different types of tissue. Despite the effort required, researchers have made great progress in developing methods to automatically identify objects of interest in histopathological images.

Due to the size of the WSI and the computational requirements of deep learning models, most methods split WSIs into patches, where each patch has a labeled segmentation mask (a binary mask over the patch indicating which pixels represent regions of interest) for a model to learn from. For colon cancer, research has studied the problem of segmenting glands90, 115, 144-146 and identifying different classes of tissues.147 For kidney cancers, segmenting glomeruli126, 148 or different subtypes of kidney tissues127, 149 are key tasks of interest. Researchers have also made progress on segmenting normal and abnormal parts from histopathological images for breast,90, 99, 150 lung,110, 151 bladder,134 stomach,123 and prostate90 cancers.

2.3 Survival analysis

The successful prediction of patient outcomes such as mortality, and characteristics of their disease trajectory such as progression free survival, can help oncologists plan for treatments and assess individual patient disease severity. Histopathological images capture proxies of genetic abnormalities, tumor burden and subtype, all of which can inform this task. There are several studies that model patient trajectories using pathology data36, 39, 118-121, 124, 137 and those that blend clinical data with other data modalities such as demographic or genomics data.40, 152-154 In most biomedical studies, the time-to-event for many patients is not recorded due to loss of patients to follow-up. Consequently, data often contains the last-observed time points for patients rather than their actual event time. Such data is referred to as (right) censored and the tools used to predict event time, typically time of death, given such data fall under the umbrella of survival analysis.
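To make the role of right censoring concrete, the following is a minimal sketch of the Kaplan–Meier estimator, a classical survival analysis tool that is not discussed in the text above and is used here only as an illustration. The function name and the toy follow-up data are assumptions. Censored patients contribute to the number at risk up to their last-observed time, but never count as events.

```python
from collections import Counter


def kaplan_meier(times, events):
    """Return [(t, S(t))] at each distinct observed event time.

    times  : follow-up time per patient (event time, or last-observed time)
    events : 1 if the event (e.g., death) was observed, 0 if right-censored
    """
    # Number of observed events at each distinct time.
    deaths = Counter(t for t, e in zip(times, events) if e == 1)
    survival, curve = 1.0, []
    for t in sorted(deaths):
        # Patients still under observation just before time t,
        # including those who are censored later on.
        at_risk = sum(1 for u in times if u >= t)
        survival *= 1.0 - deaths[t] / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical cohort: patients 2 and 5 are censored (event flag 0).
curve = kaplan_meier(times=[2, 3, 3, 5, 8], events=[1, 0, 1, 1, 0])
for t, s in curve:
    print(f"t={t}: estimated survival {s:.2f}")
```

Simply discarding the censored patients would shrink the at-risk sets and bias the estimated survival downward, which is why censoring-aware tools are needed.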

Since neural networks were first combined with classical tools for survival modeling,155, 156 researchers have studied their utility for problems in CPATH for colon,36, 39, 43, 61, 118, 124 mesothelioma,138 brain,157 breast,55, 105, 158 lung,55, 152, 159, 160 prostate,119-121, 140 uterine,55 and kidney55, 125, 140, 161 cancer. Researchers have also explored the application of survival analysis on datasets comprising multiple cancer types at the same time.37, 41, 55, 60, 140, 152, 162, 163
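One common way to pair a neural network with a classical survival model is to let the network output a scalar risk score per patient and train it with the negative Cox partial log-likelihood. The sketch below assumes that formulation; the text above does not specify which classical tool the cited works use, and the function name and toy values are hypothetical. Ties are handled only by including every patient with an equal or later time in the risk set.

```python
import math


def cox_neg_partial_log_likelihood(risk_scores, times, events):
    """Average negative Cox partial log-likelihood over observed events.

    risk_scores : model output per patient (higher means higher hazard)
    times       : follow-up time per patient
    events      : 1 if the event was observed, 0 if right-censored
    """
    loss, n_events = 0.0, 0
    for i, (t_i, e_i) in enumerate(zip(times, events)):
        if e_i != 1:
            continue  # censored patients only appear inside risk sets
        # Risk set: everyone still under observation at time t_i.
        risk_set = [risk_scores[j] for j, t_j in enumerate(times) if t_j >= t_i]
        log_denominator = math.log(sum(math.exp(r) for r in risk_set))
        loss -= risk_scores[i] - log_denominator
        n_events += 1
    return loss / max(n_events, 1)


times, events = [1, 2, 3], [1, 1, 1]
# Scores that rank the earliest death as highest risk give the lower loss.
print(cox_neg_partial_log_likelihood([2.0, 1.0, 0.0], times, events))
print(cox_neg_partial_log_likelihood([0.0, 1.0, 2.0], times, events))
```

In a deep learning setting the risk scores would come from a network applied to slide representations, and the same quantity would be minimized by gradient descent.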

2.4 Counting

Clinicians are also interested in having detailed measurements of the sampled tissue at the cellular level. Counting mitotic cells is a typical clinical task for pathologists since the number of mitotic cells is a key factor for determining cancer grade. Research efforts have been made in counting mitotic cells in breast cancer, lymphocytes in breast cancer WSIs, centroblasts in follicular lymphoma WSIs, and plasma cells in bone marrow image patches.41, 64, 83, 164 Counting cells typically requires cell segmentation; a variety of attempts have been made at cell segmentation in breast cancer,165-168 colon cancer,146, 169 and bladder cancer.170 Research attempts have also been made on counting neuroendocrine tumor (NET) cells within the gastrointestinal tract and pancreas.171 Cell nuclei segmentation and counting have also been developed for grading squamous epithelium cervical intraepithelial neoplasia (CIN).172 Quantification of immunohistochemical labelling (e.g., MIB1/Ki-67 proliferation) is also an important prognostic application.173-175 Although supervised cell segmentation requires tremendous effort to obtain fine-grained annotations, several cell segmentation methods79, 176, 177 have obtained promising results. Several unsupervised cell segmentation methods have also been developed over the past decade.178-180
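The link between segmentation and counting can be illustrated with a small sketch: once a model has produced a binary mask, the cell count can be approximated by the number of connected foreground regions. The code below is an assumption-laden toy (a hypothetical `count_objects` helper, 4-connectivity, and no handling of touching cells, which real pipelines typically need to split) rather than the method of any cited work.

```python
from collections import deque


def count_objects(mask):
    """Count 4-connected foreground regions in a binary mask (rows of 0/1)."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # New region found: flood-fill it so it is counted only once.
            count += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


# Toy segmentation mask containing three separate regions.
toy_mask = [
    [1, 1, 0, 0],
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 0, 0, 0],
]
print(count_objects(toy_mask))
```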

There has also been research studying the use of ML for tasks that can form the basis for future clinical workflows. Many WSIs from tissue samples come without labels and obtaining such labels may be hard or impossible. Consequently, unsupervised ML methods like clustering have been deployed to obtain insights from histopathological images. Given image patches from WSIs, researchers first extract features, or representations, using preexisting predictive models. These are then clustered to automate the identification of subgroups in lung,159 brain,159 breast,98, 181 and colon cancer113 WSIs. In addition, research has begun to use ML to learn associations between gene expression and pathological images,110, 130, 182 perform stain normalization for histopathological images,106, 183-186 generate synthetic data,106, 130, 148, 151, 170 compress images,187 and automate histopathological captioning and diagnosis generation.135

3 LEARNING STRATEGIES FOR COMPUTATIONAL HISTOPATHOLOGY

The goal of an ML model developed to tackle the tasks in Section 2 is to generalize well, that is, it must operate in regimes outside of those in which it was trained. Deep learning models learn functions that transform high-dimensional inputs like images to numbers representing event time (for regression, survival analysis), class probabilities (for classification), or other images (for segmentation). A deep learning model does so via the intermediate step of first translating inputs into representations,188 vectors of numbers whose entries serve as a compressed store of the information content in the input. As the model is trained, the network learns to produce representations that capture the appropriate structure for the predictive task at hand, so that they can be readily converted to the desired output. The choice of function used in the model can vary: the simplest kind of function in deep learning is a multilayer perceptron, in which each layer of the network comprises a linear function followed by a nonlinear function. In CNNs, the function being learned is represented by compositions of convolution operations, a network architecture that exhibits a degree of spatial invariance that makes it useful for modeling pixels. Transformers189 comprise stacked attention layers, in which a heat map is overlaid onto the previous layer's representations to compute the next layer. The parameters in a deep learning model are trained by solving an optimization problem, typically the maximization of a proxy to the accuracy of a model (or the minimization of a loss function) on a labeled training set. The triplet that defines a model, comprising a neural architecture, loss function, and dataset, plays a large role in the degree to which a model generalizes. Most deep learning models are trained on GPUs whose hardware constraints often inform the form, complexity, and size of the model, loss function and dataset.
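As a minimal sketch of the multilayer perceptron described above, the following code stacks linear and nonlinear functions to map an input vector to class probabilities, and evaluates a cross-entropy loss of the kind that training would minimize. The weights, layer sizes, and function names are hypothetical, and the ReLU and softmax nonlinearities are one common choice rather than the only one.

```python
import math


def linear(x, weights, bias):
    """Affine map: one output per row of the weight matrix."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Elementwise nonlinearity."""
    return [max(0.0, v) for v in x]


def softmax(x):
    """Convert scores to probabilities (shifted by the max for stability)."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def mlp_forward(x, layers):
    """Hidden layers are linear + ReLU; the last layer is linear + softmax."""
    h = x
    for weights, bias in layers[:-1]:
        h = relu(linear(h, weights, bias))  # intermediate representation
    weights, bias = layers[-1]
    return softmax(linear(h, weights, bias))


def cross_entropy(probs, label):
    """Loss for a single example whose true class index is `label`."""
    return -math.log(probs[label])


# Hypothetical two-layer network with hand-picked weights.
layers = [
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
]
probs = mlp_forward([2.0, 1.0], layers)
print(probs, cross_entropy(probs, label=0))
```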

CPATH has challenges that make the straightforward adoption of tools from computer vision challenging. The cellular patterns in tumor microenvironments are often highly complex and require expertise to properly annotate, making it difficult to obtain a large amount of labeled data for training deep learning models. Histopathology images are large, requiring high computational resources and a large GPU memory to build predictive models. The patterns of interest are often small in size and low in contrast, making it difficult for deep learning models to accurately detect and segment these objects. Finally, images can be affected by various sources of noise and artifacts, such as staining variations. Over the years, these challenges have informed the various technical approaches researchers have adopted to build predictive systems. In what follows, we highlight three learning strategies and two neural network architectures that have found success for predictive applications in CPATH. Although they are by no means mutually exclusive or exhaustive—indeed many state-of-the-art methods mix and match among them—we have chosen to highlight the following methods as those which have seen recent success tackling some of the unique challenges of predictive modeling in this domain.

3.1 Multiple-instance learning

Deep learning models require that the input (in this case, images) be small enough to load onto GPU memory. The need for MIL arises because, as of 2023, a single histopathology image would exceed the available memory of a GPU. A popular approach is to decompose a large histopathology image into patches and treat the bag of patches as a collection, all of which are assigned the same label.
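The decomposition of a slide into a bag of patches can be sketched as follows. The helper names, the 256-pixel patch size, and the slide dimensions are assumptions for illustration; the sketch only computes patch coordinates, whereas a real pipeline would also read pixels from the slide file and usually discard background tiles.

```python
def tile_coordinates(width, height, patch_size=256):
    """Top-left (x, y) corners of non-overlapping patches fully inside the image."""
    return [
        (x, y)
        for y in range(0, height - patch_size + 1, patch_size)
        for x in range(0, width - patch_size + 1, patch_size)
    ]


def make_bag(width, height, slide_label, patch_size=256):
    """A bag groups all patch locations of one slide under a single slide-level label."""
    return {
        "patches": tile_coordinates(width, height, patch_size),
        "label": slide_label,
    }


# Hypothetical small region of 1000 x 600 pixels labeled as cancerous (1).
bag = make_bag(1000, 600, slide_label=1)
print(len(bag["patches"]), bag["patches"][0], bag["patches"][-1])

# A hypothetical 100,000 x 100,000 pixel slide yields far more patches.
print(len(tile_coordinates(100_000, 100_000)))
```

Under these assumptions, a slide of 100,000 × 100,000 pixels produces 152,100 patches, which illustrates why bags are processed patch by patch rather than as a single image.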

MIL190, 191 provides a class of methods for detecting whether a collection of objects contains one or more objects of interest. The input to the model consists of a collection of objects, each of which may be positive (of interest), or negative (not of interest). The aim of MIL is to determine which objects are positive and negative, based only on collection-level labels. MIL provides a practical means to make efficient use of training data with coarse labels. Rather than requiring pixel-wise annotations, methods in MIL allow for learning from slide- or region-wise annotations, which are significantly cheaper and easier to obtain.
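Under the standard MIL assumption, a collection is positive if at least one of its objects is positive. A minimal sketch of that rule, assuming a hypothetical patch-level model has already produced a score in [0, 1] for each patch, is max pooling over the instance scores; the threshold and function names below are illustrative only.

```python
def bag_score(instance_scores):
    """Max pooling: a bag is as suspicious as its most suspicious instance."""
    return max(instance_scores)


def predict_bag(instance_scores, threshold=0.5):
    """Collection-level prediction (1 = contains an object of interest)."""
    return int(bag_score(instance_scores) >= threshold)


def flag_instances(instance_scores, threshold=0.5):
    """Indices of instances that the model considers positive."""
    return [i for i, s in enumerate(instance_scores) if s >= threshold]


# Hypothetical per-patch scores for two slides.
slide_a = [0.1, 0.2, 0.9]  # one suspicious patch makes the slide positive
slide_b = [0.1, 0.2, 0.3]  # no suspicious patch
print(predict_bag(slide_a), flag_instances(slide_a))
print(predict_bag(slide_b), flag_instances(slide_b))
```

During training only the bag-level prediction is compared with the slide label, so gradients flow through the highest-scoring patch; this is how instance-level behavior can be learned from coarse labels.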

Small-scale experiments applying MIL to CPATH have taken place as early as 2012. ccMIL112 extends the MIL algorithm by introducing a smoothness prior over proximal image patches. After training on a small dataset consisting of 83 slides (53 cancerous, 30 noncancerous), ccMIL obtains image segmentation performance comparable to a model trained directly on pixel-wise annotations (F-measure of 0.7 for ccMIL, 0.72 for pixel-wise annotations). ccMIL obtains 0.997 AUC on binary classification of images into cancerous or noncancerous, and 0.965 and 0.970 AUC, respectively, on multitask classification of images into different subtypes of cancer. These results outperformed contemporary methods including multiple-instance support vector machines,192 multiple-instance boosting,193 and multiple-clustered instance learning (MCIL).194

One of the first large-scale MIL models was published in 2019.33 In this study, the authors collected a dataset of 44 732 whole slide images from 15 187 patients, corresponding to biopsy samples spanning diagnoses of prostate cancer, basal cell carcinoma, and breast metastases. These images were not specially curated for clarity and contained artifacts that would be found on raw pathology slides. In binary classification of slides as cancerous or noncancerous, their MIL model achieved an AUC of 0.991 on detecting prostate cancer, 0.988 on detecting basal cell carcinoma, and 0.966 on detecting breast metastases. In evaluating generalization performance to external data, the authors conclude that weak supervision (e.g., slide-level labels) on larger datasets leads to better generalization than strong supervision (e.g., pixel-wise labels) on small datasets.

3.2 Transfer learning

The largest datasets used to train computer vision models contain millions of labeled training examples. By contrast, the largest CPATH datasets may only have tens of thousands of labels (at the WSI level). Large, labeled datasets are an important way by which a deep learning model learns useful representations; however, datasets of such sizes may not be feasible in CPATH. Consequently, many researchers turn to a technique known as transfer learning.

Transfer learning refers to the class of methods that leverage parameters from a model trained on a prior task (also called an “upstream task”) as a starting point for learning an eventual task of interest (also called the “downstream task”). In computer vision, certain parameters within a model often transfer well between imaging modalities and tasks. The weights of the first few layers of a deep CNN, for example, often learn edge detection capabilities,195 a skill that is useful across many computer vision tasks. It is therefore typical for a computer vision model that has been pretrained on an upstream image classification or regression task to learn a novel data distribution more efficiently, from fewer samples, than a model trained from scratch.

ImageNet,27, 28 a large-scale dataset of natural images, is a popular dataset for pretraining image recognition models, and ImageNet-pretrained models are readily available as starting points for downstream image tasks. Despite clear visual differences between ImageNet's natural images and the fine-grained images of tissue morphology found in histopathology, pretrained models like VGG,196 ResNet,77 AlexNet,29 GoogLeNet/Inception,197, 198 remain effective starting points for building predictive models in computational histopathology (VGG,36, 84, 93 ResNet,125 AlexNet,93, 199 GoogLeNet/Inception93, 95). Occasionally, these pretrained models are used in combination, as in the ensemble approach of Reference 200: this system leverages pretrained Inception-V3,198 Xception,201 VGG-16196 and ResNet-5077 as individual networks in a weighted voting scheme for binary classification of histopathological slides. Another study202 provides a comparative analysis of different ImageNet-pretrained models within the context of colorectal cancer histopathology slide segmentation, finding that a DenseNet-121203 feature extractor, paired with a LinkNet204 segmentation architecture, is the most promising approach among the pretrained models they evaluated, which spanned DenseNets, Inception Networks, MobileNets,205 ResNets, and VGG architectures.

Recent work206 compares the performance of models pretrained on ImageNet with those pretrained using self-supervised (Section 3.3, Reference 207) or multitask learning208 on histopathology data. On binary classification of slides as cancerous or noncancerous on a dataset containing 413 WSIs from duodenal biopsies, they find that the self-supervised encoders achieve a greater AUC than those pretrained on ImageNet. Moreover, they observe no discernible relationship between a model's performance on ImageNet, and its subsequent performance on the downstream task. This presents a challenge for researchers and practitioners attempting to leverage transfer learning in practice, as these results suggest that there presently exists no pretraining heuristic that can accurately ascertain the performance of a fine-tuned ImageNet model on a downstream CPATH classification task.

3.3 Self-supervised learning

Deep learning models are trained to maximize the accuracy of the model on a training set. This requires access to labels. Self-supervised learning refers to the class of methods that allow models to learn features of images relevant to a task without access to underlying labels. The key insight that self-supervised learning leverages is that one can often use domain knowledge to create pseudo-labels or learning objectives which, when optimized, yield informative representations of images.

3.3.1 Contrastive learning

Contrastive learning performs self-supervised learning by assigning pairs of instances in the data to be “positive pairs” or “negative pairs” and learning a representation such that positive pairs have similar representations and negative pairs do not. Methods of contrastive learning in CPATH vary based on how positive and negative pairs are assigned. Reference 183 selects a collection of “reference images”, and for each reference image, produces a batch of other image samples that have been stain-normalized with respect to the reference. Positive pairs are different stainings of the same image, while negative pairs are any two distinct images. This method produces a model that learns to be agnostic to stain variation across slides. Reference 209 defines a positive pair of patches as those which are spatially proximal, and a negative pair as those which are spatially distant; they find that a ResNet-18 pretrained using their contrastive objective outperforms both ImageNet-pretrained and NPID-pretrained210 networks on tumor tile retrieval in the CAMELYON-16 dataset.
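The objective described above can be sketched with an InfoNCE-style loss, one widely used contrastive formulation. It is shown here as an assumption; the cited works may use different losses. The embeddings, the temperature value, and the function names are hypothetical. The loss is small when the anchor is more similar to its positive than to any negative.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Negative log-probability of picking the positive among all candidates."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Hypothetical 2-D embeddings of image patches.
anchor = [1.0, 0.0]
nearby_patch = [0.9, 0.1]                   # e.g., a spatially proximal patch
distant_patches = [[0.0, 1.0], [-1.0, 0.0]]  # e.g., spatially distant patches

# Low loss: the positive is the candidate closest to the anchor.
print(info_nce(anchor, nearby_patch, distant_patches))
# High loss: a dissimilar patch was (wrongly) treated as the positive.
print(info_nce(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]]))
```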

Two popular means of contrastive self-supervised learning are SimCLR50 and MoCo.211 Reference 207 finds that over 57 datasets, a ResNet with SimCLR self-supervision improves classification and segmentation performance beyond random initialization or ImageNet pretraining, though these performance gains diminish under access to additional data. Reference 212 modifies MoCo v3213 to obtain more positive pairs and finds that a joint CNN- and transformer-based189 architecture pretrained in this fashion achieves state-of-the-art performance on five tasks across nine CPATH datasets.

3.3.2 Non-contrastive learning

Contrastive learning requires both positive and negative pairs. Methods in non-contrastive learning, on the other hand, remove the requirement for negative pairs of images. Three modern, popular methods of non-contrastive learning are BYOL,214 SimSiam,215 and DINO.216 Reference 217 develops a hybrid CNN- and Transformer-based architecture pretrained using BYOL. The resulting model outperforms modern state-of-the-art vision models, including vision transformers (ViTs)218 and T2T-ViT-24,219 VT-ResNet220 and BoTNet-50.221 Reference 222 applies ViTs pretrained using DINO to several tasks, including cancer subtyping and colorectal tissue phenotyping. Although an ImageNet-pretrained ResNet presents a strong baseline model for their task, self-supervision with DINO typically outperforms both the transfer-learned approach and the contrastive SimCLR method. The current research suggests that self-supervised learning typically outperforms ImageNet-pretrained architectures on computational histopathology tasks, although there does not yet appear to be a singular dominant method of self-supervision within the context of CPATH.223 The advantages of self-supervised learning appear to be most pronounced when a limited quantity of labeled data is available for domain-specific transfer learning.
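To illustrate how learning can proceed without negative pairs, the sketch below follows the general recipe of BYOL as we understand it: an online network predicts the representation that a slowly updated target network assigns to another view of the same image, and the target parameters track the online parameters through an exponential moving average. The vectors, the momentum value, and the function names are hypothetical, and the networks themselves are omitted.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def byol_loss(online_prediction, target_projection):
    """Squared distance between unit vectors, equal to 2 - 2 * cosine similarity."""
    p = l2_normalize(online_prediction)
    z = l2_normalize(target_projection)
    return 2.0 - 2.0 * sum(a * b for a, b in zip(p, z))


def ema_update(target_params, online_params, momentum=0.99):
    """Move target parameters slightly toward the online parameters."""
    return [
        momentum * t + (1.0 - momentum) * o
        for t, o in zip(target_params, online_params)
    ]


# Two views of the same patch should be mapped to aligned vectors.
print(byol_loss([1.0, 0.0], [2.0, 0.0]))   # aligned: loss near 0
print(byol_loss([1.0, 0.0], [-1.0, 0.0]))  # opposite: loss near 4

# The target network is not trained by gradients, only by this update.
print(ema_update([1.0, 0.0], [0.0, 1.0], momentum=0.9))
```

Only positive pairs appear in the loss; the asymmetry between the online and target networks is what is commonly credited with preventing a collapsed, constant representation.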

3.4 Neural attention with transformers

Attention is a type of neural network layer expressing the inductive bias that context determines the importance of each input variable to the current layer of the network. In computer vision, attention learns from surrounding pixels the importance of each pixel to the current layer of computation. Empirically, this inductive bias proves an effective assumption in the context of natural language and image processing. Transformers, neural networks constructed using stacked neural attention layers, have set the new standard in natural language processing tasks,189 while ViTs approach state-of-the-art results on vision tasks with significantly fewer parameters than convolutional networks.218 One of the key strengths of the attention mechanism is interpretability: by visualizing the attention weights over each pixel as a heat map, a user can interpret the learned relative importance of each pixel to the ultimate prediction task (e.g., as in Reference 222). This allows domain experts to interpret the learned model post hoc and assess whether it has learned the right signal for the task at hand.

Much of the success of attention in CPATH has come from using attention mechanisms as the pooling function in MIL.224, 225 In the former work, Reference 224 uses an attention mechanism as the pooling operator for MIL, instead of a fixed pooling function. In a classification task on breast226 and colon cancer116 data, their gated attention-based MIL approach achieved higher image-level binary classification accuracy, precision, recall, F-score, and AUC than either instance-wise or embedding-wise MIL approaches. In the latter work,225 the authors propose DeepAttnMISL, a MIL-based survival model that employs an attention layer over the representation of patches to pool them for MIL. Clustering-constrained multiple-instance learning (CLAM)34 uses attention in combination with clustering to perform MIL. By using attention weights as pseudo-labels, they can stratify patches of the WSI into different clusters. Reference 227 develops a transformer-based self-attention189 mechanism for histopathological images by exploiting hierarchical structure present in the images. The hierarchical image pyramid transformer (HIPT) first applies a ViT model at the cell-level, then at the patch-level, and finally at the region-level, with the output of each representation feeding into the subsequent model in the hierarchy. HIPT leverages the strengths of image transformers218 without incurring intractability in the computation of the attention weights due to the size of the input image. In slide-level classification and survival prediction of H&E-stained slides from TCGA, HIPT outperforms other weakly-supervised techniques, including DeepAttnMISL and the graph neural network (GNN)-based GCN-MIL.228
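Attention as a MIL pooling operator can be sketched in a few lines. The example below follows the common non-gated formulation, in which each patch embedding h_k receives a weight a_k = softmax_k(w · tanh(V h_k)) and the slide ("bag") embedding is the weighted sum of patch embeddings. The parameter values, dimensions, and function name are hypothetical; in practice V and w are learned jointly with the downstream classifier, and the gated variant mentioned above adds a further sigmoid branch that is not shown here.

```python
import math

def attention_pool(patch_embeddings, V, w):
    """Pool patch embeddings with weights a_k = softmax_k(w . tanh(V h_k))."""
    scores = []
    for h in patch_embeddings:
        hidden = [math.tanh(sum(v_ij * h_j for v_ij, h_j in zip(row, h))) for row in V]
        scores.append(sum(w_i * x for w_i, x in zip(w, hidden)))
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(patch_embeddings[0])
    bag = [sum(a * h[d] for a, h in zip(weights, patch_embeddings)) for d in range(dim)]
    return bag, weights

# Three toy patch embeddings (hypothetical values); the third stands out.
patches = [[0.1, 0.0], [0.0, 0.2], [3.0, 3.0]]
V = [[1.0, 1.0]]  # one hidden attention unit
w = [2.0]
bag_embedding, attention = attention_pool(patches, V, w)
```

The returned `attention` list is what is typically rendered as a heat map over the slide: one weight per patch, summing to one.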

3.5 Graph neural networks

GNNs are a class of neural networks that encode a relational inductive bias: the assumption that properties of—and relationships between—discrete entities in the data are important to the overall prediction task.229 To do so, a GNN will compose each data sample as a series of nodes and edges and will learn a representation of this graph that most readily supports the overall prediction task. In the context of computational histopathology, nodes in the graph typically correspond to patches sampled from a slide. We can therefore group and compare GNN-based methods by the way in which the edges of each graph are assigned to their corresponding nodes.

3.5.1 Node feature similarity

Some methods will construct a graph out of each instance by placing an edge between nodes that are sufficiently similar to each other. This class of methods includes DeepGraphSurv,156 one of the first graph CNNs designed for the task of survival prediction from a WSI. After sampling patches from the WSI (which will act as nodes in the graph), DeepGraphSurv extracts 128-dimensional node features via an ImageNet-pretrained neural network. Edges are then added between pairs of nodes with a sufficiently small Euclidean distance in representation space. The authors find that on three survival datasets (glioblastoma multiforme and lung squamous cell carcinoma slides from TCGA,81 and slides from the National Lung Screening Trial230), DeepGraphSurv's incorporation of topological relationships between patches yields a substantial gain in concordance231 when compared to competing models of survival. Rather than relying solely on node feature similarity, Reference 232 introduces the idea of an adjacency learning layer, which incorporates both global context and node features to learn the adjacency matrix of the graph during training. This approach set a new standard of accuracy for binary classification on the MUSK1 MIL dataset233 (92.6% accuracy), and for cancer subtyping (lung adenocarcinoma vs. lung squamous cell carcinoma) on WSIs from TCGA81 (89% accuracy).
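The edge-construction rule described above (connect two patches when their feature vectors are close in Euclidean distance) can be sketched as follows. The threshold, the two-dimensional toy features, and the function name are assumptions for illustration only; the cited methods use higher-dimensional features from a pretrained network and their own choice of threshold.

```python
import math
from itertools import combinations

def build_similarity_graph(node_features, max_distance):
    """Return undirected edges (i, j) between nodes whose features are close."""
    edges = []
    for i, j in combinations(range(len(node_features)), 2):
        if math.dist(node_features[i], node_features[j]) <= max_distance:
            edges.append((i, j))
    return edges

# Four toy patch feature vectors (hypothetical values) forming two tight groups.
features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
edges = build_similarity_graph(features, max_distance=0.5)
```

Passing patch coordinates on the slide instead of feature vectors to the same function would give a graph based on spatial proximity rather than feature similarity.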

3.5.2 Node spatial location

In this paradigm, nodes in the GNN are typically represented by patches, while the graphical structure is produced based on which nodes are spatially proximal. This encodes the inductive bias that the presence of a feature in one location (e.g., a cancerous cell) is likely to inform the presence of that same feature in other nearby locations. HGSurvNet157 is a GNN-based method that performs survival prediction from WSIs. To do so, it constructs two hypergraphs, and performs multi-hypergraph learning over the two graphs to produce a downstream survival prediction. One of the hypergraphs contains edges determined by the feature similarity of nodes, as computed by feature extraction via an ImageNet-pretrained model, while the other contains edges determined by spatial proximity to other nodes on the slide. Training this architecture using the Cox partial likelihood objective234 yields a survival model that outperforms competing methods like graph convolutional networks48 and DeepGraphSurv to achieve a concordance of 0.6730 on the LUSC dataset,235 0.6726 on the GBM dataset,235 and 0.6901 on the NLST dataset.230 Instead of using patches as nodes in the graph, Reference 236 uses cell nuclei as the nodes, then connects nuclei in the graph that are sufficiently proximal. Applying attention-based robust spatial filtering on this graph yields near-state-of-the-art performance on subtype classification of breast cancer86 and Gleason grading of prostate cancer237 tasks and admits interpretable attention maps in which well-attended nuclei correlate strongly with the presence of a cancerous cell. Graphs based on node spatial location have also been used to improve the tractability of the learning problem: Slide Graph238 constructs a graph in which nodes are cell nuclei, with edges placed between proximal nuclei. This approach provides a scalable means to efficiently capture cellular structure across a WSI. In evaluation on a dataset of breast cancer pathology slides from TCGA, this method achieved a 0.73 AUC on HER-2 status prediction (with the next closest baseline124 achieving 0.68), and a 0.75 AUC on PR status prediction (with the next closest baseline42 achieving 0.73).
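The survival models discussed in this section are trained with the Cox partial likelihood and compared by concordance. A minimal sketch of both quantities is given below, assuming no tied event times and at least one comparable pair; the function names and the toy cohort are hypothetical, and a model's predicted risk score stands in for the output of a GNN.

```python
import math

def neg_log_partial_likelihood(risk_scores, times, events):
    """Negative Cox log partial likelihood (assumes no tied event times)."""
    total = 0.0
    for i, (t_i, e_i) in enumerate(zip(times, events)):
        if not e_i:
            continue  # censored patients contribute only through risk sets
        at_risk = [r for r, t in zip(risk_scores, times) if t >= t_i]
        m = max(at_risk)  # subtract the max for numerical stability
        log_sum = m + math.log(sum(math.exp(r - m) for r in at_risk))
        total -= risk_scores[i] - log_sum
    return total

def concordance_index(risk_scores, times, events):
    """Fraction of comparable pairs in which the earlier event has the higher risk."""
    concordant, comparable = 0.0, 0
    for i in range(len(times)):
        for j in range(len(times)):
            if events[i] and times[i] < times[j]:
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5
    return concordant / comparable

# Toy cohort (hypothetical): follow-up times, and event flags (0 = censored).
times = [2.0, 4.0, 6.0, 8.0]
events = [1, 1, 0, 1]
good_risk = [3.0, 2.0, 1.0, 0.0]  # higher risk for earlier events
bad_risk = [0.0, 1.0, 2.0, 3.0]   # ordering reversed

c_good = concordance_index(good_risk, times, events)
c_bad = concordance_index(bad_risk, times, events)
loss_good = neg_log_partial_likelihood(good_risk, times, events)
loss_bad = neg_log_partial_likelihood(bad_risk, times, events)
```

A concordance of 0.5 corresponds to random ordering of patients by risk, which gives context for reported values in the range of 0.67 to 0.69.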

3.5.3 Patch spatial location with superpixel node features

Reference 239 presents SegGini, a graph isomorphism network that leverages superpixel node features to perform weakly-supervised semantic segmentation of histopathological slides. It does so by way of node classification, wherein superpixel nodes are each classified into segmentation regions. A key advantage of this method is its ability to operate under inexact labels and partial annotation, and in evaluation on one prostate tissue microarray dataset240 and one prostate WSI dataset,241 SegGini performs state-of-the-art segmentation as measured by the Dice score, outperforming a human clinician on the first dataset.

4 CHALLENGES AND OPPORTUNITIES

Despite enormous progress over the last decade in computational histopathology, there remain several open challenges in the translation of ML tools in computational histopathology from research laboratories into assistive software tools for clinicians.

4.1 Bias, fairness, and equity

The interactions that patients have with the medical system can manifest in patterns within their clinical data. These interactions can bias clinical data242 used to train ML models and subsequently result in biased models, affecting their ability to generalize. Reference 243 provides an overview of the different kinds of biases that can arise in medical imaging, highlights their statistical implications for building predictive models, and provides insights into how tools from causal inference can prove valuable in bias mitigation.

The naive training of ML models to maximize average accuracy on a predictive task has been found to yield models that are prone to bias among subpopulations within the data. This is because populations represented in real-world datasets are diverse and average accuracy on a held-out set may not reflect the nuances of how the model will perform on members of various subpopulations during deployment.62, 244 In predictive systems for chest x-rays, Reference 245 demonstrates disparities in true positive rates across subgroups based on protected attributes such as patient sex, age, race, and insurance type across various state-of-the-art classifiers. Understanding the fairness and equity of predictive models is crucial to engender trust in deployed systems. Indeed, Reference 246 provides a simple theoretical model wherein repeated subgroup disparities in predictive systems can result in less frequent engagement by a minority group, increasing disparities in predictive outcomes.
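The gap between average accuracy and subgroup performance can be illustrated with a small sketch that computes per-subgroup true positive rates, one common way to quantify such disparities. The labels, predictions, and group assignments below are made up for illustration, and the function name is hypothetical.

```python
from collections import defaultdict

def true_positive_rates(y_true, y_pred, groups):
    """True positive rate per subgroup, computed over that subgroup's positives."""
    positives = defaultdict(int)
    hits = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            hits[group] += int(pred == 1)
    return {g: hits[g] / positives[g] for g in positives}

# Toy data (hypothetical): ten patients split evenly across two subgroups.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

rates = true_positive_rates(y_true, y_pred, groups)
tpr_gap = max(rates.values()) - min(rates.values())
accuracy = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

Here the overall accuracy is a single number, while the per-group rates show that positive cases in group B are missed far more often than those in group A.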

One notion of bias and fairness that has recently been explored in computational histopathology is the implicit dependence that predictive models may have on the hospital from which the digitized slides are obtained. Reference 247 devises a method that reduces the dependence of features extracted from histopathology images on the source hospital, using an evolutionary strategy to explicitly discourage the model from capturing patterns that are indicative of hospital identity. Reference 248 develops a learning strategy for predictive models to decrease variation in predictive performance among hospitals. In addition to variation across hospitals, the various hardware technologies (e.g., scanners) that form the image procurement pipeline can also yield variation in predictive performance. Reference 249 evaluates the effect of this heterogeneity on the task of lymph node segmentation, and shows that slide color normalization, model fine-tuning, and domain adversarial learning are promising means of accounting for such discrepancies.

One ripe area of opportunity in this space would be the development of benchmark datasets to quantify the bias associated with the various models and methods in this space. In medical imaging, for example, there have been several lines of work highlighting the need to better understand the fairness of algorithms in health care. Reference 250 introduces a benchmark of medical images with paired annotations of sensitive attributes such as age, race and sex, with a view to studying how different algorithms for bias mitigation perform across a suite of different fairness metrics in chest x-rays, fundus imaging, MRIs, CT scans and dermatology images. Because this dataset presents a means to quantify the bias and fairness of a given approach, these data have opened the door to applying methods from the ML fairness literature to medical image analysis. A similar initiative across different clinical tasks considered in histopathology image analysis would enable better understanding of the limitations of the current set of tools across the gamut of clinical tasks considered in Section 2.

4.2 Heterogeneity of predictive outcomes

Digitized histopathology images can exhibit variation that is dependent on the tumor microenvironment,251 the stage of the disease,252 the stain used in the image,253 and the patient's individual characteristics.254 This results in intra-observer variability from the subjective and manual interpretation of these images by pathologists. The manual annotations of these images, in many cases, form the labels used to train CPATH models. Many ML models assume that the noise present in the labels has the same degree of variation across instances; the violation of this assumption can exacerbate bias in the resulting model. Reference 255 studies the effect of instance-dependent noise in neural network models, showing that low-frequency noisy labels (such as those coming from a minority subpopulation) are more likely to be misclassified. A detailed study of the effects of label noise in computational histopathology, its origin, and mechanisms to mitigate its effects on generalization represents a promising area of future study and would further improve trust in CPATH models.

4.3 Multimodal integration and the need for interpretability

The treatment and care of patients suffering from cancers involves clinical decision making from a variety of modalities. Integrating and harmonizing these data from electronic medical records and clinical trials opens new avenues for ML to address novel research questions such as individual risk prognostication and biomarker identification.256, 257 For example, Reference 182 combines clinical biomarker data with histopathology images from the NRG Oncology phase III randomized clinical trials to predict outcomes such as metastases and survival in prostate cancer. Reference 258 integrates clinical and genomics data, computed tomography scan images, and programmed death ligand-1, PD-(L)1, immunohistochemistry slides to predict individual response to PD-(L)1 blockade. For multimodal fusion of multiple stains, Reference 61 integrates H&E with multiplexed IHC for predicting response to neoadjuvant therapy in rectal cancer patients, and Reference 259 integrates H&E, Periodic acid–Schiff, Trichrome, and Jones' stains for kidney allograft rejection assessment.

In general, there remains a paucity of public data pairing anonymized patient characteristics with digitized pathology slides that would allow for the development and testing of multimodal approaches in the absence of preexisting collaborations between clinical centers and research labs. The publication of such data would represent a promising foundation on which future work exploring different techniques of multimodal integration can be built. Additionally, the success of contemporary multimodal predictive models in pathology raises the question of whether these successes can translate into a scientific understanding of the biological mechanisms that drive the predictive signal. There is thus a need to develop tools to interpret the complex, multimodal signals captured by such models.

Interpretability in ML is a complex problem, with its own rich literature. Neural networks are susceptible to problems such as shortcut learning,260 wherein the model latches onto a signal that is predictive of a proxy for the outcome in the training set, rather than the outcome itself. The problem is that when deployed in new scenarios where the proxy is absent, the model will no longer generalize well. Research in ML has developed tools for interpretability that are either model-based or model-agnostic. Among the former, GradCAM261 leverages the gradients of the output with respect to the input of a neural network, and GNN-explainer262 identifies the minimal subgraph that correctly explains the prediction of a GNN. The latter class of methods includes tools such as SHAP263 and LIME.264

Reference 265 studies different methods for explaining graph-based predictive models of histopathology images and proposes metrics using pathologically measurable concepts to rank different methods that generate explanations. Reference 34 shows how attention-level heat maps, when overlaid onto patches, highlight metastatic regions and individual tumor cells. Reference 60 studies the improvement of multimodal integration using WSIs and molecular data in a pan-cancer setting, with local- and global-level interpretability used to understand how features correlate with risk. Reference 266 shows that subsets of neural network features capture discriminatory information for subtyping lung carcinomas. Reference 267 develops a variant of MIL that is interpretable by design (via the use of attention maps over patches). They show that the inferred attention maps correspond to regions in the WSI referenced by pathologists in clinical assessments. Reference 268 studies the low-dimensional organization of features captured in CNNs trained on histopathology data using nonlinear dimensionality reduction tools such as t-distributed stochastic neighbor embedding (t-SNE). While the field of explainable ML has made great strides, there is no single gold standard technique that guarantees the identification of the correct features that the model is relying on. Interpretability in computational histopathology must be combined with clinical expertise to ensure that there is a clinical or biological rationale behind the model's prediction. The problem of interpretability becomes harder for multimodal models, where information content from multiple modalities is combined in opaque models to form a final prediction; developing reliable methods for practitioners to understand the predictions from multimodal models remains an open challenge.

Different methods of interpretability generate different types of explanations, and the utility of these explanations is often largely dependent on the context in which a predictive model is deployed. One promising line of work involves an ethnographic analysis of the workflow in which clinicians collaborate with algorithmic systems to perform diagnoses, to determine the circumstances under which each means of interpretability stands to provide the greatest utility to clinicians. One promising methodological avenue of future work consists of marrying methods from interpretability with the burgeoning field of causal inference269 to learn interpretations that are based on causal relationships in the data when such relationships are statistically identifiable.270-272 To our knowledge, these methods have yet to make their way into the computational histopathology literature. Such approaches may improve confidence that a model's interpretation is correctly characterizing the biological pathways linking WSI features and diagnostic outcomes, which—beyond improving trust in our predictive models—may improve our scientific understanding of the biological mechanisms relating observed WSI features with clinical outcomes.

4.4 On the rise of large generative models

The discovery of scaling laws273 for transformer-based models of natural language text has ushered in a new era of large language models (LLMs). Previously, models in natural language processing were bespoke, with a single model trained on a dataset to solve a specific task. By scaling models to hundreds of billions of parameters, researchers found that LLMs exhibit the ability to solve different natural language tasks with little to no supervision. In parallel, large-scale diffusion models274 have democratized the generation of high-resolution image data using single-sentence text prompts alone. The ramifications of this technology are only just being explored in the context of medicine,275 but these models will inevitably find utility in CPATH over the next half decade.

5 DISCUSSION

To change patient care, a good ML model alone is insufficient. Equally important is the smooth integration of the model into the clinical workflow, an endeavor that intersects computational histopathology with human-computer interaction. Indeed, creating reliable software tools for pathologists and oncologists would require a rethink of how hospital infrastructure is organized. As hospitals and clinics move toward an entirely digitized pathology workflow, there is an opportunity to create new assistive clinical decision support tools using computational histopathology. This will require the implementation of high-throughput storage for digitized histopathology slides, fast interoperability with hospital electronic medical record systems, and (local or cloud-based) high-performance compute to run ML models in real time.

In summary, the increasing digitization of pathology workflows alongside the rapid pace of advances in ML holds promise for accelerating scientific discovery and creating assistive tools for oncologists across a variety of cancers. As the field moves from research to translation and deployment, there is a need to recognize the ultimate end-uses of predictive systems within the clinical workflow and translate the technical requirements a system must satisfy into research challenges. The clinical translation of these tools and technologies will require pathologists, oncologists, computer scientists, hospital administrators and regulatory agencies to collaborate and develop an environment where clinicians can utilize such tools safely and effectively.

ACKNOWLEDGMENTS

The authors thank Richard J. Chen for many helpful discussions, comments on the manuscript, and help framing the introduction.

FUNDING INFORMATION

This study was supported by the AI Chair Award, Canadian Institute for Advanced Research and the Health Systems Impact Fellowship, Canadian Institutes of Health Research.