The Subword-Character Multi-Scale Transformer With Learnable Positional Encoding for Machine Translation

Funding: This work was supported by The program of High-quality Sharing course Cross cultural communication in Jinzhong University of Shanxi Province in 2024 (Grant K2024402); Teaching reform and Innovation project of colleges and universities in Shanxi Province “Research and Application of VR Immersive Cross Cultural Communication Teaching and Training Platform: A Case Study of Jinzhong University” from Shanxi Provincial Education Department (Grant J20241332).

ABSTRACT

The transformer model addresses the efficiency bottleneck caused by sequential computation in traditional recurrent neural networks (RNNs) by leveraging the self-attention mechanism to capture global dependencies in parallel. However, the subword-level modeling units and fixed-pattern positional encodings adopted by mainstream methods struggle to capture fine-grained feature information in morphologically rich languages, and they limit the model's ability to learn target-side word order patterns flexibly. To address these challenges, this study constructs a subword-character multi-scale transformer architecture integrated with a learnable positional encoding mechanism. The model abandons traditional fixed-pattern positional encodings and instead optimizes the positional representation space for source and target languages through end-to-end training, enhancing dynamic adaptability in cross-linguistic positional mapping. While preserving the global semantic modeling advantages of subword units, the framework introduces a lightweight character-level branch to supplement fine-grained features. To fuse the subword and character branches, we employ context-aware cross-attention, which enables dynamic integration of linguistic information at different granularities. Our model achieves notable improvements in BLEU scores on the WMT'14 English-German (En-De), WMT'17 Chinese-English (Zh-En), and WMT'16 English-Romanian (En-Ro) benchmark tasks. These results demonstrate the synergistic effects of fine-grained multi-scale modeling and learnable positional encoding in enhancing translation quality and linguistic adaptability.

1 Introduction

Machine translation (MT), a cornerstone of natural language processing (NLP), aims to eliminate language barriers by automatically converting source-language text into a target language. In recent years, the exceptional performance of transformers in MT [1, 2] has significantly improved translation quality, driving a series of innovations in transformer architectures. Two examples illustrate the difficulties that remain. Consider the German source sentence “Die Katzen fangen die Mäuse ein.” and its English target “The cats catch the mice.” German exhibits rich morphological variation (e.g., the noun plural “Mäuse”), while traditional transformers employ Byte Pair Encoding (BPE)-based subword segmentation. If BPE splits “Mäuse” into “Mäu” and “se,” models that rely too heavily on subword positional encoding may fail to dynamically adjust word order. Now consider the Chinese source sentence “我喜欢吃苹果。” and its English target “I like eating apples.” The Chinese character “吃” (chī, “eat”) is mapped to an independent subword during segmentation. When “吃” neighbors contextual characters such as “苹” (píng, the first character of “苹果,” “apple”), models may misinterpret its semantic role, disrupting fine-grained semantic associations.

To address the limitations of traditional transformers [3] in handling long sequences or complex-structured data, multi-scale transformer models have been proposed to capture features at different hierarchical levels, better preserving both local and global information. Regarding research on multi-scale transformers, Refs. [4-6] proposed fixed-length window-based approaches that reduce computational complexity by restricting the scope of self-attention mechanisms while retaining local context; Ref. [7] introduced linguistically inspired local patterns to enhance the capture of language-specific features. However, these methods suffer from limited long-range dependency modeling due to fixed window sizes. Reference [8] designed a hybrid model integrating convolutional neural networks (CNNs) with self-attention, leveraging convolutional operations for local feature extraction followed by fusion with self-attention to achieve multi-scale feature modeling; Ref. [9] proposed a parallel architecture enabling simultaneous operation of convolution and self-attention to balance local and global information extraction. These hybrid models exhibit high structural complexity, making it challenging to balance complexity with computational efficiency. Other studies on multi-scale transformers generate sequences through subword segmentation methods such as Byte Pair Encoding (BPE) [10], WordPiece (WP) [11], and SentencePiece (SP) [12]. However, sequences generated by different subword methods vary in granularity (character, subword, word), posing challenges for cross-unit interaction modeling. SentencePiece [13] improved sequence generation by combining the advantages of subword segmentation but relied solely on subword units, neglecting character-level fine-grained features. 
To address this, we employ a multi-scale transformer with dual-path subword-character encoding to extract semantic information at different granularities, enabling dynamic interaction between subword and character-level fine-grained features.

Character-level features are influenced by subword structure and position, while the encoded sequential information of subwords can enhance translation quality. Therefore, positional encoding plays a crucial role in word order control. Traditional transformers inject positional signals by adding fixed positional encoding to word embeddings, assuming strict alignment between the absolute positions in the source language and ideal positions in the target language. Large language models (LLMs) [14] not only inherit sinusoidal positional encoding but also introduce trainable embedding parameters to enable dynamic positional weight adjustment. Shaw et al. [15] pioneered relative positional encoding but incurred substantial computational and memory overhead for long sequences while ignoring contextual dynamics. To address this, RoFormer [16] proposed Rotary Positional Encoding (RoPE), which integrates positional information through rotation angles mapped from position indices into geometric transformations in complex space, making attention scores explicitly reflect relative positional differences between tokens. However, while computationally efficient, the fixed-frequency rotation mechanism may restrict dynamic adaptation to complex positional patterns, particularly under non-uniform or semantically sensitive positional relationships, thereby limiting performance in fine-grained contextual tasks. To overcome this, we propose learnable positional encoding (LPE), which learns reordered positional information of target languages to dynamically adjust word order between source and target languages, further improving translation quality.

- This article adopts a dual-path subword-character multi-scale transformer (SCMT). Through its dual-path design with parallel subword and character branches, the architecture breaks through the limitations of traditional single-granularity encoding, compensating for the fine-grained information loss caused by subword segmentation dependency in existing methods.

- We propose integrating LPE with trainable mechanisms to enhance positional modeling. By incorporating dedicated neural modules between subword/character embeddings and transformer encoders, we dynamically infuse target language word order reordering signals into the source language encoding process, effectively resolving translation deviations caused by cross-linguistic word order misalignment.

2 Related Work

2.1 Machine Translation

MT is an important research direction in the field of NLP. Statistical machine translation (SMT) was long the mainstream approach, with its core idea being to learn translation models from large-scale bilingual corpora. The proposal of neural machine translation (NMT) marked the entry of machine translation into the era of deep learning. NMT adopts an end-to-end neural network architecture, directly mapping source-language sentences to target-language sentences. The attention-based sequence-to-sequence (Seq2Seq) model proposed by Bahdanau et al. [17] dynamically captures the alignment between the source and target languages through the attention mechanism, addressing the issue of long-range dependencies. Subsequently, the transformer model proposed by Vaswani et al. [18] further revolutionized NMT. It is based entirely on the self-attention mechanism and feedforward networks, abandoning the traditional recurrent structure, which significantly improves translation performance and reduces training costs. The transformer and its derivative models (such as BERT [19] and GPT) have not only excelled in machine translation but have also driven the rapid development of other NLP tasks.

2.2 Multi-Scale Transformer

In the field of machine translation, research on multi-scale transformers aims to enhance the modeling capability of complex linguistic structures by capturing multi-level semantic information, thereby improving translation quality. Traditional transformer models face limitations when processing long sequences or complex structural data, while multi-scale transformers effectively address this deficiency through collaborative modeling of local and global features. Local attention methods based on fixed window sizes reduce computational complexity by restricting the scope of self-attention. For instance, Shaw et al. [15] proposed a local self-attention mechanism that confines computations within fixed windows to reduce processing overhead for long sequences. Yang et al. [20] further introduced dynamic window adjustment mechanisms to enhance local feature capture capabilities. Hao et al. [5] designed a multi-granularity self-attention approach that assigns different heads to handle hierarchical phrase structures. Hybrid architecture methods achieve multi-scale collaborative modeling by integrating CNNs with self-attention mechanisms. For example, Zhao et al. [9] developed a CNN-transformer hybrid model that extracts local features through convolutional operations and subsequently fuses them with self-attention to capture global contextual information. Similarly, Li et al. [7] proposed the UMST model, which redefines multi-scale correlations among subwords, words, and phrases. However, such models often encounter challenges in balancing structural complexity and computational efficiency.

Recent studies demonstrate that hybrid granularity modeling can significantly enhance the representation of complex linguistic structures. For instance, Ref. [21] employs subword-phrase multi-level attention to capture syntactic hierarchies, but its fixed-level partitioning limits the utilization of character-level features. Reference [22] adopts a CNN-transformer hybrid architecture to extract local–global features, yet fixed convolutional kernel sizes result in long-range dependency loss. In contrast, our dual-path design achieves dynamic multi-granularity interaction through differentiable cross-attention, preserving the global modeling strengths of subwords while enhancing local morphological awareness via character-level GCNs, thereby extending the parallel convolution-self-attention architecture established in [9].

2.3 Positional Encoding

Positional encoding, as a core component of the transformer architecture, directly influences the model's capability to model word order and syntactic structures. The traditional transformer employs absolute positional encoding [18], which generates fixed vectors for each position using sine/cosine functions to directly inject positional information into word embeddings. However, this static encoding approach struggles to adapt to the misalignment of word order between source and target languages in cross-lingual scenarios. Shaw et al. [15] proposed relative positional encoding, which dynamically adjusts attention weights by computing the relative distances between queries and keys, effectively capturing local word order relationships. Music transformer [23] integrated dependency syntactic distances into relative positional encoding to enhance the model's perception of syntactic structures. Wang et al. [24] introduced the Dynamic Position Reordering mechanism (DynPOS), which maps source-language positions to ideal target-language positions through learnable affine transformations to alleviate translation deviations caused by word order misalignment. Additionally, Ke et al. [25] designed hierarchical positional encoding to model positional relationships at multi-granular levels of characters, subwords, and phrases, strengthening the representation capability for complex linguistic structures.

3 Methods

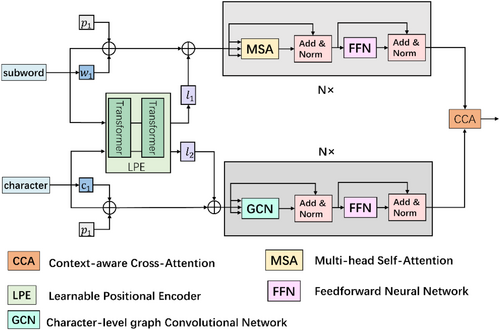

As shown in Figure 1, the proposed SCMT builds on the traditional encoder-decoder architecture, and its core innovation is a dynamically adaptive dual-granularity encoding system. In the encoder, the model employs a dual-stream architecture with parallel subword and character branches. The subword branch inherits the original transformer encoder design, capturing global semantic associations across positions through multi-layer self-attention, a design philosophy aligned with the deep feature interaction mechanisms emphasized in the weighted transformer proposed by [26]. The character branch incorporates neural network blocks to extract fine-grained features; unlike the stacked structure of the multi-unit transformer by [27], it captures character-level morphological variations and local neighborhood associations. The dual-path design of subwords and characters follows from the hierarchical nature of linguistic structure: the subword branch models global semantic dependencies through self-attention and is designed to capture phrase-level relationships (e.g., verb-object dependencies), while the character branch replaces traditional self-attention with lightweight graph convolutional networks (GCNs). By constraining local interactions between characters through adjacency matrices, this approach reduces computational complexity. Additionally, the model implements an LPE layer, which generates word order positional information for source and target languages through end-to-end training, replacing traditional fixed-pattern sinusoidal encoding. This mechanism enables the model to adjust word order autonomously. At the feature interaction level, a context-aware cross-granularity attention gating mechanism dynamically regulates the fusion ratio of subword semantic features and character morphological features via learnable weight allocators, thereby achieving cross-granularity semantic compensation.
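To make the adjacency-constrained character branch more concrete, the following is a minimal NumPy sketch of a single graph-convolution step over a character sequence. It is an illustration under stated assumptions, not the authors' implementation: the chain-shaped adjacency with a one-character window, the symmetric degree normalization, the ReLU activation, and the names `chain_adjacency` and `gcn_layer` are all hypothetical choices, since the source does not specify how the adjacency matrix or the GCN layer is defined. The width of 32 matches the best character width reported in the ablation study.

```python
import numpy as np

def chain_adjacency(n_chars, window=1):
    """Adjacency matrix linking each character to neighbours within `window`.

    Self-loops are included. The chain structure is an assumption made for
    illustration; the source does not define the character graph.
    """
    idx = np.arange(n_chars)
    return (np.abs(idx[:, None] - idx[None, :]) <= window).astype(np.float64)

def gcn_layer(h, adj, weight):
    """One graph-convolution step: ReLU(D^{-1/2} A D^{-1/2} H W)."""
    deg = adj.sum(axis=1)                      # node degrees (>= 1 due to self-loops)
    norm = adj / np.sqrt(np.outer(deg, deg))   # symmetric degree normalization
    return np.maximum(norm @ h @ weight, 0.0)  # aggregate neighbours, project, ReLU

rng = np.random.default_rng(0)
n_chars, width = 6, 32                         # 6 characters, character width 32
h_char = rng.normal(size=(n_chars, width))     # stand-in character embeddings
w = rng.normal(size=(width, width)) * 0.1      # stand-in layer weights
adj = chain_adjacency(n_chars)
out = gcn_layer(h_char, adj, w)
```

Because each row of the adjacency matrix has only a few non-zero entries, each character is updated from its immediate neighbours only, which is the source of the cost saving relative to full self-attention over all character pairs.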

3.1 Subword-Character Multi-Scale Transformer

Algorithm 1 gives the pseudo-code for the interaction mechanism between the subword branch and the character branch.

ALGORITHM 1. Two-branch interactive flow.

    def cross_branch_interaction(h_char, h_subword, c_prev):
        # Gating network: blend the current character features with the previous state
        gate = sigmoid(W_gate @ concatenate(h_char, c_prev))
        c_current = gate * h_char + (1 - gate) * c_prev
        # Cross-granularity attention: character queries attend over subword keys and values
        q = h_char @ W_Q
        K, V = h_subword @ W_K, h_subword @ W_V
        attn_weights = softmax(q @ K.T / sqrt(d_k))
        output = LayerNorm(attn_weights @ V + c_current)
        return output, c_current

Through this cross-attention computation, the two branches can adaptively capture dependencies between character-level fine-grained features and subword-level semantic features, enabling dynamic fusion and complementary integration of contextual information.
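The flow in Algorithm 1 can be sketched as runnable NumPy code. This is a hedged illustration rather than the authors' implementation: the helper functions, the row-vector convention (features multiplied on the left of each weight matrix, so the gate weight has shape `(2d, d)`), the layer normalization without learned scale and bias, and the random weights are all assumptions introduced here.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalization only; learned scale and bias are omitted (assumption).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def cross_branch_interaction(h_char, h_subword, c_prev, params):
    w_gate, w_q, w_k, w_v = params
    # Gating network: decide per feature how much of the new character state to keep.
    gate = sigmoid(np.concatenate([h_char, c_prev], axis=-1) @ w_gate)
    c_current = gate * h_char + (1.0 - gate) * c_prev
    # Cross-granularity attention: character queries over subword keys and values.
    q = h_char @ w_q
    k, v = h_subword @ w_k, h_subword @ w_v
    attn_weights = softmax(q @ k.T / np.sqrt(k.shape[-1]))
    output = layer_norm(attn_weights @ v + c_current)
    return output, c_current

rng = np.random.default_rng(0)
d, n_char, n_sub = 8, 5, 3                     # toy sizes chosen for illustration
h_char = rng.normal(size=(n_char, d))
h_subword = rng.normal(size=(n_sub, d))
c_prev = rng.normal(size=(n_char, d))
params = (rng.normal(size=(2 * d, d)),         # W_gate
          rng.normal(size=(d, d)),             # W_Q
          rng.normal(size=(d, d)),             # W_K
          rng.normal(size=(d, d)))             # W_V
output, c_current = cross_branch_interaction(h_char, h_subword, c_prev, params)
```

The output has one row per character, so the fused representation stays aligned with the character sequence while drawing semantic content from the subword sequence.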

To demonstrate the advantages of the dual-branched collaborative mechanism, we use Chinese-to-English translation as an example: When processing the Chinese compound word “银行” (yínháng, “bank”), the subword branch may correctly segment it into “银” (yín) and “行” (háng), while the character branch reinforces the distinction between “银行” (bank) and the homophonic phrase “很行” (hěn xíng, “very capable”) by leveraging the local morphological features of the “钅” radical (metal component) in “银” and the semantic context of “行.” This cross-granularity feature complementarity enables the model to dynamically resolve subword segmentation ambiguities.

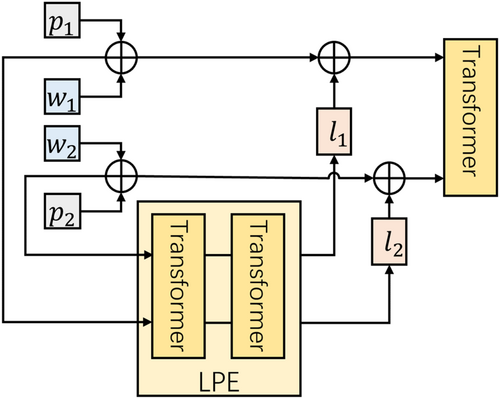

3.2 Learnable Positional Encoding

The proposed LPE mechanism overcomes the static limitations of traditional sinusoidal encoding. It is an independent neural network block composed of two transformer layers. The first transformer layer is responsible for source-language positional feature extraction: it learns relative positional relationships between words in the input sequence through self-attention while fusing the initial subword embeddings with the sinusoidal positional encoding. The second transformer layer (the right-side network) performs cross-lingual positional remapping, learning target-language word order patterns through end-to-end training so as to map source-language positional encodings to the ideal target-language positions. This layer incorporates FastAlign alignment supervision signals to enforce alignment between network outputs and target-language positional distributions. It takes the concatenation of the sinusoidal positional encoding and the subword embeddings as input, enabling it to learn reordered positional information in the target language.

Unlike traditional transformers, which rely rigidly on sinusoidal positional encoding to fix word order, our approach adjusts positional information flexibly by integrating sinusoidal positional encoding with subword embeddings. When a subword token at a given position in the source language optimally corresponds to a different position in the target-language order, the learnable positional encoder learns the distribution characteristics of that target position from its input. This learned information is then used to inject cross-linguistic pre-reordering signals into the source-language encoding, effectively mitigating translation errors caused by source-target word order misalignment.
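As a rough illustration of the inputs described above, the sketch below builds the standard sinusoidal positional encoding, concatenates it with subword embeddings, and derives a supervision target from a word alignment. Several parts are assumptions made for illustration only: the function names, the toy alignment, and in particular the idea of using the sinusoidal encoding of the aligned target position as the supervision target, since the source does not state the exact form of the FastAlign supervision signal.

```python
import numpy as np

def sinusoidal_encoding(n_pos, d_model):
    """Standard sinusoidal positional encoding (d_model assumed even)."""
    pos = np.arange(n_pos)[:, None]
    even_idx = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, even_idx / d_model)
    pe = np.zeros((n_pos, d_model))
    pe[:, 0::2] = np.sin(angles)   # even dimensions
    pe[:, 1::2] = np.cos(angles)   # odd dimensions
    return pe

def lpe_inputs_and_targets(sub_emb, alignment):
    """Build the concatenated LPE input and a hypothetical reordering target.

    `alignment[i]` is the target-side position aligned to source token i,
    e.g. as produced by an external aligner such as FastAlign.
    """
    n, d = sub_emb.shape
    n_pos = max(n, int(alignment.max()) + 1)
    pe = sinusoidal_encoding(n_pos, d)
    lpe_input = np.concatenate([sub_emb, pe[:n]], axis=-1)  # shape (n, 2d)
    target = pe[alignment]                                  # encoding of aligned positions
    return lpe_input, target

rng = np.random.default_rng(0)
sub_emb = rng.normal(size=(4, 8))      # 4 source subwords, toy dimension 8
alignment = np.array([0, 1, 3, 2])     # hypothetical alignment: last two tokens swap
lpe_input, target = lpe_inputs_and_targets(sub_emb, alignment)
```

In this toy case the third source token is supervised toward the encoding of target position 3 rather than position 2, which is the kind of pre-reordering signal the paragraph above describes.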

4 Experiments

To comprehensively validate the effectiveness of the proposed model, we conduct rigorous experiments across three widely recognized English-centric machine translation benchmarks: WMT'14 English-German (En-De) [29] with 4.5 M parallel sentence pairs, WMT'17 Chinese-English (Zh-En) [30] containing 20 M bilingual segments, and WMT'16 English-Romanian (En-Ro) [31] comprising 0.6 M training instances. All datasets undergo standardized preprocessing, including byte-pair encoding (BPE) with 32 K merge operations, sentence normalization, and length-based filtering to ensure data quality.

4.1 Datasets

The WMT'14 English-German (En-De) [29] dataset comprises 4.5 million standardized bilingual sentence pairs, constructed through high-quality training set preparation steps including sentence length filtering, punctuation normalization, and duplicate data cleaning, with newstest2016 serving as the validation benchmark for hyperparameter tuning and the more challenging newstest2014 as the final performance test set.

The WMT'16 English-Romanian (En-Ro) [31] dataset employs a preprocessing framework jointly developed by [32, 33], implementing a cross-lingual Byte Pair Encoding (BPE) fusion strategy to build a vocabulary containing 40,000 shared subword units, where newsdev2016 and newstest2016 are designated for model validation and testing respectively.

The WMT'17 Chinese-English (Zh-En) [30] dataset presents greater challenges, integrating full resources from the WMT'17 competition to cover formal texts from the United Nations multilingual conference parallel corpus (15.8 million sentence pairs), multi-domain data from the CWMT corpus (9 million sentence pairs), and time-sensitive content from news commentary corpora (332,000 sentence pairs). Utilizing 18 million high-quality bilingual instances, it strictly adopts the officially partitioned newsdev2017 and newstest2017 as validation and testing benchmarks to ensure experimental result comparability and reliability.

4.2 Experimental Setup

4.2.1 Evaluation Metrics

4.2.2 Implementation Details

The experimental configuration used uniform parameter initialization with all weights set to 0.1, a value chosen to maintain numerical stability during the initial training phase and prevent gradient explosion. Computational workloads were distributed across 8 NVIDIA Tesla V100 GPUs (each with 32 GB VRAM) through synchronous data parallelism, employing optimized CUDA kernel implementations and Tensor Core-enabled mixed-precision training. We adopted the Adam optimizer [34], slightly adjusting the second-moment decay rate from the conventional 0.999 to better accommodate the statistical characteristics of multilingual training corpora. The learning rate followed a cyclic scheduling strategy with a base value of 3e-4, incorporating a 10,000-step warmup phase and a cosine annealing mechanism over 300,000 training iterations. Gradient accumulation (4 steps per update) was used to maintain the effective batch size, while gradient clipping at a norm of 2.0 ensured stable optimization.
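The learning-rate schedule described above can be sketched as a small function. This is one plausible reading, not the authors' code: it assumes a linear warmup from zero, a single cosine cycle that decays to zero, and that the 300,000 iterations include the warmup steps. The function name and these details are hypothetical.

```python
import math

def learning_rate(step, base_lr=3e-4, warmup_steps=10_000, total_steps=300_000):
    """Linear warmup followed by cosine annealing (single cycle, assumed)."""
    if step < warmup_steps:
        # Warmup: scale linearly from 0 up to the base learning rate.
        return base_lr * step / warmup_steps
    # Cosine annealing: progress runs from 0 (end of warmup) to 1 (end of training).
    progress = min((step - warmup_steps) / (total_steps - warmup_steps), 1.0)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))

# Sample the schedule at a few points of interest.
schedule = {step: learning_rate(step) for step in (0, 5_000, 10_000, 155_000, 300_000)}
```

Under these assumptions the rate peaks at the base value at step 10,000, falls to half of it at step 155,000 (the midpoint of the cosine phase), and reaches zero at step 300,000.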

The parameter configuration of the LPE module is as follows. The dimensionality of all input/output word embeddings is 512, and the output dimensions of the attention layers and feed-forward layers are also 512. Each attention layer has 8 heads, each with a dimensionality of 64. The dropout rate is set to 0.1 for regularization. The LPE module is likewise optimized with Adam.

4.3 Loss Function

5 Results

5.1 Ablation Study

To validate the effectiveness of the LPE proposed in this article, we conducted ablation experiments on LPE using the WMT'14 English-German (En-De) dataset. As shown in Table 1, the BLEU score of the Transformer Base increased by 0.8 after incorporating LPE, indicating that LPE enhances machine translation performance. Our proposed dual-path multiscale transformer based on characters and subwords achieved a BLEU score of 27.8 without LPE, and this score increased by 1.4 after adding LPE. This demonstrates that the proposed SCMT outperforms the transformer, and compared with sinusoidal positional encoding, LPE can flexibly capture positional information in the target language, improving the model's dynamic adaptation capability for translation.

| Model | Param (M) | BLEU |
|---|---|---|
| Transformer Base (−LPE) | 64.6 | 26.9 |
| Transformer Base (+LPE) | 70.5 | 27.7 |
| SCMT (−LPE) | 74.3 | 27.8 |
| SCMT (+LPE) | 77.8 | 29.2 |

We further compared LPE with mainstream relative positional encodings on the WMT'14 En-De task. As shown in Table 2, LPE achieves a 0.7 BLEU improvement over RoPE on the standard metric and exhibits the smallest performance degradation in long-sequence scenarios (> 512 tokens). LPE outperforms ALiBi by 0.8 BLEU while also performing better in long-sequence contexts. T5-relative scores 1.0 BLEU below LPE and shows the largest degradation in long-sequence scenarios, where its BLEU score falls to 25.3. This suggests that LPE mitigates long-distance dependency attenuation through end-to-end learning of target-language positional information.

| Positional encoding type | Param (M) | BLEU | Long-sequence (> 512) BLEU |
|---|---|---|---|
| T5-relative | 72.1 | 28.2 | 25.3 |
| ALiBi | 70.8 | 28.4 | 26.7 |
| RoPE | 71.3 | 28.5 | 26.9 |
| LPE (Ours) | 77.8 | 29.2 | 27.8 |
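The long-sequence degradation discussed for Table 2 can be checked with a short calculation. The snippet below simply subtracts the long-sequence BLEU from the overall BLEU for each row of the table; the variable names are chosen here for illustration.

```python
# (overall BLEU, long-sequence BLEU) taken from Table 2
results = {
    "T5-relative": (28.2, 25.3),
    "ALiBi": (28.4, 26.7),
    "RoPE": (28.5, 26.9),
    "LPE": (29.2, 27.8),
}

# BLEU drop when moving from the full test set to sequences longer than 512 tokens
drops = {name: round(full - long_seq, 1) for name, (full, long_seq) in results.items()}

smallest = min(drops, key=drops.get)   # encoding with the least degradation
largest = max(drops, key=drops.get)    # encoding with the most degradation
```

The drops come out as 2.9 (T5-relative), 1.7 (ALiBi), 1.6 (RoPE), and 1.4 (LPE), consistent with the statement that LPE degrades least and T5-relative degrades most on long sequences.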

The SCMT proposed in this article is composed of dual branches for subwords and characters. To validate that the character branch can learn local fine-grained features and enhance model performance when combined with the subword branch, we evaluated the character branch on the challenging WMT'17 Chinese-English (Zh-En) task. As shown in Table 3, with the addition of LPE, Sub-SCMT improved on Sub-Transformer Big by 0.8 BLEU (25.2 vs. 24.4). Although Char-Transformer Big scores lower than Sub-Transformer Big (23.6 vs. 24.4), Char-SCMT reaches 24.8, which supports the effectiveness of the character branch. When the subword branch and character branch are combined, translation performance rises further to 26.2 BLEU. This indicates that the dynamic interaction between character-level and subword-level feature information is key to improving machine translation quality.

| Model | Param (M) | BLEU |
|---|---|---|
| Sub-Transformer Big | 260.1 | 24.4 |
| Char-Transformer Big | 257.1 | 23.6 |
| Sub-SCMT | 265.3 | 25.2 |
| Char-SCMT | 263.0 | 24.8 |
| SCMT (Sub + Char) | 292.2 | 26.2 |

The previous experiments demonstrated the effectiveness of the character branch. To further investigate the impact of character width on model performance, we ran ablation experiments with character widths of 16, 32, 64, 128, and 256 on the WMT'14 English-German (En-De) translation task; Table 4 presents the results. At a width of 16, the character branch already captures fine-grained features, raising the BLEU score to 28.6. As the width expands from 16 to 256, the number of model parameters grows steadily from 74.9 M to 83.5 M, with peak performance achieved at a width of 32, corresponding to 77.8 M parameters and a BLEU score of 29.2, a 1.5-point improvement over the baseline model (Transformer + LPE, 27.7). When the width increases further to 64, 128, or 256, the BLEU scores decline despite the continued growth in parameter count, indicating that excessive width may lead to over-parameterization. This experiment suggests that the SCMT architecture achieves its best performance balance at a width of 32, enhancing translation quality without significantly increasing model complexity.

| Model | Width | Param (M) | BLEU |
|---|---|---|---|
| Transformer + LPE | - | 70.5 | 27.7 |
| SCMT | 16 | 74.9 | 28.6 |
| SCMT | 32 | 77.8 | 29.2 |
| SCMT | 64 | 79.2 | 28.6 |
| SCMT | 128 | 81.3 | 28.5 |
| SCMT | 256 | 83.5 | 28.4 |

5.2 Comparisons With Other Methods

We designed comparative experiments to evaluate SCMT against other advanced methods on the WMT'14 English-German (En-De), WMT'16 English-Romanian (En-Ro), and WMT'17 Chinese-English (Zh-En) tasks. Table 5 compares models on the WMT'16 En-Ro task. The UMST model achieved the highest BLEU score of 35.5 with 61.3 M parameters, while the proposed SCMT model obtained a BLEU score of 34.8 with 71 M parameters; although lower than UMST, this still exceeds TNT (34.1) and DELIGHT (34.7). A plausible explanation for the gap to UMST is that Romanian exhibits flexible SVO/SOV word order with clitic doubling, necessitating dynamic positional adaptation. While our LPE mechanism operates under the target-side gradual reordering assumption, it may struggle with the discontinuous dependencies prevalent in the language's non-configurational syntax. In contrast, UMST employs a fixed-window local attention mechanism that might better capture such local dislocation patterns through structural inductive biases. In the WMT'14 En-De task (Table 6), SCMT achieved a BLEU score of 28.7 with 75 M parameters, a 0.6 gap compared with the 130.3 M-parameter Multi-Unit model (29.3 BLEU) but with better parameter efficiency, using 55.3 M fewer parameters. For the WMT'17 Zh-En task (Table 7), SCMT achieved state-of-the-art performance with 25.9 BLEU using 290.1 M parameters, outperforming UMST and TranSFormer by 0.7 and 0.3 BLEU points, respectively.

Compared with previous methods, SCMT exhibits significant advantages due to its LPE design, which dynamically captures word order information in both source and target languages, effectively addressing the limitations of traditional fixed positional encoding in modeling word order. Additionally, the character branch design enables fine-grained multi-scale modeling, particularly demonstrating enhanced feature extraction capabilities for linguistic units with complex structures like Chinese characters. These two technologies form a synergistic effect, significantly improving the model's adaptability to different language pairs without excessively increasing parameter count (SCMT's parameter scale is controlled within ±7% of comparable models). Especially in the Chinese-English translation task, the character branch's modeling advantage for subword information such as Chinese radicals and strokes is fully unleashed, enabling the model to achieve remarkable performance improvements with only a 2.5% increase in parameters.

Our method demonstrates particularly outstanding performance in morphologically rich languages. As shown in Table 8, when the inflected form “învaţă” (he learns) of the Romanian verb “a învăța” (to learn) is segmented by BPE into “în + vaţă,” the character branch collaboratively corrects tense misjudgments caused by subword segmentation through morphological feature analysis of “ţ” (t with cedilla) and “ă” (a with breve).

| Inflected verb form | Reference translation (EN) | Baseline output | SCMT output |
|---|---|---|---|
| a învăța (infinitive) | to learn | to learn | to learn |
| Învață (3sg present) | he learns | learn (missing subject) | he learns |
| Învățau (3pl imperfect) | they were learning | they learned (tense error) | they were learning |
| Învățând (gerund) | learning | to learn (POS error) | learning |
| a fi învățat (perfect) | to have learned | to learn (aspect error) | to have learned |

6 Conclusion

This article proposes a multi-scale transformer architecture, the subword-character multi-scale transformer with learnable positional encoding (SCMT), which improves machine translation performance by integrating linguistic features at different granularities. The core innovation of SCMT lies in its synergistically operating dual-branch structure, which forms a bimodal feature fusion framework. The subword branch employs the standard transformer architecture to capture global semantic relationships between words, while the character branch uses fine-grained modeling to capture local morphological features at the character level. The two branches interact dynamically through a context-aware cross-attention mechanism, exchanging character-level and subword-level feature information and thereby modeling global relationships while capturing subtle features. This addresses the fine-grained information loss in traditional multi-scale transformers caused by the dominance of coarse-grained features and the neglect of character-level features. The architecture also introduces an LPE module, which adaptively captures the positional relationships of subword sequences in both source and target languages through end-to-end training. This enhances the model's ability to model positional information of source and target words, with advantages in long-range dependency modeling and fine-grained perception of morphologically complex languages. The synergy between SCMT's cross-granularity feature extraction mechanism and dynamic positional modeling provides a new technical paradigm for processing morphologically complex languages. Experimental results show that SCMT achieves BLEU improvements on mainstream machine translation tasks such as WMT'14 English-German (En-De), WMT'17 Chinese-English (Zh-En), and WMT'16 English-Romanian (En-Ro).

Author Contributions

Wenjing Yao: writing – review and editing, writing – original draft, validation, data curation, resources. Wei Zhou: writing – original draft, writing – review and editing, software, formal analysis.

Ethics Statement

The authors have nothing to report.

Conflicts of Interest

The authors declare no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.