Classifications and Analysis of Caching Strategies in Information-Centric Networking for Modern Communication Systems

ABSTRACT

Information-Centric Networking (ICN) is a paradigm shift in network design that prioritizes content distribution over host-centric communication. Integrating in-network caches into ICN is essential for effective content distribution: it raises cache hit rates, boosts throughput, and lowers transmission delays. This paper provides a comprehensive exploration and classification of ICN caching strategies across diverse domains, including the Internet of Things (IoT), Internet of Vehicles (IoV), Mobility ICN, Edge Computing, and Fog Computing. It further offers a detailed analysis of these strategies based on their proposed methods, identifying key trends, strengths, and limitations. Through a balanced performance assessment, the study evaluates these strategies using critical metrics such as cache hit ratio, efficiency, retrieval latency, security, and throughput. Furthermore, the paper highlights open challenges and presents future research directions to advance caching mechanisms, fostering the continued evolution of ICN for scalable and efficient content delivery for users.

1 Introduction

The traditional address-based communication, grounded in distinctive IP addresses, established the foundation for the host-centric framework of the Internet [1]. However, the advent of content delivery enterprises and streaming platforms, exemplified by entities like Amazon [2], Netflix [3], Prime Video [4], and Disney+ Hotstar [5], in conjunction with prominent social networks such as Facebook [6], Instagram [7], and YouTube [8], swiftly transformed the landscape, marking a new era characterized by content-centric communication. As we delve into the second phase of this evolutionary process, it becomes apparent that content delivery platforms no longer merely serve as basic information channels; instead, they function as catalysts, fundamentally reshaping the essential nature of online interactions within the IP architecture [9, 10]. However, amid these advancements, it is imperative to scrutinize the limitations and challenges that the conventional IP-based internet infrastructure encounters in effectively delivering content, particularly due to network bottlenecks and slow content delivery when catering to large groups of audiences.

CDNs are introduced to address challenges inherent in traditional architecture by integrating replica servers into the distribution process [11]. These strategically positioned replica servers help alleviate network congestion, ensuring more efficient content delivery. However, with the ongoing increase in internet traffic and the growing demand for higher-quality service, implementing CDNs necessitates a substantial financial investment. Deploying these servers and the meticulous planning to ensure optimal locations with sufficient capacity are time-consuming processes [12, 13]. The rapid expansion of network traffic in recent years has led to constraints in the IT infrastructure and a shortage of storage space for existing CDN systems. Moreover, cloud computing provides adaptable resources, showcasing high scalability and the ability to manage extensive data loads [14-16]. This flexibility makes cloud computing an excellent choice for expanding CDNs and optimizing their workloads. However, insufficient attention has been directed toward the challenge of delays for end-users and data congestion when multiple cloud computing resources are concentrated in a single data center [17]. In contrast to conventional methods, P2P solutions have demonstrated significant advantages in content delivery and sharing applications. In P2P, each user contributes their resources to the streaming process. Nevertheless, suboptimal performance is achieved when numerous users simultaneously request data [18-21].

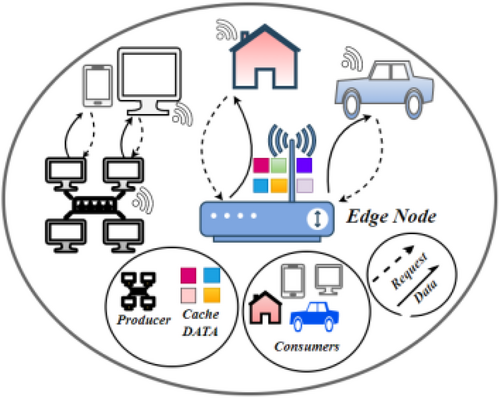

In addressing challenges related to content delivery, researchers are exploring a novel solution known as ICN [22-24]. The initiatives within the ICN framework aim to create an internet architecture that could potentially replace the current dominant IP-centric model. ICN reshapes internet architecture by introducing name-based routing; Figure 1 shows its main entities: consumers, producers, and CRs. In this diagram, consumers represent end-users or devices requesting content, while producers are entities that generate or provide content. CRs facilitate content delivery by storing content objects in their caches and serving them on request. Together, these components form the backbone of ICN, reshaping how content is accessed and distributed across networks. In 2007, DONA [25] proposed flat, self-certifying names for information objects with integrated security and persistence features. Since then, various ICN research efforts [26] have emerged, including EU-supported projects like PSIRP [28, 29] and its successor PURSUIT [27], and U.S.-backed initiatives like NDN [30] and CCN [31]. Additionally, projects such as MobilityFirst [32] and GreenICN [33] contribute to advancing ICN architecture.

1.1 Research Gap and Motivation

Despite increasing interest in ICN, most surveys focus narrowly on specific aspects of ICN caching. They often lack a comprehensive, cross-domain analysis of caching strategies, limiting their relevance across varied network environments. This results in a fragmented understanding of caching mechanisms and their broader applicability, creating a significant gap in the current body of research.

Caching strategies that work well in resource-constrained IoT environments, where connectivity is intermittent, may not be effective in high-mobility networks like vehicular networks, which require dynamic content distribution due to rapidly changing locations. Similarly, caching approaches optimized for edge computing, which utilizes localized resources and proximity to users, may not be suitable for fog computing, which involves hierarchical distributed computing and demands low-latency access. Therefore, a domain-specific analysis of caching strategies is crucial to fully understand their strengths, limitations, and applicability.

This paper addresses this gap by providing a cross-domain analysis of ICN caching mechanisms across various domains, including IoT, vehicular networks, mobility, edge computing, and fog computing. By exploring and classifying caching strategies within these contexts, the paper offers insights into their strengths and limitations and provides a more integrative assessment of their performance.

1.2 Our Contributions

- The paper emphasizes the core concepts of ICN and provides a classification and analysis of ICN caching strategies.

- The paper explores various caching solutions across multiple domains, including IoT, vehicular networks, mobile ICN, fog computing, and edge computing, systematically assessing their strengths, limitations, and applicability.

- A comparative analysis of existing surveys is conducted, highlighting their contributions and demonstrating how this study broadens the discussion of ICN strategies.

- Performance metrics, including caching efficiency, latency, cache hit rates, network security, and throughput, are analyzed to identify areas for improvement in network performance.

- Finally, the paper identifies open challenges and proposes future research directions, offering insights to advance the field of ICN.

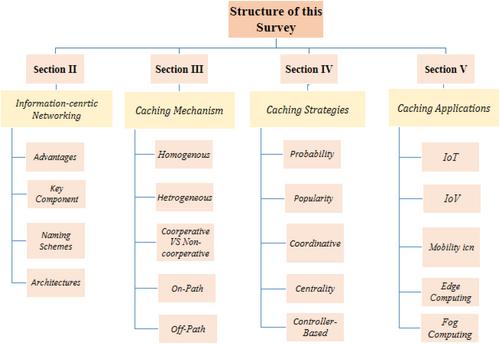

1.3 Paper Organization

The remainder of the article is organized as depicted in Figure 2: Section 2 provides an in-depth discussion of ICN features, architectures, and naming schemes. Sections 3 and 4 sequentially present the categories of caching, followed by an examination of existing caching strategies. Section 5 explores the role of caching in diverse applications, including IoT, IoV, mobility, Edge computing, and Fog computing. Section 6 conducts a comparative analysis with existing surveys, while Section 7 presents recent research advances on key ICN performance indicators. Sections 8 and 9 discuss future directions and conclude the article, respectively.

2 Information Centric Networking (ICN)

ICN is a paradigm shift in networking where content is accessed by its name rather than its location. This section is organized as follows: firstly, the advantages of ICN are highlighted, showcasing its ability to improve content delivery, enhance security, and enable efficient caching mechanisms. Following this, the key components of ICN, including content-centric routing and in-network caching, are discussed. Lastly, the naming schemes of ICN are discussed, followed by an examination of its architectures to demonstrate the diverse approaches within the ICN framework.

2.1 Advantages

- Content Centric Routing

Content-centric routing, a core feature of ICN protocols, revolutionizes network architecture by shifting the responsibility of name resolution from the application layer to the network layer [34]. This innovation proves particularly advantageous in fragmented networks, where traditional location-based addressing may falter due to irregular or intermittent connectivity disruptions. For example, in environments without fixed network infrastructure, where connections are ad hoc, ICN's content name-based routing allows content to be replicated based on its name rather than its physical location. This means content can be efficiently distributed without needing servers like DNS resolvers at the application layer.

- Content-Based Access Management

ICN enables control over data access, restricting it to specific users or groups through content-based security measures [35]. This capability enhances secure exchanges among peer users, particularly in remote network regions. For instance, consider a financial institution utilizing ICN. With content-based access management, sensitive financial data can be restricted to authorized personnel only. This ensures that confidential information remains secure and accessible only to those with proper clearance, even in remote branches with limited connectivity.

- Data Objects Integrity

ICN architecture revolves around named data objects, where various proposals within ICN incorporate the concept of ‘self-certifying data’ into their naming schemes [36]. This involves embedding cryptographic information about the data's origin, enabling authentication without external entities. For instance, in a distributed sensor network utilizing ICN, each sensor could embed cryptographic signatures in its data packets, allowing recipients to verify the authenticity of the data without relying on centralized authentication servers. Consequently, security and reliability are significantly enhanced within ICN architectures.
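The self-certifying idea can be illustrated with a minimal sketch in which a flat name is derived from a SHA-256 digest of the payload, so that any receiver can check integrity without consulting a third party. The function names and the `sha256:` prefix are assumptions made for illustration; ICN proposals typically also bind the name to the publisher's public key and rely on digital signatures, which this sketch omits.

```python
import hashlib


def make_name(payload: bytes) -> str:
    """Derive a hypothetical self-certifying name from the payload digest."""
    return "sha256:" + hashlib.sha256(payload).hexdigest()


def verify(name: str, payload: bytes) -> bool:
    """Recompute the digest and compare it with the one embedded in the name."""
    return name == make_name(payload)


reading = b"sensor-17 temperature=21.5C"
name = make_name(reading)
print(verify(name, reading))                        # genuine payload
print(verify(name, b"sensor-17 temperature=99C"))   # tampered payload
```

Because the check needs only the name and the payload, it can in principle be performed by consumers and by intermediate caches alike.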

- In-Network Caching

In-network caching, a fundamental aspect of ICN, involves storing frequently accessed content strategically within the network infrastructure [37]. This approach effectively handles large volumes of traffic, thereby reducing network congestion. For example, caching at access nodes can mitigate congestion in backhaul links by delivering content from nearby caches.

- Dynamic Networking

ICN eliminates the need for a continuous end-to-end connection [38]. This characteristic promotes seamless integration of conventional network setups and fragmented alternatives. In traditional, stable network environments, such as home Wi-Fi or LANs, ICN enhances data retrieval and distribution by reducing dependency on constant direct connections. Meanwhile, in dynamic and less stable networks, like mobile or ad-hoc networks in disaster recovery scenarios, ICN allows devices to intermittently connect without disrupting communication. Data can be cached and retrieved from multiple points, ensuring continuous access even when network paths are unstable. This versatility makes ICN a robust solution for various networking challenges, enhancing efficiency and reliability across different network types.

2.2 Key Components

- Naming

In the realm of ICN, a significant departure from conventional networking methodologies is observed, particularly in how data is identified and accessed. Unlike traditional networks relying on hierarchical URLs, ICN uniquely assigns identities to data based on their names rather than their physical locations [41]. This shift enables a more versatile and dynamic approach to data retrieval, wherein flat names are frequently utilized to represent data. However, in earlier ICN architectures, such as CCN, a distinct hierarchical organization of names prevails, enhancing readability and accessibility for users. This characteristic underscores ICN's flexibility, accommodating both flat and hierarchical naming conventions to suit diverse network architectures and user preferences.

- Caching

Caching is a notable feature in ICN networks, facilitating content storage directly within network nodes. Each content item has the potential to be cached along the delivery route within these network nodes. Consequently, when subsequent requests for the same content arise, it can be efficiently delivered through the caches of these ICN nodes [42]. Implementing in-network caching occurs within ICN nodes between the content consumer and the content producer. This strategic approach not only reduces the workload on the content producer but also enhances data availability. Furthermore, in-network caching is pivotal in balancing load within the network, thereby decreasing content retrieval latency.

- Routing

Routing in the ICN framework represents a strategic approach to managing numerous requests for the same content originating from a diverse user set. The process adheres to a specific protocol, wherein only the initial request is transmitted to a potential content source, and subsequent requests are systematically recorded and temporarily stored within the memory of the ICN node [43]. When the data is received from the source, it is then disseminated to each requester through the same interface where their original requests were initiated. As a result, ICN inherently supports efficient multicast, optimizing content delivery to multiple users.

- Mobility

In the domain of ICN, mobility remains unaffected by the absence of a fixed connection structure. ICN operates on a model where no established connections exist, meaning that users can move freely without disrupting their access to content [44]. ICN's request-driven approach treats each request independently, allowing seamless content retrieval for mobile users, regardless of their location or when the request is made. This design, crafted for mobility, ensures continuous and efficient data access in dynamic environments.

- Security

ICN provides robust self-protected security by utilizing encrypted and self-certified content, ensuring that only authorized users can access the content [45]. Unlike traditional methods where the connection between the data producer and the consumer is secured, ICN employs a distinct security approach. In ICN, the content producer incorporates a signature directly into the content. Consequently, consumers and intermediate caching points verify the legitimacy of the content using specific keys published by the content producer.

2.3 Naming Schemes

- Hierarchical Naming

Hierarchical naming involves the construction of a name by assembling various components to represent an application's function, resembling the structure found in web addresses [46]. This approach simplifies the creation of easily comprehensible names and supports the organized management of many names. Nevertheless, challenges arise when addressing very small entities.

- Flat Naming

In contrast to hierarchical names, flat names lack inherent meaning or structure. Their unconventional nature makes them less intuitive for people to comprehend, as they don't resemble typical words [47]. These names present difficulties when naming frequently changing entities, as they are not well-suited for new or dynamic content.

- Attribute-Value-Based Naming

A name based on attributes and values is similar to having a collection of specific details about something [48]. Each detail is associated with a name, a category indicating the type of detail, and a list of potential options (such as creation date, content type, year, etc.). When these details are combined, they form a distinct name for the item and provide additional information about it. This naming system proves beneficial when searching for items using specific keywords. However, ensuring a quick and precise match isn't always straightforward due to the possibility of multiple things sharing the same keywords.

- Hybrid Naming

A hybrid name involves blending various naming approaches discussed earlier to optimize the network's performance, enhance speed, and ensure information security and privacy [49]. For example, incorporating features from one method can facilitate efficient searches by intelligently combining names; utilizing concise and straightforward names from another method can conserve space, and adding details aids in keyword-based searches while maintaining security. However, crafting these unique hybrid names can be challenging, particularly when dealing with dynamic entities like real-time information.
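To make the contrast between the naming schemes above concrete, the following sketch builds a hierarchical name from components, a flat name from a truncated digest, and an attribute-value lookup over a small catalog. All helper names, the catalog entries, and the 16-character truncation are hypothetical choices for illustration, not part of any ICN specification.

```python
import hashlib


def hierarchical_name(*components: str) -> str:
    """Join components into a URL-like hierarchical name."""
    return "/" + "/".join(components)


def flat_name(payload: bytes) -> str:
    """Flat name: an opaque digest with no human-readable structure."""
    return hashlib.sha256(payload).hexdigest()[:16]


def matches(attributes: dict, query: dict) -> bool:
    """Attribute-value match: every queried attribute must agree."""
    return all(attributes.get(key) == value for key, value in query.items())


# Hypothetical catalog of items described by attribute-value pairs.
catalog = {
    "clipA": {"type": "video", "year": 2023},
    "clipB": {"type": "video", "year": 2023},
    "paperC": {"type": "pdf", "year": 2023},
}

print(hierarchical_name("campus", "lab1", "temperature", "v2"))
print(flat_name(b"some content"))
hits = [item for item, attrs in catalog.items()
        if matches(attrs, {"type": "video", "year": 2023})]
print(hits)  # more than one item can share the same attribute values
```

The last lookup returns two items, which illustrates why attribute-based names alone do not always yield a unique match.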

2.4 Architectures

- Content Centric Networking (CCN)

CCN is acknowledged as one of the most popular and user-friendly architectures in Information Centric Networking [50]. In CCN, a client initiates an information request within the network by transmitting an ‘INTEREST,’ which travels through a series of routers or nodes. These nodes communicate with their neighboring nodes to locate the requested information. If the desired content is found at any of these nodes, it is then directed back to the requester. Importantly, in CCN's operation, all intermediate forwarding nodes store a copy of the requested data in their memory for potential future requests along the same path. In cases where the content is not discovered along the path, caching occurs at the central server.

- Data Oriented Networking Architecture (DONA)

The DONA architecture is recognized as a notable platform within the domain of ICN, choosing flat names over hierarchical naming. This architectural choice presents numerous advantages, primarily focusing on the capability to cache information at the network layer. This is made possible through concepts such as autonomous systems (AS) and LCD integrated into its design. The use of AS ensures the seamless retrieval of information, even in scenarios where nodes are temporarily unavailable due to mobility, as the information is cached within a node incorporated into the AS.

DONA's robust cryptographic capabilities empower users to authenticate their requests while it still relies on IP and routing addresses at both local and global levels [51]. A pivotal component in DONA is the unique server known as RH, which plays a critical role in resolving names as network operations progress. Integrated into the AS, RHs and routers operate under the network's routing policies.

In DONA, like CCN, data is registered and cached by RHs within the network. When a publisher node wants to make data available, it sends a “REGISTER” message alongside the data to an RH. The RH then stores a copy of this data locally and keeps track of its location. This caching process allows RHs to quickly provide the data to other RHs within the network hierarchy without needing to fetch it again from the original source. By notifying the entire RH hierarchy about the data's availability, DONA ensures efficient data distribution and retrieval across its network segments.

- Publish-Subscribe Internet Routing Paradigm (PSIRP)

In PSIRP, when subscribers want to access information objects, they send a request to a rendezvous handler. This handler acts as a mediator, facilitating the process of finding and retrieving the requested data [52]. The request travels through the nearest router, which checks if the data is available either in its cache or directly from the publisher node.

At the same time, the publisher node registers the data with its own rendezvous handler. This handler then collaborates with the subscriber's handler to locate a match [53, 54]. Once a match is found, the data is routed through internal routers along the registered path to fulfill the subscriber's request.

- Network of Information (NetInf)

The NetInf architecture distinguishes itself within the ICN landscape by utilizing a publisher/subscriber model facilitated by the NRS [55]. Subscribers access data by performing ‘look-ups,’ while publishers register NDOs with specific key attributes. To efficiently manage data transmission, NetInf utilizes the multicast DHT routing algorithm. This approach ensures that consumer nodes consistently receive updated lists of requests. The NRS stations play a pivotal role in optimizing the delivery of NDOs, ensuring efficient and reliable information retrieval across the network.

- Named Data Networking (NDN)

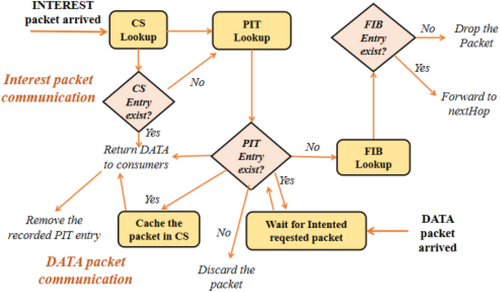

NDN comprises three fundamental components: producers, consumers, and NDN routers. Producers function as content distributors within the NDN framework, while consumers act as content subscribers, interacting with NDN elements such as the CS, PIT, FIB, Faces, and the Forwarding Daemon to fulfill user requests for desired content [56, 57], as specified in Table 1.

In the NDN architecture, communication occurs through INTEREST and DATA packets, as depicted in Figure 3.

| Functional components | Description |
|---|---|
| CS | The CS serves as a caching mechanism within NDN networks, preserving duplicates of DATA packets that traverse Content Routers. Each record in the CS is linked to a content name and its corresponding payload |
| PIT | The PIT is responsible for tracking and managing received INTEREST packets until the requested data arrives or the entry's lifetime expires. Each PIT entry is distinguished by a name prefix and retains a list of input Faces associated with the received INTEREST packets |
| FIB | The FIB operates as a name-based lookup table, associating each entry with a name prefix and featuring an ordered list of output Faces that specify the subsequent hop for forwarding, thereby facilitating multiple paths for data delivery |
| Faces | Faces represent the network interfaces responsible for the transmission and reception of INTEREST and DATA packets. These interfaces can manifest in diverse forms, encompassing direct links between nearby network nodes through Ethernet, overlay communication channels connecting to remote nodes utilizing TCP, UDP, or WebSockets, or interprocess communication channels linking local applications on the same node |
| NFD | The NFD, created by the NDN team, undertakes several crucial tasks. It incorporates NDN face abstraction, manages CR tables, implements the forwarding plane supporting various forwarding strategies, and manages the Routing Information Base (RIB), ensuring synchronization of routes in the RIB with the FIB table. The NFD holds a pivotal position in governing NDN network operations |

Initially, consumers send INTEREST packets to retrieve data. If the requested data is cached locally, intermediate nodes promptly respond with DATA packets [58]. However, if the data isn't cached, the intermediate node performs a lookup in its PIT. If no entry is found, a new PIT entry is created for the incoming request. Subsequently, the node forwards the INTEREST packet based on the FIB rule corresponding to the requested data name.

On the other hand, when a DATA packet arrives, the content router performs a lookup in its PIT. If a corresponding entry is found in the PIT, indicating that the data is awaited by one or more consumers, the entry is removed from the PIT, and the incoming packet is cached in the CS table. Subsequently, the cached packet is forwarded to the intended requesting consumers, adhering to the corresponding PIT entry. If no entry is found, then the DATA packet is discarded.
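The INTEREST and DATA processing steps described above can be sketched as a small router model. This is a simplified illustration under stated assumptions, not the NFD implementation: the `Router` class, its method names, the string face labels, and the single-face FIB entries are all hypothetical, and entry lifetimes, cache replacement, and forwarding strategies are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    fib: dict                                  # name prefix -> outgoing face
    cs: dict = field(default_factory=dict)     # content name -> payload
    pit: dict = field(default_factory=dict)    # content name -> incoming faces

    def longest_prefix_face(self, name):
        """Return the FIB face for the longest matching name prefix, if any."""
        parts = name.strip("/").split("/")
        for end in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:end])
            if prefix in self.fib:
                return self.fib[prefix]
        return None                            # no route known for this name

    def on_interest(self, name, in_face):
        if name in self.cs:                    # CS hit: answer from the cache
            return ("data", in_face, self.cs[name])
        if name in self.pit:                   # already pending: aggregate
            self.pit[name].add(in_face)
            return ("aggregated", None, None)
        self.pit[name] = {in_face}             # new PIT entry, then forward
        return ("forward", self.longest_prefix_face(name), None)

    def on_data(self, name, payload):
        faces = self.pit.pop(name, None)
        if faces is None:                      # no PIT entry: discard DATA
            return []
        self.cs[name] = payload                # cache in the CS
        return sorted(faces)                   # faces to send the DATA back on


router = Router(fib={"/video": "face-up"})
print(router.on_interest("/video/clip1", "face-a"))   # forwarded upstream
print(router.on_interest("/video/clip1", "face-b"))   # aggregated in the PIT
print(router.on_data("/video/clip1", b"chunk"))       # returned to both faces
print(router.on_interest("/video/clip1", "face-c"))   # served from the CS
```

The second request never leaves the router, which is the aggregation behaviour that lets a single returning DATA packet satisfy several consumers.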

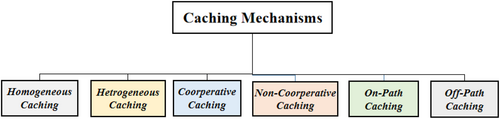

3 Caching Mechanisms

- Homogeneous Caching

In homogeneous caching, content routers named CR1 and CR2 cache DATA packets as they pass by [23], maintaining the same cache size. For instance, both routers concurrently cache content chunks like prefix/c1, prefix/c2, and prefix/c3.

- Heterogeneous Caching

In heterogeneous caching, not all content routers along the downloading path cache the Data packets. For instance, CR1 caches prefix/c1 and prefix/c3, while CR2 caches prefix/c2 [59]. Meanwhile, within this heterogeneous caching, each content router in the network has a different cache size.

- Cooperative Caching

In cooperative caching, content routers within a network collaborate with other content routers, establishing cache states to cache and deliver more content chunks [60]. Cooperative caching can be further categorized into explicit cooperation and implicit cooperation. Implicit cooperation does not require content routers to convey cache states through an additional advertisement mechanism. Conversely, explicit cooperation mandates that content routers advertise their cache states to other content routers.

- Non-Cooperative Caching

In non-cooperative caching, content routers within a network independently make caching decisions and do not share information about cached content with other content routers.

- On-Path Caching

In on-path caching within a CCN or NDN environment, a DATA packet is cached along its path to the requester. This mechanism involves storing copies of requested content at intermediate routers or nodes situated along the path traversed by the request [61] for the desired content.

- Off-Path Caching

In off-path caching, a DATA packet may be cached at content routers that do not lie on its delivery path. Off-path caching is feasible when a centralized topology manager, such as an RH, is in place [62]. The authority to decide where to cache a DATA packet lies with the RH, which then dispatches a copy of the DATA packet to the selected content router(s).

4 Caching Strategies

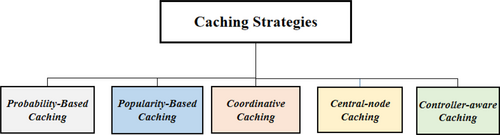

Over the years, various caching strategies have been developed to improve cache hit rates and minimize content retrieval delays. These strategies are typically categorized into Probability Caching, Popularity Caching, Popular-coordinative Caching, Central-node Caching, and Controller-aware Caching [63], as shown in Figure 5. In this section, we provide a detailed classification and analysis of these strategies, highlighting their strengths, limitations, and key challenges. Our analysis aims to evaluate the effectiveness of these strategies, identify key issues, and offer insights into potential solutions for enhancing ICN caching mechanisms.

4.1 Probability-Based Caching

- CacheFilter

To mitigate data redundancy and support extensive content distribution in the network, the CacheFilter cache placement scheme is introduced in [64]. This scheme incorporates an additional field within the DATA packet known as the flag bit. A flag bit of 0 indicates that the content is already cached by a router within the network, while a flag bit of 1 signifies that the content needs to be cached.
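The role of the flag bit can be illustrated with a short sketch that walks a DATA packet along a path of routers. The text above does not state which router performs the caching, so the rule used here (the first router with free space caches the packet and clears the flag) is an assumption for illustration, as are the function name `deliver` and the `capacity` parameter.

```python
def deliver(path_caches, name, capacity=2):
    """Walk a DATA packet along the path, caching it at most once.

    path_caches: list of sets, one per router, ordered from source to requester.
    Returns the index of the router that cached the packet, or None.
    """
    flag = 1                          # 1: content still needs to be cached
    cached_at = None
    for index, cache in enumerate(path_caches):
        if name in cache:
            flag = 0                  # 0: a router already holds the content
        elif flag == 1 and len(cache) < capacity:
            cache.add(name)           # assumed rule: first router with space
            flag = 0
            cached_at = index
    return cached_at


caches = [{"/a", "/b"}, set(), set()]
print(deliver(caches, "/c"))          # index of the router that cached "/c"
print([sorted(cache) for cache in caches])
```

Once the flag is cleared, downstream routers forward the packet without storing it, which is how the scheme avoids redundant copies on one path.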

- Random Caching (RC)

RC is a method wherein nodes selectively cache incoming packets according to a predetermined static probability denoted as “p,” where $0 \le p \le 1$, as discussed in [65]. The value of p is commonly set to 0.5. This widely adopted value seeks to reduce data redundancy, achieving a balance that mitigates both excessive duplication and underutilization of available resources.

- Prob(p)

The objective of Prob(p) is to decrease caching redundancy and improve efficiency. In Prob(p), each content router is programmed to cache a data packet with a certain probability, denoted as p [66]. Upon the arrival of a data packet at a content router, a random number within the range of zero to one is generated. If the generated random number is less than p, the content router caches a copy of the data packet. Otherwise, the data packet is forwarded without being cached.
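The decision rule just described can be sketched in a few lines. The function name and the fixed random seed are assumptions chosen for a repeatable illustration; a deployed router would not use a fixed seed.

```python
import random


def prob_cache_decision(p: float, rng: random.Random) -> bool:
    """Return True when the router should cache the arriving DATA packet."""
    return rng.random() < p       # random number in [0, 1) compared with p


rng = random.Random(42)           # fixed seed only to make the run repeatable
p = 0.5
decisions = [prob_cache_decision(p, rng) for _ in range(10_000)]
print(sum(decisions) / len(decisions))   # fraction cached, close to p
```

Over many packets the cached fraction approaches p, so p directly trades redundancy along the path against the chance that a packet is cached nowhere.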

- ProbCache and ProbCache+

ProbCache incorporates two components in the packet headers: one in the INTEREST packet header and the other in the DATA packet header. The ratio between them is denoted as the CacheWeight for specific content. ProbCache computes both CacheWeight and TimesIn, which estimates how many copies of a packet the caching path can afford to hold, and from these determines the caching probability at each content router using the equation presented in [67]. A content router with a higher caching probability than others along the path is more likely to cache the content. ProbCache+ is an enhanced version of ProbCache that likewise calculates TimesIn and CacheWeight at each content router.
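The text above does not reproduce the equation from [67]. As a hedged sketch, the code below assumes the commonly cited form in which the caching probability at the x-th router of a c-hop path is the product TimesIn(x) × CacheWeight(x), with CacheWeight(x) = x/c and TimesIn(x) equal to the capacity of the remaining path divided by the product of a target time window and the local capacity. The parameter names, the indexing of `capacities`, and the clipping to 1.0 are assumptions for illustration and should be checked against [67].

```python
def probcache_probability(x, c, capacities, t_tw):
    """Assumed ProbCache-style caching probability at the x-th router.

    x: position of the router, counted in hops from the content source.
    c: total number of hops between requester and source.
    capacities: assumed per-router cache capacities along the path.
    t_tw: assumed target time window.
    """
    remaining = sum(capacities[: c - (x - 1)])          # remaining path capacity
    times_in = remaining / (t_tw * capacities[x - 1])   # TimesIn(x)
    cache_weight = x / c                                # CacheWeight(x)
    return min(1.0, times_in * cache_weight)            # clipped (assumption)


path_capacities = [10, 10, 10, 10]
for hop in range(1, 5):
    print(hop, probcache_probability(hop, 4, path_capacities, t_tw=10))
```

Under this assumed form, CacheWeight grows toward the requester while TimesIn shrinks, so the two factors balance caching near the edge against the capacity left on the path.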

4.2 Popularity-Based Caching

- Leave Copy Down (LCD)

The purpose of LCD, as described in [68], is to reduce caching redundancy by introducing a caching proposal flag bit in the header of the data packet. The activation of the flag bit occurs when either the content source or a content router, having a replica of the corresponding content, receives a request. Utilizing this bit, a copy of the intended content is retained at the content router situated one level closer to the requester following each request.

- Move Copy Down (MCD)

MCD, as discussed in [69], is employed to reduce caching redundancy, similar to LCD. In MCD, a duplicate of the content is transferred to the content router one level closer to the requester following each request.
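The difference between LCD and MCD can be seen in a small simulation of repeated requests over one path. The sketch assumes a linear path ordered from the source to the router nearest the requester, unlimited cache space, and that the source always keeps its original copy; the function name `serve` and the data layout are hypothetical.

```python
def serve(path, name, policy):
    """Serve one request on a path ordered from source (index 0) to requester.

    path: list of sets; path[0] is the source and always holds the content.
    policy: "LCD" leaves a copy one hop down; "MCD" moves the copy one hop down.
    Returns the index of the node that answered the request.
    """
    # The hit occurs at the node holding the content closest to the requester.
    hit = max(i for i, cache in enumerate(path) if name in cache)
    if hit + 1 < len(path):
        path[hit + 1].add(name)              # copy placed one level closer
        if policy == "MCD" and hit != 0:     # MCD removes the upstream replica,
            path[hit].discard(name)          # but the source keeps its original
    return hit


for policy in ("LCD", "MCD"):
    path = [{"/c1"}, set(), set(), set()]
    hits = [serve(path, "/c1", policy) for _ in range(3)]
    print(policy, hits, [sorted(cache) for cache in path])
```

In both cases the content moves one level closer to the requester per request, but LCD leaves replicas behind at every level while MCD keeps only one cached replica on the path.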

- Chunk Caching Location and Searching Scheme (CLS)

CLS, presented as an enhanced version of MCD in [70], aims to improve server workload efficiency. Consequently, CLS adopts a strategy where only a single replica of a chunk is maintained along the pathway connecting a server and a requester. Upon receiving a request, the chunk's copy moves one level closer to the requester. Conversely, during cache eviction, the chunk moves one level towards the server.

- Chunk-Based Caching Scheme (WAVE)

WAVE, a chunk-based caching scheme for the CCN framework, is introduced in [71]. In this placement scheme, data packets are divided into uniform, fixed-sized fragments or chunks, which are then distributed across the network. WAVE decides which specific chunks to cache based on two factors: the popularity of the content and the relationships between different chunks within the same content.

- Most Popular Content (MPC)

A caching scheme called MPC was presented in [72]. This novel method entails edge devices actively monitoring and recording the frequency of requests for specific content names. These devices maintain a Popularity Table, essentially serving as a local repository that records the popularity metric for each content item. As the request counts accumulate, content names surpassing a fixed Popularity Threshold are recognized as popular. Once a content name attains this status, the hosting node for that content triggers a suggestion mechanism. This mechanism entails recommending neighboring nodes to cache the recognized popular content.
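A minimal sketch of the Popularity Table and threshold check might look as follows. The class and method names are hypothetical, and resetting the counter after a suggestion is an assumption added here so that the node does not repeat the suggestion on every later request.

```python
from collections import Counter


class MpcNode:
    def __init__(self, threshold: int):
        self.threshold = threshold        # fixed Popularity Threshold
        self.popularity = Counter()       # Popularity Table: name -> requests

    def on_request(self, name: str) -> bool:
        """Count the request; return True when neighbours should be advised."""
        self.popularity[name] += 1
        if self.popularity[name] > self.threshold:
            self.popularity[name] = 0     # assumed reset after a suggestion
            return True
        return False


node = MpcNode(threshold=3)
results = [node.on_request("/video/x") for _ in range(5)]
print(results)   # the suggestion fires once the count surpasses the threshold
```

Only content whose request count surpasses the threshold triggers a suggestion, so rarely requested items never occupy neighbouring caches.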

- Dynamic Fine-Grained Popularity-Based Caching (D-FGPC)

Reference [73] has introduced an extended version of MPC named D-FGPC. D-FGPC incorporates a flexible popularity threshold function, determined by both the frequency of received INTEREST and the available cache capacity. Integrating this dynamic threshold function enhances the adaptability of the caching strategy employed by D-FGPC.

- Popularity-Aware Closeness Caching (PaCC)

The goal of PaCC, as introduced by [74], is to systematically manage caching decisions by using the ‘PaCC’ field in the DATA packet. This aims to ensure efficient forwarding of content by identifying and utilizing the optimal router or node based on factors such as popularity and network characteristics, including hop distance.

- Dynamic Popularity Cache Placement (DPCP)

The DPCP, as proposed in [75], is designed to manage the caching of data packets at a node efficiently. Upon the arrival of a data packet, a popularity calculation mechanism is triggered. This calculation incorporates the content's historical popularity in the last cycle and factors in the total number of requests in the current cycle. The DPCP scheme dynamically adjusts the threshold size through the AIMR algorithm, considering the current space occupation. Only content surpassing the current popularity threshold is cached, and the system strategically prioritizes the caching of content with a high probability of future requests.
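The per-cycle popularity update can be illustrated with an exponentially weighted moving average, the statistic named for DPCP in Table 2. The sketch below is an assumption-laden illustration: the function names, the smoothing factor `alpha`, and the exact blending formula are hypothetical, and the threshold adjustment algorithm is deliberately left out because the text does not give its details.

```python
def update_popularity(previous_popularity, requests_this_cycle, alpha=0.5):
    """Blend last cycle's popularity with the current cycle's request count.

    alpha is a hypothetical smoothing factor in [0, 1]; a larger value
    weights the current cycle more heavily.
    """
    return alpha * requests_this_cycle + (1 - alpha) * previous_popularity


def should_cache(popularity, threshold):
    """Cache only content whose popularity exceeds the current threshold."""
    return popularity > threshold


popularity = update_popularity(previous_popularity=4.0, requests_this_cycle=10)
decision = should_cache(popularity, threshold=5.0)
```

With `alpha = 0.5`, a content item that scored 4.0 in the last cycle and received 10 requests in the current cycle reaches a popularity of 7.0, which exceeds a threshold of 5.0 and is therefore cached.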

- Caching Popular and Fresh Content (CPFC)

The CPFC caching decision scheme is introduced by [74]. It is a systematic approach that lets content routers (CRs) manage the content cache effectively. Each CR employs two metrics: the Popularity metric, consisting of content prefixes and their corresponding request counts, and the Freshness metric, consisting of content names and their respective lifetimes (the periods during which the content remains valid).

- Two Layers Cooperative Caching (TLCC)

In the context of TLCC, as described in [76], CCN routers are grouped into Upper-Layer Group (ULG) and Lower-Layer Group (LLG) within an Autonomous System. CDULG caches received chunks based on hash comparison with the router's key range, while CDLLG caches chunks if the Local Popularity Count exceeds a threshold and the hash matches the router's key range.

- DeepCache

The DeepCache framework, introduced in [77], highlights the efficacy of LSTM-based models in predicting content popularity. The research frames content popularity prediction as a seq2seq modeling problem and proposes an LSTM Encoder-Decoder model to solve it. The DeepCache framework then uses these predictions for end-to-end cache decision-making.

4.3 Central-Nodes Based Caching

- CRCache

Reference [78] introduced a caching placement scheme called CRCache. This approach involves making caching decisions by considering a comprehensive popularity metric determined by assessing the frequency of received INTEREST. This metric is thoughtfully combined with identifying popular routers within the network topology. Notably, routers with superior distribution are marked as popular routers in the CRCache scheme.

- Edge Caching

Edge caching, as introduced by [79], places content closer to users by storing it in the last content router along a distribution pathway. The goal is to minimize the number of hops required to reach a content source, which reduces content delivery time and decreases the network traffic caused by transmitting content requests.

- BEACON

To optimize network resources, a betweenness-centrality-based content placement scheme called BEACON, introduced by [80], employs a time series Gray model to predict which content to cache and where to cache it.

- Content Betweenness Centrality (CBC)

CBC, as detailed in [81], aims to minimize content delivery latency, mitigate traffic and congestion through an increased cache hit rate, and reduce server load. CBC measures the betweenness centrality of each content router within the network. Data packets are then stored at the content router deemed ‘important,’ that is, the one with the highest betweenness centrality along the delivery path.
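The selection of the ‘important’ router can be sketched as follows. Betweenness centrality is computed here with Brandes' algorithm on an unweighted, undirected topology. The function names, the adjacency-list representation, and the five-node example topology are illustrative assumptions, not details of [81].

```python
from collections import deque


def betweenness(graph):
    """Brandes' algorithm for an unweighted, undirected graph {node: [neighbors]}."""
    score = {v: 0.0 for v in graph}
    for source in graph:
        stack = []
        preds = {v: [] for v in graph}
        sigma = {v: 0 for v in graph}   # number of shortest paths from source
        dist = {v: -1 for v in graph}
        sigma[source] = 1
        dist[source] = 0
        queue = deque([source])
        while queue:                     # breadth-first search from source
            v = queue.popleft()
            stack.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {v: 0.0 for v in graph}
        while stack:                     # accumulate dependencies in reverse order
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != source:
                score[w] += delta[w]
    # Each unordered pair of endpoints was counted twice.
    return {v: s / 2 for v, s in score.items()}


def pick_cacher(graph, delivery_path):
    """Return the router on the delivery path with the highest betweenness."""
    score = betweenness(graph)
    return max(delivery_path, key=lambda node: score[node])


topology = {
    "producer": ["r1"],
    "r1": ["producer", "r2"],
    "r2": ["r1", "r3"],
    "r3": ["r2", "consumer"],
    "consumer": ["r3"],
}
chosen = pick_cacher(topology, ["r1", "r2", "r3"])
```

In this line topology the middle router `r2` lies on the most shortest paths, so it is the one selected to cache the data packet.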

- Content Selection and Router Selection (CaRS)

Reference [82] proposes a CaRS caching strategy for network devices aimed at balanced content distribution. It selects cached contents based on Zipf's law, and to balance content distribution, routers monitor neighboring cache status using parameters like proportionate distance from the consumer, router congestion, and cache status.

- Link Congestion and Lifetime Based In-Networking Caching Scheme (LCLCS)

The main purpose of LCLCS is to maintain an acceptable cache rate and notably optimize network delay. The LCLCS process, defined in [83], begins with a network analysis to identify ‘important’ content routers along the content delivery path. Subsequently, LCLCS utilizes the computed lifetime to make decisions regarding the eviction of currently cached content, ensuring adequate space for caching incoming content.

4.4 Controller-Aware Caching

- Deep Learning-Based Content Popularity Prediction (DLCPP)

DLCPP, detailed in [84], is an auto-encoder-based popularity model implemented on the SDN controller to predict content popularity. It collects data from routers with spatial-temporal correlation to represent the flow of requested content at discrete intervals, and a softmax classifier then predicts the popularity of the content. To place popular content, the SDN controller selects a set of important routers using the betweenness centrality approach.

- Controller-Aware Secured Content Caching (CaCC)

CaCC, presented in [85], utilizes programmable switches with four key modules: “PARSER,” “CS,” “PIT” and “FIB,” and “Encryption and Decryption.” The CS module comprises an index list storing encrypted IDs of cached content and a CS server for storing the actual data, both stored in registers. Upon an INTEREST packet's arrival, the switch checks the index list for a match. If found, it retrieves the corresponding data from the CS server; otherwise, it checks the PIT entry. If no entry exists, it forwards the INTEREST packet following the FIB route, encrypting it before transmission. Upon receiving a DATA packet, it decrypts it and matches the content prefix with the PIT. If a match is found, it caches the content to the CS server and delivers it to a set of users simultaneously.
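The lookup order described above (CS, then PIT, then FIB) can be sketched as a small state machine. This is a simplified illustration under several assumptions: the class and method names are hypothetical, the index list and CS server are collapsed into one dictionary, the encryption and decryption steps are omitted, an INTEREST that finds an existing PIT entry is assumed to be aggregated into it, and the FIB lookup uses a plain string-prefix longest match.

```python
class Switch:
    """Simplified INTEREST/DATA handling: CS lookup, then PIT, then FIB."""

    def __init__(self, fib):
        self.cs = {}     # content name -> cached data
        self.pit = {}    # content name -> set of faces waiting for the data
        self.fib = fib   # name prefix -> next hop

    def on_interest(self, name, face):
        if name in self.cs:                    # cache hit: answer locally
            return ("data", self.cs[name])
        if name in self.pit:                   # assumption: aggregate duplicates
            self.pit[name].add(face)
            return ("aggregated", None)
        self.pit[name] = {face}                # new request: forward via FIB
        return ("forward", self._next_hop(name))

    def on_data(self, name, data):
        faces = self.pit.pop(name, None)
        if faces is None:                      # no pending request: do not cache
            return set()
        self.cs[name] = data                   # cache, then deliver to all waiters
        return faces

    def _next_hop(self, name):
        matches = [prefix for prefix in self.fib if name.startswith(prefix)]
        return self.fib[max(matches, key=len)] if matches else None


switch = Switch(fib={"/video": "port2"})
first = switch.on_interest("/video/a", "face1")
second = switch.on_interest("/video/a", "face2")
delivered = switch.on_data("/video/a", b"chunk")
third = switch.on_interest("/video/a", "face3")
```

The last step of the example shows the effect of caching: once the DATA packet has been stored, a later INTEREST for the same name is served from the CS without being forwarded.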

- Centralized-Based Dynamic Content Caching (CDCC)

The paper [86] employed a CDCC caching scheme in which a heuristic algorithm utilized within the SDN controller plays a pivotal role in the efficient selection of cacher groups tasked with storing both popular and time-sensitive content. This method not only optimizes the allocation of caching resources but also enhances the overall performance of content delivery networks within dynamic network environments.

- Centrality Content Caching (CCC)

The CCC scheme, presented in [87], optimizes content caching across autonomous systems. The controller determines optimal paths and installs them in forwarding devices. When an INTEREST packet reaches a CR, the CR checks its CS table. If no match is found, the CR consults its CIT for cached content within the autonomous system. If a match is found there, the request is directed along the FIB route. Otherwise, it is forwarded to the controller, which checks its own CIT for content information across systems. The controller sends the content if a match is found; otherwise, the request is redirected to content producers.

4.5 Coordinative Caching

- ICC

ICC is a caching approach, presented in [88], designed for DONA, focusing on inter-domain cache cooperation, contrasting with intra-domain cache cooperation. In DONA, RHs can cache incoming DATA packets but don't share availability information with other RHs. ICC enhances cached content availability by having RHs advertise this information to their peering domains.

- LCC

LCC, detailed in [89], is a hash-based caching mechanism for inter-domain cooperation. It hashes content names to unique content routers within a domain, effectively mapping content to selected routers for caching. A redundancy control scheme is introduced wherein domains coordinate with neighbors to determine cached content preferences.
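The hash-based mapping can be illustrated with a short sketch. The function name `router_for`, the use of SHA-256, and the modulo assignment are assumptions chosen for illustration; [89] may use a different hash function and mapping rule, and the redundancy control between domains is not modeled here.

```python
import hashlib


def router_for(content_name, routers):
    """Map a content name to exactly one router in the domain."""
    digest = hashlib.sha256(content_name.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(routers)
    return routers[index]


domain_routers = ["cr1", "cr2", "cr3", "cr4"]
target = router_for("/news/today/chunk0", domain_routers)
```

Because the mapping depends only on the content name and the router list, every router in the domain computes the same target independently, without exchanging any state.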

- Intra-AS Co

Intra-AS Co, defined in [90], aims to minimize caching redundancy by enabling neighboring content routers to access each other's cached content. Each content router maintains a Local Cache Content Table (LCCT) and an Exchange Cache Content Table (ECCT). The LCCT records content chunks cached locally, while the ECCT records content cached by neighboring routers. Content routers periodically share their local cache content information with directly connected neighbors.
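A minimal sketch of the two tables and the periodic exchange is shown below. The class and method names are hypothetical, and the rule in `should_cache` (skip a chunk that a direct neighbor already holds) is an assumed way of turning the tables into a redundancy-avoiding decision; it is not quoted from [90].

```python
class ContentRouter:
    """Keeps a local table (LCCT) and a table of neighbors' caches (ECCT)."""

    def __init__(self, name):
        self.name = name
        self.neighbors = []
        self.lcct = set()   # chunks cached locally
        self.ecct = {}      # neighbor name -> set of chunks it last advertised

    def cache(self, chunk):
        self.lcct.add(chunk)

    def advertise(self):
        """Share the current LCCT with every directly connected neighbor."""
        for neighbor in self.neighbors:
            neighbor.ecct[self.name] = set(self.lcct)

    def locate(self, chunk):
        """Return the name of a router known to hold the chunk, or None."""
        if chunk in self.lcct:
            return self.name
        for neighbor_name, chunks in self.ecct.items():
            if chunk in chunks:
                return neighbor_name
        return None

    def should_cache(self, chunk):
        # Assumption: avoid duplicating a chunk that is already reachable.
        return self.locate(chunk) is None


a, b = ContentRouter("A"), ContentRouter("B")
a.neighbors, b.neighbors = [b], [a]
a.cache("/video/1")
a.advertise()
holder = b.locate("/video/1")
```

After the advertisement, router B can redirect a request for `/video/1` to A instead of storing a second copy.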

- Distributed Cache Management (DCM)

The purpose of DCM in work [91] is to maximize the traffic volume served from the caches. By DCM principles, a CM is installed to facilitate the exchange of cache states with other CMs. The determination of cached content placement and replacement depends upon the distributed online cache management algorithm employed by the CM.

- CATT

CATT, discussed in [92], caches a Data packet at a single node along the download path within every network. Additionally, CATT introduces the content's expected quality to aid content routers in forwarding Interest packets. When content is cached at a content router, its expected quality at that router is taken into account. Conversely, if the content is not cached at a content router, the expected quality at that router is calculated as Hop+1.

Summary and Lesson Learned

In Table 2, we have summarized the caching decision principles of various caching strategies. In the table, each strategy is categorized into its designated caching mechanisms, as previously discussed. The table provides a brief description of the decision mechanism for each strategy and highlights the key objective of each study. This structured overview enables a clear understanding of the different approaches to caching, facilitating easier comparison and analysis of their respective benefits and limitations.

| References | Method name | Caching strategy | Caching mechanism | Objectives | Limitations |
|---|---|---|---|---|---|
| [93] | CEE | Redundancy-driven | Homogeneous, cooperative, & on-path | Determine which demanded content aligns with the preferences of requesting users | Subjected to data duplication and limited content diversity |
| [67] | PopCache | Probability-driven | Heterogeneous & on-path | Assess the effects of attaching probabilities (0-1) to NDN packet arrivals | Suboptimal performance in cache hit rate due to static probability threshold |
| [71] | WAVE | Popularity-driven | On-path, implicit, & heterogeneous | Examine WAVE's caching strategy for popular content chunks and inter-chunk distances | WAVE may inefficiently utilize cache due to limited adaptability to popularity shifts and network changes |
| [64] | CacheFilter | Probability-driven | Non-cooperative | Enhance network performance by selectively caching desired content, optimizing content distribution efficiency | CacheFilter's static flag bit reliance may limit adaptability to dynamic content, affecting cache efficiency and content distribution |
| [72] | MPC | Popularity-driven | Cooperative, homogeneous, & on-path | Each node locally maintains the popularity table to decide whether to cache the requested content or not | Dependence on accurate popularity thresholds may lead to inefficiencies in cache utilization |
| [73] | D-FGPC | Popularity-driven | Implicit cooperation, & on-path | Employed the topological features in order to make the content caching decision | Experienced significant delays in content retrieval due to the router's low proximity to the requested users |
| [78] | CRCache | Central nodes-driven | On-path, & cooperative | Determine the selection criteria for important routers based on their content distribution power | Increased the average hop count because of caching on central nodes that could be distant from the consumers |
| [74] | PaCC | Popularity-driven | Homogeneous, cooperative, & on-path | Assess the effectiveness of the closeness-aware metric based on provider hop-count distance from requesting users | It is a computationally expensive approach due to required updates at each forwarding node to determine the closeness-aware cacher |
| [80] | BEACON | Central nodes-driven | Implicit cooperation, & on-path | Study the correlation between request rate and discrete content arrivals for selecting popular content at CRs | Because of disregarding the historical records in the process of selecting popular content, a low cache hit rate was observed |
| [75] | DPCP | Popularity-driven | On-path, & cooperative | Evaluate the effectiveness of caching based on an exponential weighted moving average (EWMA) statistical distribution | DPCP does not identify the optimal placement for the most popular contents |
| [88] | ICC | Coordinative-driven | Explicit cooperation, & on-path | Investigate the efficiency of inter-domain cache advertisement mechanisms to improve content availability | Scalability and coordination issues may arise in ICC due to inter-domain cache cooperation |
| [74] | CPFC | Popularity-driven | Cooperative, on-path, & homogeneous | Explore the relationship between popularity and freshness thresholds in content caching decisions | Suffered from a high cache miss rate due to the utilization of a fixed freshness threshold for making caching decisions |
| [87] | CCC | Controller-driven | Explicit, & on-path | Assess the impact of popularity tables on caching efficiency and content availability in distributed networks | No mechanism is designed to measure the optimal placement for each piece of content |
| [63] | CNIC | Coordinative-driven | Off-path, & heterogeneous | Investigate the effectiveness of coordinating with neighboring content routers to enhance content chunk delivery | Incurs computational and management issues due to operating in a distributed manner |
| [94] | PDPU | Popularity-driven | Explicit cooperation, & on-path | Assess the effectiveness of moving requested content one step closer to intended users as demand increases | Increases the average hop count due to the provider's distance from the content consumers |
| [85] | CaCC | Controller-driven | Heterogeneous, & explicit | Assess the efficacy of maintaining Hash-ID tables for popular content items in each mode | Constant encryption/decryption can cause latency, especially in high traffic |
| [82] | CaRS | Central nodes-driven | Cooperative, & homogeneous | Assess the efficacy of dynamic caching selection for achieving balanced content distribution in network devices | Scalability may be constrained by the computational burden of monitoring neighboring cache status |

- Note: This table summarizes various caching strategies, outlining their strengths and weaknesses based on their caching decision principles.

Based on our survey and comparative analysis, we have reached the following conclusions. Feng et al. [64], Laoutaris et al. [67], and Tarnoi et al. [65] employ probability-based caching strategies. However, due to the static probability threshold that remains unchanged over time, these strategies fail to adapt to the changing demands of users, resulting in a lower cache hit rate. Consequently, this limitation leads to decreased efficiency in resource utilization and may impact overall system performance. Additionally, a lower cache hit rate can lead to increased network retrieval delays and higher user access times.

Li et al. [71], Bernardini et al. [72], Ong et al. [73], and Zha et al. [75] have presented popularity-driven caching strategies, which make caching decisions based on user preferences. However, these strategies rely on single-variable-driven content popularity prediction, which may not efficiently capture the nuanced dynamics in user demands over time. This limitation can result in suboptimal caching decisions and reduced effectiveness in meeting user expectations for timely access to relevant content.

Wang et al. [78], Amadeo et al. [80], and Lal et al. [81] have introduced centrality-based caching strategies, where content is cached on highly central nodes in the network. However, a potential drawback arises when the central node is distant from the user, leading to increased network retrieval delays. This limitation can degrade the overall user experience by prolonging access times for requested content, resulting in decreased user satisfaction and potentially higher miss rates.

Wu et al. [88], Wang et al. [90], and Eum et al. [92] have introduced coordinated caching strategies, where nodes make caching decisions based on their neighbors' status. However, this approach is vulnerable to several challenges, including manual configuration, scalability issues, and computational overhead. In NDN, entities operate in a distributed manner, requiring manual management of network configuration and flow rule creation. These complexities and potential drawbacks associated with coordinated caching strategies in NDN environments can increase network delays and impact overall efficiency.

Liu et al. [84], Ruggeri et al. [86], and Asmat et al. [87] have presented controller-based caching strategies, where caching decisions are directed by a controller that applies different popularity algorithms. However, none of these strategies provides a mechanism for selecting strategic cache placements in a dynamic environment. This gap increases network delays and reduces the efficiency of the system.

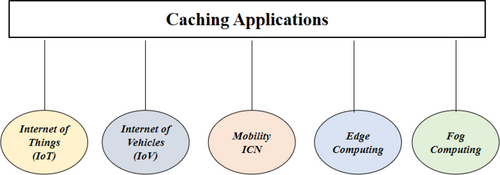

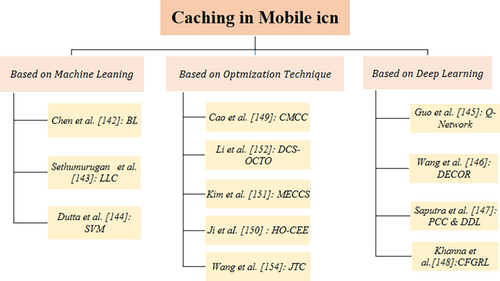

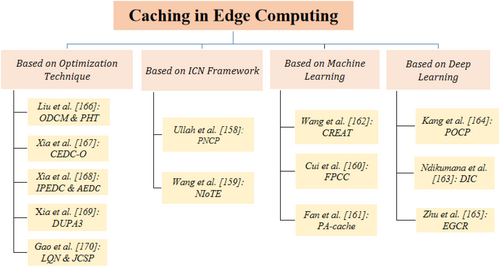

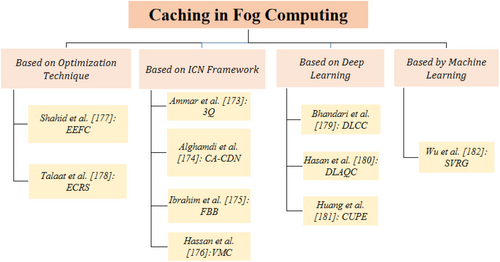

5 Caching Applications

Caching is a fundamental technique in modern communication systems, optimizing performance by storing frequently accessed data at or near retrieval points. This approach minimizes latency, enhances throughput, and improves overall system responsiveness. To explore the relevance of caching, this section examines various caching strategies used across different applications (see Figure 6). These strategies rely on ICN frameworks, Machine Learning and Deep Learning models, and optimization techniques. The strengths and limitations of these approaches will be analyzed within the context of caching systems, with a particular focus on their methodologies.

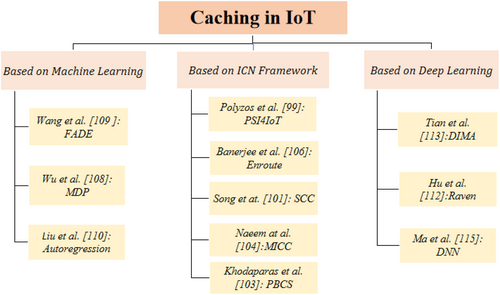

5.1 Internet of Things (IoT)

- Caching Frameworks Based on ICN Networking

Polyzos et al. [97] present Publisher-Subscriber-Integration for IoT (PSI4IoT), an architecture based on the ICN paradigm, designed to enhance data management and security. The architecture employs an Access Control Provider (ACP) framework to ensure secure authentication and authorization, allowing only authorized users to access protected data. PSI4IoT integrates Proxy Re-Encryption (PRE) for secure data storage, where the device encrypts the data, which is stored with a designated publisher, and a Key Server re-encrypts it for the subscriber, ensuring the device's private key remains secure. Additionally, Hierarchical Identity-Based Encryption (HIBE) guarantees the integrity and verifiability of cached data. Together, these mechanisms optimize content caching, reduce latency, and improve the overall efficiency of IoT ecosystems.

Kurita et al. [98] proposed the Keyword-Based Content Retrieval (KBCR) architecture, which enhances the ICN paradigm for IoT applications. KBCR uses caching nodes to distribute requests based on keywords, initiating a search for relevant data across the network. Multiple responses are then aggregated into a single response, reducing redundant message transmission and improving content retrieval efficiency. This method minimizes latency, reduces network congestion, and enhances performance, making it ideal for resource-constrained IoT systems. Unlike NDN, which requires multiple request messages, KBCR sends a single request that is distributed and merged at intermediate nodes, significantly reducing the number of messages exchanged. Simulations show that KBCR outperforms NDN in large-scale networks, improving response time and scalability while requiring minimal additional processing for message merging.

Song et al. [99] proposed the Smart Collaborative Caching (SCC) scheme to improve the efficiency of information-centric IoT systems by tackling key challenges such as content storage, resource sharing, and node localization. This approach leverages the capabilities of ICN to streamline processes like cluster composition, content management, and position tracking through core actions, including caching, finding, joining, and leaving, all supported by specialized algorithms. Comparative evaluations against traditional methods, such as IP networks and ICN flooding, highlighted SCC's ability to significantly lower packet volume and transmission latency, especially in larger networks. Unlike ICN flooding, which generates excessive traffic and inefficiencies, SCC offers a more controlled and effective solution, reducing congestion and enhancing content retrieval. These advantages position SCC as a highly effective approach for scalable IoT applications.

Hua et al. [100] propose a novel caching design scheme characterized by three key innovative attributes. First, it introduces a Cluster-Based Approach (CBA), which leverages both in-network and end-user devices to cache content closer to the network edge, thereby optimizing content delivery as demand increases. Second, the scheme employs a Near-Path Approach (NPA), strategically positioning caching nodes along delivery paths to minimize latency and enhance retrieval efficiency. Finally, it integrates Reactive and Proactive Caching techniques (RPC) that work together to alleviate network congestion during peak periods. Simulations demonstrate that this design significantly enhances internal link load and path stretch metrics, outperforming eight popular benchmark techniques.

Khodaparas et al. [101] introduce a Pull-Based Caching Scheme (PBCS) aimed at improving content retrieval efficiency in IoT environments. In this method, nodes process data, extract key characteristics, and store the aggregated results locally via the cluster head (CH), instead of directly relaying data to cloud servers. The cached content is kept until its lifetime expires or a request is made, thereby reducing unnecessary transmissions and minimizing power consumption. By enabling local retrieval, the scheme significantly lowers latency compared to fetching content from the cloud. Simulation results show that PBCS increases cache hit rate by 40%, reduces retrieval time, lowers energy consumption, and enhances response times.

Ali Naeem et al. [102] introduce the Most Interested Content Caching (MICC) strategy for NDN-based IoT applications, aimed at improving content dissemination. MICC employs a time-based caching approach and utilizes the intuitionistic fuzzy mode (IFM) to dynamically adjust caching locations based on content interest frequency. Each router tracks content interest, optimizing caching performance metrics such as cache hit ratio and content eviction ratio. Simulations demonstrate that MICC outperforms other strategies like PCS, CCAC, and MPC in content retrieval efficiency, making it a promising solution for enhancing IoT and smart city performance.

Abkenar et al. [103] propose the Distributed Smart Clustering Caching Protocol (DSCCP) to enhance QoS in cache-enabled IoT networks. The protocol employs a smart clustering mechanism that enables Fog Nodes (FNs) to cache content locally based on channel conditions, reducing reliance on Central Nodes (CNs). By selecting optimal destination nodes for processing requests within service delay constraints, DSCCP effectively balances network delay, energy consumption, and overall benefit. Its novelty lies in maximizing network efficiency through intelligent clustering and local caching, outperforming traditional caching methods in responsiveness and resource optimization.

Banerjee et al. [104] proposed the ENROUTE algorithm, which integrates entropy-based techniques with caching to enhance content distribution in ICN. The algorithm uses entropy (EA) as a measure of link congestion and the condition of on-path caches, thereby optimizing routing and caching decisions. Nodes with higher entropy values store more popular content, while links with higher entropy are considered more congested. By improving cache entropy and selecting paths with lower entropy, the algorithm reduces delays and increases throughput. Simulation results demonstrate improvements in delay, cache hit rate, and throughput. Future research will focus on further refining content routing and caching strategies using entropy.

- Caching Approaches Based on Machine Learning

The integration of ML-based models in IoT environments leverages predictive analytics to intelligently manage data storage and retrieval, optimizing resource utilization, enhancing system responsiveness, and enabling efficient real-time decision-making. Xiaoming et al. [105] present a Reinforcement Learning (RL) model specifically designed for edge-enabled IoT environments. This model addresses key challenges such as transmission latency and storage costs, aiming to maximize the Quality of Experience (QoE) by balancing content-centric caching quality with user satisfaction. Through its intelligent design, the model significantly improves resource utilization, system responsiveness, and real-time decision-making, demonstrating its potential to enhance IoT operations.

Wu et al. [106] propose a dynamic caching strategy that utilizes user request queues to optimize content delivery. They introduce a queue-aware cache update scheduling algorithm based on the Markov Decision Process (MDP), which aims to minimize the average Age of Information (AoI) delivered to users while accounting for content update costs. Additionally, they present a low-complexity suboptimal scheduling algorithm. Simulation results demonstrate that their approach significantly reduces the average AoI compared to existing strategies that do not incorporate user request queues.

Wang et al. [107] introduced the Federated Learning-based Cooperative Edge Caching (FADE) framework for IoT applications. This framework aims to enable fast training by decoupling the learning process from cloud-stored data in a distributed-centralized manner, allowing training to occur on local User Equipment. By incorporating intelligent caching strategies, FADE optimizes the storage and retrieval of frequently accessed data at the network edge, reducing latency and improving response times. In terms of security and privacy, FADE keeps sensitive data on local devices, which minimizes data exposure to centralized servers, and relies on federated learning to preserve user confidentiality. Trace-driven simulations show that FADE significantly outperforms the centralized DRL algorithm.

Liu et al. [108] introduce an Auto-Regressive (AR) model tailored for IoT environments, supported by detailed mathematical derivations to highlight its effectiveness. By employing the least squares method, they develop a content popularity prediction model that accurately tracks trends in content popularity, enabling more efficient caching strategies in IoT networks. Simulation results confirm the model's effectiveness, showing notable improvements in cache hit ratios, reduced network traffic, and decreased content retrieval delays.
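To make the least-squares step concrete, the sketch below fits a first-order autoregressive model to a series of per-interval request counts and predicts the next value. It is a simplified illustration and not the model of [108]: the order of the model (one lag plus an intercept), the function names, and the sample series are all assumptions.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] = c + phi * x[t-1]; returns (c, phi)."""
    if len(series) < 3:
        raise ValueError("need at least three observations")
    x = series[:-1]   # lagged values
    y = series[1:]    # values to predict
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("lagged series is constant; phi is undefined")
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    phi = sxy / sxx
    c = mean_y - phi * mean_x
    return c, phi


def predict_next(series):
    """One-step-ahead popularity forecast from the fitted AR(1) model."""
    c, phi = fit_ar1(series)
    return c + phi * series[-1]


# Hypothetical request counts for one content item over five intervals.
request_counts = [1, 3, 7, 15, 31]
c, phi = fit_ar1(request_counts)
forecast = predict_next(request_counts)
```

The sample series follows x[t] = 1 + 2·x[t-1] exactly, so the fit recovers those coefficients; real request traces are noisy, and a higher-order model would typically be needed.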

- Caching Approaches Based on Deep Learning

The development of DL-based caching solutions for IoT represents a major advancement in optimizing caching efficiency and resource utilization within IoT environments. Zhang et al. [109] present an innovative approach that combines Deep Reinforcement Learning (DRL) with cooperative edge caching to address challenges related to varying data item sizes. The proposed method utilizes the Multi-Agent Actor-Critic (MAAC) algorithm to facilitate collaboration among edge servers, optimizing the caching process. This collaboration enables edge servers to efficiently manage and distribute content, resulting in improved cache hit rates and overall system performance. Simulation results demonstrate the approach's effectiveness, with significant improvements in cache hit rates and system efficiency compared to traditional caching algorithms.

Xinyue et al. [110] introduce Raven, an innovative learning-based caching framework inspired by the optimal Belady algorithm. Raven employs a Mixture Density Network (MDN) to learn the distribution of object arrival times without relying on prior assumptions, allowing for a probabilistic approximation of Belady's algorithm. The framework adapts its cache eviction decisions to the unpredictable and dynamic nature of object arrivals, taking into account factors such as randomness, time-dependent fluctuations, and the absence of fixed arrival patterns. Simulation results demonstrate that Raven outperforms existing algorithms by improving object hit ratios, reducing average access latency, and minimizing traffic to origin servers.
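For reference, the offline Belady policy that Raven approximates evicts the cached object whose next request lies furthest in the future. The sketch below implements that offline policy only, not Raven or its Mixture Density Network; it assumes the full request sequence is known in advance, equal-sized objects, and a capacity of at least one. The function name and the sample trace are illustrative.

```python
def belady_hits(requests, capacity):
    """Count cache hits under Belady's offline policy (capacity >= 1)."""
    cache = set()
    hits = 0
    for i, obj in enumerate(requests):
        if obj in cache:
            hits += 1
            continue
        if len(cache) >= capacity:
            def next_use(candidate):
                # Position of the candidate's next request, or infinity if none.
                for j in range(i + 1, len(requests)):
                    if requests[j] == candidate:
                        return j
                return float("inf")

            # Evict the object that will not be needed for the longest time.
            cache.remove(max(cache, key=next_use))
        cache.add(obj)
    return hits


trace = ["a", "b", "c", "a", "b", "d", "a"]
hits = belady_hits(trace, capacity=2)
```

On this trace with two cache slots, the policy keeps `a` throughout because it is always the next object to be requested again, which yields two hits. A learning-based scheme has to estimate the next arrival times that this offline policy reads directly from the future.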

Tian et al. [111] introduce DIMA, a Distributed Intelligent Microservice Allocation scheme. DIMA equips each IoT device with a DRL agent, specifically a D3QN agent, along with a replay buffer. This setup enables the devices to make independent decisions on optimal caching and microservice replacement through interactions with the environment. Simulations demonstrate the effectiveness of DIMA, highlighting its superiority over existing baseline approaches. This innovative solution shows great potential for optimizing microservice caching in dynamic environments, leading to improved performance and resource utilization in IoT ecosystems.

Xu et al. [112] propose a Deep Q-Learning (DQL)-based approach in a software-defined model that integrates computing and caching functionalities. In this model, controllers manage resources from ICN routers and Mobile Edge Computing (MEC) gateways. Traditional algorithms struggle with the high complexity and dimensionality of the problem, as well as the challenge of balancing immediate and long-term rewards. The DQL-based joint optimization approach outperforms other schemes by increasing rewards and efficiently managing resource scheduling, even in dynamic network conditions. It demonstrates superior convergence, faster loss reduction, and more effective decision-making compared to methods that focus solely on caching or computing resources, highlighting the advantages of DQL within the IC-IoT framework.

Ma et al. [113] propose a dynamic caching scheme that leverages Neural Networks (NNs) to optimize content updates in Heterogeneous Networks. The scheme incorporates a queue-aware cache content update scheduling algorithm designed to minimize the average AoI for dynamic content delivery. By utilizing user request queue data, the method predicts and prioritizes the delivery of various dynamic content, ensuring timely and efficient updates. Unlike existing algorithms, the proposed framework anticipates user demands with greater accuracy, thereby improving responsiveness and operational efficiency. Comprehensive evaluations demonstrate that this approach significantly reduces AoI and enhances the overall performance of dynamic content caching systems.

Zhang et al. [114] introduce a dynamic content importance-based caching scheme (D-CICS) that selects and replaces content based on factors like modal type, popularity, size, and network conditions. Unlike traditional methods that focus solely on popularity, D-CICS uses a real-time evaluation model based on a Dueling Double Deep Q-Network (D3QN) to assess the importance of multi-modal content. The scheme incorporates lightweight caching and replacement policies tailored for dynamic networks. By minimizing unnecessary data transfers and caching only relevant content, this approach also limits the exposure of sensitive information, which reduces the risk of unauthorized access or misuse and improves the security and privacy of the system.


Caching Approaches Based on Optimization Applications

Weerasinghe et al. [115] introduced ACOCA, an optimization approach specifically designed for IoT ecosystems to maximize the cost and performance efficiency of Context Management Platforms (CMPs). ACOCA addresses the challenge of efficiently selecting context for caching while managing the additional costs associated with context management. It employs a scalable and selective agent for caching context, implemented using the Twin Delayed Deep Deterministic Policy Gradient (TD3) method. Additionally, ACOCA integrates adaptive context-refresh switching and time-aware eviction policies. A comparative analysis demonstrates ACOCA's superior performance in both cost and performance efficiency, establishing it as a promising optimization solution for IoT ecosystems (Figure 8).

Summary and Lessons Learned

Table 3 provides a summary of the various caching frameworks or algorithms implemented in IoT applications, along with an overview of the caching mechanisms used in each study. It offers a concise description of the decision-making processes for each IC-IoT framework and outlines the primary objectives of the respective studies. This structured summary enables a more straightforward understanding of the different caching strategies and facilitates easier comparison and analysis of their advantages and limitations.

| References | Method name | Caching mechanism | Objectives | Limitations | Category |
|---|---|---|---|---|---|
| [97] | PSI4IoT | Cooperative & on-path | Investigate IoT security, emphasizing access control, secure proxies, and trust in ICN architectures | Challenges in ensuring compatibility with diverse IoT devices and protocols | ICN |
| [98] | KBCR | Cooperative | Enhance efficiency in IoT environments by aggregating multiple responses into a single response with the KBCR network approach | KBCR's keyword-based retrieval may expose sensitive information, highlighting the importance of secure dissemination to prevent unauthorized access or manipulation | ICN |
| [99] | SCC | On-path & cooperative | Effectively manage increasing IoT device counts and fluctuating data transmission levels with the smart collaborative caching scheme | SCC's scalability may be limited by coordination and communication overhead as device participation increases | ICN |
| [100] | CBA, NPA, & RPC | Explicit cooperative & on-path | Implement a cluster-based scheme to cache content closer to the edge network, leveraging both in-network and end-user devices | Affected by dynamic IoT network conditions, including demand shifts, device mobility, and topology changes, influencing caching and congestion strategies | ICN |
| [107] | FADE | Implicit cooperative | Decentralize the learning process from cloud-stored data and enable local data training on user equipment | Dependency on trace-driven simulations may limit the generalizability of FADE's performance to real-world IoT environments | Machine learning |
| [111] | DIMA | Implicit & heterogeneous | Optimize microservice caching in dynamic environments by empowering each IoT device with a D3QN agent for independent caching decisions | Training deep Q-networks in distributed settings may be computationally intensive and time-consuming | Deep learning |
| [112] | DQL | Implicit cooperative | Maximize efficiency by integrating computing and caching functionalities through optimized resource scheduling | Implementing joint resource scheduling in IC-IoT may face challenges with variable data and computational demands | Deep learning |
| [101] | PBCS | Non-cooperative | Optimize caching at cluster heads to reduce transmissions, power usage, and latency, boosting cache hits and cutting retrieval time | The cluster head reliance introduces failure points, affecting caching during hardware failures or unavailability | ICN |
| [102] | MICC | Explicit cooperative & heterogeneous | Enhance content dissemination by strategically caching content based on interest frequency, using a time-based deployment strategy | Limited adaptability to rapidly changing interest frequency patterns may hinder MICC's effectiveness, potentially leading to suboptimal cache utilization | ICN |
| [104] | EnRoute | On-path & implicit cooperative | Integrate entropy-based techniques into caching and content routing to reduce delay and enhance throughput during content delivery | Inaccurate entropy data may limit optimal caching and routing strategies, impacting content delivery efficiency | Machine learning |
| [112] | DQL | Implicit & on-path | Enhance QoE in edge-enabled IoT environments through optimized content-centric caching and user experience | The computational complexity and resource requirements of deploying deep Q-learning in IoT networks may limit its adoption and scalability | Deep learning |
| [115] | ACOCA | Cooperative & on-path | Maximize both the cost and performance efficiency of a Context Management Platform (CMP) in near real-time | ACOCA's effectiveness may be limited by data item variability, impacting caching decisions | Optimization technique |
| [110] | Raven | On-path | Optimize cache eviction decisions using a Mixture Density Network, with the aim of outperforming existing algorithms in production workloads | Training and maintaining the Mixture Density Network incurs computational overhead, limiting its scalability in high-throughput caching environments | Deep learning |

- Note: This table summarizes various caching strategies in IoT, along with their objectives and limitations.

IoT caching strategies employ various methods to enhance system performance and improve user experience. Based on our review and analysis, we conclude that scalability is a major challenge for IoT-based caching approaches, especially as networks expand in size and complexity. As the number of devices and the volume of data increase, traditional caching algorithms often become less efficient, making it more difficult to retrieve content effectively. Large-scale IoT networks, with their diverse devices and vast amounts of data, can overwhelm conventional caching systems. Researchers, including Zhang et al. [99], Kurita et al. [100], Liu et al. [101], Wu et al. [106], and Wang et al. [107], emphasize the necessity for scalable content distribution solutions that can efficiently manage growing network traffic and data loads.

Adaptability is another key challenge in the development of IoT caching strategies. Caching systems must be able to respond to real-time changes in content demand and varying network conditions, requiring solutions that can adjust dynamically. However, many traditional caching approaches are based on static models that assume relatively stable patterns of content access, which leads to inefficiencies when demand fluctuates. According to Xiaoming et al. [105], Zhang et al. [110], and Liu et al. [116], numerous existing frameworks struggle to adjust to evolving user needs or network conditions, resulting in lower cache hit rates, increased delays, and suboptimal resource utilization. Therefore, implementing adaptable caching mechanisms is critical for improving performance in dynamic IoT environments.

Computational complexity presents a significant challenge for IoT caching strategies, particularly for those that incorporate resource-intensive techniques like deep learning or reinforcement learning. These algorithms often require considerable computational resources and memory, which may not be available in resource-limited IoT devices. For example, approaches such as DRL and deep Q-learning necessitate extensive processing power for both training and deployment, making them unsuitable for many low-power IoT devices (Zhang et al. [109]; Xu et al. [112]). Moreover, the high computational load associated with these models can cause delays, negatively impacting the system's responsiveness in real-time applications.

The key lesson learned from the reviewed studies is that effective IoT caching strategies must overcome several challenges, including scalability, computational complexity, and security. Caching systems must efficiently handle the increasing volume of data traffic and the growing number of devices while maintaining performance. Moreover, these systems need to be adaptable to real-time fluctuations in network conditions and content demand. While integrating advanced techniques like deep learning can enhance performance, it is crucial to balance these approaches with the resource limitations inherent in IoT environments to ensure their practical feasibility.

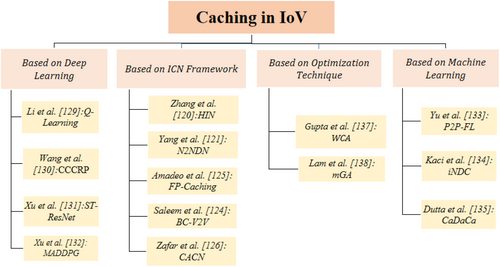

5.2 Internet of Vehicles (IoV)

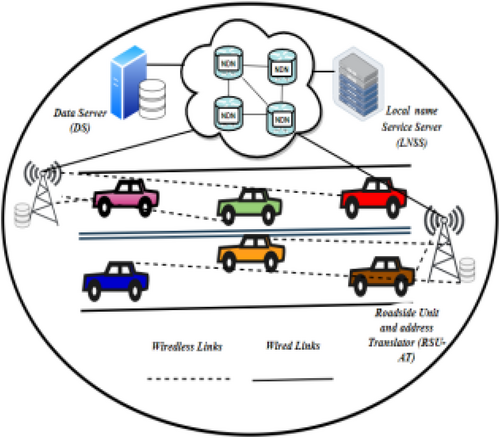

The IoV refers to the network of interconnected vehicles that communicate with each other and with roadside infrastructure to enhance transportation systems. Figure 9 details caching in IoV, involving data servers that own and store data contents, Local Name Service Servers (LNSS) that cache domain names, and a set of NDN entities that forward frequent data chunks to roadside units. These roadside units, devices deployed along roadsides to enable communication between vehicles and the network, deliver data to vehicles with minimal delay. This setup significantly improves communication efficiency within the IoV framework [117, 118].


Caching Frameworks Based on ICN Networking

Zhang et al. [116] present a content caching strategy for the IoV that uses Heterogeneous Information Networks (HIN) to address data complexity and the rising demand for popular content. Their method predicts user interest, enabling dynamic caching that reduces network traffic and improves both QoS and QoE. Experiments demonstrate that their approach outperforms traditional methods in content prediction, user satisfaction, and network load reduction, offering better performance, scalability, and lower delays while minimizing reliance on edge computing.

Yang et al. [119] propose an NDN-based framework for connected vehicle (CV) applications, addressing scalability issues caused by rapid mobility and a large number of vehicles. They introduce the hierarchical hyperbolic NDN backbone (H2NDN), which organizes geographic locations into a hierarchical namespace and router topology. Within this hierarchy, traffic is redirected to higher-level routers, optimizing Interest packet forwarding with static FIB entries. These features significantly improve the efficiency and scalability of the H2NDN backbone, making it well-suited for CV applications.
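One way to picture forwarding over a hierarchical, location-based namespace with static FIB entries is the following sketch. It is a simplification made for illustration and does not reproduce the H2NDN design (in particular, it omits the hyperbolic routing component): each router is responsible for one name prefix, forwards Interests down to the child with the longest matching prefix, and sends everything outside its own region up to its parent. The name layout (`/us/tn/memphis/...`) and the function names are hypothetical.

```python
def split_name(name: str) -> tuple[str, ...]:
    """Split an NDN-style name such as '/us/tn/memphis' into components."""
    return tuple(c for c in name.split("/") if c)


def join_name(components: tuple[str, ...]) -> str:
    """Inverse of split_name; the empty tuple maps to the root '/'."""
    return "/" + "/".join(components)


def next_hop(router_prefix: str, child_prefixes: list[str], interest_name: str):
    """Static forwarding decision for one router in the hierarchy (assumed rule).

    Returns a (direction, prefix) pair:
      ("up", parent)    if the Interest lies outside this router's region,
      ("down", child)   if a child prefix matches (longest match wins),
      ("local", prefix) otherwise.
    """
    name = split_name(interest_name)
    own = split_name(router_prefix)

    # Outside this router's region: static default route to the parent.
    if name[:len(own)] != own:
        return ("up", join_name(own[:-1]))

    # Inside the region: longest-prefix match among the child regions.
    best = None
    for child in child_prefixes:
        c = split_name(child)
        if name[:len(c)] == c and (best is None or len(c) > len(best)):
            best = c
    if best is not None:
        return ("down", join_name(best))
    return ("local", join_name(own))


if __name__ == "__main__":
    children = ["/us/tn/memphis", "/us/tn/nashville"]
    print(next_hop("/us/tn", children, "/us/tn/memphis/i40/speed"))
    print(next_hop("/us/tn", children, "/us/ca/la/traffic"))
```

Because each decision depends only on the router's own prefix and its children, the table does not need to change as vehicles move, which is the property that static FIB entries are meant to provide.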

Chen et al. [120] address the limitations of traditional TCP/IP stacks in IoV scenarios by focusing on spatial-temporal characteristics for data caching in Vehicular Named Data Networking (VNDN). They classify data into emergency safety, traffic efficiency, and entertainment messages, using these categories to determine caching decisions. Their expanded simulation platform shows that their caching strategy outperforms others in terms of hit ratio, hop count, and cache replacement times.

Chen et al. [121] enhance the security of the IoV system by leveraging Named Data Networking (NDN) and Blockchain technology. They utilize Communicating Sequential Processes (CSP) to model the system, ensuring critical properties such as deadlock avoidance, data availability, and protection against fake PIT deletions and Content Store (CS) pollution. These properties are rigorously validated through the Process Analysis Toolkit (PAT). The Blockchain layer records data exchanges as immutable transactions, which safeguards the NDN-based IoV architecture against unauthorized modifications, guarantees the authenticity of exchanged data, and improves the system's resilience to malicious attacks.

Saleem et al. [122] address challenges in VANETs, including communication reliability, security, and scalability. They propose a blockchain-based solution for secure V2V communication, referred to as BC-V2V. This method utilizes a K-Means dynamic clustering algorithm for cluster formation and an NDN approach for efficient content placement. The blockchain framework protects the data exchanged between vehicles, preventing unauthorized access and preserving the integrity of communications, so that content distribution in VANETs remains secure and reliable.

Amadeo et al. [123] propose the Freshness and Popularity Caching (FP-Caching) method to address the challenges of caching transient content in VNDN. This approach focuses on real-time data, such as road traffic updates and parking availability, which quickly lose relevance. While freshness is crucial for caching decisions, especially in safety and non-safety applications, the authors emphasize the importance of considering content popularity, particularly in cases of skewed distribution, such as Zipf-like behavior. This dual focus on freshness and popularity is key to optimizing caching strategies and improving VANET performance.
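A caching decision that weighs both freshness and popularity can be sketched as follows. This is an illustrative heuristic rather than the FP-Caching algorithm of Amadeo et al. [123]: the residual-freshness definition, the multiplicative score, and the `threshold` value are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass
class Content:
    name: str
    generated_at: float  # time the producer generated the data
    lifetime: float      # validity period after which the data is stale
    requests: int        # requests observed locally for this content


def residual_freshness(c: Content, now: float) -> float:
    """Fraction of the lifetime still remaining, clamped to [0, 1]."""
    if c.lifetime <= 0:
        return 0.0
    remaining = c.generated_at + c.lifetime - now
    return max(0.0, min(1.0, remaining / c.lifetime))


def should_cache(c: Content, now: float, total_requests: int,
                 threshold: float = 0.2) -> bool:
    """Cache only content that is both still fresh and popular enough.

    The score is the product of residual freshness and the local request
    share; both the product and the threshold are assumptions.
    """
    if total_requests <= 0:
        return False
    popularity = c.requests / total_requests
    return residual_freshness(c, now) * popularity >= threshold


if __name__ == "__main__":
    parking = Content("/parking/lot7", generated_at=0.0, lifetime=10.0, requests=60)
    print(should_cache(parking, now=2.0, total_requests=100))  # fresh and popular
    print(should_cache(parking, now=9.5, total_requests=100))  # popular but nearly expired
```

With a product score, content that is about to expire is rejected even when it is heavily requested, and rarely requested content is rejected even when it is new, which mirrors the dual focus described above.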

Zafar et al. [124] propose a hierarchical context-aware content-naming (CACN) scheme for NDN-based VANETs, enabling efficient content forwarding and caching using information from content names. The scheme simplifies communication and storage through an innovative coding technique and reduces latency with a decentralized context-aware notification (DCN) protocol. The DCN protocol broadcasts event notifications, prioritizing vehicles based on location and proximity. Simulations show the scheme's effectiveness in improving VANET communication.

Javed et al. [125] propose a solution for managing large-scale data generation and dissemination in IoV by introducing Vehicular Edge Computing (VEC). In this framework, Road Side Units (RSUs) act as edge servers for caching and task offloading, addressing the increased demands of vehicular applications. The approach includes a task-based architecture for content caching, focusing on content popularity prediction, cache placement, and retrieval.