Power analyses to inform Duplex Sequencing study designs for MutaMouse liver and bone marrow

Accepted by: S. Smith-Roe

Abstract

Regulatory genetic toxicology testing is essential for identifying potentially mutagenic hazards. Duplex Sequencing (DS) is an error-corrected next-generation sequencing technology that provides substantial advantages for mutation analysis over conventional mutagenicity assays, including improved accuracy of mutation detection, the ability to measure changes in mutation spectrum, and applicability across diverse biological models. To apply DS for regulatory toxicology testing, power analyses are required to determine suitable sample sizes and study designs. In this study, we explored study designs that achieve sufficient power for various effect sizes in chemical mutagenicity assessment. We collected data from MutaMouse bone marrow and liver samples that were analyzed by DS using TwinStrand's Mouse Mutagenesis Panel. The average numbers of informative duplex bases achieved in two separate studies on liver and bone marrow were 8.4 × 10⁸ (± 7.4 × 10⁷) and 9.5 × 10⁸ (± 1.0 × 10⁸), respectively. Baseline mean mutation frequencies (MF) were 4.6 × 10⁻⁸ (± 6.7 × 10⁻⁹) and 4.6 × 10⁻⁸ (± 1.1 × 10⁻⁸), with estimated standard deviations for the animal-to-animal random effect of 0.15 and 0.20 for liver and bone marrow, respectively. We conducted simulation analyses based on these empirically derived parameters. We found that a sample size of four animals per group is sufficient to obtain over 80% power to detect a two-fold change in MF relative to baseline. In addition, we estimated the minimum total number of informative duplex bases that must be sequenced, at different sample sizes, to retain power for various effect sizes. Our work provides foundational data for establishing suitable study designs for mutagenicity testing using DS.

1 INTRODUCTION

Conventional in vivo mutagenicity tests rely on individual reporter genes for mutation quantification. The most widely used mutagenicity test is the transgenic rodent (TGR) assay (OECD, 2022). This assay relies on bacterial reporter genes for mutation quantification by using viral phages to encapsulate these genes and infect bacteria; mutations are identified by plaque formation in bacterial lawns grown under selective conditions (Lambert et al., 2005). These reporter-based assays have several important limitations: (a) they quantify mutations in single bacterial genes that do not accurately represent the entirety of the mammalian genome; (b) they are impractical for the analysis of mutation spectra and thus cannot effectively inform on mutagenic mechanism (Beal et al., 2020); and (c) they rely on specific rodent strains and cannot be integrated with test methods using other model organisms. Development of methods to circumvent these limitations would allow for better quantification and understanding of mutagenesis to protect human health.

Duplex Sequencing (DS), an error-corrected next-generation DNA sequencing technology, provides an alternative method to quantify mutations that addresses the limitations of reporter-based assays (Valentine et al., 2020). DS quantifies mutation frequency (MF; mutations per bp) with an extremely low error rate of <1 in 10⁷ sequenced nucleotides (Kennedy et al., 2014) in any tissue type and model organism, while simultaneously informing on mutagenic mechanisms (Marchetti, Cardoso, Chen, Douglas, Elloway, Escobar, Harper, et al., 2023; Salk & Kennedy, 2020). DS evaluates mutagenicity across custom panels that capture different endogenous genomic loci to reflect genome-wide changes. These panels are composed of twenty 2.4 kb genic and intergenic loci spread across the autosomes, spanning both coding and non-coding regions, and reflecting the GC content of endogenous mammalian genomes. The loci were chosen for their selective neutrality, their optimal performance in hybrid capture, and the absence of pseudogenes or repetitive elements that could confound alignment or variant calling (Dodge et al., 2023). These panels are commercially available for mutagenesis studies in mouse (LeBlanc et al., 2022), rat (Smith-Roe et al., 2023), and human (Cho et al., 2023) tissues. While not without limitations (e.g., coverage of <1% of the genome and the requirement for a reference genome and model-specific panels), DS has gained traction as a powerful tool for regulatory mutagenicity testing (Marchetti, Cardoso, Chen, Douglas, Elloway, Escobar, Harper Jr, et al., 2023).

DS accurately detects and characterizes mutations by creating consensus sequences from both strands of DNA (Kennedy et al., 2014). Briefly, DS labels fragmented DNA molecules with unique molecular identifiers so that, following PCR amplification and sequencing, reads from both strands can be assembled and grouped into tag families. Reads within a tag family are compared to create single-strand consensus sequences (SSCS), which are then paired for a second round of error correction to produce duplex consensus sequences (DCS). A mutation is scored only if it is present at the same position in all reads of a tag family and on both strands of the DNA, because it is unlikely that a sequencing or PCR error would occur at exactly the same position on the sister strand. Thus, DS eliminates almost all sequencing errors, increasing sensitivity for de novo mutation detection.
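To make the two rounds of error correction concrete, the following Python sketch builds an SSCS from the reads of one tag family and then a DCS from the two strand consensuses. It is a simplified illustration, not the Duplex-Seq-Pipeline code: it assumes reads are already aligned, of equal length, and (for the bottom strand) reverse-complemented into top-strand orientation, and `min_agreement` is a hypothetical parameter whose default of 1.0 mirrors the "all reads" rule described above.

```python
from collections import Counter


def single_strand_consensus(reads, min_agreement=1.0):
    """Collapse the reads of one strand of one tag family into an SSCS.

    A position keeps its majority base only if at least `min_agreement`
    of the reads agree; otherwise it is masked as 'N'.
    """
    consensus = []
    for column in zip(*reads):  # walk position by position across reads
        base, count = Counter(column).most_common(1)[0]
        consensus.append(base if count / len(column) >= min_agreement else "N")
    return "".join(consensus)


def duplex_consensus(sscs_top, sscs_bottom):
    """Keep a base only where both strand consensuses agree (DCS)."""
    return "".join(
        a if a == b and a != "N" else "N" for a, b in zip(sscs_top, sscs_bottom)
    )


# An error in one read of a family is masked in the SSCS ...
print(single_strand_consensus(["ACGTA", "ACCTA", "ACGTA"]))  # ACNTA
# ... a change seen on only one strand is masked in the DCS ...
print(duplex_consensus("ACTTA", "ACGTA"))  # ACNTA
# ... whereas a change present on both strands is retained.
print(duplex_consensus("ACTTA", "ACTTA"))  # ACTTA
```

Lowering `min_agreement` (for example to 0.6) would tolerate a minority of discordant reads within a family; the strict default simply follows the wording of the text.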

DS has been effective at measuring dose-dependent increases in MF following exposure to well-established mutagens. Prior research has analyzed benzo[a]pyrene (LeBlanc et al., 2022), procarbazine hydrochloride (Dodge et al., 2023), N-nitrosodiethylamine (Bercu et al., 2023), and N-ethyl-N-nitrosourea (Smith-Roe et al., 2023) exposures in rodent models. These studies demonstrate the potential for DS in mutagenicity testing. However, every new assay must be evaluated to determine the minimum detectable “signal” and the optimal approach to detect it, assuming such a signal exists. Power analyses are necessary to establish acceptable study design parameters to advance the use of DS in mutagenicity assessment.

Power is the probability of correctly rejecting the null hypothesis when it is false, given the sample size, effect size, and variability of the study. An underpowered study is more likely to miss true effects, resulting in misleading conclusions, whereas an overpowered study may use more animals than necessary, raising ethical concerns. A power of 80% is the conventional target (Festing, 2018): it indicates an 80% chance of detecting a true effect if it exists and a 20% chance of missing it, balancing ethical and practical considerations against the risk of overlooking true effects.

We conducted simulation studies to evaluate the sample size and required number of informative duplex bases sequenced per sample for different effect sizes relevant to analyses of mouse bone marrow (BM) and liver. These tissues are widely used in regulatory mutagenicity testing and have very different characteristics. BM is highly proliferative, allowing DNA damage to be quickly converted into mutations and fixed in the genome. In contrast, liver is only moderately proliferative, but is the most metabolically active tissue and the first pass organ for detoxication. We simulated different study designs to evaluate how power changes with different parameters and draw conclusions on feasible sample size and depth of sequencing.

2 METHODS

2.1 Baseline data

The DS data used in this study are from two studies on the livers and BM of MutaMouse males exposed daily for 28 days by oral gavage to olive oil (vehicle control: VC) or toxicants of interest (Schuster et al., 2024; LeBlanc et al., in preparation). These studies will henceforth be referred to as “Study A” and “Study B.” The methodology for DNA extraction, DS library preparation, and DS data analysis was as described previously (Dodge et al., 2023), except that DNA was enzymatically fragmented, not ultrasonically sheared, during library preparation. The computational analysis of raw DS data was performed by TwinStrand using its DNAnexus® pipeline. The protocol for this pipeline is detailed by Kennedy et al. (2014), and the underlying code has been published (Kennedy-Lab-UW/Duplex-Seq-Pipeline, 2023).

The data from Study A and Study B were used to obtain reasonable estimates of baseline MF and of the number of informative duplex bases retrieved from mouse BM and liver DNA analyzed by DS with a 500 ng DNA input. The standard deviations used for the power analysis cover the range of values observed in the literature and in our own studies. In BM, these values were 0.06 (Dodge et al., 2023; LeBlanc et al., 2022), 0.10 (Study A), and 0.27 (Study B). In liver, the standard deviation was 0.14 in both Study A and Study B. Three other papers have used DS for mutagenicity assessment in chemically treated rodents (Bercu et al., 2023; Smith-Roe et al., 2023; Valentine et al., 2020); unfortunately, none of them report the standard deviation of their MF. Thus, we use the values from Dodge et al. (2023), LeBlanc et al. (2022), and those presented herein as reasonable estimates of plausible standard deviations for the calculations. To calculate the standard deviation, a generalized linear mixed model (GLMM) with a binomial error distribution was fitted to the data, incorporating fixed effects (treatment groups, targets, and their interaction) and random effects (experiment and animal ID) to account for overdispersion and to estimate animal-to-animal variation using variance component analysis (Dertinger et al., 2023). These variance component estimates served as the basis for the range of standard deviations used in the simulation experiments for the power analyses.

2.2 DS data interpretation

In both studies, mutations were scored under the assumption that all identical mutations within a sample result from clonal expansion of a single mutational event; such mutations were therefore counted only once (Dodge et al., 2023). Germline mutations (variant allele frequency (VAF) > 0.01) were filtered out so that only true de novo mutations were retained. Additionally, the DNAnexus® pipeline excludes positions at which a base cannot be confidently identified.
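A minimal sketch of the scoring rules above, assuming per-position duplex variant calls are available as hypothetical `(chrom, pos, ref, alt, alt_depth, total_depth)` tuples (this layout is an illustration, not the DNAnexus® pipeline's output format). Calls with VAF > 0.01 are dropped as germline, each distinct mutation is counted once regardless of how many duplex molecules carry it, and MF is the number of distinct mutations divided by the number of informative duplex bases.

```python
def mutation_frequency(calls, informative_duplex_bases, germline_vaf=0.01):
    """MF (mutations per bp), counting each distinct mutation once per sample.

    calls: iterable of (chrom, pos, ref, alt, alt_depth, total_depth) tuples,
    a hypothetical layout used here only for illustration.
    """
    unique = set()
    for chrom, pos, ref, alt, alt_depth, total_depth in calls:
        if alt_depth / total_depth > germline_vaf:
            continue  # treated as a germline variant and excluded
        unique.add((chrom, pos, ref, alt))  # identical mutations collapse to one
    return len(unique) / informative_duplex_bases


calls = [
    ("chr1", 100, "C", "T", 3, 1000),   # seen in 3 duplex molecules: counted once
    ("chr2", 50, "G", "A", 520, 1000),  # VAF 0.52: germline, filtered out
    ("chr3", 7, "A", "G", 1, 900),      # singleton de novo mutation
]
mf = mutation_frequency(calls, 1_000_000)
print(mf)  # 2e-06
```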

2.3 Power analysis

Overdispersion occurs when the observed variance is greater than the variance expected under a standard binomial distribution, often because of heterogeneity among subjects (here, animals). A GLMM is a suitable model for estimating this variability as an input to the power-analysis simulation. Gelman and Hill (2007) described an approach similar to ours, albeit in a more complex multivariate context. We applied a simplified version to simulate univariate overdispersed binomial data using a random intercept model. The first step of the simulation-based power analysis was to simulate a large number of data sets. When simulating responses from an overdispersed binomial distribution, we only needed to provide an estimate of the MF and an estimate of the animal-to-animal variability; these were derived from GLMM fits to Studies A and B or from previously published studies (Dodge et al., 2023; LeBlanc et al., 2022). Estimates for these parameters, or a range of plausible values, are often available from previous studies or from a pilot study. If no data are available, a plausible range of parameter values should be chosen and justified based on prior knowledge of the study system.

The rnorm() function in R was used to generate random realizations of the animal-level random effect. rnorm() takes three arguments: the number of values to generate, n, which was set to 1; the mean, which was set to zero; and the standard deviation, which was set to the animal-to-animal variation parameter of the simulation scenario.
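The random-intercept step can be sketched in Python as follows; `random.gauss(0.0, sigma)` plays the role of `rnorm(n = 1, mean = 0, sd = sigma)`. As simplifying assumptions, mutation counts are drawn from a Poisson distribution (a close approximation to a binomial with about 10⁹ trials and an MF near 5 × 10⁻⁸), and the helper names are hypothetical. The variance-to-mean ratio of the simulated counts illustrates the overdispersion that the random effect introduces: it stays near 1 when `sigma` is 0 and grows as `sigma` increases.

```python
import math
import random
import statistics


def draw_animal_mf(mf0, sigma, rng):
    """One animal's MF under a random-intercept model on the logit scale."""
    b = rng.gauss(0.0, sigma)  # analogue of R's rnorm(n = 1, mean = 0, sd = sigma)
    eta = math.log(mf0 / (1.0 - mf0)) + b
    return 1.0 / (1.0 + math.exp(-eta))


def poisson(lam, rng):
    """Knuth's Poisson sampler, run in chunks so exp(-step) cannot underflow.

    Assumption: stands in for Binomial(duplex_bases, p), accurate for tiny p.
    """
    total = 0
    while lam > 0:
        step = min(lam, 500.0)
        threshold = math.exp(-step)
        p = rng.random()
        while p > threshold:
            total += 1
            p *= rng.random()
        lam -= step
    return total


def dispersion_index(mf0, sigma, duplex_bases, n_animals, seed=0):
    """Variance-to-mean ratio of simulated counts (about 1 if not overdispersed)."""
    rng = random.Random(seed)
    counts = [
        poisson(duplex_bases * draw_animal_mf(mf0, sigma, rng), rng)
        for _ in range(n_animals)
    ]
    return statistics.variance(counts) / statistics.mean(counts)


for sigma in (0.0, 0.1, 0.3):
    print(sigma, round(dispersion_index(4.57e-8, sigma, 1e9, 2000, seed=1), 2))
```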

We performed power analyses across varying sample sizes, total numbers of informative duplex bases, and degrees of animal-to-animal variation. Simulated data for hypothetical treatment groups were randomly generated to produce defined increases in MF (e.g., 1.2-fold, 1.5-fold, and 2-fold). A generalized linear model (GLM) was fitted to each simulated data set, and significance was assessed with the Wald statistic estimated using the doBy R library (Halekoh & Højsgaard, 2024; version 4.6.19). Each scenario was simulated at least 1000 times, and power was estimated as the proportion of simulated experiments yielding a significant difference between the simulated treated and control groups at a significance level of 0.05.
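The full simulation loop can be sketched in Python. This is not the authors' R code (provided in Data S1), and several details are assumptions: counts are drawn from a Poisson approximation to the binomial; every animal has exactly `duplex_bases` informative duplex bases; overdispersion in the GLM is handled with a quasi-binomial (Pearson) dispersion estimate, since the text does not state how the GLM accounted for it; and the Wald p-value uses a normal reference distribution rather than doBy's output. Results from this sketch should therefore not be expected to reproduce Figures 2 and 3 exactly.

```python
import math
import random


def poisson(lam, rng):
    """Knuth's Poisson sampler, run in chunks so exp(-step) cannot underflow."""
    total = 0
    while lam > 0:
        step = min(lam, 500.0)
        threshold = math.exp(-step)
        p = rng.random()
        while p > threshold:
            total += 1
            p *= rng.random()
        lam -= step
    return total


def simulate_group(n_animals, duplex_bases, mf, sigma, rng):
    """(mutations, duplex bases) per animal; random intercept on the logit scale."""
    eta0 = math.log(mf / (1.0 - mf))
    group = []
    for _ in range(n_animals):
        p = 1.0 / (1.0 + math.exp(-(eta0 + rng.gauss(0.0, sigma))))
        # Assumption: Poisson(duplex_bases * p) approximates Binomial(duplex_bases, p)
        group.append((poisson(duplex_bases * p, rng), duplex_bases))
    return group


def wald_p_value(control, treated):
    """Two-sided Wald test of the treatment effect in a two-group logit GLM,
    using a quasi-binomial (Pearson) dispersion estimate (an assumption)."""
    groups = (control, treated)
    totals = [sum(n for _, n in g) for g in groups]
    p_hat = [sum(x for x, _ in g) / t for g, t in zip(groups, totals)]
    if min(p_hat) == 0.0:
        return 1.0
    pearson = sum(
        (x - n * p) ** 2 / (n * p * (1.0 - p))
        for g, p in zip(groups, p_hat)
        for x, n in g
    )
    phi = pearson / (len(control) + len(treated) - 2)
    var = phi * sum(1.0 / (t * p * (1.0 - p)) for t, p in zip(totals, p_hat))
    if var <= 0.0:
        return 1.0  # degenerate data: no residual variation to estimate phi
    logit = [math.log(p / (1.0 - p)) for p in p_hat]
    z = (logit[1] - logit[0]) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2.0))  # normal reference distribution


def simulate_power(n_per_group, duplex_bases, sigma, fold,
                   mf0=4.57e-8, n_sim=1000, alpha=0.05, seed=1):
    """Fraction of simulated experiments with a Wald p-value below alpha."""
    if n_per_group < 2:
        raise ValueError("need at least 2 animals per group to estimate dispersion")
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sim):
        control = simulate_group(n_per_group, duplex_bases, mf0, sigma, rng)
        treated = simulate_group(n_per_group, duplex_bases, mf0 * fold, sigma, rng)
        hits += wald_p_value(control, treated) < alpha
    return hits / n_sim
```

For example, `simulate_power(4, 1.2e9, 0.15, 2.0)` estimates power for four animals per group, 1.2 × 10⁹ duplex bases per animal, a standard deviation of 0.15, and a 2-fold increase. Looping over a grid of `n_per_group`, `duplex_bases`, and `sigma` values produces power curves of the kind shown in Figure 2.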

3 RESULTS AND DISCUSSION

- The number of test animals used (sample size) per dose group. Study A and Study B used 4 animals per dose group. The data from these experiments were combined and simulated to produce different sample sizes for the power analysis (described below).

- Background MF. The VC MF was 5.33 × 10⁻⁸ (± 7.35 × 10⁻⁹) and 3.79 × 10⁻⁸ (± 3.35 × 10⁻⁹) mutations per bp for liver in Study A and Study B, respectively, and 4.13 × 10⁻⁸ (± 1.35 × 10⁻⁹) and 5.02 × 10⁻⁸ (± 1.69 × 10⁻⁸) mutations per bp for BM in Study A and Study B, respectively. The average pooled VC MF (i.e., combining both studies; n = 8 per tissue) for liver and BM was 4.56 × 10⁻⁸ (± 6.75 × 10⁻⁹) and 4.58 × 10⁻⁸ (± 1.14 × 10⁻⁸) mutations per bp, respectively. As the averages for both tissues are very similar, an overall average of 4.57 × 10⁻⁸ mutations per bp was used in the subsequent power analyses.

- Informative duplex bases sequenced per sample. The number of raw reads for each dataset was 3.57 × 10⁸, 2.47 × 10⁸, 3.48 × 10⁸, and 3.36 × 10⁸ for liver and BM in Study A and B, respectively. The average total number of informative duplex bases achieved per sample was 8.34 × 10⁸ (± 9.21 × 10⁷) and 8.51 × 10⁸ (± 6.05 × 10⁷) in the liver studies, and 8.32 × 10⁸ (± 7.51 × 10⁷) and 1.12 × 10⁹ (± 7.39 × 10⁷) in the BM studies. Note that these numbers are lower than expected from the conversion formula (Equation (2)) because the studies achieved peak tag family sizes that were larger than those of the optimal study described therein; peak tag family sizes in these experiments were at least 9.

| Tissue | Study | Sample size per dose groupᵃ | Average background MFᵇ, mutations per bp (± SE) | Average informative duplex bases per sampleᶜ (± SE) |
|---|---|---|---|---|
| Liver | A | 4 | 5.33 × 10⁻⁸ (± 7.35 × 10⁻⁹) | 8.51 × 10⁸ (± 6.05 × 10⁷) |
| | B | 4 | 3.79 × 10⁻⁸ (± 3.53 × 10⁻⁹) | 8.34 × 10⁸ (± 9.21 × 10⁷) |
| Bone marrow | A | 4 | 4.13 × 10⁻⁸ (± 1.35 × 10⁻⁹) | 8.32 × 10⁸ (± 7.51 × 10⁷) |
| | B | 4 | 5.02 × 10⁻⁸ (± 1.69 × 10⁻⁸) | 1.12 × 10⁹ (± 7.39 × 10⁷) |

ᵃ Male mice were exposed for 28 days by oral gavage to olive oil (vehicle control) or toxicants of interest (Study A and Study B).

ᵇ MF of the vehicle controls in each study.

ᶜ Number of informative duplex bases averaged across all samples used in the experiments (i.e., both exposed and vehicle controls).

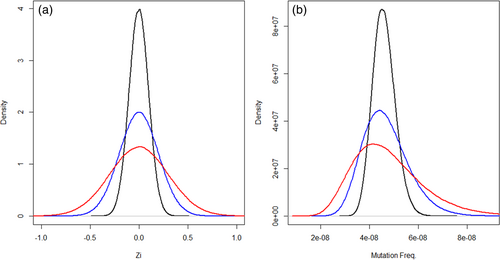

These empirical parameters were used to generate a range of hypothetical characteristics for potentially feasible DS experiments: sample sizes of 3–8 per dose group; a background MF of 4.57 × 10⁻⁸ mutations per bp, assumed to be the same in liver and BM; an average total of up to 2 × 10⁹ informative duplex bases sequenced per animal; a 1.5-fold change from the VC group; and sample standard deviations of 0.1, 0.15, 0.2, and 0.3. The standard deviation in this context represents the variability of MF on the ln (natural logarithm) scale: the GLMM models the probability of observing a mutation with a logit link, and because MF is so small, logit(MF) = ln(MF/(1 − MF)) is practically identical to ln(MF). For instance, an MF of 4.57 × 10⁻⁸ is −16.90 on the ln scale; with a standard deviation of 0.3, the generated adjusted MF for each observation would mostly fall within the range of (−16.90 × 0.97 = −16.39) to (−16.90 × 1.03 = −17.41), based on the density distribution provided in Figure 1. This interval translates back to an MF range of 2.75 × 10⁻⁸ to 7.59 × 10⁻⁸. Figure 1 also shows the distribution of the animal-to-animal random effect for the other standard deviation values. Because the chosen test parameters are based on empirical data, they enhance the biological relevance of the analysis.
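The back-transformation from the ln scale can be checked numerically. This Python sketch reproduces the interval quoted above; the half-width of 0.03 × 16.90 ≈ 0.51 on the ln scale is taken from the text, and `mf_interval` is a hypothetical helper.

```python
import math


def mf_interval(mf, half_width_ln):
    """Back-transform ln(MF) plus or minus a half-width to the MF scale."""
    centre = math.log(mf)
    return math.exp(centre - half_width_ln), math.exp(centre + half_width_ln)


ln_mf = math.log(4.57e-8)       # about -16.90
half_width = 0.03 * abs(ln_mf)  # the 3% of 16.90 used in the text, about 0.51
low, high = mf_interval(4.57e-8, half_width)
print(f"{ln_mf:.2f} {half_width:.2f} {low:.2e} {high:.2e}")
# -16.90 0.51 2.75e-08 7.59e-08
```

With a standard deviation of 0.3, a half-width of 0.51 corresponds to about 1.7 standard deviations, so roughly 90% of simulated animal-level effects fall inside this interval rather than all of them.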

Our study investigated MFs and sequencing depths produced in the analyses of BM and liver in MutaMouse males. These values may vary for other models, tissues, and laboratories. For example, Dodge et al. (2023) and LeBlanc et al. (2022) used sonication for DNA fragmentation and reported higher control MFs (e.g., 1.31 × 10⁻⁷ mutations per bp reported by Dodge et al. (2023) versus our 4.57 × 10⁻⁸ mutations per bp). Dodge et al. (2023) and LeBlanc et al. (2022) sequenced averages of 7.98 × 10⁸ and 8.50 × 10⁸ duplex bases, respectively, which are within our typical range. However, Smith-Roe et al. (2023) sequenced 5.36 × 10⁸ and 4.89 × 10⁸ duplex bases in their study, which are below our typical range. Laboratories that achieve lower sequencing depths may need more samples to achieve sufficient power, whereas those with higher depths could require fewer samples. The general power analysis approach presented herein is extendable to other parameters, and other researchers can readily apply the code we have provided (see Supplementary Materials in Data S1) to determine the sample sizes that maintain 80% power for their systems.

Our study focused solely on biological variability measured in-house. Comprehensive studies on analytical and technical variability are forthcoming; preliminary data suggest that this variability is negligible with DS. For instance, results were remarkably reproducible across two laboratories that sequenced samples from two identically designed in vitro studies at the same facility (Cho et al., 2023), and across the same samples prepared and sequenced at two different locations (LeBlanc et al., 2022). In both cases, DS produced highly similar results, indicating very low technical variability.

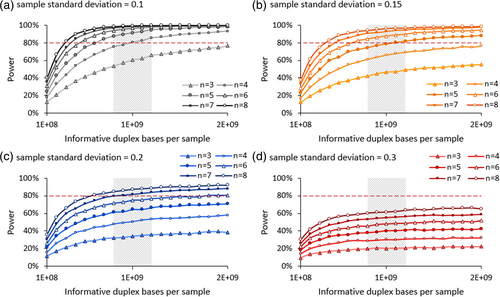

In our study, we first simulated data to determine the power to detect a 1.5-fold change in MF between control and exposed groups as the test parameters vary (Figure 2). We found that when the sample standard deviation is 0.1, three animals per dose group are insufficient to achieve 80% power even with 2 × 10⁹ informative duplex bases sequenced per animal, the maximum investigated in this study. However, increasing the sample size to four achieves over 95% power with this number of informative duplex bases and 80% power with 1 × 10⁹ informative duplex bases. Increasing the sample size further decreases the number of duplex bases required for any given power. When the sample standard deviation is 0.15, 80% power is achieved with a minimum sample size of five animals per dose group and 1 × 10⁹ informative duplex bases. With a standard deviation of 0.2, 80% power is achieved with a minimum sample size of six animals per dose group and 1.5 × 10⁹ informative duplex bases; smaller sample sizes yield <71% power. When the sample standard deviation is 0.3, 80% power is not achieved with any combination of sample size and number of duplex bases investigated here. Overall, increasing either the sample size or the number of informative duplex bases sequenced per animal increases the power of the study design. However, although larger sample sizes notably enhance power, increasing the number of informative duplex bases beyond about 1.2 × 10⁹ yields minimal additional power. This plateau occurs once samples have been sequenced deeply enough that the sampling error in each animal's MF is small relative to the animal-to-animal variation, which then dominates the uncertainty in the group comparison. Beyond this point, increasing the number of animals has more impact on power than increasing duplex depth. Our analysis sheds light on the dynamics of statistical power as sample size and sequencing depth vary.
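The plateau can be illustrated with a rough analytic approximation rather than the simulation used here. The Python sketch below treats each animal's ln(MF) estimate as having variance σ² + 1/(duplex bases × MF), the animal-to-animal variance plus a Poisson sampling term from the delta method, and computes power for comparing group means with a normal approximation. These are simplifying assumptions, and the function names are hypothetical; because the approximation treats σ as known, it is optimistic relative to the simulations in Figure 2, and its numbers should not be read as replacements for them.

```python
import math
from statistics import NormalDist


def approx_power(n_per_group, duplex_bases, sigma, fold, mf0=4.57e-8, alpha=0.05):
    """Normal-approximation power for a difference in mean ln(MF) between groups
    (ignores the negligible opposite tail of the two-sided test)."""
    def per_animal_var(mf):
        # animal-to-animal variance + Poisson sampling variance of ln(count),
        # using the delta-method approximation Var(ln X) ~ 1 / E[X]
        return sigma ** 2 + 1.0 / (duplex_bases * mf)

    se = math.sqrt((per_animal_var(mf0) + per_animal_var(mf0 * fold)) / n_per_group)
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return NormalDist().cdf(abs(math.log(fold)) / se - z_crit)


def power_ceiling(n_per_group, sigma, fold, alpha=0.05):
    """Limit of approx_power as depth grows without bound (requires sigma > 0)."""
    se = math.sqrt(2.0 * sigma ** 2 / n_per_group)
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    return NormalDist().cdf(abs(math.log(fold)) / se - z_crit)


for depth in (5e8, 1e9, 1.2e9, 2e9, 5e9):
    print(f"{depth:.1e}: {approx_power(5, depth, 0.2, 1.5):.3f}")
print(f"ceiling: {power_ceiling(5, 0.2, 1.5):.3f}")
```

Deeper sequencing shrinks only the sampling term, so power approaches `power_ceiling`, which depends on σ and the number of animals but not on depth; adding animals raises the ceiling itself.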

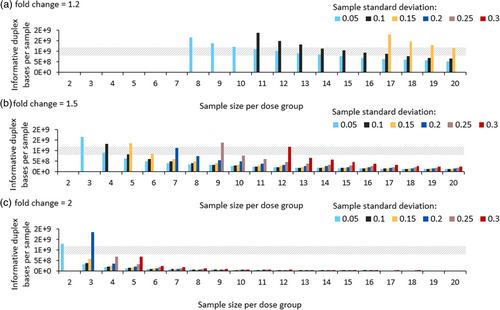

We ran additional modeling experiments to determine the minimum sequencing depth and sample size needed to observe a significant increase in MF with 80% power at a significance level of 0.05 for different effect sizes and variances. For this analysis, the investigated parameter range was expanded: sample sizes were varied from 2 to 20 animals per dose group; background MF was kept constant at 4.57 × 10⁻⁸ mutations per bp; the average total number of informative duplex bases sequenced per animal was modeled up to 2 × 10⁹; sample standard deviations ranged from 0.05 to 0.3 in increments of 0.05; and fold changes of 1.2, 1.5, and 2 relative to the VC group were considered to cover a wide range of responses. For each combination of sample size, variance, and expected fold change, we varied the number of informative duplex bases sequenced per animal until we found the minimum value needed to observe a significant increase in MF with 80% power (± 0.01%). This analysis provides a foundation for establishing the study designs required to achieve statistically significant results in diverse mutagenicity studies.
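The search described above was carried out by simulation. For intuition, the same question can be answered in closed form under a simple normal approximation (an assumption, not the method used here): each animal's ln(MF) estimate is taken to have variance σ² + 1/(duplex bases × MF), and the sketch solves for the depth at which a two-sided test at α = 0.05 reaches 80% power. `min_duplex_bases` is a hypothetical helper; it returns `None` when animal-to-animal variation alone makes the target unreachable at any depth. Because it treats σ as known, it tends to underestimate the depth that the simulations require.

```python
import math
from statistics import NormalDist


def min_duplex_bases(n_per_group, sigma, fold, mf0=4.57e-8, power=0.80, alpha=0.05):
    """Smallest depth per animal reaching `power` under the normal approximation.

    Returns None when sigma alone makes the target power unreachable
    at any depth for this sample size.
    """
    z = NormalDist().inv_cdf
    se_target = abs(math.log(fold)) / (z(1.0 - alpha / 2.0) + z(power))
    # n * SE^2 = 2 * sigma^2 + (1 + 1/fold) / (depth * mf0); solve for depth
    budget = n_per_group * se_target ** 2 - 2.0 * sigma ** 2
    if budget <= 0.0:
        return None
    return (1.0 + 1.0 / fold) / (mf0 * budget)


for n in (4, 8, 9, 12, 20):
    depth = min_duplex_bases(n, 0.3, 1.5)
    print(n, "not reachable" if depth is None else f"{depth:.2e}")
```

For σ = 0.3 and a 1.5-fold change, the approximation finds no workable depth with 8 animals per group, in line with the observation that 80% power was not reached with up to 8 animals, and a depth of roughly 5 × 10⁸ with 12 animals, lower than the simulated 8.9 × 10⁸.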

Our results demonstrate that several feasible study designs are suitable across the above range of parameters (Figure 3). A 1.2-fold change is detectable only with a minimum of 8 animals per dose group and an average of 1.6 × 10⁹ informative duplex bases sequenced per sample, and only if the sample standard deviation is extremely low (0.05). Further increases in sample size lower the required number of informative duplex bases; however, because the modeled number of informative duplex bases did not exceed 2 × 10⁹, study designs with a sample standard deviation >0.15 cannot detect 1.2-fold changes even with as many as 20 animals per dose group. Detecting a 1.5-fold change is more feasible. Studies with a sample standard deviation of 0.05 are sufficiently powered with at least 3 animals per dose group, requiring a corresponding 1.2 × 10⁹ informative duplex bases per sample. Studies with a sample standard deviation of 0.3, the highest investigated, are sufficiently powered only with 12 animals per dose group and 8.9 × 10⁸ informative duplex bases. Finally, detecting a 2-fold change requires a sample size of 2 and 1.2 × 10⁹ informative duplex bases when the sample standard deviation is 0.05, and a sample size of 5 and 7.0 × 10⁸ informative duplex bases when the sample standard deviation is 0.3. Overall, our analysis identifies study designs that can detect different fold changes using different sample sizes and sequencing depths.

The feasibility of various parameters in rodent DS studies needs to be considered when conducting power analyses. Some designs require more animals per dose group than is ethically acceptable to achieve sufficient power. One way to reduce sample size requirements is to increase the number of informative duplex bases sequenced per sample. However, with a fixed sample size, power tends to plateau as the number of informative duplex bases increases (Figure 2), and where this happens depends on the characteristics of the dataset. Therefore, increasing the number of informative duplex bases to permit smaller sample sizes is not always possible, and the trade-off between increasing the sample size and increasing the number of informative duplex bases must be carefully considered when designing studies that must accommodate high sample standard deviations and small effect sizes.

Another consideration when increasing the number of informative duplex bases is the biological limit of how deeply we can sequence our samples. There is a limited availability of unique DNA molecules in a given amount of input DNA—known as DNA library complexity. Complexity must be balanced against the number of informative duplex bases achieved. Starting with a more complex sample (i.e., with a larger amount of input DNA) would allow us to increase the number of informative duplex bases. This area presents an opportunity for future research exploration to determine optimal DNA inputs and the number of informative duplex bases for specific DS applications.
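A back-of-envelope sketch shows why input DNA caps the achievable depth. It assumes about 650 g/mol per base pair and a mouse haploid genome of about 2.7 × 10⁹ bp (approximate values, not from this study), together with the 500 ng input and the twenty 2.4 kb panel loci described earlier. Each haploid genome copy contributes at most one double-stranded molecule per panel position, so copies multiplied by panel length gives a theoretical ceiling on unique duplex bases; the names below are hypothetical.

```python
AVOGADRO = 6.02214076e23
BP_MASS_G_PER_MOL = 650.0   # assumption: approximate mass of one base pair
HAPLOID_GENOME_BP = 2.7e9   # assumption: approximate mouse haploid genome size


def max_duplex_bases(input_ng, panel_bp, genome_bp=HAPLOID_GENOME_BP):
    """Haploid genome copies in the input and the resulting duplex-base ceiling."""
    genome_mass_g = genome_bp * BP_MASS_G_PER_MOL / AVOGADRO
    haploid_copies = input_ng * 1e-9 / genome_mass_g
    return haploid_copies, haploid_copies * panel_bp


copies, ceiling = max_duplex_bases(500, 20 * 2400)
print(f"{copies:.2e} genome copies, at most {ceiling:.1e} duplex bases")
# 1.72e+05 genome copies, at most 8.2e+09 duplex bases
```

Real libraries recover only a fraction of this ceiling because of losses during library conversion, capture, and duplex recovery; the average yields reported above (roughly 8 × 10⁸ to 1.1 × 10⁹ informative duplex bases per sample) are about an order of magnitude lower.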

Our analysis enabled us to consider acceptable study designs based on two main factors: the minimum sample size per dose group and the most feasible number of informative duplex bases sequenced per sample. In our laboratory, we typically sequence to a depth of 1.2 × 10⁹ informative duplex bases per sample, which is economically feasible at present. Because the required number of informative duplex bases per sample and the sample size are inversely related, several study designs that minimize sample size require more sequencing than we can realistically perform. Given this restriction, we considered study designs for DS that require ≤2.0 × 10⁹ informative duplex bases (Figure 3). Under this restriction, detecting a 1.2-fold change in MF is challenging; large sample sizes are required to compensate for the power lost due to the limited number of informative duplex bases per sample and the small expected fold change in MF. In contrast, the detection of a 2-fold change is well within our scope. With a sample size of 4, we achieve sufficient power across most of the standard deviations investigated and at an easily attainable number of informative duplex bases. Increasing the sample size to 5 extends this capability to samples with a standard deviation of 0.3. Overall, our results suggest that sample sizes of 4–5 are sufficient to detect at least a 1.5-fold change with a feasible number of informative duplex bases even with high sample standard deviation using the present enzymatic fragmentation protocol in MutaMouse liver and BM samples. The TGR test guideline indicates that a minimum of five mice per dose group should be used to ensure sufficient statistical power to detect at least a 2-fold change in MF (OECD, 2022). Although most OECD mutagenicity tests are designed to detect this level of change, DS can feasibly detect changes as small as 1.5-fold, making it the more sensitive technology. Thus, DS has higher power than the TGR assay to detect the effect sizes recommended for mutation analyses.

The parameter range investigated in this study did not encompass all possible values. Study parameters in other experiments may differ, potentially altering the recommended study designs. Sample standard deviation, background MF, and effect size are not under the control of the researcher; they are determined by the characteristics of the test compound and the biology of the organism. However, researchers can adjust sample size and sequencing depth to optimize the power of the study design. In addition, we calculated power for experiments with two experimental groups: control and treatment. The same strategy is also applicable to studies with multiple treatment groups; the main difference in the analysis would be the application of a Bonferroni correction to adjust the significance level for multiple testing. Our results provide a foundation for strategic optimization of study designs for mutation analysis.
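As a small illustration of the Bonferroni adjustment, this Python sketch splits α = 0.05 across k dose-versus-control comparisons and reports the corresponding two-sided critical z value under a normal reference distribution (an assumption for illustration; `bonferroni_critical_z` is a hypothetical helper). A higher critical value means each comparison needs a larger effect, or more animals or sequencing depth, to reach significance.

```python
from statistics import NormalDist


def bonferroni_critical_z(alpha=0.05, n_comparisons=1):
    """Two-sided critical z after splitting alpha across comparisons."""
    per_test_alpha = alpha / n_comparisons
    return NormalDist().inv_cdf(1.0 - per_test_alpha / 2.0)


for k in (1, 2, 3):
    print(k, round(0.05 / k, 4), round(bonferroni_critical_z(0.05, k), 3))
```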

4 CONCLUSIONS

Study designs should be based on the biological characteristics of the test system and the objective of the mutagenicity assessment. With DS, ethical considerations about animal use must be balanced against sequencing (cost) feasibility, anticipated effect size, and group variances. Our power analysis provides a basis for study design selection for liver and BM tissues in MutaMouse animals. We show that a variety of study designs can detect fold changes of 1.5 and 2. However, the routine detection of a 1.2-fold change is likely beyond our present capabilities. Curation of historical controls will be critical for DS analyses across different tissues, as it will enable more accurate estimates of background MF and sample standard deviation. Once enough data are collected, a similar power analysis could be done for mutation spectra. Overall, our study informs the sample size and the number of informative duplex bases needed per sample for DS study designs in MutaMouse BM and liver tissue, to promote regulatory testing using this powerful new technology.

ACKNOWLEDGMENTS

CLY is grateful for funding from the Natural Sciences and Engineering Research Council of Canada (RGPIN-2021-02806), with infrastructure support through the Canada Foundation for Innovation John R. Evans Leaders Fund (#233109), the Ontario Research Fund (#40569), and uOttawa. CLY acknowledges that this project was conducted thanks, in part, to funding provided through the Canada Research Chairs Program (CRC-2020-00060). CLY and FM are thankful for funding through the Burroughs Wellcome Innovations in Regulatory Sciences Award (#1021737). FM acknowledges support from Health Canada's Genomics Research and Development Initiative and the Chemicals Management Plan.

CONFLICT OF INTEREST STATEMENT

The authors declare that there is no conflict of interest.

Open Research

DATA AVAILABILITY STATEMENT

Data sharing is not applicable to this article as no new data were created or analyzed in this study.