Illuminating the collective learning continuum in the Colorado River Basin Science-Policy Forums

Abstract

Although considerable research over the past two decades has examined collective learning in environmental governance, much of this scholarship has focused on cases where learning occurred, limiting our understanding of the drivers and barriers to learning. To advance knowledge of what we call the “collective learning continuum,” we compare cases of learning to cases where learning was not found to occur or its effects were “blocked.” Through semi-structured interviews with key stakeholders in science-policy forums in the Colorado River Basin, a large and complex river basin in western North America, we examine differences and patterns that explain moments of learning, blocked learning, or non-learning, drawing insights from the collective learning framework. Our results find various factors that influence learning, blocked learning, and non-learning. We discover technical and social factors as common drivers of both learning and blocked learning. In contrast, we find more structural factors associated with non-learning. At the same time, the cases reveal insights about the role of political factors, such as timing, legal constraints, and priorities, which are underdeveloped in the collective learning framework. Overall, these findings advance theoretical knowledge of the collective learning continuum and offer practical insights that may strengthen the coordination of science and management for effective governance within the Basin.

1 INTRODUCTION

Collective learning is critical for our capacity to effectively govern dynamic and emerging environmental issues. It involves acquiring, translating, and disseminating new knowledge in governance processes, which may result in learning products, such as new collective actions, routines, or decisions (Heikkila & Gerlak, 2013). Learning supports the performance of environmental governance by fostering more sustainable behavioral changes (Newell et al., 2022) and bolstering the adaptive capacity of environmental governance institutions (Gupta et al., 2010). Government investments in environmental monitoring, science advisory groups, and program and policy evaluation further suggest that the benefits of collective learning are widely recognized and embedded within the design of governance systems.

Although considerable research over the past two decades has examined how collective learning happens in environmental governance (Gerlak et al., 2018), much of this scholarship has focused on the factors that shape where and when learning occurs (e.g., Koebele, 2019; Mukhtarov et al., 2019; Ricco & Schultz, 2019). This body of scholarship emphasizes different types of learning (Dunlop & Radaelli, 2018), as well as theories of how learning co-evolves with institutional structures and other elements of the governance system (Van Assche et al., 2022). However, there is a dearth of research analyzing where and when learning either does not occur (e.g., Dussauge-Laguna, 2012; Shipan & Volden, 2012) or where learning processes or products arise to some extent but are blocked. Such questions are of growing interest to scholars of learning across various policy and governance contexts (e.g., Dunlop, 2017a; Dunlop, 2017b; Howlett, 2021; Leong & Howlett, 2022; Van Assche et al., 2022), as a lack of learning may undermine effective governance processes and outcomes. Hence, theories of learning must be expanded to consider a continuum of learning, including the complete absence of learning (i.e., “non-learning”) and “blocked learning,” during which learning processes are triggered but information may not be translated or disseminated across the group or into action at the broader collective levels of governance. In addition to considering these types of learning, it is critical to identify factors that drive or inhibit learning, similar to Dunlop and Radaelli's (2018) approach to identifying triggers and hindrances in different learning contexts. Thus, we ask the following research question: What factors explain learning, blocked learning, and non-learning, and how do these factors vary across the continuum?

We adopt a qualitative, empirical approach to developing the continuum of collective learning based on interviews with governance actors from seven science-policy forums in the Colorado River Basin (CRB), a large and complex river basin in western North America. We ask diverse actors in the science-policy forums to describe examples of learning, blocked learning, and non-learning in order to identify major drivers and barriers across learning types. The following section lays out the theoretical foundation for our comparison of learning, blocked learning, and non-learning. We then provide a background on the CRB, justification for the selection of forums, and methods for collecting and analyzing interview data. Our results show the frequency of factors influencing each learning type (i.e., learning, blocked, and non-learning). Following a presentation of the findings, we offer a discussion and conclusion about this research's theoretical insights for learning in environmental governance, as well as practical implications for management within the CRB.

2 COLLECTIVE LEARNING IN ENVIRONMENTAL GOVERNANCE

2.1 The continuum of collective learning

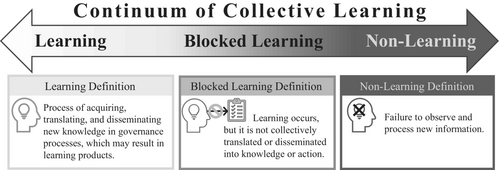

Theoretical guidance and empirical evidence of how and why learning does or does not occur are needed to advance knowledge of collective learning in environmental governance. The broader learning literature in public policy and governance often deals with learning as a binary concept, denoting its presence or absence (Rietig & Perkins, 2018) or recognizing different degrees or intensities of learning (Vagionaki & Trein, 2020; Zito & Schout, 2009). Yet, there are many instances in which learning does not occur or is not translated into decision-making or policy, though these are infrequently discussed in the literature (Dunlop & Radaelli, 2018; Heikkila et al., 2024). At the same time, not all collective learning is productive. Sometimes we learn the wrong lessons, whether unintentionally or intentionally, which can undermine our ability to govern effectively. In this vein, “dark learning” may occur when new knowledge is used to reinforce existing power structures and private self-interest rather than collective benefits (Howlett, 2021; Leong & Howlett, 2022; Van Assche et al., 2022). In this study, we focus on comparing more productive forms of collective learning, at one end of a continuum, to blocked and non-learning at the other end of the continuum (Figure 1) in order to expand both the theory and empirics of collective learning.

Figure 1 highlights the continuum of collective learning. Collective learning is observed through learning processes (e.g., acquiring, translating, disseminating knowledge) that may result in changes to collective beliefs or actions, such as policies or decisions. Blocked learning falls between learning and non-learning on the continuum, where some learning occurs, but it is not collectively translated or disseminated into knowledge or action (Vagionaki, 2018). On an individual level, blocked learning can occur when people acquire information but fail to update or translate that information into their policy beliefs or actions (Heikkila et al., 2020; Moyson et al., 2017), potentially as a result of their cognitive biases (Heikkila et al., 2024). On a collective level, blocked learning can occur due to power and resource asymmetries between those who learned and those with the authority to respond (Morrison et al., 2019). Or blocked learning may occur because of a failure to develop relationships among actors who contribute knowledge or have the authority to make decisions. Institutional arrangements may also influence the distribution of power, resources, and relationships, and thus the evolution of knowledge over time. Blocked learning suggests that individual learning is often not enough to induce collective understanding or change; instead, learning must permeate the thinking of key decision-makers with the appropriate authority to act (Zito & Schout, 2009).

Non-learning is at the opposite pole of learning, defined as a failure to observe and process new information (Leong & Howlett, 2022; Vagionaki, 2018). Non-learning can occur both at the individual level, when individuals fail to reflect on new information, or at the collective level, when decision-makers refuse to consider new knowledge (Jarvis, 2012; Rietig, 2021). Policymakers and institutions may be unwilling or unable to adapt to new information (Heclo, 1974; Leong & Howlett, 2022), or they may avoid engaging with a problem meaningfully, which undermines potential learning, whether consciously or unconsciously (Janis, 1972; Janis & Mann, 1977; Leong & Howlett, 2022). Non-learning can also be a function of systemic organizational and social structures that enable collective ignorance (Van Assche et al., 2022) or cognitive biases at the individual level (Heikkila et al., 2024). Policy failures may occur as an outcome when the processes of learning have failed (Dunlop, 2017a; Dunlop, 2017b; Leong & Howlett, 2022). Of course, learning is no guarantee that successful policies or governance decisions will result. Yet, productive forms of learning, relative to non-learning, can increase the capacity for governance systems to adapt to emerging problems and knowledge.

We argue that failing to distinguish between blocked learning and non-learning may mask underlying dynamics important to the overall environmental governance system. For instance, some might assume that an absence of change signifies a lack of learning. Yet, it is possible that some learning occurred, but the information from that learning was blocked from translation or dissemination. Additionally, environmental governance scholars must understand what drives these different types of learning. While scholars are increasingly interested in understanding different types of learning, empirical research on the factors that enable learning relative to blocked or non-learning is not well established. Thus, understanding how the presence or absence of particular factors explains the continuum of learning, blocked learning, and non-learning can build more robust learning theories.

2.2 A framework for explaining the learning continuum

To help explore the factors that explain the continuum of learning, we turn to research questioning why learning occurs, as well as emerging research on non-learning. To help organize these theoretical insights, we use Heikkila and Gerlak's (2013) collective learning framework, which identifies several types of contextual factors—categorized under social dynamics, structure, and technological domain—that can shape collective learning.

The social dynamics shaping learning can be seen as the nature of interactions among governance actors, including their levels of trust, conflict, or cooperation, and frequency of interactions (Heikkila & Gerlak, 2013; Newig et al., 2010). Certain interactions, including sharing knowledge, ideas, and cultural norms, as well as communication and open dialog between diverse actors, may foster collaboration, which helps actors learn from and with one another (Heikkila & Gerlak, 2013). Other learning scholars, particularly those studying dialectical learning, or learning that occurs through discussion and deliberation, similarly recognize how social interactions and the qualities of those interactions matter for supporting more productive forms of learning (Alta & Mukhtarov, 2022; Van Assche et al., 2022), and potentially for mitigating non-learning or blocked learning. These authors point to dialectical learning as the most important form of learning for policymaking in democratic societies.

The structural factors of a governance setting can also shape and co-evolve with learning. They may include the “rules of engagement” or institutions that define who can participate and in what form, thereby bounding the nature of interactions, the diversity of actors engaged, and their roles and authority (Heikkila & Gerlak, 2013; Newig et al., 2010). The structure of a collective group and its organization, including shared objectives and tasks, facilitates opportunities to acquire new and diverse information (Heikkila & Gerlak, 2013). Structural factors include the existence and arrangement of multiple policy forums within the governance system and their roles. While science-policy forums may primarily develop scientific and other relevant knowledge, they may also influence the networks among policymakers and scientists, which are a critical component of collective learning. Learning scholars widely recognize the importance of structure and institutional design in shaping learning (Dunlop & Radaelli, 2018; Lee & Ma, 2020; Wagner & Ylä-Anttila, 2020). In particular, the ways in which institutional structures, like polycentric systems, support the diversity of thinking and knowledge sources can shape the capacity for collective learning (Bousema et al., 2022; Castille et al., 2023; Rittelmeyer et al., 2024).

Finally, the technological domain of a governance setting can include the type of available information or data (e.g., from monitoring or tracking governance processes and outcomes), the nature of the issue being addressed, and the tools and resources for gathering and storing information (Heikkila & Gerlak, 2013). For instance, the level of uncertainty in a policy domain can shape problem tractability and the ways in which learning emerges in different decision contexts (Dunlop & Radaelli, 2018). Uncertainty within the technological domain can stem not only from substantive issues being addressed, but also from strategic and institutional processes (Leong & Howlett, 2022). However, functional processes within a governance setting, such as following adaptive management approaches to formalize knowledge sharing and assessment, can help reduce both uncertainty about the problem and uncertainty about how stakeholders respond (Kochskämper et al., 2021). Additionally, the technical expertise of actors is a large determinant of how a collective group can access new knowledge and data and, potentially, learn from it (Heikkila & Gerlak, 2013).

From a theoretical standpoint, these three categories of factors can be seen as potential drivers of, or barriers to, collective learning. Thus, we use the three broad categories—social, structural, and technological—to help organize our analysis of why learning, blocked learning, and non-learning occur, as well as to inductively identify more specific explanatory factors within these overarching categories, as discussed in more detail below.

3 STUDY SETTING

To illuminate the continuum of collective learning, we explore learning in seven science-policy forums in the CRB. The Colorado River provides water to seven U.S. states (Arizona, California, Colorado, Nevada, New Mexico, Utah, Wyoming) and two Mexican states (Baja California and Sonora), as well as 30 Indian Tribes (Figure 2). The river supplies more than 40 million people with water, in addition to supporting massive agricultural production, other industries, and a host of endemic species (Fleck & Udall, 2021). Hotter climate conditions and greater aridity, less precipitation, and drier soils are straining the Colorado River, its estuaries, and major reservoirs (Hoerling et al., 2019). The impacts are felt across the Basin and exacerbate the historical over-allocation of the river. Policies over-allocating the river's water date back to the 1922 Colorado River Compact, where an overestimated average annual flow was used as the basis for initial allocations to Basin states in the U.S. (Robison & Kenney, 2012).

(Source: USGS, 2016)

Due to this history and the nature of the CRB as a highly complex socio-ecological system, decisions made to improve one aspect of the system often have the potential to impact something else of value. For instance, more stored water may be withdrawn to maintain supplies during low-flow years, which reduces reservoir storage for future years and may limit hydropower generation or have negative environmental impacts. Consequently, the history of water politics in the CRB is often characterized as a “zero-sum” conflict at various scales of governance (Wescoat, 2023).

To overcome conflicts and take policy actions that support effective environmental governance, actors representing multiple perspectives often need procedural and structural arrangements to coordinate their activities (Bressers et al., 1994; Wiechman et al., 2023). Policy forums are venues where multiple agencies and actors can come together to discuss an issue without being focused on a single interest, such as an agency's mission (Fischer & Leifeld, 2015). They are not typically created for decision-making but rather for problem definition (with experts), implementation (with managers), or policy formulation (with executives). Different policy forums can, therefore, provide spaces for learning. For example, in recent decades, several forums focused on incentivizing collaboration among diverse actors and developing consensus-based solutions have arisen in the CRB, leading to the development of multiple policies aimed at increasing water sustainability (Karambelkar & Gerlak, 2020; Koebele, 2020, 2021).

Forums that focus on the intersection of science and policy are seen as particularly critical for learning in social-ecological systems (Gerlak et al., 2018). Prior research on governance forums in the CRB has found a recurrent emphasis on science-based decision-making to address specific resource challenges across programs (Gerlak et al., 2021). Even if learning occurs in these forums, however, their common lack of decision-making authority can block learning from translating into policy outputs, making them a particularly useful set of cases in which to explore the drivers of learning, blocked learning, and non-learning.

4 METHODS

4.1 Case selection

We purposively selected seven science-policy forums in the CRB using a three-step approach, adapted from Tambe et al. (2023), of pooling, clustering, and selecting cases. In the first step, we conducted a systematic web-based search for science-policy forums within the Basin. Our criteria for a science-policy forum were that: (a) the forum's mission or objectives refer to the use, monitoring, production, and assessment of science within the Basin; (b) the forum is a collaborative entity composed of multi-sector stakeholders and groups; and (c) information collected by the forum is used in policy formation, implementation, decision-making, or management.

We began with an initial pooling of 24 forums in the CRB that appeared to meet these criteria. As a second step, we reviewed gray literature, such as forum annual reports, meeting minutes, and news media to understand forum structure and characteristics. This allowed us to cluster the forums into four categories based on their structure, which included: (1) federally-initiated programs, (2) Tribal-led programs, (3) academic initiatives, and (4) funded pilot projects. In the final step, we elected to explore the federally initiated programs (n = 7) for this study because managing key issues in the Basin relies heavily on federal agency support, facilitating a “most similar case” study design. Federally initiated programs can be formed in response to either a legislative act, a multi-party or cooperative agreement, or a U.S. Department of the Interior Record of Decision. Thus, our selected seven cases are similar in terms of their science-policy interface and federal role, but we expect them to differ widely across the continuum of learning they experience due to factors such as their longevity, the specific actors involved, and the issues at the core of their mission.

These seven cases include (1) the Colorado Salinity Control Program (Salinity Program), (2) the Upper Colorado River Endangered Fish Recovery Program (Upper Colorado River Program), (3) the San Juan River Basin Recovery Implementation Program (San Juan Program), (4) the Glen Canyon Adaptive Management Program (Glen Canyon Program), (5) the Lower Colorado River Multi-Species Conservation Program (Lower Colorado River Program), (6) the Gunnison Basin Selenium Management Program (Gunnison Selenium Program), and (7) the Colorado River Delta Environmental Work Group (Environmental Work Group). Additional information and characteristics of each program are shown in Table A1. The oldest program we studied, the Salinity Program, was established in 1974, while the newest program, the Environmental Work Group, launched in 2012. Each program involves various partners, including federal agencies, academic institutions, Native American Tribes, conservation groups and non-profit organizations, power companies, state agencies, and water users. All programs were established to manage ecosystems or species and conduct research activities within the Basin.

4.2 Data collection and analysis

Our unit of analysis for this study is a learning, blocked learning, or non-learning “moment” within any of the forums, as identified by key informants involved in the forums. We define a learning moment as either a process or product of acquiring, understanding, and disseminating new knowledge among policymakers, managers, and other key stakeholders (Heikkila & Gerlak, 2013). This provides a novel way of evaluating the learning continuum. A blocked learning moment was operationalized as an example where interviewees described some learning but also identified internal or external factors blocking dissemination or potential action or change. However, if a learning process occurred and decision-makers decided that this information reinforced the need to keep current management actions as they were and no action needed to be taken, then we considered this a learning moment, not blocked learning. We classified an example as a non-learning moment when the interviewees noted the value of studying a specific phenomenon, but some factors (e.g., lack of forum funding or conflicting stakeholder interests) inhibited that learning (Birkland, 2006; Gerlak & Heikkila, 2011).

To gather this information, we conducted semi-structured interviews with two to four key informants within each forum (N = 21). We purposively sampled expert stakeholders across the programs, including scientists and administrative personnel, to gain their perspectives on how science or knowledge impacted collective action within the CRB. Interview participants included representatives from federal agencies, state agencies, non-profit organizations, and academic institutions. Some interview participants were involved in multiple forums. Interviews took approximately 45 minutes each and were conducted via Zoom from January 2023 to July 2023. Interviewee codes and the program they represented are shown in Table B1. This research was approved by the University of Arizona Institutional Review Board (IRB).

Interviewees were asked to “talk through a specific example or two of science impacts from [forum x's] work that contributed to learning in the forum or in the basin” and “to think of any examples of where [forum x's] science activities didn't lead to an impact on learning or other outcomes and describe what happened or what impeded learning.” These prompts elicited learning, blocked, and non-learning moments. See Appendix C for the interview questionnaire. Our interviews aimed to hear about key moments related to aspects of the learning continuum that were highly salient to interviewees, including the factors that may have influenced the learning outcomes.

We analyzed the descriptions of learning, blocked, and non-learning within the forum using an abductive coding approach (Moyson et al., 2017; Rietig, 2021). Abductive reasoning is a methodological approach that combines inferences from both inductive and deductive reasoning, allowing us to build our own theory while using pre-identified themes from the literature (Vila-Henninger et al., 2022). Abductive coding starts with a theoretical framework to structure the coding process but is also open to refining the theory and coding process based on emerging results. We began by coding participants' examples into “learning,” “blocked learning,” and “non-learning” moments. We recognize that asking participants about their perceived learning moments may be different from what was learned by the forum they represent. We attempt to mitigate this bias by triangulating the findings from forum documents, information from web-based searches, and other forum participant interviews. However, we also recognize that validating the blocked or non-learning moments is harder as they may not be directly reported.

Three coders participated in the process. Coder #1 summarized qualitatively the explanations interviewees gave for “why” learning, blocked, or non-learning occurred. Coders #2 and #3 worked together to review the first round of coding to indicate agreement among codes. In the second coding round, Coders #2 and #3 identified emergent themes. These themes were considered the drivers of, or factors related to each learning, blocked, or non-learning moment. Finally, Coder #1 verified and coded the remaining cases using the same emergent themes identified by Coders #2 and #3 and any additional themes. This iterative coding process supports the credibility and reliability of the data. We utilized the three main categories from the collective learning framework that explain learning—social, structural, and technological (Heikkila & Gerlak, 2013)—as an overarching guide to organize the explanations for why learning did or did not occur.

5 RESULTS

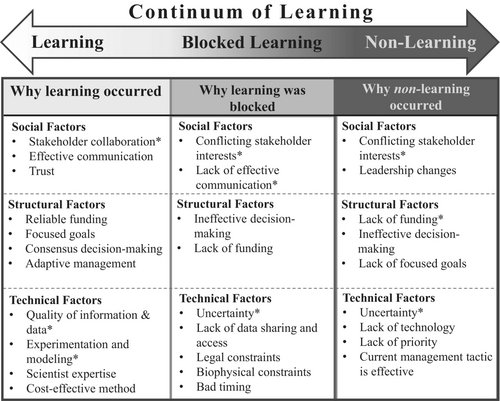

Below, we discuss our results in three sections based on the collective learning continuum: learning, blocked learning, and non-learning. Figure 3 summarizes the overall findings. Figures 4, 5, and 6, as well as Tables D1 and E1, provide additional information on these factors and their frequencies.

5.1 Why did learning occur?

We identified 40 learning moments across the 21 interviews. These focused on a range of scientific and management issues, such as a new understanding of biophysical systems, how the science-policy forums could improve management strategies, or how these strategies were affecting ecosystems.

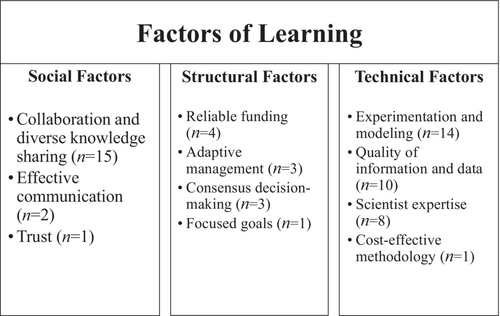

Across these interviews, we identified 63 total explanations for why learning occurred, which overlap thematically. We then categorized the explanations for learning according to the three overarching themes in the collective learning framework: social, technical, and structural factors (Gerlak & Heikkila, 2011; Heikkila & Gerlak, 2013) (Figure 4). We also found one factor that did not fit into one of the three themes that we refer to as an “external political” factor.

Most of the explanations for learning were categorized as technical factors (33 out of 63), but social (18 out of 63) and structural factors (11 out of 63) explain the presence of learning as well. Overall, the most common sub-themes describing why learning occurred included collaboration and diverse knowledge sharing (n = 15), experimentation or modeling efforts (n = 14), and the quality of information or data (n = 10). We also note that these factors do not occur independently. Interviewees described multiple types of factors in relation to a given learning moment.
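As a quick arithmetic check on the counts above, the following minimal Python sketch tallies the reported explanations by category and converts them to shares of the total. The technical, social, and structural counts are taken from the text; the count of 1 for the “external political” category is inferred here from the remainder (63 minus 62) and is an assumption, as are the variable and function names, which are purely illustrative.

```python
from collections import Counter

# Counts of explanations for learning, by category.
# The first three values are reported in the text; the "external political"
# value of 1 is an inference (63 total minus 62 categorized), not a
# figure stated directly by the authors.
explanations = Counter({
    "technical": 33,
    "social": 18,
    "structural": 11,
    "external political": 1,
})

total = sum(explanations.values())


def shares(counts):
    """Return each category's share of all explanations, as a percentage."""
    n = sum(counts.values())
    return {category: round(100 * count / n, 1) for category, count in counts.items()}


if __name__ == "__main__":
    print(f"total explanations: {total}")
    for category, pct in shares(explanations).items():
        print(f"{category}: {pct}%")
```

Under these assumptions, technical factors account for a little over half of all explanations, which is consistent with the statement that most explanations were technical.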

Learning moments included examples of discovering new sources of biophysical or chemical properties within a habitat. We see this most prominently in the Salinity Program and Gunnison Selenium Program. For instance, in the late 1970s and early 1980s, the Salinity Program questioned whether salt loads were depositing into Lake Mead from hot springs in St. George, Utah, but at the time, there was no funding to investigate it. About a decade ago, as the St. George area began to urbanize, the Salinity Program studied the connectivity between the St. George hot springs and Lake Mead. Program scientists learned that the two are connected via the Virgin River Gorge. In partnership with local water districts, they are now investigating how to capture the groundwater before it discharges to the Virgin River, as well as other water treatment options (Interviewee #3). Learning occurred in this example for two related reasons. First, the program was able to run the study and monitor the salt loads from the hot springs and the river connecting to Lake Mead. Second, although a lack of funding hampered research when the question was first proposed, funding eventually became available and researchers were able to conduct the study. An interviewee with the Salinity Program stated it this way: “I think that we're often wondering about things, but either funding, or timing, or the hydrology may not be right at a given time, but later we reexplore” (Interviewee #3).

Similarly, the Gunnison Selenium Program learned about new detections of selenium within the Basin. The U.S. Geological Survey (USGS) regularly performs selenium monitoring efforts. Interviewee #20 attributed learning about the sources of selenium in the system (e.g., via leaking septic systems) and how it is transported into the system (e.g., irrigation methods) to the collaboration of the Program's various stakeholders, as well as the expertise of scientists with the USGS.

Another example of a learning moment is learning how endangered species exist in a particular system and interact with other species. This was highlighted in the Glen Canyon Program when it was discovered that endangered fish populations in the river were limited due to the low diversity of aquatic insect prey in their habitat. The Program studied the food web systems within the Glen Canyon Dam and discovered a linkage between the dam operations and hindrances to aquatic insects and their breeding conditions (Interviewees #11 and #12). In 2016, the Program suggested mitigating egg mortality and low species diversity by providing stable and low flows during the weekends. One interviewee explained, “The science was compelling enough that it was on the menu of experiments under the 2016 Environmental Impact Statement” (Interviewee #11). In 2018, the Program tested the strategy and found a significant increase in aquatic species in the system and continued the management actions in 2019 and 2020.

The Glen Canyon Program learned through experimentation. “We had years of monitoring research that said, ‘let's try this experiment,’ where if we change dam operations slightly, we may be able to find a way to increase the production of invertebrates that a lot of the fish species rely on” (Interviewee #12). Learning about the food web system and dam operation impacts also transpired from stakeholder collaboration. “The program involves multiple tribes, recreational voters, recreational anglers, State wildlife management, Arizona Game and Fish, and the State water resource agencies for the seven Basin States. It's a whole group of stakeholders that ultimately makes these recommendations” (Interviewee #12). Another Glen Canyon interviewee mentioned, “We have a dedication to achieve this science standard. This standard requires stakeholders to hold the science accountable” (Interviewee #10).

We did a lot of experimenting and a lot of removals… At the end of the day, we realized we can't have an effect on this population [Channel catfish] right now with this effort. We came to the decision that we are not going to do anything about the non-native fish until we develop a different methodology to try to deal with them.

Learning about management strategies in this example was driven by a few factors. First, the San Juan Program stakeholders agreed that the current strategy was not effective and decided to try other techniques. Both consensus decision-making and adaptive management help explain why they learned. The stakeholders of the San Juan Program must generally agree to move forward with a management action or change (Interviewees #7 and #8). Specifically, Interviewee #7 commented, “We operate by a two-thirds majority. The majority is on board with this path [the decision to stop with Channel catfish removal].” In addition, Interviewee #7 stated, “We don't have a formal adaptive management plan, but we are conducting research, and we use that information to drive decisions.”

Similarly, the Upper Colorado River Program leaders learned about a management strategy to improve the protection of the endangered razorback sucker. One such strategy is to alter water flows within the system and provide a channel for the endangered species (Interviewee #4). A study with partners at Colorado State University and the U.S. Fish and Wildlife Service showed they struggled with larval fish growing to an adult stage. The researchers experimented with altering the Flaming Gorge Reservoir's flow releases by waiting until the larval fish entered the river and then pulsing them into nearby wetlands, where they are less subject to predation. By doing so, the fish had more food options, could grow larger, and could be released back into the river (Interviewees #4 and #6). Through this study, program officials learned that the timing of the dam operations and flows needed to be altered for the larvae to enter the system and then be relocated to the wetland habitat (Interviewees #4 and #6). Since 2012, the program has used this methodology. Interviewee #6 states, “It was not a big shift. We basically just changed the timing of the flow to be more appropriate to the biology of the species.”

In this learning moment, many factors were at play. First, learning to improve the management strategy happened through experimentation by the Upper Colorado River Program partners. Second, similar to the San Juan Program, the Upper Colorado River Program also runs on consensus decision-making, which led to the decision to experiment with dam operations and flow alterations. Third, the experiment was conducted in collaboration with Colorado State University and U.S. Fish and Wildlife Service researchers. Fourth, the scientists showed effective communication when reporting the findings to the rest of the program partners. Interviewee #6 specified, “The researchers who are involved are good communicators in terms of trying to narrow down what their findings were.”

5.2 Why was learning blocked?

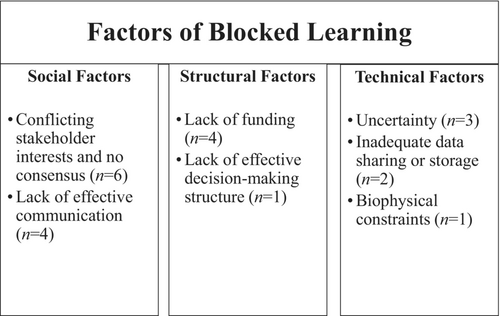

Although it was more common for interviewees to describe examples where learning occurred within the forum, we identified 19 blocked learning moments across the 21 interviews, associated with 24 explanatory factors that overlap thematically (Figure 5). Overall, the blocked learning examples included instances where a learning process occurred, but some factor(s) blocked the dissemination of ideas in the learning process or blocked learning products (e.g., uptake of new knowledge or subsequent action). However, if a learning process occurred and decision-makers decided that this information reinforced the need to maintain the status quo, we considered this a learning moment.

With blocked learning, participants may have gained new knowledge of a biophysical or chemical property within the system, but either uncertainty about the results or a lack of funding prevented action on what was learned. Social factors (10 out of 24 times) were the main reasons for blocked learning in the forums (Figure 5). We also identified two additional factors in blocked learning that fall outside our categories: legal constraints and bad timing.

Conflicting stakeholder interests and beliefs, a social factor, is the most common explanation for blocked learning. Here, some learning may occur within a forum, but a lack of agreement among stakeholders prevents its translation into management outcomes. We see an example of this from the Lower Colorado River Program. From a two-decade-long study, the Lower Colorado River Program learned that non-native sportfish consume 90% of native fish. However, the program has no interest in controlling sportfish because they are highly desirable to angler communities (Interviewees #14 and #15).

There's way more science done, and it never gets applied to policy. I think that's true everywhere. Not just in the Delta… As an example, the Sonoran Institute [Environmental Work Group stakeholder] has done a lot of data collection in the estuary, and we haven't seen much of an impact. We can't point to the benefits from the little bit of water that gets that far. So, to some extent, we're constrained.

Finally, lack of funding is the most cited structural factor blocking learning. This can be seen in the Lower Colorado River Program, where one of the main methods for controlling sportfish is through non-native removals. However, Interviewees #14 and #15 note that “sportfish are just so prolific, and they occupy every little niche within the corridor… We have a limited amount of funds in the program. What are things we can do to help conserve the [endangered] species? And where is the money best prioritized, and which action should you take?” In this case, limited program funding prevented the non-native removal management strategy.

We don't have a lot of science behind it. I don't know what our actions would be. Would it be to build another Lake Powell or another Lake Mead so we could sequester more salt? I don't know. It's not because we're dodging the science, but rather we're looking at it and saying, ‘this is interesting, intriguing, but what do we do with it?’

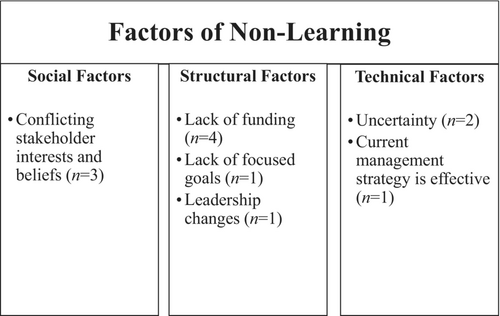

5.3 Why did non-learning occur?

On the opposite side of the continuum from learning, we identified 10 non-learning moments from the 21 interviews, with 13 overlapping explanatory factors. Non-learning examples were mentioned in all the forums except for the Delta Environmental Work Group, where interviewees only shared examples of blocked learning. We find an additional factor outside of our categories identified in non-learning: “lack of priority”.

We assessed why there was non-learning. As we did for the learning and blocked learning moments, we identified factors that prohibited learning in the forums and categorized them into social, technical, and structural factors (Figure 6). Structural factors were the leading indicators of non-learning (6 out of 13), ahead of social and technical factors, a pattern that differed from the learning moment examples. Three themes were common to the blocked learning and non-learning moments: a lack of funding, conflicting stakeholder interests and beliefs, and uncertainty.

Lack of funding (structural factor) is the most common reason (4 out of 13) for non-learning. For example, as discussed previously in the Upper Colorado River Program, there is not much new science due to limited funding. Since they funded the larger Colorado State University and U.S. Fish and Wildlife Service research projects 10–15 years ago, they have not had the budget for new science projects (Interviewee #5). Interviewee #5 indicates, “It's hard to have enough funding to be able to keep research moving forward and having us take those next steps that we're interested in or other kinds of novel techniques to eradicate non-native fish.” Additionally, forum leaders believe they already have effective management strategies in place (technical factor) (Interviewee #5), and, thus, no new science is perceived as necessary, which further explains non-learning in this case.
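The frequency comparisons reported in this section reduce to simple shares of coded factors. As a minimal sketch (the dictionary layout, the labels, and the `share` helper are illustrative assumptions, not part of the study's coding procedure), the counts stated in the text can be converted into percentages as follows:

```python
# Counts copied from the text as (times cited, total explanatory factors).
# The labels and structure are hypothetical and used only for illustration.
reported = {
    "blocked learning: social factors": (10, 24),
    "non-learning: structural factors": (6, 13),
    "non-learning: lack of funding": (4, 13),
}


def share(count, total):
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


# Compute the share of explanatory factors for each reported count.
shares = {label: share(count, total) for label, (count, total) in reported.items()}

for label, pct in shares.items():
    print(f"{label}: {pct}%")
```

These shares describe only how often a factor was cited. They do not indicate how much a given moment mattered for management of the Basin, so they are best read alongside the interview evidence rather than as a ranking of importance.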

Stakeholders contribute funding to the program…We spend our time, money, and resources on the most important thing. But then, when you start talking about importance, that becomes a contention between stakeholders of different opinions… So, there is a tension there, and sometimes we do a very bad job of spending too much time just learning things and not enough time learning things that matter about how to manage the system better.

6 DISCUSSION

In this study, we set out to understand the continuum of learning in environmental governance by analyzing moments of learning, blocked learning, and non-learning (Figure 1). We studied seven federally-initiated science-policy forums in the CRB to provide the first empirical evidence exploring this continuum. We focus here on the differences and patterns that explain moments of learning, blocked learning, or non-learning across science-policy forums (Figure 3).

One of our key findings is that the presence of certain factors supports learning, while the absence of those same factors can block learning or lead to non-learning. For example, as Figure 3 illustrates, among the social factors, stakeholder collaboration plays a key role in supporting learning moments. At the same time, the absence of effective collaboration is associated with blocked and non-learning due to conflicting stakeholder interests and beliefs. Likewise, with respect to technical factors, the quality of the information and data was imperative, but the uncertainty of the data or results blocked further actions or caused non-learning.

When looking at the explanatory factors relative to one another, based on their frequency, we also discovered that technical factors, such as experimentation, were more common than social or structural factors in instances of learning (Figure 4). It is not surprising that technical factors support learning in these forums, as high-quality science demands high-quality data, methods, and approaches that later support decision-making. Investments in novel sources of information can be key to promoting learning and overcoming barriers to learning. Prior research has found that learning can be enhanced in governance structures that facilitate the active pursuit of novel sources of information (Bodin, 2017). Others highlight the importance of experimenting with scenario-planning tools to bring in new sources of information and explore the disparity between what is known and what needs to be known for decision-making (Ben-Haim, 2019; Haasnoot et al., 2018). At the same time, technical factors rarely operate in isolation. That is, they may be necessary, but insufficient factors in supporting learning.

In addition, we discovered that structural factors, such as lack of funding and lack of focused goals, were the most frequent factors associated with non-learning (Figure 6). However, structural factors were not commonly cited factors for learning and blocked learning (Figures 4 and 5). This suggests that on most occasions, it is not that the forums did not have the technical capacity to conduct and inform science, but rather, the forum's structure inhibited learning opportunities. Moreover, structural factors, like limited funding, intersect with external political factors, such as legal constraints that the programs must abide by or a lack of prioritization for certain types of learning. At the same time, key technical and social factors also worked in tandem with the structural factors in the non-learning moments. This aligns with recent scholarship that highlights the interdependencies between actors and institutions, along with the goals that are set and funding arrangements (Bousema et al., 2022; Van Assche et al., 2022).

We also found a variety of factors to explain blocked learning, which is perhaps the most under-theorized area of the proposed continuum. The most common social factors of blocked learning are conflicting stakeholder interests and beliefs and a lack of effective communication. These findings suggest that governing systems must recognize the pre-existing social and cultural dynamics inherently brought into collaborative forums, even in these more technical science programs, to overcome blocked learning. This is where dialectical or social learning approaches could help overcome blocked learning by emphasizing more intentional dialog and discussion among actors, thereby building capacity for learning (Pahl-Wostl et al., 2007; Van Assche et al., 2022). Doing so may help reveal intentional efforts that may be at play to undermine learning (Leong & Howlett, 2022). It may also reveal mismatches in the “structuring of organizational and social identities” (Van Assche et al., 2022: 1220) that can produce blocked or non-learning. For instance, especially in venues that require a high degree of consensus to move forward with a decision, stakeholders must often negotiate and make tradeoffs. This means that the same learning moment may be viewed as learning by the majority of stakeholders and blocked or non-learning by the minority with conflicting beliefs (Dunlop & Radaelli, 2018).

Uncertainty is the most common technical factor for blocked learning, meaning there were instances when science was conducted, but appeared to offer no clear conclusions for management or policy. These findings raise questions about whether or to what extent such uncertainty is used intentionally to block policy learning, a topic of long-standing interest in environmental policy (Sarewitz, 2004; Wynne, 1992), and policy learning more broadly (Dunlop & Radaelli, 2018; Leong & Howlett, 2022; Nair & Howlett, 2017). Alternatively, it may point to the need to redefine uncertainty as a source of knowledge and a mechanism to inform adaptive management and learning (Bradshaw & Borchers, 2000). Further, uncertainty can be seen as more than a lack of knowledge about a particular issue; instead, uncertainty may be related to the unpredictability of strategies deployed or institutional uncertainty that arises due to the complexity of interactions (Leong & Howlett, 2022; Nair & Howlett, 2017), further highlighting the intersection of different factors including the technical, social, and structural.

In addition to the social, technical, and structural factors, we found a few themes that emerged from the inductive coding process that did not readily fit into our three overarching categories. These themes fell into their own category, which we label “external political” factors. These included timing, which positively influenced learning. For example, in the Salinity Control Program, there were research needs approximately 10 years prior for monitoring salinity in hot springs in St. George, Utah. However, cost-effective methods for monitoring and management priorities for this initiative were lacking at the time. Ten years later, as the area began to rapidly urbanize, the research and learning proceeded. The appropriate time to advance the study was an exogenous factor that influenced learning, while permission to proceed with the project at the correct time was controlled by conditions outside of the program. Another example where external political factors led to blocked learning comes from the Glen Canyon Program. Members mentioned a moment when learning occurred, but because of outside legal constraints, the management recommendations were never implemented. An external political factor, which we call lack of priority of an issue, also contributed to non-learning. Here, actors in the Salinity Control Program identified certain research needs, but because these needs do not rank as high as others, no work is being conducted, and no learning is occurring.

These external political factors coincide with the growing scholarship on how exogenous factors—possibly political, social, and economic changes—influence collective learning processes (Heikkila & Gerlak, 2013). We consider each of these subthemes as external political factors because they are indicators prompted by outside individuals or groups that impact the learning process or outcome—all outside of the control of the actors involved in the collective. Researchers have found that external forces, like external funding and staffing, can greatly enhance learning (Crow & Albright, 2019). Similarly, Bousema et al. (2022) found that organizational interdependencies around funding can support learning in a polycentric context. Also, in her study of climate negotiations, Rietig (2019) found timing to be a key external factor promoting learning. Other researchers emphasize the importance of the ongoing role of external factors in both promoting and inhibiting learning (De Voogt & Patterson, 2019). Such factors may help us better understand what conditions might be important in fostering a transition from non-learning or blocked learning to learning, as well as what conditions can increase the likelihood of non-learning or blocked learning.

Finally, we recognized some empirical challenges in discerning learning from blocked and non-learning. For example, we observed that forum members may have learned something but then realized that the action the research pointed to was highly contested. In cases like this, we did not consider the moment blocked learning merely because the action or response was halted. Rather, we interpreted these actions to fall on the learning side of our continuum. For example, the USGS and other members of the Salinity Control Program learned from their research results that no action needed to be taken and that their current management approach was effective. Therefore, subsequent steps were unnecessary. Thus, learning can occur when forum actors become aware that certain information is irrelevant or possibly not valuable to the forum. However, we cannot say whether such learning is productive overall for the collective good in the system. That is, it is difficult to know when governance actors are learning the wrong lesson.

7 CONCLUSION

In this paper, we illuminate the continuum of collective learning in environmental governance, using cases from science-policy forums in the CRB. We contribute to collective learning theory by studying cases where learning occurred, was blocked, or did not occur, and highlighting factors explaining why these cases emerged. These theoretical insights highlight the role of social, structural, and technical factors in learning, blocked learning, and non-learning, as well as the rich interactions of these factors. While our findings highlight how the factors that predict learning are absent as governance actors move towards non-learning, we also find different drivers of non-learning versus learning, such as external political factors. This suggests the need to build theory around how external political factors outside of the collective learning processes may promote or hinder learning. These findings inform the collective learning framework by examining how social, technical, and structural factors not only influence cases of learning but also how their absence (especially social and technical factors) can impede learning. At the same time, the external political factors, which are identified in the collective learning framework, have largely been under-developed. These findings underscore the need to have a ripeness or window of opportunity in the management or policy system for learning to take hold—similar to theories of agenda setting and policy change. While we used the collective learning framework to guide our analysis, our findings also speak to the broader literature on policy learning and the emerging scholarship around non-learning. In particular, we offer novel empirical evidence of blocked learning and non-learning and connect them to factors such as uncertainty and conflicting stakeholder interests.

Understanding how to effectively translate and disseminate knowledge across collective governing systems is crucial for policy and management actions. Our findings provide several practical implications for the management of the CRB. First, funding supports the production of actionable science, just as the lack of funding can undermine learning. As the CRB faces increasingly rapid socio-environmental change, additional financial investments in the basin will likely be needed. Second, while CRB governance has been recognized for becoming increasingly collaborative, investments in the institutional infrastructure of science-policy forums are needed. For instance, developing forums that invest in relationships and trust-building among stakeholders may help address barriers such as a lack of focused goals or a lack of clear communication. Finally, it is critical to examine the science-policy forums in the Basin as a system—no one forum can or should meet all the science needs of the Basin. However, enhancing coordination among the various governance forums, including those not primarily focused on the production of science, may strengthen the ability to share funding and knowledge, promote effective collaboration, and otherwise foster learning across the governance system.

We recognize that this study does not come without shortcomings. First, the continuum of learning is much more multifaceted than we have portrayed, and we acknowledge that learning cannot be entirely simplified into our three categories. Future work could look at additional forms of learning to test whether the patterns we find along the continuum hold. Second, we were unable to interview all participants within each forum. With additional interviewees in each forum, we may have identified other forms of learning and additional examples of learning, blocked learning, and non-learning. Third, we recognize the risk of certain biases that come along with participants' self-reporting their interpretation of learning. Interviews from additional forum participants to gain further insight into the governing organization's collective learning could prevent this bias. Lastly, we highlight that reporting the frequencies of learning, blocked learning, and non-learning moments may fail to capture the varying importance of certain learning, blocked, or non-learning moments over others regarding their impact or contributions to CRB management.

In future research, an important next step is understanding how factors that explain why learning occurs can interact, trigger one another, or co-evolve over time. This would require researchers to drill down into a particular case and follow a process tracing approach. Although the approach of exploring multiple learning moments across forums in this paper prevented such an examination, taking a deeper, longitudinal dive into key cases across the continuum of learning can offer rich theoretical insights for learning in environmental governance. In addition, we suggest further research into studying what leads to non-learning. It might be a lack of recall and specificity on behalf of the interviewees or a lack of structural and social processes to both process new knowledge and produce new learning products. Uncovering these obstacles to reflection is likely an important part of the puzzle of why and where non-learning is occurring (Rietig & Perkins, 2018). Finally, we did not assess the quality of the normative learning outcomes, which could have been productive/positive or non-productive/negative (Dunlop & Radaelli, 2018; Leong & Howlett, 2022). Future research could assess the quality of the ultimate policy or decision to further connect the learning process to policy outputs. Overall, the findings discussed in this study can provide insight into best learning practices for natural resources decision-makers, practitioners, and researchers, especially those managing complex social and ecological systems like those in the CRB.

ACKNOWLEDGMENTS

We would like to acknowledge the Udall Foundation for funding this work, and the various forum participants for taking the time to provide their perspectives and insight in the interviews.

CONFLICT OF INTEREST STATEMENT

The authors declare no conflict of interest.

APPENDIX A

| Forum | Establishment year | Establishment law or policy | Geographic scope | Program partners | Program partner types | Science activities |
|---|---|---|---|---|---|---|
| Colorado River Basin Salinity Control Program | 1974 | Colorado River Basin Salinity Control Act (1974) | Entire Colorado River | Bureau of Reclamation (a, b), Bureau of Land Management (a, b), US Geological Survey (b), US Fish and Wildlife Service (b), Natural Resources Conservation Service (a, b), US Environmental Protection Agency, States of Arizona, California, Colorado, Nevada, New Mexico, Utah, and Wyoming | Federal Agencies, State Agencies | Salinity monitoring |
| Upper Colorado River Endangered Fish Recovery Program | 1988 | Cooperative Agreement for the Recovery Implementation Program for Endangered Fish Species in the Upper Colorado River Basin | Upper Colorado River Basin above Glen Canyon Dam, excluding San Juan River sub-basin | State of Colorado (b), State of Utah, State of Wyoming, Bureau of Reclamation, Colorado River Energy Distributors Association, Colorado State University (b), Colorado Water Congress, National Park Service, The Nature Conservancy, US Fish and Wildlife Service (a, b), Utah Water Users Association, Western Area Power Administration, Western Resource Advocates, Wyoming Water Association | Academic, Conservation Groups, Federal Agencies, Power Companies, State Agencies, Water Users | Endangered fish species population monitoring and estimation and their interactions with Roller Dam operations |
| San Juan River Basin Recovery Implementation Program | 1992 | Endangered Species Act compliance on Bureau of Reclamation 1991 Animas-La Plata Project and Cooperative Agreement for Endangered Species in San Juan River Basin | San Juan River (tributary of the Colorado River) and sub-basin | State of Colorado (b), State of New Mexico (b), Jicarilla Apache Nation (b), Navajo Nation (b), Southern Ute Indian Tribe (b), Ute Mountain Ute Tribe (b), Bureau of Indian Affairs (b), Bureau of Land Management, Bureau of Reclamation (b), The Nature Conservancy (b), US Fish and Wildlife Service (a, b), Utah State University (b); Water Development Interests | Academic, American Indian Tribes, Conservation Groups, Federal Agencies, Power Companies, State Agencies, Water Users | Endangered fish species hatchery development and population and life stage monitoring |
| Glen Canyon Adaptive Management Program | 1997 | Grand Canyon Protection Act (1992); U.S. Department of Interior Record of Decision (1996) | Colorado River between Glen Canyon Dam to the western boundary of Grand Canyon National Park | Bureau of Reclamation (a), Bureau of Indian Affairs, Colorado River Board of California, Colorado River Commission of Nevada, Colorado River Energy Distributors Association, Colorado Water Conservation Board, Grand Canyon River Guides, Hopi Tribe, Hualapai Tribe, National Park Service, Navajo Nation, Pueblo of Zuni, Southern Paiute Consortium, Southeast Rivers, State of Arizona, State of New Mexico, State of Utah, Trout Unlimited, U.S. Fish and Wildlife Service (b), U.S. Geological Survey (b), Western Area Power Administration, Wyoming Interstate Streams Engineer | American Indian Tribes, Conservation Groups, Federal Agencies, Power Companies, State Agencies, Water Users | Varies depending on the stakeholders' goals; previously and currently studying aquatic food webs, native and non-native fish species, and conducting high-flow experiments to create sandbars |
| Lower Colorado River Multi-Species Conservation Program | 2005 | Multi-party Agreements (1994, 1995, 1996, 1997); U.S. Department of the Interior Record of Decision (2005) | Lower Colorado River Basin; 400 miles of the Colorado River from Lake Mead to the Southern International Border | Approximately 56 members; member categories are listed below. Federal Participant Groups, including Bureau of Reclamation (a) and U.S. Fish and Wildlife Service (b) (n = 6), Arizona Participant Groups (n = 26), California Participant Groups (n = 12), Nevada Participant Groups (n = 5), Native American Participant Groups (n = 3), National Conservation and Other Interested Participant Groups (n = 4) | American Indian Tribes, Conservation Groups, Federal Agencies, Power Companies, State Agencies, Town entities | Fish augmentation, species monitoring, system monitoring, and conservation area development and management |
| Gunnison Basin Selenium Management Program | 2009 | Gunnison Basin Programmatic Biological Opinion issued by the US Fish and Wildlife Service directed Reclamation to develop the Program | Gunnison Basin | Bostwick Park Water Conservancy District, Bureau of Reclamation (a), Bureau of Land Management, Colorado River Water Conservation District, Colorado Water Conservation Board, Delta Conservation District, Natural Resources Conservation Service, Shavano Conservation District, Uncompahgre Valley Water Users Association, Upper Gunnison River Water Conservancy District, U.S. Fish and Wildlife Service, U.S. Geological Survey (b) | Conservation Groups, Federal Agencies, State Agencies, Water Users | Selenium monitoring |
| Colorado River Delta Environmental Work Group | 2012 | Minute 317 (2010); Minute 319 (2012); Minute 323 (2017) | Lower Colorado River Basin and the extent of the Colorado River within Mexico | For the United States: Environmental Defense Fund, National Audubon Society, Sonoran Institute, Colorado River Basin States, The Nature Conservancy, University of Arizona (b), Bureau of Reclamation (a), U.S. Geological Survey (b). For Mexico: El Colegio de la Frontera Norte, Comisión Nacional de Áreas Naturales Protegidas, Comisión Nacional de Agua, Pronatura Noroeste, Universidad Autónoma de Baja California (b) | Academic, Conservation Groups, Federal Agencies, State Agencies | Monitoring and management of environmental restoration sites and species in the Colorado River Delta; occasional high-release flow experiments |

- a Implementing agency.

- b Agency conducting science activities.

- Source: Adapted from Gerlak et al., 2021.

APPENDIX B

| Interviewee code | Science enterprise venue represented | Date interview conducted |
|---|---|---|
| Interviewee 1 | Colorado River Basin Salinity Control Program | January 2023 |
| Interviewee 2 | Colorado River Basin Salinity Control Program | February 2023 |
| Interviewee 3 | Colorado River Basin Salinity Control Program | February 2023 |
| Interviewee 4 | Upper Colorado River Endangered Fish Recovery Program | February 2023 |
| Interviewee 5 | Upper Colorado River Endangered Fish Recovery Program | February 2023 |
| Interviewee 6 | Upper Colorado River Endangered Fish Recovery Program | February 2023 |
| Interviewee 7 | San Juan River Basin Recovery Implementation Program | March 2023 |
| Interviewee 8 | San Juan River Basin Recovery Implementation Program | April 2023 |
| Interviewee 9 | San Juan River Basin Recovery Implementation Program | April 2023 |
| Interviewee 10 | Glen Canyon Adaptive Management Program | January 2023 |
| Interviewee 11 | Glen Canyon Adaptive Management Program | January 2023 |
| Interviewee 12 | Glen Canyon Adaptive Management Program | January 2023 |
| Interviewee 13 | Glen Canyon Adaptive Management Program | January 2023 |
| Interviewee 14 | Lower Colorado Multi-Species Conservation Program | January 2023 |
| Interviewee 15 | Lower Colorado Multi-Species Conservation Program | January 2023 |
| Interviewee 16 | Lower Colorado Multi-Species Conservation Program | March 2023 |
| Interviewee 17 | Colorado River Delta Program | January 2023 |
| Interviewee 18 | Colorado River Delta Program | March 2023 |
| Interviewee 19 | Colorado River Delta Program | March 2023 |
| Interviewee 20 | Gunnison Basin Selenium Management Program | July 2023 |
| Interviewee 21 | Gunnison Basin Selenium Management Program | July 2023 |

APPENDIX C

C.1 Interview questionnaire

C.1.1. Introduction Questions

1. Tell us about your role in [Forum X].
2. We understand that the mission of [Forum X] is to [include a very short summary of the mission/goals on the website]. Can you tell us a little bit more about the purpose and history of [Forum X] from your experience?
   - 2.1 Probe: Who is involved in the venue and why?
     - 2.1.1 Sub-Probe: How did they become involved?
3. We are trying to understand how different venues engage in science or scientific activities in the Colorado River Basin. What do you see as [Forum X's] role around science or scientific activities in the Colorado River Basin? For instance, are you engaged in producing science/scientific data (including modeling or monitoring), science coordination, providing advice to scientific communities, and using science to directly inform management strategies?
   - 3.1 Probe: Which of these activities do you see as most important for [Forum X's] work in the Basin?

C.1.2. Learning questions

- From the work [Forum X] does related to science/science activities, where do you see those activities having an impact? For instance, the impact could include improving knowledge of issues or problems in the Basin, understanding water management alternatives, informing management actions, shaping implementation or policy choices, or other types of learning.

  - 4.1 Probe: Are any of these actions informing a specific management action (a specific decision about resources; ex: a salinity control barrier)?

  - 4.2 Probe: How have you seen this impact outside [Forum X] (e.g., in other venues or decision-making forums in the Basin)?

- To what extent is the science in this forum being coordinated with science being produced in other forums?

- To what extent do you think these activities you described contribute to adaptive management in the basin? (By adaptive management we mean an iterative decision-making process that allows science and monitoring to regularly inform management actions, and update those decisions based on new information.)

- Could you talk through a specific example or two of those impacts from the work of [Forum X] that contributed to learning in the forum or in the Basin?

  - 7.1 Probe: What do you think enabled that learning or outcome?

    - 7.1.1 Sub-Probe: Were there certain characteristics of the people involved, the processes of communication, the timing, the nature of the issue that mattered?

- Can you think of any examples of where [Forum X's] science activities didn't lead to an impact on learning or other outcomes?

  - 8.1 If so, can you describe what happened and what you think impeded learning?

- What do you see as some of the challenges for integrating science into adaptive management or decision-making across the Colorado River Basin? What do you see as some solutions to these challenges?

C.1.3. Conclusion questions

- Do you have anything else about your experiences within the role of science in the Colorado River Basin that you would like to share?

- Who else do you think we should talk to either in [Forum X] or other people/organizations in the basin who are knowledgeable about or directly engaged in science activities? If possible, can you suggest someone who might provide a different perspective from you?

APPENDIX D

| | Colorado River Basin Salinity Control Program | Upper Colorado River Endangered Fish Recovery Program | San Juan River Basin Recovery Implementation Program | Glen Canyon Adaptive Management Program | Lower Colorado River Multi-Species Conservation Program | Gunnison Basin Selenium Management Program | Colorado River Delta Environmental Work Group |

|---|---|---|---|---|---|---|---|

| Learning | 8 | 4 | 10 | 4 | 4 | 2 | 8 |

| Blocked learning | 3 | 1 | 3 | 3 | 1 | 2 | 6 |

| Non-learning | 3 | 3 | 1 | 1 | 2 | 0 | 0 |

APPENDIX E

| Learning, blocked learning, non-learning moments | Reasons why | Interviewee code |

|---|---|---|

| Colorado River Basin Salinity Control Program | ||

| Learning | Quality of information and data | 1 |

| Learning | Experimentation; quality of information | 2 |

| Learning | Experimentation; quality of information | 3 |

| Learning | Reliable funding | 3 |

| Learning | Experimentation; expertise of scientists | 3 |

| Learning | Quality of information; expertise of scientists | 3 |

| Learning | Experimentation; reliable funding; timing | 3 |

| Learning | Experimentation | 3 |

| Blocked | Lack of effective communication | 3 |

| Blocked | No consensus; conflicting stakeholder interests and beliefs | 3 |

| Blocked | Uncertainty | 3 |

| Non-learning | Lack of funding | 1 |

| Non-learning | Prioritization of issues | 1 |

| Non-learning | Conflicting stakeholder interests and beliefs | 2 |

| Upper Colorado River Endangered Fish Recovery Program | ||

| Learning | Adaptive management | 4 |

| Learning | Consensus decision-making | 4 |

| Learning | Experimentation | 4 |

| Learning | Reliable funding | 5 |

| Learning | Collaboration | 5 |

| Learning | Collaboration | 5 |

| Learning | Experimentation; collaboration; effective communication | 6 |

| Learning | Quality of information | 6 |

| Learning | Quality of information; expertise of scientists | 6 |

| Blocked | Conflicting stakeholder interests and beliefs | 5 |

| Non-learning | Uncertainty | 4 |

| Non-learning | Uncertainty | 6 |

| Non-learning | Current management is effective; lack of funding | 5 |

| San Juan River Basin Recovery Implementation Program | ||

| Learning | Consensus decision-making; adaptive management | 7 |

| Learning | Quality of information | 7 |

| Learning | Collaboration; experimentation | 8 |

| Learning | Experimentation | 8 |

| Learning | Experimentation | 9 |

| Learning | Funding | 9 |

| Learning | Cost-effectiveness; quality of information | 9 |

| Learning | Experimentation | 9 |

| Blocked | Legal constraints; biophysical constraints | 7 |

| Blocked | Conflicting stakeholder interests and beliefs; lack of appropriate decision-making structure | 8 |

| Blocked | Lack of funding; lack of data sharing | 9 |

| Non-learning | Conflicting stakeholder interests and beliefs | 8 |

| Glen Canyon Adaptive Management Program | ||

| Learning | Collaboration; adaptive management; expertise of scientists | 10 |

| Learning | Experimentation | 11 |

| Learning | Collaboration | 12 |

| Learning | Quality of information | 12 |

| Learning | Collaboration; expertise of scientists | 13 |

| Blocked | Lack of effective communication | 11 |

| Blocked | Uncertainty | 12 |

| Blocked | Legal constraints | 13 |

| Non-learning | Conflicting stakeholder interests and beliefs; lack of focused goals; lack of reliable funding | 10 |

| Lower Colorado River Multi-Species Conservation Program | ||

| Learning | Collaboration; local trust; expertise of scientists | 14 and 15 |

| Learning | Collaboration | 14 and 15 |

| Learning | Focused goals and structure; collaboration | 16 |

| Learning | Collaboration; expertise of scientists | 16 |

| Blocked | Conflicting stakeholder interests and beliefs; lack of funding | 14 and 15 |

| Non-learning | Lack of funding | 16 |

| Non-learning | Leadership change | 16 |

| Colorado River Delta Environmental Work Group | ||

| Learning | Quality of information; effective communication | 17 |

| Learning | Collaboration; effective communication | 17 |

| Learning | Collaboration | 18 |

| Learning | Experimentation | 19 |

| Blocked | Conflicting stakeholder interests and beliefs | 17 |

| Blocked | Lack of effective communication | 18 |

| Blocked | Timing; uncertainty | 18 |

| Blocked | Lack of effective communication | 19 |

| Blocked | Lack of effective communication | 19 |

| Blocked | Lack of data sharing | 19 |

| Gunnison Basin Selenium Management Program | ||

| Learning | Collaboration; expertise of scientists | 20 |

| Learning | Collaboration | 21 |

| Blocked | Lack of funding | 20 |

| Blocked | Conflicting stakeholder interests and beliefs; lack of funding | 21 |

REFERENCES

- 1 External political factors not shown in the figure include “timing” for learning; “legal constraints” and “bad timing” for blocked learning; and “lack of priority” for non-learning.

- 2 Timing (n = 1) was an external political factor for why learning occurred.

- 3 Legal constraints (n = 2) and timing (n = 1) were external political factors for why blocked learning occurred.

- 4 Lack of priority (n = 1) was an external political factor for why non-learning occurred.