Acoustic features as a tool to visualize and explore marine soundscapes: Applications illustrated using marine mammal passive acoustic monitoring datasets

Abstract

Passive Acoustic Monitoring (PAM) is emerging as a solution for monitoring species and environmental change over large spatial and temporal scales. However, drawing rigorous conclusions based on acoustic recordings is challenging, as there is no consensus over which approaches are best suited for characterizing marine acoustic environments. Here, we describe the application of multiple machine-learning techniques to the analysis of two PAM datasets. We combine pre-trained acoustic classification models (VGGish, NOAA and Google Humpback Whale Detector), dimensionality reduction (UMAP), and balanced random forest algorithms to demonstrate how machine-learned acoustic features capture different aspects of the marine acoustic environment. The UMAP dimensions derived from VGGish acoustic features exhibited good performance in separating marine mammal vocalizations according to species and locations. RF models trained on the acoustic features performed well for labeled sounds in the 8 kHz range; however, low- and high-frequency sounds could not be classified using this approach. The workflow presented here shows how acoustic feature extraction, visualization, and analysis allow establishing a link between ecologically relevant information and PAM recordings at multiple scales, ranging from large-scale changes in the environment (i.e., changes in wind speed) to the identification of marine mammal species.

1 INTRODUCTION

Abrupt changes in the ocean environment are increasing in frequency as climate change accelerates (Ainsworth et al., 2020), resulting in the loss of key ecosystems (Sully et al., 2019), and shifts in endangered species' distributions (Plourde et al., 2019). Detecting such changes requires both historical and real-time (or near-real-time) data made readily available to managers and decision-makers. Scientists and practitioners are being tasked with finding efficient solutions for monitoring environmental health and detecting incipient change (Gibb et al., 2019; Kowarski & Moors-Murphy, 2021). This challenge includes monitoring for changes in species' presence, abundance, distribution, and behavior (Durette-Morin et al., 2019; Fleming et al., 2018; Root-Gutteridge et al., 2018), monitoring anthropogenic activity and disturbance levels (Gómez et al., 2018), monitoring changes in the environment (Almeira & Guecha, 2019), detecting harmful events (Rycyk et al., 2020), among others.

Environmental sounds provide a proxy to investigate ecological processes (Gibb et al., 2019; Rycyk et al., 2020), including exploring complex interactions between anthropogenic activity and biota (Erbe et al., 2019; Kunc et al., 2016). Sound provides useful information on environmental conditions and ecosystem health, allowing, for example, the rapid identification of disturbed coral reefs (Elise et al., 2019). In concert, numerous species (i.e., birds, mammals, fish, and invertebrates) rely on acoustic communication for foraging, mating and reproduction, habitat use and other ecological functions (Eftestøl et al., 2019; Kunc & Schmidt, 2019; Luo et al., 2015; Schmidt et al., 2014). The noise produced by anthropogenic activities (e.g., vehicles, stationary machinery, explosions) can interfere with such processes, affecting the health and reproductive success of multiple marine taxa (Kunc & Schmidt, 2019). In response to concerns about noise pollution, increasing effort is being invested in developing, testing, and implementing noise management measures in both terrestrial and marine environments. Consequently, Passive Acoustic Monitoring (PAM) has become a mainstream tool in biological monitoring (Gibb et al., 2019). PAM represents a set of techniques that are used for the systematic collection of acoustic recordings for environmental monitoring. It allows collection of large amounts of acoustic recordings that can then be used to understand changes happening in the environment at multiple spatial and temporal scales.

One of PAM's most common applications is in marine mammal monitoring and conservation. Marine mammals produce complex vocalizations that are species-specific (if not individually unique), and such vocalizations can be used when estimating species' distributions and habitat use (Durette-Morin et al., 2019; Kowarski & Moors-Murphy, 2021). PAM applications in marine mammal research span from the study of their vocalizations and behaviors (Madhusudhana et al., 2019; Vester et al., 2017) to assessing anthropogenic disturbance (Nguyen Hong Duc et al., 2021). PAM datasets can reach considerable sizes, particularly when recorded at high sampling rates, and projects often rely on experts to manually inspect the acoustic recordings for the identification of sounds of interest (Nguyen Hong Duc et al., 2021). For projects involving recordings collected over multiple months at different locations, conducting a manual analysis of the entire dataset can be prohibitive, and often only a relatively small portion of the acoustic recordings is subsampled for analysis.

At its core, studying acoustic environments is a signal detection and classification problem in which a large number of spatially and temporally overlapping acoustic energy sources need to be differentiated to better understand their relative contributions to the soundscape. Such an analytical process, termed acoustic scene classification (Geiger et al., 2013), is a key step in analyzing environmental information collected by PAM recorders. Acoustic scenes can contain multiple overlapping sound sources, which generate complex combinations of acoustic events (Geiger et al., 2013). This definition overlaps with the ecoacoustics definition of soundscape (Farina & Gage, 2017), providing a bridge between the two fields, where a soundscape represents the total acoustic energy contained within an environment and consists of three intersecting sound sources: geological (i.e., geophony), biological (i.e., biophony), and anthropogenic (i.e., anthrophony). A goal of ecoacoustics is to understand how these sources interact and influence each other, with a particular focus on biological-anthropogenic acoustic interactions. The concept of soundscape has recently been reframed and expanded to encompass three distinct categories: the distal soundscape, the proximal soundscape, and the perceptual soundscape (Grinfeder et al., 2022). The distal soundscape describes the spatial and temporal variation of acoustic signals within a defined area or environment. The proximal soundscape represents acoustic signals that occur at a specific location within a defined area – a distal soundscape can be interpreted as a collection of proximal soundscapes and includes all potential receiver positions. The perceptual soundscape is the subjective interpretation of a specific proximal soundscape and involves sensory and cognitive processes of the individual. 
In this study, we focus on the analysis of distal soundscapes, which allow investigating how biotic and abiotic factors relate to acoustic recordings.

Automated acoustic analysis can overcome some of the limitations encountered in manual PAM analysis, allowing ecoacoustics researchers to explore full datasets (Houegnigan et al., 2017). Deep learning represents a novel set of computer-based artificial intelligence approaches, which has profoundly changed biology and ecology research (Christin et al., 2019). Among the deep learning approaches, Convolutional Neural Networks (CNNs) have demonstrated high accuracy in performing image classification tasks, including the classification of spectrograms (i.e., visual representations of sound intensity across time and frequency) (Hershey et al., 2017; LeBien et al., 2020; Stowell, 2022).

CNNs have been applied successfully to several ecological problems, and their use in ecology has been growing (Christin et al., 2019). Examples include processing camera trap images to identify species, age classes, and numbers of animals, and to classify behavior patterns (Lumini et al., 2019; Norouzzadeh et al., 2018; Tabak et al., 2019). CNN algorithms perform well for acoustic classification (Hershey et al., 2017), including the identification of the vocalizations of a growing number of taxa such as crickets and cicadas (Dong et al., 2018), birds and frogs (LeBien et al., 2020), fish (Mishachandar & Vairamuthu, 2021), and, more recently, marine mammals (Usman et al., 2020). Recent applications of deep learning to the study of marine soundscapes include automated detectors for killer whales (Bergler et al., 2019) and humpback whales (Allen et al., 2021), the detection of North Atlantic right whales under changing environmental conditions (Vickers et al., 2021), and the detection of echolocation click trains produced by toothed whales (Roch et al., 2021).

Most CNN applications focus on species detection rather than a broader characterization of the acoustic environment. Furthermore, automated acoustic analysis algorithms often rely on supervised classification based on large datasets of known sounds (i.e., training datasets) used to train acoustic classifiers; creating training datasets is time-consuming and requires expert-driven manual classification of the acoustic data (Bittle & Duncan, 2013).

Recent developments in acoustic scene analysis demonstrate how the implementation of acoustic feature sets derived from CNNs, along with the use of dimensionality reduction (UMAP), can improve our ability to understand ecoacoustics datasets while providing a common ground for analyzing recordings collected across multiple environments and temporal scales (Clink & Klinck, 2021; Mishachandar & Vairamuthu, 2021; Sethi et al., 2020).

- We used the pre-trained VGGish algorithm to extract sets of acoustic features at different temporal resolutions for both datasets.

- Using UMAP, we reduced the acoustic features from the WMD to visualize the dataset structure and explore the relationship between audio recordings and labels describing species taxonomy and geographic locations.

- For the PBD dataset, UMAP visualizations were paired with the use of balanced random forest classifiers fitted on the VGGish acoustic features. With this, we tested how learned acoustic features can be used to identify the biophonic (humpback whale vocalizations) and geophonic (wind speed, surface temperature, and current speed) components of the distal soundscape of Placentia Bay.

This approach is not tied to a specific environment or group of species and can be used to simultaneously investigate the macro- and microcharacteristics of marine soundscapes.

2 MATERIALS AND METHODS

2.1 Data acquisition and preparation

We collected all records listed under the “all cuts” page of the Watkins Marine Mammal Database website (WMD; Watkins Marine Mammal Sound Database, Woods Hole Oceanographic Institution and the New Bedford Whaling Museum). For each audio file in the WMD, the associated metadata included a label for the sound sources present in the recording (biological, anthropogenic, and environmental), as well as information on the location and date of recording. To minimize the presence of unwanted sounds in the samples, we retained only audio files with a single source listed in the metadata. We then labeled the selected audio clips according to taxonomic group (Odontoceti, Mysticeti) and species.

We limited the analysis to 12 marine mammal species by discarding data when a species had less than 60 s of audio available, had a vocal repertoire extending beyond the resolution of the acoustic classification model (VGGish), or was recorded in a single country. To determine whether a species was suited for analysis using VGGish, we inspected the Mel-spectrograms of 3-s audio samples and retained only species with vocalizations that could be captured in the Mel-spectrogram (Appendix S1). The vocalizations of species that produce very low-frequency or very high-frequency sounds were not captured by the Mel-spectrogram; thus, we removed them from the analysis. To ensure that records included the vocalizations of multiple individuals for each species, we considered only species with records from two or more different countries. Finally, to avoid overrepresentation of sperm whale vocalizations, we excluded 30,000 sperm whale recordings collected in the Dominican Republic. The resulting dataset consisted of 19,682 audio clips with a duration of 960 ms (0.96 s) each (Table 1).
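The duration and multi-country criteria described above can be expressed as a simple filter over clip metadata. The sketch below is illustrative only: the record fields (`species`, `country`, `duration_s`) and the sample values are hypothetical and do not reflect the actual WMD metadata schema, and the Mel-spectrogram inspection is a manual step that is not modelled here.

```python
from collections import defaultdict

MIN_TOTAL_SECONDS = 60  # minimum audio per species, as stated in the text
MIN_COUNTRIES = 2       # species must be recorded in at least two countries


def select_species(records):
    """Return the set of species that meet both retention criteria."""
    total_seconds = defaultdict(float)
    countries = defaultdict(set)
    for rec in records:
        total_seconds[rec["species"]] += rec["duration_s"]
        countries[rec["species"]].add(rec["country"])
    return {
        species
        for species in total_seconds
        if total_seconds[species] >= MIN_TOTAL_SECONDS
        and len(countries[species]) >= MIN_COUNTRIES
    }


# Hypothetical metadata records (values invented for illustration).
records = [
    {"species": "orca", "country": "Canada", "duration_s": 45.0},
    {"species": "orca", "country": "Norway", "duration_s": 30.0},
    {"species": "narwhal", "country": "Canada", "duration_s": 120.0},  # one country only
    {"species": "beluga", "country": "Canada", "duration_s": 20.0},
    {"species": "beluga", "country": "United States", "duration_s": 15.0},  # under 60 s
]
kept = select_species(records)
```

In this toy example only the first species passes both checks; the other two fail the country and duration criteria, respectively.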

| Species | Location (year) | N | Total |
|---|---|---|---|
| Bowhead whale | Canada (1988) | 705 | 772 |
| | United States (1972, 1980) | 67 | |
| Beluga | Canada (1949, 1962, 1965) | 153 | 224 |
| | United States (1963, 1965, 1968) | 71 | |
| Southern right whale | Argentina (1979) | 99 | 109 |
| | Australia (1983) | 10 | |
| North Atlantic right whale | Canada (1981) | 205 | 376 |
| | United States (1956, 1959, 1970, 1974) | 171 | |
| Short-finned pilot whale | Bahamas (1957, 1961) | 576 | 696 |
| | Canada (1958, 1965, 1966, 1967) | 83 | |
| | St. Vincent and the Grenadines (1981) | 37 | |
| Long-finned pilot whale | Canada (1954, 1975) | 1154 | 2029 |
| | Italy (1994) | 26 | |
| | North Atlantic Ocean (1975) | 166 | |
| | United States (1977) | 426 | |
| | Unknown (1975) | 257 | |
| Humpback whale | Bahamas (1952, 1955, 1958, 1963) | 4819 | 5601 |
| | Puerto Rico (1954) | 6 | |
| | British Virgin Islands (1992) | 254 | |
| | United States (1975, 1979, 1980) | 269 | |
| | Unknown (1954, 1961) | 253 | |
| Orca | Canada (1961, 1964, 1966, 1979) | 492 | 4416 |
| | Norway (1989, 1992) | 1696 | |
| | United States (1960, 1997) | 2228 | |
| Sperm whale | Bahamas (1952) | 4 | 4368 |
| | Italy (1985, 1988, 1994) | 1143 | |
| | Madeira (1966) | 1 | |
| | Malta (1985) | 220 | |
| | Canada (1975) | 966 | |
| | Canary Islands (1987) | 7 | |
| | St. Vincent and the Grenadines (1983) | 18 | |
| | United States (1972) | 1954 | |
| | Unknown (1961, 1962, 1963, 1975) | 55 | |
| Rough-toothed dolphin | Italy (1985) | 67 | 75 |
| | Malta (1985) | 8 | |
| Clymene dolphin | Saint Lucia (1983) | 286 | 907 |
| | St. Vincent and the Grenadines (1983) | 621 | |
| Bottlenose dolphin | Croatia (1994) | 58 | 109 |
| | United States (1951, 1984, 1989) | 38 | |
| | Unknown (1956) | 13 | |

The Placentia Bay Database (PBD) includes recordings collected by Fisheries and Oceans Canada in Placentia Bay (Newfoundland, Canada) in 2019. The dataset consisted of 2 months of continuous recordings (1230 h), starting on July 1, 2019, and ending on August 31, 2019. The data were collected using an AMAR G4 hydrophone (sensitivity: −165.02 dB re 1 V/μPa at 250 Hz) deployed at a depth of 64 m. The hydrophone operated on 15-min cycles, with the first 60 s sampled at 512 kHz and the remaining 14 min sampled at 64 kHz. For the purpose of this study, we limited the analysis to the 64 kHz recordings.

2.2 Acoustic feature extraction

The audio files from the WMD and PBD databases were used as input for VGGish (Abu-El-Haija et al., 2016; Chung et al., 2018), a CNN developed and trained to perform general acoustic classification. VGGish was trained on the YouTube-8M dataset, containing more than two million user-labeled audio-video files. Rather than focusing on the final output of the model (i.e., the assigned labels), here the model was used as a feature extractor (Sethi et al., 2020). VGGish converts audio input into a semantically meaningful vector consisting of 128 features. The model returns features at multiple resolutions: ~1 s (960 ms), ~5 s (4800 ms), ~1 min (59,520 ms), and ~5 min (299,520 ms). All of the visualizations and results pertaining to the WMD were prepared using the finest feature resolution of ~1 s. The visualizations and results pertaining to the PBD were prepared using the ~5 s features for the humpback whale detection example; for the environmental variables, the features were averaged over 30-min intervals to match the temporal resolution of the environmental measures available for the area.
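Each of the coarser resolutions listed above is a whole multiple of the 960 ms frame (5, 62, and 312 frames, respectively). The sketch below illustrates this arithmetic and one plausible way of aggregating frame-level features, under the assumption that coarser features are obtained by averaging consecutive frames; the text states averaging explicitly only for the 30-min interval. The feature matrix is synthetic and the function names are hypothetical.

```python
import numpy as np

FRAME_MS = 960  # duration of one VGGish frame, as stated in the text


def frames_per_window(window_ms: int) -> int:
    """Number of whole 960 ms frames that fit in a window of the given length."""
    return window_ms // FRAME_MS


def average_frames(features: np.ndarray, n_frames: int) -> np.ndarray:
    """Average consecutive groups of n_frames rows.

    An incomplete trailing group is dropped (an assumption of this sketch).
    """
    n_windows = features.shape[0] // n_frames
    trimmed = features[: n_windows * n_frames]
    return trimmed.reshape(n_windows, n_frames, features.shape[1]).mean(axis=1)


# Synthetic stand-in for frame-level VGGish output: 625 frames x 128 features.
rng = np.random.default_rng(0)
frames = rng.normal(size=(625, 128))

# ~5 s features: 4800 ms / 960 ms = 5 frames per window.
five_second = average_frames(frames, frames_per_window(4800))

# A 30-min interval holds 1,800,000 ms / 4800 ms = 375 windows of ~5 s.
windows_per_30_min = (30 * 60 * 1000) // 4800
```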

2.3 UMAP ordination and visualization

To allow for data visualization and to reduce the 128 features to two dimensions for further analysis, we applied Uniform Manifold Approximation and Projection (UMAP) to both datasets and inspected the resulting plots. UMAP is a non-linear dimensionality reduction algorithm based on the concept of topological data analysis, which, unlike other dimensionality reduction techniques (e.g., t-SNE), preserves both the local and global structure of multivariate datasets (McInnes et al., 2018).

The UMAP algorithm generates a low-dimensional representation of a multivariate dataset while maintaining the relationships between points in the global dataset structure (i.e., the 128 features extracted from VGGish). Each point in a UMAP plot in this paper represents an audio sample with a duration of ~1 s (WMD dataset), ~5 s (PBD dataset, humpback whale detections), or 30 min (PBD dataset, environmental variables). Each point in the two-dimensional UMAP space also represents a vector of 128 VGGish features. The nearer two points are in the plot space, the nearer the two points are in the 128-dimensional space, and thus the distance between two points in UMAP reflects the degree of similarity between two audio samples in our datasets. Areas with a high density of samples in UMAP space should, therefore, contain sounds with similar characteristics, and such similarity should decrease with increasing point distance. Previous studies illustrated how VGGish and UMAP can be applied to the analysis of terrestrial acoustic datasets (Heath et al., 2021; Sethi et al., 2020). The visualizations and classification trials presented here illustrate how the two techniques (VGGish and UMAP) can be used together for marine ecoacoustics analysis. UMAP visualizations were prepared using the umap-learn package for the Python programming language (version 3.10). All UMAP visualizations presented in this study were generated using the algorithm's default parameters.

2.4 Labeling sound sources

The labels for the WMD records (i.e., taxonomic group, species, location) were obtained from the database metadata.

For the PBD recordings, we obtained measures of wind speed, surface temperature, and current speed (Figure 1) from an oceanographic buoy located in proximity to the recorder. We chose these three variables for their different contributions to background noise in marine environments. Wind speed contributes to underwater background noise at multiple frequencies, ranging from 500 Hz to 20 kHz (Hildebrand et al., 2021). Sea surface temperature contributes to background noise at frequencies between 63 and 125 Hz (Ainslie et al., 2021), while ocean currents contribute to ambient noise at frequencies below 50 Hz (Han et al., 2021). Prior to analysis, we categorized the environmental variables and assigned the categories as labels to the acoustic features (Table 2).

| Variable | Labels | Number of samples | n_estimators | Balanced accuracy |
|---|---|---|---|---|
| Wind speed | 0–4 m/s | 986 | 150 | 0.72 |
| | 4–6 m/s | 906 | | |
| | 6–8 m/s | 746 | | |
| | 8–16 m/s | 304 | | |
| Surface temperature | 8–10°C | 148 | 200 | 0.41 |
| | 10–12°C | 806 | | |
| | 12–14°C | 478 | | |
| | 14–16°C | 445 | | |
| | 16–18°C | 980 | | |
| Current speed | 0–20 mm/s | 148 | 200 | 0.35 |
| | 20–60 mm/s | 590 | | |
| | 60–110 mm/s | 735 | | |
| | 110–170 mm/s | 733 | | |
| | 170–260 mm/s | 587 | | |
| | 260–400 mm/s | 148 | | |
| Humpback whale vocalizations | Absent (0) | 3279 | 200 | 0.84 |
| | Present (1) | 181 | | |
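The categorization of the environmental variables summarized in Table 2 can be sketched as a lookup against fixed bin edges. The edges below are taken from Table 2; how values falling exactly on an edge were assigned is not stated, so treating each bin as closed on the left and open on the right (with the maximum value placed in the last bin) is an assumption, as are the variable keys and the function name.

```python
from bisect import bisect_right

# Bin edges from Table 2. The [lower, upper) convention is an assumption.
BIN_EDGES = {
    "wind_speed_m_s": [0, 4, 6, 8, 16],
    "surface_temperature_c": [8, 10, 12, 14, 16, 18],
    "current_speed_mm_s": [0, 20, 60, 110, 170, 260, 400],
}


def label_for(variable, value):
    """Return the bin label for a measurement, or None if it lies outside all bins."""
    edges = BIN_EDGES[variable]
    if not edges[0] <= value <= edges[-1]:
        return None
    # bisect_right finds the first edge greater than value; clamp so that the
    # maximum value falls in the last bin rather than past it.
    idx = min(bisect_right(edges, value) - 1, len(edges) - 2)
    return f"{edges[idx]}-{edges[idx + 1]}"


wind_label = label_for("wind_speed_m_s", 5.2)
```

Each 30-min feature vector would then carry one such label per variable, which serves as the target class for the corresponding classifier.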

Humpback whale vocalizations in the PBD recordings were processed using the humpback whale acoustic detector created by NOAA and Google (Allen et al., 2021), providing a model score for every ~5 s sample. This model was trained on a large dataset (14 years and 13 locations) using humpback whale recordings annotated by experts (Allen et al., 2021). The model returns scores ranging from 0 to 1, indicating the confidence in the predicted humpback whale presence. We used the results of this detection model to label the PBD samples according to presence of humpback whale vocalizations. To verify the model results, we inspected all audio files that contained a 5 s sample with a model score higher than 0.9 for the month of July. If the presence of a humpback whale was confirmed, we labeled the segment as a model detection. We labeled any additional humpback whale vocalization present in the inspected audio files as a visual detection, while we labeled other sources and background noise samples as absences. In total, we labeled 4.6 h of recordings. We reserved the recordings collected in August to test the precision of the final predictive model.
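The selection of audio files for manual verification can be sketched as a threshold on the per-sample detector scores. This is a minimal illustration and does not call the NOAA and Google detector itself: the file names and scores are invented, and the dictionary layout is a hypothetical stand-in for the detector output.

```python
SCORE_THRESHOLD = 0.9  # model score above which a file was inspected, from the text


def files_to_inspect(scores_by_file, threshold=SCORE_THRESHOLD):
    """Return the files containing at least one ~5 s sample scoring above threshold."""
    return sorted(
        name
        for name, scores in scores_by_file.items()
        if any(score > threshold for score in scores)
    )


# Hypothetical detector output: one score per ~5 s sample in each file.
scores_by_file = {
    "20190701T000000.wav": [0.02, 0.10, 0.95, 0.40],
    "20190701T001500.wav": [0.01, 0.03, 0.05],
    "20190701T003000.wav": [0.90, 0.88],  # 0.90 is not strictly higher than 0.9
}
selected = files_to_inspect(scores_by_file)
```

Every sample in a selected file is then reviewed, which is how vocalizations missed by the detector can still be labeled as visual detections.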

2.5 Label prediction performance

We used Balanced Random Forest (BRF) models provided in the imbalanced-learn Python package (Lemaître et al., 2017) to predict humpback whale presence and environmental conditions from the acoustic features generated by VGGish. We chose BRF because it is suited for datasets characterized by class imbalance. The BRF algorithm undersamples the majority class prior to prediction, which mitigates class imbalance (Lemaître et al., 2017). For each model run, the PBD dataset was split into training (80%) and testing (20%) sets.
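The idea behind undersampling can be illustrated with the class counts reported for the humpback whale labels (3279 absences, 181 presences). The sketch below is not the imbalanced-learn implementation: it performs a single random undersampling pass with NumPy, whereas, to our understanding, the BRF classifier in imbalanced-learn rebalances the bootstrap sample drawn for each tree. The function name is hypothetical.

```python
import numpy as np


def undersample_majority(labels, rng):
    """Return sorted indices that keep every class at the size of the smallest class."""
    labels = np.asarray(labels)
    classes, counts = np.unique(labels, return_counts=True)
    n_keep = counts.min()
    kept = [
        rng.choice(np.flatnonzero(labels == cls), size=n_keep, replace=False)
        for cls in classes
    ]
    return np.sort(np.concatenate(kept))


rng = np.random.default_rng(42)
labels = np.array([0] * 3279 + [1] * 181)  # absences and presences, as in Table 2
balanced_idx = undersample_majority(labels, rng)
```

After this step both classes contribute 181 samples, so a tree fitted on the subset cannot reach a high score simply by predicting absence.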

The training datasets were used to fine-tune the models through a nested k-fold cross-validation approach with 10 folds in the outer loop and five folds in the inner loop. We selected nested cross-validation because it allows optimizing model hyperparameters and performing model evaluation in a single step. We used the default parameters of the BRF algorithm, except for the ‘n_estimators’ hyperparameter, for which we tested five possible values: 25, 50, 100, 150, and 200. We chose to optimize ‘n_estimators’ because this parameter determines the number of decision trees generated by the BRF model, and finding an optimal value reduces the chances of overfitting. Every iteration of the outer loop generates a new train-validation split of the training dataset, which is then used as input to a BRF.
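The structure of the nested procedure can be sketched as two loops over index splits. The code below shows only the control flow: `score_fn` is a hypothetical placeholder for "fit a BRF with the given n_estimators on the training indices and return the balanced accuracy on the validation indices", and the toy scoring function used in the demonstration is invented so that the example runs without any model.

```python
import numpy as np

CANDIDATES = [25, 50, 100, 150, 200]  # n_estimators values tested, from the text


def k_fold_indices(indices, k, rng):
    """Yield (kept, held_out) index arrays for a shuffled k-fold split."""
    folds = np.array_split(rng.permutation(indices), k)
    for i in range(k):
        kept = np.concatenate([folds[j] for j in range(k) if j != i])
        yield kept, folds[i]


def nested_cv(n_samples, candidates, score_fn, outer_k=10, inner_k=5, seed=0):
    """Select a hyperparameter in the inner loop and evaluate it in the outer loop."""
    rng = np.random.default_rng(seed)
    chosen, outer_scores = [], []
    for outer_train, outer_val in k_fold_indices(np.arange(n_samples), outer_k, rng):
        # The same inner splits are reused for every candidate value.
        inner_splits = list(k_fold_indices(outer_train, inner_k, rng))
        mean_scores = {
            cand: float(np.mean([score_fn(cand, tr, va) for tr, va in inner_splits]))
            for cand in candidates
        }
        best = max(mean_scores, key=mean_scores.get)
        chosen.append(best)
        # Evaluate the selected value on data unseen by the inner loop.
        outer_scores.append(score_fn(best, outer_train, outer_val))
    return chosen, outer_scores


def toy_score(n_estimators, train_idx, val_idx):
    """Invented score that peaks at 100 trees; stands in for a real model fit."""
    return -abs(n_estimators - 100)


chosen, outer_scores = nested_cv(200, CANDIDATES, toy_score)
```

The outer-loop scores estimate performance for the whole selection procedure, since each one is computed on a fold that played no part in choosing the hyperparameter.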

We chose balanced accuracy as the evaluation metric for both datasets, as it is suited for measuring model performance when samples are highly imbalanced (Brodersen et al., 2010).
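Balanced accuracy is the mean of the per-class recall values, so each class contributes equally regardless of its size. The short sketch below, using invented labels, shows why this matters for imbalanced data: a classifier that always predicts the majority class scores well on plain accuracy but no better than chance on balanced accuracy.

```python
from collections import Counter


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall over the classes present in y_true."""
    support = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return sum(correct[cls] / support[cls] for cls in support) / len(support)


# Invented example: 95 absences, 5 presences, and a classifier that
# always predicts absence.
y_true = [0] * 95 + [1] * 5
always_absent = [0] * 100

plain_accuracy = sum(t == p for t, p in zip(y_true, always_absent)) / len(y_true)
score = balanced_accuracy(y_true, always_absent)
```

Here plain accuracy is 0.95, while balanced accuracy is 0.5 (recall of 1.0 for absences and 0.0 for presences).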

All predictive models for the PBD were trained and tested on the 128 acoustic features generated by VGGish. The UMAP plots were used to visually inspect the structure of the PBD and WMD features datasets. For the WMD dataset, we used violin plots to explore the distribution of the two UMAP dimensions in relation to the clusters of data points labeled according to taxonomic group, species, and location of origin of the corresponding audio samples.

3 RESULTS

3.1 Watkins marine mammals sounds database

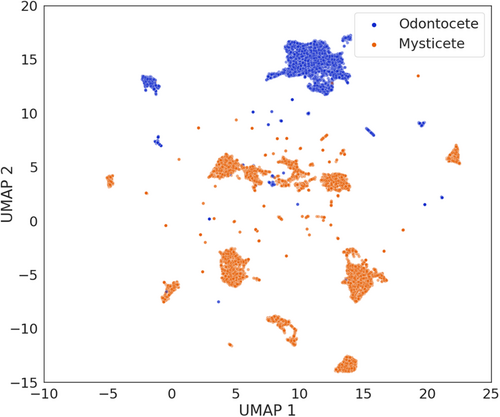

The UMAP visualizations of the WMD features showed a complex structure that reflected both taxonomic labels (group and species) and locations. At the macroscale, UMAP separated samples according to the taxonomic group label. Samples belonging to the mysticete and odontocete species occupied two distinct regions of the plot, with little overlap (Figure 2). When looking at the distribution of the two UMAP dimensions, this separation was more evident along the second UMAP dimension, while samples had a higher degree of overlap along the first dimension (Appendix S2, Figure S2.1).

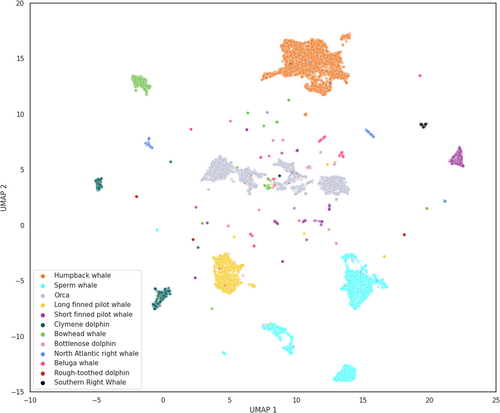

Of the 12 species considered, eight species formed clear and large clusters: humpback whales, bowhead whales, sperm whales, orcas, long and short finned pilot whales, Clymene dolphins, and North Atlantic right whales (Figure 3). Samples belonging to bottlenose dolphins, beluga whales, rough-toothed dolphins, and southern right whales, on the other hand, did not form distinct clusters. The distribution of the two UMAP dimensions showed that species were better separated along the second UMAP dimension, while species had overlapping distribution along the first UMAP dimension (Appendix S2, Figure S2.2).

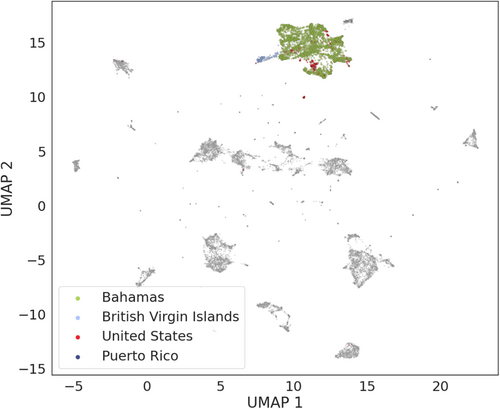

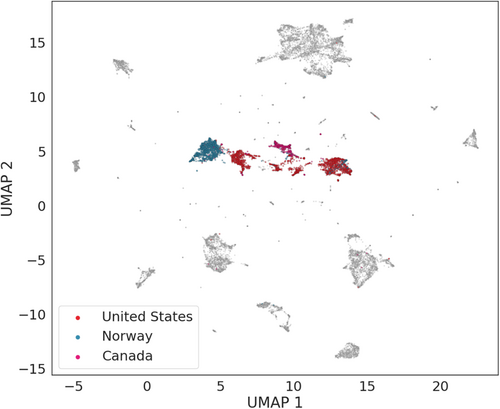

Samples collected in different locations but belonging to the same species formed close clusters in the UMAP plots. For example, samples of humpback whale vocalizations collected in the Bahamas, the British Virgin Islands, Puerto Rico, and the United States formed a large cluster (Figure 4) with overlapping distributions of the two UMAP dimensions (Appendix S2, Figure S2.3). Similarly, the killer whale samples, collected in the United States, Canada, and Norway, all occupied the same region of the UMAP plot (Figure 5).

3.2 Placentia Bay dataset

Results of model parameter selection for the four BRF algorithms fitted on the PBD labels are shown in Table 2.

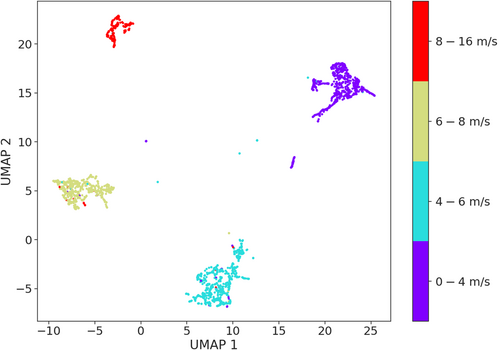

3.3 Oceanographic variables

Of the three BRF models fitted on environmental variables, only the model fitted to the wind speed labels provided accurate predictions overall. This is reflected by the model's balanced accuracy score (0.72) (Table 2). The model accurately discriminated between low (0–4 m/s) and medium (4–6 m/s) wind speeds, while its ability to correctly classify the higher wind speeds (6–8 m/s and 8–16 m/s) was lower (Appendix S2, Figure S2.4). The BRF models fitted on surface temperature and current speed performed poorly, achieving balanced accuracy scores of 0.41 and 0.35, respectively (Table 2). In the case of temperature, the lowest (8–10°C), medium (12–14°C), and highest (16–18°C) values were correctly classified for approximately 50% of the testing datasets (Appendix S2, Figure S2.5). In the case of current speed, only the lowest (0–20 mm/s) and highest (260–400 mm/s) values were correctly classified for approximately 60% of the dataset (Appendix S2, Figure S2.6). These results are reflected in the UMAP visualizations for the oceanographic variables. Samples labeled by wind speed formed clear and separated clusters (Figure 6). Samples labeled by surface temperature and current speed did not show clear clusters separating the acoustic samples (Appendix S2, Figures S2.8 and S2.9).

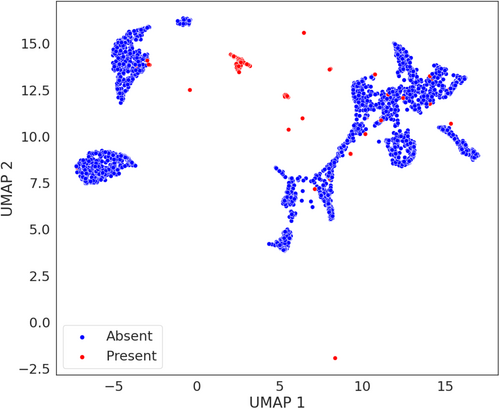

3.4 Humpback whale detections

The BRF fitted on the humpback whale labels achieved a balanced accuracy score of 0.84 (Table 2) and showed similar performance for both the presence and absence labels (Appendix S2, Figure S2.7). The UMAP visualization for the humpback whale labels showed a clear cluster of presences (Figure 7). However, several presences plotted within the clusters formed by samples labeled as absences, and a few samples were located between the absences and the presences clusters.

Finally, the humpback whale BRF model, trained and tested on PBD samples collected in July, predicted 19 presences when run on the samples collected in August. Of these, 15 samples were true presences while the remaining four were false presences, resulting in a precision score of 0.79. All predicted presences were limited to the 23rd of August.
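The reported precision follows directly from the counts above, as the ratio of true presences to all predicted presences. The function name in this small check is hypothetical.

```python
def precision(true_positives, false_positives):
    """Fraction of predicted presences that were confirmed as true presences."""
    return true_positives / (true_positives + false_positives)


# Counts for the August samples, as reported in the text.
august_precision = precision(true_positives=15, false_positives=4)
```

With 15 true presences out of 19 predictions, the ratio rounds to the stated value of 0.79.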

4 DISCUSSION

Managing the wellbeing of ecosystems requires identifying when and where human activities are impacting species' occurrence, movement, and behavior. PAM is a useful approach for the detection of both large- and small-scale changes in urban and wild environments, as it allows for continuous and prolonged ecosystem monitoring. Challenges in employing PAM as a standard monitoring tool arise after data collection, when researchers and practitioners need to quickly extract useful information from large acoustic datasets, to understand when and where management actions are needed to preserve the well-being of ecosystems. The relatively new field of ecoacoustics provides the theoretical background for linking specific characteristics of the acoustic environment to biodiversity and ecosystem health.

The objective of our study was to test how the acoustic features generated by a pre-trained CNN (VGGish) can be used to link recorded sounds to environmental features and to better understand processes happening in marine environments at multiple scales, from changes in oceanographic conditions over the span of months to discrete, short-lived events such as the vocalizations produced by marine mammals.

Our analyses revealed several applications for inferring population- and location-specific information from acoustic datasets. The analysis conducted on the WMD dataset shows that the VGGish acoustic features are suited for discriminating between marine mammal species recorded in different environments.

Understanding the evolution of vocal diversity and the role of vocalizations in the ecology of a species is one of the key objectives of bioacoustics research (Luís et al., 2021). Full acoustic repertoires are not available for most species, as building comprehensive lists of vocalizations requires considerable research effort. Here, we show how a general acoustic classification model (VGGish) used as a feature extractor allows us to detect differences and similarities among marine mammal species, without requiring prior knowledge on the species' vocal repertoires. For example, all humpback whale samples formed a compact cluster (Figure 4) and humpback whale populations share common traits in their songs, even when populations are acoustically isolated (Mercado III & Perazio, 2021). Killer whales, on the other hand, formed distinct clusters (Figure 5), and different populations of orcas are characterized by differences in call repertoires and call frequencies (Filatova et al., 2015; Foote & Nystuen, 2008). Although we cannot consider our results as definitive evidence of convergence or divergence in vocal behavior for these two species, we suggest that this aspect should be further investigated, perhaps using more recent recordings of these two species from different populations. Samples from four of the 12 marine mammal species (bottlenose dolphins, beluga whales, rough-toothed dolphins, and southern right whales) did not form clear clusters. This was most likely due to the low number of samples available for these four species (Table 1).

The analysis conducted on the PBD dataset shows how the VGGish features can be used as a tool to establish relationships between sound recordings and the environment at multiple scales. At the macroscale, the VGGish features were successful in classifying acoustic recordings according to measured wind speeds. This result is particularly useful for determining how winds contribute to underwater background noise. At the fine scale, the VGGish features could be used to identify vocalizations of humpback whales in Placentia Bay. However, presences for the month of August occurred within a single day, indicating that the BRF model may be declaring a large number of samples containing humpback whale vocalizations as absences. Furthermore, the model labeled some of the PBD samples containing only background noise and low-frequency noise from a passing ship as presences (Appendix S1). The results of the BRF model trained on humpback whale detections could be improved by extending the analysis to longer time frames and to multiple locations, and by including labels for additional sound sources.

Our results highlight a limitation of using a general acoustic classification algorithm trained on recordings collected in terrestrial environments. The audio files used as input in VGGish are limited to a sampling rate of 16 kHz, resulting in a Nyquist frequency of 8 kHz. This is sufficient to capture marine mammal vocalizations that fall within the VGGish frequency range (Appendix S1), but the method is not suited for species using high-frequency (e.g., harbor porpoises) or very low-frequency (e.g., blue and fin whales) vocalizations. This led to the removal of a large number of samples from the WMD dataset. This limitation also explains the poor performance of the models trained on surface temperature and current speed, as their contribution to background noise is evident at frequencies below 125 Hz. Nonetheless, the acoustic features for species vocalizing within the 8 kHz range provide useful information on the acoustic behavior of marine mammal species. Similarly, the features provided information on changes in the acoustic environment of Placentia Bay due to changes in wind speed. Other CNN approaches, such as AclNet (Huang & Leanos, 2018), allow processing audio with higher sampling rates (e.g., 44.1 kHz) at the cost of increased computing requirements.
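The frequency argument above can be made concrete with a short calculation. The sketch below compares the noise bands given in Section 2.4 with the band that VGGish can represent. The 16 kHz sampling rate is from the text; the 125 Hz and 7500 Hz Mel filterbank limits are an assumption based on the defaults of the reference VGGish implementation and are not stated in this study. The lower bound of 0 Hz for ocean currents is also an assumption, since the text gives only an upper bound.

```python
VGGISH_SAMPLE_RATE_HZ = 16_000          # input sampling rate, as stated in the text
NYQUIST_HZ = VGGISH_SAMPLE_RATE_HZ / 2  # highest representable frequency: 8 kHz

# Assumption: Mel filterbank limits of the reference VGGish implementation.
MEL_MIN_HZ, MEL_MAX_HZ = 125.0, 7_500.0

# Noise bands from Section 2.4 (0 Hz lower bound for currents is assumed).
SOURCE_BANDS_HZ = {
    "wind": (500.0, 20_000.0),
    "surface temperature": (63.0, 125.0),
    "ocean currents": (0.0, 50.0),
}


def overlap_hz(band, lo=MEL_MIN_HZ, hi=MEL_MAX_HZ):
    """Width in Hz of the part of a band that lies inside [lo, hi]; 0 if none."""
    return max(0.0, min(band[1], hi) - max(band[0], lo))


covered = {name: overlap_hz(band) for name, band in SOURCE_BANDS_HZ.items()}
```

Under these assumptions, only the wind band overlaps the analyzed range, which is consistent with the wind speed model being the only environmental model that performed well.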

Machine-learned acoustic features respond to multiple marine sound sources and can be employed successfully for investigating both the biological and anthropogenic components of marine soundscapes (Heath et al., 2021; Sethi et al., 2020). However, their ability to detect species and changes in marine environments is limited by the algorithm's frequency range. A second limitation is that acoustic features are not a plug-and-play product, as establishing links between features and relevant ecological variables requires additional analyses and data sources. The objective of this study was to explore the application of the methods proposed by Sethi et al. (2020) in a new and unexplored context: the analysis of underwater soundscapes. This approach was particularly suitable for our study as the acoustic samples are not pre-processed to remove background noise. This approach has also been demonstrated to be resilient to the use of multiple recording devices, as well as to different levels of compression and recording schedules (Heath et al., 2021; Sethi et al., 2020), making it ideal for the analysis of the WMD dataset. An alternative approach, in which datasets of spectrogram images are used directly as input to dimensionality reduction algorithms, is provided by Sainburg et al. (2020) and Thomas et al. (2022). However, this approach relies on removing background noise from the recordings, which, in the case of our study, would have led to a loss of information on the relationship between environments and acoustic recordings.

By presenting a set of examples focused on marine mammals, we have demonstrated the benefits and challenges of implementing acoustic features as descriptors of marine acoustic environments. Our future research will extend feature extraction and testing to full PAM datasets spanning several years and inclusive of multiple hydrophone deployment locations. Other aspects warranting further investigation are how acoustic features perform when the objective is discriminating vocalizations of individuals belonging to the same species or population, as well as their performance in identifying samples with multiple active sound sources.

Acoustic features are abstract representations of PAM recordings that preserve the original structure and underlying relationships between the original samples, and, at the same time, are a broadly applicable set of metrics that can be used to answer ecoacoustics, ecology, and conservation questions. As such, they can help us understand how natural systems interact with, and respond to, anthropogenic pressures across multiple environments. Finally, the universal nature of acoustic feature analysis could help bridge the gap between terrestrial and marine soundscape research. This approach could deepen our understanding of natural systems by enabling multi-system environmental assessments, allowing researchers to investigate and monitor, for example, how stressor-induced changes in one system may manifest in another. These benefits accrue from an approach that is more objective than manual analyses and requires far less human effort.

AUTHOR CONTRIBUTIONS

Simone Cominelli: Conceptualization (lead); data curation (equal); formal analysis (equal); investigation (equal); methodology (equal); software (lead); validation (lead); visualization (lead); writing – original draft (lead); writing – review and editing (lead). Nicolo' Bellin: Conceptualization (lead); data curation (lead); formal analysis (lead); investigation (lead); methodology (lead); software (lead); validation (lead); visualization (lead); writing – original draft (lead); writing – review and editing (lead). Carissa D. Brown: Funding acquisition (lead); project administration (lead); supervision (lead); writing – review and editing (equal). Valeria Rossi: Funding acquisition (supporting); project administration (supporting); supervision (lead); writing – review and editing (supporting). Jack Lawson: Funding acquisition (lead); project administration (lead); resources (lead); supervision (lead); writing – review and editing (supporting).

ACKNOWLEDGEMENTS

This project was funded by the Species at Risk, Oceans Protection Plan, and Marine Ecosystem Quality programmes of the Department of Fisheries and Oceans Canada, Newfoundland and Labrador Region; by Memorial University of Newfoundland and Labrador; by the Ph.D. program in Evolutionary Biology and Ecology (University of Parma, agreement with University of Ferrara and University of Firenze); and by the TD Bank Bursary for Environmental Studies. This work has benefited from the equipment and framework of the COMP-R Initiative, funded by the ‘Departments of Excellence’ program of the Italian Ministry for University and Research (MUR, 2023-2027). We would like to express our gratitude to the curators of the Watkins Marine Mammal Sound Database, as we believe that open-access databases are incredibly relevant to the development of global monitoring of natural systems. We thank all the graduate students of the Northern EDGE Lab for their support and their effort in creating a welcoming and inclusive work environment. We would also like to thank Madelyn Swackhamer, Sean Comeau, Lee Sheppard, Gregory Furey, and Andrew Murphy from DFO’s Marine Mammal Section for collecting the data used in this study, providing detailed information about the hydrophone deployments, and for their help and support with accessing DFO’s PAM databases.

CONFLICT OF INTEREST STATEMENT

The authors declare that there are no conflicts of interest.

Open Research

DATA AVAILABILITY STATEMENT

- Dryad (data tables): https://doi.org/10.5061/dryad.3bk3j9kn8

- Zenodo (Python scripts): https://doi.org/10.5281/zenodo.10019845