Issue highlights—July 2024

The integration of artificial intelligence (AI) into hematology diagnostics represents a significant advancement, particularly within the context of flow cytometry. AI enhances flow cytometry by applying advanced algorithms and machine learning to identify patterns in cellular data that may not be detectable through traditional methods. This integration is expected to improve diagnostic accuracy and efficiency, offering more personalized patient care. This issue focuses on the application of AI in enhancing flow cytometry for hematology diagnostics. The articles highlight current advancements and the potential of AI to set new standards in the diagnostic accuracy and management of hematological disorders, emphasizing the importance of this technology in facilitating more effective and tailored treatment strategies.

The manuscript “Recommendations for using artificial intelligence in clinical flow cytometry” by Ng et al. is a high-level review of basic terminology and general applications of AI and machine learning in the clinical flow cytometry laboratory. The authors set out a list of preliminary, general considerations regarding development, validation, quality assurance, explainability, and regulation. They posit that AI could be used in clinical flow cytometry to improve efficiency, assist in the prioritization of cases, reduce errors, and highlight previously unrecognized immunophenotypes underlying biological processes. Currently, the interpretation of clinical flow cytometric data relies on manual gating and the subjective assessment of two-dimensional dot plots (Wood, 2020). AI software can process large amounts of data quickly and accurately. The authors argue that it will aid in the interpretation of clinical flow cytometry data by leveraging its high-dimensional pattern recognition and decision-making capabilities, improving efficiency and reducing errors. They point out that the rigor of algorithm validation should be proportional to the algorithm's relative impact on clinical workflows. Low-risk applications, such as ordering additional antibody panels or flagging cases for urgent review, should require a less stringent validation process than high-risk applications, such as autonomous, human-out-of-the-loop interpretation and/or sign-out of cases. Even so, they insist that review by an experienced hematopathologist should always be required.

The authors emphasize the importance of collaboration and data sharing among all stakeholders involved in diagnostic flow cytometry to ensure the successful integration of AI. The success of AI in the clinical flow cytometry laboratory will likely rely on the availability of large annotated data sets (Lee et al., 2008). At present, however, such clinical data sets are not widely accessible for a variety of reasons. To maximize the effectiveness of AI in our field, a shift in mindset toward data sharing will be required. By sharing cases and files, stakeholders can collectively contribute to building robust AI models, improving diagnostic accuracy, and ultimately enhancing patient outcomes.

The topic of “explainability,” defined as a description of the process that would permit users to understand how an algorithm arrived at its prediction or classification, is also discussed. Explainability is a new area of research in machine learning and part of an ongoing debate about AI applications in medicine (Huang et al., 2024); it is nicely addressed by Shopsowitz et al. (2022) and van de Loosdrecht et al. (2023). The manuscript suggests that explainability in clinical flow cytometry could lead to the identification of novel cellular subsets correlated with underlying biological processes. It also notes that explainability could build trust in how AI systems work, potentially serving as an adjunct requirement to classical assay validation.

In addition to these and other topics, the manuscript concludes with a discussion of how regulations apply to this field. In Europe, a distinction is made between software that drives a device and software as a medical device (SaMD). Software that drives a device falls within the same class as the device itself. However, when the software is used in conjunction with an approved medical device, it is not considered a medical device and is not subject to the same regulations. In the United States, according to current FDA guidance, flow cytometry software and AI-based algorithms do not meet the requirements for an exemption. However, because this is an emerging field, regulations are evolving. The authors argue that we should remain vigilant about proposed regulations that attempt to enforce too broad a standard by trying to encompass multiple fields.

The manuscript titled “MAGIC-DR: an interpretable machine-learning guided approach for acute myeloid leukemia measurable residual disease analysis,” authored by Kevin Shopsowitz et al. from Xuehai Wang's group in British Columbia, presents a novel methodology for analyzing flow cytometric data in the context of AML measurable (formerly minimal) residual disease (MRD) that combines XGBoost predictions with UMAP visualization. The authors developed a pipeline, which they called MRD Analysis Guided by Integrated Classifier and Dimensionality Reduction (MAGIC-DR), that automatically calculates an AML probability score and UMAP coordinates for each cell in a query case. These new parameters are then appended to the original flow cytometry standard (FCS) files, allowing further human analysis in standard flow cytometry software.
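
A practical strength of this design is that the machine-learning output travels with the data as extra per-event parameters, so it can be gated like any fluorescence channel. The sketch below illustrates only that idea on an in-memory event matrix; it is a toy under stated assumptions, not the authors' code. The channel names (`AML_prob`, `UMAP1`, `UMAP2`), the helper functions, and all values are hypothetical, and writing a real FCS file would require a dedicated FCS library.

```python
# Hypothetical sketch: append derived per-event parameters (a classifier
# probability plus two embedding coordinates) to an in-memory event matrix,
# then gate on the new probability parameter. No real FCS reading/writing
# is done here; channel names are illustrative placeholders.
from typing import List, Sequence, Tuple

Events = List[List[float]]


def append_parameters(
    channels: List[str],
    events: Events,
    new_channels: Sequence[str],
    new_values: Sequence[Sequence[float]],
) -> Tuple[List[str], Events]:
    """Return copies of the channel list and event matrix with extra columns."""
    if len(new_values) != len(events):
        raise ValueError("need exactly one row of new values per event")
    out_events: Events = []
    for row, extra in zip(events, new_values):
        if len(extra) != len(new_channels):
            raise ValueError("each row must supply a value for every new channel")
        out_events.append(list(row) + list(extra))
    return list(channels) + list(new_channels), out_events


def gate_above(channels: List[str], events: Events, channel: str, threshold: float) -> Events:
    """Keep events whose value in `channel` exceeds `threshold` (a simple 1-D gate)."""
    idx = channels.index(channel)
    return [row for row in events if row[idx] > threshold]


# Toy data: three marker channels, two events.
channels = ["CD34", "CD117", "CD38"]
events = [[0.9, 0.8, 0.1], [0.1, 0.2, 0.9]]
# Hypothetical model outputs per event: AML probability, UMAP1, UMAP2.
derived = [[0.95, 1.2, -0.4], [0.03, -2.1, 0.7]]

all_channels, all_events = append_parameters(
    channels, events, ["AML_prob", "UMAP1", "UMAP2"], derived
)
suspicious = gate_above(all_channels, all_events, "AML_prob", 0.5)
```

Returning copies rather than mutating the input mirrors the idea that the original acquisition data stay intact while the derived parameters are layered on top.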

XGBoost is an implementation of the gradient boosting algorithm designed for speed and performance. It builds decision trees sequentially, with each new tree correcting the errors of the trees built before it. When the XGBoost predictions are displayed as a color parameter on UMAP dot plots, events with a high predicted AML probability can be gated to visualize and confirm the abnormal population. This approach not only improves accuracy compared with raw AML classifier predictions but also enables detailed immunophenotypic characterization of rare, suspicious clusters, facilitating human interpretation of MRD and verification of the machine learning output. It is an excellent example of how the human-in-the-loop and explainability issues raised by Ng et al. (2024) can be addressed.
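
The sequential, error-correcting idea behind gradient boosting can be illustrated in a few lines. The sketch below is a simplified first-order variant that uses one-split trees (stumps) and the logistic loss; it is not XGBoost itself, which adds second-order leaf weights, regularization, and extensive performance engineering. The two marker channels and the toy events are hypothetical.

```python
# Minimal sketch of first-order gradient boosting for binary classification
# with depth-1 regression trees (stumps). This is NOT XGBoost: XGBoost adds
# second-order (Newton) leaf weights, regularization, and many engineering
# optimizations. Feature names and data are hypothetical.
import math
from typing import List, Tuple

Stump = Tuple[int, float, float, float]  # (feature index, threshold, left value, right value)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(X: List[List[float]], targets: List[float]) -> Stump:
    """Find the single split that minimizes squared error against `targets`."""
    mean = sum(targets) / len(targets)
    best: Stump = (0, math.inf, mean, mean)  # fallback: constant prediction
    best_sse = sum((t - mean) ** 2 for t in targets)
    for j in range(len(X[0])):
        for thr in sorted({row[j] for row in X}):
            left = [t for row, t in zip(X, targets) if row[j] <= thr]
            right = [t for row, t in zip(X, targets) if row[j] > thr]
            if not left or not right:
                continue
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((t - lv) ** 2 for t in left) + sum((t - rv) ** 2 for t in right)
            if sse < best_sse:
                best, best_sse = (j, thr, lv, rv), sse
    return best


def stump_predict(stump: Stump, row: List[float]) -> float:
    j, thr, lv, rv = stump
    return lv if row[j] <= thr else rv


def fit_boosting(X: List[List[float]], y: List[int], n_rounds: int = 20, learning_rate: float = 0.3):
    """Each round fits a stump to the residuals y - p of the current ensemble."""
    p0 = min(max(sum(y) / len(y), 1e-6), 1 - 1e-6)
    base = math.log(p0 / (1 - p0))  # start from the log-odds of the class prior
    scores = [base] * len(X)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        # Negative gradient of the logistic loss with respect to the raw score.
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        scores = [s + learning_rate * stump_predict(stump, row) for s, row in zip(scores, X)]
    return base, stumps, learning_rate


def predict_proba(model, row: List[float]) -> float:
    base, stumps, lr = model
    return sigmoid(base + lr * sum(stump_predict(s, row) for s in stumps))


# Toy events: [CD34, CD117] intensities; label 1 = abnormal, 0 = normal.
X = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3], [0.2, 0.4],
     [0.8, 0.9], [0.9, 0.7], [0.7, 0.8], [0.85, 0.95]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
model = fit_boosting(X, y)
probs = [predict_proba(model, row) for row in X]
```

In practice one would use the `xgboost` library rather than hand-rolled code; the point here is only that each new stump is fit to what the current ensemble still gets wrong.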

The XGBoost algorithm effectively distinguished diverse AML cell populations from normal cells, achieving a median AUC of 0.97, which indicates high accuracy in detecting abnormal cell populations. As reported by others (Fang et al., 2022; Mizuta et al., 2024), the markers most important for delineating normal from abnormal populations were CD117, CD34, and CD38; CD64 was the most important marker in all but one of the monocytic populations. Despite this overall success, specific AML immunophenotypes posed challenges for the algorithm, which struggled with abnormal populations whose phenotypes were more heterogeneous than others in the dataset. For example, the algorithm had difficulty with a monocytic population containing blasts that expressed CD34 and variable levels of CD14. This highlights the need to improve the algorithm's generalizability by incorporating additional datasets from rare or challenging AML cases, as well as the need for broader sharing of data from novel cases (Ng et al., 2024).
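
The AUC summarizes how well a classifier's scores rank abnormal events above normal ones. A minimal sketch of the rank-based definition follows; how the reported median was aggregated (for example, across cases or across abnormal populations) is an assumption here, and the scores are invented.

```python
# Rank-based (Mann-Whitney) ROC AUC: the probability that a randomly chosen
# abnormal event scores higher than a randomly chosen normal event, with ties
# counted as half. O(n_pos * n_neg), so suitable only for small illustrations.
from statistics import median
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative event")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical per-case evaluations: (labels, classifier scores).
cases = [
    ([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]),           # one normal event outranks an abnormal one
    ([0, 0, 1, 1], [0.1, 0.2, 0.7, 0.9]),            # perfect separation
    ([0, 1, 0, 1, 0], [0.3, 0.6, 0.7, 0.9, 0.2]),
]
aucs = [roc_auc(labels, scores) for labels, scores in cases]
median_auc = median(aucs)
```

For millions of events, a sort-based rank computation (or a library routine) would replace the nested loops.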

The manuscript entitled “Minimal residual disease assessment in B-cell precursor acute lymphoblastic leukemia by semi-automated identification of normal hematopoietic cells – a EuroFlow study,” submitted by Martijn W.C. Verbeek on behalf of the EuroFlow consortium, describes the design and validation of an automated gating and identification (AGI) tool for MRD analysis in B-ALL patients using two tubes from the EuroFlow 8-color B-ALL MRD panel. This is similar to the tools the consortium previously developed for acute leukemia and multiple myeloma (Flores-Montero et al., 2017; Lhermitte et al., 2021). The AGI software compares, in multidimensional space, the fluorescence intensities of population clusters in unknown samples with those of normal cells stored in the Infinicyt database. It joins a growing list of reports exploring the use of machine learning to assist in the classification of B-ALL and other B-cell malignancies (Awais et al., 2024; Dinalankara et al., 2024; Shopsowitz et al., 2022). The authors' algorithm attempts to phenotypically match each cluster of cells with a normal cell population in the database. Any cluster that cannot be directly assigned to one of the predefined normal cell populations is labeled with the population it most closely resembles and designated as a group to manually “check.” In the final step, a human expert reviews the check populations defined by the AGI tool and assigns each to either a normal or an abnormal population.
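
The cluster-to-reference logic described above can be sketched as nearest-neighbor matching with a rejection threshold. This is a toy under stated assumptions: the marker set, the reference centroids, the use of Euclidean distance, and the 0.2 cutoff are all hypothetical, and the real AGI tool compares clusters against the Infinicyt normal database using its own criteria.

```python
# Hypothetical sketch of "match to nearest normal reference, else flag for
# review". Euclidean distance on cluster centroids and a fixed cutoff are
# illustrative assumptions, not the AGI tool's actual matching criteria.
import math
from typing import Dict, Sequence, Tuple


def match_clusters(
    clusters: Dict[str, Sequence[float]],
    reference: Dict[str, Sequence[float]],
    max_distance: float,
) -> Dict[str, Tuple[str, bool]]:
    """Map each cluster to (closest reference population, needs_manual_check)."""
    result: Dict[str, Tuple[str, bool]] = {}
    for cluster_id, centroid in clusters.items():
        name, dist = min(
            ((ref_name, math.dist(centroid, ref_centroid))
             for ref_name, ref_centroid in reference.items()),
            key=lambda pair: pair[1],
        )
        # Too far from every normal population: keep the closest label but flag it.
        result[cluster_id] = (name, dist > max_distance)
    return result


# Toy normalized intensities for (CD19, CD10, CD34, CD20); values are invented.
reference = {
    "mature B cells": (0.9, 0.1, 0.0, 0.9),
    "B-cell precursors": (0.8, 0.8, 0.6, 0.2),
    "T cells": (0.0, 0.0, 0.0, 0.0),
}
clusters = {
    "c1": (0.88, 0.12, 0.02, 0.85),  # close to mature B cells
    "c2": (0.8, 0.95, 0.9, 0.0),     # aberrant precursor-like phenotype
}
assignments = match_clusters(clusters, reference, max_distance=0.2)
```

Keeping the closest label on flagged clusters matches the described behavior of labeling a “check” group with the population it most resembles, leaving the final normal/abnormal call to the expert.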

Their validation set consisted of 174 data files, acquired on either a FACSCanto or a FACSLyric, from patients treated with chemotherapy or targeted therapies. Of these, 103 samples were considered MRD positive by manual analysis. In every patient with MRD, the AGI tool identified the abnormal events and placed them into a check population, confirming the tool's high sensitivity. The tool correctly classified abnormal cells even in patients receiving CD19-targeted therapies by using alternative antigens to identify B cells (Chen et al., 2023; Mikhailova et al., 2022). Of the 55 negative cases, the manual and AGI approaches agreed on 39 (71%). A further 14 samples were scored positive by manual analysis but negative by the AGI tool. The 30 (17%) discordant cases were reanalyzed by four blinded experts and by the AGI tool, and a consensus was reached for all but 6 cases (97% concordance). Overall, these data indicate that MRD assessment was comparable between manual analysis and the AGI tool. Still, the study underscores that, as others have found, appropriate training is essential for the correct analysis of MRD data (Boris et al., 2024).
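
The percentages quoted in this paragraph use different denominators, which is easy to miss. A short check using only the counts stated in the text makes each denominator explicit:

```python
# Reproduce the reported percentages from the counts stated in the text,
# making each denominator explicit.
def pct(part: int, whole: int) -> float:
    return 100.0 * part / whole


total_files = 174
negative_agreement = pct(39, 55)                  # agreement among the 55 negative cases
discordant_rate = pct(30, total_files)            # initially discordant cases, of all files
post_review = pct(total_files - 6, total_files)   # all but 6 cases reached consensus
```

Rounded to whole numbers these give 71%, 17%, and 97%, matching the reported figures.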

In clinical cytometry, the detection and monitoring of chronic lymphocytic leukemia (CLL) remain pivotal for assessing patient prognosis and treatment efficacy. The work presented by Bazin et al. marks a significant advance in this field. The authors evaluated DeepFlow, an AI-assisted multiparameter flow cytometry (MFC) analysis software capable of automated clustering and identification of cell populations. To do so, they used manually gated FCS files from a training set to build an AI model in DeepFlow. This AI model was then applied to a validation set to evaluate the algorithm's ability to perform automated CLL MRD analysis. Bazin et al. report that, within the validation group, the AI-enhanced analysis correctly classified 96% of cases as MRD-negative or MRD-positive. In a few cases, such as CLL with trisomy 12, the AI model deviated from the expert analysis. Previous studies have shown the importance of accurate CLL detection and the role of flow cytometry in treatment monitoring (Wierda et al., 2021; Wierda et al., 2022). Building on this, the integration of AI offers a novel approach that could streamline the diagnostic process and provide greater precision. As clinical cytometry continues to evolve, studies like this pave the way for more innovative and effective diagnostic tools.

In their retrospective study “A lasso and random forest model using flow cytometry data identifies primary myelofibrosis,” Zhang and colleagues investigated the use of immunophenotypic aberrations as a diagnostic tool for differentiating Philadelphia chromosome-negative (Ph-negative) myeloproliferative neoplasms (MPNs) in 211 patients with essential thrombocythemia (ET), polycythemia vera (PV), or primary myelofibrosis (PMF), including prefibrotic/early PMF (pre-PMF) and overt fibrotic PMF (overt PMF). The authors identified PMF with a sensitivity and specificity of 90% using a lasso and random forest model based on five variables: CD34+CD19+ cells and CD34+CD38− cells among CD34+ cells, CD13dim+CD11b− cells among granulocytes, CD38str+CD19+/− plasma cells, and CD123+HLA-DR− basophils. Additionally, Zhang et al. generated a classification and regression tree model to distinguish ET from pre-PMF, with accuracies of 94.3% and 83.9%, respectively. The study highlights the potential of flow cytometry as a diagnostic tool for classifying subtypes of Ph-negative MPNs, which can be challenging to differentiate using traditional methods. The use of specific immunophenotypic markers and advanced modeling techniques enhances diagnostic accuracy and can lead to better patient management.
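
For readers less familiar with classification trees, the core step of CART is a greedy search for the threshold that best separates the classes, scored by weighted Gini impurity; a full tree repeats this search within each resulting branch. The marker and values below are invented for illustration and do not reproduce Zhang et al.'s model or its variables.

```python
# Minimal sketch of the split search CART uses for classification: try each
# midpoint between sorted feature values and keep the one with the lowest
# weighted Gini impurity. A full tree applies this recursively to each child.
from collections import Counter
from typing import Optional, Sequence, Tuple


def gini(labels: Sequence[str]) -> float:
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(values: Sequence[float], labels: Sequence[str]) -> Tuple[Optional[float], float]:
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best_threshold: Optional[float] = None
    best_impurity = gini([lab for _, lab in pairs])  # impurity with no split
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate identical values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        impurity = len(left) / n * gini(left) + len(right) / n * gini(right)
        if impurity < best_impurity:
            best_threshold, best_impurity = threshold, impurity
    return best_threshold, best_impurity


# Invented values for one hypothetical marker (% of a gated population).
marker = [2.0, 3.1, 2.5, 4.0, 6.5, 7.2, 5.9, 8.1]
diagnosis = ["ET"] * 4 + ["pre-PMF"] * 4
threshold, impurity = best_split(marker, diagnosis)
```

On this toy data the best split falls midway between 4.0 and 5.9 and separates the two groups perfectly; real data rarely split this cleanly, which is why trees grow several levels deep.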

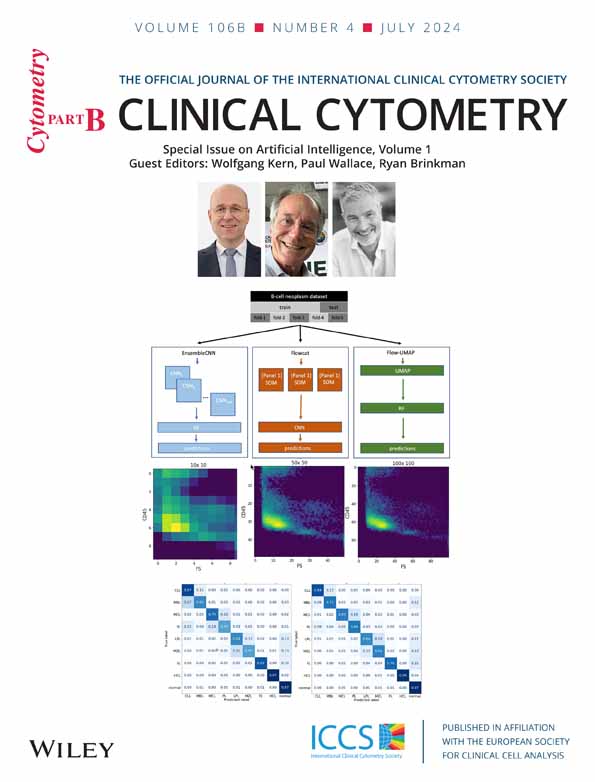

In the article “Comparison of three machine learning algorithms for classification of B-cell neoplasms using clinical flow cytometry data” from Dinalankara et al., researchers evaluated the effectiveness of three AI algorithms (Flowcat, EnsembleCNN, and UMAP-RF) in classifying B-cell neoplasms using flow cytometry data. EnsembleCNN showed accuracy similar to Flowcat but trained faster, whereas UMAP-RF lagged in both speed and accuracy. The study highlighted the potential of these algorithms to enhance diagnostic accuracy in flow cytometry analysis, possibly even replacing a second hematopathology reviewer in the future. Despite some misclassifications, the algorithms showed promise in predicting diagnoses from flow data. Further refinements, such as incorporating Shapley Additive Explanations (SHAP) to better identify the features driving each classification, and applying transfer learning or clustering approaches such as FlowSOM, could improve their performance. The algorithms' ability to classify various specimen types, given sufficient training data, suggests their versatility and potential for broader application in hematopathology, pending further validation.
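
SHAP attributes a single prediction to its input features using Shapley values from cooperative game theory. The minimal sketch below computes them exactly for a toy three-feature model against one baseline vector; the model, features, and baseline are hypothetical and unrelated to Flowcat, EnsembleCNN, or UMAP-RF, and the SHAP library itself uses approximations and background data sets rather than this brute-force enumeration.

```python
# Exact Shapley values by enumerating all feature coalitions. Features outside
# a coalition are set to a single baseline vector (one of several possible
# value functions). Cost grows as 2**n, so this only suits a handful of
# features; the SHAP library uses approximations for real models.
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[List[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Coalition members keep their observed values; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Toy "classifier score" over three hypothetical marker features, with an
# interaction between features 0 and 2.
def toy_model(z: List[float]) -> float:
    return 2.0 * z[0] + 1.0 * z[1] + z[0] * z[2]


x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

Here the interaction term is split equally between the two features involved, giving attributions of about 2.5, 1.0, and 0.5, and the attributions sum to the difference between the prediction and the baseline prediction.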

AI tools are revolutionizing diagnostic medicine through the creation of new algorithms and applications. These innovations aim to digitize and integrate data, streamline workflows, and enhance quantitative measurements and result interpretation. However, flow cytometry has been slow to cross the “AI Chasm,” a translational gap that prevents the implementation of medical software in diagnostic practice. Algorithms are frequently trained and validated on selected data sets, which hampers their generalizability and portability. In their article titled “Translating the regulatory landscape of medical devices to create fit-for-purpose artificial intelligence (AI) cytometry solutions,” Bogdanoski et al. introduce the European Union (EU) and United States (US) regulatory frameworks. Their effort seeks to foster interdisciplinary dialogue among cytometry software developers, computational scientists, researchers, industry professionals, diagnostic physicians, and pathologists. Their work emphasizes the significance of regulatory compliance in developing reliable AI cytometry solutions and highlights the different challenges faced by laboratory-developed tests (LDTs) and by software code used in discovery settings. They note that the lack of guidance or examples on how to create fit-for-purpose cytometry AI solutions that comply with regulatory requirements reflects the disconnect between developers, researchers, regulators, and end users. Independent of the origin and purpose of a software tool, the authors stress that successful clinical implementation of any AI tool in cytometry requires interdisciplinary collaboration with knowledge transfer, data sharing, careful consideration of risk assessments and outcomes, and continuous development and revision of policies and standards.

In “Automation in Flow Cytometry: Guidelines and Review of Systems,” Al-Attar et al. reviewed the latest sample preparation instruments from Beckman Coulter, Sysmex, and BD. The CellMek SPS was identified as a programmable instrument for automating sample preparation tasks in flow cytometry, including staining, RBC lysis, and cell washing. Applications that stood out included intracellular staining and other liquid-handling processes, enhancing laboratory efficiency and accuracy. The authors noted that its key features include barcode tracking, flexible programming, and network connectivity, which together significantly reduce hands-on time. When used with appropriate reagent management and careful attention to processing parameters, it was found to be a highly beneficial tool for contemporary clinical laboratories.

The authors' review of the Sysmex PS-10 Sample Preparation System noted that it automates processes such as staining, RBC lysis, and antibody cocktailing for flow cytometry applications, including intracellular staining. It can load up to 50 specimen tubes using Sysmex hematology specimen racks. The authors highlighted advantageous features such as dual probes for efficient pipetting, barcode tracking, and a touchscreen workstation for comprehensive control. The PS-10 integrates with laboratory information systems (LIS) for patient data and test orders, and it offers customizable protocols, including wash steps with a benchtop cell washing centrifuge. Its robust audit trail and tracking system ensure thorough documentation of all operations. The system can also be paired with the Helmer UltraCW®II Cell Washing System to add lyse-wash capability. Although occasional minor software issues were experienced, the authors found the PS-10 to be reliable, with the potential to significantly reduce hands-on time for sample preparation in clinical settings.

The BD FACSDuet™ Sample Preparation System was found to automate pipetting and antibody preparation for high-volume flow cytometry labs, handling up to 120 specimens per run. Key features identified by the authors included barcode tracking, the ability to prepare antibody cocktails, and, like the Sysmex system, cross-vendor reagent support. The system lacks built-in lyse/wash capability but offers customizable protocols. It integrates with the BD FACSLyric and LIS via BD FACSLink for streamlined workflows. Although reliability issues such as software crashes and mechanical failures occurred, BD provided prompt support. Overall, the FACSDuet was found to enhance lab efficiency and reduce manual errors. None of the reviewed systems was seen as a fully stand-alone solution, and further improvements to design, function, and software were identified as necessary. Nevertheless, all of them automate many tasks typically performed by medical laboratory scientists, saving significant hands-on time despite taking longer overall. This allows laboratory staff to focus on the more analytical aspects of flow cytometry testing.

In conclusion, the articles featured in this issue provide critical insights into the integration of AI with flow cytometry for hematology diagnostics. This combination of AI and flow cytometry is poised to transform the detection, classification, and monitoring of hematological diseases by enhancing diagnostic precision and efficiency. As we continue to explore and expand the capabilities of AI within this field, the prospects for early detection, accurate disease characterization, and improved patient management are highly promising. These developments underscore the pivotal role of AI in advancing hematology diagnostics and optimizing treatment strategies.