MAGIC-DR: An interpretable machine-learning guided approach for acute myeloid leukemia measurable residual disease analysis

Abstract

Multiparameter flow cytometry is widely used for acute myeloid leukemia measurable residual disease (AML MRD) testing but is time consuming and demands substantial expertise. Machine learning offers potential advancements in accuracy and efficiency, but has yet to be widely adopted for this application. To explore this, we trained single cell XGBoost classifiers from 98 diagnostic AML cell populations and 30 MRD negative samples. Performance was assessed by cross-validation. Predictions were integrated with UMAP as a heatmap parameter for an augmented/interactive AML MRD analysis framework, which was benchmarked against traditional MRD analysis for 25 test cases. The results showed that XGBoost achieved a median AUC of 0.97, effectively distinguishing diverse AML cell populations from normal cells. When integrated with UMAP, the classifiers highlighted MRD populations against the background of normal events. Our pipeline, MAGIC-DR, incorporated classifier predictions and UMAP into flow cytometry standard (FCS) files. This enabled a human-in-the-loop machine learning guided MRD workflow. Validation against conventional analysis for 25 MRD samples showed 100% concordance in myeloid blast detection, with MAGIC-DR also identifying several immature monocytic populations not readily found by conventional analysis. In conclusion, integrating a supervised classifier with unsupervised dimension reduction offers a robust method for AML MRD analysis that can be seamlessly integrated into conventional workflows. Our approach can support and augment human analysis by highlighting abnormal populations that can be gated on for quantification and further assessment. This has the potential to speed up MRD analysis and to improve detection sensitivity for certain AML immunophenotypes.

1 INTRODUCTION

Acute myeloid leukemia (AML) is an aggressive hematological malignancy with considerable genetic and immunophenotypic heterogeneity. Although AML is often fatal, there has been recent progress in several areas of AML care including diagnostic testing, risk stratification, and treatment. One such example is the emergence of measurable residual disease (MRD) testing, which uses highly sensitive laboratory techniques to detect minute quantities of persistent disease after the initial phases of treatment. Whether or not a patient is MRD positive or negative can have important implications for prognosis, treatment, and clinical trial eligibility (Heuser et al., 2021; Jongen-Lavrencic et al., 2018). Multiparameter flow cytometry (MFC) is commonly used for MRD detection. This works by identifying abnormal populations of leukemic cells through their aberrant protein expression patterns, which can differ from normal in myriad ways (Schuurhuis et al., 2018). Analyzing AML MRD data is challenging due to AML's heterogeneity along with ongoing immunophenotypic shift of the leukemic blasts that can occur over time. Using MFC to identify residual AML therefore requires detailed knowledge of marker expression patterns on hematopoietic precursor subsets and the changes that occur during normal maturation and leukemogenesis. It further requires awareness of technical caveats, which can be particular to each MFC assay panel, in order to distinguish rare residual malignant cells from artifactual signals arising from imperfect compensation, signal spillover/spreading, autofluorescence, and so forth (Wood, 2020). Analyzing and interpreting AML MRD flow data, which often involves meticulously reviewing up to one hundred biplots, is thus arguably one of the most challenging and time-consuming tasks in the clinical flow cytometry lab (Wood, 2020).

Computer-assisted automated analysis and machine-learning techniques in MFC applications have emerged in recent years, providing novel ways to analyze increasingly complex flow cytometry data (Guldberg et al., 2023; Montante & Brinkman, 2019). These can take advantage of unsupervised and supervised machine learning approaches. Examples of unsupervised machine learning include dimensionality reduction techniques and clustering, which can aid in visualizing complex multidimensional data patterns and facilitate automated gating strategies. Supervised machine learning techniques can be used to classify different cell types at the individual event level in the clinical flow cytometry domain: different approaches ranging from traditional machine-learning classifiers such as support vector machines or Gaussian mixture models, to clustering algorithms have been reported (Reiter et al., 2019; Shopsowitz et al., 2022; Vial et al., 2021; Weijler et al., 2022). More recently, a multi-label deep neural network method was reported for classifying abnormal B cells as well as other normal cell types in peripheral blood and bone marrow specimens (Salama et al., 2022). XGBoost is a scalable supervised machine learning algorithm that employs a gradient-boosting framework to capture complex data patterns, including interactions between multiple predictive variables, and has proven highly effective as a classifier in many domains (Chen & Guestrin, 2016). However, relying solely on the predictions of even highly accurate machine learning classifiers is problematic in the context of MRD analysis since even very low-level false positive events are unacceptable. More importantly, the raw predictions from a supervised classifier do not provide information regarding the clustering properties of a suspicious population or its immunophenotype, both of which are important to consider when assessing MRD.

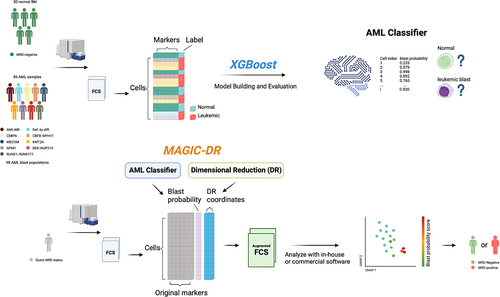

In the present study, we aimed to construct an interpretable human-in-the-loop machine learning framework for AML MRD analysis by integrating complementary features of modern supervised and unsupervised techniques. We found that XGBoost classifiers, when trained on a comprehensive labeled dataset, could reliably identify immunophenotypically diverse AML blasts/promonocytes with high accuracy. By superimposing XGBoost probability scores on Uniform Manifold Approximation and Projection (UMAP) plots (McInnes et al., 2018), we created a process for directly visualizing, gating on, and verifying the model predictions. When tested on real-world cases, this novel hybrid approach was simple to apply and yielded accurate AML MRD assessments, paving the way to streamlined analysis of increasingly complex MFC assays in the clinical labs.

2 METHODS

2.1 Cohort

The study was approved by The University of British Columbia Ethical Review Board (REB). Data from samples collected between December 2020 and April 2023 with flow cytometry run as part of routine clinical tests were retrospectively analyzed. Eighty-six samples with flow cytometry run at initial diagnosis (bone marrow aspirate or peripheral blood) were included to provide abnormal blast populations for model training. These were identified by an initial data accrual and exploratory analysis phase, after which we sought out additional samples from WHO categories that were underrepresented in our training dataset (fewer than 10 populations based on WHO 5th edition) (Khoury et al., 2022) in order to maximize immunophenotypic heterogeneity. Thirty post-therapy bone marrow aspirate samples with flow cytometry data that were confirmed to be MRD negative by validated flow cytometry-based MRD or by molecular testing (minimum 4-log reduction from baseline) were included to provide normal events for model training and cross-validation. In addition, 13 MRD positive and 12 MRD negative posttreatment bone marrow aspirate samples were identified by conventional MFC MRD and used for final evaluation of the analysis pipeline.

2.2 Flow cytometry assay

Diagnostic and post-therapy AML bone marrow aspirate or peripheral blood samples were collected, bulk lysed, and stained at room temperature for 15 min with an in-house developed 3-tube acute leukemia (ACL)/AML-MRD panel including the following markers: CD2, CD4, CD7, CD10, CD11b, CD13, CD14, CD15, CD19, CD22, CD33, CD34, CD36, CD38, CD45, CD56, CD64, CD117, CD123, CD133, HLA-DR (see Table S1). Samples were acquired on two BD FACSLyric flow cytometers (BD, San Jose, CA, USA), with regular inter-instrument quality controls performed as routine.

2.3 Data pre-processing, software, and hardware used

FCS3.0 files exported from the BD FACSLyric instruments were loaded into FlowJo v10.7 (BD, Ashland, OR, USA) and pre-gated to remove doublets and debris. Leukemic (blast or promonocyte) populations to be used in the training set were gated on and exported as individual FCS3.0 files from 86 samples collected at initial AML diagnosis (care was taken to exclude residual normal events). Abnormal promonocytic populations were first identified with tube 3, which contains key monocytic markers including CD64 and CD14, and then identified in the other tubes based on shared expression of backbone markers such as CD33, CD13, and HLA-DR. Normal events included in the training data set from 30 MRD negative posttreatment samples were gated on with a CD45 versus side scatter (SSC) strategy capturing all monocytes and a small part of mature myeloid and lymphoid cells (extended blast gate). Events within the extended blast gate positive for CD34 and/or CD117 were then gated on and exported separately from CD34/CD117 negative events within the extended blast region to facilitate their enrichment in the training set. MRD positive cases used for validation were gated using a CD45 versus SSC-A extended blast gate without any further gating applied. All downstream analyses were subsequently performed in R (v3.6.3) or Python (v3.6). Daily quality control was performed following the default settings provided in the FACSuite software (BD, San Jose, CA, USA). No batch normalization was performed. Infinicyt v2.0.6 software (Cytognos, Salamanca, Spain) was used for performing manual analysis. Model training and evaluation were carried out using a Lenovo computer with Intel CORE vPRO i9 CPU, 32 GB RAM, and NVIDIA GeForce RTX 3080 Laptop GPU. Scripts used for data processing and training models, as well as the trained models, are available at https://github.com/UBCFlowInformaticsGroup/MAGIC-DR. The anonymized flow cytometry data are available upon request, subject to UBC REB approval.

2.4 Building and evaluating an AML cell-level classifier using XGBoost

XGBoost uses gradient boosting, whereby an ensemble model is produced by sequentially adding decision trees until no further improvements can be made. As a supervised machine learning technique, XGBoost classifiers require ground truth labels during the initial training phase. Our approach for applying XGBoost to MRD analysis was to train an XGBoost classifier that could predict whether individual cells are either normal events or abnormal MRD events. The requirements for this type of training data are a feature matrix for each tube where each row corresponds to a single flow cytometry event and each column is a feature to be used for predictions (i.e., the measured flow cytometry parameters from each tube). In addition, there must be a vector with accurate labels for each event (normal or abnormal, where in this case abnormal corresponds to an immature leukemic cell). Our training dataset was compiled with the objective of balancing abnormal AML events possessing diverse immunophenotypes with normal events encompassing the expected cell types to be encountered during actual MRD analysis. To achieve this, events labeled as normal were assembled from the extended blast regions of the 30 confirmed MRD negative cases. These were enriched for the relatively rare normal precursors by including all of the CD34 and/or CD117 positive events while subsampling CD34/CD117 negative events (10,000 from each sample) to reduce the number of mature granulocytes and monocytes. For the abnormal events, 6000 events were subsampled from each of 98 leukemic populations from 86 distinct patients from data collected at the time of initial diagnosis, with care taken to exclude residual normal events (rarely, populations had fewer than 6000 events, in which case all events were included). Features used in the model training included all available flow cytometry markers as well as the SSC parameter.
XGBoost hyperparameters were optimized for each tube using a Bayesian grid search over the parameter space with sample stratified 10-fold cross-validation (sample stratification was used to account for the correlation of expression patterns expected for events gated from the same patient/cell population). The tree_method was set to “gpu_hist” to speed up model training. As an example, training the tube 1 model with a 1,186,725 × 12 training matrix, a hyperparameter space containing 144 possible permutations, and 10-fold cross-validation took 21.75 min (training a single model with default parameters/no cross-validation took only 1.25 s). This procedure resulted in a similar set of optimized hyperparameters for all three tubes (any not listed were left as the default setting): max_depth = 10, colsample_bytree = 0.6, gamma = 1, eta = 0.1, min_child_weight = 1, n_estimators = 200 (165 for tube 2 models). Refer to the XGBoost Parameters page of the xgboost 2.0.2 documentation for detailed definitions.
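
The sample-stratified cross-validation described above can be sketched as a grouped fold assignment. This is a minimal illustration using only the standard library, and it assumes "sample stratified" means that all events from one patient/cell population stay in the same fold. The helper names `grouped_folds` and `train_test_indices` are hypothetical and are not taken from the MAGIC-DR scripts. The dictionary simply restates the reported hyperparameters, and `learning_rate` is used as the scikit-learn style name for `eta`.

```python
import random

# Hyperparameters reported in the text (tube 2 models used n_estimators = 165).
# Assumption: with xgboost installed, these could be passed to
# xgboost.XGBClassifier(**XGB_PARAMS); that call is not exercised here.
XGB_PARAMS = {
    "max_depth": 10,
    "colsample_bytree": 0.6,
    "gamma": 1,
    "learning_rate": 0.1,  # "eta" in the text
    "min_child_weight": 1,
    "n_estimators": 200,
    "tree_method": "gpu_hist",
}


def grouped_folds(group_ids, n_folds=10, seed=0):
    """Assign every event to a fold so that events sharing a group id
    (one patient sample or gated population) never span two folds."""
    groups = sorted(set(group_ids))
    rng = random.Random(seed)
    rng.shuffle(groups)
    fold_of_group = {g: i % n_folds for i, g in enumerate(groups)}
    return [fold_of_group[g] for g in group_ids]


def train_test_indices(folds, held_out):
    """Split event indices into training and held-out sets for one fold."""
    train = [i for i, f in enumerate(folds) if f != held_out]
    test = [i for i, f in enumerate(folds) if f == held_out]
    return train, test


# Toy example: 25 hypothetical populations with 4 events each.
group_ids = [f"pop{p}" for p in range(25) for _ in range(4)]
folds = grouped_folds(group_ids, n_folds=10)
train_idx, test_idx = train_test_indices(folds, held_out=0)
```

Grouping by population keeps correlated events out of both sides of a split. Note that this sketch does not balance normal and abnormal events across folds.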

To assess model performance/generalizability on the distinct blast populations present in the training dataset, a leave-one-population-out cross-validation approach was employed (Chulián et al., 2020). In each run, one leukemic population along with 20,000 randomly selected normal events were held out and models for each tube were trained using the remaining held in events. Performance metrics such as the area under the precision recall curve, area under the receiver operating characteristic curve (AUC), median probability output of known abnormal events, and F1 score were calculated for each leukemic population by making predictions on the held out events. The most important feature for making predictions for each held out abnormal population was calculated using permutation importance from scikit-learn (with AUC as metric) and the SHapley Additive exPlanations (SHAP) method (ranked based on median score for each abnormal cell population) (Lundberg & Lee, 2017). Both methods have been reported in the literature as useful tools for understanding the contribution of individual features to predictions made by complex/nonlinear models including XGBoost (Cava et al., 2019). Briefly, permutation feature importance is determined by randomly shuffling each feature one at a time and comparing the model performance before and after shuffling (a greater decrease in performance after shuffling implies greater feature importance). SHAP scores, on the other hand, use concepts from cooperative game theory to provide a calculation of each feature's contribution to individual model predictions. For a known abnormal flow cytometry event (in this context blasts), the marker with the highest positive SHAP score can be thought of as the marker that the model considers to be most aberrant for the event (by extension, the marker with the greatest median SHAP score for an abnormal population can be thought of as the marker that the model considers most aberrant for the population).
UMAP plots were generated in each run to visualize the clustering attributes of model predictions. To evaluate performance on MRD-negative cases, a leave-one-sample-out approach was utilized with the model trained on the entire training set except for the query negative case, with predictions then made on all of the extended blast gate events for the held out negative case.
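
The permutation importance idea described above can be illustrated with a small standard-library sketch. It is not the scikit-learn implementation used in the study. The toy scoring function `toy_model` and the synthetic two-feature data are assumptions that stand in for a trained classifier and real held-out events.

```python
import random


def roc_auc(labels, scores):
    """ROC AUC via the rank-sum identity, using average ranks for ties."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum_pos = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Mean drop in AUC after shuffling each feature column in turn."""
    rng = random.Random(seed)
    baseline = roc_auc(y, [predict(row) for row in X])
    importances = []
    for col in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in X]
            rng.shuffle(shuffled)
            X_perm = [row[:col] + [v] + row[col + 1:] for row, v in zip(X, shuffled)]
            drops.append(baseline - roc_auc(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical stand-in for a classifier: the score is feature 0 only."""
    return row[0]


# Synthetic data: feature 0 separates the classes, feature 1 is pure noise.
data_rng = random.Random(1)
y = [0] * 200 + [1] * 200
X = [[data_rng.gauss(2.0 * label, 1.0), data_rng.gauss(0.0, 1.0)] for label in y]
imp = permutation_importance(toy_model, X, y)
```

Because the toy model ignores feature 1, shuffling that column leaves the AUC unchanged, while shuffling feature 0 reduces the AUC toward chance level.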

2.5 MRD analysis guided by integrated classifier and dimensionality reduction

To incorporate machine learning predictions into an MRD workflow that can be applied to clinical cytometry workflows, we developed a pipeline termed MAGIC-DR, which integrates an XGBoost-based AML classifier with UMAP for dimensionality reduction. UMAP was selected for its effectiveness at visualizing continuous maturation pathways of progenitors within our collected data along with its relative speed/scalability compared to other nonlinear techniques such as t-SNE. The final XGBoost model for each tube was used to make predictions on extended blast events gated from the query case, which were output as a probability score. The time for an XGBoost model to make predictions on a typical case with ~85,000 extended blast events was 62 ms. The UMAP coordinates for each query case were then calculated by merging events from the case's extended blast region with 100,000 known normal events sampled from the training data (included to help anchor the positions of the UMAP output). The following parameters were used for the UMAP calculations: n_neighbors (number of neighbors) = 20, min_dist (minimum distance) = 0.5, maxiter (maximum number of iterations) = 500 (see the Basic UMAP Parameters page of the umap 0.5 documentation at umap-learn.readthedocs.io). The time for computing the UMAP coordinates on the same case was 4.6 min for one tube; therefore, approximately 14 min are required to process all three tubes for a given sample (note that this is without graphics processing unit (GPU) acceleration of UMAP, which would be expected to provide a considerable speed-up). The resulting coordinates, along with XGBoost model predictions output as probability scores, were appended to the original FCS3.0 files for subsequent evaluation.
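
The anchoring and append steps can be sketched as follows. This is an illustrative outline only. The functions `embed_with_anchors` and `append_parameters` are hypothetical names, the embedding is passed in as a generic callable, and a trivial stand-in (`first_two_features`) is used so the example runs without extra libraries. In practice the callable might wrap something like `umap.UMAP(n_neighbors=20, min_dist=0.5, n_epochs=500).fit_transform`, under the assumption that the reported maxiter setting corresponds to `n_epochs` in umap-learn.

```python
import random


def embed_with_anchors(query_events, normal_pool, embed, n_anchor=100_000, seed=0):
    """Embed query events together with sampled known-normal anchor events.

    `embed` is any callable mapping a list of feature rows to a list of
    (x, y) coordinates. Only the coordinates of the query events are
    returned, in their original order.
    """
    rng = random.Random(seed)
    n_anchor = min(n_anchor, len(normal_pool))
    anchors = rng.sample(normal_pool, n_anchor)
    merged = list(query_events) + anchors
    coords = embed(merged)
    return coords[: len(query_events)]


def append_parameters(events, coords, probabilities):
    """Return event rows extended with UMAP x, UMAP y, and classifier probability."""
    return [list(row) + [x, y, p] for row, (x, y), p in zip(events, coords, probabilities)]


def first_two_features(rows):
    """Hypothetical stand-in for a real dimensionality reduction."""
    return [(row[0], row[1]) for row in rows]


query = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
normal_pool = [[0.0, 0.0, 0.0] for _ in range(5)]
coords = embed_with_anchors(query, normal_pool, first_two_features)
augmented = append_parameters(query, coords, [0.9, 0.1])
```

Placing the query events first in the merged matrix makes it easy to discard the anchor coordinates before the new parameters are written back to the file.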

For MRD analysis and quantification, 25 test cases (13 positive and 12 negative) were processed using MAGIC-DR, randomized and loaded into FlowJo for blinded analysis. Model probabilities were displayed as a heatmap parameter overlay on the UMAP plot for each tube in the panel to enable the identification of suspicious populations with elevated blast probabilities predicted by the XGBoost models. Suspicious populations were gated on from the UMAP plots for each tube in order to quantify the median blast probability, number of events, and assess the immunophenotype. Cases were deemed positive for residual disease if a cluster of at least 50 events exhibited a median abnormal probability greater than 85% in at least one tube of the panel, which for monocytic populations had to include tube 3 and for CD34+ precursors had to include tube 1 or 2, and the immunophenotype was compatible with a myeloid blast or promonocyte population (cutoff rules were determined empirically during the cross-validation phase with known positive and negative cases).
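
The positivity rule above can be written as a short function. This is a sketch under stated assumptions: the lineage labels (`"monocytic"`, `"cd34_precursor"`) and the function names are hypothetical, and the immunophenotype check is reduced to a boolean supplied by the analyst because that judgment is made by a human reviewer.

```python
from statistics import median

MIN_EVENTS = 50          # minimum cluster size stated in the text
MIN_MEDIAN_PROB = 0.85   # median abnormal probability must exceed this value


def tube_flags(cluster_probs_by_tube):
    """Return the set of tubes in which the gated cluster meets both cutoffs."""
    return {
        tube
        for tube, probs in cluster_probs_by_tube.items()
        if len(probs) >= MIN_EVENTS and median(probs) > MIN_MEDIAN_PROB
    }


def is_mrd_positive(cluster_probs_by_tube, lineage, phenotype_compatible):
    """Apply the cutoff rules to one suspicious population gated in tubes 1 to 3."""
    flagged = tube_flags(cluster_probs_by_tube)
    if not flagged or not phenotype_compatible:
        return False
    if lineage == "monocytic":
        return 3 in flagged               # monocytic calls must include tube 3
    if lineage == "cd34_precursor":
        return bool(flagged & {1, 2})     # CD34+ calls must include tube 1 or 2
    return True                           # other blasts: any flagged tube


# Toy cluster: large and high-scoring in tube 1 only.
cluster = {1: [0.90] * 60, 2: [0.95] * 10, 3: [0.20] * 80}
call_cd34 = is_mrd_positive(cluster, "cd34_precursor", phenotype_compatible=True)
call_mono = is_mrd_positive(cluster, "monocytic", phenotype_compatible=True)
```

In this toy case the cluster would count toward a CD34+ precursor call but not a monocytic call, because tube 3 does not meet the cutoffs.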

2.6 Statistical analysis

Statistical comparisons were all performed using R (v3.6.3). All summary statistics are reported as medians unless otherwise indicated in the text. Pairwise statistical comparisons were carried out by Mann–Whitney test due to lack of normality, with Bonferroni correction for multiple comparisons where applicable to correct the family-wise error rate (Wilcoxon signed rank test used when comparing the same samples across tubes). p values <0.05 were considered statistically significant. Correlation coefficients were calculated using the Spearman method. Bland–Altman analysis was used to compare MRD blast quantification between XGBoost-guided and manual methods.
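
Two of the calculations named above, Bonferroni correction and Bland–Altman analysis, are simple enough to sketch directly. The study performed these in R, so the Python below is only an illustration. The paired values are made-up toy numbers rather than study data, and the 1.96 multiplier assumes the conventional 95% limits of agreement.

```python
from statistics import mean, stdev


def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def bland_altman(method_a, method_b):
    """Return the bias and the lower and upper 95% limits of agreement."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Made-up paired MRD percentages from two hypothetical analysis methods.
guided = [1.0, 2.0, 3.0, 4.0]
manual = [0.5, 2.5, 2.5, 4.5]
bias, lower, upper = bland_altman(guided, manual)
adjusted = bonferroni([0.01, 0.04, 0.5])
```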

3 RESULTS

3.1 Phenotypic characterization of the training cohort

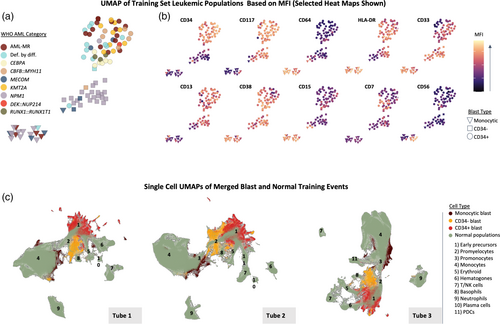

A schematic of our overall approach to machine learning guided AML MRD analysis is shown in Figure 1. We assembled a machine learning training data set from 98 diagnostic leukemic populations, spanning 9 AML categories as defined by the WHO's 5th edition criteria (Figure 1 and Table 1). These events were combined with normal events gated from 30 negative samples, which were collected at different posttreatment stages ranging from the end of induction to posttransplant, totaling approximately 1,200,000 events. For tube 1 there were 582,750 abnormal events and 603,975 normal events (303,975 CD34+ and/or CD117+; 300,000 CD34/CD117−). Similar breakdowns were achieved for the other two tubes. The training set contained diverse leukemia associated immunophenotypes (LAIPs): 54% of the abnormal populations were CD34+ myeloid blasts, 27% were CD34− myeloid blasts, and 19% had an immature monocytic phenotype. Aberrant cross-lineage marker expression was present in some populations: 15% expressed CD7, 9% expressed CD56, 2% expressed CD2, and 4% expressed CD19. Analyzing the median fluorescence intensities (MFI) of all markers revealed three major clusters of AML cell populations, which are visualized by UMAP in Figure 2a. With few exceptions, the major clusters are seen to correspond to the broad categories of CD34+ blasts, CD34− blasts, and immature monocytes. Distinct immunophenotypic variations were noted across the AML categories, consistent with previous reports. For example, NPM1 mutated populations were almost exclusively CD34−, whereas cases with CEBPA mutations and RUNX1::RUNX1T1 showed frequent aberrant CD7 and CD19 (data not shown), respectively (Figure 2a). There appeared to be differences in immunophenotypic heterogeneity across samples for different AML categories (Figure 2a).
This was quantitatively confirmed by comparing the mean distance from the MFI centroid for each WHO category, with significant trends found for both blast (p = 0.002) and monocytic populations (p = 0.02, see Figure S1).
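
The heterogeneity measure mentioned above, the mean distance from the MFI centroid, can be sketched as below. This is a minimal illustration that assumes plain Euclidean distance on whatever MFI values are supplied. The text does not state whether the values were transformed or scaled beforehand, and the function name is hypothetical.

```python
from math import sqrt
from statistics import mean


def mean_centroid_distance(mfi_rows):
    """Mean Euclidean distance of each population's MFI vector from the
    centroid of its category (one row per population, one column per marker)."""
    n_markers = len(mfi_rows[0])
    centroid = [mean(row[j] for row in mfi_rows) for j in range(n_markers)]
    distances = [
        sqrt(sum((row[j] - centroid[j]) ** 2 for j in range(n_markers)))
        for row in mfi_rows
    ]
    return mean(distances)


# Toy categories: identical populations versus two well-separated populations.
homogeneous = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
heterogeneous = [[0.0, 0.0], [2.0, 0.0]]
tight = mean_centroid_distance(homogeneous)
spread = mean_centroid_distance(heterogeneous)
```

A larger value indicates that the populations within a category are more dispersed in marker space.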

| AML category | CD34+ blasts (N) | CD34− blasts (N) | Monocytic (N) | All (N) |
|---|---|---|---|---|
| NPM1 | 1 | 21 | 10 | 32 |
| Defined by diff. | 11 | 1 | 1 | 13 |
| AML-MR | 14 | 1 | 4 | 19 |
| MECOM | 9 | 0 | 0 | 9 |
| KMT2A | 2 | 2 | 4 | 8 |
| CBFB::MYH11 | 6 | 1 | 0 | 7 |
| RUNX1::RUNX1T1 | 3 | 0 | 0 | 3 |
| CEBPA | 6 | 0 | 0 | 6 |
| DEK::NUP214 | 1 | 0 | 0 | 1 |
| Total | 53 | 26 | 19 | 98 |

To characterize the normal populations in the training set and their relationship to the AML populations, we applied UMAP at the single cell level with merged normal and abnormal events (Figure 2b). This delineated normal maturation trajectories, with a wide spectrum of cell types that include early hematopoietic stem cells, hematogones, and various mature myeloid and lymphoid populations (also see Figures S2–S4 for expression heatmaps of the normal events). The different types of AML populations separated from normal populations on the UMAP plots for all three tubes, highlighting the potential utility of UMAP for identifying abnormal cells when visualizing query MRD cases. As expected, CD34+ blasts overall were closest to the normal early precursor populations, CD34− blasts were closest to promyelocytes, and abnormal monocytic populations were closest to normal promonocytes. There were some differences in grouping behavior between the different tubes. For example, CD34+ blasts showed more heterogeneity and separation from normal precursors in tube 1 compared with other tubes, which may be due to tube 1 containing markers important in early maturation (e.g., CD38 and CD133) along with frequently aberrant markers (e.g., CD7 and CD56).

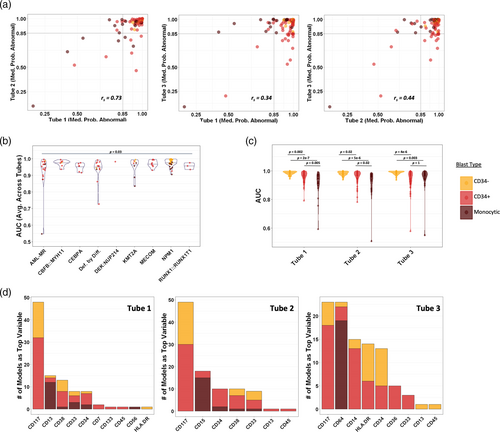

3.2 AML classifier performance across different AML populations

As the starting point for building a machine learning guided AML MRD analysis tool, we used the assembled data described above to train XGBoost based AML classifiers for each tube in our panel. To compare and evaluate performance of the classifiers for each abnormal population in our training dataset we used a leave-one-out cross-validation (LOOCV) strategy, where each abnormal population was sequentially held out of the model training. We compared the median probability scores (MPS) output by the machine learning models, which could potentially be used as a quantitative metric to assess suspicious cell populations in MRD analysis (i.e., the higher the score, the higher the likelihood of the population being abnormal). The cross-validated MPS output by the AML classifier for each abnormal population were generally high in all three tubes with interquartile ranges of 0.933–0.996 for tube 1, 0.951–0.994 for tube 2, and 0.899–0.996 for tube 3, indicating that our AML classifier generally identified true positive events with high confidence. By contrast, the MPS for known negative samples calculated by LOOCV had an interquartile range of 0.001–0.03 in all three tubes. All but one of the AML populations had an MPS above 0.5 in at least one tube. Even when the stringency was increased to a probability cutoff of 0.85 in at least one tube (~99th percentile of true negative probability scores), only two additional populations were missed, resulting in a 97% detection rate of the classifier. However, further increasing the probability cutoff to 0.95 led to a more substantial decrease in detection rate to 90%. In addition, requiring an MPS of 0.85 in all three tubes led to a considerably lower detection rate of 67%. This is highlighted graphically in Figure 3a, which shows the correlation of MPS between tubes for all populations.
The highest correlation was found for tube 1 versus tube 2 (rs = 0.73, p < 2 × 10⁻¹⁶), whereas lower correlations were seen between tube 1 versus tube 3 (rs = 0.34, p = 0.0008) and tube 2 versus tube 3 (rs = 0.44, p = 9 × 10⁻⁶). Notably, tubes 1 and 2 more often gave a high MPS for CD34+ blast populations compared with tube 3 with no CD34+ populations having MPS >0.85 in tube 3 only. The converse was true for monocytic populations with none having MPS >0.85 in tubes 1 and 2, but not tube 3. In contrast, all of the CD34− blast populations were identified with high confidence in all three tubes.
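
The effect of the cutoff and of the "at least one tube" versus "all three tubes" requirement can be sketched with a small helper. The function name and the four toy populations are illustrative assumptions and do not reproduce the study's MPS values.

```python
def detection_rate(mps_by_population, cutoff, require_all_tubes=False):
    """Fraction of populations whose per-tube MPS exceeds the cutoff in
    at least one tube (default) or in every tube."""
    combine = all if require_all_tubes else any
    detected = [
        combine(score > cutoff for score in scores) for scores in mps_by_population
    ]
    return sum(detected) / len(detected)


# Toy MPS values for (tube 1, tube 2, tube 3) in four hypothetical populations.
toy_mps = [
    (0.99, 0.98, 0.40),  # high in tubes 1 and 2 only
    (0.30, 0.45, 0.97),  # high in tube 3 only
    (0.96, 0.97, 0.99),  # high in all tubes
    (0.20, 0.10, 0.30),  # missed in all tubes
]
rate_any = detection_rate(toy_mps, cutoff=0.85)
rate_all = detection_rate(toy_mps, cutoff=0.85, require_all_tubes=True)
```

Requiring agreement across all tubes penalizes populations that only one tube's marker set can resolve, which matches the lineage pattern described above.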

To further quantify the classifiers' performance, we calculated cross-validated AUC for each of the 98 leukemic populations compared to the same held out subset of pooled normal events (calculated based on the precision-recall curve to account for class imbalance). We did not detect meaningful trends related to the date of sample collection on AUC over a 2 year period (see Figure S5). The median cross-validated AUC for each tube was consistently high at 0.973–0.974, indicating the AML classifiers could readily distinguish abnormal from normal events for most cell populations. Small differences in the median AUC were seen when comparing across the AML WHO categories as shown in Figure 3b (median AUC averaged across tubes ranged from 0.954 to 0.982, p = 0.03). The populations with the lowest AUC values were either monocytic or CD34+ blasts and tended to come from AML categories with greater immunophenotypic heterogeneity. The two individual populations with the lowest AUC scores in all three tubes were a monocytic population with expression of CD34 and variable CD14 (from a case of AML-MR) followed by a CD34 positive blast population with aberrant CD7 but completely absent CD117 (AML defined by differentiation). There was a significant trend in AUC when comparing across the major immunophenotypic categories of CD34+ blasts, CD34− blasts, and monocytic populations, which is highlighted in Figure 3c (see also Table S2). Performance was overall the best for CD34− blast populations, which had significantly higher AUC values than the other categories in all three tubes. Comparing between tubes, we found tube 3 performed significantly better with monocytic populations compared with the other tubes (p = 0.01 vs. tube 1, p = 0.003 vs. tube 2), with no significant differences found between tubes for CD34+ or CD34− blast populations.

To better understand the AML classifier behavior, we calculated feature importance scores for each held out leukemic population using the permutation importance method, with Figure 3d showing the distribution of the most important feature found for each population. Interestingly, aberrant cross-lineage markers were only rarely the top feature, with CD7 found to be most important for two populations and CD56 most important for one. On the other hand, markers that are important for delineating normal maturation were frequently most important. For example, CD117 was the most important feature for the greatest number of CD34+ and CD34− blast populations, whereas CD34 and CD38 were also frequently found to be most important. Notably for tube 3, CD64 was the most important feature in all except one of the monocytic populations (similar results were found using SHAP feature importance scores, see Figure S6). Given that CD64 was only present in tube 3, this may help explain why the tube 3 classifier overall performed the best at identifying the abnormal monocytic populations.

3.3 Integrated AML classifier and UMAP for AML MRD analysis

The XGBoost based AML classifiers performed well at distinguishing diverse AML blasts from normal events; however, there are several issues with using event-level predictions directly for MRD analysis: namely, the black box nature of predictions makes them difficult to verify and the classifier does not provide information on the clustering properties of events, which are of fundamental importance for interpreting MFC data. In addition, for MRD analysis even low-level false positive event predictions (i.e., on the order of 0.01%) can confound analysis. Highlighting this point, when the AML classifier predictions were made on each MRD negative sample in the training set using LOOCV, we found that false positive predictions (using the default predicted probability cutoff of 0.5) exceeded 0.1% (averaged across tubes) for all 30 samples with a median of 0.47%. Using a more stringent probability cutoff of 0.85 led to some improvement; however, the false positive predictions still exceeded 0.01% of WBCs for all 30 negative cases and were only below 0.1% in 15 out of 30 cases (median 0.11%).
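
The false-positive percentages quoted above are expressed relative to all white blood cells rather than to the gated events. A minimal sketch of that calculation is shown below. The function name and the toy numbers are assumptions for illustration only.

```python
def false_positive_percent(probabilities, total_wbc, cutoff=0.5):
    """Percentage of WBCs called abnormal in a known MRD-negative sample.

    `probabilities` holds classifier scores for the extended blast gate
    events; `total_wbc` is the total WBC count used as the denominator.
    """
    n_called = sum(p > cutoff for p in probabilities)
    return 100.0 * n_called / total_wbc


# Toy negative sample: 1,000 gated events out of 10,000 WBCs.
toy_probs = [0.9] * 5 + [0.6] * 10 + [0.1] * 985
fp_default = false_positive_percent(toy_probs, total_wbc=10_000)
fp_strict = false_positive_percent(toy_probs, total_wbc=10_000, cutoff=0.85)
```

Even a handful of scattered high-scoring events can exceed a 0.01% threshold, which is the reason event-level calls alone are hard to use for MRD reporting.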

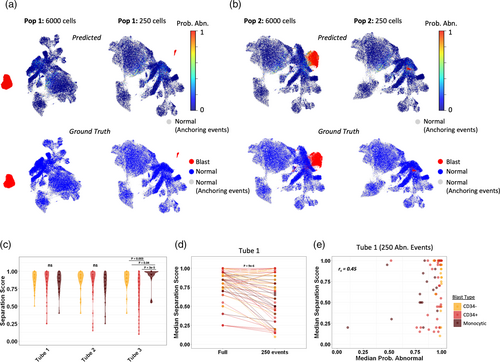

We reasoned that a more specific and interpretable approach would be to use the AML classifier predictions to highlight abnormal populations on UMAP and conventional 2D plots, which could leverage the intrinsic clustering of true positive populations and facilitate verification by conventional immunophenotypic analysis. To explore this, we overlaid LOOCV AML classifier probability scores as a color parameter on UMAP plots for all leukemic populations and MRD negative samples present in the training set. The AML classifier probability scores combined with UMAP clearly highlighted the vast majority of abnormal populations as regions with tightly grouped bright red events (i.e., uniformly high predicted probability of being abnormal) in at least one tube (Figure 4a,b). These were often well-separated from normal events on the UMAP plots, which we quantified using a nearest neighbor based separation score (Figure 4c). The separation score did not show any significant differences between blast types for tubes 1 and 2; however, in tube 3 CD34+ blasts showed significantly worse separation than the other populations, whereas monocytic blast populations showed significantly better separation than both CD34− and CD34+ blasts. Interestingly, the two populations with the worst XGBoost performance described above showed good separation on at least one of the UMAP plots despite not being highlighted by the XGBoost scores.
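
The text does not define the nearest neighbor based separation score, so the following is only one plausible formulation, stated as an assumption: for each abnormal event on the 2D embedding, take the fraction of its k nearest neighbors that are also abnormal, then average over abnormal events. The function name, the choice of k, and the brute-force search are all illustrative.

```python
from math import sqrt


def separation_score(abnormal_xy, normal_xy, k=5):
    """Mean fraction of each abnormal event's k nearest neighbours that are
    also abnormal (hypothetical definition; 1.0 means fully separated)."""
    points = [(p, 1) for p in abnormal_xy] + [(p, 0) for p in normal_xy]
    fractions = []
    for i in range(len(abnormal_xy)):
        p = points[i][0]
        neighbours = sorted(
            (sqrt((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2), label)
            for j, (q, label) in enumerate(points)
            if j != i
        )
        fractions.append(sum(label for _, label in neighbours[:k]) / k)
    return sum(fractions) / len(fractions)


# Toy embeddings: one well-separated cluster and one overlapping cluster.
normal = [(0.1 * i, 0.0) for i in range(6)]
separated = separation_score([(10 + 0.1 * i, 10.0) for i in range(6)], normal)
overlapping = separation_score([(0.1 * i, 0.05) for i in range(6)], normal)
```

A brute-force search like this scales quadratically, so a real implementation on tens of thousands of events would need a spatial index or an approximate neighbor method.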

To simulate rare event detection in MRD analysis, we downsampled each leukemic population in the training set to 250 events (Figure 4 and Figure S7). This led to a variable but overall significant decrease in the UMAP separation scores compared with the full populations before downsampling (p < 0.001 for all tubes; Figure 4d and Figure S7). Unlike the UMAP separation, the AML MPS were agnostic to downsampling and showed only limited correlation with the separation scores (Figure 4e). As a result, downsampled abnormal populations were generally still highlighted on UMAPs as closely grouped bright red (predicted abnormal) events based on the AML probability scores, even when they were in close proximity to normal events (Figure 4b). In contrast to the simulated MRD positive cases, for most of the negative cross-validation cases there were only scattered predicted positive events highlighted by the probability overlay on the UMAPs (Figure S8). Certain types of false-positive events were more common in certain tubes: for example, false-positive monocytic events were sometimes present in tubes 1 and/or 2, but these were far less common in tube 3 (Figure S8B). In the several negative cases that had more abundant false-positive events predicted by the AML classifier, these almost always overlapped normal events on the UMAP plots and had visibly lower/more variable probability scores compared with true positive leukemic populations.

3.4 Evaluating MAGIC-DR in real-world AML MRD sample analysis

To apply XGBoost predictions and UMAP to MRD analysis, we developed a pipeline that automatically calculates AML probability scores and UMAP coordinates for each cell of a query case, appends this new data to the original FCS files, and then exports updated FCS files that can be loaded onto any standard flow cytometry software for further analysis (termed MAGIC-DR; see Figure 1 and Methods Section 2 for more details). Guided by our cross-validation studies, this strategy was designed to enhance accuracy compared to the raw AML classifier's predictions by visualizing the XGBoost predictions on UMAP plots, where any cell clusters highlighted by the models could then be gated on to calculate a median probability score of being abnormal. Furthermore, it enabled detailed immunophenotypic characterization of suspicious clusters, making the results more interpretable.
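In practice the gating described above is performed in flow cytometry software on the exported FCS files. Purely as an illustration of the calculation, the following sketch gates events by their UMAP coordinates and reports the gated percentage and the median probability score. The field names `umap_1`, `umap_2`, and `aml_prob`, the rectangular gate, and the toy values are all assumptions, not the parameter names or data used by MAGIC-DR.

```python
from statistics import median

def gate_rectangle(events, x_range, y_range):
    """Return the events whose UMAP coordinates fall inside a rectangular gate."""
    (x_min, x_max), (y_min, y_max) = x_range, y_range
    return [
        e for e in events
        if x_min <= e["umap_1"] <= x_max and y_min <= e["umap_2"] <= y_max
    ]

def summarize_gate(events, gated):
    """Percentage of all events inside the gate and their median probability score."""
    percent = 100.0 * len(gated) / len(events)
    mps = median([e["aml_prob"] for e in gated])
    return percent, mps

# Toy events (made up): three clustered high-score events, seven background events.
events = [
    {"umap_1": 8.1, "umap_2": 3.0, "aml_prob": 0.97},
    {"umap_1": 8.3, "umap_2": 3.1, "aml_prob": 0.99},
    {"umap_1": 8.2, "umap_2": 2.9, "aml_prob": 0.95},
    {"umap_1": 1.0, "umap_2": 1.0, "aml_prob": 0.02},
    {"umap_1": 1.2, "umap_2": 0.8, "aml_prob": 0.01},
    {"umap_1": 2.0, "umap_2": 4.0, "aml_prob": 0.10},
    {"umap_1": 2.1, "umap_2": 4.2, "aml_prob": 0.05},
    {"umap_1": 3.5, "umap_2": 0.5, "aml_prob": 0.60},
    {"umap_1": 4.0, "umap_2": 2.0, "aml_prob": 0.03},
    {"umap_1": 4.4, "umap_2": 2.2, "aml_prob": 0.04},
]

gated = gate_rectangle(events, x_range=(8.0, 9.0), y_range=(2.5, 3.5))
percent, mps = summarize_gate(events, gated)
```

Note that the isolated background event with a score of 0.60 falls outside the gate and does not affect the summary, which reflects the idea that scattered high-scoring events are filtered out by requiring a cluster on the UMAP plot.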

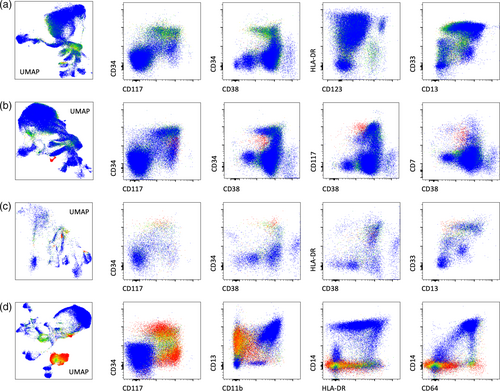

We validated this approach with 12 MRD negative and 13 MRD positive cases, with comparisons made between MAGIC-DR and conventional analysis. The positive samples came from cases of NPM1 mutated AML (n = 8), AML-MR (n = 2), and AML defined by differentiation (n = 3) and contained both CD34+ and CD34− blast populations identified by conventional analysis (abnormal immature monocyte populations were not assessed by conventional analysis). Overall, we found 100% concordance between MAGIC-DR analysis and conventional analysis in terms of binary classification as MRD positive versus MRD negative when assessing for myeloid blast populations (overall threshold at 0.1%, extended to 0.01% in two cases). Most of the MRD negative cases did not show any suspicious populations based on MAGIC-DR, and were determined to be negative based solely on evaluation of the UMAP plots with the classifier score heatmap (Figure 5a). In a few cases, there were initially suspicious populations highlighted on the UMAP plots that were further interrogated and determined to be negative based on features including median probability score, immunophenotype, and the distribution of high probability events on conventional plots. On the other hand, true positive populations were quickly identified as closely grouped events on UMAP plots that were highlighted by high probability scores (illustrative examples shown in Figure 5b–d). By gating on the suspicious populations, we were able to both quantify the MRD percentage and calculate MPS, which were found to be uniformly high for the abnormal populations, in agreement with our cross-validation studies (>95% in at least one tube for all positive populations). Although there were some individual positive cases with differences in the absolute quantification of abnormal blasts between the two methods, the overall correlation was high at 0.94 (p < 0.0001), with no significant bias identified based on Bland–Altman analysis and a Wilcoxon test (Figure S9). Interestingly, there were immature monocytic populations identified as abnormal by the tube 3 model in eight of the positive samples (all of these cases also had identifiable myeloid blast populations) and in one of the negative cases. These populations were all negative for CD14 and CD13, and typically had bright CD64 along with variable aberrancies in other markers including CD36 and HLA-DR. They were not initially assessed by conventional analysis, but on subsequent review were confirmed to be compatible with atypical monoblasts/promonocytes, and were exclusively from patients who had previously had significant leukemic populations with monocytic differentiation.

4 DISCUSSION

MFC remains the most broadly applicable technique for AML MRD assessment. This requires looking for small populations with abnormal expression patterns, which can be complex and time consuming. For example, a 3-tube, 12-color panel has close to 200 possible biplots to analyze: knowing which ones to focus on requires considerable expertise and often relies on knowledge of the diagnostic immunophenotype. In our study, we developed a robust framework for MRD analysis in AML patients by leveraging both supervised and unsupervised machine learning techniques to bridge the gap between traditional MRD approaches and modern computational techniques. We achieved this by training XGBoost classifiers to identify diverse AML blasts/promonocytes and overlaying the predictions as a color parameter on UMAP plots, with the outputs incorporated into FCS files. This allowed for direct visualization of the model predictions, which could be gated on for quantification and immunophenotypic characterization, thus facilitating human interpretation and verification of the machine learning output. Applying this approach to real-world MRD cases allowed for accurate and rapid identification of both positive and negative cases.
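The figure of close to 200 biplots quoted above is consistent with counting every pair of fluorescence parameters in each tube. The short calculation below assumes that only the 12 fluorescence parameters per tube are paired and that scatter parameters are excluded; that counting convention is an assumption rather than something stated in the text.

```python
from math import comb

def biplot_count(n_tubes, n_colors):
    """Number of distinct two-parameter plots across all tubes.

    Assumes every unordered pair of fluorescence parameters in a tube
    counts as one biplot (scatter parameters excluded).
    """
    return n_tubes * comb(n_colors, 2)

total_biplots = biplot_count(n_tubes=3, n_colors=12)  # 3 * 66 = 198
```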

UMAP and other dimensionality reduction techniques in principle allow for simplified visualization of data with complex high dimensional clustering properties; however, interpreting the output of these plots is not always straightforward. For instance, UMAP plots often have many discrete populations whose exact compositions and positions vary from sample to sample. In the setting of MRD analysis, it is therefore generally not possible to know a priori where to look for a small abnormal population on a UMAP plot. On the other hand, to trust the results of a black box event classifier for an MRD case would require specificity on the order of 99.9%–99.99%, which is very difficult to achieve for heterogeneous AML samples while maintaining adequate sensitivity. The novel approach that we developed harnesses complementary properties of dimension reduction and machine learning classifiers: the XGBoost classifier visually highlights suspicious populations on a UMAP plot, whereas UMAP helps to filter out many of the false positive XGBoost predictions. Although UMAP and XGBoost were chosen in this study, the same approach could be applied to other combinations of dimension reduction techniques (e.g., t-SNE and PCA) and machine learning classifiers (e.g., neural networks and random forests).
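The specificity requirement mentioned above follows from the rarity of MRD events. As a worked illustration, the sketch below applies Bayes' rule to compute the fraction of classifier-positive events that are truly abnormal (the positive predictive value) at an abnormal-event frequency of 0.1%. The sensitivity of 0.9 is an assumed value chosen only for the example.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Fraction of predicted-abnormal events that are truly abnormal (Bayes' rule)."""
    true_pos = prevalence * sensitivity
    false_pos = (1.0 - prevalence) * (1.0 - specificity)
    return true_pos / (true_pos + false_pos)

# Abnormal events at 0.1% of all events; sensitivity of 0.9 is an assumption.
ppv_99 = positive_predictive_value(0.001, 0.9, 0.99)      # roughly 0.08
ppv_999 = positive_predictive_value(0.001, 0.9, 0.999)    # roughly 0.47
ppv_9999 = positive_predictive_value(0.001, 0.9, 0.9999)  # roughly 0.90
```

Under these assumptions, even a per-event specificity of 99.9% leaves roughly half of the flagged events as false positives, which is why an additional filter such as clustering on a UMAP plot is useful.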

Our training dataset encompassed a wide spectrum of AML categories, mirroring the complex landscape of leukemic populations seen in clinical practice. Performance of the XGBoost models was excellent across diverse AML populations, including immature monocytes; however, as highlighted in our results, certain populations (e.g., CD34− blasts) were more readily identified than others. This may in part reflect that CD34− populations displayed relatively little immunophenotypic heterogeneity (the majority were from cases of AML with mutated NPM1), thus giving the models more consistent examples to learn from. Our results also provide a quantitative measure of how panel design affects the detection of different types of AML blast populations. For example, we found that immature monocytic populations were more reliably detected in one tube of the panel, which was the only tube to include several key markers of monocytic development, namely CD64, CD14, CD36, and CD4. Interestingly, our analysis of feature importance revealed an unexpected trend. Although aberrant cross-lineage markers are traditionally viewed as vital for MRD detection, they were seldom the most critical features for our models. Instead, markers associated with normal maturation, such as CD117, CD34, CD38, and CD64, often took precedence. This observation may suggest that the models place significant emphasis on aberrant intensities and/or asynchronous expression patterns.

MRD analysis of AML with monocytic differentiation can be particularly challenging. Alterations in phenotypes, including reduced levels of CD13, CD36, and HLA-DR, along with elevated levels of CD15, are not specific and are frequently observed in reactive or dysplastic monocytes (Xu et al., 2017). Our approach highlighted “atypical” promonocyte events in 9 out of 25 validation samples. There are several lines of evidence that suggest these may be abnormal: all came from patients who previously had documented monocytic differentiation or a type of AML that is commonly associated with monocytic differentiation, and the phenotypes showed similar features to known positive cases in our training set. Furthermore, similar populations were not found by the model in the 30 negative cross-validation cases, indicating that this is not expected to be a common false positive finding. It therefore seems possible that MAGIC-DR could improve the sensitivity of detecting certain abnormal monocyte populations; however, further validation is needed to confirm this finding.

Previous studies have used a “clustering with normal” approach, where clusters of cells are considered abnormal if their percentage of normal events falls below a certain threshold (Vial et al., 2021; Weijler et al., 2022). One of the merits of this approach is that it does not require a labeled training set. However, the result is influenced by the normal events used as reference and the clustering algorithm. For instance, due to under-clustering, a small group of abnormal cells might be subsumed within predominant normal clusters instead of being identified as a distinct abnormal cluster. Other approaches have been reported that have incorporated supervised classifiers for MRD detection; however, these have not provided methodologies for real-world use in the clinical lab (Salama et al., 2022). Our approach has several interesting and unique features that build on what has previously been demonstrated. By exporting model predictions into FCS files, we are able to visualize and interpret the results with readily available commercial flow cytometry software. By using XGBoost probability scores, populations are labeled with a scale of confidence as opposed to a simple binary classification. This confidence can be visualized as a heatmap parameter, either on UMAP or conventional biplots, and can also be readily quantified as a median probability score using flow cytometry software, similar to how a median fluorescence intensity is calculated. Notably, we showed how our approach can highlight abnormal populations even when they do not form obviously separated clusters on commonly used biplots.
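To make the “clustering with normal” idea and its under-clustering caveat concrete, the sketch below flags any cluster whose fraction of reference-normal events falls below a threshold. It is a generic illustration and not the implementation used in the cited studies; the function name, the 5% threshold, and the toy cluster assignments are assumptions.

```python
from collections import Counter

def flag_abnormal_clusters(cluster_ids, is_reference_normal, max_normal_fraction=0.05):
    """Return cluster ids whose fraction of reference-normal events is below the threshold."""
    totals = Counter(cluster_ids)
    normals = Counter(
        c for c, is_normal in zip(cluster_ids, is_reference_normal) if is_normal
    )
    return sorted(
        c for c in totals if normals[c] / totals[c] < max_normal_fraction
    )

# Toy data: 50 reference-normal events, 50 normal-like patient events,
# and 10 abnormal patient events (all made up).
is_reference_normal = [True] * 50 + [False] * 60

# Adequate clustering: the 10 abnormal events form their own cluster (id 1).
well_clustered = [0] * 100 + [1] * 10
flagged_ok = flag_abnormal_clusters(well_clustered, is_reference_normal)

# Under-clustering: the same 10 events are absorbed into the normal cluster (id 0).
under_clustered = [0] * 110
flagged_missed = flag_abnormal_clusters(under_clustered, is_reference_normal)
```

In the under-clustered case the merged cluster still contains about 45% reference-normal events, so nothing is flagged and the small abnormal group is missed.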

An important limitation of our approach is that the supervised machine learning models are specific to the panels used to train them. In general, we would expect that even minor modifications to a panel could have significant impacts on model performance and might require retraining the models with newly acquired data. Future work could investigate whether acceptable performance could be retained by directly applying our models to panels containing a subset of the markers that we have investigated. Another caveat is that the current approach is in many ways analogous to the difference from normal approach to MRD analysis, since the LAIP of the query sample is not required or directly incorporated into the assessment. This has advantages, since LAIPs are not always known and can potentially shift over time; however, it is also possible that incorporating LAIP information into our pipeline when available could further improve accuracy. Future work could look at ways to integrate the LAIP, for example by augmenting the model with events from the diagnostic population in question prior to analysis, which would be computationally feasible given the short time required for XGBoost model training. Also, pregating steps in our pipeline currently rely on a simple manual gating strategy; these steps could be automated with the FlowDensity algorithm to fully automate the first parts of the pipeline, and possibly enhance reproducibility (Malek et al., 2015). Another limitation is that despite best efforts, there were some AML categories/immunophenotypes that had either few or no representative populations in our training set. For example, the two abnormal populations that XGBoost fared worst with during cross-validation had unique phenotypes compared to other populations in the dataset, yet had evidence of aberrancy, for example, based on their clear separation from normal populations on UMAP plots. We therefore expect that the performance/generalizability of the classifiers can be further improved with more training data. Additional checks can also be readily built into our MRD pipeline, for example including a step to look for CD34+ populations that separate from normal events on UMAP plots, which could pick up the rare populations that the XGBoost model may miss due to insufficient training data. However, it also remains possible that certain AML immunophenotypes are intrinsically difficult to separate from normal events. For now, the cross-validation results and concordance rate between traditional and XGBoost-guided analysis are promising, yet it is essential to view these in the context of the study's scope and the cases examined. Expanding this methodology to larger, more diverse datasets will be critical moving forward.

In conclusion, we have shown how the integration of a supervised XGBoost classifier with unsupervised UMAP offers a synergistic approach that leverages the strengths of both techniques, creating a robust method for AML MRD analysis by MFC that can be seamlessly integrated into conventional analysis workflows. This has the potential to speed up MRD analysis, and potentially improve detection sensitivity for certain AML immunophenotypes. We would expect this approach to scale well to larger AML MRD flow cytometry panels, where the need for computationally assisted analysis will be even greater.

ACKNOWLEDGEMENTS

This study was supported by a new faculty award from the Faculty of Medicine, The University of British Columbia, and investigator-sponsored study funding from BD Canada.