Machine learning for automatic assignment of the severity of cybersecurity events

Department of Mathematics, Universidad de León, Campus de Vegazana s/n, 24071 León, Spain

Abstract

The detection, treatment, and prediction of cybersecurity events is a priority research area for security response centers. In this scenario, the automation of several processes is essential to manage and resolve efficiently the possible damage caused by threats and attacks to institutions, citizens, and companies. In this work, automatic multiclass categorization models are created using machine learning techniques to assign the severity of different types of cybersecurity events. Once the machine learning algorithms have been applied, we propose a mathematical formula to decide automatically, based on a scoring system, which model is the best for each type of event. In addition, we present the scoring system applied to a real cybersecurity events data store, where the features are extracted from the usual registers collected in a cyber-event response center. The results show that the ensemble methods are the most suitable, obtaining more than 99% accuracy.

1 INTRODUCTION

A cybersecurity event can be described as an observable occurrence that puts at risk the confidentiality, integrity, or availability (CIA) of the services of organizations, governments, and companies. This type of cyber-event can also threaten citizen security from many points of view. The need to treat cybersecurity events has opened a new research area in which two main problems have arisen: prediction models and the automation of processes in cyber-incident response management systems.1, 2 In this work, we focus on the second one.

Characterizing the severity, or inherent damage, of a cybersecurity event is a key procedure in cybersecurity for detecting, predicting, and reacting to events efficiently. Indeed, the level of danger of an event is one of the main indicators included in the international reference frameworks for the reporting, management, and treatment of cybersecurity events. Nowadays, there are different evaluation methodologies or scoring standards for assessing the severity of a cyber-event. They are based on different combinations of taxonomies that characterize the existing threats in the field of cybersecurity along several dimensions, and they assign the severity of attacks taking into account the information addressed in international reports. However, these indicators do not usually have a formal quantitative measure of the factors involved, and they are currently assigned manually by experts using laborious qualitative descriptions. This procedure is one of the main current problems in cyber-incident management centers. To address this situation, automatic algorithms are available that classify the severity of a cyber-event using machine learning techniques. However, the resulting models focus on a specific threat and tend to perform only binary classification. In addition, the registers that are available in a Computer Emergency Response Team (CERT) or a Security Operations Center (SOC) are not used to build these models. The first contribution of this article is the creation of different machine learning models that assign a level of severity to the registers of cybersecurity events in a data store.

On the other hand, the usual methodology in machine learning problems is as follows: first, the data store is analyzed to decide the type of analytics that is needed; second, transformations and analyses are carried out to construct a suitable data set. Then, classification models are created with the different machine learning algorithms, and the associated indicators are computed to measure the performance of each one. At this point, an expert usually analyzes all the metrics and models manually and selects the best one based on their knowledge. The second contribution of this article is a mathematical formula that ranks the obtained machine learning models automatically, using not only the accuracy but also the values of the confusion matrix and the learning curve of each model.

We present a case study in which supervised machine learning models, together with unsupervised ones used in a semisupervised way, are built to categorize automatically the severity of three different types of cyber-events (belonging to the harmful content and vulnerabilities categories3) from 5 232 336 registers of a real data set of cyber-event records. The final model has been selected by automatically comparing the results obtained with several machine learning techniques: AdaBoost (AB), gradient boosting (GB), random forest (RF), decision tree (DT), multilayer perceptron (MLP), and K-means classifier (KM). The performance of each model has been evaluated through indicators such as the accuracy, the Matthews correlation coefficient, the sensitivity, and the specificity, among others. The generalization capability of the models has been analyzed using the data extracted from the learning curves and verified in a validation phase.

The results show that the ensemble algorithms, and those based on logical construction diagrams, provide the best predictive models of categorization, obtaining a success rate higher than 99% for each event.

This article is organized as follows: In Section 2, we give a general overview of the background and the motivation of this work. In Section 3, the materials and methods of this study are described. In Section 4, we develop the main results, including the automatic method to select the best machine learning models, and the detailed results of the case study. Finally, the conclusions, future work, and references are given.

2 FRAMEWORK OVERVIEW

We begin this section with two basic definitions in order to frame the study.

Definition 1. A cyber-event is any occurrence of an adverse nature in a public or private sphere, within the area of information and communications networks of a country.

Definition 2. The severity of a cyber-event is understood as a measure of its importance from different perspectives: riskiness, caused damage, or impact.

The need for meaningful metrics that allow the severity of a cyber-event to be quantified and assessed has led to the creation of several evaluation methodologies. These standards use different approaches and factors to measure the severity of discovered threats, and they are based on rating ranges, qualitative schemes of nominal values, or systems that combine the impact and the exploitability of the components involved. In addition, severity does not have the same meaning in all of them.

In the case of vulnerability classification systems, one of the main vulnerability evaluation systems, at least until 2017, was the Microsoft Security Bulletin Vulnerability Rating.4 It is based on the monthly vulnerability reports of the Microsoft Security Response Center, and it classifies the severity into four levels of risk (critical, important, moderate, and low). Currently, the most used system for vulnerabilities is the Common Vulnerability Scoring System (CVSS),5 developed by the Forum of Incident Response and Security Teams (FIRST). It is based on base, temporal, and environmental metrics, and it provides five degrees of severity (critical, high, medium, low, and none). In addition, it is used by the National Institute of Standards and Technology (NIST) in its National Vulnerability Database (NVD) to characterize and manage vulnerabilities.
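As an illustration of how a scoring standard turns a numeric score into qualitative degrees, the following Python sketch reproduces the CVSS v3.x qualitative severity rating scale (None 0.0; Low 0.1 to 3.9; Medium 4.0 to 6.9; High 7.0 to 8.9; Critical 9.0 to 10.0). The function name `cvss_v3_severity` is ours, and the sketch only covers this final rating step, not the computation of the score from the base, temporal, and environmental metrics.

```python
def cvss_v3_severity(score):
    """Map a CVSS v3.x score (0.0 to 10.0, one decimal) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS v3.x scores lie in [0.0, 10.0]")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 to 3.9
    if score < 7.0:
        return "Medium"    # 4.0 to 6.9
    if score < 9.0:
        return "High"      # 7.0 to 8.9
    return "Critical"      # 9.0 to 10.0
```

The point of contrast with the rest of this section is that CVSS fixes explicit numeric intervals, whereas several of the methodologies discussed below rely on qualitative descriptions.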

If we consider severity evaluation systems for more general risks and threats, several methodologies are available. The first one is the Open Web Application Security Project (OWASP) Risk Rating Methodology, included in its testing guide.6 It estimates the risk of the threats associated with a company along two dimensions: the probability or likelihood, related to the threat and the vulnerability, and the technical and business impact. A level of severity (high, medium, or low) is computed by combining both dimensions. From a national perspective, there is the Cyber Incident Scoring System developed by the Cybersecurity and Infrastructure Security Agency (CISA) of the United States.7 This system evaluates dimensions such as the functional, information, and potential impact, the activity, the recovery capability, the location, and the agents involved, and it categorizes the severity into six levels (emergency, severe, high, medium, low, and baseline). Another example is the Spanish National Guide for the reporting and management of cyber-events.3 It measures the impact of a threat on national and citizen safety through indicators such as the type of service affected, the degree of the effect, the time and cost of recovery, the economic loss, the affected geographical locations, and the associated reputational damage. It uses five levels (critical, very high, high, medium, and low).

From the taxonomy perspective, and based on the study carried out by van Heerden et al,8 the most complete and rigorous taxonomy is found in the work of Simmons et al.9 This methodology is based on five main elements: the attack vector, the operational impact, the defenses against the attack, the impact on the information, and the target. It allows mixed attacks to be characterized by labeling the attack vectors in a tree structure. Besides, it takes into account the impact on the information, whose measurement is addressed by the CISA scoring system,7 using different weights.

However, the described evaluation methodologies have some limitations. They classify cyber-events using levels that focus on characteristics intrinsic to the type of threat and its behavior, relying on inflexible factors. This is a drawback because, in the field of cybersecurity, new types of threats appear frequently. These must then be included in an existing category, or the taxonomy must be reconstructed with the participation of all the agents involved. At the same time, assigning levels is especially complex when cataloging mixed attacks (which contain other attacks) that can belong to different categories. However, the main limitation of this type of scoring system is the lack of formalized scoring intervals; that is, these indicators are usually assigned manually through qualitative descriptions and examples. This makes it difficult to categorize the severity of an event automatically, and it turns the categorization into an arduous and laborious task for CERTs and SOCs.

In this scenario, the available data, such as website features, event reports, or log files, are well suited to the application of automated learning techniques. Machine learning is an alternative worth considering because these analytics are appropriate in situations where a hidden trend can be found in a database: a model is built that learns to generalize the pattern and is applied to other data of the same nature, replacing small-scale heuristics with large-scale statistics.10-14 The application of machine learning techniques to obtain the risk of a cyber-event has produced classification models that can, to a certain extent, determine the severity of specific cyber-events with a high success rate compared to the systems described previously. This is the case, for example, of the classification models that discriminate between malicious and nonmalicious URLs with high success rates using a reduced number of lexical and intrinsic features.15, 16 In the same line of research, some works try to establish whether a bug report can be considered severe or not in order to assign it a dangerousness value.17-19 On the other hand, in the work of Chen et al,20 supervised learning techniques have been applied to evaluate the severity of phishing attacks. This study takes into account the knowledge embedded in previous phishing attacks to create models that evaluate the severity of future attacks, with their financial impact as a fundamental indicator. This work represents an increase in complexity with respect to the previous ones, since this type of attack is made up of different phases, unlike the studies on URLs.

Although machine learning techniques have already been applied to categorize the risk of cyber-events, they present some limitations. First, the models have focused on the individualized treatment of very specific cybersecurity events, so they cannot be used for other types of events. Besides, the models usually provide a binary categorization of severity, which is insufficient for a large number of cyber-events. However, the biggest drawback is that these studies use features such as the immediacy and the probability of exploitation, which must be assessed by experts. Therefore, these models need the manual intervention of experts, and they do not use the registers that are available in a cyber-incident response center.

3 MATERIALS AND METHODS

3.1 Data sets

In this case study, we have a data store containing $N = 5\,232\,336$ real registers of cyber-events, described by 113 features of different nature. The data store includes 38 types of cyber-events, indexed by $j = 1, \dots, 38$, whose registers vary over time and are provided by 27 dynamic sources (public, internal, and private). The registers of the $j$th cyber-event type are indexed by $k = 1, \dots, N_j$, where $N_j$ is the number of registers that belong to that type. In addition, the severity takes one of four labels: (0) Unknown, (1) Low, (2) Medium, and (3) High.

This data store is provided by the Spanish National Cybersecurity Institute (INCIBE) under a confidentiality agreement. This sample has been selected under criteria of representativeness.

It should be noted that not all sources contribute the same amount or the same type of information about the cyber-events they report, owing to their different nature. In turn, the stored data are heterogeneous and present different, nonconstant processing and creation rates, which leads to event types that are correlated in some cases and mostly unbalanced. In addition, not all registers have values for all features, and some values may be incomplete.

3.2 Analytics

According to the analytics they require, the cyber-event types of the data store are divided into three groups:

- G1. This group needs unsupervised machine learning.

- G2. In this group, we do not need any analytics because the severity is assigned automatically by the source.

- G3. In this group, we apply supervised/semisupervised machine learning techniques.

In this study, we focus only on G3.

The training and test sets together account for 90% of the data (80% and 20% of that share, respectively), and the validation set accounts for the remaining 10%. The partitions have been computed randomly because, in a real situation, it is usually not possible to balance the data under the most favorable conditions. We have filtered out the registers whose severity is 0 (Unknown).
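The partitioning step can be sketched in Python as follows. This is a minimal sketch under stated assumptions: registers are represented as dictionaries with a `severity` key, the helper name `split_registers` is hypothetical, the removal of label 0 reflects our reading of the filtering step, and the validation share is cut first. As in the text, the partition is random and not stratified; scikit-learn's `train_test_split` could be used instead of the manual shuffle.

```python
import random


def split_registers(registers, seed=0):
    """Hypothetical helper: drop registers whose severity is 0 (Unknown), hold out
    10% at random for validation, and split the remaining 90% into training (80%)
    and test (20%) subsets."""
    labeled = [r for r in registers if r["severity"] != 0]
    rng = random.Random(seed)
    rng.shuffle(labeled)  # random, non-stratified partition
    n_validation = round(0.10 * len(labeled))
    validation, rest = labeled[:n_validation], labeled[n_validation:]
    n_train = round(0.80 * len(rest))
    return rest[:n_train], rest[n_train:], validation
```

For 750 labeled registers, this yields 540 training, 135 test, and 75 validation registers.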

Once we have selected the registers to which we apply supervised machine learning algorithms, it is necessary to select the most relevant features to obtain a model that assigns the severity. This selection could be performed automatically based on different indices (Gini index, χ2, etc). We have computed the mutual information function (MIF) between every feature and the target feature.21, 22 This nonparametric method is based on the estimation of the entropy between two random variables, and it provides a non-negative coefficient that measures the dependency between them. A high MIF value implies a high dependency and, thus, more relevance for predicting one variable from the other. We chose this index because it is the most used method in artificial intelligence and learning analytics environments and because it can handle categorical features (such as the recoded response features of the study). Once the MIF coefficients have been computed, we have introduced a threshold (the 80th percentile) so that only the most relevant features are input to the model.
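For discrete (recoded) features, the MIF filter described above can be sketched in plain Python as below. The function names are ours; the percentile uses linear interpolation (the same convention as NumPy's default), and features whose MIF is at or above the 80th percentile are kept. In a scikit-learn pipeline, `sklearn.feature_selection.mutual_info_classif` with `discrete_features=True` would play the role of `mutual_information`; we do not claim this is exactly how the MIF was estimated in the study.

```python
import math
from collections import Counter


def mutual_information(x, y):
    """Mutual information (in nats) between two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    # sum over observed pairs of p(a,b) * log(p(a,b) / (p(a) p(b)))
    return sum((c / n) * math.log(c * n / (px[a] * py[b])) for (a, b), c in pxy.items())


def percentile(values, q):
    """Linear-interpolation percentile of a list of numbers."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def select_by_mif(features, target, q=80):
    """Keep the features whose MIF with the target reaches the q-th percentile."""
    scores = {name: mutual_information(col, target) for name, col in features.items()}
    threshold = percentile(list(scores.values()), q)
    return sorted(name for name, s in scores.items() if s >= threshold), scores
```

A feature that copies the target gets the maximum score (the entropy of the target), whereas constant or independent features score 0 and are discarded.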

We have applied the common supervised machine learning algorithms RF, GB, DT, AB, and MLP and, in addition, one unsupervised machine learning algorithm, KM, which is used in a semisupervised way. Moreover, an early-stopping automatic hyperparameter optimization method, RHOASo,23, 24 has been applied to the algorithms with a two-dimensional hyperparameter space, and grid search has been carried out for the algorithms with a one-dimensional one.
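One common way to use K-means as a classifier is sketched below in plain Python: all registers (labeled or not) are clustered, and each cluster is then assigned the majority severity among its labeled members. This cluster-then-vote scheme is our assumption about what "used as semisupervised" means; the function names are hypothetical, and the centroids are naively initialized with the first k points rather than with k-means++.

```python
from collections import Counter


def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def nearest(p, centroids):
    """Index of the centroid closest to point p."""
    return min(range(len(centroids)), key=lambda i: squared_distance(p, centroids[i]))


def kmeans(points, k, n_iter=100):
    """Plain Lloyd iterations starting from the first k points."""
    centroids = [list(p) for p in points[:k]]
    for _ in range(n_iter):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        # Each centroid becomes the mean of its cluster (kept as is if empty).
        new = [[sum(coord) / len(cl) for coord in zip(*cl)] if cl else centroids[i]
               for i, cl in enumerate(clusters)]
        if new == centroids:
            break
        centroids = new
    return centroids


def fit_cluster_classifier(points, labels, k):
    """Cluster all points, then label each cluster with the majority severity of its
    labeled members; points whose label is None only shape the clusters."""
    centroids = kmeans(points, k)
    votes = [Counter() for _ in range(k)]
    for p, y in zip(points, labels):
        if y is not None:
            votes[nearest(p, centroids)][y] += 1
    cluster_label = [v.most_common(1)[0][0] if v else None for v in votes]
    return lambda p: cluster_label[nearest(p, centroids)]
```

Because the clusters are formed without the labels, nothing forces them to align with the severity classes, which would be one plausible explanation for the much lower scores of KM in the result tables.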

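The performance of the models has been measured with the following indicators:

- The accuracy (Acc), computed as

  $$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}. \qquad (1)$$

  The accuracy is a global indicator that is easy to understand, but it does not provide any information about the distribution of the predicted labels.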

- The Matthews correlation coefficient (MCC), suited to unbalanced data sets. The MCC is a correlation coefficient between the observed and predicted binary classifications25; it returns a value between −1 (total disagreement between prediction and observation) and +1 (perfect prediction), and 0 indicates that the model is no better than a random predictor. It is usually used as a measure of accuracy for unbalanced data sets:

  $$\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}, \qquad (2)$$

  where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

- We have taken into account the confusion matrix. This table describes the performance of the model by comparing the real labels with the labels predicted by the trained model: the real labels are in its rows, and the predicted labels are in its columns. The more cases on the diagonal, the higher the success rate of the model. The dimension of the table depends on the number of classes in the target feature.

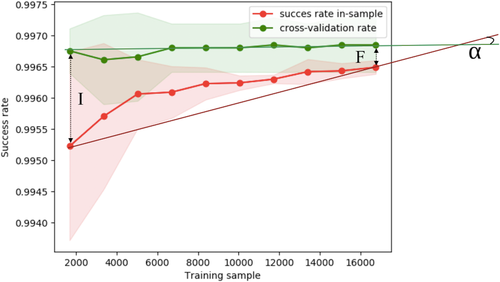

- We have analyzed the generalization capability of each model through its learning curve. This curve is a graphical representation of the training progress; roughly speaking, it measures whether the patterns learned by the model are general enough. Overfitting in the learning process is reflected as a significant difference between the curve of training accuracies and the curve of cross-validation accuracies. Fitting the training data too closely yields a larger expected error on new data, whereas a model that fits less tightly can generalize better, even though its training accuracy might be lower. To measure the generalization capability, or the possible overfitting, we have applied affine transformations to the learning curves in order to treat possible intersections of the curves without changing their relative position. The underlying idea of these transformations is represented in Figure 1, where I is the initial variance between the training accuracy curve and the validation accuracy curve, F is the bias, and α is the angle obtained when we draw the two lines joining the initial and final points of the curves.

- Finally, we have computed the macro and micro averages of indicators such as the sensitivity, the specificity, the F1-score, and the precision.
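The learning-curve quantities I, F, and α can be computed as in the following Python sketch. Since Figure 1 and the affine transformations are not reproduced in the text, the reading below is an assumption: I and F are taken as the gaps between training and validation accuracy at the first and last training sizes, the horizontal axis is rescaled to [0, 1], and α is the angle, in degrees, between the straight lines that join the first and last points of each curve. The function name `curve_features` is hypothetical.

```python
import math


def curve_features(train_acc, val_acc):
    """Hypothetical reading of Figure 1: initial gap I, final gap F, and the angle
    alpha between the chords (first-to-last point) of the two curves."""
    gap_initial = train_acc[0] - val_acc[0]
    gap_final = train_acc[-1] - val_acc[-1]
    # With the x axis rescaled to [0, 1], each chord's slope is simply its rise.
    slope_train = train_acc[-1] - train_acc[0]
    slope_val = val_acc[-1] - val_acc[0]
    alpha = math.degrees(abs(math.atan(slope_train) - math.atan(slope_val)))
    return gap_initial, gap_final, alpha


# Training accuracy decreasing slightly while validation accuracy catches up.
I, F, alpha = curve_features([1.0, 0.98, 0.97], [0.80, 0.90, 0.95])
```

Under this reading, smaller values of F and α would point to curves that converge, that is, to a model that generalizes better.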

Remark 1. In the case of a multiclass problem, the above metrics are computed by transforming the multiclass data into two-class data. Namely, if the target feature can take $m > 2$ labels, $y \in \{y_1, \dots, y_m\}$, then for each $i = 1, \dots, m$ we obtain a two-class target feature $y^{(i)} \in \{y_i, \overline{y_i}\}$, where $\overline{y_i}$ includes all the data labeled by $y_1, \dots, y_{i-1}, y_{i+1}, \dots, y_m$. We then get a set of indicators, one for each new target feature $y^{(i)}$. To compute a single global indicator for the multiclass problem, we can calculate the micro or macro average of each of these metrics. We have used the first option.
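The one-vs-rest transformation of Remark 1 and the two averages can be sketched in Python as follows; the function names are ours. The macro average is the mean of the per-class values, while the micro average applies the metric to the per-class counts summed over all classes. With this micro convention, micro recall equals the accuracy, and with three classes the micro MCC reduces to (3·Acc − 1)/2; for KM in Table 4 this gives (3 × 0.5806 − 1)/2 ≈ 0.3709, the reported value, which suggests (without proving it) that the tables follow the same convention.

```python
import math


def confusion_matrix(y_true, y_pred, labels):
    idx = {lab: i for i, lab in enumerate(labels)}
    m = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        m[idx[t]][idx[p]] += 1  # rows: real labels, columns: predicted labels
    return m


def accuracy(m):
    return sum(m[i][i] for i in range(len(m))) / sum(map(sum, m))


def binary_counts(m, i):
    """TP, TN, FP, FN for the two-class problem 'class i' vs 'all other classes'."""
    total = sum(map(sum, m))
    tp = m[i][i]
    fn = sum(m[i]) - tp
    fp = sum(row[i] for row in m) - tp
    return tp, total - tp - fn - fp, fp, fn


def mcc(tp, tn, fp, fn):
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def recall(tp, tn, fp, fn):
    return tp / (tp + fn) if tp + fn else 0.0


def averaged(m, metric):
    """Return (macro, micro) averages of a two-class metric over all classes."""
    counts = [binary_counts(m, i) for i in range(len(m))]
    macro = sum(metric(*c) for c in counts) / len(counts)
    micro = metric(*[sum(col) for col in zip(*counts)])
    return macro, micro


m = confusion_matrix([1, 1, 1, 2, 2, 3], [1, 1, 2, 2, 2, 3], labels=[1, 2, 3])
```

In this toy example, one register of class 1 is predicted as class 2, so the accuracy and the micro recall are both 5/6, the macro recall is 8/9, and the micro MCC is 0.75.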

3.3 Technical details

The technical details are described in Table 1.

| Components | Details |
|---|---|
| RAM | 54 GiB |
| Processor | Intel Xeon® CPU X5670 @ 2.93 GHz |
| Number of processors | 2 |
| Operating system | Ubuntu 18.04.1 LTS 64 bits |
| Language | Python 2.7.15 |
| Library | Scikit-learn |

- Source: Own elaboration.

4 RESULTS

This section follows the phases of the methodology described above.

4.1 Analysis of the data store

The analysis of the data store shows that the groups of cyber-events G1, G2, and G3 account for 14.4312%, 24.9216%, and 60.6473% of the data store, respectively. Therefore, since the severity of G2 is already assigned by the source, solving the categorization problem for G3 assigns the severity of G2 + G3 = 85.5689% of the data store. In addition, we have found three types of cyber-events in G3, hereafter event types 1, 2, and 3, which represent 60.55%, 0.07%, and 0.02% of the data store, respectively. These event types belong to different typologies, such as vulnerabilities and harmful content. The available labeled data in G3 are described in Table 2.

| Type of event | Number of sources | Unknown (0) | Low (1) | Medium (2) | High (3) |
|---|---|---|---|---|---|
| Event type 1 | 7 | 0% | 2.4428% | 51.8950% | 45.6621% |
| Event type 2 | 1 | 53.2967% | 0% | 1.8053% | 44.8979% |
| Event type 3 | 1 | 45.1207% | 8.6956% | 46.1836% | 0% |

- Source: Own elaboration.

4.2 Filtration and selection of features

The filtering and selection of features are described in Table 3, starting from 113 features. Note that the redundancy of the data store, measured by the rate of discarded features, is greater than 84% for all the event types in the group.

| Features | Event type 1 | Event type 2 | Event type 3 |
|---|---|---|---|
| Filtering | | | |
| Features removed by experts | 26 | 26 | 26 |
| Empty, constant, or equivalent features | 30 | 55 | 63 |
| Recodification step | | | |
| Candidate features | 57 | 32 | 24 |
| Features selected by MIF | 16 | 7 | 5 |
| Mandatory features | 2 | 2 | 2 |
| Rate of discarded features | 84.0708% | 92.0354% | 93.8053% |

- Source: Own elaboration. Abbreviation: MIF, mutual information function.
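The rates in the last row of Table 3 appear to be consistent with counting as discarded every feature that is neither selected by MIF nor mandatory, out of the initial 113. The following Python check reproduces them; the names are ours.

```python
TOTAL_FEATURES = 113  # features in the data store
MANDATORY = 2         # mandatory features kept for every event type


def discard_rate(n_selected, n_mandatory=MANDATORY, total=TOTAL_FEATURES):
    """Percentage of features that are neither selected by MIF nor mandatory."""
    return 100.0 * (total - n_selected - n_mandatory) / total


selected_by_mif = {"event type 1": 16, "event type 2": 7, "event type 3": 5}
for event, n in selected_by_mif.items():
    print(f"{event}: {discard_rate(n):.4f}%")
```

This prints 84.0708%, 92.0354%, and 93.8053%, matching the table.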

4.3 Results for event type 1

The results of all machine learning algorithms for event type 1 are included in Table 4. For this event type, the RF, GB, and DT algorithms tie.

| Model | Indicator | Macro Avg | Micro Avg |
|---|---|---|---|
| AB | Accuracy (overall) | 0.9997 | |
| | MCC | 0.9997 | 0.9996 |
| | Recall | 0.9998 | 0.9997 |
| | Specificity | 0.9998 | 0.9998 |
| | Precision | 0.9998 | 0.9997 |
| | F1-score | 0.9998 | 0.9997 |
| DT | Accuracy (overall) | 0.9999 | |
| | MCC | 0.9999 | 0.9999 |
| | Recall | 0.9999 | 0.9999 |
| | Specificity | 0.9999 | 0.9999 |
| | Precision | 0.9999 | 0.9999 |
| | F1-score | 0.9999 | 0.9999 |
| GB | Accuracy (overall) | 0.9999 | |
| | MCC | 0.9999 | 0.9999 |
| | Recall | 0.9999 | 0.9999 |
| | Specificity | 0.9999 | 0.9999 |
| | Precision | 0.9999 | 0.9999 |
| | F1-score | 0.9999 | 0.9999 |
| KM | Accuracy (overall) | 0.5806 | |
| | MCC | 0.2811 | 0.3709 |
| | Recall | 0.4418 | 0.5806 |
| | Specificity | 0.8048 | 0.7903 |
| | Precision | 0.5200 | 0.5806 |
| | F1-score | 0.4547 | 0.5806 |
| RF | Accuracy (overall) | 0.9999 | |
| | MCC | 0.9999 | 0.9999 |
| | Recall | 0.9999 | 0.9999 |
| | Specificity | 0.9999 | 0.9999 |
| | Precision | 0.9999 | 0.9999 |
| | F1-score | 0.9999 | 0.9999 |
| MLP | Accuracy (overall) | 0.5189 | |
| | MCC | 0.0 | 0.2784 |
| | Recall | 0.3333 | 0.5189 |
| | Specificity | 0.6666 | 0.7594 |
| | Precision | 0.1729 | 0.5189 |
| | F1-score | 0.2277 | 0.5189 |

- Source: Own elaboration. Abbreviations: AB, AdaBoost; DT, decision tree; GB, gradient boosting; KM, K-means classifier; MCC, Matthews correlation coefficient; MLP, multilayer perceptron; RF, random forest.

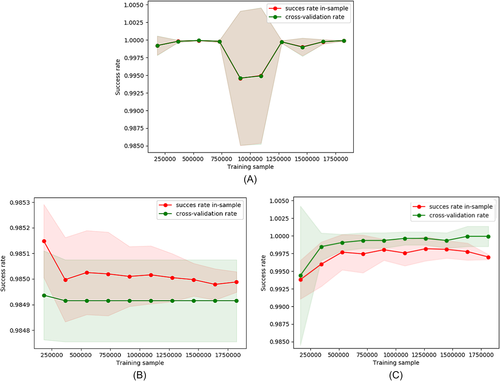

The learning curves of the algorithms that tie in their metrics for event type 1 are shown in Figure 2. We can observe that the first and the third curves show the best behavior.

4.4 Results for event type 2

For event type 2, the results of all machine learning algorithms are included in Table 5. We can observe a tie among four models: RF, GB, DT, and AB.

| Model | Indicator | Macro Avg | Micro Avg |
|---|---|---|---|
| AB | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| DT | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| GB | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| KM | Accuracy (overall) | 0.9389 | |
| | MCC | 0.4273 | 0.8779 |
| | Recall | 0.7824 | 0.9389 |
| | Specificity | 0.7824 | 0.9389 |
| | Precision | 0.6616 | 0.9389 |
| | F1-score | 0.7022 | 0.9389 |
| RF | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| MLP | Accuracy (overall) | 0.9565 | |
| | MCC | −0.0114 | 0.9130 |
| | Recall | 0.4983 | 0.9565 |
| | Specificity | 0.4983 | 0.9565 |
| | Precision | 0.4797 | 0.9565 |
| | F1-score | 0.4888 | 0.9565 |

- Source: Own elaboration. Abbreviations: AB, AdaBoost; DT, decision tree; GB, gradient boosting; KM, K-means classifier; MCC, Matthews correlation coefficient; MLP, multilayer perceptron; RF, random forest.

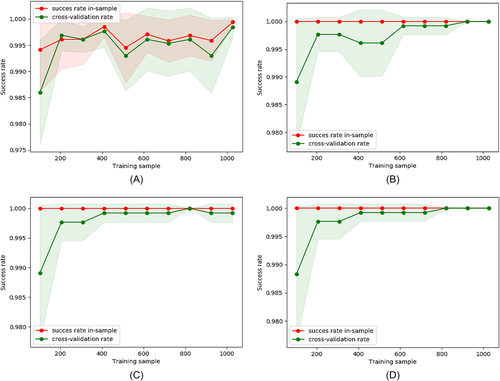

The learning curves of the algorithms that tie in their metrics for event type 2 are shown in Figure 3. In this case, the curves intuitively suggest that the best model is DT or AB.

4.5 Results for event type 3

Finally, the results of all machine learning algorithms for event type 3 are included in Table 6. Again, there is a four-way tie between the ensemble approaches (bagging and boosting) and the decision tree, so we must analyze the learning curves of these models.

| Model | Indicator | Macro Avg | Micro Avg |
|---|---|---|---|
| AB | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| DT | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| GB | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| KM | Accuracy (overall) | 0.7338 | |
| | MCC | −0.1507 | 0.4677 |
| | Recall | 0.4360 | 0.7338 |
| | Specificity | 0.4360 | 0.7338 |
| | Precision | 0.4111 | 0.7338 |
| | F1-score | 0.4232 | 0.7338 |
| RF | Accuracy (overall) | 1.0 | |
| | MCC | 1.0 | 1.0 |
| | Recall | 1.0 | 1.0 |
| | Specificity | 1.0 | 1.0 |
| | Precision | 1.0 | 1.0 |
| | F1-score | 1.0 | 1.0 |
| MLP | Accuracy (overall) | 0.8349 | |
| | MCC | 0.0 | 0.6699 |
| | Recall | 0.5 | 0.8349 |
| | Specificity | 0.5 | 0.8349 |
| | Precision | 0.4174 | 0.8349 |
| | F1-score | 0.4550 | 0.8349 |

- Source: Own elaboration. Abbreviations: AB, AdaBoost; DT, decision tree; GB, gradient boosting; KM, K-means classifier; MCC, Matthews correlation coefficient; MLP, multilayer perceptron; RF, random forest.

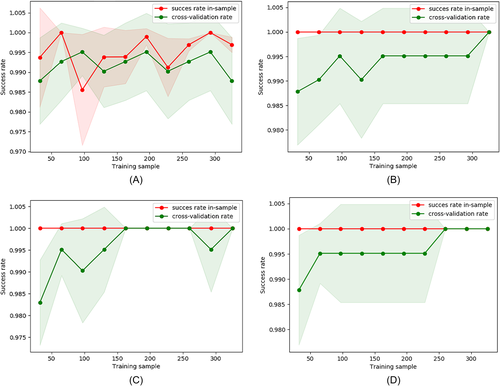

The learning curves of the algorithms that tie in their metrics for event type 3 are shown in Figure 4. In this last case, the best curves are achieved by GB and AB.

4.6 Scoring system

For automated model selection, we propose a scoring system that provides a ranking of the models. This system takes into account different metrics, rewarding positive indicators and penalizing negative ones. This type of ranking is very useful when several machine learning algorithms tie in their accuracies.

First, we consider the accuracy or, for unbalanced data sets, the Matthews correlation coefficient. In addition, we take the confusion matrix into account through the relative frequency $f_{a,b}$ of each of its components. These frequencies could be weighted by $w_{a,b}$ (in this example, $w_{a,b} = 1$ for all $a, b$). In this study, we have not weighted the relative frequencies because the weighting depends directly on the type of event and on the action policies of the cyber-event management center. Finally, we have included the information provided by the learning curve.
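The exact scoring formula is not reproduced in this section, so the Python sketch below is only an illustrative additive score built from the same ingredients, not the authors' formula: it starts from the MCC, adds the weighted relative frequencies $w_{a,b} f_{a,b}$ on the diagonal of the confusion matrix, subtracts those off the diagonal, and subtracts a learning-curve penalty. The penalty (final gap plus α/90) and all names are hypothetical choices made for the example.

```python
def hypothetical_score(mcc_value, conf, weights=None, final_gap=0.0, alpha_deg=0.0):
    """Illustrative additive score (NOT the authors' formula):
    MCC + weighted hits - weighted errors - learning-curve penalty."""
    n = len(conf)
    total = sum(map(sum, conf))
    if weights is None:
        weights = [[1.0] * n for _ in range(n)]  # unweighted case, w_ab = 1
    score = mcc_value
    for a in range(n):
        for b in range(n):
            term = weights[a][b] * conf[a][b] / total  # w_ab * f_ab
            score += term if a == b else -term  # reward the diagonal, penalize errors
    # Hypothetical penalty: a larger final gap or angle suggests more overfitting.
    score -= final_gap + alpha_deg / 90.0
    return score


def rank_models(results):
    """results maps a model name to keyword arguments of hypothetical_score; best first."""
    scores = {name: hypothetical_score(**kw) for name, kw in results.items()}
    return sorted(scores, key=scores.get, reverse=True)
```

When two models tie on MCC and on the confusion matrix, only the learning-curve term separates them, which mirrors the tie-breaking role described above.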

The ranking obtained for each type of event in G3 is included in Table 7. As we can observe, the best machine learning model for event type 1 is DT. For event type 2, the algorithm with the best score is RF. Finally, for event type 3, the best machine learning model is AB.

| Algorithm | Event type 1 | Event type 2 | Event type 3 |
|---|---|---|---|
| AB | 4th | 4th | 1st |
| DT | 1st | 3rd | 4th |
| GB | 3rd | 2nd | 2nd |
| KM | 5th | 6th | 6th |
| RF | 2nd | 1st | 3rd |
| MLP | 6th | 5th | 5th |

- Source: Own elaboration. Abbreviations: AB, AdaBoost; DT, decision tree; GB, gradient boosting; KM, K-means classifier; MLP, multilayer perceptron; RF, random forest.

It should be noted that the ensemble algorithms, and those based on logical construction diagrams, provide the best predictive models of categorization, obtaining a success rate higher than 99% for each event.

5 CONCLUSIONS

Machine learning has turned into an essential research tool due to its capability to learn patterns and recognize hidden trends in the data. In the field of cybersecurity, these types of techniques are very useful to automate several processes and to create prediction models that provide a more effective response to the cyber-events.

In this work, automatic multiclass categorization models are created to assign the severity of different types of cyber-events, and a mathematical scoring system is proposed to select the best one, removing the need for expert knowledge in this step of the procedure. Besides, the features used are extracted from the usual registers collected in a cyber-incident response center.

Ongoing work focuses on creating a software solution that carries out the whole model creation and prediction process with minimal human intervention. Another goal is to validate the ranking system with other targets, such as the reliability or the priority of a cyber-event.

ACKNOWLEDGEMENTS

The authors would like to thank the Spanish National Cybersecurity Institute (INCIBE), which partially supported this work under contracts art.83, keys: X43 and X54.

CONFLICT OF INTEREST

The authors declare no potential conflict of interest.

Biographies

Noemí DeCastro-García received her MSc degree in Mathematics from the University of Salamanca and her PhD degree in Computational Engineering from the University of León, where she currently works as an assistant professor in the Department of Mathematics. She also works on several research projects in different areas of cybersecurity at the Research Institute of Applied Sciences in Cybersecurity (RIASC). Her research focuses on data statistics and quality analysis in computational methods.

Ángel L. Muñoz Castañeda received his PhD in Mathematics from Freie Universität Berlin. Currently, he works as an assistant professor at the Department of Mathematics of Universidad de León, and he belongs to the Research Institute of Applied Sciences in Cybersecurity (RIASC) of the same university. His research interests concern algebraic geometry and its applications to coding and systems theory, as well as the mathematical foundations of machine learning and its applications to cybersecurity.

Mario Fernández-Rodríguez received his MSc degree in Computer Science from Universidad de León. Currently, he is a student of the Master of Research in Cybersecurity and works as a junior researcher at the Research Institute of Applied Sciences in Cybersecurity (RIASC). His research interests are machine learning tool development and secure coding.