Restricted Tweedie stochastic block models

Abstract

enThe stochastic block model (SBM) is a widely used framework for community detection in networks, where the network structure is typically represented by an adjacency matrix. However, conventional SBMs are not directly applicable to an adjacency matrix that consists of nonnegative zero-inflated continuous edge weights. To model the international trading network, where edge weights represent trading values between countries, we propose an SBM based on a restricted Tweedie distribution. Additionally, we incorporate nodal information, such as the geographical distance between countries, and account for its dynamic effect on edge weights. Notably, we show that given a sufficiently large number of nodes, estimating this covariate effect becomes independent of community labels of each node when computing the maximum likelihood estimator of parameters in our model. This result enables the development of an efficient two-step algorithm that separates the estimation of covariate effects from other parameters. We demonstrate the effectiveness of our proposed method through extensive simulation studies and an application to international trading data.

Résumé

frLe modèle à blocs stochastiques (SBM) constitue un cadre de référence largement utilisé pour la détection de communautés au sein des réseaux, dans lesquels la structure est généralement représentée par une matrice d'adjacence. Cependant, les SBM classiques ne sont pas directement adaptés à une matrice d'adjacence comportant des poids d'arêtes continus, non négatifs et sujets à une inflation en zéros. Afin de modéliser le réseau commercial international, où les poids des arêtes correspondent aux valeurs des échanges entre pays, les auteurs proposent un SBM fondé sur une distribution de Tweedie restreinte. Par ailleurs, ils intègrent des informations nodales, telles que la distance géographique entre les pays, et prennent en compte l'effet dynamique de ces informations sur les poids des arêtes. Ils démontrent notamment que, lorsque le nombre de nœuds est suffisamment élevé, l'estimation de cet effet de covariable devient indépendante des étiquettes communautaires de chaque nœud lors du calcul de l'estimateur du maximum de vraisemblance des paramètres du modèle. Ce résultat permet de concevoir un algorithme efficace en deux étapes, séparant l'estimation des effets de covariables de celle des autres paramètres. L'efficacité de la méthode proposée est attestée par des études de simulation approfondies ainsi que par une application aux données du commerce international.

1 Introduction

1.1 Background

A community can be conceptualized as a collection of nodes that exhibit similar connection patterns in a network. Community detection is a fundamental problem in network analysis, with wide applications in social networks (Bedi and Sharma, 2016), marketing (Bakhthemmat and Izadi, 2021), recommendation systems (Gasparetti et al., 2021), and political polarization detection (Guerrero-Solé, 2017). Identifying communities in a network not only enables nodes to be clustered according to their connections with each other, but also reveals the hierarchical structure that many real-world networks exhibit. Furthermore, it can facilitate network data processing, analysis, and storage (Lu et al., 2018).

As indicated in the assumption labelled (1), an SBM provides an interpretable representation of the network's community structure. Moreover, an SBM can be efficiently fitted with various algorithms, such as maximum likelihood estimation and Bayesian inference (Lee and Wilkinson, 2019). In recent years, there has been extensive research into the theoretical properties of the estimators obtained from these algorithms (Lee and Wilkinson, 2019).

In this paper, we are motivated to leverage the capability of the SBM in detecting latent community structures to tackle an interesting problem—clustering countries into different groups based on their international trading patterns. However, in this application we encounter three fundamental challenges that cannot be addressed by existing SBM models.

1.2 Three main challenges

1.2.1 Edge weights

The classical SBM, as originally proposed by Holland et al. (1983), is primarily designed for binary networks, as indicated in assumption (1). However, in the context of the international trading network, we are presented with richer data, encompassing not only the presence or absence of trading relations between countries but also the specific trading volumes in dollars. These trading volumes serve as the intensity and strength of the trading relationships between countries. In such cases, thresholding the data to form a binary network would inevitably result in a loss of valuable information.

In the literature, several methods have been developed to extend the modelling of edge weights beyond the binary range. Some methods leverage distributions capable of handling edge weights. For instance, Aicher et al. (2013, 2015) adopted a Bayesian approach to model edge weights using distributions from the exponential family. Ludkin (2020) allowed for arbitrary distributions in modelling edge weights and sampled the posterior distribution using a reversible jump Markov Chain Monte Carlo method. Ng and Murphy (2021) and Motalebi et al. (2021) used a compound Bernoulli-Gamma distribution and a Hurdle model to represent edge weights, respectively. Haj et al. (2022) applied the binomial distribution to networks with integer-valued edge weights that are bounded from above. Moreover, there is a growing interest in multilayer networks, where edge weights are aggregated across network layers. Notable examples of research in this area include the work by MacDonald et al. (2022) and Chen and Mo (2022).

However, the above approaches cannot properly deal with financial data that involve nonnegative continuous random variables with a large number of zeros and a right-skewed distribution.

1.2.2 Incorporating nodal information

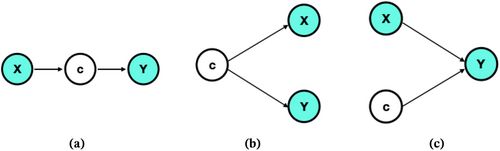

Many SBMs assume that nodes within the same community exhibit stochastic equivalence. However, this assumption can be restrictive and unrealistic, as real-world networks are influenced by environmental factors, individual node characteristics, and edge properties, leading to heterogeneity among community members that affects network formation. Depending on the relationship between communities and covariates, there are generally three classes of models, as shown in Figure 1. Models (b) and (c) have been previously discussed in Huang et al. (2023). We are particularly interested in model (c), where latent community labels and covariates jointly shape the network structure. In our study of international trading networks, factors such as the geographical distance between countries, along with community labels, play critical roles in shaping trading relations. Neglecting these influential factors can significantly compromise the accuracy of SBM estimation.

Various investigators have considered the incorporation of nodal information. For instance, Roy et al. (2019) and Choi et al. (2012) considered a pairwise covariate effect in the logistic link function when modelling the edge between two nodes. In contrast, Ma et al. (2020) and Hoff et al. (2002) incorporated the pairwise covariate effect but with a latent space model. Other research considering covariates in an SBM includes Tallberg (2004), Vu et al. (2013) and Peixoto (2018). Moreover, Mariadassou et al. (2010) and Huang et al. (2023) addressed the dual challenge of incorporating the covariates and modelling the edge weights by assuming that each integer-valued edge weight follows a Poisson distribution and accounting for the pairwise covariates in the mean structure.

While the aforementioned literature has made significant progress in incorporating covariate information into network modelling, the complexity escalates when we confront the third challenge—the observed network is changing over time. This challenge necessitates a deeper exploration of how covariates influence network formation dynamically—a facet that remains unaddressed in the existing literature.

1.2.3 Dynamic network

Recent advances in capturing temporal network data demand the extension of classic SBMs to dynamic settings, as previous research predominantly focused on static networks.

Researchers have attempted to adapt SBMs to dynamic settings, employing various strategies such as state-space models, hidden Markov chains, and change point detection. Fu et al. (2009) and Xing et al. (2010) extended a mixed membership SBM for static networks to dynamic networks by characterizing the evolving community memberships and block connection probabilities with a state space model. Both Yang et al. (2011) and Xu and Hero (2014) studied a sequence of SBMs, where the parameters were dynamically linked by a hidden Markov chain. Matias and Miele (2017) applied Markov chains to the evolution of the node community labels over time. Bhattacharjee et al. (2020) proposed a method to detect a single change point such that the community connection probabilities are different constants within the two intervals separated by it. Xin et al. (2017) characterized the occurrence of a connection between any two nodes in an SBM using an inhomogeneous Poisson process. Zhang et al. (2020) proposed a regularization method for estimating the network parameters at adjacent time points to achieve smoothness.

1.3 Our contributions

The main contribution of this paper is to extend the classical SBM to address the three challenges mentioned above. Given the community membership of each node, we generalize the assumption that edges in the network follow Bernoulli distributions to one where they follow compound Poisson-Gamma distributions instead (Section 2). This allows us to model edges that can take on any nonnegative real value, including exactly zero itself. In Section 6, we apply the proposed model to an international trading network, where each edge between two countries represents the dollar amount of their trading values, for which our model is more appropriate than the classical one. Moreover, not only do we incorporate nodal information in the form of covariates, we also allow the effects of these covariates to be time-varying (Section 2).

We use a variational approach (Section 4) to conduct statistical inference for such a time-varying network. We also prove an interesting result (Section 3) that, asymptotically, the covariate effects in our model can be estimated irrespective of how community labels are assigned to each node. This result enables us to use an efficient two-step algorithm (Section 4), separating the estimation of the covariate effects and that of the other parameters—including the unknown community labels. A similar two-step procedure is also used by Huang et al. (2023).

2 Methodology

In this section, we first give a brief review of the Tweedie distribution, which can be used to model network edges with zero or positive continuous weights. Next, we propose a general SBM using the Tweedie distribution in three successive steps, each addressing one of the challenges mentioned in Section 1.2. More specifically, we start with a vanilla model, a variation of the classic SBM where each edge value between two nodes now follows the Tweedie distribution rather than the Bernoulli distribution. We then incorporate covariate terms into the model, before finally arriving at a time-varying version of the model by allowing the covariates to have dynamic effects that change over time.

2.1 The Tweedie distribution

The compound Poisson-Gamma distribution, known as a special case of the Tweedie distribution (Tweedie, 1984), is related to an exponential dispersion (ED) family. If follows an ED family distribution with mean and variance function , then for some dispersion parameter . The Tweedie distribution belongs to the ED family with for some constant . Specified by different values of , the Tweedie distribution includes the normal (), the Gamma (), the inverse Gaussian (), and the scaled Poisson (). Tweedie distributions exist for all values of outside . Of special interest to us here is the restricted Tweedie distribution with , which is the aforementioned compound Poisson-Gamma distribution with a positive mass at zero but a continuous distribution of positive values elsewhere. We use the word “restricted” to indicate that is constrained to lie in in this Tweedie distribution. In Section 4 it will become clear that this restriction simplifies the overall estimation procedure.

2.2 The vanilla model

Let denote a weighted graph, where represents a set of nodes with cardinality and denotes the set of edges between the nodes. For SBMs, each node in the network can belong to one of non-overlapping groups. Throughout this paper, we assume that the number of groups is pre-specified. When is unknown, how to determine its “right” value is an active research problem on its own (e.g., Lei, 2016; Ma et al., 2021; Le and Levina, 2022; Wang et al., 2023). While there is no universal consensus on how to make this decision, a very common practice is to rely on the Bayesian information criterion or some of its variants. Instead of adopting this approach, for the real-data analysis in Section 6, we will simply report results for a few different choices of . In fact, we think this is more informative for real problems anyway, as we do not really believe there is just one “right” answer. Let denote the unobserved community membership of node ; it follows a multinomial distribution with the probability .

Usually, is represented by an matrix . In classical SBMs, each is modelled either as a Bernoulli random variable taking on binary values of 0 or 1, or as a Poisson random variable taking on nonnegative integer values. We first relax this restriction by allowing to take on nonnegative real values. Since we focus on undirected weighted networks without self-loops, for us is a symmetric matrix with nonnegative, real-valued entries and zeros on the diagonal.

2.3 A model with covariates

2.4 A time-varying model

Now suppose we observe an evolving network at a series of discrete time points , with a common set of nodes. Specifically, our dataset is of the form . Without loss of generality, we assume for .

The likelihood functions for the vanilla model (Section 2.2) and for the static model with covariates (Section 2.3) are simply special cases of equation (8).

3 Theory

- •

replaced the unknown labels with an arbitrary set of labels , where each is independently multinomial with probability ;

- •

profiled out the parameter by replacing it with , while presuming and to be known and fixed; and

- •

re-scaled it by the total number of pairs, .

This quantity identified in equation (9) turns out to be very interesting. Not only does have an explicit expression, but the function identified in equation (9) itself can also be shown to converge to a quantity not dependent on as tends to infinity.

In other words, it does not matter that is a set of arbitrarily assigned labels! This has immediate computational implications (see Section 4). Some high-level details of this theory are spelled out below in Section 3.1, while actual proofs are given in Appendix B.

3.1 Details

Condition 3.1.The covariates are i.i.d., and there exists some such that for any , and satisfying .

Condition 3.2.The function is continuous on , for all .

Theorem 1.As while remains constant,

Remark 1.So far, we have simply written , , and in order to keep the notation short. To better appreciate the conclusion of the theorem, however, it is perhaps important for us to emphasize here that these quantities are more properly written as , , , and .

The implication of Theorem 1 is that, asymptotically, our inference about is not affected by the community labels—nor is it affected by the total number of communities, , since can follow any multinomial distributions with probabilities , including those with some . Thus, even if we got wrong, our inference about would still be correct.

4 Estimation method

4.1 Two-step estimation

In this section, we outline an algorithm to fit the restricted Tweedie SBM. Since, for us, the parameter is restricted to the interval , we find it sufficient to simply perform a grid search (e.g., Dunn and Smyth, 2005, 2008; Lian et al., 2023) over an equally spaced sequence, say, , to determine its “optimal” value. However, our empirical experiences also indicate that a sufficiently accurate estimate of is important for making correct inferences on other quantities of interest, including the latent community labels .

4.1.1 Step 1: Estimating the covariate coefficients

It is clear from Theorem 1 in Section 3 that, when is given and fixed, the log-likelihood function identified in expression (9) serves as an objective function for estimating . To begin, here one can fix the parameter at , since it only appears as a scaling constant in and the maximizer is not affected. The main computational saving afforded by Theorem 1 is that we can use an arbitrary set of labels to carry out this step, estimating separately without simultaneously concerning ourselves with or having to make inference on . Both of those tasks can be temporarily delayed until after has been estimated.

4.1.2 Step 2: Variational inference

In Step 2, with the estimate from Step 1 (and, again, a pre-fixed ), we estimate the remaining parameters , , and , as well as make inferences on the latent label .

If we directly maximized the likelihood function identified in expression (8) using the EM algorithm, the E-step would require us to compute but, here, the conditional distribution of the latent variable given is complicated because and are not conditionally independent in general. We will use a variational approach instead.

To proceed, it will be more natural for us to emphasize the fact that the likelihood function in equation (8) is really just the joint distribution of . Thus, instead of writing it as , in this section we will write it simply as , where we have also dropped and to keep the notation short because, within this step, and are both fixed and not being estimated.

Instead of maximizing expression (12) directly, one maximizes the ELBO term—not only over , but also over . Since the original objective function—the left-hand side of expression (13)—does not depend on , maximizing the ELBO term over is also equivalent to minimizing the KL term. When the KL term is small, not only is the variational distribution close to , but the ELBO term is also automatically close to the original objective function, which justifies why this approach often gives a good approximate solution to the otherwise intractable problem stated in expression (12) and why the variational distribution can be used to make approximate inferences about .

4.2 Tuning parameter selection

We use leave-one-out cross-validation to choose the tuning parameters when fitting our model. In particular, each time we utilize observations made at time points to train the model and then test the trained model on the observations made at the remaining time point. To avoid boundary effects, our leave-one-out procedure is repeated only times (as opposed to the usual times), because we always retain the observations at times and in the training set—only those at times are used (one at a time) as test points. In our implementation, the loss is defined as the negative log-likelihood of the fitted model, and the overall loss is taken as the average across the repeats. We select the 's that give rise to the smallest loss.

5 Simulations

In this section, we report the results of simulation studies that demonstrate the performance of our restricted Tweedie SBM. In Section 5.1, we focus on the vanilla model alone; and in Section 5.2, we consider both fixed and time-varying covariate effects.

For all simulations, we fixed the true number of communities to be , with prior probabilities . For the true matrix , we set all diagonal entries to be equal, and all off-diagonal entries to be equal as well—this way, the entire matrix is completely specified by just two numbers.

To avoid getting stuck at poor local optima, we used multiple initial values in each run.

5.1 Simulation from the vanilla model

First, we assessed the performance of our vanilla model (Section 2.2), and compared it with the Poisson SBM and spectral clustering. The Poisson SBM assumes the edges follow Poisson distributions; we simply rounded each into an integer and fitted it using the function estimateSimpleSBM in the R package sbm (Chiquet et al., 2023, v0.4.5). To implement spectral clustering, we used the function reg.SSP from the R package randnet (Li et al., 2022, v0.5). The function estimateSimpleSBM uses results from a bipartite SBM as its initial values. To make a more informative comparison, we used two different initialization strategies to fit our model: (i) starting from 30 sets of randomly drawn community labels and picking the best solution afterward, and (ii) starting from the Poisson SBM result itself.

According to the discrepancy in between -pairs that belong to the same group and those that belong to different groups, the clustering difficulty of the three designs can be roughly ordered as Scenario 1 Scenario 2 Scenario 3.

Tables 1–3 summarize the averages and the standard errors of NMI for different methods over 50 simulation runs, respectively, for Scenarios 1–3. As expected, all methods perform the best in Scenario 1 and the worst in Scenario 3. Their performances improved as the sample size increased, or as the parameter decreased; as the dispersion parameter, a smaller means a reduced variance and an easier problem.

| Restricted Tweedie SBM | Poisson | Spectral | ||||

|---|---|---|---|---|---|---|

| Random Init. | Poisson Init. | SBM | Clustering | |||

| 2 | 1.2 | 50 | 0.9097 (0.016) | 0.8275 (0.023) | 0.8099 (0.022) | 0.5547 (0.012) |

| 100 | 0.9958 (0.002) | 0.9958 (0.002) | 0.9950 (0.002) | 0.9185 (0.019) | ||

| 1.5 | 50 | 0.8647 (0.019) | 0.7780 (0.020) | 0.7275 (0.020) | 0.5152 (0.012) | |

| 100 | 0.9878 (0.003) | 0.9878 (0.003) | 0.9865 (0.003) | 0.7690 (0.025) | ||

| 1.8 | 50 | 0.7644 (0.017) | 0.7180 (0.020) | 0.6539 (0.020) | 0.4857 (0.015) | |

| 100 | 0.9828 (0.004) | 0.9828 (0.004) | 0.9826 (0.004) | 0.6597 (0.015) | ||

| 1 | 1.2 | 50 | 0.9918 (0.005) | 0.9946 (0.004) | 0.9880 (0.004) | 0.7529 (0.027) |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| 1.5 | 50 | 0.9778 (0.008) | 0.9859 (0.006) | 0.9745 (0.008) | 0.7034 (0.023) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 0.9991 (0.001) | ||

| 1.8 | 50 | 0.9653 (0.010) | 0.9644 (0.010) | 0.9512 (0.012) | 0.6702 (0.019) | |

| 100 | 0.9992 (0.001) | 0.9992 (0.001) | 0.9992 (0.001) | 0.9656 (0.013) | ||

| 0.5 | 1.2 | 50 | 1 (0) | 1 (0) | 1 (0) | 0.9934 (0.007) |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| 1.5 | 50 | 1 (0) | 1 (0) | 1 (0) | 0.9297 (0.019) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| 1.8 | 50 | 1 (0) | 1 (0) | 0.9985 (0.001) | 0.8307 (0.025) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| Restricted Tweedie SBM | Poisson | Spectral | ||||

|---|---|---|---|---|---|---|

| Random Init. | Poisson Init. | SBM | Clustering | |||

| 2 | 1.2 | 50 | 0.7490 (0.023) | 0.6713 (0.024) | 0.640 (0.021) | 0.4515 (0.014) |

| 100 | 0.9698 (0.007) | 0.9592 (0.011) | 0.9603 (0.011) | 0.6936 (0.023) | ||

| 1.5 | 50 | 0.6921 (0.023) | 0.6327 (0.021) | 0.6031 (0.021) | 0.4596 (0.018) | |

| 100 | 0.9568 (0.009) | 0.9650 (0.007) | 0.9430 (0.011) | 0.6133 (0.014) | ||

| 1.8 | 50 | 0.7052 (0.022) | 0.6315 (0.023) | 0.5727 (0.020) | 0.4174 (0.020) | |

| 100 | 0.9803 (0.004) | 0.9539 (0.013) | 0.9362 (0.013) | 0.6433 (0.012) | ||

| 1 | 1.2 | 50 | 0.9490 (0.013) | 0.9284 (0.014) | 0.9037 (0.013) | 0.6489 (0.021) |

| 100 | 0.9992 (0.001) | 0.9992 (0.001) | 0.9984 (0.001) | 0.9918 (0.003) | ||

| 1.5 | 50 | 0.9330 (0.014) | 0.9193 (0.014) | 0.9127 (0.014) | 0.6304 (0.018) | |

| 100 | 1 (0) | 1 (0) | 0.9976 (0.001) | 0.9926 (0.003) | ||

| 1.8 | 50 | 0.9288 (0.013) | 0.9235 (0.014) | 0.9103 (0.014) | 0.6437 (0.015) | |

| 100 | 0.9992 (0.001) | 0.9992 (0.001) | 0.9967 (0.002) | 0.9375 (0.017) | ||

| 0.5 | 1.2 | 50 | 0.9961 (0.004) | 1 (0) | 1 (0) | 0.8504 (0.027) |

| 100 | 1 (0) | 1 (0) | 1 (0) | 0.9991 (0.001) | ||

| 1.5 | 50 | 0.9847 (0.009) | 1 (0) | 1 (0) | 0.8193 (0.026) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| 1.8 | 50 | 0.9879 (0.007) | 1 (0) | 0.9973 (0.002) | 0.7947 (0.026) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| Restricted Tweedie SBM | Poisson | Spectral | ||||

|---|---|---|---|---|---|---|

| Random Init. | Poisson Init. | SBM | Clustering | |||

| 2 | 1.2 | 50 | 0.4385 (0.032) | 0.4340 (0.027) | 0.4243 (0.025) | 0.2889 (0.022) |

| 100 | 0.8497 (0.013) | 0.8025 (0.019) | 0.774 (0.020) | 0.5134 (0.016) | ||

| 1.5 | 50 | 0.5611 (0.023) | 0.5226 (0.023) | 0.5071 (0.022) | 0.3462 (0.018) | |

| 100 | 0.9097 (0.012) | 0.8606 (0.016) | 0.8146 (0.017) | 0.5737 (0.012) | ||

| 1.8 | 50 | 0.6179 (0.022) | 0.5771 (0.024) | 0.522 (0.021) | 0.4102 (0.018) | |

| 100 | 0.9567 (0.009) | 0.8736 (0.020) | 0.8377 (0.020) | 0.5985 (0.013) | ||

| 1 | 1.2 | 50 | 0.8710 (0.016) | 0.7404 (0.017) | 0.7325 (0.016) | 0.5379 (0.011) |

| 100 | 0.9893 (0.006) | 0.9967 (0.002) | 0.9842 (0.003) | 0.862 (0.022) | ||

| 1.5 | 50 | 0.8709 (0.016) | 0.7763 (0.017) | 0.7684 (0.016) | 0.5601 (0.012) | |

| 100 | 0.9950 (0.004) | 0.9992 (0.001) | 0.9876 (0.003) | 0.8311 (0.022) | ||

| 1.8 | 50 | 0.8806 (0.017) | 0.8039 (0.019) | 0.7901 (0.018) | 0.6092 (0.013) | |

| 100 | 0.9992 (0.001) | 0.9992 (0.001) | 0.9934 (0.002) | 0.8876 (0.022) | ||

| 0.5 | 1.2 | 50 | 0.9414 (0.014) | 0.8998 (0.017) | 0.8817 (0.016) | 0.7379 (0.028) |

| 100 | 0.9956 (0.004) | 1 (0) | 1 (0) | 0.9983 (0.001) | ||

| 1.5 | 50 | 0.9591 (0.012) | 0.9112 (0.015) | 0.8999 (0.015) | 0.7354 (0.026) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

| 1.8 | 50 | 1 (0) | 0.9727 (0.01) | 0.9550 (0.01) | 0.7549 (0.022) | |

| 100 | 1 (0) | 1 (0) | 1 (0) | 1 (0) | ||

Overall, our restricted Tweedie SBM and the Poisson SBM tended to outperform spectral clustering. Among all 54 sets of simulation results, our model with a random initialization compares favourably with other methods in 50 out of 54 cases. In the remaining four sets (marked by a superscript “” in the tables), the Poisson SBM was slightly better. But our method could still be superior to the Poisson SBM in these four sets if we had initialized our algorithm with the estimates from the Poisson SBM. It is evident that in all cases our restricted Tweedie SBM can further improve the clustering result of the Poisson SBM.

5.2 Simulation from a model with covariates

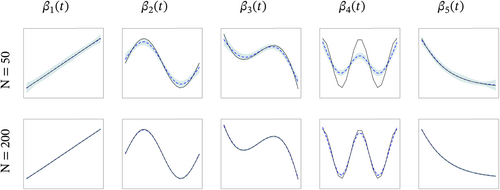

We considered 20 time-fixed covariates and 5 time-varying covariates , all independently generated from the uniform distribution on . The true time-fixed covariate effect consisted of 20 equally spaced values ranging from −1 to 1. The five true time-varying coefficients were specified as: , , , , and . Finally, the datasets were simulated in such a way that the network was observed at equally spaced time points on .

In general, the tuning parameters are to be selected by cross-validation. To reduce the computational cost, here we simply fixed all of them at . The Supplementary Material contains a small sensitivity study using and , from which one can see that the clustering performance is minimally affected by the choice of , but larger values lead to smoother estimates of . We can also see that the optimal tuning parameter is not the same for each function. Most notably, the function has a substantially larger total variation than the other four functions, and would require a smaller tuning parameter as a result. The inclusion of such a function in our simulation study has indeed been deliberate, so that we can highlight this important point.

For all simulated cases with different combinations of and , Table 4 summarizes the metrics, NMI, and , for , across 50 repeated simulation runs. Figure 2 shows visually how well the time-varying coefficients were estimated for Scenario 5 with , the most challenging case in our entire simulation study. In particular, the pointwise mean and five times the standard deviation of each are plotted, for and 200. Similar plots for Scenario 4 and Scenario 5 with are included in the Supplementary Material. From these plots, as well as from Table 4 itself, it is quite clear that the estimation performance of covariate effects across different scenarios is largely unaffected by the varying levels of clustering difficulty, as foreshadowed by Theorem 1. Finally, we also report computational times in the Supplementary Material for all simulation scenarios.

| Scenario 4: | ||||

|---|---|---|---|---|

| NMI | 1 | 1 | 1 | 1 |

| (0) | (0) | (0) | (0) | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | ) | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Scenario 5: | ||||

|---|---|---|---|---|

| NMI | 1 | 1 | ||

| () | (0) | () | (0) | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

| Err() | ||||

| () | () | () | () | |

6 An application: International trading

In this section, we apply the restricted Tweedie SBM to study international trading relationships among different countries and how these relationships are influenced by geographical distances. As an example, we focus on the trading of apples—not only are these data readily available from the World Bank (World Integrated Trade Solution, 2023), but one can also surmise a priori that geographical distances will likely have a substantial impact on the trading due to the weight and perishable nature of this product.

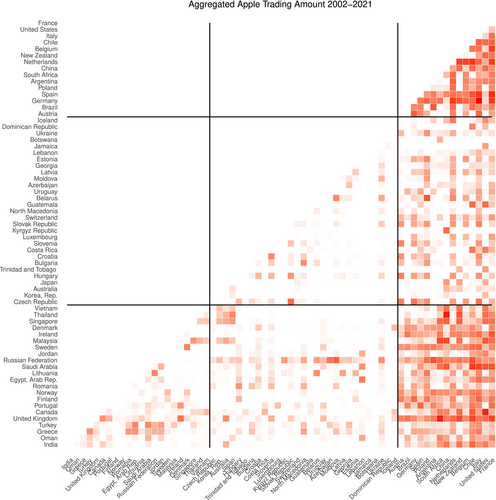

From the international trading datasets provided by the World Bank (World Integrated Trade Solution, 2023), we have collected annual import and export values of edible and fresh apples among countries from to . In each given year , we observe a 66-by-66 matrix where each cell represents the trading value from country to country in thousands of US dollars during that year. We then average with its transpose to ensure symmetry. Finally, a small number of entries with values ranging from 0 to 1 (i.e., total trading values less than $1,000) are thresholded to 0, and the remaining entries are logarithmically transformed. For the covariate , we use the shortest geographical distance between the two trading countries based on their borders, which we calculate using R packages maps (code by Becker et al., 2022, v3.4.1) and geosphere (Hijmans, 2022, v1.5–18).

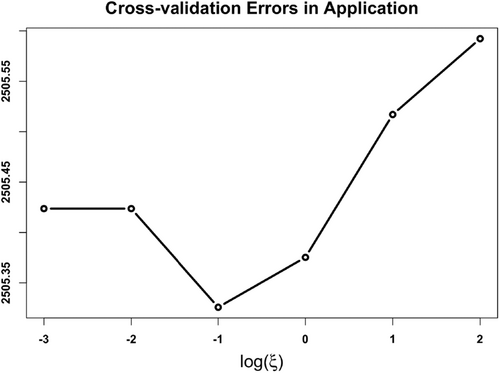

We employ the cross-validation procedure outlined in Section 4.2 to choose the tuning parameter . Figure 3 displays the CV error, showing the optimal tuning parameter to be .

Table 5 shows how the 66 countries are clustered into three communities by our method. Figure 4 displays the aggregated matrix, , with rows and columns having been permuted according to the inferred community labels. Clearly, countries in the first community trade intensively with each other and with countries in the third community. While both the second and third communities consist of countries that mainly trade with countries in the first community (rather than among themselves or between each other), the trading intensity with the first community is much higher for the third community than it is for the second.

| Community | Countries |

|---|---|

| 1 | France, United States, Italy, Chile, Belgium, New Zealand, Netherlands, China, South Africa, Argentina, Poland, Spain, Germany, Brazil, Austria |

| 2 | Iceland, Dominican Republic, Ukraine, Botswana, Jamaica, Lebanon, Estonia, Georgia, Latvia, Moldova, Azerbaijan, Uruguay, Belarus, Guatemala, North Macedonia, Switzerland, Slovak Republic, Kyrgyz Republic, Luxembourg, Slovenia, Costa Rica, Croatia, Bulgaria, Trinidad and Tobago, Hungary, Japan, Australia, Korea Rep., Czech Republic |

| 3 | Vietnam, Thailand, Singapore, Denmark, Ireland, Malaysia, Sweden, Jordan, Russian Federation, Saudi Arabia, Lithuania, Egypt Arab Rep., Romania, Norway, Finland, Portugal, Canada, United Kingdom, Turkey, Greece, Oman, India |

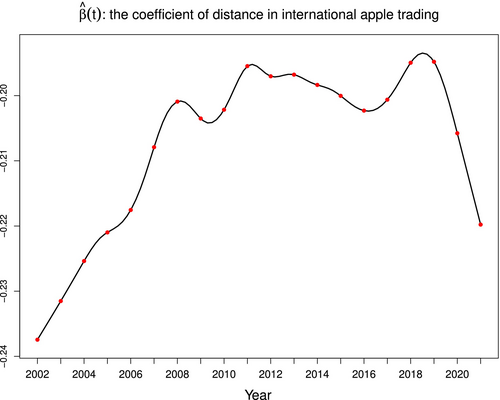

Figure 5 displays , the estimated effect of geographical distances on apple trading over time. We can make three pertinent observations. First, is negative during the entire study period—not surprising since longer distances can only increase the cost and the duration of transportation, and negatively impact fresh apple trading. Next, the magnitude of shows a generally decreasing trend over the twenty-year period of observation, implying that the negative effect of geographical distances is diminishing. This may be attributed to more efficient transportation methods and a reduced cost of shipment with time. Finally, two relatively big “dips” in are clearly visible—one after the financial crisis in 2008, and another after the onset of the COVID-19 pandemic in 2020.

As we stated earlier in Section 2.2, methodologically we do not focus on the choice of in this paper. Instead, we include in the Supplementary Material some additional clustering results for these data using and , so as to provide some additional insights on the effect of varying . One key message of our paper has been that the functional estimate, , is unaffected by the community structure or the choice of , and indeed this is the case. For these data from 2002 to 2021, appears to be “a maximum possible choice”, as an empty community occurs if is chosen. The additional clustering results in the Supplementary Material indicate that, as increases, more detailed patterns start to emerge. For instance, two groups of countries—(Germany, Spain, China, Netherlands, Belgium, Italy, United States, France) and (Brazil, Argentina, South Africa, New Zealand, Chile)—are consistently clustered together whether , 5, or 9. These countries are all active in apple trading. When , they are clustered into a single community, but at and , they divide into two distinct communities. The latter group trades intensively with specific partners, while the former group trades broadly with nearly all countries. Therefore, at the lower value of , general trading patterns are already detectable, whereas at larger values of , more nuanced differences in trading behaviours are uncovered. As is often the case, there is not one “right” answer for these type of problems, and we can gain different insights by adopting various answers.

7 Discussion

In this paper, we have presented an extension of the classical SBM to address several critical challenges. Our main contributions can be summarized as follows.

First, we replaced the Bernoulli distribution with the restricted Tweedie distribution, allowing us to model nonnegative zero-inflated edge weights. This represents a significant improvement over traditional community detection approaches and addresses a previously unmet need in the existing literature for handling such network data. Moreover, this advancement is poised to have even broader applications, particularly in the analysis of financial-related network data.

Second, our methods further incorporate dynamic effects of nodal information, making them suitable for a wide range of real-world applications and providing a new mechanism for explaining the dynamics of network formation via the effect of covariates.

One of the most striking findings of our research is that, in the limit as the number of nodes approaches infinity, estimating the covariate coefficients becomes asymptotically independent of the community labels when maximizing the likelihood function. This insight has led to the development of an efficient two-step algorithm, enhancing the practicality of our framework.

The application of our model to an international trading network, focusing on the dynamic effects of geographic distance between countries, has provided valuable insights into the complexities of global economic relationships. Additionally, our simulation studies have demonstrated good clustering performance of our proposed framework, further highlighting its effectiveness in capturing hidden patterns within networks. In terms of theoretical contributions, our main focus is on showing that the profile likelihood specified in expression (9) is asymptotically independent of the initial community assignment, as established by Theorem 1. But as pointed out by one referee, it is desirable to investigate asymptotic properties such as consistency and the convergence rate of the proposed estimator. However, these are challenging problems for the following reasons. Our target is to maximize the log-likelihood function given in equation (8). As indicated in statement (12), ideally we want to maximize the marginal distribution of over a proper parameter space if we are able to compute the summation of the log-likelihood function over all possible configurations of . To deal with the infinite-dimensional objects in this setting, we may consider using the sieve estimation method to approximate each with proper basis functions. Then this problem can be formulated as a standard -estimation problem. Theoretical analysis of the large-sample properties of the estimator entails a quantification of the complexity of the sieve space. However, it should be noted that this procedure is not feasible as it is quite challenging to compute the summation over terms for a large . Thus, we have to resort to variational inference to restrict the joint distribution of to a tractable family. Then we apply the EM algorithm to deal with the latent variable under this tractable setting. This treatment renders theoretical analysis of the asymptotic properties much more difficult, since it is no longer a standard -estimation problem. Thus, we will leave this to future work.

While our framework demonstrates its effectiveness, in practice community labels themselves may also evolve over time, which presents a promising avenue for future research. Extending our model to accommodate time-varying community labels, as demonstrated in works such as Xu and Hero (2014) and Matias and Miele (2017) where Markov chains are employed, is a straightforward next step. However, it is crucial to address the identifiability issues associated with these parameters, which will undoubtedly be a key focus of further exploration.

In conclusion, our work extends the classic SBM framework to better model nonnegative zero-inflated edge weights and analyze complex networks with dynamic nodal information. We believe that the methodologies presented here will inspire future research in the field of network analysis, opening doors to new insights and applications across various domains.

Data sharing

The R code used for conducting the simulation studies and for analyzing the trading dataset is available at https://github.com/JieJJian/TweedieSBM. The trading dataset itself can be downloaded from https://wits.worldbank.org/.

Acknowledgements

We sincerely thank the Editor, the Associate Editor, and two reviewers for their valuable feedback and constructive suggestions, which have helped improve the quality and clarity of our manuscript.

Funding

Mu Zhu and Peijun Sang are supported by the Natural Sciences and Engineering Research Council (NSERC) of Canada under grants RGPIN-2023-03337 and RGPIN-2020-04602, respectively.

Appendix A: MLE of

In this section, we provide the detailed derivation of as defined in expression (9). Subsequently, we substitute this resulting maximum likelihood estimate of back into expression (9), demonstrating how the equation presented in the first line of statement (10) is established.

Appendix B: Proof of Theorem 1

In this section, we prove Theorem 1. Before laying out the main proof, we first introduce several lemmas.

Proof.According to Conditions 3.1 and 3.2, and are iid random variables, with mean and respectively. Specifically, is a positive constant. By the weak law of large numbers, we have

Proof.The proof is similar to that of Lemma 1. If we can show that, at each time point , , for are iid with a nonzero mean, we complete the proof. For each node pair , both their pairwise covariate and community labels and are iid. Moreover, conditional on , and are iid as well. Therefore, for are iid, with mean

Next, we prove Theorem 1.

Proof of Theorem 1.By Lemmas 1 and 2 and the Continuous Mapping Theorem,