Predictive processing of music and language in autism: Evidence from Mandarin and English speakers

Abstract

Atypical predictive processing has been associated with autism across multiple domains, based mainly on artificial antecedents and consequents. As structured sequences where expectations derive from implicit learning of combinatorial principles, language and music provide naturalistic stimuli for investigating predictive processing. In this study, we matched melodic and sentence stimuli in cloze probabilities and examined musical and linguistic prediction in Mandarin- (Experiment 1) and English-speaking (Experiment 2) autistic and non-autistic individuals using both production and perception tasks. In the production tasks, participants listened to unfinished melodies/sentences and then produced the final notes/words to complete these items. In the perception tasks, participants provided expectedness ratings of the completed melodies/sentences based on the most frequent notes/words in the norms. While Experiment 1 showed intact musical prediction but atypical linguistic prediction in autism in the Mandarin sample that demonstrated imbalanced musical training experience and receptive vocabulary skills between groups, the group difference disappeared in a more closely matched sample of English speakers in Experiment 2. These findings suggest the importance of taking an individual differences approach when investigating predictive processing in music and language in autism, as the difficulty in prediction in autism may not be due to generalized problems with prediction in any type of complex sequence processing.

INTRODUCTION

The human brain actively makes predictions of upcoming events through their associations with the current context (Bar, 2007; Bendixen, 2014). This predictive process enables efficient adaptation to a dynamically changing world (Clark, 2013; Sinha et al., 2014). In daily interactions, prediction facilitates language comprehension and speech communication through rapid and accurate anticipation of upcoming words in a sentence based on existing knowledge and experience (Kuperberg & Jaeger, 2016; Miller & Selfridge, 1950). Similar to language, music also has a hierarchical structure that unfolds rapidly in time following tonal and syntactic rules (Krumhansl & Kessler, 1982; Krumhansl & Shepard, 1979; Patel, 2013; Patel & Morgan, 2017). Consequently, music perception and appreciation also require predictive processing in deriving anticipation and gaining enjoyment/pleasure (Cheung et al., 2019; Gold et al., 2019; Huron, 2006). The similarities between language and music in hierarchical predictive processing make them excellent candidates to investigate the mechanisms of prediction in comparable domains (Fogel et al., 2015; Patel & Morgan, 2017).

Regardless of domain, predictive processing involves learning the regularities between antecedents and consequents, and detecting and applying the learned associations to a similar situation (Cannon et al., 2021; Perruchet & Pacton, 2006). Apart from top-down mechanisms, prediction in language and music also builds on implicit statistical learning, a bottom-up process (Emberson et al., 2013) where the probability of occurrence of an upcoming event is predictable based on a given context (Conway et al., 2010; Fogel et al., 2015; Kuperberg & Jaeger, 2016; Miller & Selfridge, 1950; Morgan et al., 2019). While predictive processing is evident in early infancy as a learning mechanism (Háden et al., 2015; Köster et al., 2020; Trainor, 2012), extensive research has shown that autism spectrum disorder (autism hereafter) is associated with atypical predictive skills, including predictive learning and predictive response (Cannon et al., 2021; Lawson et al., 2014; Pellicano & Burr, 2012; Sinha et al., 2014).

Autism is a neurodevelopmental condition characterized by reduced social communication and social interaction skills, repetitive and restricted behaviors and interests, and atypical sensory processing (DSM-5, American Psychiatric Association, 2013). Despite mixed results in the literature (Cannon et al., 2021), autistic individuals generally demonstrate atypical predictive skills in social functioning (Greene et al., 2019; Kinard et al., 2020; Palumbo et al., 2015), visual processing (Karaminis et al., 2016; Sheppard et al., 2016), auditory processing (Font-Alaminos et al., 2020; Goris et al., 2018), theory of mind (Balsters et al., 2017), recognizing emotions (Leung et al., 2022, but see Leung et al., 2023; Zhang et al., 2022), and action prediction (Amoruso et al., 2019; Schuwerk et al., 2016). According to the most recent computational theoretical accounts, prediction difficulties in autism may arise due to imbalanced precision at higher (e.g., less precise prior beliefs) versus lower (e.g., more precise sensory inputs) levels (Brock, 2012; Lawson et al., 2014; Pellicano & Burr, 2012), atypical contextual modulation of this balance (Van de Cruys et al., 2014), difficulties in learning regularities (i.e., statistical learning ability; Sinha et al., 2014), imbalanced processing of global and local regularities (Xu et al., 2022), and the reduced speed of integrating new information to guide behavior—the “slow-updating” hypothesis (Lieder et al., 2019; Vishne et al., 2021).

In the language domain, autistic individuals show difficulties in semantic prediction and/or language comprehension (Booth & Happé, 2010; Frith & Snowling, 1983; Happé, 1997). They have a greater tendency than neurotypical individuals to complete sentences like “In the sea there are fish and …” in a local manner (“chips”) than a global manner (“sharks”) (Booth & Happé, 2010). Autistic children also tend not to adjust the pronunciation of homographs based on their semantic/syntactic context, e.g., by pronouncing “BOW” (/bəʊ/ vs. /baʊ/) incorrectly in a certain context such as “He had a pink BOW” versus “He made a deep BOW” (Frith & Snowling, 1983). Based on this evidence, weak central coherence (WCC) theory proposes that autistic individuals have reduced central coherence due to their preference for the parts over the whole (Frith & Happé, 1994; Happé, 1997). However, other studies suggest that irrespective of an autism diagnosis, individuals' language abilities affect behavioral performance during language processing, since poorer performance (i.e., reduced sensitivity to sentence context) is associated with poorer language scores (Brock et al., 2008; Norbury, 2005). Mixed results have also been reported in neuroimaging studies. Some studies indicate atypical N400 responses (an index of semantic processing) (Ring et al., 2007) and restricted neural networks in autism when processing sentences with semantically congruent versus incongruent endings (Catarino et al., 2011). Other studies show that semantic processing may be preserved in autism, although atypical processing is observed at a later stage due to reduced top-down control (Henderson et al., 2011), or is associated with delayed processing speed (DiStefano et al., 2019).

In the music domain, autism has been associated with typical or extraordinary musical skills, including perception of pitch, melody, and musical emotions (Chen et al., 2022; Janzen & Thaut, 2018; O'Connor, 2012; Ouimet et al., 2012; Quintin, 2019; Wang, Ong, et al., 2023), as well as enculturation to the pitch structure of Western music (DePape et al., 2012). However, reduced performance has also been observed in pitch, emotion, and melodic processing (Bhatara et al., 2010; Ong et al., 2023; Sota et al., 2018), beat synchronization (Kasten et al., 2023; Morimoto et al., 2018; Vishne et al., 2021), active rhythmic engagement (Steinberg et al., 2021), and metrical enculturation in autism (DePape et al., 2012). In a musical imagery task involving predictive processing, despite having impaired language abilities, autistic children showed comparable or better performance than non-autistic children in judging pitch and tempo manipulations of the continuations of familiar song excerpts (Heaton et al., 2018). Furthermore, autistic individuals showed intact predictive processing of rhythmic tones when presented with standard and deviant rhythmic tone sequences (Knight et al., 2020). Additionally, autistic children, adolescents, and adults were able to identify positive and negative emotions in music (Gebauer et al., 2014; Heaton et al., 1999; Quintin et al., 2011), using neural networks in cortical and subcortical brain areas that are typically implicated in emotion processing and reward (Frühholz et al., 2016), such as the amygdala, ventral striatum, medial orbitofrontal cortex, ventral tegmental area, and caudate nucleus (Caria et al., 2011; Gebauer et al., 2014). Since both the perception/appreciation of music and musical emotion identification require prediction (Cheung et al., 2019; Gold et al., 2019), it could be assumed that autistic individuals have largely intact predictive processing of music based on the above evidence.

Taken together, previous literature on linguistic and musical prediction in autism seems to suggest atypical performance in the language domain but not in the music domain, even though predictive processing involves implicit statistical models in both domains (Conway et al., 2010; Fogel et al., 2015; Miller & Selfridge, 1950; Morgan et al., 2019). However, there has been no direct comparative investigation of prediction based on naturally learned associations across language and music in autism. It remains unclear whether autistic individuals would show difficulties with prediction as “future-directed information processing” in hierarchically structured sequences as constrained by temporal, syntactic, or semantic rules across the two domains (Ferreira & Chantavarin, 2018; Koelsch et al., 2019; Kuperberg & Jaeger, 2016; Patel, 2003; Rohrmeier & Koelsch, 2012; Slevc, 2012). In addition, most studies used artificial and arbitrary cue-outcome associations rather than naturalistic antecedents and consequents to examine predictive skills in autism (Cannon et al., 2021). Using ecologically valid musical and linguistic stimuli, the current study examined naturally formed statistical predictive behavior across domains in autism, adopting the approach by recent studies of musical and linguistic prediction (Fogel et al., 2015; Patel & Morgan, 2017).

Specifically, prediction in language can be examined through sentence processing tasks, during which the brain pre-activates forthcoming words following a probabilistic approach based on the current context (DeLong et al., 2005; Kutas & Hillyard, 1984; Nieuwland et al., 2020). Sentence cloze tasks have been widely used to assess linguistic predictive ability, where participants are required to complete a context/sentence with an appropriate word/phrase (Chik et al., 2012; Di Vesta et al., 1979; Neville & Pugh, 1976; Taylor, 1953). The production of a word/phrase when completing a sentence in a cloze task involves contextual semantic processing and a degree of expectation (DeLong et al., 2005; Nieuwland et al., 2020). Based on the context, highly expected words are more likely to be produced than less expected words. For example, the highly expected word “wet” is more frequently produced than the word “cold” when completing the sentence “You need a raincoat to avoid getting ___,” even though both words are semantically correct in this context. The degree of expectation for a particular word/phrase in a sentence is usually determined by calculating the proportion of people (cloze probability) who have completed the sentence using the word/phrase (Taylor, 1953). Behaviourally, words with high cloze probabilities are activated more rapidly and read with faster speeds than low probability words (Smith & Levy, 2013; Staub et al., 2015). Linguistic prediction can thus be assessed through production of final words/phrases in sentence cloze tasks (Staub et al., 2015), or through perception of final words/phrases in semantic congruency tasks where participants judge the semantic predictability of a final word, which can either be congruent, neutral, or anomalous in a sentence context (Stringer & Iverson, 2020).
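As a concrete illustration of the cloze probability defined above (the proportion of respondents who complete a stem with a given word), the following is a minimal sketch. The response list is hypothetical, the function name `cloze_probabilities` is introduced here for illustration only, and excluding blank responses from the denominator is an assumption rather than a documented norming rule.

```python
from collections import Counter

def cloze_probabilities(responses):
    """Return {completion: proportion of respondents who produced it}."""
    # Light normalisation so that identical answers pool together.
    # Assumption: blank responses are dropped before computing proportions.
    cleaned = [r.strip().lower() for r in responses if r.strip()]
    counts = Counter(cleaned)
    total = len(cleaned)
    return {word: n / total for word, n in counts.items()}

# Hypothetical completions of "You need a raincoat to avoid getting ___"
responses = ["wet"] * 7 + ["cold"] * 2 + ["soaked"]
probs = cloze_probabilities(responses)

# The item's cloze probability is that of its most frequent completion.
best_word = max(probs, key=probs.get)
print(best_word, probs[best_word])
```

In this made-up sample, "wet" is the modal completion, so it would define the item's cloze probability, while "cold" remains a valid but less expected response.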

Like language processing, music perception also involves multiple levels of prediction (Koelsch et al., 2019; Patel & Morgan, 2017; Vuust et al., 2022) and engages probabilistic predictive processing (Egermann et al., 2013; Morgan et al., 2019; Pearce & Wiggins, 2006). Using perceptual rating tasks, studies of music expectation showed that notes/chords that violate harmonic or tonal structure are deemed unexpected by both musicians and non-musicians (Egermann et al., 2013; Jiang et al., 2016; Marmel et al., 2008; Schellenberg, 1996; Steinbeis et al., 2006). Recently, Fogel et al. (2015) developed a novel melodic cloze task asking participants to sing the next note after hearing the opening of an unfamiliar, naturalistic tonal melody. Responses demonstrated that musical expectancy is influenced by statistical learning of note transition probabilities, gestalt principles of auditory processing, and the tonal hierarchy and implied harmonic structure (Fogel et al., 2015; Morgan et al., 2019; Verosky & Morgan, 2021). Thus, both the melodic cloze task and the sentence cloze task allow the direct comparison of predictive processing through production tasks across music and language (Fogel et al., 2015).

Capitalizing on these recent advances in the field, we employed the melodic cloze task from Fogel et al. (2015) and created a matched sentence cloze task to compare predictive production in music and language in autism (Figure 1). Specifically, we matched the items (melodic or sentence stems) in the number of notes/syllables and in the cloze probabilities of the most frequently produced final notes/words, based on the norms. The tasks required participants to either sing the note or say the word/phrase they expected to come next in a melody/sentence. We included a pitch imitation task to assess participants' pitch matching abilities because musically untrained participants may be less able to sing accurately (Dalla Bella & Berkowska, 2009; Pfordresher et al., 2010). We also included perceptual rating tasks of completed versions of the melodies and sentences to examine whether participants' perceptual ratings of the melodies and sentences correlated with their production performance.

Finally, evidence suggests that pitch, music, and language processing abilities in autism interact with each other (Eigsti & Fein, 2013; Germain et al., 2019; Globerson et al., 2015; Jones et al., 2009; Wang et al., 2022), and at the same time they are also modulated by cognitive abilities such as non-verbal IQ, receptive vocabulary, and memory (Chowdhury et al., 2017; Jamey et al., 2019; Kargas et al., 2015; Ong et al., 2023) and impacted by age (Jamey et al., 2019; Mayer et al., 2016; Ong et al., 2023; Wang et al., 2021) and language background (Wang, Xiao, et al., 2023; Yu et al., 2015). Put simply, differences in music and/or language processing between autistic and non-autistic individuals may be due to differences in cognitive abilities and individual factors rather than autism per se. Thus, we reported data from a Mandarin-speaking sample (Experiment 1) and an English-speaking sample (Experiment 2) and compared group performance with and without including cognitive factors, age, pitch matching ability, and musical training experience in the models. We hypothesized that, across both samples, the autistic group would show intact prediction in the music domain but atypical prediction in the language domain, with participants' production performance associated with their perceptual ratings in both domains. We also predicted that background measures including age, pitch, musical, and cognitive abilities would impact predictive processing of music and language across both Mandarin and English samples of autistic and non-autistic individuals.

EXPERIMENT 1

Method

Participants

Thirty-one autistic participants (4 females) were recruited from autism centres in Nanchang and Nanjing, China, and 32 age-matched non-autistic participants (5 females) were recruited from local mainstream schools and the University of Nanchang. All participants in the autistic group had a professional clinical diagnosis of autism, which was further confirmed using the Autism Diagnostic Observation Schedule, second edition (ADOS-2, Lord et al., 2012) by author LW (with clinical and research reliability for administration and scoring). Participants in the non-autistic group reported no neurodevelopmental/psychiatric disorders or a family history of autism. Two participants in the autistic group did not complete the melody rating task, due to fatigue, loss of interest, or difficulty in concentrating on the task, and their data are marked as “NA” in the dataset. All participants had normal hearing with pure-tone air conduction thresholds of 25 dB HL or better at frequencies of 0.5, 1, 2, and 4 kHz. The study protocol was approved by the research ethics committees of University of Reading and Shanghai Normal University. Parents provided written informed consent for their children's participation.

Background measurements

Participants' verbal ability was estimated using the Peabody Picture Vocabulary Test, revised edition (PPVT-R, Dunn & Dunn, 1981), and nonverbal intelligence assessed with Raven's Standard Progressive Matrices (RSPM, Raven et al., 1998). Given that the Chinese norms for PPVT-R only included ages from 3.5 to 9 (Sang & Miao, 1990), standardized scores were calculated based on American norms (Dunn & Dunn, 1981). Correlation analysis revealed a significant positive relationship between the standardized scores obtained based on the Chinese norms and those based on the American norms (r = 0.95) for participants at or below 9 years old, thus confirming the validity of this approach. RSPM scores were normalized using the means and standard deviations across different age ranges based on a Chinese sample (Zhang & Wang, 1989). Participants' short-term memory was tested using the forward Digit Span task (Wechsler, 2008), implemented via the Psychology Experiment Building Language (PEBL) test battery (Piper et al., 2016), where digit span was calculated as the maximum number of digits correctly recalled at least once among two trials of the same length of digits. Demographic information and musical training experience of participants were collected through a questionnaire, where musical training in years was calculated by summing across experience with all instruments including voice (Pfordresher & Halpern, 2013). As can be seen from Table 1, the two groups were matched on age, gender, musical training experience, non-verbal IQ, and digit span. Although both groups showed advanced receptive vocabulary skills, the non-autistic group scored significantly higher than the autistic group on this measure.
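The digit span scoring rule described above (the longest sequence length recalled correctly on at least one of its two trials) can be sketched as follows. The trial log and the function name `digit_span` are hypothetical, and returning 0 when no trial is passed is an assumption; the PEBL implementation may store and score its data differently.

```python
def digit_span(trials):
    """Score forward digit span from (sequence_length, correct) pairs.

    A length counts as passed if at least one of its trials was correct;
    the span is the longest passed length (0 if none was passed).
    """
    passed = [length for length, correct in trials if correct]
    return max(passed) if passed else 0

# Hypothetical log: two trials per length, increasing in length.
trials = [
    (3, True), (3, True),
    (4, True), (4, False),
    (5, False), (5, True),
    (6, False), (6, False),
]
span = digit_span(trials)
print(span)
```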

| Measures | Autistic group | Non-autistic group | t or chi-squared test between groups |
|---|---|---|---|
| n | 31 | 32 | NA |
| Age | 10.49 (2.52) | 11.47 (2.71) | t(61) = −1.46, p = 0.150 |
| Age range | 7.00–15.91 | 7.55–15.69 | NA |
| Sex | F = 4, M = 27 | F = 5, M = 27 | χ2(1) = 0.00, p = 1 |
| Musical training | 0.81 (1.21) | 0.47 (1.06) | t(61) = 1.16, p = 0.250 |
| NVIQ | 0.72 (0.96) | 0.86 (0.65) | t(61) = −0.68, p = 0.500 |
| Receptive vocabulary | 129.06 (24.28) | 141.41 (12.63) | t(61) = −2.50, p = 0.015 |
| Digit span | 8.35 (1.03) | 8.13 (1.08) | t(61) = 0.85, p = 0.400 |

- Note: F = female, M = male. NVIQ: standard score of Raven's standard progressive matrices; Receptive vocabulary: standard score of The Peabody Picture Vocabulary Test, revised edition (PPVT-R).

Cloze production and pitch matching tasks

Stimuli

Stimuli in the melodic cloze task were from Fogel et al. (2015), which included 45 pairs of melodic stems in 12 major keys, three meters (3/4, 4/4, and 6/8), and with a tempo of 120 beats per minute. Containing 5–9 notes, the stems in each pair had the same length, rhythm, and melodic contour, but differed in the underlying harmonic structure as influenced by the pitch of some of the notes. In each pair, one stem implied an authentic cadence (AC) at the end, and the other did not (non-cadence, NC). Whereas each AC stem elicited a strong expectation for a particular subsequent note (the tonic, or central tone of the prevailing key), the NC stem did not create a strong expectation for any particular subsequent note. Thus, AC stems were “high constraint” and NC stems were “low constraint” in terms of how they constrained expectations for the subsequent note. Two versions of the stems were created, with the latter one octave lower than the former, for use with males vs. females (Fogel et al., 2015). Although Western music and traditional Chinese music have different systems, owing to globalization Chinese participants are widely exposed to Western music and its tonal system (Huang, 2012). Previous studies have reported that Mandarin speakers who were not musicians could differentiate tonal regularities from irregularities and were sensitive to tonality and emotions in Western music (Fang et al., 2017; Jiang et al., 2016, 2017; Sun et al., 2020; Zhou et al., 2019). Thus, the use of Western tonal melodies from Fogel et al. (2015) was ecologically valid for our Mandarin-speaking participants.

To match the cloze probabilities of the melodic stems, a list of 204 sentence stems was selected based on the norms established in previous studies in English (Arcuri et al., 2001; Block & Baldwin, 2010) and translated into Chinese. To establish the cloze probabilities of these sentence stems in Chinese, a validation study was conducted with a group of Mandarin-speaking neurotypical adults (n = 34) via an online survey (https://www.onlinesurveys.ac.uk). Using the same instruction as in Block and Baldwin (2010), these volunteers were asked to provide a word/phrase that they thought would best complete each of the sentence stems. The cloze probability for each sentence was then calculated according to the participants' responses. A final battery of 90 cloze sentences was selected based on their cloze probabilities and numbers of syllables, individually matched with those of the melodic stems (within ±5% difference in cloze probability and within ±2 difference in the number of notes/syllables). These sentence stems were then recorded by a native female speaker of Mandarin using Praat (Boersma & Weenink, 2001), with 44.1 kHz sampling rate and 16-bit amplitude resolution. The details of the melodic and sentence stems are shown in Table 2.
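The item-level matching criteria can be expressed as a simple check. This is a sketch only: the `Stem` class and `is_matched` function are hypothetical names, the example values are invented, and reading "±5%" as five percentage points (rather than a relative difference) is an assumption.

```python
from dataclasses import dataclass

@dataclass
class Stem:
    cloze: float  # cloze probability of the most frequent completion, in %
    length: int   # number of notes (melody) or syllables (sentence)

def is_matched(melody, sentence, max_cloze_diff=5.0, max_length_diff=2):
    """True if a sentence stem falls within both tolerances of a melodic stem.

    Assumption: the cloze tolerance is in percentage points.
    """
    return (abs(melody.cloze - sentence.cloze) <= max_cloze_diff
            and abs(melody.length - sentence.length) <= max_length_diff)

# Hypothetical melodic stem and three candidate sentence stems.
melody = Stem(cloze=55.0, length=8)
print(is_matched(melody, Stem(cloze=58.8, length=7)))  # within both limits
print(is_matched(melody, Stem(cloze=61.8, length=8)))  # cloze too far apart
print(is_matched(melody, Stem(cloze=55.9, length=5)))  # length too far apart
```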

| Measure | Melodic stem: Range | Melodic stem: Mean | Melodic stem: SD | Sentence stem: Range | Sentence stem: Mean | Sentence stem: SD |
|---|---|---|---|---|---|---|
| Cloze probability (%) | 20.00–100.00 | 55.37 | 21.32 | 14.71–97.06 | 55.78 | 21.59 |
| Lengthᵃ | 6–9 | 8.40 | 0.83 | 6–9 | 8.36 | 0.87 |
| Pitch range (stᵇ)ᶜ | 5.20–29.11 | 14.83 | 6.01 | 5.80–28.91 | 13.47 | 6.43 |
| Duration (s) | 2.50–8.66 | 5.02 | 1.23 | 1.52–2.74 | 2.23 | 0.25 |

- ᵃ The number of notes/syllables.

- ᵇ Semitone.

- ᶜ The mean distance between the highest and lowest pitch in the stem.

Using a Latin square design, the melodic stems were pseudorandomised into 8 lists of 45 items, among which 22/23 were AC stems and the rest were NC stems (Fogel et al., 2015). The stems from the same pair appeared in different lists, which were assigned to participants in a counterbalanced order. Using the same randomization method as in the melodic cloze task, the 90 matched sentence stems were also grouped into 8 lists of 45 items and presented in counterbalanced order across participants.

Procedure

The experiment was carried out in classrooms in local autism centres in Nanchang and Nanjing, China. Cloze stems were presented with PsychoPy (version 1.9.1) through Sennheiser HD280 pro headphones connected to a laptop via a Roland RUBIX22 USB Audio Interface. Prior to the melodic cloze task, participants' note production accuracy was evaluated using a pitch matching task. Eight notes were played one at a time: F4, A4, B3, G#4, A#3, D4, C#4, and Eb4, corresponding to 349.2, 440.0, 246.9, 415.3, 233.1, 293.7, 277.2, 311.1 Hz, respectively, for female participants. Notes that were one octave lower were used for male participants, with fundamental frequencies at 174.6, 220.0, 123.5, 207.7, 116.6, 146.9, 138.6, and 155.6 Hz. Participants were instructed to imitate the pitch of the notes as closely as possible. In the melodic cloze task, after hearing a melodic stem, participants were instructed to “sing the note you think comes next” by humming or on a syllable of their own choice (e.g., “la”, “da”, etc.) within a 5-sec recording window. In the sentence cloze task, after hearing a sentence stem, participants were instructed to “say the word/phrase you think best completes the sentence” within a 5-sec recording window (Figure 1). A short practice session was presented to familiarize participants with the task procedure and stimuli, using different cloze melodies/sentences from the actual task. From the practice sessions, all participants understood the 5-sec response window as required when performing the tasks.
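The target frequencies listed for the pitch matching task follow from twelve-tone equal temperament with A4 = 440 Hz. The sketch below reproduces them; the helper name `note_to_hz` and the note-name parsing are illustrative assumptions, not part of the experimental software.

```python
# Semitone offsets within an octave for the note names used in the task.
NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "Eb": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}

def note_to_hz(name, a4=440.0):
    """Equal-tempered frequency of a note such as 'G#4' (A4 = 440 Hz)."""
    pitch, octave = name[:-1], int(name[-1])
    midi = 12 * (octave + 1) + NOTE_OFFSETS[pitch]  # A4 is MIDI note 69
    return a4 * 2 ** ((midi - 69) / 12)

female_notes = ["F4", "A4", "B3", "G#4", "A#3", "D4", "C#4", "Eb4"]
female_hz = [round(note_to_hz(n), 1) for n in female_notes]
# One octave lower means exactly half the frequency.
male_hz = [round(note_to_hz(n) / 2, 1) for n in female_notes]
print(female_hz)
print(male_hz)
```

The computed female-range values agree with the frequencies given above, and halving them gives the male-range values to within about 0.1 Hz of those listed.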

Perceptual rating tasks

Stimuli

In the rating tasks, the stimuli included the melodies and sentences completed/produced in full, including the last notes/words that had the highest cloze probabilities based on the norms. The norms for the notes were from Fogel et al. (2015), and the norms for the words were from the validation study (described above).

Procedure

In the rating tasks, which followed the production task, participants were told that “In the previous production tasks, you have tried to complete the melodies/sentences yourself. In this task, another person has completed the melodies/sentences using their preferred notes/words. On a scale of 1 to 7, please rate how well you think the last note/word(s) continues/completes the melody/sentence (1: very badly; 7: very well). Would you use the same note/word(s) to continue/complete the melody/sentence?” To familiarize participants with the rating tasks, a short practice session was presented, using the same melodies/sentences as in the cloze practice sessions but with the last note/word(s) added.

Data analysis

Participants' production data were analyzed offline. The sung notes in the melodic cloze and pitch matching tasks were manually labeled, and their fundamental frequencies (F0) were extracted using ProsodyPro (Xu, 2013) in Praat (Boersma & Weenink, 2001). For the pitch matching task, the accuracy of note production was assessed individually, and the deviations (in cents; 100 cents = 1 semitone) in pitch from the actual notes were averaged across the eight notes for each participant. The sung notes produced in the melodic cloze task were also assessed individually for each participant. The F0 of each sung note was matched to the closest semitone (within 50 cents deviation) in the Western chromatic scale (e.g., A4 = 440 Hz). When analyzing the pitch matching and melodic cloze production data, we allowed octave jumps by the participants due to their different vocal ranges so that the final F0 values were adjusted (by ±12 semitones) before comparing them against the expected notes.
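The pitch computations described in this paragraph can be sketched as follows: deviation in cents, folding by whole octaves, and snapping an F0 to the nearest chromatic semitone. The function names are hypothetical, and folding deviations into a ±600-cent window is one possible reading of "allowing octave jumps"; the actual scripts may handle octaves differently.

```python
import math

def cents_between(f_sung, f_target):
    """Signed deviation of f_sung from f_target in cents (100 cents = 1 semitone)."""
    return 1200 * math.log2(f_sung / f_target)

def fold_octaves(cents):
    """Shift by whole octaves (1200 cents) into the range [-600, 600)."""
    return (cents + 600) % 1200 - 600

def nearest_semitone(f0, a4=440.0):
    """Return (MIDI note number, residual deviation in cents) for an F0 in Hz."""
    midi_float = 69 + 12 * math.log2(f0 / a4)
    midi = round(midi_float)
    return midi, 100 * (midi_float - midi)

# A participant sings 225 Hz when the target is A4 (440 Hz): after folding,
# the deviation equals that from A3 (220 Hz), one octave below the target.
deviation = fold_octaves(cents_between(225.0, 440.0))
midi, residual = nearest_semitone(445.0)
print(round(deviation, 1), midi, round(residual, 1))
```

A pitch matching score in the spirit of the text would then be the mean of the absolute folded deviations across the eight notes; whether absolute or signed deviations were averaged is not stated, so that choice is left open here.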

Using custom-written MATLAB scripts (The MathWorks Inc., 2022), participants' sung notes were categorized into four categories based on the norms from Fogel et al. (2015): 1. no response; 2. a note that has not been reported in the norms; 3. a less frequent note from the norms; 4. the most frequent note from the norms. Participants' sentence cloze production was transcribed and categorized offline by author CZ, a Mandarin speaker, into four categories: 1. no response; 2. a grammatically/semantically incorrect word/phrase that has not been reported in the norms; 3. a less frequent word/phrase from the norms, or a grammatically and semantically correct word/phrase not from the norms; 4. the most frequent word/phrase from the norms. An independent Mandarin-speaking research assistant also coded 33 out of a total of 63 datasets, with high inter-rater reliability (κ = 0.965, p < 0.001). Disagreements were resolved through discussions between the two coders. No co-coding was done for the melodic cloze categories since categorization was done automatically by MATLAB scripts (The MathWorks Inc., 2022). In addition, reaction time (RT) during the cloze production tasks was measured as the time between the offset of a stem and the onset of a vocalization using Praat (Boersma & Weenink, 2001).
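The four-way categorization rule for sung notes can be illustrated with a short sketch. This is not the authors' script: the `categorize` function and the norm counts are hypothetical. It also covers only the melodic rule, because category 3 in the sentence task additionally admits correct words absent from the norms, which requires human judgment.

```python
def categorize(response, norm_counts):
    """Map one response to categories 1-4 given {response: count} norms.

    1 = no response, 2 = not in the norms,
    3 = in the norms but not the most frequent, 4 = the most frequent.
    Assumption: ties for most frequent are broken by dictionary order.
    """
    if response is None:
        return 1
    if response not in norm_counts:
        return 2
    most_frequent = max(norm_counts, key=norm_counts.get)
    return 4 if response == most_frequent else 3

# Hypothetical norms for one melodic stem (note name -> number of singers).
norms = {"C4": 20, "E4": 6, "G4": 4}
categories = [categorize(r, norms) for r in [None, "F#4", "E4", "C4"]]
print(categories)
```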

For statistical analyses, t tests were used to compare group performance on pitch matching ability. Counts (in percentage) of the four response categories in the music and language tasks were summed and tabulated for each group, and chi-squared tests were used to evaluate if there were any group differences. The four response categories were then simplified into two categories and converted to binomial data as 1 (correct: the most frequent responses in the norms) or 0 (incorrect: no or all other responses).

Participants' binomial production responses (1 or 0) and their corresponding RT for producing the most frequent responses, as well as the perceptual rating data, were then analyzed using generalized linear mixed-effects models (i.e., logistic regression for production responses) or linear mixed-effects models (log-transformed RT data and rating) in R (version R-3.6.0; R: A Language and Environment for Statistical Computing, 2019), with the packages lme4 (Bates et al., 2015), car (Fox & Weisberg, 2018), and lmerTest (Kuznetsova et al., 2017). For each measure, we modeled a “simple model” across both music and language tasks, which consisted only of group (autistic versus non-autistic), task (music versus language), constraint (high versus low), and all interactions to examine whether there were any group differences in the measure when other background measures were not considered. Then, for the same measure, we modeled a “full model” to account for background measures and stimulus properties. Each full model consisted of the following predictors: group (autistic versus non-autistic), task (music versus language), constraint (high versus low), and all possible interactions between the three, as well as age (a continuous predictor), years of musical training (a continuous predictor), non-verbal IQ (a continuous predictor), receptive vocabulary (a continuous predictor), digit span (a continuous predictor), pitch matching deviation (a continuous predictor), and stimulus duration (a continuous predictor). To examine the relationship between production and perception, perceptual ratings of the items were added as a predictor for the production categories, and vice versa. In all the models, categorical predictors were effect-coded and continuous predictors were mean-centered. Mean centering for stimulus duration was done by task (i.e., separately for music and language) because stimulus durations were longer for the musical stimuli than for the linguistic stimuli (see Table 2). 
The variance inflation factor (VIF) was calculated to check multi-collinearity among the independent variables. Given that the VIF values were all smaller than 5, multi-collinearity among these independent variables was low (O'Brien, 2007). In each model, the subject and item random intercepts were included as random effects. We attempted to fit the maximal random-effects structure (Barr et al., 2013), but due to convergence issues in some of the models, by-item and/or by-subject slopes were removed.
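The two preprocessing steps mentioned above, effect coding of categorical predictors and mean centering of stimulus duration within each task, can be sketched as below. The analyses themselves were run in R; this Python sketch uses hypothetical item data and helper names, and the ±1 coding scheme is an assumption because the text does not state which contrast values were used.

```python
from statistics import mean

def effect_code(level, reference_level):
    """Effect-code a two-level factor as +1 / -1 (assumed scheme)."""
    return 1 if level == reference_level else -1

def center_within(rows, value_key, group_key):
    """Subtract each group's own mean from its values (centering by group)."""
    groups = {}
    for row in rows:
        groups.setdefault(row[group_key], []).append(row[value_key])
    group_means = {g: mean(values) for g, values in groups.items()}
    return [row[value_key] - group_means[row[group_key]] for row in rows]

# Hypothetical items: musical stems are longer than sentence stems.
items = [
    {"task": "music", "duration": 4.0},
    {"task": "music", "duration": 6.0},
    {"task": "language", "duration": 2.0},
    {"task": "language", "duration": 2.5},
]
centered = center_within(items, "duration", "task")
task_codes = [effect_code(item["task"], "music") for item in items]
print(centered, task_codes)
```

Centering within task keeps the duration predictor from simply re-encoding the music versus language contrast, since each task's centered durations average to zero.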

Statistical significance of the fixed effects was tested using the Anova() function from the car package (Type III Analysis of Variance with Wald chi-square tests). Interaction effects were followed up with post-hoc analyses using the emmeans package in R, with p values adjusted using the Holm method (Lenth et al., 2020). Effect sizes for each predictor in the binomial models (production) were estimated as odds ratios, whereas those in the linear models (log-transformed RT and rating) were estimated as R2 with the r2beta() function from the r2glmm package (Jaeger et al., 2017). In the interest of space, only statistically significant effects/interactions are reported in the Results section below, with the entire model outputs displayed in Supplementary Tables S1.1–S1.6.
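For readers less familiar with odds ratios as an effect size in logistic models, the sketch below shows the standard relationship between a coefficient and its odds ratio, OR = exp(β), and how an odds ratio shifts a baseline probability. The baseline probability of 0.30 is hypothetical, the function names are illustrative, and the value 1.51 is the Constraint odds ratio reported in the Results; how one unit of an effect-coded predictor maps onto a difference between conditions depends on the coding scheme, which is not specified here.

```python
import math

def odds_ratio(beta):
    """Odds ratio for a one-unit change in a predictor with logit coefficient beta."""
    return math.exp(beta)

def shift_probability(p, beta):
    """Probability obtained by adding beta to the log-odds of baseline probability p."""
    odds = p / (1 - p) * math.exp(beta)
    return odds / (1 + odds)

beta = math.log(1.51)               # coefficient implied by OR = 1.51
p_new = shift_probability(0.30, beta)  # hypothetical baseline of 0.30
print(round(odds_ratio(beta), 2), round(p_new, 3))
```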

Results

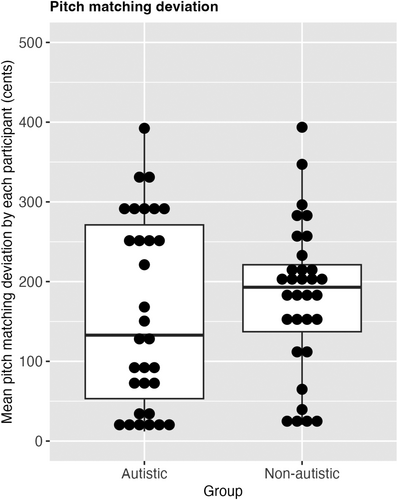

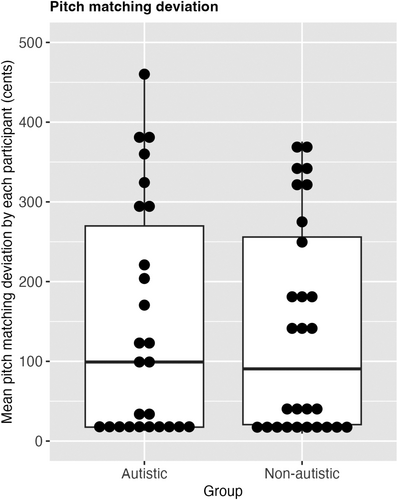

Pitch matching

Figure 2 shows the boxplots of pitch matching deviations by each participant in each group. There was no significant difference in pitch matching deviation between the two groups (t(61) = −0.71, p = 0.482; autistic mean (SD) = 161.69 (116.29); non-autistic mean (SD) = 180.65 (91.94)).

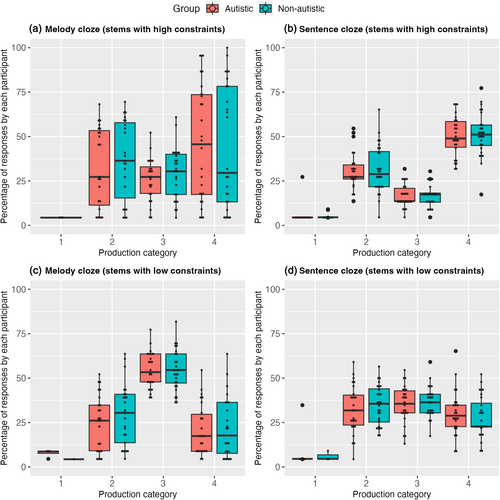

Melodic and sentence cloze production tasks

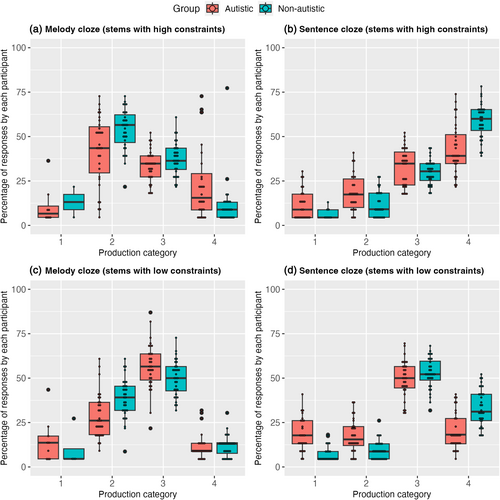

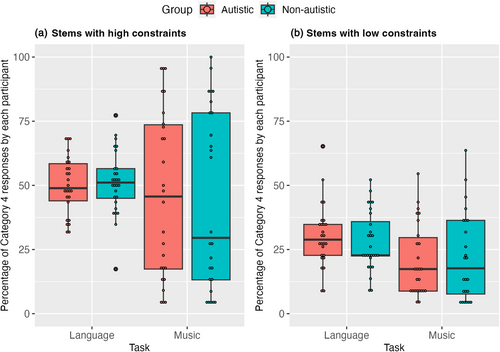

Figure 3 shows boxplots of the percentages of responses in the four categories for participants in each group, separated by task (music vs. language) and constraint (high vs. low), and Table 3 displays the response percentages for the four categories together with chi-squared tests comparing the distributions between groups. The two groups differed significantly in their response categories only in the language task, and not in the music task, regardless of whether the items had high or low constraint.

| Task | Constraint | Group | Category 1 | Category 2 | Category 3 | Category 4 | Chi-squared test |
|---|---|---|---|---|---|---|---|
| Language | High | Autistic | 9 | 15 | 33 | 43 | χ2(3) = 8.10, p = 0.044 |
| Language | High | Non-autistic | 2 | 10 | 30 | 59 | |
| Language | Low | Autistic | 16 | 16 | 50 | 19 | χ2(3) = 11.51, p = 0.009 |
| Language | Low | Non-autistic | 5 | 9 | 52 | 33 | |
| Music | High | Autistic | 3 | 42 | 34 | 21 | χ2(3) = 6.18, p = 0.103 |
| Music | High | Non-autistic | 1 | 52 | 38 | 10 | |
| Music | Low | Autistic | 4 | 29 | 57 | 10 | χ2(3) = 3.73, p = 0.292 |
| Music | Low | Non-autistic | 1 | 39 | 50 | 10 | |

Production of the most frequent responses

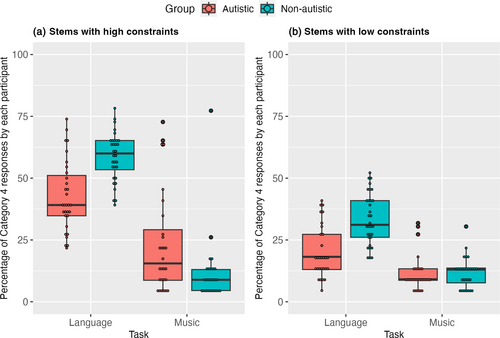

In the simple model on participants' binomial production responses (1: the most frequent responses based on the norms; 0: no or other responses; see Figure 4), significant effects of Constraint (χ2(1) = 24.21, p < 0.001, Odds Ratio (OR) = 1.51) and of Task (χ2(1) = 79.47, p < 0.001, OR = 2.33) were observed, as well as all the two-way interactions between Group, Constraint, and Task (Group × Task: χ2(1) = 29.00, p < 0.001, OR = 0.71; Group × Constraint: χ2(1) = 9.58, p = 0.002, OR = 1.15; Task × Constraint: χ2(1) = 10.34, p = 0.001, OR = 1.29). Importantly, all these effects were qualified by a significant three-way interaction of Group × Constraint × Task (χ2(1) = 11.43, p < 0.001, OR = 0.88). Pairwise comparisons revealed that production of the most frequent responses according to the norms was less common among autistic participants than non-autistic participants in the Language task for both high and low constraints (High: Autistic M (SD) = 42.55% (14.20%) vs. Non-autistic M (SD) = 58.70% (9.81%), z = 5.42, p < 0.001; Low: Autistic M (SD) = 20.39% (10.58%) vs. Non-autistic M (SD) = 33.55% (10.38%), z = 5.42, p < 0.001). Conversely, production of the most frequent responses was more common among autistic participants for the music task under high constraint (Autistic M (SD) = 23.54% (20.93%) vs. Non-autistic M (SD) = 11.99% (14.37%), z = 3.41, p < 0.001), but there was no group difference under low constraint (Autistic M (SD) = 11.59% (7.39%) vs. Non-autistic M (SD) = 11.72% (6.15%), z = 0.28, p = 0.778).

In the full model, that is, with the addition of predictors capturing participants' background measures and stimulus properties, there were significant effects of age (χ2(1) = 19.91, p < 0.001, OR = 1.10), receptive vocabulary (χ2(1) = 13.85, p < 0.001, OR = 1.01), and pitch matching deviation (χ2(1) = 5.99, p = 0.014, OR = 1.00), suggesting that older participants (B = 0.10, SE = 0.02), participants with higher receptive vocabulary (B = 0.01, SE = 0.00), and participants who were better able to pitch match (B = −0.001, SE = 0.001) were more likely to produce the most frequent responses. There was also a significant effect of perceptual rating (χ2(1) = 141.69, p < 0.001, OR = 1.35), suggesting that participants' perception and production performance was correlated (B = 0.30, SE = 0.02). Crucially, even after those factors were accounted for, the findings were similar to those of the simple model: there was a significant three-way interaction of Group × Task × Constraint (χ2(1) = 11.13, p < 0.001, OR = 0.88). As in the simple model, group differences were found under both high and low constraints in the language task, but only under high constraint in the music task (Language High: Autistic M (SD) = 42.55% (14.20%) vs. Non-autistic M (SD) = 58.70% (9.81%), z = 4.87, p < 0.001; Language Low: Autistic M (SD) = 20.39% (10.58%) vs. Non-autistic M (SD) = 33.55% (10.38%), z = 4.36, p < 0.001; Music High: Autistic M (SD) = 23.54% (20.93%) vs. Non-autistic M (SD) = 11.99% (14.37%), z = 4.41, p < 0.001; Music Low: Autistic M (SD) = 11.59% (7.39%) vs. Non-autistic M (SD) = 11.72% (6.15%), z = 0.70, p = 0.485).

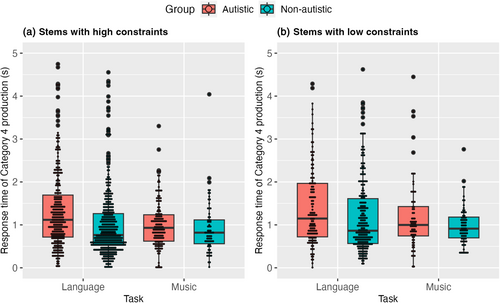

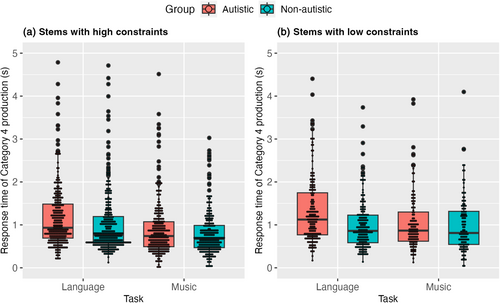

Reaction time

Regarding reaction times (see Figure 5), the simple model revealed a significant effect of Constraint (χ2(1) = 6.78, p = 0.009, R2 = 0.011), with reaction times being faster for high constraint items than for low constraint items (High M (SD) = 1.11 (0.80) vs. Low M (SD) = 1.21 (0.83), t(209) = 2.60, p = 0.010). There was also a significant effect of Task (χ2(1) = 5.53, p = 0.019, R2 = 0.018), which was qualified by a Group × Task interaction (χ2(1) = 5.39, p = 0.020, R2 = 0.014). Pairwise comparisons revealed that group differences were only evident in the language task, in which autistic participants had longer reaction times than non-autistic participants (Autistic M (SD) = 1.37 (0.92) vs. Non-autistic M (SD) = 1.07 (0.81), t(65.9) = 3.26, p = 0.002), whereas no group differences were found in the music task (Autistic M (SD) = 1.07 (0.65) vs. Non-autistic M (SD) = 0.95 (0.52), t(57.5) = 0.63, p = 0.530).

In the full model, the findings were the same as in the simple model: there was a significant effect of Constraint (χ2(1) = 6.80, p = 0.009, R2 = 0.010), with reaction times being faster for high constraint items than for low constraint items (High M (SD) = 1.11 (0.80) vs. Low M (SD) = 1.21 (0.83), t(212) = 2.60, p = 0.010), and a significant effect of Task (χ2(1) = 5.49, p = 0.019, R2 = 0.017), which was qualified by a Group × Task interaction (χ2(1) = 4.37, p = 0.037, R2 = 0.011). Pairwise comparisons revealed that group differences were only evident in the language task, in which autistic participants had longer reaction times than non-autistic participants (Autistic M (SD) = 1.37 (0.92) vs. Non-autistic M (SD) = 1.07 (0.81), t(58.2) = 2.40, p = 0.002), whereas no group differences were found in the music task (Autistic M (SD) = 1.07 (0.65) vs. Non-autistic M (SD) = 0.95 (0.52), t(58.6) = 0.61, p = 0.542). No other predictors were significant in the model.

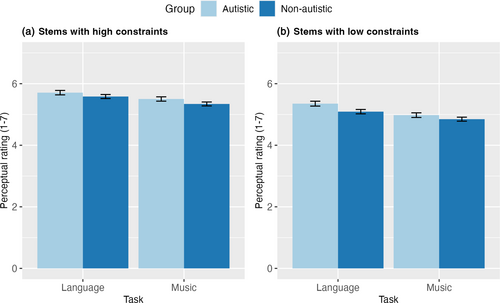

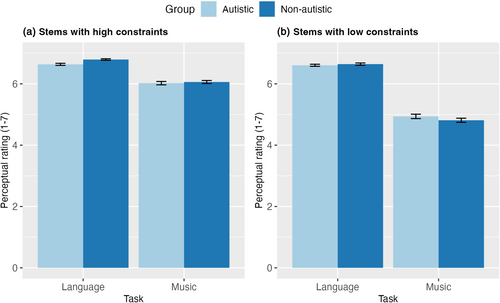

Perceptual rating tasks of the completed melodies and sentences

Figure 6 shows participants' ratings of the completed melodies and sentences with the most frequent responses based on the norms separated by items with high versus low constraints. The simple model revealed significant effects of Task (χ2(1) = 5.56, p = 0.018, R2 = 0.007), with music stimuli receiving lower ratings than language stimuli (Music M (SD) = 5.16 (1.81) vs. Language M (SD) = 5.43 (1.92), z = 2.36, p = 0.018), and of Constraint (χ2(1) = 32.55, p < 0.001, R2 = 0.018), as stimuli with high constraints received higher ratings than those with low constraints (High constraint M (SD) = 5.53 (1.79) vs. Low constraint M (SD) = 5.07 (1.92), z = 5.71, p < 0.001). No other effects or interactions were significant.

In the full model, there was a significant effect of Constraint (χ2(1) = 20.95, p < 0.001, R2 = 0.010), which was qualified by a Task × Constraint interaction (χ2(1) = 4.98, p = 0.026, R2 = 0.001). Subsequent pairwise comparisons revealed that the difference between High constraint vs. Low constraint was greater in the Music task than in the Language task (Music High: M (SD) = 5.42 (1.76) vs. Music Low: M (SD) = 4.91 (1.83), z = 5.03, p < 0.001; Language High: M (SD) = 5.65 (1.82) vs. Language Low: M (SD) = 5.22 (1.99), z = 2.46, p = 0.014). Additionally, there was a significant effect of production category (χ2(1) = 207.18, p < 0.001, R2 = 0.037), suggesting that participants' perception and production performance was correlated (Frequent Response: M (SD) = 5.96 (1.62) vs. Infrequent Response: M (SD) = 5.07 (1.90), z = 14.39, p < 0.001). No other predictors were significant in the model.

Discussion

Results from Experiment 1 on Mandarin speakers suggest that across both autistic and non-autistic groups, participants' predictive production of the final notes/words in a context and their perceptual ratings of the most frequent notes/words based on the norms mirrored each other across both domains. In the cloze production tasks, while the two groups showed similar distributions of responses classified into four categories for the music task, the non-autistic group produced more responses which were the most frequent in the norms than did the autistic group for the language task. When the production responses were examined based on two categories (the most frequent responses based on the norms vs. no/other responses), the autistic group produced more notes that were the most frequent in the norms for the music task compared to the non-autistic group for stems with high constraints only, but not for stems with low constraints. Regardless of the constraint condition of the stems, the non-autistic group produced more words that were the most frequent in the norms for the language task compared to the autistic group. While the two groups showed similar response times in the music task, the autistic group responded to sentences more slowly than the non-autistic group in the language task. In terms of expectedness ratings of the melodies and sentences that were completed with the most frequent responses in the norms, both autistic and non-autistic groups provided higher expectedness ratings for sentences than melodies, and for sentences and melodies with high constraints than those with low constraints. Finally, age, receptive vocabulary, and pitch matching ability were significant factors predicting the production of most frequent responses across both tasks. Overall, these findings suggest that autistic Mandarin speakers showed intact predictive processing of music but atypical prediction of language. 
However, given that the two groups differed somewhat in their background measures (see Table 1), which might partly explain the group differences observed, we repeated the experiment on a sample of English-speaking participants who were more closely matched in Experiment 2.

EXPERIMENT 2

Introduction

While the findings of Experiment 1 suggest a dissociation in music and language predictive processing abilities among autistic individuals, there are several caveats that need to be considered. Firstly, the findings might be influenced by potential confounding variables in the sample, such as musical training (no significant between-group difference, but numerically more years of training in the autistic group) and receptive vocabulary (a significant between-group difference in favor of the non-autistic group). When comparing the simple model with the full model on production response, although the significant main effects and interactions remained in the full model after accounting for participant and stimulus characteristics, we still cannot rule out the possibility that the imbalanced musical training experience and receptive vocabulary ability across the two groups might have contributed to the interaction effects of group, task, and constraint in the models. Previous studies have shown a link between receptive language skills and predictive language processing in young autistic children (Prescott et al., 2022). It has also been proposed that atypical predictive processing may cause language difficulties in autism (Ellis Weismer & Saffran, 2022). Therefore, it needs to be acknowledged that the current findings may not generalize to the entire autism spectrum, which encompasses a range of cognitive abilities across different autistic individuals (Lenroot & Yeung, 2013; Tager-Flusberg & Kasari, 2013).

Secondly, it is worth noting that the norms in the melodic cloze task were collected from musically-trained Western adults (9 ± 4.8 years) (Fogel et al., 2015), whereas we focused on Chinese children with little musical training in Experiment 1 (autistic: 0.81 ± 1.23 years; non-autistic: 0.47 ± 1.08 years). As a result, our participants showed reduced pitch matching abilities and produced fewer of the most frequent notes across the different melodic stems as compared with the norms established by the adult musicians in Fogel et al. (2015). While Mandarin-speaking children are exposed to Western music due to globalization, they nonetheless likely differ from Western adult musicians in their degree of implicit knowledge of Western tonal music (Yang & Welch, 2023). Thus, the current findings may not generalize to the entire autism spectrum, which encompasses a diversity of musical abilities (Ong et al., 2023; Sota et al., 2018).

Thirdly, in the current Mandarin sample, participants were aged between 7 and 16, which might have introduced potential confounding factors related to developmental differences within this range (Eccles, 1999). The wide age span could affect the participants' cognitive abilities, language skills, and musical training (Nippold, 2000; Paus, 2005; Tierney et al., 2015), which in turn could influence their predictive processing abilities. Thus, it is important to acknowledge that developmental differences within the age range could influence the current findings.

To address these limitations, we conducted the same experiment on a well-matched sample of English speakers in Experiment 2 to further elucidate the relationship between predictive processing, music, and language in autism.

Method

Participants

A total of 79 British English-speaking individuals, aged between 12 and 57, participated in Experiment 2. Participants in the autistic group (n = 28) had received a clinical diagnosis of autism from a licensed clinician, whereas non-autistic participants (n = 51) reported no psychiatric or neurological disorders. All participants completed the Raven's Standard Progressive Matrices (Raven et al., 1998) test as a measure of nonverbal IQ, the Receptive One-Word Picture Vocabulary Test (ROWPVT-4) (Martin & Brownell, 2011) as a measure of receptive vocabulary, and the digit span forward task as a measure of short-term memory (Piper et al., 2016). Participants' musical training background was collected through a questionnaire, where their years of musical training were summed across all instruments including voice (Pfordresher & Halpern, 2013). When comparing all participants between the two groups, no significant differences were observed in age, musical training experience, or cognitive abilities (see Table 4). However, there were more female participants in the non-autistic group (p = 0.055), and autistic participants had shorter digit spans than non-autistic participants (p = 0.089). To further match the two groups, a subset of 28 non-autistic participants was chosen to individually match the 28 autistic participants on age, sex, digit span, musical training, nonverbal IQ, and receptive vocabulary. As can be seen from Table 4, the two groups of participants (n = 28 each) were closely matched on all background measures (all ps > 0.24).

| Measures | Group 1: Autistic participants | Group 2: All non-autistic participants | Group 3: A subset of non-autistic participants | t or chi-squared test between groups 1–2 | t or chi-squared test between groups 1–3 |
|---|---|---|---|---|---|
| n | 28 | 51 | 28 | NA | NA |
| Age | 28.79 (15.27) | 24.57 (9.43) | 27.04 (11.81) | t(77) = 1.50, p = 0.139 | t(54) = 0.47, p = 0.639 |
| Age range | 12–57 | 13–55 | 13–55 | NA | NA |
| Sex | F = 14, M = 12, NB = 2 | F = 36, M = 15 | F = 14, M = 14 | χ2(2) = 5.81, p = 0.055 | χ2(2) = 2.15, p = 0.341 |
| Musical training | 4.38 (5.64) | 5.64 (6.01) | 5.29 (6.79) | t(76) = −0.90, p = 0.371 | t(54) = −0.54, p = 0.594 |
| NVIQ | 51.79 (29.32) | 43.04 (24.54) | 48.93 (27.01) | t(77) = 1.39, p = 0.167 | t(54) = 0.37, p = 0.711 |
| Receptive vocabulary | 108.93 (16.07) | 107.86 (13.27) | 109.00 (13.49) | t(77) = 0.31, p = 0.756 | t(54) = −0.02, p = 0.986 |
| Digit span | 6.63 (1.52) | 7.22 (1.35) | 7.11 (1.45) | t(76) = −1.72, p = 0.089 | t(53) = −1.17, p = 0.247 |

- Note: F = female, M = male, NB = non-binary. Musical training is in years. NVIQ: percentiles of Raven's Standard Progressive Matrices; Receptive vocabulary: standard score of the Receptive One-Word Picture Vocabulary Test, 4th edition (ROWPVT-4). Data on musical training and digit span were missing for one participant.

Cloze production and pitch matching tasks

Stimuli

Stimuli in the melodic cloze task were the same as those in Experiment 1. Ninety sentence stems were chosen to match the 90 melodic stems on cloze probability (within ±3% difference), based on the norms established in previous studies in English (Arcuri et al., 2001; Block & Baldwin, 2010), and on number of notes/syllables (within ±2 difference). We replaced some names/pronouns in the original sentences with names containing a different number of syllables in order to match the number of syllables/notes across the melodies and sentences. For example, in the stem “For a runner Ted is rather,” “Ted” was replaced with “Connor” to make the stem nine syllables long rather than eight. However, we did not change some of the names/pronouns, because (1) some sentences sound more natural with pronouns than with names, (2) we tried to match the number of syllables between the sentences rather than across the melodies and sentences, and (3) we tried to use the same word categories (names or pronouns) for the high-constraint versus low-constraint sentence pairs. The final set of sentence stems and the whole sentences were then recorded by a female native speaker of British English using Praat (Boersma & Weenink, 2001), with a 44.1 kHz sampling rate and 16-bit amplitude resolution.
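
The two matching criteria above can be expressed as a simple check. The Python sketch below is illustrative only: the dictionary keys, the example values, and the reading of "±3%" as percentage points of cloze probability are assumptions, not details taken from the stimulus-selection procedure.

```python
def is_matched(melody, sentence, max_cloze_diff=3.0, max_length_diff=2):
    """Check whether a sentence stem matches a melodic stem on both criteria.

    Cloze probabilities are assumed to be expressed in percent, and the
    tolerance is assumed to be in percentage points.
    """
    cloze_ok = abs(melody["cloze_pct"] - sentence["cloze_pct"]) <= max_cloze_diff
    length_ok = abs(melody["n_notes"] - sentence["n_syllables"]) <= max_length_diff
    return cloze_ok and length_ok


# Hypothetical stems: these values are not from the actual stimulus set.
melodic_stem = {"cloze_pct": 80.0, "n_notes": 9}
close_sentence = {"cloze_pct": 78.0, "n_syllables": 9}
distant_sentence = {"cloze_pct": 70.0, "n_syllables": 9}

print(is_matched(melodic_stem, close_sentence))
print(is_matched(melodic_stem, distant_sentence))
```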

Using the same randomization method as in Experiment 1, the 90 matched melodic stems and sentence stems were grouped into 8 lists of 45 items and presented in counterbalanced order across participants.

Procedure

The experiment was carried out in a soundproof room at the University of Reading, following the same procedure for the cloze production and pitch matching tasks as in Experiment 1.

Perceptual rating tasks

Stimuli

In the rating tasks, the stimuli included the melodies and sentences completed/produced in full, including the last notes/words that had the highest cloze probabilities based on the norms. The norms for the notes were from Fogel et al. (2015), and the norms for the words were from previous studies (Arcuri et al., 2001; Block & Baldwin, 2010) as described above.

Procedure

The same procedure was used as in the rating tasks in Experiment 1.

Data analysis

Analysis of the pitch matching and melodic cloze production data followed the same procedure as in Experiment 1. The sentence cloze responses were transcribed independently by two research assistants and then cross-checked. Using custom-written MATLAB and R scripts, participants' sung notes and spoken words were classified into four categories based on the norms from Fogel et al. (2015), Arcuri et al. (2001), and Block and Baldwin (2010): (1) no response; (2) a note/word that has not been reported in the norms; (3) a less frequent note/word from the norms; (4) the most frequent note/word from the norms. Statistical analysis was performed using the same approach as in Experiment 1. In the interest of space, only statistically significant effects/interactions are reported in the Results section below, with the entire model outputs displayed in Supplementary Tables S2.1–S2.6.
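
The four-way classification and the binary coding used in the production models can be sketched as follows. This is an illustrative Python sketch rather than the custom MATLAB and R scripts used in the study. The function names, the example norm counts, and the assumption that responses and norm entries share the same normalized labels are all hypothetical.

```python
def categorize_response(response, norm_counts):
    """Assign a cloze response to one of the four categories.

    `norm_counts` maps each note/word reported in the norms to how often it
    was produced. Responses are assumed to be normalized to the same labels
    as the norm entries before this function is called.
    """
    if response is None or response == "":
        return 1  # no response
    if response not in norm_counts:
        return 2  # note/word not reported in the norms
    if norm_counts[response] == max(norm_counts.values()):
        return 4  # the most frequent note/word in the norms
    return 3  # a less frequent note/word from the norms


def to_binary(category):
    """Binary outcome for the production models: 1 = most frequent, 0 = otherwise."""
    return 1 if category == 4 else 0


# Hypothetical norms for one sentence stem (the counts are invented).
norms = {"slow": 30, "fast": 5, "tall": 2}
for response in ["slow", "fast", "heavy", ""]:
    category = categorize_response(response, norms)
    print(repr(response), category, to_binary(category))
```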

Results

Pitch matching

Figure 7 shows the boxplots of pitch matching deviations by each participant in each group. There was no significant difference in pitch matching deviation between the two groups (t(52) = 0.18, p = 0.860; autistic mean (SD) = 144.82 (144.09); non-autistic mean (SD) = 138.08 (131.08)).

Melodic and sentence cloze production tasks

Figure 8 shows boxplots of the percentages of responses in the four categories for participants in each group, separated by task (music vs. language) and constraint (high vs. low), and Table 5 displays the response percentages for the four categories together with chi-squared tests comparing the distributions between groups. The two groups did not differ significantly in their response categories in any of the conditions.

| Task | Constraint | Group | Category 1 | Category 2 | Category 3 | Category 4 | Chi-squared test |
|---|---|---|---|---|---|---|---|
| Language | High | Autistic | 3 | 30 | 17 | 50 | χ2(3) = 0.26, p = 0.968 |
| Language | High | Non-autistic | 2 | 31 | 16 | 51 | |
| Language | Low | Autistic | 2 | 33 | 35 | 30 | χ2(3) = 0.48, p = 0.924 |
| Language | Low | Non-autistic | 1 | 35 | 36 | 28 | |
| Music | High | Autistic | 1 | 29 | 24 | 46 | χ2(3) = 1.61, p = 0.658 |
| Music | High | Non-autistic | 0 | 30 | 28 | 41 | |
| Music | Low | Autistic | 1 | 24 | 54 | 21 | χ2(3) = 1.19, p = 0.756 |
| Music | Low | Non-autistic | 0 | 26 | 55 | 19 | |

Production of the most frequent responses

In the simple model on participants' binomial production responses (1: the most frequent responses based on the norms; 0: no or other responses; see Figure 9), there were significant effects of Task (χ2(1) = 10.15, p = 0.001, OR = 1.50) and Constraint (χ2(1) = 54.47, p < 0.001, OR = 2.04), both of which were qualified by a Task × Constraint interaction (χ2(1) = 22.60, p < 0.001, OR = 0.84). Pairwise comparisons revealed that the difference between High constraint vs. Low constraint was greater in the Music task than in the Language task (Music High: M (SD) = 45.40% (33.43%) vs. Music Low: M (SD) = 21.30% (15.57%), z = 8.33, p < 0.001; Language High: M (SD) = 50.66% (11.69%) vs. Language Low: M (SD) = 29.09% (11.78%), z = 5.33, p < 0.001). There were no significant effects or interactions involving Group.

In the full model, there were significant effects of age (χ2(1) = 6.26, p = 0.012, OR = 1.02) and pitch matching deviation (χ2(1) = 8.57, p = 0.003, OR = 1.00), suggesting that older participants (B = 0.02, SE = 0.01) and participants who were better able to pitch match (B = −0.002, SE = 0.001) were more likely to produce the most frequent responses. There was also a significant effect of perceptual rating (χ2(1) = 74.73, p < 0.001, OR = 1.36), suggesting that participants' perception and production performance was correlated (B = 0.30, SE = 0.04). Unlike in the simple model, among the experimental factors only Constraint had a significant effect (χ2(1) = 38.68, p < 0.001, OR = 1.69), with the most frequent responses being produced more often for the High constraint items than for the Low constraint items (High: M (SD) = 48.08% (24.87%) vs. Low: M (SD) = 25.34% (14.21%), z = 6.22, p < 0.001). As in the simple model, there were no significant effects or interactions involving Group.

Reaction time

The simple model for reaction times (see Figure 10) revealed significant effects of Task (χ2(1) = 7.60, p = 0.006, R2 = 0.033), Constraint (χ2(1) = 8.31, p = 0.004, R2 = 0.022), and a significant Task × Constraint interaction (χ2(1) = 7.78, p = 0.005, R2 = 0.006). Pairwise comparisons showed that whereas there was no significant difference in reaction times between High constraint items and Low constraint items in the Language task (High: M (SD) = 1.09 (0.71) vs. Low: M (SD) = 1.17 (0.70), t(123) = 1.30, p = 0.196), reaction times were faster in the High constraint items than in the Low constraint items in the Music task (High: M (SD) = 0.85 (0.59) vs. Low: M (SD) = 1.03 (0.66), t(151) = 3.77, p = 0.002). There were no significant effects or interactions involving Group.

In the full model, similar to the simple model, there were significant effects of Task (χ2(1) = 8.59, p = 0.003, R2 = 0.030), Constraint (χ2(1) = 7.64, p = 0.006, R2 = 0.018), and a significant Task × Constraint interaction (χ2(1) = 4.78, p = 0.029, R2 = 0.004), such that differences in constraint were only observed in the Music task but not in the Language task (Music High: M (SD) = 0.85 (0.59) vs. Music Low: M (SD) = 1.03 (0.66), t(194) = 3.33, p = 0.001; Language High: M (SD) = 1.09 (0.71) vs. Language Low: M (SD) = 1.17 (0.70), t(125) = 1.38, p = 0.169). There was also a significant effect of sound duration (χ2(1) = 41.89, p < 0.001, R2 = 0.043), such that participants tended to be faster for longer stimuli (B = −0.09, SE = 0.01). Like the simple model, there were no significant effects or interactions involving Group.

Perceptual rating tasks of the completed melodies and sentences

Figure 11 shows participants' ratings of the completed melodies and sentences with the most frequent responses based on the norms separated by items with high versus low constraints. The simple model revealed significant effects of Task (χ2(1) = 126.46, p < 0.001, R2 = 0.232), Constraint (χ2(1) = 64.54, p < 0.001, R2 = 0.074), and a significant Task × Constraint interaction (χ2(1) = 339.85, p < 0.001, R2 = 0.056). Pairwise comparisons showed that whereas there was no significant difference in ratings between High constraint items and Low constraint items in the Language task (High: M (SD) = 6.71 (0.76) vs. Low: M (SD) = 6.62 (0.89), z = 1.07, p = 0.286), ratings were higher in the High constraint items than in the Low constraint items in the Music task (High: M (SD) = 6.04 (1.31) vs. Low: M (SD) = 4.88 (1.74), z = 13.98, p < 0.001). There were no significant effects or interactions involving Group.

In the full model, similar to the simple model, there were significant effects of Task (χ2(1) = 120.25, p < 0.001, R2 = 0.233), Constraint (χ2(1) = 54.47, p < 0.001, R2 = 0.059), and a significant Task × Constraint interaction (χ2(1) = 326.20, p < 0.001, R2 = 0.057), such that differences in ratings between High constraint items and Low constraint items were only significant in the Music task but not in the Language task (Music High: M (SD) = 6.04 (1.31) vs. Music Low: M (SD) = 4.88 (1.74), z = 13.42, p < 0.001; Language High: M (SD) = 6.71 (0.76) vs. Language Low: M (SD) = 6.62 (0.89), z = 0.33, p = 0.739). There were also significant effects of pitch matching deviation (χ2(1) = 5.00, p = 0.025, R2 = 0.009) and sound duration (χ2(1) = 8.88, p = 0.003, R2 = 0.003), such that ratings were higher among those who could better pitch match (B = −0.0008, SE = 0.0004) and, given the negative coefficient, lower for longer stimuli (B = −0.05, SE = 0.02). Additionally, there was a significant effect of production category (χ2(1) = 81.39, p < 0.001, R2 = 0.018), suggesting that participants' perception and production performance was correlated (Frequent Response: M (SD) = 6.49 (1.09) vs. Infrequent Response: M (SD) = 5.82 (1.55), z = 9.02, p < 0.001). Similar to the simple model, there were no significant effects or interactions involving Group.

Discussion

Table 6 shows a summary of the significant effects and interactions on the three measures in the simple and full models from both experiments. Across both experiments, participants' perception and production performance was significantly correlated, with age and pitch matching ability predicting the production of the most frequent responses across music and language tasks. However, the significant effect of receptive vocabulary on production response was only observed in Experiment 1. Furthermore, Experiment 2 revealed no significant effects or interactions involving Group across all measures. Thus, matching musical training experience and receptive vocabulary between the two groups in Experiment 2 eliminated the Group × Task × Constraint interaction on production response and the Group × Task interaction on reaction time as observed in Experiment 1. While pitch matching ability and sound duration were significant predictors of expectedness ratings of the music and language stimuli in Experiment 2, these effects were not observed in Experiment 1. We discuss the implications of the findings from both experiments below.

| Predictor | Exp. 1 Production (Simple) | Exp. 1 Production (Full) | Exp. 1 RT (Simple) | Exp. 1 RT (Full) | Exp. 1 Rating (Simple) | Exp. 1 Rating (Full) | Exp. 2 Production (Simple) | Exp. 2 Production (Full) | Exp. 2 RT (Simple) | Exp. 2 RT (Full) | Exp. 2 Rating (Simple) | Exp. 2 Rating (Full) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Group | | | | | | | | | | | | |
| Task | √ | √ | √ | √ | √ | | √ | | √ | √ | √ | √ |
| Constraint | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ |
| Group × Task | √ | √ | √ | √ | | | | | | | | |
| Group × Constraint | √ | √ | | | | | | | | | | |
| Task × Constraint | √ | √ | | | | √ | √ | | √ | √ | √ | √ |
| Group × Task × Constraint | √ | √ | | | | | | | | | | |
| Age | | √ | | | | | | √ | | | | |
| Musical training | | | | | | | | | | | | |
| Non-verbal IQ | | | | | | | | | | | | |
| Receptive vocabulary | | √ | | | | | | | | | | |
| Digit span | | | | | | | | | | | | |
| Pitch matching deviation | | √ | | | | | | √ | | | | √ |
| Sound duration | | | | | | | | | | √ | | √ |
| Perceptual rating | | √ | | | | | | √ | | | | |
| Production category | | | | | | √ | | | | | | √ |

- Note: Exp. 1 = Experiment 1 (Mandarin speakers); Exp. 2 = Experiment 2 (English speakers); √ = significant effect.

GENERAL DISCUSSION

Examining two samples of autistic and non-autistic individuals with different language, music, and cognitive abilities, we compared predictive processing of language and music in autism using closely matched melodic and sentence cloze tasks as well as perceptual rating tasks. Participants in both experiments were generally sensitive to the different degrees of expectation based on the linguistic or melodic stem, as indexed by the significant effect of Constraint in all the models examined. This suggests that the prediction tasks used in this study do measure predictive processing; otherwise, there would be no difference in performance between high constraint and low constraint stems. Importantly, the results from both experiments suggest that the initial group differences qualified by a Group × Task × Constraint interaction as observed in Experiment 1 were likely driven by variations in musical training experience and receptive vocabulary skills in the Mandarin sample. That is, individual differences in musical and linguistic abilities among the participants in Experiment 1, rather than autism diagnosis, might have mediated the relationship between group (autistic vs. non-autistic) and predictive processing of language and music. In keeping with the extensive evidence indicating the significant roles cognitive abilities play in pitch and melodic processing in autism (Chowdhury et al., 2017; Jamey et al., 2019; Kargas et al., 2015; Ong et al., 2023), our findings suggest the importance of accounting for confounding factors in studies of music and language processing in autism.

Regarding musical prediction, we observed generally similar performance between autistic and non-autistic groups in both production (in number of most frequent notes produced and in reaction time) and perceptual rating tasks, in both experiments. It should be noted that whereas the Chinese autistic participants produced more of the most frequent notes than the Chinese non-autistic participants for the high constraint melodic stems in Experiment 1, the same pattern of results was not seen among the English participants in Experiment 2. At first glance, it might seem like the Chinese autistic participants showed enhanced musical skills (at least with respect to producing the most frequent notes) whereas the English autistic participants showed intact musical skills relative to their respective non-autistic counterparts. However, we caution against such an interpretation due to how the task was scored. Specifically, performance on the music task in both experiments was scored against norms established by Western musicians. That is, in Experiment 1 on Chinese speakers, although the sentence cloze task was re-normed in a Mandarin-speaking sample, we did not re-norm the melodic cloze task, on the grounds that performance on that task should be judged against expert performance. However, one may question whether performance on Western melodies among Chinese participants relative to the performance of Western musicians is itself a cognitively informative measure. Indeed, while Chinese non-musicians have been shown to be familiar with the tonality, syntactic structure, and phrase boundary of Western music (Jiang et al., 2016, 2017; Nan et al., 2009), we cannot rule out the possibility that the Chinese autistic participants in Experiment 1 were more aware of the “rules” of Western tonal music than the Chinese non-autistic participants (or, equivalently, that the non-autistic participants were less aware of them) because of differences in musical training experience. 
Future studies should replicate the melodic cloze task with norms from a Mandarin-speaking sample to see if group differences among Chinese participants persist. What is clear, however, is that the autistic participants in both experiments showed intact musical processing skills at the very least. This is consistent with the literature suggesting intact musical processing in autism (Chen et al., 2022; Janzen & Thaut, 2018; O'Connor, 2012; Ong et al., 2023; Ouimet et al., 2012; Quintin, 2019), including memory and labeling of musical pitch and segmentation of chords (Heaton, 2003), perception of pitch intervals and melodic contours (Heaton, 2005; Jiang et al., 2015), local and global processing of music (Germain et al., 2019; Mottron et al., 2000), musical phrase boundary processing (DePriest et al., 2017), perception of musical melodies (Jamey et al., 2019), processing of temporal sequences and musical structures (Quintin et al., 2013), and recognition of musical emotions (Gebauer et al., 2014; Heaton et al., 1999; Quintin et al., 2011). Note, however, that there are counter-examples in which autistic individuals had lower performance than non-autistic individuals in musical tasks (e.g., Bhatara et al., 2010; Ong et al., 2023; Sota et al., 2018). Some autistic individuals also possess exceptional musical skills such as perfect pitch (Heaton, Pring, et al., 1999; Mottron et al., 2009; Rimland & Fein, 1988; Young & Nettelbeck, 1995).
Thus, although impaired pitch, emotion, melodic (Bhatara et al., 2010; Ong et al., 2023; Sota et al., 2018), beat (Kasten et al., 2023; Morimoto et al., 2018; Vishne et al., 2021), rhythmic (Steinberg et al., 2021), and metrical processing (DePape et al., 2012) has also been observed in autism, our finding of intact musical prediction in autistic Mandarin and English speakers is in line with meta-analyses suggesting intact implicit learning in autism as evidenced in contextual cueing and other statistical learning tasks (Foti et al., 2015; Obeid et al., 2016). Most importantly, our findings are consistent with research suggesting intact enculturation to the pitch structure of Western music (DePape et al., 2012) and intact use of prediction during music listening in autism (Venter et al., 2023).

Several computational models have been proposed to explain how melodic expectations are formed, including the domain-specific model of Gestalt-like principles derived from music theory (Probabilistic Model of Melody Perception; Temperley, 2008, 2014), the domain-general Markov model of statistical learning based on transition probabilities and n-grams (Information Dynamics of Music; IDyOM model; Hansen & Pearce, 2014; Pearce & Wiggins, 2006), and the expectation networks of statistical learning inspired by generalized scale degree associations (Verosky & Morgan, 2021). Using the data from the melodic cloze task (Fogel et al., 2015), Morgan et al. (2019) compared the Temperley and IDyOM models and suggested that both Gestalt principles and statistical learning of n-gram probabilities contribute to melodic expectations, with the latter playing a stronger role than the former. However, neither model can fully explain the variance in the data, especially in relation to how the tonic is expected in authentic cadences (Morgan et al., 2019). Taking into account another type of statistical learning, expectation networks (Verosky, 2019), Verosky and Morgan (2021) compared all three models and concluded that expectation networks can better distinguish authentic cadences from non-cadential melodies and that the three models each explain distinct aspects of melodic expectations. Thus, melodic expectations are driven by a combination of Gestalt principles (Temperley, 2008, 2014), statistical learning of n-gram probabilities (Hansen & Pearce, 2014; Pearce & Wiggins, 2006), expectation networks (Verosky, 2019), as well as other non-local melodic relationships (Krumhansl, 1997; Kuhn & Dienes, 2005; Morgan et al., 2019; Verosky & Morgan, 2021).
Our finding of intact musical prediction in autism thus suggests that, like non-autistic individuals, autistic individuals are able to make predictions of musical events following the principles of melodic expectations, which may be related to their intact implicit/statistical learning skills across a variety of statistical learning tasks (Foti et al., 2015; Obeid et al., 2016).
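To make concrete what statistical learning of transition probabilities means for melodic expectation, the following minimal sketch estimates first-order (bigram) note-to-note probabilities from a small corpus and uses them to rank candidate continuations of a melodic stem. It is an illustration only and is not the IDyOM implementation, which combines variable-order models over multiple musical features. The function names and the toy corpus of scale degrees are hypothetical and are not taken from the stimuli or norms of the present study.

```python
from collections import Counter, defaultdict


def train_bigram(melodies):
    """Count how often each note is followed by each other note."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts


def next_note_probs(counts, context_note):
    """Return P(next note | context_note) as relative frequencies."""
    total = sum(counts[context_note].values())
    if total == 0:
        return {}  # context never observed in the corpus
    return {note: c / total for note, c in counts[context_note].items()}


# Hypothetical toy corpus: melodies coded as scale degrees (1 = tonic).
toy_corpus = [
    [1, 2, 3, 2, 1],
    [3, 4, 5, 4, 3, 2, 1],
    [5, 4, 3, 2, 1],
    [1, 3, 5, 3, 2, 1],
]

counts = train_bigram(toy_corpus)
probs = next_note_probs(counts, 2)

# Rank continuations of a stem ending on scale degree 2.
for note, p in sorted(probs.items(), key=lambda item: -item[1]):
    print(f"P({note} | 2) = {p:.2f}")
```

In this toy corpus, a stem ending on scale degree 2 is followed by the tonic in four of five cases, so the model assigns the tonic the highest expectation. This is analogous to a high constraint stem in a cloze task, in which one continuation dominates the distribution of responses.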

Regarding linguistic prediction, our results suggest a significant group difference in both response pattern and reaction time in the sentence cloze task among Mandarin speakers in Experiment 1, although no group difference was observed in the English-speaking sample in Experiment 2. Thus, it is important to acknowledge that it may not be autism diagnosis per se, but individual differences in linguistic abilities as reflected by receptive vocabulary, that have driven the reduced predictive processing of language among autistic participants in Experiment 1. In previous studies, autistic individuals have also shown difficulties in global sentence processing and semantic integration in context (Booth & Happé, 2010; Frith & Snowling, 1983; Happé, 1997; Henderson et al., 2011; Jolliffe & Baron-Cohen, 1999) and demonstrated delayed N400 latency during semantic processing (DiStefano et al., 2019). Extensive research has also shown reduced reading comprehension in autism, especially in text comprehension at sentence and passage levels (Lucas & Norbury, 2014; McIntyre et al., 2017; Nation et al., 2006; Ricketts et al., 2013). The difficulties in oral language and reading comprehension in autism have been attributed to “weak central coherence” (Happé & Frith, 2006), decreased top-down modulation (Henderson et al., 2011) and monitoring (Koolen et al., 2013), social impairments (Ricketts et al., 2013), limited vocabulary or semantic knowledge (Brown et al., 2013; Lucas & Norbury, 2014), reduced processing speed of world knowledge (Howard et al., 2017), and impaired structural language ability (Eberhardt & Nadig, 2018). Comparing results from Experiments 1 and 2, it is likely that the atypical linguistic prediction of Mandarin-speaking autistic individuals in Experiment 1 was mediated by their reduced linguistic skills, rather than being due to autism per se.

Similar to melodic expectations, linguistic expectations are also based on rule-like principles such as syntactic structure (Gibson, 1998) and statistical learning of word n-gram probabilities (Arnon & Cohen Priva, 2013; Arnon & Snider, 2010; Saffran, 2003). Language processing also involves regularity extraction and integration via statistical learning (Erickson & Thiessen, 2015). Generally speaking, prediction during language comprehension involves probabilistic computation of upcoming events in a context at multiple levels of representation, including perceptual, phonological, syntactic, semantic, and orthographic (Kuperberg & Jaeger, 2016). These predictions are made by drawing on information from the speaker, prior context, world knowledge, as well as extra-linguistic cues such as gestures or other visual stimuli (Hagoort & van Berkum, 2007). Studies and theoretical accounts examining autistic differences in perceptual adaptation posit increased sensory overload at subcortical levels (Font-Alaminos et al., 2020), atypical top-down influence on sensory and higher-level information processing (Gomot & Wicker, 2012), attenuated use of prior higher-level social stimuli (Pellicano & Burr, 2012; Sinha et al., 2014; van Boxtel & Lu, 2013; Van de Cruys et al., 2014), and reduced speed of integrating new information to guide behavior, termed “slow-updating” (Lieder et al., 2019; Vishne et al., 2021). In our sentence production and rating tasks, participants need to process higher-level complex stimuli spoken by a novel talker, which requires vigorous integration of bottom-up and top-down processes (Heald & Nusbaum, 2014; Kleinschmidt & Jaeger, 2015). It has been reported that autistic adults show delayed auditory feedback during speech production (Lin et al., 2015), and they also lack perceptual adaptation to novel talkers (Alispahic et al., 2022).
Other studies have also shown atypical semantic processing (Ahtam et al., 2020; Grisoni et al., 2019; Kamio et al., 2007; O'Rourke & Coderre, 2021) and reduced top-down modulation during online semantic processing in autism (Henderson et al., 2011). Nevertheless, our finding of intact linguistic prediction among autistic English speakers in Experiment 2 suggests that, if equipped with intact linguistic skills, autistic individuals are equally capable of performing predictive processing during language production and comprehension.

While we propose that the differences in findings between experiments may be attributed to how well the groups are matched within each experiment, there are two other possibilities that we should address. Across the two experiments, participants also differed in their language experience and age (Mandarin speakers with a mean age between 10 and 11 in Experiment 1 and English speakers with a mean age between 27 and 28 in Experiment 2). Concerning language experience, given that the language task was conducted in the participants' own native language, we think it is unlikely that this is the reason for the group differences observed among Mandarin speakers but not among English speakers in the language task. Concerning age, one might argue that autistic individuals' predictive processing ability affects the language domain more than the music domain when they are younger. Even though predictive processing is said to be domain-general (e.g., Cannon et al., 2021; Sinha et al., 2014), it may operate on domain-specific representations, which may be weaker in the language domain among autistic individuals given that autism is associated with delayed language development (Hart & Curtin, 2023). Thus, group differences in the language task may be more likely to be observed among younger participants and disappear when they are older or when their language ability “catches up” with that of their non-autistic peers (Brignell et al., 2018). This should be confirmed in future research with younger English speakers. Moreover, participants (particularly autistic participants) with varying levels of linguistic ability should be examined in future research, as the current study has focused on matching the autistic participants with their non-autistic peers, resulting in limited generalizability to the broader autistic population.

Apart from music and language processing, atypical predictive processing in autism has been frequently reported in other domains, including social functioning, visual processing, sensory processing, theory of mind, and motor anticipation (Cannon et al., 2021; Lawson et al., 2014; Pellicano & Burr, 2012; Sinha et al., 2014; Van de Cruys et al., 2014). However, combining the results from Experiments 1 and 2, we conclude that predictive processing of music and language in autism is likely influenced by individual differences in musical, linguistic, and cognitive abilities. Future studies should investigate the role of other factors (e.g., motor skills, comorbid conditions, and other potentially confounding variables) that may influence predictive processing in autism. Finally, given that our stimuli are relatively simple, i.e., involving isolated melodies and sentences without larger contexts, future studies should explore how autistic individuals make predictions from more complex musical and linguistic inputs in their everyday environments.

In conclusion, our study is the first attempt to compare prediction in music and language in autistic and non-autistic individuals using matched cloze probability tasks across the two domains. Based on results from two experiments on two different samples, our findings suggest that performance on musical and linguistic prediction in autism is largely dependent on individual differences in musical, linguistic, and cognitive abilities. Future studies should employ different tasks (e.g., with more complex stimuli in naturally occurring settings) and different samples to further explore the complex relationship between autism, music, and language, while considering potential confounding variables impacting task performance.

AUTHOR CONTRIBUTIONS

Fang Liu, Aniruddh D. Patel, Allison R. Fogel, Chen Zhao, Anamarija Veic, and Bhismadev Chakrabarti designed the study. Allison R. Fogel, Chen Zhao, Anamarija Veic, Li Wang, and Jia Hoong Ong created the stimuli. Chen Zhao, Li Wang, Qingqi Hou, and Anamarija Veic collected the data. Chen Zhao, Anamarija Veic, Dipsikha Das, Cara Crasto, and Fang Liu processed the data. Chen Zhao, Fang Liu, and Jia Hoong Ong analyzed the data. Fang Liu, Aniruddh D. Patel, Jia Hoong Ong, and Chen Zhao wrote the manuscript. Cunmei Jiang, Tim I. Williams, and Ariadne Loutrari commented on the manuscript. All authors read and approved the final manuscript.

ACKNOWLEDGMENTS

This study was supported by a European Research Council (ERC) Starting Grant, ERC-StG-2015, CAASD, 678733, to Fang Liu and Cunmei Jiang. We thank Sanrong Xiao for assistance with participant recruitment in China, Georgia Matthews for help with literature review of the sentence task, Ellie Packham, Roshni Vagadia, and Zivile Bernotaite for help with participant recruitment and data collection in the United Kingdom, Leyan Zheng for help with pre-processing of the Mandarin data, and Hiba Ahmed, Leah Jackson, and Maleeha Sujawal for help with pre-processing of the English data. We would also like to thank the associate editor and two anonymous reviewers for their insightful suggestions and comments which have helped us significantly improve our work.

Open Research