Sunk-Cost Bias and Knowing When to Terminate a Research Project

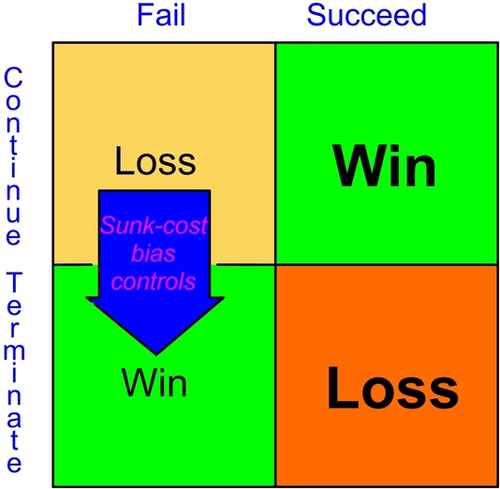

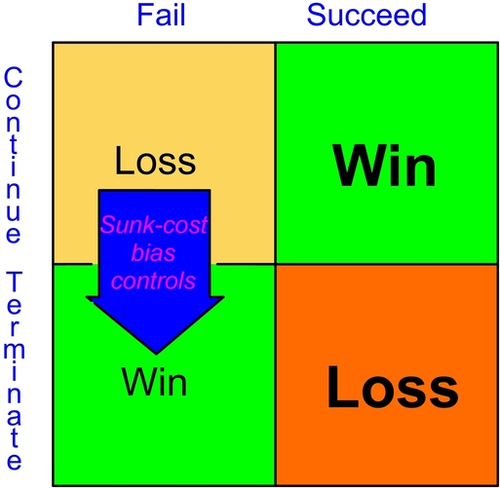

Graphical Abstract

An awareness of sunk-cost bias provides an antidote to the scientific optimism that exacerbates the tendency to continue research projects when the likelihood of success is minimal. A survey of academic and industrial practices, as summarized in the sunk-cost bias decision matrix, captures how implementing controls facilitates data-driven decisions to terminate or continue research while avoiding emotionally driven decisions that can sap resources.

Abstract

Scientific research is an open-ended quest where success usually triumphs over failure. The tremendous success of science obscures the tendency for the non-linear discovery process to take longer and cost more than expected. Perseverance through detours and past setbacks requires a significant commitment that is fueled by scientific optimism; the same optimism required to overcome challenges simultaneously exacerbates the very human tendency to continue a line of inquiry when the likelihood of success is minimal, the so-called sunk-cost bias. This Viewpoint Article shows how the psychological phenomenon of sunk-cost bias influences medicinal, pharmaceutical, and organic chemists by comparing how industrial and academic practitioners approach sunk-cost bias; a series of interviews and illustrative quotes provides a rich trove of data to address this seldom discussed, yet potentially avoidable, research cost. The concluding strategies recommended for mitigating sunk-cost bias should benefit not only medicinal, pharmaceutical, and organic chemists but also a wide array of chemistry practitioners.

1 Introduction

Scientific advances emerge when imaginative insight is validated by experiment. The ideal is the distillation of a brilliant idea into a clear, definitive test that is verified by the first experiment; the reality is that scientific advances more often come from long, arduous searches punctuated by revelatory insight and serendipitous discoveries.1

Equally necessary as imaginative insight, though more prosaic, is the efficient conversion of finite resources, through the discovery process, into verified new knowledge. The non-linear progression from ideation through experimentation to verification usually involves a less than optimal use of resources because the open-ended nature of research means that early experiments are planned with insufficient or apparently conflicting data. During the early phase of research, advances often come from intuiting which data to focus on and which to discard. The art of discerning the optimal discovery path requires a combination of logic and intuition, both of which are subject to the subconscious vagaries of the mind.2

Despite a researcher's best intentions to be efficient and economical, the reality is that some research projects lead to dead ends.3 Identifying dead ends early is advantageous because terminating projects allows valuable resources to be redirected to potentially more fruitful lines of inquiry. Terminating a project is difficult because a crucial breakthrough always seems possible with the next experiment. Scientists have an “unreasonable optimism” in the fecundity of scientific exploration, an optimism that fosters the drive needed to overcome challenges but carries a risk of over-committing resources to projects with a poor likelihood of uncovering an important discovery.4

Dead ends and detours are seldom discussed, even though failure is usually an integral part of the road to success.5 The sunk cost involved in exploratory forays that end in blind alleys remains hidden from most of the scientific community, since few journals are devoted to publishing negative results.6 Even more valuable would be an understanding of why failing projects continue to advance and, on the positive side, how successful research projects minimize detours and overcome setbacks.

The problem is neither new nor limited to less distinguished practitioners. The race between the two Nobel laureates, Sir Robert Robinson and Robert Woodward, to determine the structure of strychnine is illustrative. Robinson spent four decades attacking the problem, whereas Woodward took up the challenge much later and solved the structure in just five years.7

The current manuscript seeks to provide guidance on avoiding the very human tendency to continue a line of inquiry when the likelihood of success is minimal, the so-called sunk-cost bias, by exploring three key questions: When does sunk-cost bias emerge?8 What practices do industrial and academic scientists use to avoid sunk-cost bias? What strategies might be implemented to avoid devoting further resources to a likely dead end?

2 Background

Decision-making studies in psychology reveal an almost universal tendency for people to continue investing in projects even when the cost of further investment outweighs the expected benefits, the so-called sunk-cost bias or sunk-cost fallacy.9 The bias emanates from people's predisposition to escalate their commitment to a failing project or activity based on the resources already invested rather than on the likely future returns relative to alternatives.10
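The distinction drawn in this definition can be made concrete with a toy calculation. The minimal Python sketch below, offered purely as an illustration, compares two options in two ways. The first is a forward-looking expected-value rule, in which money already spent plays no part. The second is a caricature of sunk-cost reasoning that favours whichever option has absorbed the most investment. The `Project` class, its fields, the expected-value rule, and all numbers are hypothetical assumptions introduced here; they are not a model used by the authors or by the cited studies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Project:
    """Hypothetical summary of a research option; all units are arbitrary."""
    name: str
    sunk_cost: float       # resources already spent (not recoverable)
    remaining_cost: float  # assumed further spend needed to reach a decisive result
    p_success: float       # assumed probability that the project succeeds
    payoff: float          # assumed value if it succeeds

    def expected_future_value(self) -> float:
        # Forward-looking value: sunk_cost deliberately plays no part.
        return self.p_success * self.payoff - self.remaining_cost


def forward_looking_choice(options: List[Project]) -> Project:
    """Pick the option with the highest expected future value."""
    return max(options, key=lambda p: p.expected_future_value())


def sunk_cost_biased_choice(options: List[Project]) -> Project:
    """Caricature of the bias: favour whatever has absorbed the most investment."""
    return max(options, key=lambda p: p.sunk_cost)


if __name__ == "__main__":
    legacy = Project("legacy", sunk_cost=5.0, remaining_cost=3.0, p_success=0.1, payoff=10.0)
    fresh = Project("fresh", sunk_cost=0.0, remaining_cost=2.0, p_success=0.4, payoff=10.0)
    options = [legacy, fresh]
    print(forward_looking_choice(options).name)   # fresh
    print(sunk_cost_biased_choice(options).name)  # legacy
```

With these assumed numbers, the legacy project has the larger sunk cost but a negative expected future value (0.1 × 10 − 3 = −2). A forward-looking rule therefore moves resources to the fresh project (0.4 × 10 − 2 = 2), whereas the biased rule keeps funding the legacy one.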

Psychologists have determined that sunk costs (costs that are not recoverable) are a strong stimulus in the decision-making process because people perceive the investment as a loss.11 People are naturally more likely to persist with an unfavourable project, to avoid the perceived waste or loss of their investment, than to cut their losses and terminate the project. The joint British-French development of the first supersonic commercial aircraft, the Concorde, illustrates the sunk-cost bias: money continued to be spent on a lost cause. The project was originally projected to cost £70 million but eventually cost £1.3 billion, “an outstanding example of how not to develop and build a supersonic airliner—or any other high technology product.”12

Psychological research has shown that continued investment is more likely when the prior investment was financial than when it was time.13 Continued investment in a failing project is driven by the potential reward of a psychological win if the project is completed. Ironically, people who succumb to sunk-cost bias are influenced by a psychological desire to avoid being wasteful.5

The sunk-cost bias negatively impacts virtually all human endeavors from business decisions to economic investments to medical diagnoses. An internal investigation among World Bank staff revealed a significant sunk-cost bias from well-meaning individuals who continued investing in failing projects by unconsciously advocating practices that led directly to financial losses.14 In the medical field, the sunk-cost bias has been linked with professional decisions to continue ineffective treatments15 and the erroneous use of patients’ cumulative histories to inform future diagnostic testing.16

Three decades of psychological studies confirm that the sunk-cost bias is inherent in decision-making processes.8 Given that scientists make similar decisions, the sunk-cost bias should be expected to influence scientific discovery as well. Anecdotal evidence suggests that sunk-cost biases are rampant within science, with examples including computer hardware and software that is obsolete upon release17 and cost overruns for experimentation.18

Decision making is rarely a straightforward process of following a rubric or an algorithm to arrive at the optimal discovery path.19 Faced with complex decisions, people revert to heuristics, or mental shortcuts.20 The result is often a less than ideal decision, but science is such a fruitful venture that asking almost any question elicits interesting information while raising yet more questions. Seldom does the process force an investigator to admit that the wrong question was asked; continuing a research inquiry avoids an admission of error.

In research, the sunk-cost bias is particularly pernicious because intelligent, educated people are able to build elaborate arguments that justify continued investment in unprofitable projects.21 Albert Einstein spent twenty-five years pursuing an elusive unified theory which, he admitted, ended up “in the graveyard of disappointed hopes.”22 Einstein's brilliant intuition, which served him so well in his miracle year of 1905, led him to ignore the evidence of nuclear forces that frustrated his search for a unifying theory, even to the point of despising the highly successful quantum theory.23 Einstein recognized that he had spent too much of his intellectual acumen on his pet idea, writing in a letter to his friend Louis de Broglie: “I must seem like an ostrich who forever buries its head in the relativistic sand in order not to face the evil quanta.”5

Einstein is not alone in having doggedly pursued unproductive ideas. Several prominent scientists have over-expended resources chasing a bad idea rather than cutting their losses and embarking on other ventures. Two-time Nobel laureate Linus Pauling spent several decades, and much funding from the National Institutes of Health, in an unsuccessful bid to show that high vitamin doses cure cancer.24 Richard Feynman, often considered the last great genius, confided during his Nobel Prize Lecture: “We have a habit in writing articles published in scientific journals to make the work as finished as possible, to cover all the tracks, to not worry about the blind alleys or to describe how you had the wrong idea first, and so on. So there isn't any place to publish, in a dignified manner, what you actually did in order to get to do the work … But, I did want to mention some of the unsuccessful things on which I spent almost as much effort, as on the things that did work.”25 The recognition of sunk-cost bias in Nobel laureates such as Einstein, Pauling, and Feynman suggests that a proactive approach to mitigating this type of bias will benefit all scientists.

The current manuscript provides insight into sunk-cost bias by evaluating practices in industrial pharmaceutical companies and, for comparison, practices of faculty specializing in organic and medicinal chemistry. Decision making in the development of experimental therapeutics is under-researched, and there is clear evidence of personal bias in the decision-making process.26 The potential benefits of understanding and avoiding sunk-cost bias extend beyond the budgets and time devoted to scientific projects to the redistribution of resources toward other high-impact research. This study appears to be one of the first to chronicle ways to avoid spending precious resources on failing projects during scientific discovery.

3 Methodology

The study is based on a series of interviews, a qualitative data-collection method suited to information and/or behaviors that cannot otherwise be observed or replicated.27 A snowball sampling strategy (i.e., asking experts to identify other experts in a certain area) was used to identify and recruit scientists for this study.28 Candidates were selected with consideration for diversity, as well as for sufficient experience in overseeing a team of researchers and/or projects to ensure familiarity with sunk-cost bias (Figure 1). Of the 20 industrial scientists and 15 academics invited to participate in the study, 19 mid- to senior-level scientists participated: 11 from the pharmaceutical industry and 8 from academia. Thirteen participants were male and six were female; 67 % were white Americans, 11 % were Asian Americans, 11 % were Asian of other nationalities, and 11 % were white of other nationalities. A table of the participant demographics is provided in the Supporting Information. Participation in the study was voluntary, with consent obtained prior to the interviews in accordance with Drexel University's Human Research Protection protocol (Protocol number: 2008008063).

Figure 1. Interview participant experience.

The interview process employed a semi-structured interview protocol (using predetermined yet flexible open-ended questions) to guide each interview.29 Following best practices for semi-structured interviewing, the questions were initially drafted and reviewed by the authors and then passed through a series of revisions until a set of core questions (provided in the Supporting Information) was agreed upon.30 The interviews followed a consistent order of questioning, with sufficient flexibility to allow participants to elaborate and provide examples to clarify their ideas.31 The interviews probed 1) programmatic resource costs, 2) reasons for halting, terminating, or continuing programs, 3) the decision-making process and decision points for halting or terminating programs, and 4) whether a sunk-cost bias existed within scientific research.

E.P. led, recorded, transcribed, and coded the interviews because of her expertise in qualitative research and because her minimal experience in chemistry served to reduce any response bias among participants; she has no tertiary education in chemistry, nor has she worked in the chemistry sector. E.P.’s positionality includes an epistemological perspective grounded in qualitative research, social science, and pragmatism. Her positionality therefore helped reduce fear and response bias among participants, who knew that she was external to their professions.32 Each participant was interviewed once, for 45–60 minutes, using an internet-based virtual meeting platform.

The interview transcripts were qualitatively coded; coding is a heuristic, or process of discovery, for reviewing data to identify key themes in response to the research question.33 The initial coding analysis employed an inductive approach followed by an in vivo coding strategy (using participants’ exact words and phrases to identify themes).33 An “intercoder agreement” ensured code reliability by reconciling any potential discrepancies between the transcripts and emergent codes through review and discussion with a second researcher;34 “emergent” codes in qualitative research are themes identified through the inductive and iterative process of data analysis.35 The final emergent themes provided qualitative trends that served as evidence for the existence and basis of sunk-cost bias in pharmaceutical and academic research. Following best practices for qualitative research, member checking (i.e., soliciting feedback from interview participants to ensure that the interpretations and analyses match the participants’ intended meaning) was employed to ensure interpretive validity; the final manuscript was provided to participants for comment prior to submission.36

4 Decision-Making Processes in the Pharmaceutical Industry

Applied research, as in pharmaceutical research and development, involves scientific discovery directed toward a specific commercial objective.37 The attendant decision-making during the discovery and development of an experimental therapeutic is challenging because the inherent uncertainty in discovery, development, and market evolution is compounded by multi-billion-dollar economic stakes; the journey from bench to approved therapeutic requires, on average, ten years of research and a financial investment of over $2 billion.38 Drug discovery and development costs lie in funding chemical development, personnel, labs, and equipment, with the greatest cost incurred by clinical studies. Of the potential drugs that begin clinical development, fewer than 10 % ultimately emerge as new pharmaceuticals.39 Consequently, there is an incentive to terminate, or as one manager described it, “kill”, drug candidates as early as possible to avoid unnecessary expenses on a potentially unsuccessful drug lead:

“We say, ‘What is the next ‘kill’ experiment? What is going to be that next piece of information that you need to get to make that decision, whether it's time to stop or not!’ Our business is really expensive. And so even killing a project is a successful decision, if it's for the right reasons. And we get rewarded. We always reward people for getting the drug, but we also reward people who made the tough decisions as well.”

Pharmaceutical companies usually have several strategies to mitigate anticipated losses of money, time, and labor during the drug discovery and development processes. One strategy is to accept failure and losses as early in the project as possible, the so-called “Fail-Fast” strategy.40 Some pharmaceutical companies incentivize a fail-fast approach to “kill” programs as early as possible at every stage of drug development, conserving resources not only by stopping expenditure but also by redirecting resources toward other projects. Failure becomes part of the research culture, and finding a reason to terminate a project is rewarded in the same way as finding an effective solution. One scientist explained the value of fail-fast as follows:

“So, I've seen a lot of quick ‘kills’. In fact, the first company I worked for … used to give awards out for people that would kill projects. So, it's the antithesis of what you might think. Everyone wants to be successful, but they had a program where if you could show, with very clear data, that this was not worth pursuing, you could get rewarded for that.”

Sunk-cost bias occurs when decisions are based on the time, energy, and money already committed rather than on the likely future cost of achieving success. Loss of time, energy, and money is inherent to the drug discovery process for many reasons, among them the need for an experimental therapeutic to act specifically on one target in the highly complex human body, where safety is paramount. In the pharmaceutical industry, more than 90 % of projects are terminated.21 Scientists in drug discovery come to expect that a project is likely to fail, particularly when the biological efficacy is in question. As one scientist explained:

“So one that I worked on was for melanoma skin cancer. But once it actually got into humans, it was actually one of the cleanest ‘kills’ I've ever seen. It didn't hit any of the stuff it was supposed to hit, and it gave everybody a screaming migraine. I've never seen one ‘killed’ that fast or that cleanly.”

The development process may be terminated for reasons other than the efficacy of the experimental therapeutic. In some cases, markets change, competitive treatments reach the market first, or the cost of goods increases, all of which require difficult business decisions.

“One of our chief chemists was a leader on the synthesis of an antibiotic. And the antibiotic was wonderful. But it turned out to be much too expensive. And we knew it would never sell. But it was wonderful with respect to the lack of side effects and advocacy. There are hard decisions, you know. When we have the compound in hand, something like dollars stop it.”

The decision makers must balance the increasing challenge of solving recalcitrant disease states, the cost of finding a solution, and the potential to recoup those costs from the market.

“A good example of this is Alzheimer's disease. Many, many people have been trying to solve Alzheimer's disease. It's a complex disease state to begin with, and there are a number of biological pathways that are implicated in the disease state. A medication for Alzheimer's would be huge, and so people were after it in earnest. But they didn't find it. Soon after, [many companies] exited neuroscience completely … So with that, obviously any project or program in those areas that were not aligned with the strategic purpose of the company were just cut off wholesale, regardless of how promising things looked.”

Rewarding the recognition of failure reduces the emotional investment that may otherwise drive projects for reasons other than their pharmaceutical potential. For example, a powerful individual may promote a project so as to be seen to succeed rather than to be associated with failure.16 Two quotes capture the sentiment felt by scientists charged with moving a project forward with little chance of success:

“The only time projects continue on is for a stupid reason like someone much higher up is emotionally invested and they can dictate. It's rare, but it does happen. Thank God it's rare or we'd all be out of business.”

“Well, they continued a project that I knew was going to fail. And it just made no sense. And then someone finally whispered in my ear, ‘Well, so and so is really emotionally invested in this.’ And it was a physician who had a lot of power in the company … They finally ‘killed’ it. But it took a year just because someone was emotionally invested in it and they really wanted it to work.”

Countering emotional attachment to projects is important because the drive to overcome difficult technical challenges creates a strong bond with the project. There is a tension here: successful projects often need a champion, yet that support must be given, or withheld, for the right reasons. One chemist captured an often-reported sentiment:

“And the other thing too is that chemists and scientists in general, we all love our projects. And it's really hard to know when to say, let it go.”

The massive investment required for drug discovery has led companies to implement systematic checks and balances to avoid unnecessary losses; discontinuing the development of unpromising drug candidates is a major source of cost savings in pharmaceutical research and development.41 Most companies incorporate committee reviews, a variety of incentives that encourage scientists to identify pitfalls, and a series of stage gates in the development of new chemical entities. Stage gates are predetermined points, typically set by a company's senior leadership team, at which the progress of the discovery and development of pharmaceutical compounds is reported. One chemist described the process:

“You can view it as a series of yes or no queries; it's kind of a process map. You make a compound. Does this compound meet results? Then for those that move forward, there's … another stage gate. That's a filter. So, there's a lot of stage gates throughout that process.”
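The chemist's description of stage gates as a series of yes-or-no queries, each acting as a successive filter, maps naturally onto a short program. The following Python sketch is a hypothetical illustration only. The gate names, the normalized `*_score` properties, and the thresholds are invented placeholders rather than criteria used by any company interviewed. Real stage-gate reviews are also committee judgements, not automated checks.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A compound is described by a dictionary of measured properties.
# Property names and thresholds below are hypothetical placeholders.
Compound = Dict[str, float]
Gate = Tuple[str, Callable[[Compound], bool]]


def run_stage_gates(compound: Compound, gates: List[Gate]) -> Optional[str]:
    """Apply yes/no gates in order.

    Returns the name of the first gate the compound fails (the "kill" point),
    or None if it passes every gate.
    """
    for gate_name, passes in gates:
        if not passes(compound):
            return gate_name
    return None


# Criteria are fixed before any data arrive, so the yes/no answer at each
# gate does not depend on how much effort the compound has already absorbed.
GATES: List[Gate] = [
    ("potency", lambda c: c["potency_score"] >= 0.7),
    ("selectivity", lambda c: c["selectivity_score"] >= 0.5),
    ("safety", lambda c: c["safety_score"] >= 0.8),
]

if __name__ == "__main__":
    candidate = {"potency_score": 0.9, "selectivity_score": 0.3, "safety_score": 0.95}
    killed_at = run_stage_gates(candidate, GATES)
    print(killed_at or "advance")  # selectivity
```

The design feature worth noting is that the criteria are written down before a compound is evaluated. A candidate that fails a gate stops there, however much work went into it, and this is the property that makes stage gates a check on sunk-cost reasoning.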

Ultimately, decisions are made by people, whether individuals or groups, composed of scientists and business executives. Usually there are particularly important decision points at which the directors of individual programs in different areas (chemistry, biology, toxicology) present the data with a recommendation to a decision-making committee. The high economic stakes at these strategic decision points have fostered numerous studies aimed at understanding and informing the decision-making process. Research into the process emphasizes the importance of high-quality data, which, with the advent of data mining, offers the possibility of using artificial intelligence in decision-making.42 Equally important are pressing time constraints, organizational philosophy, and political influences.4

Analytical, stepwise, and systematized decision-making is often employed to promote transparency and effectiveness, though personal subjectivity, a failure to learn from past mistakes, and overconfidence continue to plague how decisions are made.43 Understanding the decision-making process, particularly the tools and strategies available, is recognized as highly valuable. Internal steering committees and external advice from regulatory agencies provide further sources of valuable guidance.

One scientist explained the process this way:

“Those decision-making processes are more from the leaders from each function and we collaborate together. Is this something we can fix from a formulation standpoint? Can you study this compound in a different way to see if we can maximize our chances of being able to move this compound forward? Then together, we work with the leaders from the other functions and we give the recommendation. And then they give their input. And so, it's sort of a back and forth. It's not so much a one-person decision.”

As with any human endeavor, the benefit of personal insight comes with a risk of bias. The extreme cost of pharmaceutical research has caused the industry to implement several different strategies that mitigate sunk-cost bias:

- Embracing fail-fast strategies to eliminate leads early in the development process.
- Rewarding scientists whose key experiments demonstrate that projects should be terminated.
- Implementing predefined decision points, stage gates, that minimize the risk of projects continuing without evaluation.
- Focusing on data, particularly biological evaluations and return on investment, to minimize emotion-driven decisions.
- Employing diverse teams in the decision-making process to prevent groupthink.

5 Decision-Making Processes of Academic Organic and Medicinal Chemists

Decision making in academic research ultimately resides with the faculty principal investigator (PI). Faculty usually exercise autonomy in the original project design, reflecting their personal interests, fascination, and enthusiasm for specific topics, although students and prior research provide varying degrees of input. As a result, projects with similar goals can vary greatly from one researcher to another. Regardless of the specific project, academic scientists have two common goals: 1) to advance scientific knowledge, and 2) to train students in the technical and intellectual craft of executing research. The focus on student training, which is recognized as a valuable component of total synthesis,44 was mentioned by all academic interviewees. For one PI:

“My primary mission as an academic scientist is to create new science, and to create new scientists, in equal measure.” And again: “The whole goal is for a PhD to be an independent scientist.”

Many research ideas are created by a PI based on promising preliminary results that often serve as the core of a grant proposal. The arrival of funding and an enthusiastic student begins the process in which new knowledge is gained and the student receives training. The partnership between PI and student, expert and novice, involves a strong investment by the PI to see the project succeed.45 The potential for PIs to over-invest resources early in their career is particularly high because faculty applying for tenure need to demonstrate an ability to transform projects into publications. One academic, musing on their early years, captured the sentiment well:

“So, you've got a novel idea; you're sure it's going to work. You're going for tenure [so] you've got six years or really five because the first year is pretty rough getting set up. And it's terrifying, but that's definitely a time where you would be tempted to throw good money after bad.”

Ideally, a research project will lead to new discoveries and student training. All the faculty interviewed had a broad appreciation for “learning through failure”,46 using failure as an opportunity to teach students valuable problem-solving skills. As one PI wryly noted:

“You make a mistake, the best way to learn from it is to not repeat it. But you've got to make that mistake in the first place.”

A common theme among the faculty interviewed was the difficulty of determining whether a project fails because of a student's technique or because of the idea itself. Especially in the early phase of a student's training, difficulties may stem more from challenges in experimental execution than from the chemistry. Even with senior students, the field may be insufficiently developed to explain complex reaction outcomes. Many scientific discoveries depend on the insights of previously failed experiments. The challenge is to use failure as an advantage for training and publication while avoiding the losses incurred by over-committing resources. As one PI said:

“I want the student to record what he or she did for posterity, even if it wasn't a success. Because the whole writing process could catalyze that ‘Aha moment’. And with the wisdom of that person's analysis, we can get back in and solve it.”

As institutions of higher education, universities provide students with direct intellectual and practical training, whereas project management skills, such as avoiding sunk-cost bias, are more likely to be taught indirectly. Few academics have formal training in decision-making, though some try to improve their skills through reading. One said:

“Maybe I should read more books on management or whatever.”

Another was explicit about using the decision-making process as training:

“And the training that the students get is watching me make a value decision about: do I believe that the failure in advancing this project is because it's fundamentally not going to work, because the student who's doing it doesn't believe in the future of the project, or if they are not technically capable of being able to advance that project?”

Academic research has few formal analogues to industrial stage gates, though most of the faculty interviewed had implemented regular, higher-level project evaluations at intervals ranging from one to six months. Project evaluations may also be triggered by a loss of funding or an absence of bioactivity in collaborative projects. As one described:

“We get [compounds] tested by biology labs and if the toxicity data [comes back unfavorable, I make] the decision that we don't work in that area anymore, we'll move on.”

Many academics see projects as ostensibly student-driven. Students are expected to manage daily operations, purchase resources, review results, and influence decisions about project direction. Student passion can also drive the decision process, causing a project to persist longer than was perhaps warranted. As one faculty PI explained:

“Stopping a project is something that I really don't like to do and it's because there is so much that goes into a project in terms of students’ dedication. They really get emotionally invested in this. And in order to pull the plug on a project, we have to get to a point where the student does the appropriate experiments to show that our initial hypothesis was flawed.”

Decisions to terminate projects may result from a student's frustration at a lack of success. In response, faculty may shift students from failing projects to an area with a higher potential for success. As one PI explained:

“Students don't like to work on things that aren't working. They're the ones to say it isn't working, nothing's happening, it's frustrating. And so, we stop the project.”

The decision to terminate a project that partially works is particularly difficult, because it requires discerning whether the scope limitations can be overcome by tweaking the reaction components or design. One academic captured the tension by saying:

“I think a lot of times, things are just gradients. And so it's sort of like … we got some data here that might be useful to someone, versus like, wow this catalysis is just amazing. It seems to have great turnover and it's applicable to lots of different situations.”

The decision whether to continue a project at these decision points was often a conversation between the student and the PI. Factors that influence the pursuit of a modest-value project include whether the student is nearing the end of their degree and needs only to complete a final project to graduate, and the likely impact of the research. For one PI, the conversation focuses on where a best-case result might be published:

“And I tell the students, in your wildest dreams, if this project is successful, as you dream it will be, are we going to publish it in [a mid-tier journal] or in Science?”

Academic projects tend to persist despite evidence of plausible failure, due in part to the PI's passion for the subject or project. Passion is a powerful motivating force among scientists.47 One academic admitted:

“My career is strewn with my inability to let go of a project that ultimately became tremendously successful.”

Passion drives success in many cases by focusing enthusiasm toward a specific goal. Academia cultivates a culture of passion-driven faculty and students eager to uncover a new scientific discovery. Many of the faculty who were interviewed reflected upon the ways in which their own passion led them to persist with projects. One PI shared:

“This project was scientifically so intriguing to me that I held on to it way too long. But in the end, it turned out to be beautiful, with [the] ending resolved. And a beautiful paper out of it.”

The idea that persistence is the best path to success, even for longer than might seem reasonable, is embedded in the stories of scientific heroes. Scientists are not immune to the lure of persistence in the face of seemingly insurmountable obstacles, and those who succeed through perseverance go on to become role models. One faculty member shared the sentiment:

“My PhD supervisor had great words of advice, ‘If you have a hypothesis try to ‘kill’ it, and if you can't ‘kill’ it then keep going!’ But the problem is our hypotheses are our babies, and we love our babies, and it's hard to say I just killed my hypothesis.”

In academia, projects may never be fully terminated; they may merely be set aside until another student expresses interest or another opportunity arises. There is a real temptation to keep pursuing projects to the point that sunk-cost bias effectively ceases to exist. Even if a project does not work, some faculty redefine the value of the experience to emphasize student training and the knowledge gained about what does not work. In other words, some faculty unconsciously define sunk-cost bias away. Ruminations by two academics capture the tension in terminating projects:

“The hardest decision that you did not ask is when do you decide to just totally cancel a project. That is the hardest thing ever because we always believe that our ideas are brilliant. And that when they're not working it is due to students’ incompetence and not a failed idea.”

“The great thing is that even though I've stopped some of these projects, that doesn't mean they're dead … And then the next cohort of students come in and I give that same project to a new student and lo-and-behold, within two weeks they're successful.”

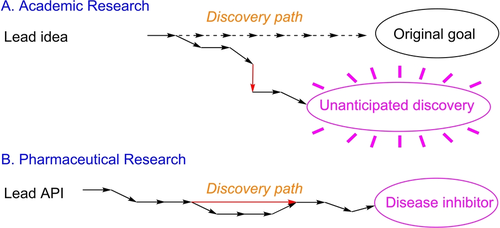

Compounding identification of sunk-cost bias in academic research is the ability to redefine the project goal. Academic research is curiosity driven for the discovery of new knowledge. En route to a projected discovery, the daily experimental design may be driven more by what questions are most readily answered than whether they align with the original research goal. Research drift occurs as the most promising results redefine the original goal (Figure 2, part A). As one scientist captured the dynamic nature of decision-making:

Divergent discovery process of industrial and academic research.

“I always tell my students, ‘Let's let science drive the decision-making process for us.’ ”

Serendipity often changes the direction of academic research (Figure 2, red arrow in part A). Research drift in an academic setting can lead to redefining success in ways that are not open to pharmaceutical research. In industry, a serendipitous discovery must align with the synthesis of the targeted experimental therapeutic, and so it is more likely to increase overall efficiency than to change the overall goal (Figure 2, red arrow in part B).

Academic research is inherently flexible, which complicates any evaluation of sunk-cost bias. University researchers tend to be only minimally aware of sunk-cost bias, though most faculty have implemented regular project evaluation as part of the discovery process and some embrace discussions of project planning as part of student training. Only if the pursuit of tangential interests fails to pan out might the diversions be viewed as an excessive investment in research ventures; even then, the decisions were part of valuable student training. For academic researchers:

- Most faculty regularly evaluate projects at decision points spaced one to six months apart.
- A real tension exists among the tenacity required to bring challenging ideas to fruition, the malleability of academic goals, and the redefinition of goals to such an extent that sunk-cost bias effectively becomes moot.
- In determining whether a project is failing because of a flawed idea or a flawed technique, faculty favor giving students ample leeway to demonstrate success. The approach aligns with the university's mission to educate but carries the attendant risk of allowing a project to continue too long before termination.
- Student training and knowledge gain occur regardless of a project's success or failure. Learning from mistakes is a valuable component of student development, and it requires actually making mistakes.

6 Recommended Strategies for Mitigating Sunk-Cost Bias

The influence of sunk-cost bias across an array of sectors has stimulated the development of evidence-based strategies for mitigating it. Offered below are several strategies to minimize sunk-cost bias in scientific research, based on insights from prior studies and the interviews conducted for this research.

First, consider implementing frequent project reviews with explicit decision points. The reviews should be based on measurable research outcomes with pre-agreed criteria for continuing or terminating a project.48 Identifying specific decision points in advance, such as knowledge milestones, scope evaluations, and efficacy or project-completion benchmarks, provides a neutral scoring mechanism that can reduce emotionally driven decisions.

Research on sunk-cost bias has shown that leaders who start a project are more likely to want to continue it because of sunk costs such as time, effort, and emotional attachment. Review teams of around twelve participants are more likely to consider alternatives than groups of three or four, which tend to seek consensus.35 In other words, to avoid sunk-cost bias, reviews that bring together diverse voices to generate multiple options are the most valuable for deciding whether to continue or abandon a project.

Pausing a project to assess progress against independent, predetermined assessment rubrics provides an opportunity to re-evaluate its broad goals. The change in perspective counters the tendency to pursue whatever questions the latest discovery raises and instead realigns future experiments with the overall research goal. Enlisting decision makers external to the project facilitates objective decisions on whether to continue, expand, or terminate a project.

Second, consider establishing a culture of reflective practice that embraces the diversity of the project team: thinking styles, differing perspectives, and approaches to problem solving.49 Teams benefit from an environment in which failures are examined for their learning potential, and from policies or procedures that protect people with diverging opinions. Diverse thinkers as well as dissenters are essential for reducing sunk-cost bias because they may identify alternative solutions or offer oppositional ideas that broaden the array of potential options. Unfortunately, dissenters are often silenced if they do not feel protected or supported in sharing their divergent ideas. Creating psychologically safe environments protects dissenting ideas that have the potential to be transformative.

Third, consider offering people time and training to improve their reflective skills. Mindfulness meditation, the practice of cultivating an awareness of the present, often by attending to bodily sensations to better identify and control feelings, can mitigate sunk-cost bias.50 An awareness of feelings and triggers can be beneficial even through relatively short exercises because such exercises anchor people in the present rather than in the past.51 A sense of grim determination, for example, is likely to foster continued commitment to an existing program, as can a loss of drive for a project.

Mindfulness can prevent mind wandering, which is associated with sunk-cost bias. During mind wandering, people think about the past, possible future events, and events that may never happen. Mindfulness focuses attention on the present and counteracts the anger, anxiety, and anticipated regret that can escalate commitment to a previous decision. People who practice mindfulness are better able to adopt a detached perspective, are less defensive when criticized, and are more willing to consider alternatives.37

Fourth, reframing projects to identify possible paths to failure provides an opportunity to implement strategies that avoid potential problems. The reframing process not only helps to identify alternatives for overcoming possible obstacles but can also identify intractable problems that might best be avoided by discontinuing a particular line of inquiry.35 Reframing has the added advantage of identifying opportunity costs, the value forgone by not pursuing the best alternative use of the same resources, which can assist in making salient project decisions.52

The open-ended nature of research creates a paradox: the perseverance required to succeed in new ventures also fosters continuing projects when the chance of success is minimal. The sunk-cost bias decision matrix provides a visual resource for understanding this risk-reward tension. Continuing a doomed project incurs a loss (Figure 3, top left), whereas continuing a project that ultimately succeeds leads to a large win (Figure 3, top right); terminating a project that will fail is a small win (Figure 3, bottom left), while terminating a project that would ultimately have succeeded is a large loss (Figure 3, bottom right). Implementing effective controls that sensitize decision makers to the likelihood of failure minimizes the resources allocated to failing projects, shifting the initial resource allocation from a past loss to a future win (Figure 3, arrow). An awareness of sunk-cost bias channels scientific optimism into strategic decisions to continue or terminate research projects, decisions that are more likely to have favorable outcomes.

Effect of sunk-cost awareness on the decision matrix.

7 Conclusion

Sunk-cost bias in science is significant, affecting everyone from novices to Nobel laureates. The tendency to keep investing in projects with minimal chance of success has plagued businesses, industries, and research ventures of all types. Pulling the plug on a project is difficult because there is always the potential that the next investment will provide the crucial breakthrough.

Scientific discovery involves an inherent level of risk. Expending resources to search blind alleys is part of the non-linear discovery process. An awareness of sunk-cost bias can help prevent the allocation of further resources to failing projects. Driven by enormous development costs, the pharmaceutical industry has implemented several strategies to mitigate sunk-cost bias. Academic and industrial scientists operate differently. Industrial research targets a specific goal with an associated financial reward, whereas academic research is curiosity driven, with flexible goals and objectives that academics can redefine. Universities may benefit from implementing industry practices to avoid sunk-cost bias. Redirecting resources might increase graduation and publication rates as well as the completion rate of high-impact projects.

Sunk-cost bias arises because people behave in ways that are simultaneously logically inconsistent and inherently human. The analysis here provides strategies for making sound decisions to terminate or continue research projects so as to make the most of precious resources. An awareness of sunk-cost bias and appropriate practices should prove valuable in advancing scientific discoveries to the maximum extent possible with the available resources.

Disclaimer

The opinions expressed in this publication are the view of the author(s) and do not necessarily reflect the opinions or views of Angewandte Chemie International Edition/Angewandte Chemie, the Publisher, the GDCh, or the affiliated editors.

Acknowledgements

Financial support for this research from NSF (IGE 1855925) is gratefully acknowledged. Suggestions on an earlier draft from Karl Sohlberg are much appreciated. The interview participants are gratefully acknowledged for participating in the study.

Conflict of interest

The authors declare no conflict of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available in the supplementary material of this article.

Biographical Information

Elaine Perignat earned a B.S. in Business Administration from the University of Pittsburgh, an M.Ed. from Temple University, and a Ph.D. from Drexel University involving interdisciplinary education and creativity research. She completed a two-year postdoctoral position at Drexel University before moving to Immaculata University where she teaches business management, marketing, and entrepreneurship.

Biographical Information

Fraser Fleming obtained a BS (Hons) from Massey University (New Zealand), a Ph.D. specializing in organic chemistry from the University of British Columbia (Canada), and completed postdoctoral research at Oregon State University. He advanced through the academic ranks at Duquesne University (Pittsburgh) and then served as a Program Director at the National Science Foundation for two years before moving to Drexel University. He has taught courses in chemistry, science and religion, and creativity. He has a strong interest in the philosophical aspects of science, particularly those influenced by the vagaries of the human condition.